Lianghua He

Hierarchical Dynamic Masks for Visual Explanation of Neural Networks

Jan 12, 2023

Abstract:Saliency methods, which generate visual explanatory maps representing the importance of image pixels for model classification, are a popular technique for explaining neural network decisions. Hierarchical dynamic masks (HDM), a novel method for generating explanatory maps, is proposed in this paper to enhance the granularity and comprehensiveness of saliency maps. First, we propose dynamic masks (DM), which enable multiple small-sized benchmark mask vectors to roughly learn the critical information in the image through an optimization method. Then the benchmark mask vectors guide the learning of large-sized auxiliary mask vectors so that their superimposed mask can accurately learn fine-grained pixel importance information and reduce the sensitivity to adversarial perturbations. In addition, we construct the HDM by concatenating DM modules. These DM modules are used to find and fuse the regions of interest in the remaining neural network classification decisions in the mask image in a learning-based way. Since HDM forces DM to perform importance analysis in different areas, it makes the fused saliency map more comprehensive. The proposed method significantly outperformed previous approaches in terms of recognition and localization capabilities when tested on natural and medical datasets.

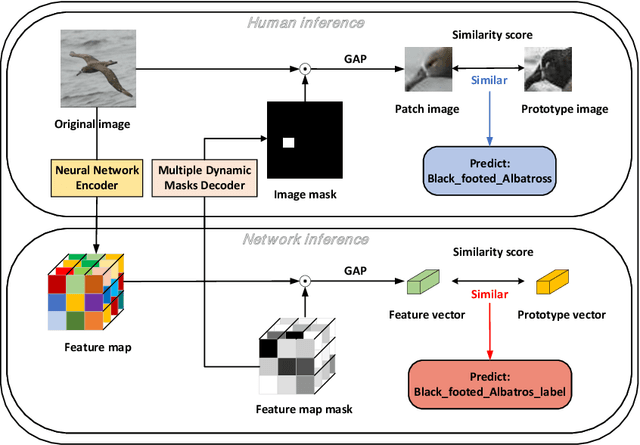

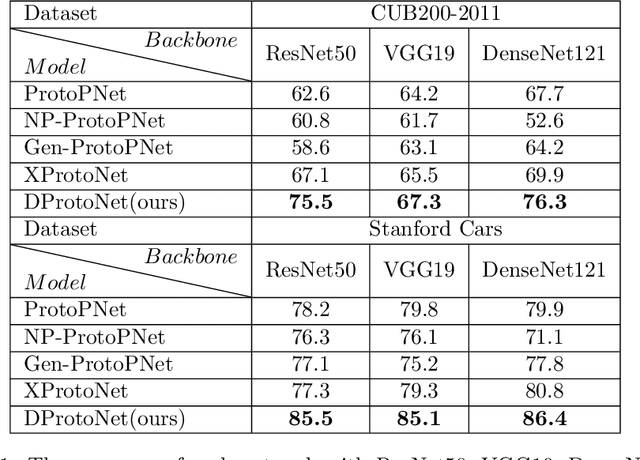

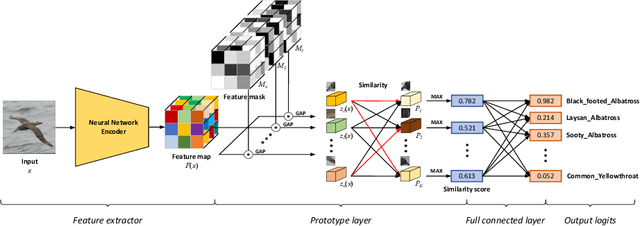

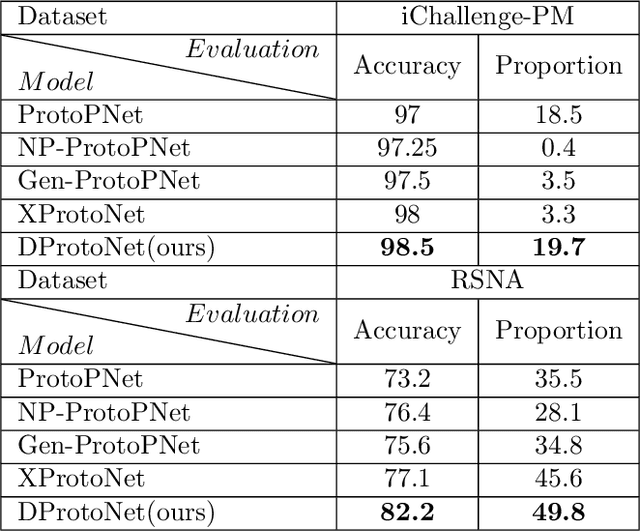

DProtoNet: Decoupling the inference module and the explanation module enables neural networks to have better accuracy and interpretability

Oct 15, 2022

Abstract:The interpretation of decisions made by neural networks is the focus of recent research. Previous methods modify the architecture of the neural network so that it simulates the human reasoning process, that is, it makes decisions by finding the decision elements, which gives the network an interpretable reasoning process. However, a specific interpretable architecture limits the fitting space of the network, resulting in lower classification performance, unstable convergence, and only moderate interpretability. We propose DProtoNet (Decoupling Prototypical network), which stores the decision basis of the neural network by using feature masks and uses Multiple Dynamic Masks (MDM) to explain the decision basis for feature mask retention. It decouples the neural network inference module from the interpretation module and removes the specific architectural limitations of the interpretable network, so that the decision-making architecture retains the original network architecture as much as possible, making the neural network more expressive and greatly improving both the classification performance and the interpretability of explanatory networks. We propose to replace the prototype learning of a single image with the prototype learning of multiple images, which makes the prototype robust, improves the convergence speed of network training, and makes the accuracy of the network more stable during the learning process. Tested on multiple datasets, DProtoNet improves the accuracy of recent advanced interpretable network models by 5% to 10%, and its classification performance is comparable to that of backbone networks without interpretability. It also achieves the state of the art in interpretability performance.

MDM: Visual Explanations for Neural Networks via Multiple Dynamic Mask

Jul 25, 2022

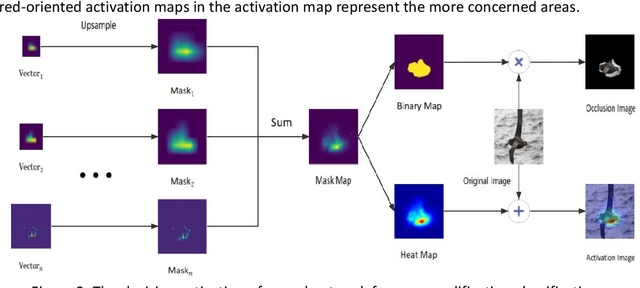

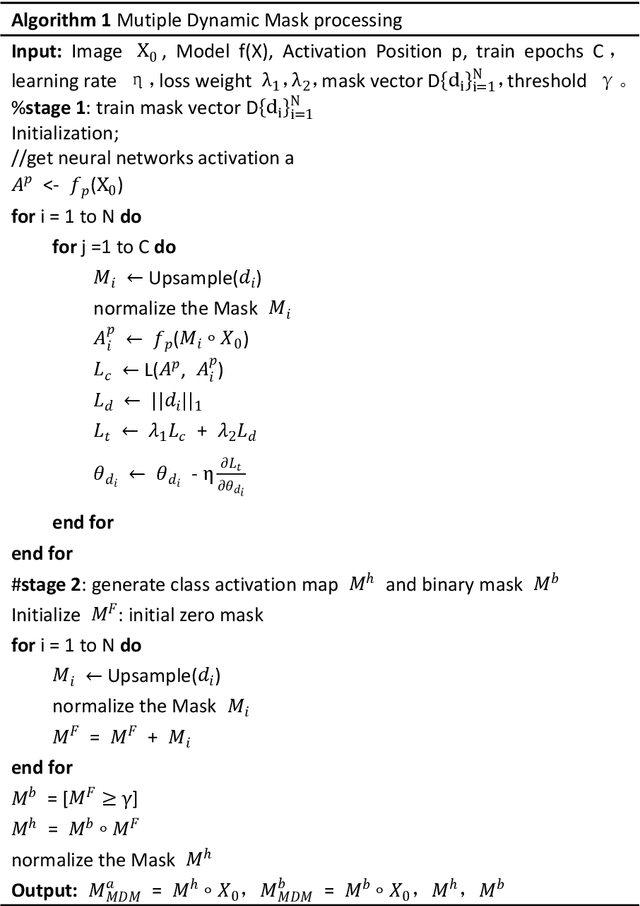

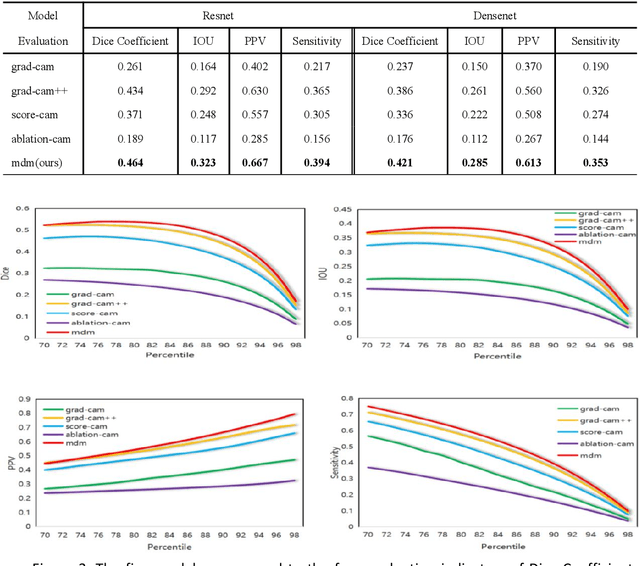

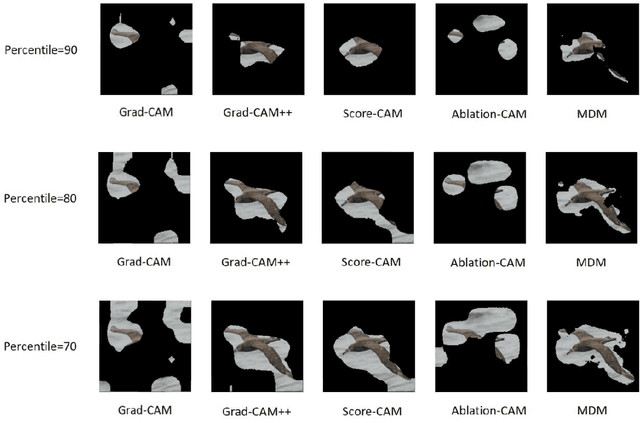

Abstract:The Class Activation Map (CAM) lookup of a neural network can tell us which regions the neural network focuses on when making a decision. We propose an algorithm, Multiple Dynamic Mask (MDM), which is a general saliency map query method with an interpretable inference process. The algorithm is based on an assumption: when a picture is input into a trained neural network, only the activation features related to classification will affect the classification results of the neural network, and the features unrelated to classification will hardly affect the classification results of the network. MDM is a learning-based end-to-end algorithm for finding regions of interest for neural network classification. It has the following advantages: 1. Its reasoning process is interpretable and conforms to human cognition. 2. It is universal: it can be used for any neural network and does not depend on the internal structure of the neural network. 3. The search performance is better. The algorithm is based on learning and can adapt to different data and networks, and it performs better than the methods proposed in previous papers. For the MDM saliency map search algorithm, we experimentally compared MDM with recent advanced saliency map search methods, using ResNet and DenseNet as the trained neural networks; across the performance indicators of the search, MDM reaches the state of the art. We applied the MDM method to the interpretable neural networks ProtoPNet and XProtoNet, which improved the models' interpretability prototype search performance. We also visualize the effect of convolutional neural architectures and Transformer architectures in saliency map search, illustrating the interpretability and generality of MDM.
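The idea of learning a mask so that only classification-relevant features are kept can be illustrated with a small toy example. This is a minimal sketch of generic mask optimization with a sparsity penalty, not the MDM algorithm itself: the logistic "classifier", its weights `w`, the `sparsity` weight, and the function name `learn_mask` are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def learn_mask(x, w, sparsity=0.4, lr=1.0, steps=300):
    """Learn a soft mask in [0, 1] over the features of x.

    Hypothetical setup: the trained model is a fixed logistic classifier
    p = sigmoid(sum_i w_i * mask_i * x_i). We minimise
        -log(p) + sparsity * mean(mask)
    so the mask keeps features that support the prediction and drops the rest.
    """
    n = len(x)
    theta = [0.0] * n  # mask logits; mask_i = sigmoid(theta_i), so each entry starts at 0.5
    for _ in range(steps):
        mask = [sigmoid(t) for t in theta]
        z = sum(wi * mi * xi for wi, mi, xi in zip(w, mask, x))
        p = sigmoid(z)
        for i in range(n):
            # d(-log p)/d mask_i = (p - 1) * w_i * x_i; the sparsity term adds sparsity / n
            grad_mask = (p - 1.0) * w[i] * x[i] + sparsity / n
            # chain rule through mask_i = sigmoid(theta_i)
            theta[i] -= lr * grad_mask * mask[i] * (1.0 - mask[i])
    return [sigmoid(t) for t in theta]

x = [1.0, 1.0, 1.0, 1.0]
w = [3.0, 2.0, 0.0, -1.0]  # feature 0 supports the class most; feature 2 is irrelevant
mask = learn_mask(x, w)
```

In this toy setting the mask entry for the most supportive feature grows above its starting value of 0.5, while the entries for the irrelevant and the opposing feature shrink, which mirrors the assumption that features unrelated to classification can be masked out with little effect on the output.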

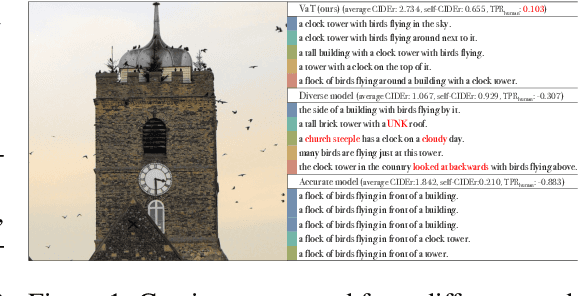

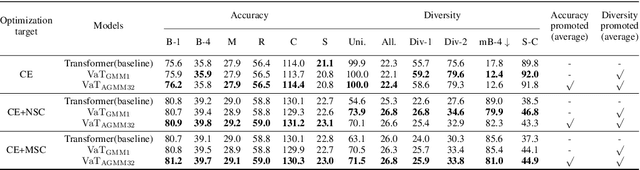

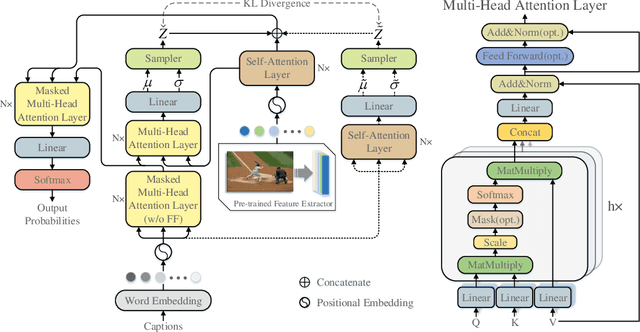

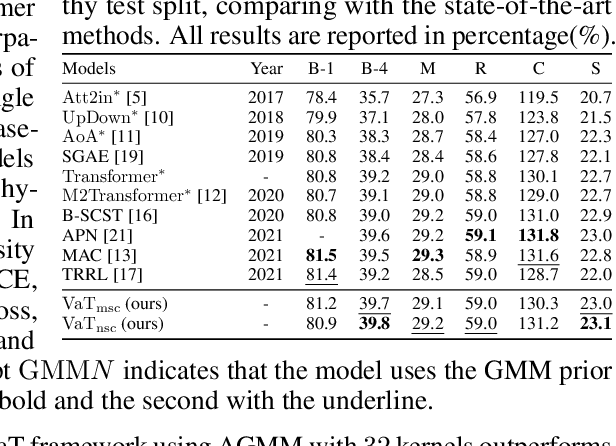

Variational Transformer: A Framework Beyond the Trade-off between Accuracy and Diversity for Image Captioning

May 28, 2022

Abstract:Accuracy and diversity are two essential measurable properties of natural and semantically correct captions. Many efforts have been made to enhance one of them, with the other degrading because of the trade-off gap. However, compromise does not make progress. Degraded diversity makes the captioner a repeater, and degraded accuracy makes it a fake advisor. In this work, we exploit a novel Variational Transformer framework to improve accuracy and diversity simultaneously. To ensure accuracy, we introduce the "Invisible Information Prior" along with the "Auto-selectable GMM" to instruct the encoder to learn the precise language information and object relation in different scenes. To ensure diversity, we propose the "Range-Median Reward" baseline to retain more diverse candidates with higher rewards during the RL-based training process. Experiments show that our method improves accuracy (CIDEr) and diversity (self-CIDEr) simultaneously, by up to 1.1 and 4.8 percent, compared with the baseline. Also, our method outperforms others under the newly proposed measurement of the trade-off gap, by at least 3.55 percent.

Revitalize Region Feature for Democratizing Video-Language Pre-training

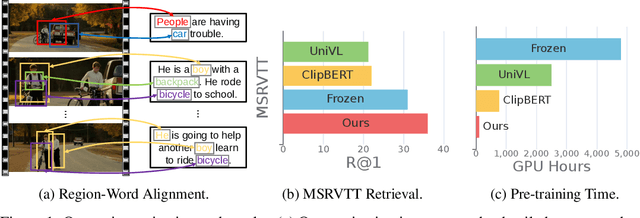

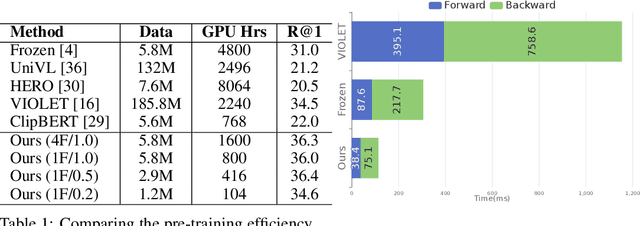

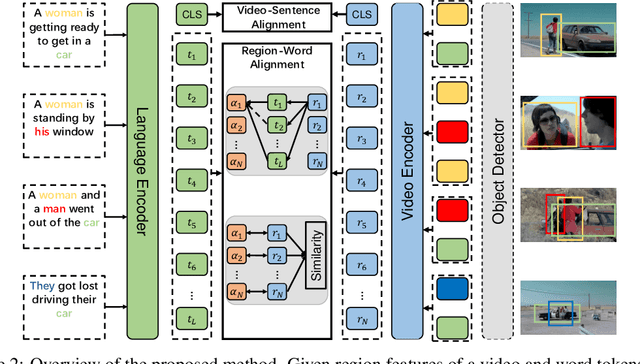

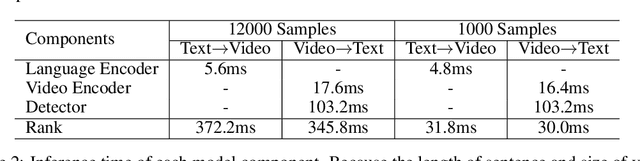

Mar 19, 2022

Abstract:Recent dominant methods for video-language pre-training (VLP) learn transferable representations from the raw pixels in an end-to-end manner to achieve advanced performance on downstream video-language tasks. Despite the impressive results, VLP research has become extremely expensive because of the need for massive data and long training times, which prevents further exploration. In this work, we revitalize region features of sparsely sampled video clips to significantly reduce both spatial and temporal visual redundancy, democratizing VLP research while achieving state-of-the-art results. Specifically, to fully explore the potential of region features, we introduce a novel bidirectional region-word alignment regularization that properly optimizes the fine-grained relations between regions and certain words in sentences, eliminating the domain/modality disconnections between pre-extracted region features and text. Extensive results of downstream text-to-video retrieval and video question answering tasks on seven datasets demonstrate the superiority of our method in both effectiveness and efficiency, e.g., our method achieves competitive results with 80% less data and 85% less pre-training time compared to the most efficient VLP method so far. The code will be available at https://github.com/showlab/DemoVLP.
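The abstract does not spell out the form of the bidirectional region-word alignment regularization. As a rough illustration of what "bidirectional" matching between regions and words can look like, the sketch below scores a clip-sentence pair by matching every region to its best word and every word to its best region, using cosine similarity. The function names, the max-then-average pooling, and the toy 2-D vectors are assumptions, not the paper's formulation.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def bidirectional_alignment(regions, words):
    """Average of region-to-word and word-to-region best-match similarities."""
    sim = [[cosine(r, w) for w in words] for r in regions]
    # Region -> word: each region picks its most similar word.
    region_to_word = sum(max(row) for row in sim) / len(regions)
    # Word -> region: each word picks its most similar region.
    word_to_region = sum(
        max(sim[i][j] for i in range(len(regions))) for j in range(len(words))
    ) / len(words)
    return 0.5 * (region_to_word + word_to_region)

regions = [[1.0, 0.0], [0.0, 1.0]]          # hypothetical region features
matched_words = [[1.0, 0.0], [0.0, 1.0]]    # words that align with the regions
mismatched_words = [[-1.0, 0.0], [0.0, -1.0]]

score_matched = bidirectional_alignment(regions, matched_words)
score_mismatched = bidirectional_alignment(regions, mismatched_words)
```

A training objective built on such a score would push it up for paired clips and sentences and down for unpaired ones; how the original work does this is not stated in the abstract.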

Unsupervised Adaptive Semantic Segmentation with Local Lipschitz Constraint

May 27, 2021

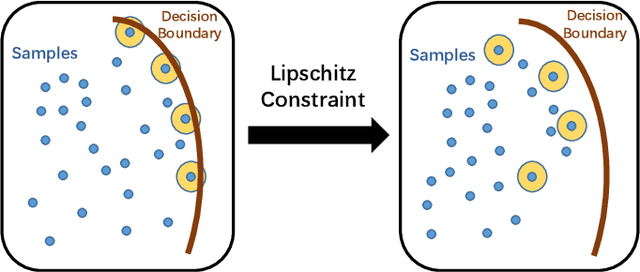

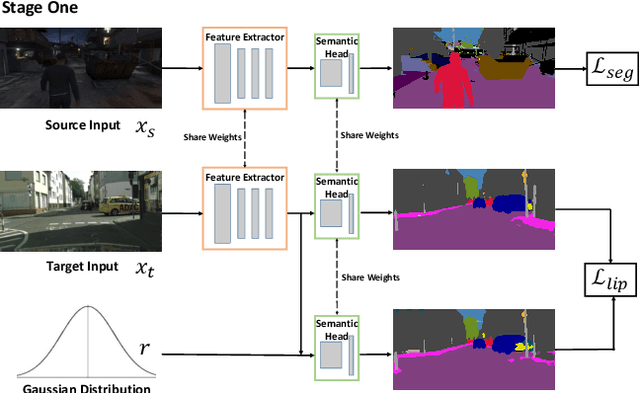

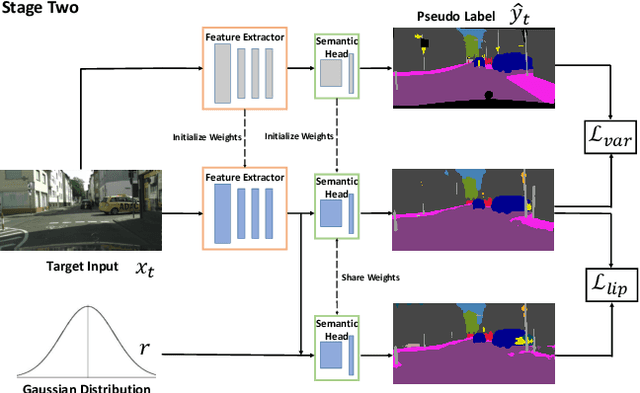

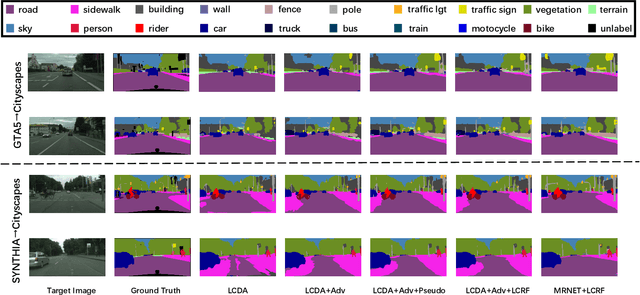

Abstract:Recent advances in unsupervised domain adaptation have brought considerable progress in semantic segmentation. Existing methods either align different domains with adversarial training or use self-learning, which utilizes pseudo labels to conduct supervised training. The former always suffers from the unstable training caused by adversarial training and focuses only on the inter-domain gap, ignoring intra-domain knowledge. The latter tends to put overconfident label predictions on wrong categories, which propagates errors to more samples. To solve these problems, we propose a two-stage adaptive semantic segmentation method based on the local Lipschitz constraint that satisfies both domain alignment and domain-specific exploration under a unified principle. In the first stage, we propose the local Lipschitzness regularization as the objective function to align different domains by exploiting intra-domain knowledge, which explores a promising direction for non-adversarial adaptive semantic segmentation. In the second stage, we use the local Lipschitzness regularization to estimate the probability of satisfying Lipschitzness for each pixel, and then dynamically set the threshold of pseudo labels to conduct self-learning. Such dynamic self-learning effectively avoids the error propagation caused by noisy labels. Optimization in both stages is based on the same principle, i.e., the local Lipschitz constraint, so that the knowledge learned in the first stage can be maintained in the second stage. Further, due to its model-agnostic property, our method can easily adapt to any CNN-based semantic segmentation network. Experimental results demonstrate the excellent performance of our method on standard benchmarks.
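To make the notion of local Lipschitzness concrete, the following sketch estimates how strongly a prediction changes under a small input perturbation and keeps a pseudo label only where that change is small. It is a minimal illustration under stated assumptions: the scalar "pixel feature", the toy classifier `predict`, the finite-difference estimate, and the fixed `max_ratio` cut-off are hypothetical and stand in for the paper's actual regularizer and dynamic threshold.

```python
import math

def predict(x, sharpness=8.0):
    """Toy per-pixel classifier: probability of class 1 for a scalar feature x."""
    return 1.0 / (1.0 + math.exp(-sharpness * (x - 0.5)))

def local_lipschitz_ratio(f, x, eps=1e-2):
    """Largest |f(x + d) - f(x)| / |d| over the two perturbations d = -eps, +eps."""
    base = f(x)
    return max(abs(f(x + d) - base) / abs(d) for d in (-eps, eps))

def select_pseudo_labels(features, f, max_ratio):
    """Keep (index, label) only for pixels whose prediction is locally stable."""
    selected = []
    for idx, x in enumerate(features):
        if local_lipschitz_ratio(f, x) <= max_ratio:
            selected.append((idx, int(f(x) >= 0.5)))
    return selected

features = [-1.0, 0.45, 0.55, 2.0]  # two pixels far from the boundary, two close to it
pseudo_labels = select_pseudo_labels(features, predict, max_ratio=0.5)
# pseudo_labels == [(0, 0), (3, 1)]: the two pixels near the decision boundary are rejected
```

Pixels close to the decision boundary have a large local ratio and are exactly the ones where an overconfident pseudo label is most likely to be wrong, which is why filtering on local stability limits error propagation in this toy setting.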

Part2Whole: Iteratively Enrich Detail for Cross-Modal Retrieval with Partial Query

Mar 02, 2021

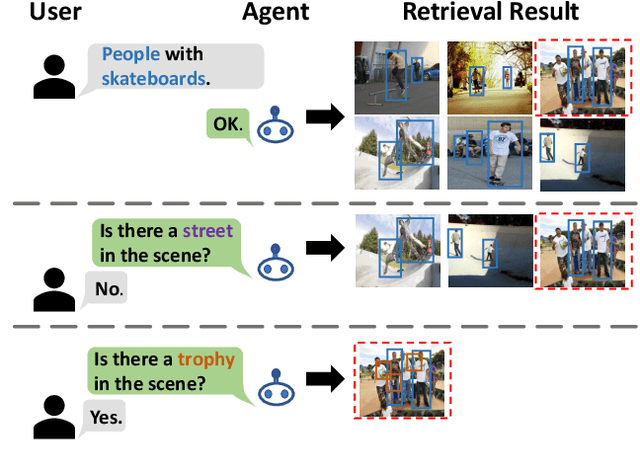

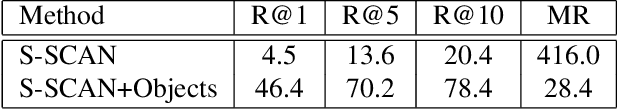

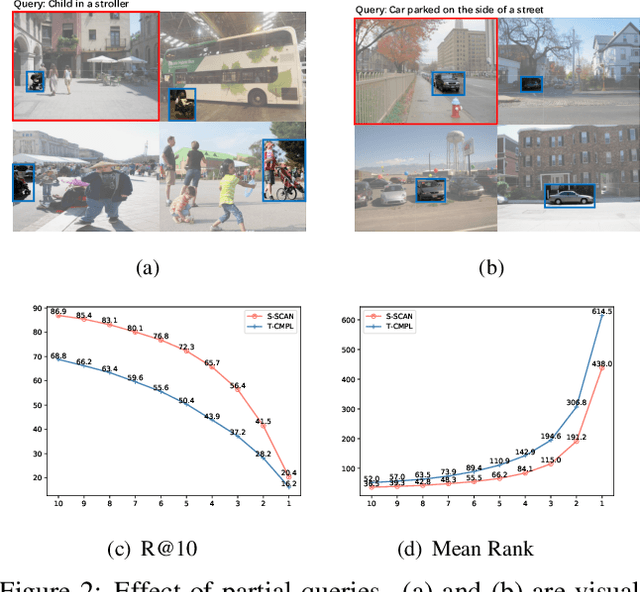

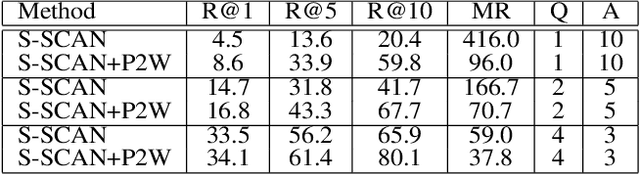

Abstract:Text-based image retrieval has seen considerable progress in recent years. However, the performance of existing methods suffers in real life since the user is likely to provide an incomplete description of a complex scene, which often leads to results filled with false positives that fit the incomplete description. In this work, we introduce the partial-query problem and extensively analyze its influence on text-based image retrieval. We then propose an interactive retrieval framework called Part2Whole to tackle this problem by iteratively enriching the missing details. Specifically, an Interactive Retrieval Agent is trained to build an optimal policy to refine the initial query based on a user-friendly interaction and statistical characteristics of the gallery. Compared to other dialog-based methods that rely heavily on the user to feed back differentiating information, we let AI take over the optimal feedback searching process and hint the user with confirmation-based questions about details. Furthermore, since fully-supervised training is often infeasible due to the difficulty of obtaining human-machine dialog data, we present a weakly-supervised reinforcement learning method that needs no human-annotated data other than the text-image dataset. Experiments show that our framework significantly improves the performance of text-based image retrieval under complex scenes.
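A confirmation-based interaction loop can be sketched with a simple greedy rule. This is an illustrative heuristic only: the abstract describes a learned Interactive Retrieval Agent, whereas the code below picks the attribute whose yes/no answer splits the current candidates most evenly. The gallery, its attribute sets, and the function names are hypothetical.

```python
def best_question(candidates):
    """Pick the attribute whose presence splits the candidates most evenly.

    candidates: non-empty dict mapping image id -> set of attribute strings.
    Ties are broken alphabetically because attributes are scanned in sorted order.
    """
    n = len(candidates)
    attributes = sorted(set().union(*candidates.values()))
    return min(
        attributes,
        key=lambda a: abs(sum(a in attrs for attrs in candidates.values()) - n / 2),
    )

def refine(candidates, attribute, answer_yes):
    """Keep only the candidates consistent with the user's yes/no confirmation."""
    return {
        image_id: attrs
        for image_id, attrs in candidates.items()
        if (attribute in attrs) == answer_yes
    }

gallery = {
    "img1": {"dog", "beach"},
    "img2": {"dog", "park"},
    "img3": {"dog", "park", "ball"},
    "img4": {"dog", "beach", "ball"},
}

# The partial query "dog" matches every image, so the system asks about details.
first_question = best_question(gallery)              # "ball"
remaining = refine(gallery, first_question, True)    # user confirms there is a ball
second_question = best_question(remaining)           # "beach"
final = refine(remaining, second_question, True)     # only img4 is left
```

Each confirmation roughly halves the candidate set here, so the user only answers yes or no instead of composing a more detailed description.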

Segmenting Medical MRI via Recurrent Decoding Cell

Nov 21, 2019

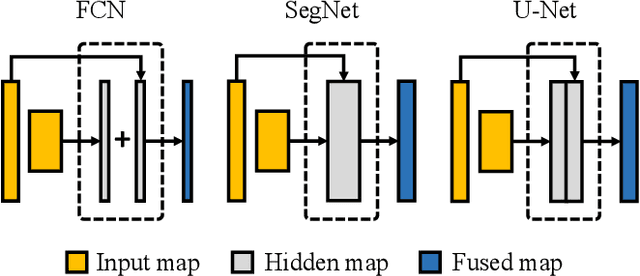

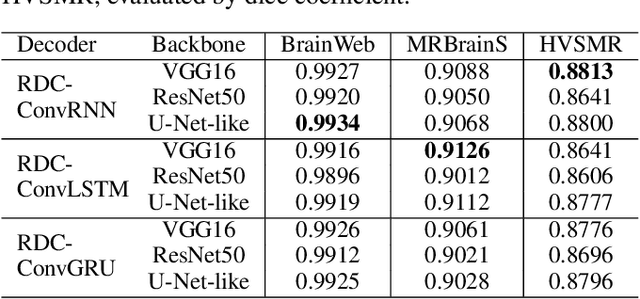

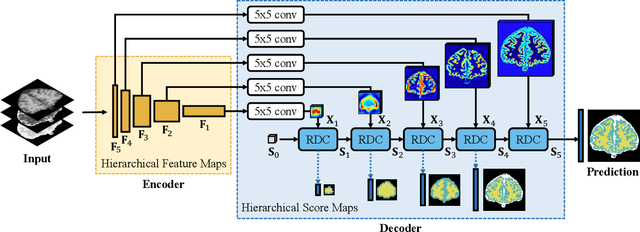

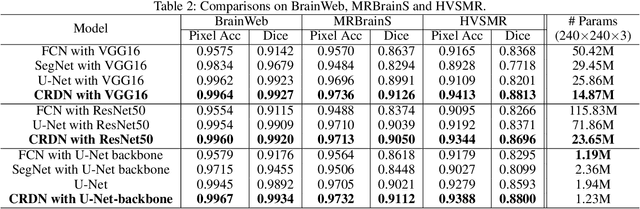

Abstract:Encoder-decoder networks are commonly used in medical image segmentation due to their remarkable performance in hierarchical feature fusion. However, the expanding path for feature decoding and spatial recovery does not consider the long-term dependency when fusing feature maps from different layers, and the universal encoder-decoder network does not make full use of the multi-modality information to improve the network robustness, especially for segmenting medical MRI. In this paper, we propose a novel feature fusion unit called Recurrent Decoding Cell (RDC), which leverages convolutional RNNs to memorize the long-term context information from the previous layers in the decoding phase. An encoder-decoder network, named Convolutional Recurrent Decoding Network (CRDN), is also proposed based on RDC for segmenting multi-modality medical MRI. CRDN adopts a CNN backbone to encode image features and decodes them hierarchically through a chain of RDCs to obtain the final high-resolution score map. The evaluation experiments on the BrainWeb, MRBrainS and HVSMR datasets demonstrate that the introduction of RDC effectively improves the segmentation accuracy and reduces the model size, and that the proposed CRDN is robust to image noise and intensity non-uniformity in medical MRI.

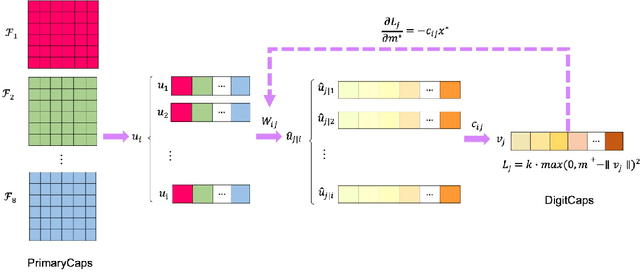

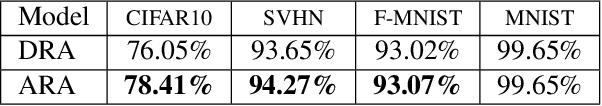

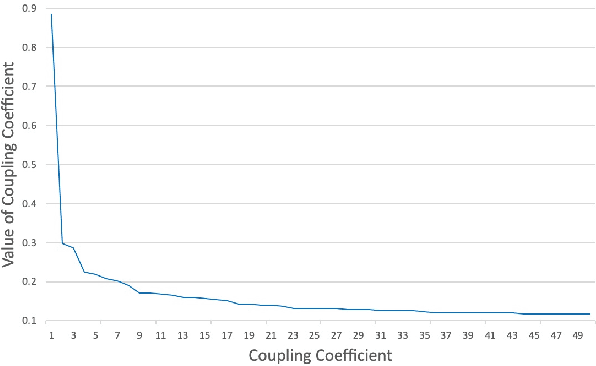

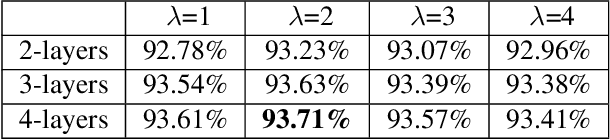

Adaptive Routing Between Capsules

Nov 19, 2019

Abstract:The capsule network is the most recent exciting advancement in the deep learning field and represents positional information by stacking features into vectors. The dynamic routing algorithm is used in the capsule network; however, it has some disadvantages, such as the inability to stack multiple layers and a large amount of computation. In this paper, we propose an adaptive routing algorithm that can solve the problems mentioned above. First, the low-layer capsules adaptively adjust their direction and length in the routing algorithm, which removes the influence of the coupling coefficient on the gradient propagation, so that the network can work when stacked in multiple layers. Then, the iterative process of routing is simplified to reduce the amount of computation, and we introduce the gradient coefficient $\lambda$. Further, we tested the performance of our proposed adaptive routing algorithm on CIFAR10, Fashion-MNIST, SVHN and MNIST, achieving better results than the dynamic routing algorithm.
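For context, the baseline that this abstract contrasts against, dynamic routing with coupling coefficients, can be sketched in a few lines. The code below follows the standard routing-by-agreement procedure (softmax over routing logits, weighted sum, squash, agreement update); it is not the adaptive routing algorithm proposed here, and the toy prediction vectors `u_hat` are made up for illustration.

```python
import math

def squash(s, eps=1e-9):
    """Shrink vector s to length ||s||^2 / (1 + ||s||^2) while keeping its direction."""
    sq = sum(x * x for x in s)
    scale = sq / (1.0 + sq) / (math.sqrt(sq) + eps)
    return [scale * x for x in s]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dynamic_routing(u_hat, iterations=3):
    """u_hat[i][j] is the prediction of input capsule i for output capsule j."""
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits
    v = []
    for _ in range(iterations):
        c = [softmax(row) for row in b]  # coupling coefficients per input capsule
        v = []
        for j in range(n_out):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in)) for d in range(dim)]
            v.append(squash(s_j))
        for i in range(n_in):
            for j in range(n_out):
                # Agreement between prediction and output raises the routing logit.
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v, [softmax(row) for row in b]

# Three input capsules agree on output capsule 0 and disagree on output capsule 1.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
outputs, coupling = dynamic_routing(u_hat)
lengths = [math.sqrt(sum(x * x for x in vec)) for vec in outputs]
```

The inner loop over iterations is the computational cost the abstract refers to, and the coupling coefficients `c` are the quantities whose influence on gradient propagation the adaptive variant is said to remove.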

Learning Smooth Representation for Unsupervised Domain Adaptation

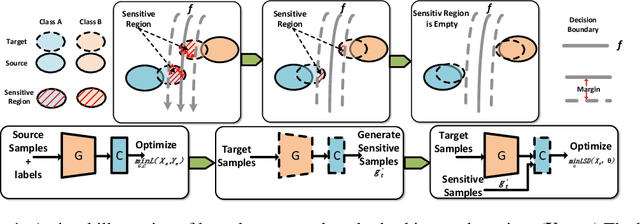

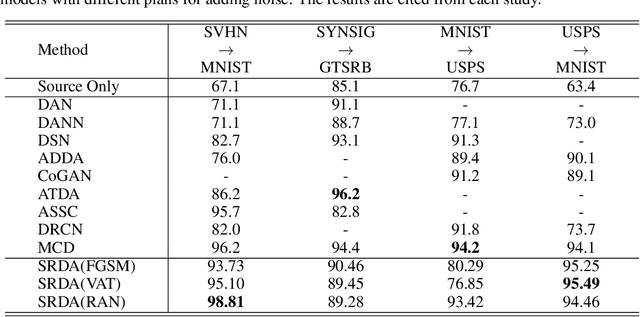

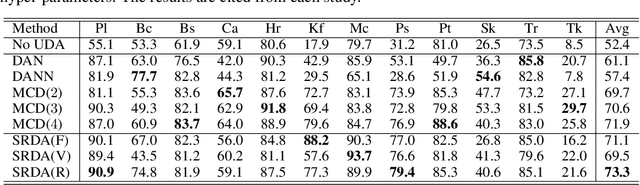

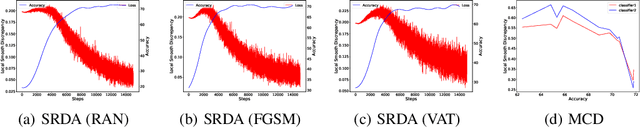

May 26, 2019

Abstract:In unsupervised domain adaptation, existing methods utilizing the decision boundary have achieved remarkable performance, but they lack analysis of the relationship between the decision boundary and features. In our work, we propose a new principle that adaptive classifiers and transferable features can be obtained in the target domain by learning smooth representations. We analyze the relationship between the decision boundary and ambiguous target features in terms of smoothness. Thereafter, local smooth discrepancy is defined to measure the smoothness of a sample and detect sensitive samples, which are easily misclassified. To strengthen the smoothness, sensitive samples are corrected in feature space by optimizing local smooth discrepancy. Moreover, the generalization error upper bound is derived theoretically. Finally, we evaluate our method on several standard benchmark datasets. Empirical evidence shows that the proposed method is comparable or superior to the state-of-the-art methods and that local smooth discrepancy is a valid metric to evaluate the performance of a domain adaptation method.
