Liang Jiang

Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

Oct 21, 2025

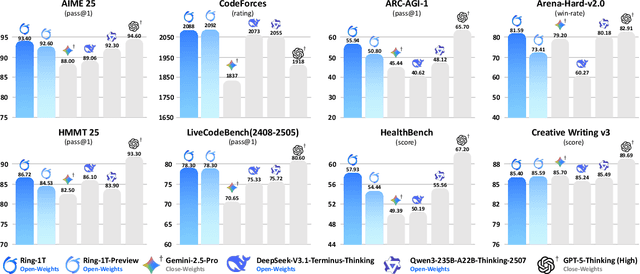

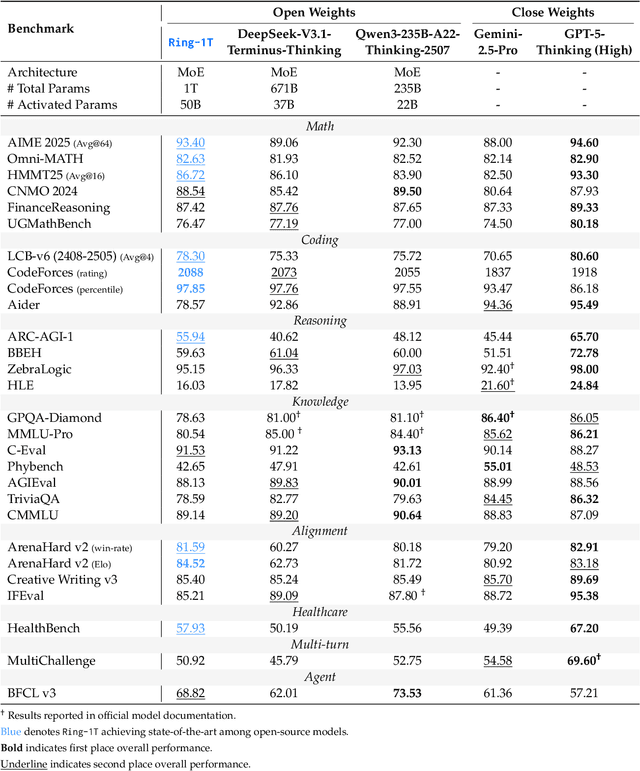

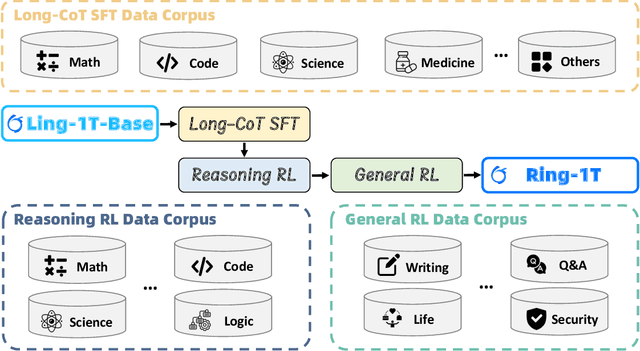

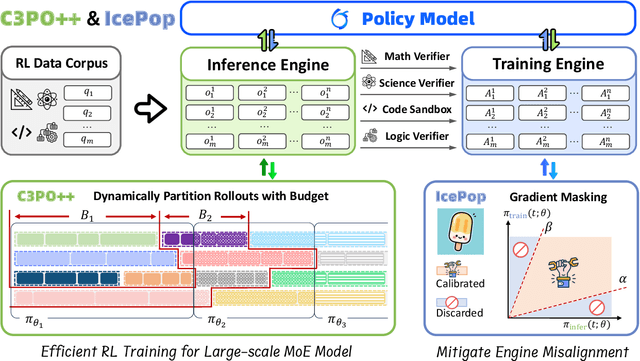

Abstract: We present Ring-1T, the first open-source, state-of-the-art thinking model at the trillion-parameter scale. It has 1 trillion total parameters and activates approximately 50 billion per token. Training models at this scale introduces unprecedented challenges, including train-inference misalignment, inefficiencies in rollout processing, and bottlenecks in the RL system. To address these, we pioneer three interconnected innovations: (1) IcePop stabilizes RL training via token-level discrepancy masking and clipping, resolving instability from training-inference mismatches; (2) C3PO++ improves resource utilization for long rollouts under a token budget by dynamically partitioning them, thereby achieving high time efficiency; and (3) ASystem, a high-performance RL framework designed to overcome the systemic bottlenecks that impede trillion-parameter model training. Ring-1T delivers breakthrough results across critical benchmarks: 93.4 on AIME-2025, 86.72 on HMMT-2025, 2088 on CodeForces, and 55.94 on ARC-AGI-v1. Notably, it attains a silver medal-level result on the IMO-2025, underscoring its exceptional reasoning capabilities. By releasing the complete 1T-parameter MoE model, we give the research community direct access to cutting-edge reasoning capabilities. This contribution marks a significant milestone in democratizing large-scale reasoning intelligence and establishes a new baseline for open-source model performance.
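The abstract describes IcePop only as "token-level discrepancy masking and clipping". As a rough illustration of what token-level discrepancy masking could look like, the sketch below drops tokens whose probability under the training engine differs too much from the probability under the inference engine. The function names, the ratio-based criterion, and the thresholds `low` and `high` are assumptions made for illustration and are not taken from the paper.

```python
import math

def discrepancy_mask(train_logprobs, infer_logprobs, low=0.5, high=2.0):
    """Return 1.0 for tokens whose train/inference probability ratio
    lies in [low, high], and 0.0 otherwise.

    The thresholds are hypothetical; the abstract does not state them.
    """
    mask = []
    for lp_train, lp_infer in zip(train_logprobs, infer_logprobs):
        ratio = math.exp(lp_train - lp_infer)  # p_train / p_infer
        mask.append(1.0 if low <= ratio <= high else 0.0)
    return mask

def masked_mean_loss(token_losses, mask):
    """Average the per-token losses over the tokens that were kept."""
    kept = sum(mask)
    if kept == 0:
        return 0.0  # every token was masked out, so nothing contributes
    return sum(loss * m for loss, m in zip(token_losses, mask)) / kept

# Toy example: the second token has a large train/inference mismatch.
train_lp = [-0.10, -2.30, -0.70]
infer_lp = [-0.12, -0.20, -0.65]
mask = discrepancy_mask(train_lp, infer_lp)
loss = masked_mean_loss([1.0, 5.0, 3.0], mask)
```

In this toy input the second token's ratio is about 0.12, below the assumed lower threshold, so it is excluded and the loss is averaged over the remaining two tokens.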

Ring-lite: Scalable Reasoning via C3PO-Stabilized Reinforcement Learning for LLMs

Jun 18, 2025

Abstract: We present Ring-lite, a Mixture-of-Experts (MoE)-based large language model optimized via reinforcement learning (RL) to achieve efficient and robust reasoning capabilities. Built upon the publicly available Ling-lite model, a 16.8 billion parameter model with 2.75 billion activated parameters, our approach matches the performance of state-of-the-art (SOTA) small-scale reasoning models on challenging benchmarks (e.g., AIME, LiveCodeBench, GPQA-Diamond) while activating only one-third of the parameters required by comparable models. To accomplish this, we introduce a joint training pipeline integrating distillation with RL, revealing undocumented challenges in MoE RL training. First, we identify optimization instability during RL training, and we propose Constrained Contextual Computation Policy Optimization (C3PO), a novel approach that enhances training stability and improves computational throughput via an algorithm-system co-design methodology. Second, we empirically demonstrate that selecting distillation checkpoints for RL training based on entropy loss, rather than validation metrics, yields superior performance-efficiency trade-offs in subsequent RL training. Finally, we develop a two-stage training paradigm to harmonize multi-domain data integration, addressing domain conflicts that arise in training with mixed datasets. We will release the model, dataset, and code.

Holistic Capability Preservation: Towards Compact Yet Comprehensive Reasoning Models

Apr 09, 2025

Abstract: This technical report presents Ring-Lite-Distill, a lightweight reasoning model derived from our open-source Mixture-of-Experts (MoE) Large Language Model (LLM) Ling-Lite. This study demonstrates that through meticulous high-quality data curation and ingenious training paradigms, the compact MoE model Ling-Lite can be further trained to achieve exceptional reasoning capabilities, while maintaining its parameter-efficient architecture with only 2.75 billion activated parameters, establishing an efficient lightweight reasoning architecture. In particular, in constructing this model, we have not merely focused on enhancing advanced reasoning capabilities, exemplified by high-difficulty mathematical problem solving, but rather aimed to develop a reasoning model with more comprehensive competency coverage. Our approach ensures coverage across reasoning tasks of varying difficulty levels while preserving generic capabilities, such as instruction following, tool use, and knowledge retention. We show that Ring-Lite-Distill's reasoning ability reaches a level comparable to DeepSeek-R1-Distill-Qwen-7B, while its general capabilities significantly surpass those of DeepSeek-R1-Distill-Qwen-7B. The models are accessible at https://huggingface.co/inclusionAI

Towards efficient and secure quantum-classical communication networks

Nov 05, 2024

Abstract: The rapid advancement of quantum technologies calls for the design and deployment of quantum-safe cryptographic protocols and communication networks. There are two primary approaches to achieving quantum-resistant security: quantum key distribution (QKD) and post-quantum cryptography (PQC). While each offers unique advantages, both have drawbacks in practical implementation. In this work, we introduce the pros and cons of these protocols and explore how they can be combined to achieve a higher level of security and/or improved performance in key distribution. We hope our discussion inspires further research into the design of hybrid cryptographic protocols for quantum-classical communication networks.

Practical hybrid PQC-QKD protocols with enhanced security and performance

Nov 05, 2024

Abstract: Quantum resistance is vital for emerging cryptographic systems as quantum technologies continue to advance towards large-scale, fault-tolerant quantum computers. Resistance may be offered by quantum key distribution (QKD), which provides information-theoretic security using quantum states of photons, but may be limited by transmission loss at long distances. An alternative approach uses classical means and is conjectured to be resistant to quantum attacks, so-called post-quantum cryptography (PQC), but it is yet to be rigorously proven, and its current implementations are computationally expensive. To overcome the security and performance challenges present in each, here we develop hybrid protocols by which QKD and PQC inter-operate within a joint quantum-classical network. In particular, we consider different hybrid designs that may offer enhanced speed and/or security over the individual performance of either approach. Furthermore, we present a method for analyzing the security of hybrid protocols in key distribution networks. Our hybrid approach paves the way for joint quantum-classical communication networks, which leverage the advantages of both QKD and PQC and can be tailored to the requirements of various practical networks.

Quantum-data-driven dynamical transition in quantum learning

Oct 02, 2024

Abstract: Quantum circuits are an essential ingredient of quantum information processing. Parameterized quantum circuits optimized under a specific cost function -- quantum neural networks (QNNs) -- provide a paradigm for achieving quantum advantage in the near term. Understanding QNN training dynamics is crucial for optimizing their performance. In terms of supervised learning tasks such as classification and regression for large datasets, the role of quantum data in QNN training dynamics remains unclear. We reveal a quantum-data-driven dynamical transition, where the target value and data determine the polynomial or exponential convergence of the training. We analytically derive the complete classification of fixed points from the dynamical equation and reveal a comprehensive "phase diagram" featuring seven distinct dynamics. These dynamics originate from a bifurcation transition with multiple codimensions induced by training data, extending the transcritical bifurcation in simple optimization tasks. Furthermore, perturbative analyses identify an exponential convergence class and a polynomial convergence class among the seven dynamics. We provide a non-perturbative theory to explain the transition via generalized restricted Haar ensemble. The analytical results are confirmed with numerical simulations of QNN training and experimental verification on IBM quantum devices. As the QNN training dynamics is determined by the choice of the target value, our findings provide guidance on constructing the cost function to optimize the speed of convergence.

The curse of random quantum data

Aug 19, 2024

Abstract: Quantum machine learning, which involves running machine learning algorithms on quantum devices, may be one of the most significant flagship applications for these devices. Unlike its classical counterparts, the role of data in quantum machine learning has not been fully understood. In this work, we quantify the performance of quantum machine learning in the landscape of quantum data. Provided that the encoding of quantum data is sufficiently random, we find that the training efficiency and generalization capabilities in quantum machine learning will be exponentially suppressed with the increase in the number of qubits, which we call "the curse of random quantum data". Our findings apply to both the quantum kernel method and the large-width limit of quantum neural networks. Conversely, we highlight that through meticulous design of quantum datasets, it is possible to avoid these curses, thereby achieving efficient convergence and robust generalization. Our conclusions are corroborated by extensive numerical simulations.

Dynamical phase transition in quantum neural networks with large depth

Nov 29, 2023

Abstract: Understanding the training dynamics of quantum neural networks is a fundamental task in quantum information science with wide impact in physics, chemistry and machine learning. In this work, we show that the late-time training dynamics of quantum neural networks can be described by the generalized Lotka-Volterra equations, which lead to a dynamical phase transition. When the targeted value of the cost function crosses the minimum achievable value from above to below, the dynamics evolve from a frozen-kernel phase to a frozen-error phase, showing a duality between the quantum neural tangent kernel and the total error. In both phases, the convergence towards the fixed point is exponential, while at the critical point it becomes polynomial. By mapping the Hessian of the training dynamics to a Hamiltonian in imaginary time, we reveal the nature of the phase transition to be second-order with the exponent $\nu=1$, where scale invariance and a closing gap are observed at the critical point. We also provide a non-perturbative analytical theory to explain the phase transition via a restricted Haar ensemble at late time, when the output state approaches the steady state. The theoretical findings are verified experimentally on IBM quantum devices.

Tight bounds on Pauli channel learning without entanglement

Sep 23, 2023

Abstract: Entanglement is a useful resource for learning, but a precise characterization of its advantage can be challenging. In this work, we consider learning algorithms without entanglement to be those that only utilize separable states, measurements, and operations between the main system of interest and an ancillary system. These algorithms are equivalent to those that apply quantum circuits on the main system interleaved with mid-circuit measurements and classical feedforward. We prove a tight lower bound for learning Pauli channels without entanglement that closes a cubic gap between the best-known upper and lower bound. In particular, we show that $\Theta(2^n\varepsilon^{-2})$ rounds of measurements are required to estimate each eigenvalue of an $n$-qubit Pauli channel to $\varepsilon$ error with high probability when learning without entanglement. In contrast, a learning algorithm with entanglement only needs $\Theta(\varepsilon^{-2})$ rounds of measurements. The tight lower bound strengthens the foundation for an experimental demonstration of entanglement-enhanced advantages for characterizing Pauli noise.
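To give a feel for the gap between the two scalings quoted in the abstract, the sketch below evaluates $2^n\varepsilon^{-2}$ and $\varepsilon^{-2}$ for a chosen qubit count and target error. The hidden constants in the $\Theta(\cdot)$ bounds are set to 1 purely as an assumption, so the outputs indicate orders of magnitude and not actual measurement counts; the function names are illustrative.

```python
def rounds_without_entanglement(n_qubits, epsilon):
    """Scaling 2^n / epsilon^2, with the Theta constant assumed to be 1."""
    return (2 ** n_qubits) / epsilon ** 2

def rounds_with_entanglement(epsilon):
    """Scaling 1 / epsilon^2, with the Theta constant assumed to be 1."""
    return 1 / epsilon ** 2

n_qubits, epsilon = 10, 0.1
without_ent = rounds_without_entanglement(n_qubits, epsilon)
with_ent = rounds_with_entanglement(epsilon)

# Under these assumptions the ratio of the two scalings is 2^n.
advantage = without_ent / with_ent
print(f"without entanglement ~ {without_ent:.3g} rounds")
print(f"with entanglement    ~ {with_ent:.3g} rounds")
print(f"ratio                ~ {advantage:.3g}")
```

The ratio depends only on the number of qubits, which is the sense in which the separation is exponential in $n$.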

Quantum Data Center: Perspectives

Sep 12, 2023

Abstract: A quantum version of data centers might be significant in the quantum era. In this paper, we introduce Quantum Data Center (QDC), a quantum version of existing classical data centers, with a specific emphasis on combining Quantum Random Access Memory (QRAM) and quantum networks. We argue that QDC will provide significant benefits to customers in terms of efficiency, security, and precision, and will be helpful for quantum computing, communication, and sensing. We investigate potential scientific and business opportunities along this novel research direction through hardware realization and possible specific applications. We show the possible impacts of QDCs in business and science, especially the machine learning and big data industries.
