Gaurav S. Sukhatme

On Localizing a Camera from a Single Image

Mar 24, 2020

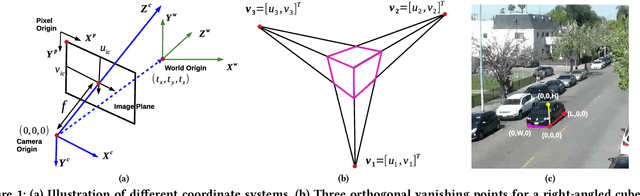

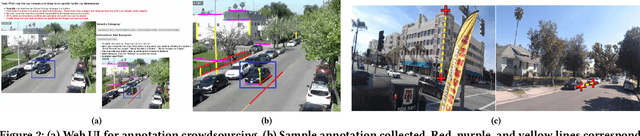

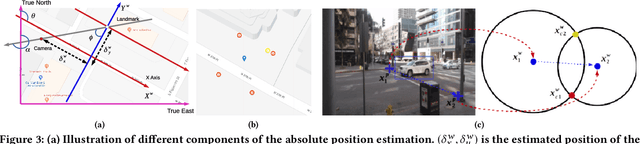

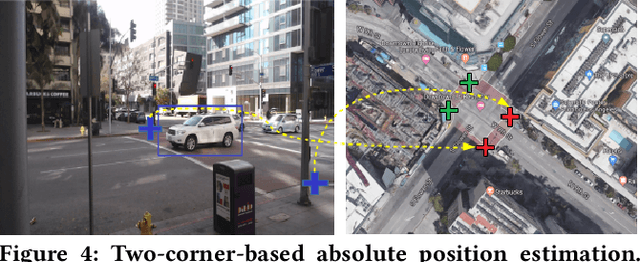

Abstract:Public cameras often have limited metadata describing their attributes. A key missing attribute is the precise location of the camera, which would make it possible to pinpoint the location of events seen by the camera. In this paper, we explore the following question: under what conditions is it possible to estimate the location of a camera from a single image taken by the camera? We show that, using a judicious combination of projective geometry, neural networks, and crowd-sourced annotations from human workers, it is possible to position 95% of the images in our test data set to within 12 m. This performance is two orders of magnitude better than PoseNet, a state-of-the-art neural network that, when trained on a large corpus of images in an area, can estimate the pose of a single image. Finally, we show that the camera's inferred position and intrinsic parameters can help design a number of virtual sensors, all of which are reasonably accurate.

Experimental Comparison of Global Motion Planning Algorithms for Wheeled Mobile Robots

Mar 07, 2020

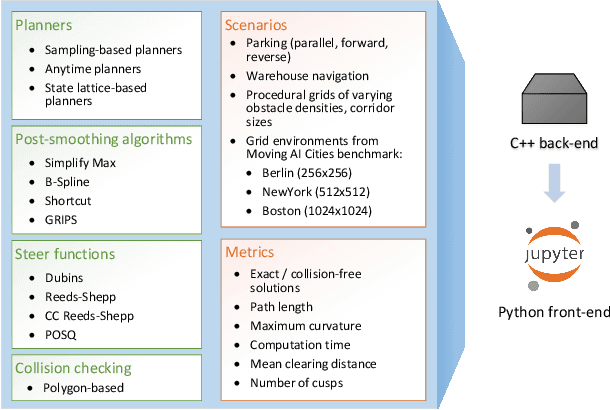

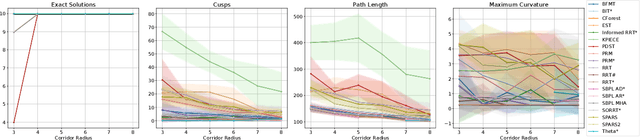

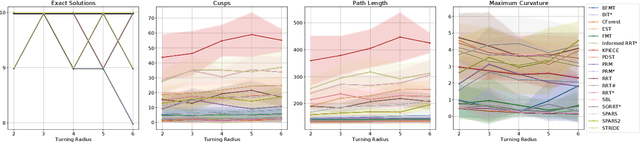

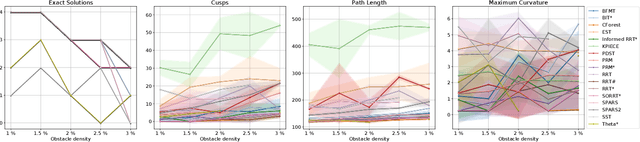

Abstract:Planning smooth and energy-efficient motions for wheeled mobile robots is a central task for applications ranging from autonomous driving to service and intralogistic robotics. Over the past decades, a wide variety of motion planners, steer functions and path-improvement techniques have been proposed for such non-holonomic systems. With the objective of comparing this large assortment of state-of-the-art motion-planning techniques, we introduce a novel open-source motion-planning benchmark for wheeled mobile robots, whose scenarios resemble real-world applications (such as navigating warehouses, moving in cluttered cities or parking), and propose metrics for planning efficiency and path quality. Our benchmark is easy to use and extend, and thus allows practitioners and researchers to evaluate new motion-planning algorithms, scenarios and metrics easily. We use our benchmark to highlight the strengths and weaknesses of several common state-of-the-art motion planners and provide recommendations on when they should be used.

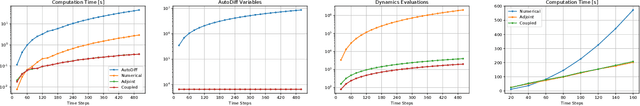

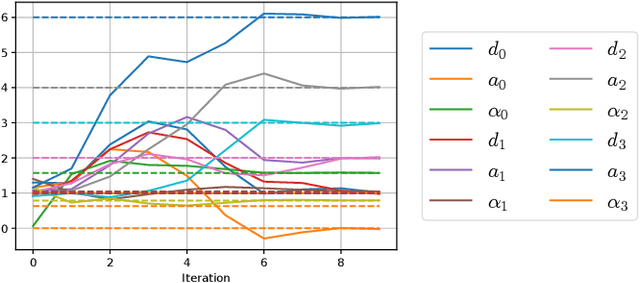

Automatic Differentiation and Continuous Sensitivity Analysis of Rigid Body Dynamics

Jan 22, 2020

Abstract:A key ingredient to achieving intelligent behavior is physical understanding that equips robots with the ability to reason about the effects of their actions in a dynamic environment. Several methods have been proposed to learn dynamics models from data that inform model-based control algorithms. While such learning-based approaches can model locally observed behaviors, they fail to generalize to more complex dynamics and under long time horizons. In this work, we introduce a differentiable physics simulator for rigid body dynamics. Leveraging various techniques for differential equation integration and gradient calculation, we compare different methods for parameter estimation that allow us to infer the simulation parameters that are relevant to estimation and control of physical systems. In the context of trajectory optimization, we introduce a closed-loop model-predictive control algorithm that infers the simulation parameters through experience while achieving cost-minimizing performance.
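To make the idea of inferring simulation parameters by differentiating through a simulator concrete, here is a minimal sketch. It is not the simulator from the paper: it assumes a toy one-dimensional damped spring instead of rigid-body dynamics, a semi-implicit Euler integrator, and hand-derived forward sensitivities in place of automatic differentiation. All names (`simulate`, `loss_and_grad`, `estimate_stiffness`) and all constants are hypothetical, chosen only for illustration.

```python
def simulate(k, c=0.3, x0=1.0, v0=0.0, dt=0.01, steps=200):
    """Semi-implicit Euler for x'' = -k*x - c*x', tracking dx/dk alongside x.

    Returns (positions, sensitivities), one entry per time step.
    """
    x, v = x0, v0
    dx, dv = 0.0, 0.0  # derivatives of x and v with respect to k
    xs, dxs = [], []
    for _ in range(steps):
        # Differentiate the velocity update w.r.t. k (uses the old x, dx, dv).
        dv = dv + dt * (-x - k * dx - c * dv)
        v = v + dt * (-k * x - c * v)
        # Position update uses the new velocity, and so does its derivative.
        dx = dx + dt * dv
        x = x + dt * v
        xs.append(x)
        dxs.append(dx)
    return xs, dxs


def loss_and_grad(k, observed):
    """Mean squared position error and its exact derivative w.r.t. k."""
    xs, dxs = simulate(k, steps=len(observed))
    n = len(observed)
    loss = sum((x - y) ** 2 for x, y in zip(xs, observed)) / n
    grad = sum(2.0 * (x - y) * dx for x, y, dx in zip(xs, observed, dxs)) / n
    return loss, grad


def estimate_stiffness(observed, k_init=2.0, lr=2.0, iters=500):
    """Plain gradient descent on the stiffness parameter k."""
    k = k_init
    for _ in range(iters):
        _, grad = loss_and_grad(k, observed)
        k -= lr * grad
    return k


# Synthetic "measurements" generated with a known stiffness (assumed value).
true_k = 4.0
observed, _ = simulate(true_k)
k_est = estimate_stiffness(observed)
print(round(k_est, 3))
```

The horizon is deliberately short (2 s of simulated time). Over long horizons the loss of an oscillatory system tends to develop local minima, which is one reason the choice of integration and gradient method matters in practice.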

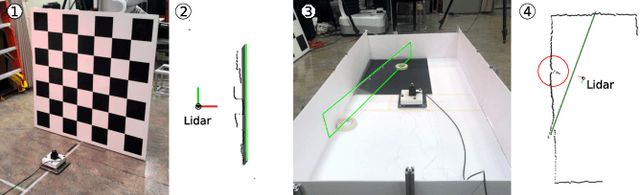

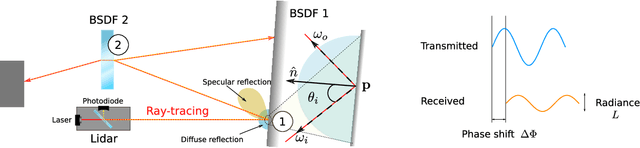

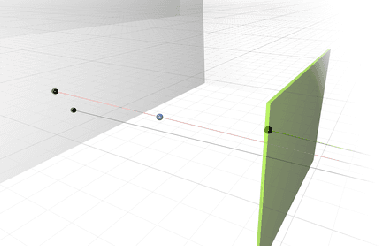

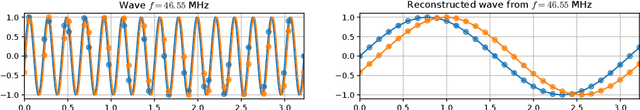

Physics-based Simulation of Continuous-Wave LIDAR for Localization, Calibration and Tracking

Dec 03, 2019

Abstract:Light Detection and Ranging (LIDAR) sensors play an important role in the perception stack of autonomous robots, supplying mapping and localization pipelines with depth measurements of the environment. While their accuracy outperforms other types of depth sensors, such as stereo or time-of-flight cameras, the accurate modeling of LIDAR sensors requires laborious manual calibration that typically does not take into account the interaction of laser light with different surface types, incidence angles and other phenomena that significantly influence measurements. In this work, we introduce a physically plausible model of a 2D continuous-wave LIDAR that accounts for the surface-light interactions and simulates the measurement process in the Hokuyo URG-04LX LIDAR. Through automatic differentiation, we employ gradient-based optimization to estimate model parameters from real sensor measurements.

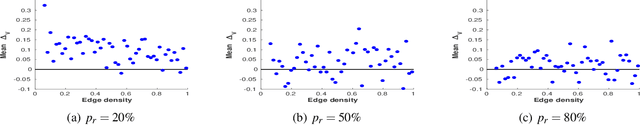

Resilient Coverage: Exploring the Local-to-Global Trade-off

Oct 03, 2019

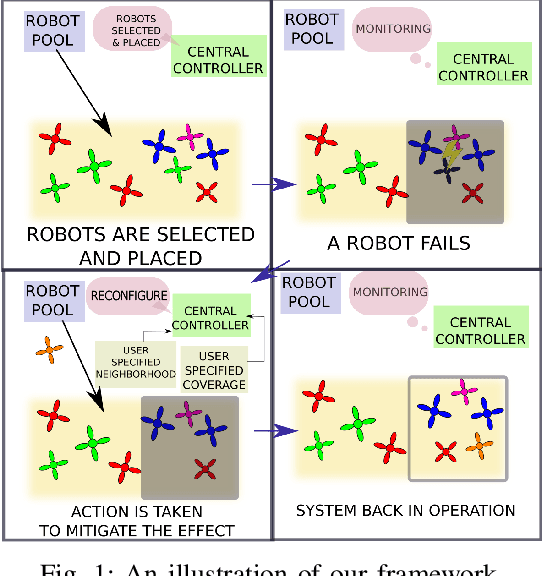

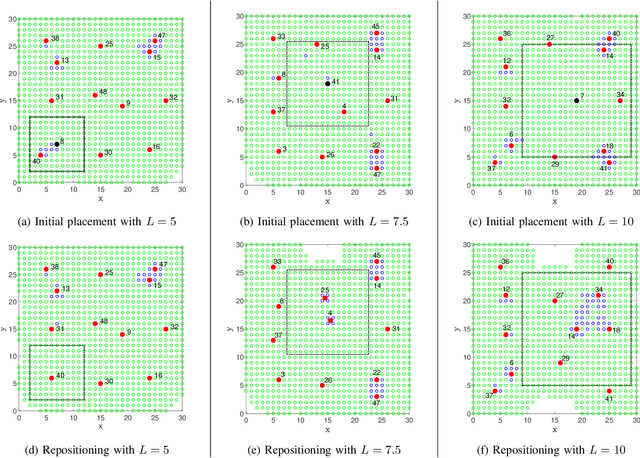

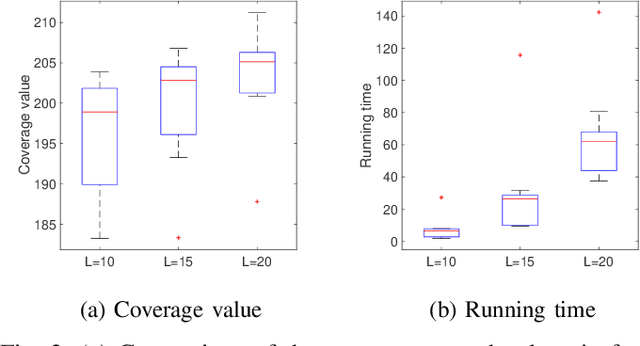

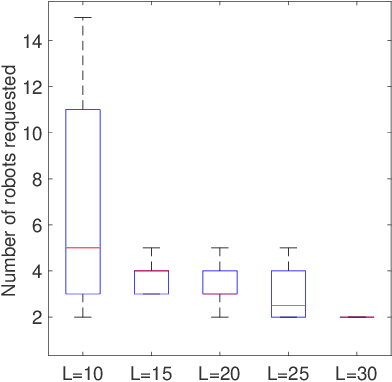

Abstract:We propose a centralized control framework to select suitable robots from a heterogeneous pool and place them at appropriate locations to monitor a region for events of interest. In the event of a robot failure, the framework repositions robots in a user-defined local neighborhood of the failed robot to compensate for the coverage loss. The central controller augments the team with additional robots from the robot pool when simply repositioning robots fails to attain a user-specified level of desired coverage. The size of the local neighborhood around the failed robot and the desired coverage over the region are two settings that can be manipulated to achieve a user-specified balance. We investigate the trade-off between the coverage compensation achieved through local repositioning and the computation required to plan the new robot locations. We also study the relationship between the size of the local neighborhood and the number of additional robots added to the team for a given user-specified level of desired coverage. The computational complexity of our resilient strategy (tunable resilient coordination) is quadratic in both neighborhood size and number of robots in the team. At first glance, it seems that any desired level of coverage can be efficiently achieved by augmenting the robot team with more robots while keeping the neighborhood size fixed. However, we show that to reach a high level of coverage in a neighborhood with a large robot population, it is more efficient to enlarge the neighborhood size than to add robots and reposition them.

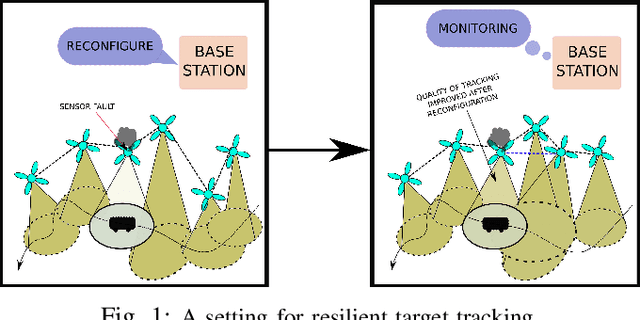

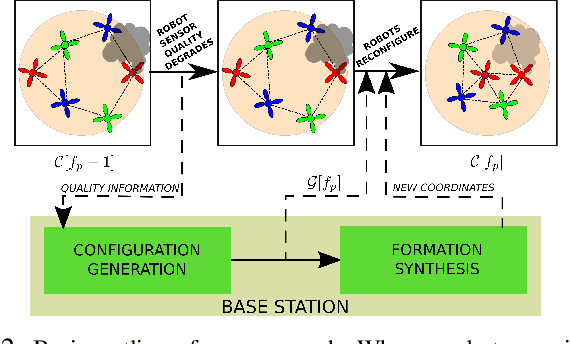

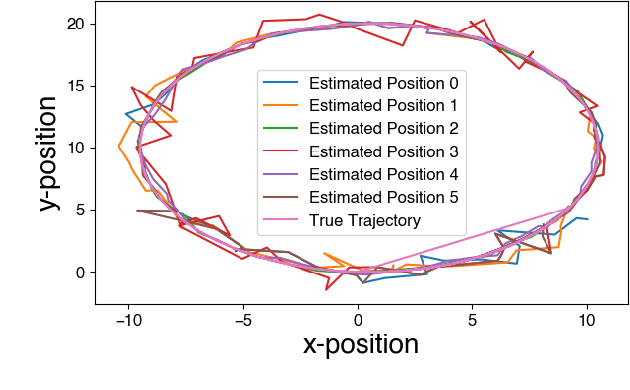

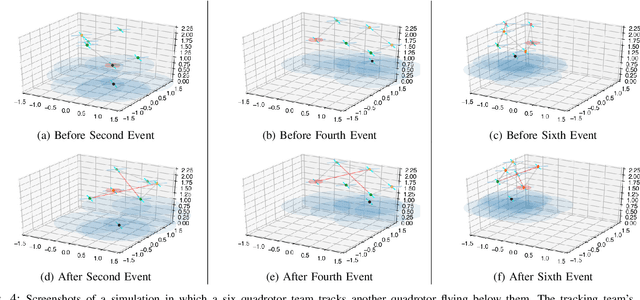

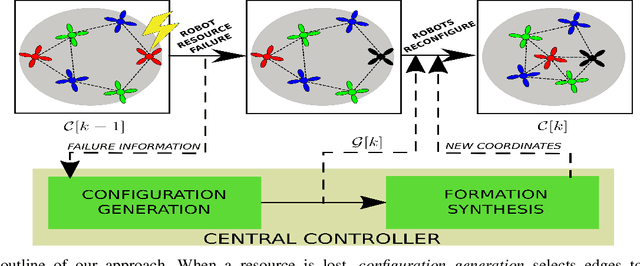

Resilience in multi-robot target tracking through reconfiguration

Oct 03, 2019

Abstract:We address the problem of maintaining resource availability in a networked multi-robot system performing distributed target tracking. In our model, robots are equipped with sensing and computational resources enabling them to track a target's position using a Distributed Kalman Filter (DKF). We use the trace of each robot's sensor measurement noise covariance matrix as a measure of sensing quality. When a robot's sensing quality deteriorates, the system's communication graph is modified by adding edges such that the robot with deteriorating sensor quality may share information with other robots to improve the team's target tracking ability. This computation is performed centrally and is designed to work without a large change in the number of active communication links. We propose two mixed integer semi-definite programming formulations (an 'agent-centric' strategy and a 'team-centric' strategy) to achieve this goal. We implement both formulations and a greedy strategy in simulation and show that the team-centric strategy outperforms the agent-centric and greedy strategies.
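The sensing-quality measure described above (the trace of a robot's measurement noise covariance) can be illustrated with a short sketch. This is not either of the mixed integer semi-definite programming formulations from the paper, and it is not claimed to be the paper's greedy baseline. It assumes a much simpler illustrative rule: link the degraded robot to the lowest-trace robot it is not yet connected to. The function names, robot ids, and covariance values are all hypothetical.

```python
def trace(matrix):
    """Sum of the diagonal entries of a square matrix given as nested lists."""
    return sum(matrix[i][i] for i in range(len(matrix)))


def add_edge_for_degraded_robot(edges, noise_cov, degraded):
    """Connect `degraded` to the best-sensing robot it is not yet linked to.

    edges     : set of frozenset({a, b}) undirected communication links
    noise_cov : dict robot_id -> measurement noise covariance (nested lists)
    Returns (new edge set, chosen helper id), or (copy of edges, None)
    when every other robot is already linked to `degraded`.
    """
    candidates = [
        r for r in noise_cov
        if r != degraded and frozenset((r, degraded)) not in edges
    ]
    if not candidates:
        return set(edges), None
    # A smaller trace means less measurement noise, i.e. better sensing.
    helper = min(candidates, key=lambda r: trace(noise_cov[r]))
    return set(edges) | {frozenset((helper, degraded))}, helper


# Illustrative values only: r3 has a badly degraded sensor.
noise_cov = {
    "r1": [[0.1, 0.0], [0.0, 0.1]],
    "r2": [[0.5, 0.0], [0.0, 0.4]],
    "r3": [[4.0, 0.0], [0.0, 3.0]],
}
edges = {frozenset(("r2", "r3"))}
new_edges, helper = add_edge_for_degraded_robot(edges, noise_cov, "r3")
print(helper, len(new_edges))
```

Adding a single edge per failure keeps the change in the number of active links small, which matches the stated design goal. An optimization-based formulation would instead weigh all candidate edges jointly.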

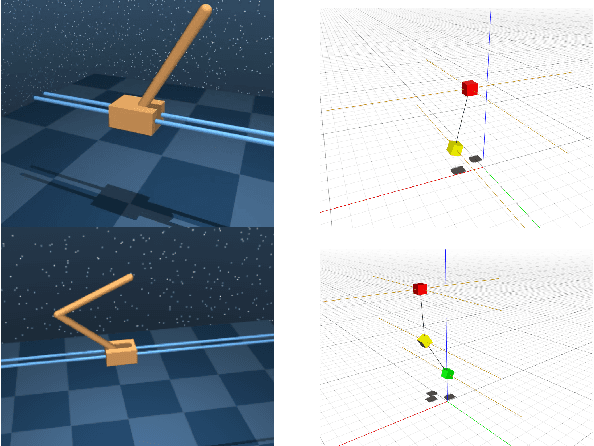

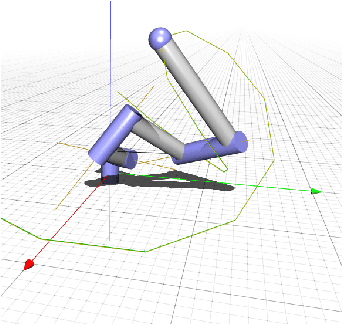

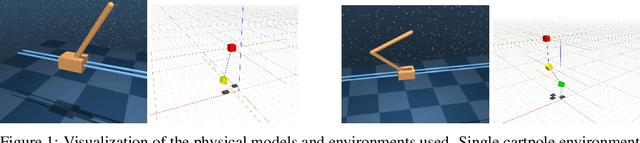

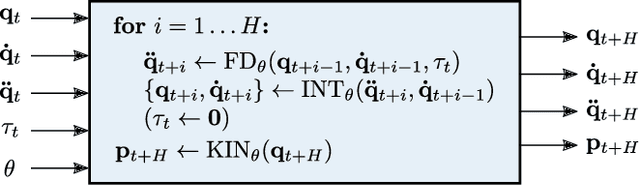

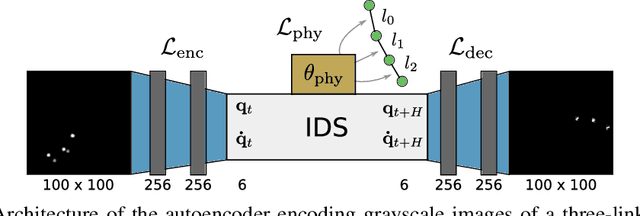

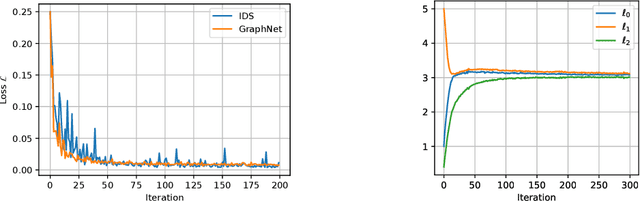

Interactive Differentiable Simulation

May 26, 2019

Abstract:Intelligent agents need a physical understanding of the world to predict the impact of their actions in the future. While learning-based models of the environment dynamics have contributed to significant improvements in sample efficiency compared to model-free reinforcement learning algorithms, they typically fail to generalize to system states beyond the training data, while often grounding their predictions on non-interpretable latent variables. We introduce Interactive Differentiable Simulation (IDS), a differentiable physics engine, that allows for efficient, accurate inference of physical properties of rigid-body systems. Integrated into deep learning architectures, our model is able to accomplish system identification using visual input, leading to an interpretable model of the world whose parameters have physical meaning. We present experiments showing automatic task-based robot design and parameter estimation for nonlinear dynamical systems by automatically calculating gradients in IDS. When integrated into an adaptive model-predictive control algorithm, our approach exhibits orders of magnitude improvements in sample efficiency over model-free reinforcement learning algorithms on challenging nonlinear control domains.

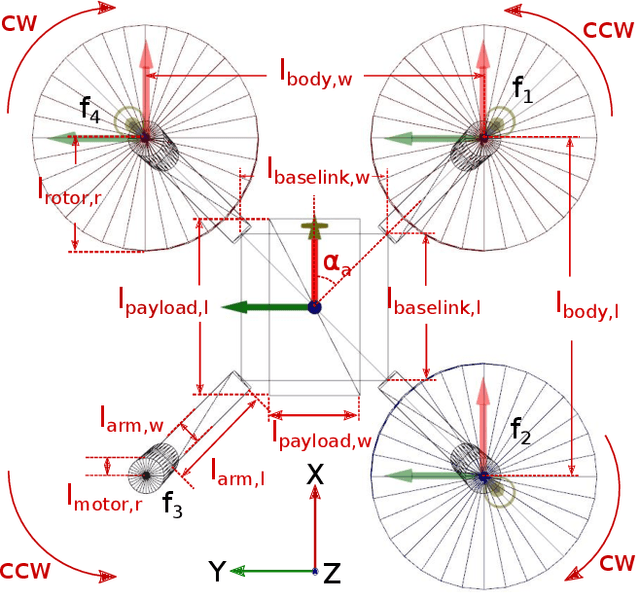

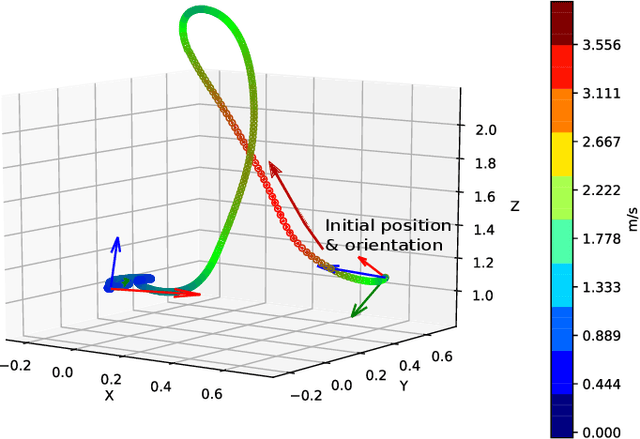

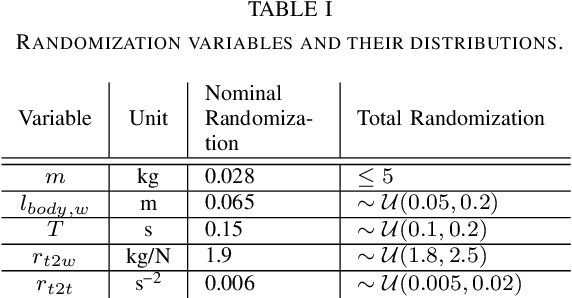

Sim-to-(Multi)-Real: Transfer of Low-Level Robust Control Policies to Multiple Quadrotors

Apr 16, 2019

Abstract:Quadrotor stabilizing controllers often require careful, model-specific tuning for safe operation. We use reinforcement learning to train policies in simulation that transfer remarkably well to multiple different physical quadrotors. Our policies are low-level, i.e., we map the rotorcrafts' state directly to the motor outputs. The trained control policies are very robust to external disturbances and can withstand harsh initial conditions such as throws. We show how different training methodologies (change of the cost function, modeling of noise, use of domain randomization) might affect flight performance. To the best of our knowledge, this is the first work that demonstrates that a simple neural network can learn a robust stabilizing low-level quadrotor controller (without the use of a stabilizing PD controller) that is shown to generalize to multiple quadrotors.

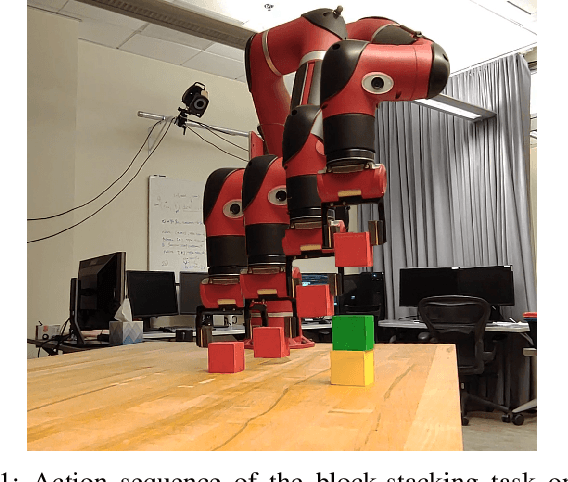

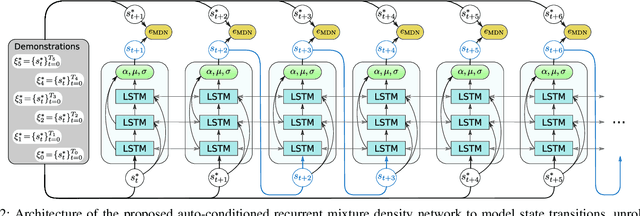

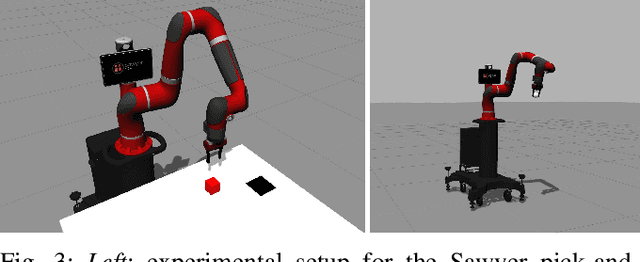

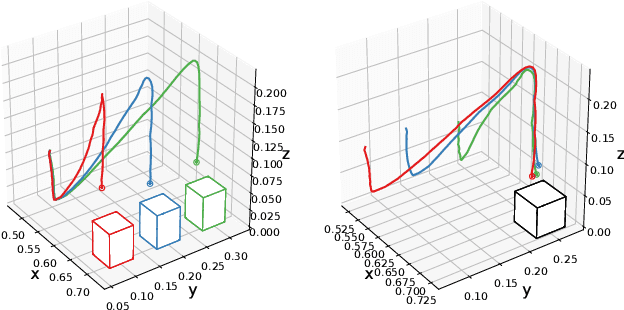

Auto-conditioned Recurrent Mixture Density Networks for Learning Generalizable Robot Skills

Mar 20, 2019

Abstract:Personal robots assisting humans must perform complex manipulation tasks that are typically difficult to specify in traditional motion planning pipelines, where multiple objectives must be met and the high-level context must be taken into consideration. Learning from demonstration (LfD) provides a promising way to learn these kinds of complex manipulation skills even from non-technical users. However, it is challenging for existing LfD methods to efficiently learn skills that can generalize to task specifications that are not covered by demonstrations. In this paper, we introduce a state transition model (STM) that generates joint-space trajectories by imitating motions from expert behavior. Given a few demonstrations, we show in real robot experiments that the learned STM can quickly generalize to unseen tasks and synthesize motions having longer time horizons than the expert trajectories. Compared to conventional motion planners, our approach enables the robot to accomplish complex behaviors from high-level instructions without laborious hand-engineering of planning objectives, while being able to adapt to changing goals during the skill execution. In conjunction with a trajectory optimizer, our STM can construct a high-quality skeleton of a trajectory that can be further improved in smoothness and precision. In combination with a learned inverse dynamics model, we additionally present results where the STM is used as a high-level planner. A video of our experiments is available at https://youtu.be/85DX9Ojq-90

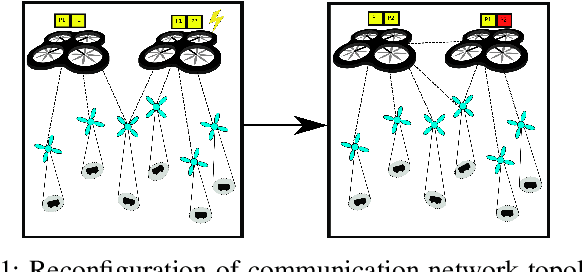

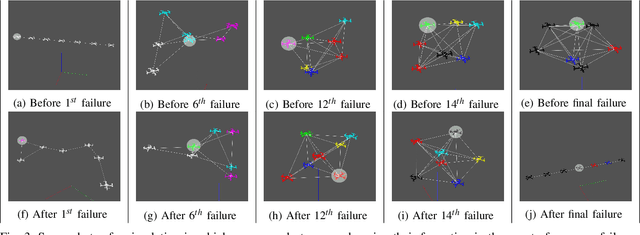

Resilience by Reconfiguration: Exploiting Heterogeneity in Robot Teams

Mar 12, 2019

Abstract:We propose a method to maintain high resource availability in a networked heterogeneous multi-robot system subject to resource failures. In our model, resources such as sensing and computation are available on robots. The robots are engaged in a joint task using these pooled resources. In our model, when a resource on a particular robot becomes unavailable (e.g., a sensor ceases to function due to a failure), the system reconfigures so that the robot continues to have access to this resource by communicating with other robots. Specifically, we consider the problem of selecting edges to be modified in the system's communication graph after a resource failure has occurred. We define a metric that allows us to characterize the quality of the resource distribution in the network represented by the communication graph. Upon a resource becoming unavailable due to failure, we reconfigure the network so that the resource distribution is brought as close to the ideal resource distribution as possible without a big change in the communication cost. Our approach uses integer semi-definite programming to achieve this goal. We also provide a simulated annealing method to compute a formation that satisfies the inter-robot distances imposed by the topology, along with other constraints. Our method can compute a communication topology, spatial formation, and formation change motion planning in a few seconds. We validate our method in simulation and real-robot experiments with a team of seven quadrotors.
