Fanze Kong

LiDAR-based Quadrotor Autonomous Inspection System in Cluttered Environments

Mar 29, 2025

Abstract:In recent years, autonomous unmanned aerial vehicle (UAV) technology has seen rapid advancements, significantly improving operational efficiency and mitigating risks associated with manual tasks in domains such as industrial inspection, agricultural monitoring, and search-and-rescue missions. Despite these developments, existing UAV inspection systems encounter two critical challenges: limited reliability in complex, unstructured, and GNSS-denied environments, and a pronounced dependency on skilled operators. To overcome these limitations, this study presents a LiDAR-based UAV inspection system employing a dual-phase workflow: human-in-the-loop inspection and autonomous inspection. During the human-in-the-loop phase, untrained pilots are supported by autonomous obstacle avoidance, enabling them to generate 3D maps, specify inspection points, and schedule tasks. Inspection points are then optimized using the Traveling Salesman Problem (TSP) to create efficient task sequences. In the autonomous phase, the quadrotor autonomously executes the planned tasks, ensuring safe and efficient data acquisition. Comprehensive field experiments conducted in various environments, including slopes, landslides, agricultural fields, factories, and forests, confirm the system's reliability and flexibility. Results reveal significant enhancements in inspection efficiency, with autonomous operations reducing trajectory length by up to 40% and flight time by 57% compared to human-in-the-loop operations. These findings underscore the potential of the proposed system to enhance UAV-based inspections in safety-critical and resource-constrained scenarios.
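The abstract does not say which TSP solver the system uses. As a rough illustration only, the sketch below orders a handful of 3D inspection points with a nearest-neighbor tour refined by 2-opt, a common heuristic that is assumed here and not taken from the paper. The function names (`plan_inspection_order`, `tour_length`, and so on) and the open-path formulation starting from a fixed take-off point are likewise hypothetical.

```python
import math

def tour_length(points, order):
    """Length of the open path that visits the points in the given order."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(order, order[1:]))

def nearest_neighbor_order(points, start=0):
    """Greedy initial tour: always fly to the closest unvisited point."""
    unvisited = set(range(len(points))) - {start}
    order = [start]
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda i: math.dist(last, points[i]))
        unvisited.remove(nxt)
        order.append(nxt)
    return order

def two_opt(points, order):
    """Reverse tour segments for as long as doing so shortens the path."""
    best = list(order)
    improved = True
    while improved:
        improved = False
        # Index 0 (the start point) is never moved.
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if tour_length(points, candidate) < tour_length(points, best) - 1e-12:
                    best, improved = candidate, True
    return best

def plan_inspection_order(points, start=0):
    """Hypothetical helper: heuristic visiting order for inspection points."""
    return two_opt(points, nearest_neighbor_order(points, start))

if __name__ == "__main__":
    # Illustrative inspection points (metres); index 0 is the take-off point.
    inspection_points = [(0, 0, 2), (12, 3, 4), (1, 8, 2), (11, 9, 5), (5, 5, 3)]
    order = plan_inspection_order(inspection_points)
    print(order, round(tour_length(inspection_points, order), 2))
```

A heuristic like this gives no optimality guarantee, but for the small number of points a pilot typically marks by hand it is usually close to the best order and runs instantly.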

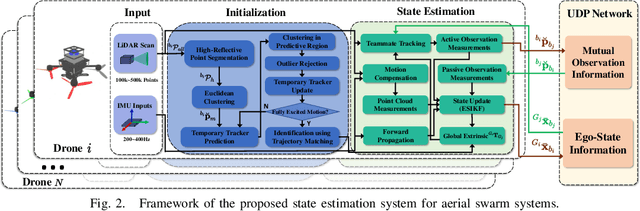

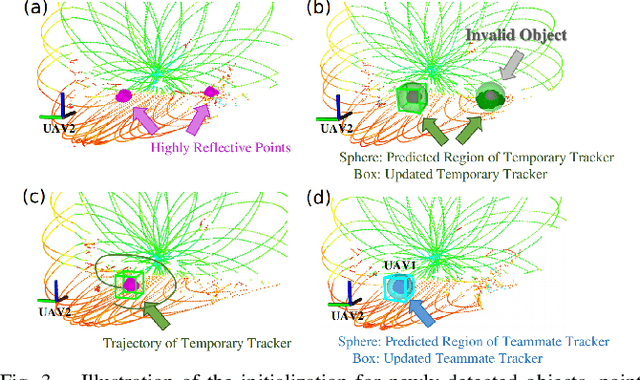

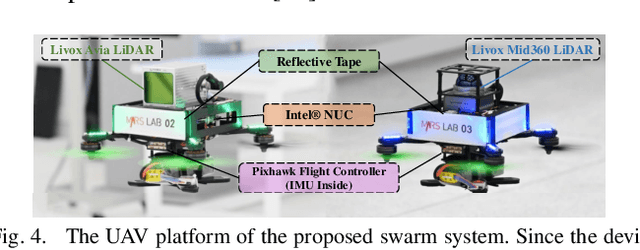

Swarm-LIO2: Decentralized, Efficient LiDAR-inertial Odometry for UAV Swarms

Sep 26, 2024

Abstract:Aerial swarm systems possess immense potential in various aspects, such as cooperative exploration, target tracking, search and rescue. Efficient, accurate self and mutual state estimation are the critical preconditions for completing these swarm tasks, which remain challenging research topics. This paper proposes Swarm-LIO2: a fully decentralized, plug-and-play, computationally efficient, and bandwidth-efficient LiDAR-inertial odometry for aerial swarm systems. Swarm-LIO2 uses a decentralized, plug-and-play network as the communication infrastructure. Only bandwidth-efficient and low-dimensional information is exchanged, including identity, ego-state, mutual observation measurements, and global extrinsic transformations. To support the plug-and-play of new teammate participants, Swarm-LIO2 detects potential teammate UAVs and initializes the temporal offset and global extrinsic transformation all automatically. To enhance the initialization efficiency, novel reflectivity-based UAV detection, trajectory matching, and factor graph optimization methods are proposed. For state estimation, Swarm-LIO2 fuses LiDAR, IMU, and mutual observation measurements within an efficient ESIKF framework, with careful compensation of temporal delay and modeling of measurements to enhance the accuracy and consistency.

Swashplateless-elevon Actuation for a Dual-rotor Tail-sitter VTOL UAV

Sep 24, 2023

Abstract:In this paper, we propose a novel swashplateless-elevon actuation (SEA) for dual-rotor tail-sitter vertical takeoff and landing (VTOL) unmanned aerial vehicles (UAVs). In contrast to the conventional elevon actuation (CEA), which controls both pitch and yaw using elevons, the SEA adopts swashplateless mechanisms to generate an extra moment through motor speed modulation to control pitch and uses elevons solely for controlling yaw, without requiring additional actuators. This decoupled control strategy mitigates the saturation of the elevons' deflection under large pitch and yaw control actions, thus improving the UAV's trajectory tracking and disturbance rejection performance in the presence of large external disturbances. Furthermore, the SEA overcomes the actuation degradation issues experienced by the CEA when the UAV is in close proximity to the ground, leading to a smoother and more stable take-off process. We validate and compare the performance of the SEA and the CEA in various real-world flight conditions, including take-off, trajectory tracking, and hover flight and position steps under external disturbance. Experimental results demonstrate that the SEA outperforms the CEA. Moreover, we verify the SEA's feasibility in the attitude transition process and fixed-wing-mode flight of the VTOL UAV. The results indicate that the SEA can accurately control pitch in the presence of high-speed incoming airflow and maintain a stable attitude during fixed-wing-mode flight. Video of all experiments can be found at youtube.com/watch?v=Sx9Rk4Zf7sQ

Joint Intrinsic and Extrinsic LiDAR-Camera Calibration in Targetless Environments Using Plane-Constrained Bundle Adjustment

Aug 24, 2023

Abstract:This paper introduces a novel targetless method for joint intrinsic and extrinsic calibration of LiDAR-camera systems using plane-constrained bundle adjustment (BA). Our method leverages LiDAR point cloud measurements from planes in the scene, alongside visual points derived from those planes. The core novelty of our method lies in the integration of visual BA with the registration between visual points and LiDAR point cloud planes, which is formulated as a unified optimization problem. This formulation achieves concurrent intrinsic and extrinsic calibration, while also imparting depth constraints to the visual points to enhance the accuracy of intrinsic calibration. Experiments are conducted on both public data sequences and a self-collected dataset. The results show that our approach not only surpasses other state-of-the-art (SOTA) methods but also maintains remarkable calibration accuracy even in challenging environments. For the benefit of the robotics community, we have open-sourced our code.

Occupancy Grid Mapping without Ray-Casting for High-resolution LiDAR Sensors

Jul 17, 2023

Abstract:Occupancy mapping is a fundamental component of robotic systems to reason about the unknown and known regions of the environment. This article presents an efficient occupancy mapping framework for high-resolution LiDAR sensors, termed D-Map. The framework introduces three main novelties to address the computational efficiency challenges of occupancy mapping. Firstly, we use a depth image to determine the occupancy state of regions instead of the traditional ray-casting method. Secondly, we introduce an efficient on-tree update strategy on a tree-based map structure. These two techniques avoid redundant visits to small cells, significantly reducing the number of cells to be updated. Thirdly, we remove known cells from the map at each update by leveraging the low false alarm rate of LiDAR sensors. This approach not only enhances our framework's update efficiency by reducing map size but also endows it with an interesting decremental property, after which D-Map is named. To support our design, we provide theoretical analyses of the accuracy of the depth image projection and the time complexity of occupancy updates. Furthermore, we conduct extensive benchmark experiments on various LiDAR sensors in both public and private datasets. Our framework demonstrates superior efficiency in comparison with other state-of-the-art methods while maintaining comparable mapping accuracy and high memory efficiency. We demonstrate two real-world applications of D-Map for real-time occupancy mapping on a handheld device and an aerial platform carrying a high-resolution LiDAR. In addition, we open-source the implementation of D-Map on GitHub to benefit society: github.com/hku-mars/D-Map.
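To make the first idea concrete, the sketch below classifies a single map cell by looking up one pixel of a depth image instead of casting a ray through the map. It is a deliberately simplified illustration and not the D-Map implementation: it checks only the pixel under the cell center (the abstract speaks of regions, which would require examining the cell's whole projected footprint), and the spherical projection model, the field-of-view defaults, and the name `classify_cell` are all assumptions.

```python
import math

def classify_cell(depth_image, cell_center, cell_size,
                  h_fov_deg=360.0, v_fov_deg=90.0):
    """Label a cell 'free', 'occupied' or 'unknown' from a depth image.

    depth_image is a list of rows of ranges in metres in the sensor frame;
    math.inf marks pixels with no return. Simplified: center pixel only.
    """
    height, width = len(depth_image), len(depth_image[0])
    x, y, z = cell_center
    rng = math.sqrt(x * x + y * y + z * z)
    if rng < 1e-6:
        return "free"  # the sensor itself sits in this cell

    # Spherical projection of the cell center into image coordinates.
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.asin(max(-1.0, min(1.0, z / rng))))
    col = math.floor((azimuth + h_fov_deg / 2) / h_fov_deg * width)
    row = math.floor((v_fov_deg / 2 - elevation) / v_fov_deg * height)
    if not (0 <= row < height and 0 <= col < width):
        return "unknown"  # outside the field of view

    measured = depth_image[row][col]
    if math.isinf(measured):
        return "unknown"  # no return along this direction

    half_diagonal = cell_size * math.sqrt(3) / 2
    if rng + half_diagonal < measured:
        return "free"      # whole cell lies in front of the measured surface
    if rng - half_diagonal > measured:
        return "unknown"   # whole cell is occluded behind the surface
    return "occupied"      # the measured surface passes through the cell
```

The lookup costs a constant amount of work per cell regardless of how far the cell is from the sensor, which is the intuition behind replacing ray-casting with a depth-image test.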

Bubble Explorer: Fast UAV Exploration in Large-Scale and Cluttered 3D-Environments using Occlusion-Free Spheres

Apr 03, 2023

Abstract:Autonomous exploration is a crucial aspect of robotics that has numerous applications. Most of the existing methods greedily choose goals that maximize immediate reward. This strategy is computationally efficient but insufficient for overall exploration efficiency. In recent years, several state-of-the-art methods have been proposed that generate a global coverage path and significantly improve overall exploration efficiency. However, global optimization produces high computational overhead, leading to low-frequency planner updates and inconsistent planning motion. In this work, we propose a novel method to support fast UAV exploration in large-scale and cluttered 3-D environments. We introduce a computationally low-cost viewpoint generation method using novel occlusion-free spheres. Additionally, we combine a greedy strategy with global optimization, which considers both computational and exploration efficiency. We benchmark our method against state-of-the-art methods to showcase its superiority in terms of exploration efficiency and computational time. We conduct various real-world experiments to demonstrate the excellent performance of our method in large-scale and cluttered environments.

Trajectory Generation and Tracking Control for Aggressive Tail-Sitter Flights

Dec 22, 2022

Abstract:We address the theoretical and practical problems related to the trajectory generation and tracking control of tail-sitter UAVs. Theoretically, we focus on the differential flatness property with full exploitation of actual UAV aerodynamic models, which lays a foundation for generating dynamically feasible trajectory and achieving high-performance tracking control. We have found that a tail-sitter is differentially flat with accurate aerodynamic models within the entire flight envelope, by specifying coordinate flight condition and choosing the vehicle position as the flat output. This fundamental property allows us to fully exploit the high-fidelity aerodynamic models in the trajectory planning and tracking control to achieve accurate tail-sitter flights. Particularly, an optimization-based trajectory planner for tail-sitters is proposed to design high-quality, smooth trajectories with consideration of kinodynamic constraints, singularity-free constraints and actuator saturation. The planned trajectory of flat output is transformed to state trajectory in real-time with consideration of wind in environments. To track the state trajectory, a global, singularity-free, and minimally-parameterized on-manifold MPC is developed, which fully leverages the accurate aerodynamic model to achieve high-accuracy trajectory tracking within the whole flight envelope. The effectiveness of the proposed framework is demonstrated through extensive real-world experiments in both indoor and outdoor field tests, including agile SE(3) flight through consecutive narrow windows requiring specific attitude and with speed up to 10m/s, typical tail-sitter maneuvers (transition, level flight and loiter) with speed up to 20m/s, and extremely aggressive aerobatic maneuvers (Wingover, Loop, Vertical Eight and Cuban Eight) with acceleration up to 2.5g.

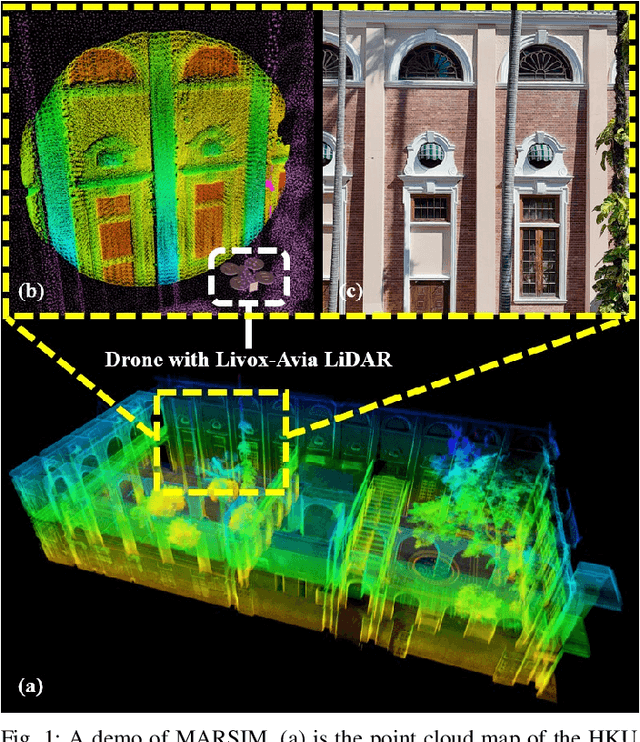

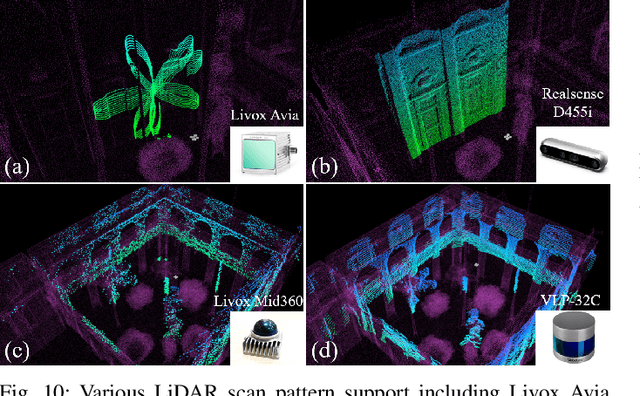

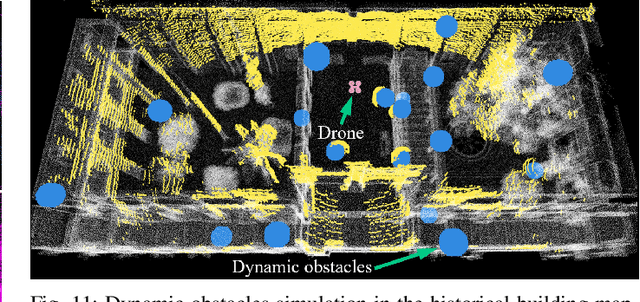

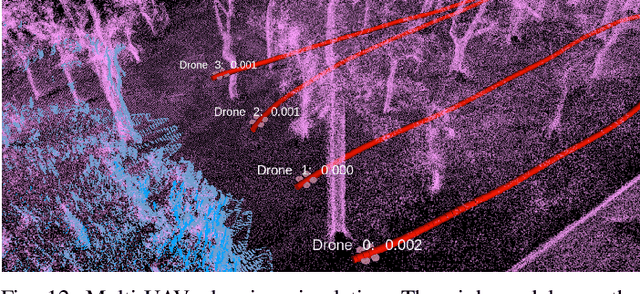

MARSIM: A light-weight point-realistic simulator for LiDAR-based UAVs

Dec 01, 2022

Abstract:The emergence of low-cost, small form factor and light-weight solid-state LiDAR sensors has brought new opportunities for autonomous unmanned aerial vehicles (UAVs) by advancing navigation safety and computation efficiency. Yet the successful development of LiDAR-based UAVs must rely on extensive simulations. Existing simulators can hardly perform simulations of real-world environments due to the requirements of dense mesh maps that are difficult to obtain. In this paper, we develop a point-realistic simulator of real-world scenes for LiDAR-based UAVs. The key idea is the underlying point rendering method, where we construct a depth image directly from the point cloud map and interpolate it to obtain realistic LiDAR point measurements. Our developed simulator is able to run on a light-weight computing platform and supports the simulation of LiDARs with different resolutions and scanning patterns, dynamic obstacles, and multi-UAV systems. Developed in the ROS framework, the simulator can easily communicate with other key modules of an autonomous robot, such as perception, state estimation, planning, and control. Finally, the simulator provides 10 high-resolution point cloud maps of various real-world environments, including forests of different densities, a historic building, an office, a parking garage, and various complex indoor environments. These realistic maps provide diverse testing scenarios for an autonomous UAV. Evaluation results show that the developed simulator achieves superior performance in terms of time and memory consumption against Gazebo and that the simulated UAV flights closely match the actual ones in real-world environments. We believe such a point-realistic and light-weight simulator is crucial to bridge the gap between UAV simulation and experiments and will significantly facilitate the research of LiDAR-based autonomous UAVs in the future.
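The first half of the point rendering idea, building a depth image straight from map points, can be sketched as follows. This is a minimal illustration under stated assumptions and not MARSIM's renderer: it assumes the points are already expressed in the sensor frame, uses a simple spherical projection with made-up resolution and field-of-view defaults, keeps the nearest range per pixel, and leaves out the interpolation step the abstract mentions. The name `render_depth_image` is hypothetical.

```python
import math

def render_depth_image(points, width=360, height=90,
                       h_fov_deg=360.0, v_fov_deg=90.0):
    """Project sensor-frame points into a spherical depth image.

    Each pixel stores the nearest range (metres) that falls into it;
    pixels that receive no point stay at math.inf.
    """
    depth = [[math.inf] * width for _ in range(height)]
    for x, y, z in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng < 1e-6:
            continue  # skip points at the sensor origin
        azimuth = math.degrees(math.atan2(y, x))
        elevation = math.degrees(math.asin(max(-1.0, min(1.0, z / rng))))
        col = math.floor((azimuth + h_fov_deg / 2) / h_fov_deg * width)
        row = math.floor((v_fov_deg / 2 - elevation) / v_fov_deg * height)
        if 0 <= row < height and 0 <= col < width:
            # Nearer points occlude farther ones in the same pixel.
            depth[row][col] = min(depth[row][col], rng)
    return depth

if __name__ == "__main__":
    # Two points along the same viewing direction and one off to the side.
    cloud = [(2.0, 0.01, 0.01), (5.0, 0.025, 0.025), (0.0, 3.0, 0.5)]
    image = render_depth_image(cloud)
    filled = sum(math.isfinite(v) for row in image for v in row)
    print("pixels with a return:", filled)
```

Taking the per-pixel minimum is what handles occlusion without a mesh: a point hidden behind a nearer surface simply loses the comparison for its pixel.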

Large-Scale LiDAR Consistent Mapping using Hierarchical LiDAR Bundle Adjustment

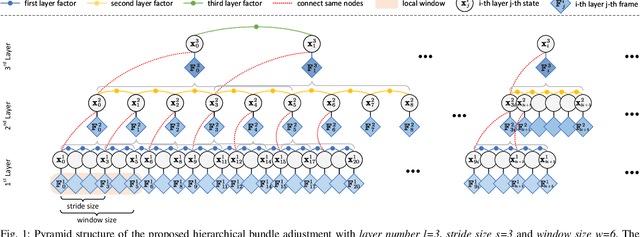

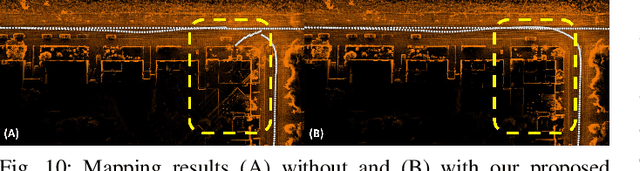

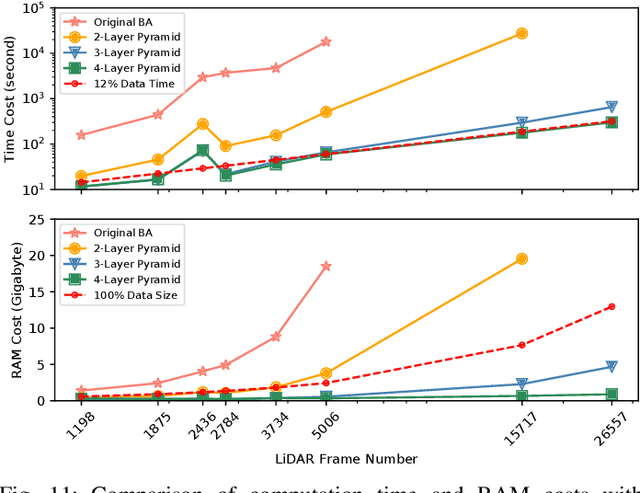

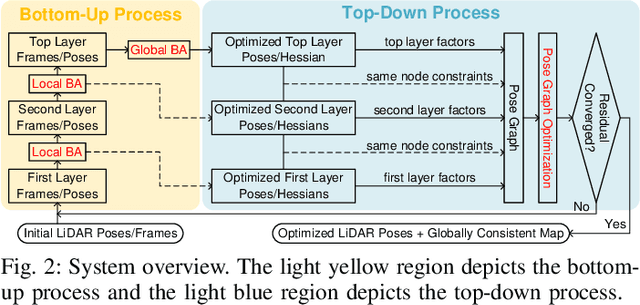

Sep 24, 2022

Abstract:Reconstructing an accurate and consistent large-scale LiDAR point cloud map is crucial for robotics applications. The existing solution, pose graph optimization, is time-efficient but does not directly optimize the mapping consistency. LiDAR bundle adjustment (BA) has been recently proposed to resolve this issue; however, it is too time-consuming on large-scale maps. To mitigate this problem, this paper presents a globally consistent and efficient mapping method suitable for large-scale maps. Our proposed work consists of a bottom-up hierarchical BA and a top-down pose graph optimization, which combines the advantages of both methods. With the hierarchical design, we solve multiple BA problems with a much smaller Hessian matrix size than the original BA; with the pose graph optimization, we smoothly and efficiently update the LiDAR poses. The effectiveness and robustness of our proposed approach have been validated on multiple spatially and temporally large-scale public spinning LiDAR datasets, i.e., KITTI, MulRan and Newer College, and self-collected solid-state LiDAR datasets under structured and unstructured scenes. With proper setups, we demonstrate that our work can generate a globally consistent map in around 12% of the sequence time.

Decentralized LiDAR-inertial Swarm Odometry

Sep 14, 2022

Abstract:Accurate self and relative state estimation is a critical precondition for completing swarm tasks, e.g., collaborative autonomous exploration, target tracking, search and rescue. This paper proposes a fully decentralized state estimation method for aerial swarm systems, in which each drone performs precise ego-state estimation, exchanges ego-state and mutual observation information by wireless communication, and estimates relative state with respect to (w.r.t.) the rest of the UAVs, all in real-time and based only on LiDAR-inertial measurements. A novel 3D LiDAR-based drone detection, identification and tracking method is proposed to obtain observations of teammate drones. The mutual observation measurements are then tightly coupled with IMU and LiDAR measurements to perform real-time and accurate estimation of ego-state and relative state jointly. Extensive real-world experiments show the broad adaptability to complicated scenarios, including GPS-denied scenes and degenerate scenes for camera (dark night) or LiDAR (facing a single wall). Compared with the ground truth provided by a motion capture system, the results show centimeter-level localization accuracy, which outperforms other state-of-the-art LiDAR-inertial odometry for single-UAV systems.
