Daniele Pucci

Istituto Italiano di Tecnologia, Genova, Italy

An Integrated Programmable CPG with Bounded Output

Apr 16, 2022

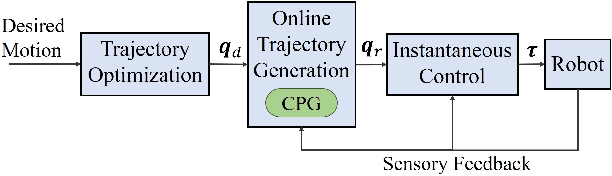

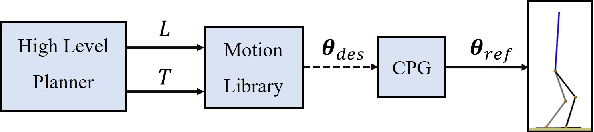

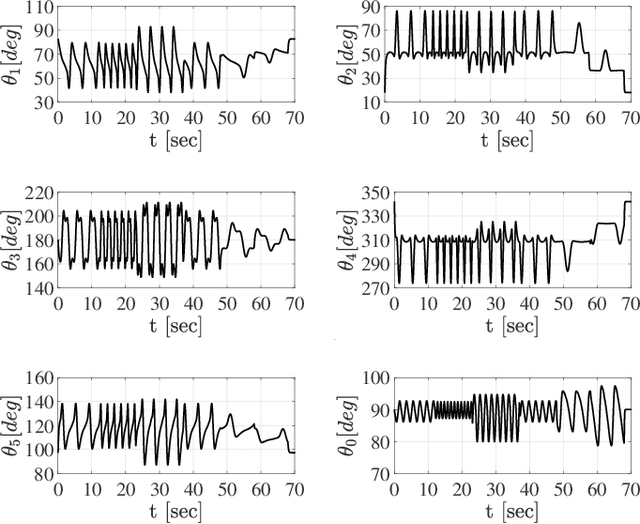

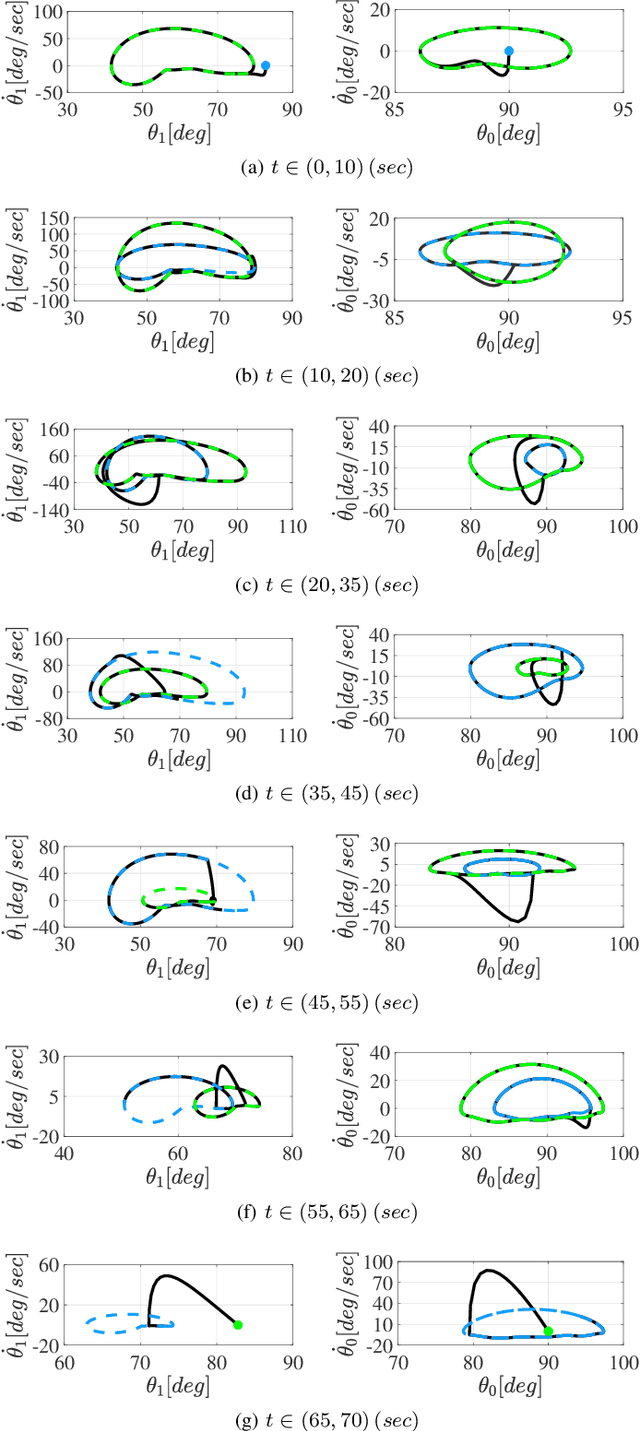

Abstract: Cyclic motions are fundamental patterns in robotic applications, including industrial manipulation and legged robot locomotion. This paper proposes an approach for the online modulation of cyclic motions in robotic applications. For this purpose, we present an integrated programmable Central Pattern Generator (CPG) for the online generation of the reference joint trajectory of a robotic system out of a library of desired periodic motions. The reference trajectory is then followed by the lower-level controller of the robot. The proposed CPG generates a smooth reference joint trajectory that converges to the desired one while preserving the position and velocity joint limits of the robot. The integrated programmable CPG consists of a novel bounded-output programmable oscillator. We design the programmable oscillator to encode the desired multidimensional periodic trajectory as a stable limit cycle. We also use a state transformation method to ensure that the oscillator's output and its first time derivative preserve the joint position and velocity limits of the robot. Using Lyapunov-based arguments, we prove that the proposed CPG guarantees global stability and convergence to the desired trajectory. The effectiveness of the proposed integrated CPG for trajectory generation is shown in a passive rehabilitation scenario on the Kuka iiwa robot arm and in a walking simulation of a seven-link bipedal robot.

* https://ieeexplore.ieee.org/document/9756235
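The abstract does not spell out the oscillator equations or the state transformation. As a rough illustration only, the sketch below substitutes a standard Hopf oscillator, whose stable limit cycle is the circle of radius sqrt(mu), and passes its state through a hypothetical tanh-based map so that the output stays strictly inside assumed joint limits `q_min` and `q_max`. It bounds the position only; the paper's transformation also bounds the velocity, which this sketch does not attempt.

```python
import math

def hopf_step(x, y, mu, omega, dt):
    """One explicit-Euler step of a Hopf oscillator.

    The limit cycle is the circle of radius sqrt(mu); every trajectory that
    does not start at the origin converges to it.
    """
    r2 = x * x + y * y
    dx = (mu - r2) * x - omega * y
    dy = (mu - r2) * y + omega * x
    return x + dt * dx, y + dt * dy

def bounded_output(x, q_min, q_max):
    """Hypothetical state transformation: squash x into the open interval (q_min, q_max)."""
    return q_min + 0.5 * (q_max - q_min) * (1.0 + math.tanh(x))

def simulate(x0, y0, mu=1.0, omega=2.0 * math.pi, dt=1e-3, steps=5000,
             q_min=-0.5, q_max=1.2):
    """Integrate the oscillator and return the final state and the joint reference."""
    x, y = x0, y0
    q = []
    for _ in range(steps):
        x, y = hopf_step(x, y, mu, omega, dt)
        q.append(bounded_output(x, q_min, q_max))
    return x, y, q

x, y, q = simulate(3.0, 0.0)
print(math.hypot(x, y))                # close to sqrt(mu) = 1 after 5 s
print(min(q) > -0.5 and max(q) < 1.2)  # True: the reference stays inside the limits
```

Because the bound is enforced by the output map rather than by the oscillator itself, the limit-cycle convergence and the joint-limit guarantee can be reasoned about separately in this toy version.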

Efficient Geometric Linearization of Moving-Base Rigid Robot Dynamics

Apr 11, 2022

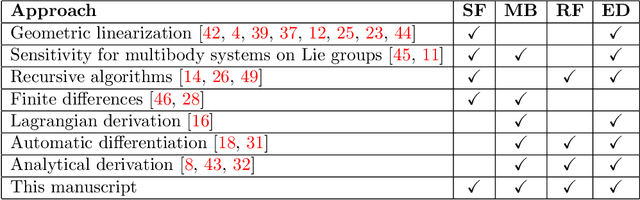

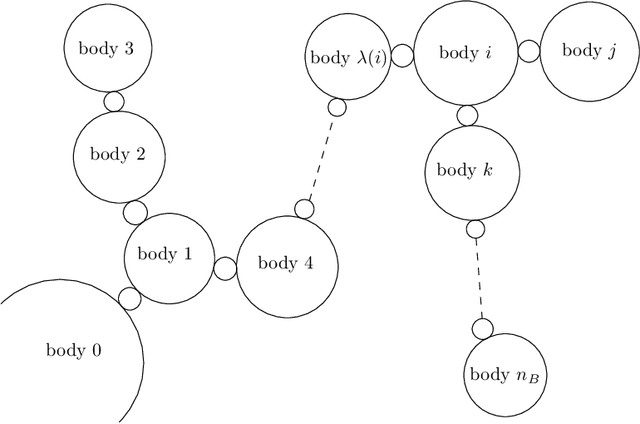

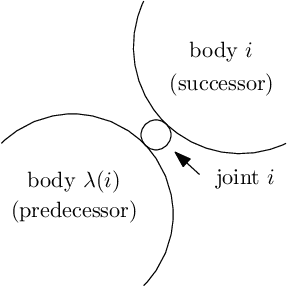

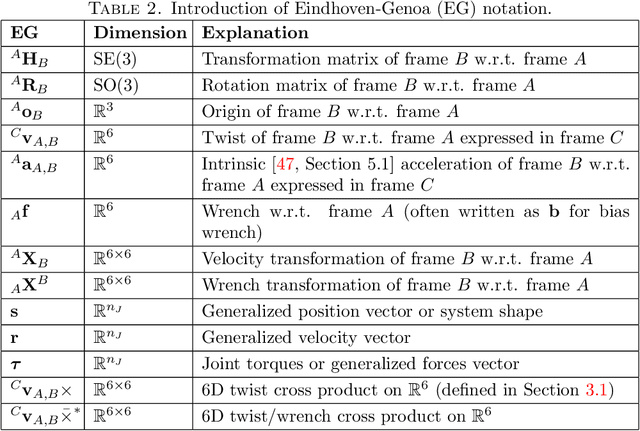

Abstract: The linearization of the equations of motion of a robotic system about a given state-input trajectory, including a controlled equilibrium state, is a valuable tool for model-based planning, closed-loop control, gain tuning, and state estimation. Contrary to the case of fixed-base manipulators with prismatic or rotary joints, the state space of moving-base robotic systems such as humanoids, quadruped robots, or aerial manipulators cannot be globally parametrized by a finite number of independent coordinates. This impossibility is a direct consequence of the fact that the state of these systems includes the system's global orientation, formally described as an element of the special orthogonal group SO(3). As a consequence, the linearization of the equations of motion for these systems is, in practice, typically obtained by locally parameterizing the system's attitude by means of, e.g., Euler or Cardan angles. This has the drawback, however, of introducing artificial parameterization singularities and extra derivative computations. In this contribution, we show that it is actually possible to define a notion of linearization that does not require a local parameterization of the system's orientation, obtaining a mathematically elegant, recursive, and singularity-free linearization for moving-base robot systems. Recursiveness, in particular, is obtained by proposing a nontrivial modification of existing recursive algorithms that allows computing the geometric derivatives of the inverse dynamics and of the inverse of the mass matrix of the robotic system. The correctness of the proposed algorithm is validated by a numerical comparison with the results obtained via geometric finite differences.
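The validation mentioned above relies on geometric finite differences, i.e., perturbing the orientation on SO(3) itself rather than perturbing Euler angles. As an illustration only, the sketch below assumes a right-trivialized perturbation R·Exp(h e_i) (the paper may use a different trivialization) and checks the finite-difference Jacobian of the toy function f(R) = R v against its closed form -R [v]×. All function names are hypothetical, and plain Python is used to keep the example dependency-free.

```python
import math

def hat(w):
    """Skew-symmetric matrix [w]x, so that hat(w) applied to v equals cross(w, v)."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def exp_so3(w):
    """Rodrigues' formula: rotation vector w -> rotation matrix Exp(w)."""
    theta = math.sqrt(sum(a * a for a in w))
    eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    if theta < 1e-12:
        W = hat(w)  # first-order approximation near the identity
        return [[eye[i][j] + W[i][j] for j in range(3)] for i in range(3)]
    K = hat([a / theta for a in w])
    K2 = matmul(K, K)
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[eye[i][j] + s * K[i][j] + c * K2[i][j] for j in range(3)] for i in range(3)]

def geometric_fd_jacobian(f, R, h=1e-6):
    """Central differences of f(R Exp(+-h e_i)): one Jacobian column per tangent direction."""
    cols = []
    for i in range(3):
        e = [0.0, 0.0, 0.0]
        e[i] = h
        f_plus = f(matmul(R, exp_so3(e)))
        e[i] = -h
        f_minus = f(matmul(R, exp_so3(e)))
        cols.append([(a - b) / (2.0 * h) for a, b in zip(f_plus, f_minus)])
    # Transpose the list of columns into a row-major matrix.
    return [[cols[j][i] for j in range(3)] for i in range(len(cols[0]))]

R = exp_so3([0.3, -0.2, 0.5])
v = [1.0, 2.0, -0.5]
J_fd = geometric_fd_jacobian(lambda Q: matvec(Q, v), R)
J_analytic = [[-e for e in row] for row in matmul(R, hat(v))]  # d/dh R Exp(h e_i) v = -R [v]x e_i
```

No Euler angles appear anywhere: the perturbation is applied through the exponential map, so the check stays valid at any orientation.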

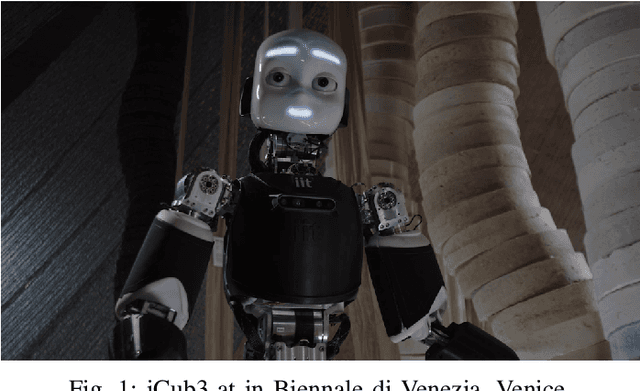

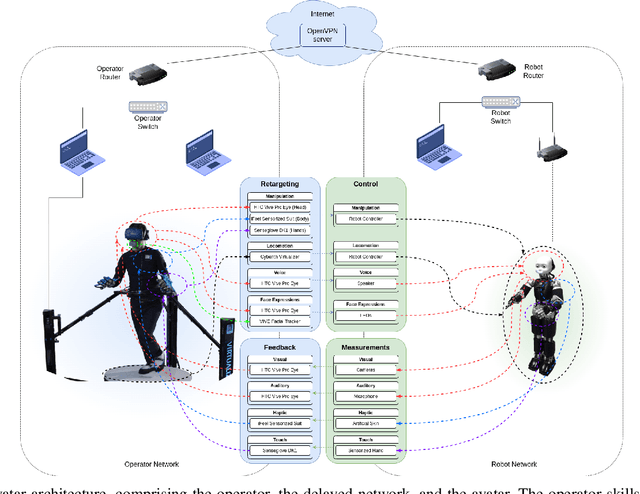

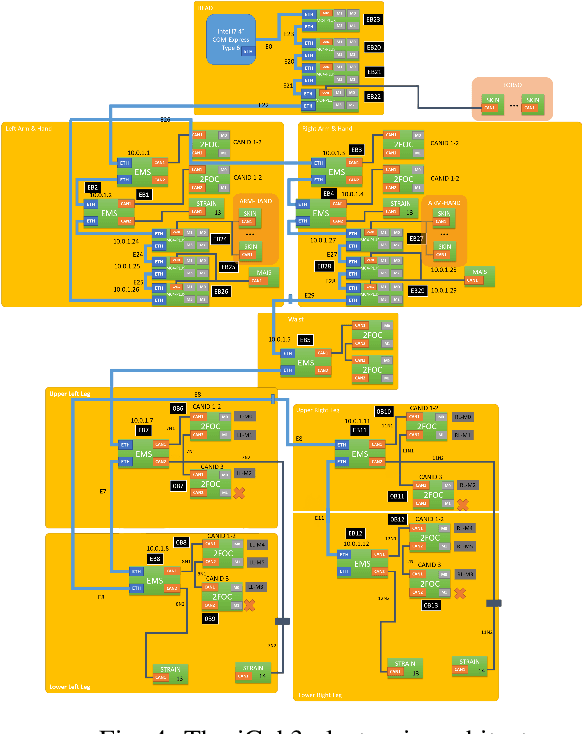

iCub3 Avatar System

Mar 14, 2022

Abstract: We present an avatar system that enables a human operator to visit a remote location via iCub3, a new humanoid robot developed at the Italian Institute of Technology (IIT) that paves the way for the next generation of iCub platforms. On the one hand, we present the humanoid iCub3, which plays the role of the robotic avatar, paying particular attention to the differences between iCub3 and the classical iCub humanoid robot. On the other hand, we present the set of technologies of the avatar system on the operator side. They are mainly composed of iFeel, namely IIT's lightweight, non-invasive wearable devices for motion tracking and haptic feedback, and of non-IIT technologies designed for virtual reality ecosystems. Finally, we show the effectiveness of the avatar system by describing a demonstration involving real-time teleoperation of iCub3. The robot is located in Venice, at the Biennale di Venezia, while the human operator is more than 290 km away, at IIT in Genoa. Using a standard fiber-optic internet connection, the avatar system transports the operator's locomotion, manipulation, voice, and facial expressions to iCub3, with visual, auditory, haptic, and touch feedback.

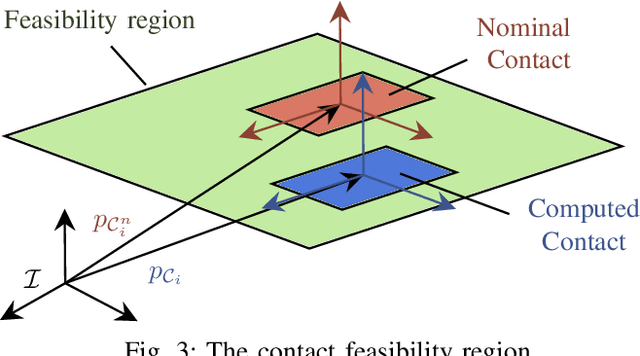

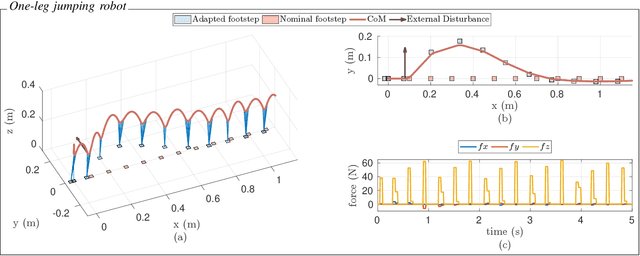

Online Non-linear Centroidal MPC for Humanoid Robot Locomotion with Step Adjustment

Mar 10, 2022

Abstract: This paper presents a non-linear Model Predictive Controller for humanoid robot locomotion with online step adjustment capabilities. The proposed controller considers the centroidal dynamics of the system to compute the desired contact forces and torques and the contact locations. Unlike bipedal walking architectures based on simplified models, the presented approach considers the reduced centroidal model, thus allowing the robot to perform highly dynamic movements while keeping the control problem tractable online. We show that the proposed controller can automatically adjust the contact location in both single and double support phases. The overall approach is then tested in simulation with one-leg and two-leg systems performing jumping and running tasks, respectively. We finally validate the proposed controller on the position-controlled humanoid robot iCub. Results show that the proposed strategy prevents the robot from falling while walking and while being pushed by external forces of up to 40 N applied to the robot arm for 1 s.

* Paper accepted at ICRA 2022
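The abstract does not write out the centroidal dynamics the controller relies on. A minimal sketch of the standard form, assuming contact forces f_i and torques τ_i applied at contact locations p_i, total mass m, and CoM position c: m c̈ = m g + Σ f_i and ḣ_ω = Σ [(p_i − c) × f_i + τ_i]. The function below only evaluates these rates for a given set of contacts; it is not the MPC itself, and its names are hypothetical.

```python
GRAVITY = (0.0, 0.0, -9.81)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def centroidal_rates(mass, com, contacts, gravity=GRAVITY):
    """Return (CoM acceleration, rate of angular momentum about the CoM).

    contacts: iterable of (position, force, torque) triples, all expressed
    in the inertial frame.
    """
    total_force = [mass * g for g in gravity]
    ang_rate = [0.0, 0.0, 0.0]
    for p, f, tau in contacts:
        r = [p[k] - com[k] for k in range(3)]  # lever arm from the CoM to the contact
        moment = cross(r, f)
        for k in range(3):
            total_force[k] += f[k]
            ang_rate[k] += moment[k] + tau[k]
    com_acc = tuple(F / mass for F in total_force)
    return com_acc, tuple(ang_rate)

# Double support with the weight split evenly between two symmetric feet.
m = 30.0
half_weight = m * 9.81 / 2.0
feet = [((0.0, 0.1, 0.0), (0.0, 0.0, half_weight), (0.0, 0.0, 0.0)),
        ((0.0, -0.1, 0.0), (0.0, 0.0, half_weight), (0.0, 0.0, 0.0))]
acc, h_dot = centroidal_rates(m, (0.0, 0.0, 0.5), feet)
```

In an MPC of this kind, the contact forces and locations would be decision variables, and equations like these would be imposed as dynamics constraints over the horizon.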

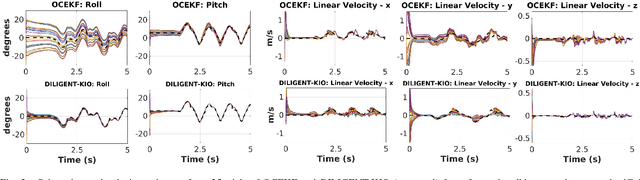

DILIGENT-KIO: A Proprioceptive Base Estimator for Humanoid Robots using Extended Kalman Filtering on Matrix Lie Groups

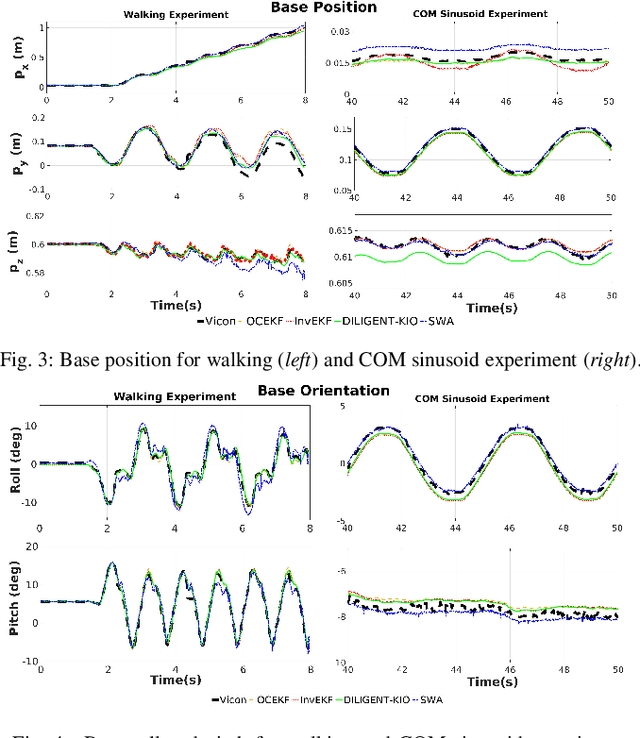

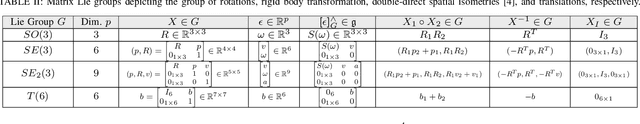

May 31, 2021

Abstract: This paper presents a contact-aided inertial-kinematic floating-base estimation for humanoid robots, in which the evolution of the state and the observations is considered over matrix Lie groups. This is achieved through a geometrically meaningful estimator characterized by concentrated Gaussian distributions. The configuration of a floating-base system such as a humanoid robot usually requires knowledge of six additional degrees of freedom, which describe its base position-and-orientation. This quantity usually cannot be measured and needs to be estimated. A matrix Lie group, encapsulating the position-and-orientation and linear velocity of the base link, the feet positions-and-orientations, and the Inertial Measurement Units' biases, is used to represent the state, while relative positions-and-orientations of contact feet obtained from forward kinematics are used as observations. The proposed estimator exhibits fast convergence for large initialization errors owing to the choice of uncertainty parametrization. An experimental validation is performed on the iCub humanoid platform.
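The full estimator works on a high-dimensional matrix Lie group. To show only the core mechanics (prediction on the group, an innovation computed with the logarithm map, and a correction applied through the exponential map), the sketch below reduces the problem to a toy heading filter on SO(2), using unit complex numbers as group elements. The single-angle state, the noise values, and the class name are assumptions for illustration; this is not DILIGENT-KIO.

```python
import cmath
import random

def so2_exp(phi):
    """Exponential map: angle (Lie algebra) -> unit complex number (group)."""
    return cmath.exp(1j * phi)

def so2_log(z):
    """Logarithm map: unit complex number -> angle in (-pi, pi]."""
    return cmath.phase(z)

class SO2HeadingEKF:
    def __init__(self, heading0, p0, q, r):
        self.x = so2_exp(heading0)  # group-valued estimate
        self.p = p0                 # variance of the tangent-space error
        self.q = q                  # process noise added per prediction step
        self.r = r                  # measurement noise variance

    def predict(self, omega, dt):
        # Propagate on the group with the measured angular velocity.
        self.x *= so2_exp(omega * dt)
        self.p += self.q

    def update(self, z):
        # The innovation lives in the tangent space: Log(x^-1 z).
        innovation = so2_log(self.x.conjugate() * z)
        k = self.p / (self.p + self.r)
        self.x *= so2_exp(k * innovation)
        self.x /= abs(self.x)  # guard against floating-point drift off the unit circle
        self.p *= (1.0 - k)

def heading_error(est, true):
    return abs(so2_log(est.conjugate() * true))

rng = random.Random(0)
true = so2_exp(0.0)
ekf = SO2HeadingEKF(heading0=3.0, p0=1.0, q=1e-4, r=0.05 ** 2)  # about 172 deg initial error
for _ in range(500):
    true *= so2_exp(0.5 * 0.01)
    ekf.predict(omega=0.5, dt=0.01)
    ekf.update(true * so2_exp(rng.gauss(0.0, 0.05)))  # noisy orientation measurement
print(heading_error(ekf.x, true))  # small compared with the 3 rad initial error
```

Computing the innovation with the logarithm map keeps a large initial error such as 3 rad well defined, whereas naively subtracting wrapped angles can produce jumps of 2π.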

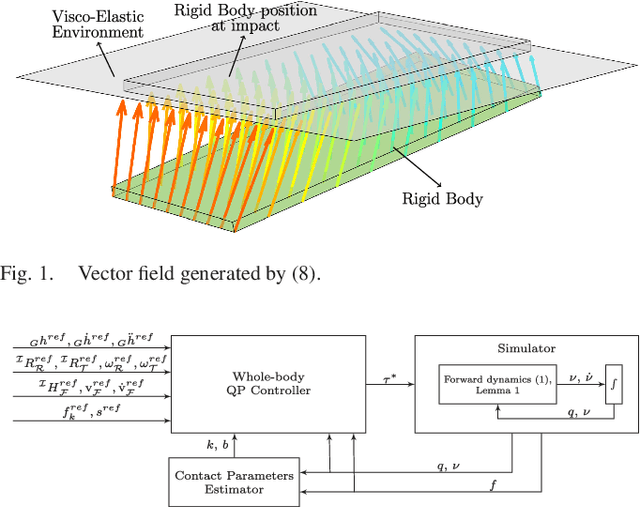

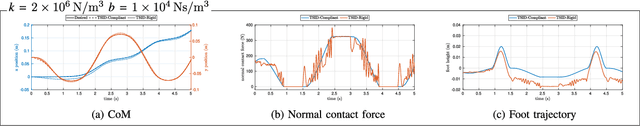

Modeling of Visco-Elastic Environments for Humanoid Robot Motion Control

May 30, 2021

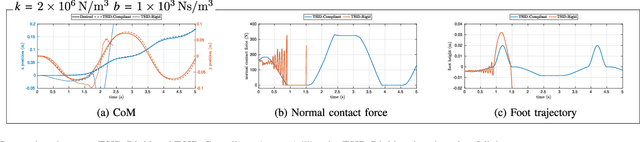

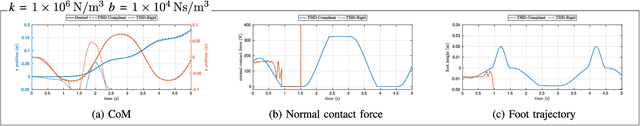

Abstract: This manuscript presents a model of compliant contacts for time-critical humanoid robot motion control. The proposed model considers the environment as a continuum of spring-damper systems, which allows us to compute the equivalent contact force and torque that the environment exerts on the contact surface. We show that the proposed model extends the linear and rotational springs and dampers, classically used to characterize soft terrains, to the case of large contact surface orientations. The contact model is then used for the real-time whole-body control of humanoid robots walking on visco-elastic environments. The overall approach is validated by simulating walking motions of the iCub humanoid robot. Furthermore, the paper compares the proposed whole-body control strategy with state-of-the-art approaches. In this respect, we investigate the terrain compliance at which the classical approaches, which assume rigid contacts, fail. We finally analyze the robustness of the presented control design with respect to non-parametric uncertainty in the contact model.
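The abstract describes the environment as a continuum of spring-damper systems whose effect is summed over the contact surface. A minimal sketch of that idea, assuming a rectangular sole, linear per-area stiffness `k` and damping `b`, unilateral contact (the ground can only push), and a user-supplied penetration field: discretize the sole into cells and sum the elementary forces and their moments about the sole centre. This numerical quadrature is only an illustration; the paper's own formulation may differ, and all names and values are hypothetical.

```python
def contact_wrench(length, width, penetration, penetration_rate, k, b, n=40):
    """Sum spring-damper forces over an n x n grid of cells on a rectangular sole.

    penetration(x, y) and penetration_rate(x, y) give the local normal
    penetration depth [m] and its rate [m/s] at sole coordinates (x, y),
    measured from the sole centre. k [N/m^3] and b [N s/m^3] are per-area
    stiffness and damping. Returns (f_z, tau_x, tau_y) about the sole centre.
    """
    dx, dy = length / n, width / n
    area = dx * dy
    fz = tau_x = tau_y = 0.0
    for i in range(n):
        x = -length / 2 + (i + 0.5) * dx  # cell midpoint
        for j in range(n):
            y = -width / 2 + (j + 0.5) * dy
            d = penetration(x, y)
            if d <= 0.0:
                continue  # this cell is not in contact
            # Unilateral: the damper term cannot make the ground pull on the foot.
            pressure = max(0.0, k * d + b * penetration_rate(x, y))
            df = pressure * area
            fz += df
            tau_x += y * df  # moment of a +z force applied at (x, y, 0)
            tau_y -= x * df
    return fz, tau_x, tau_y

# Flat sole pressed 1 cm into a soft terrain: f_z = k * d * area = 1e5 * 0.01 * 0.02 = 20 N.
flat = contact_wrench(0.2, 0.1, lambda x, y: 0.01, lambda x, y: 0.0, k=1e5, b=1e3)
# Toe pressed deeper than heel: the resulting moment about y is negative.
tilted = contact_wrench(0.2, 0.1, lambda x, y: 0.01 + 0.05 * x, lambda x, y: 0.0, k=1e5, b=1e3)
```

Letting the penetration vary across the sole is what produces a contact torque, so a tilted foot on compliant ground is described without a separate rotational spring.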

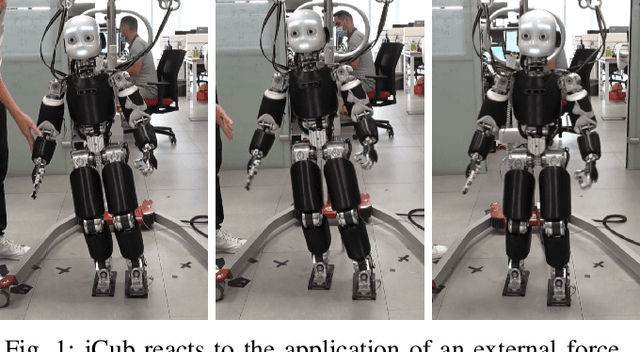

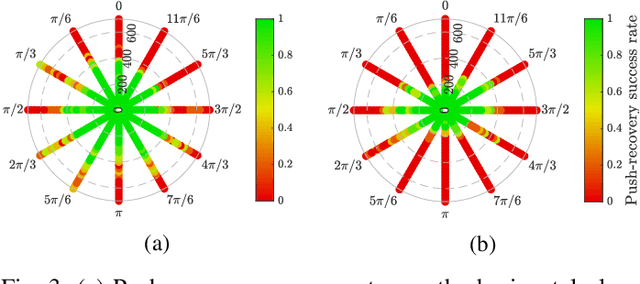

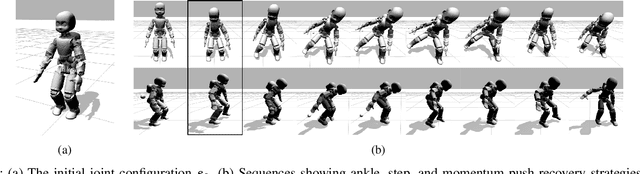

On the Emergence of Whole-body Strategies from Humanoid Robot Push-recovery Learning

Apr 29, 2021

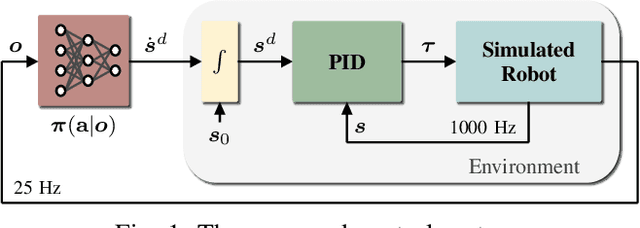

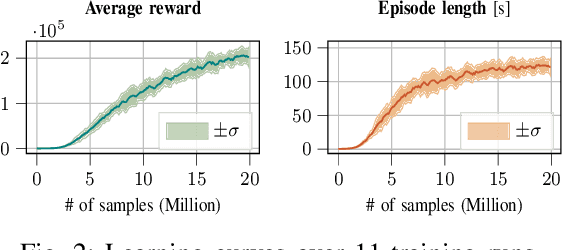

Abstract: Balancing and push-recovery are essential capabilities enabling humanoid robots to solve complex locomotion tasks. In this context, classical control systems tend to be based on simplified physical models and hard-coded strategies. Although successful in specific scenarios, this approach requires demanding tuning of parameters and switching logic between specifically designed controllers for handling more general perturbations. We apply model-free Deep Reinforcement Learning to train a general and robust humanoid push-recovery policy in a simulation environment. Our method targets high-dimensional whole-body humanoid control and is validated on the iCub humanoid. Reward components incorporating expert knowledge on humanoid control enable fast learning of several robust behaviors by the same policy, spanning the entire body. We validate our method with extensive quantitative analyses in simulation, including out-of-sample tasks that demonstrate policy robustness and generalization, both key requirements for real-world robot deployment.
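The abstract mentions reward components that encode expert knowledge on humanoid control, without listing them. Shaped rewards of this kind are often written as a weighted sum of Gaussian-like kernels on tracking errors. The sketch below follows that common pattern with hypothetical terms (CoM horizontal offset, torso tilt, joint velocities), weights, and scales; it is not the reward used in the paper.

```python
import math

def kernel(error_sq, sigma):
    """Map a squared error to (0, 1]: exactly 1 when the error is zero."""
    return math.exp(-error_sq / sigma ** 2)

# Hypothetical reward terms: name -> (weight, sigma).
TERMS = {
    "com_xy": (0.4, 0.05),     # horizontal CoM offset from the support centre [m]
    "torso_tilt": (0.4, 0.2),  # torso inclination from vertical [rad]
    "joint_vel": (0.2, 2.0),   # norm of the joint velocities [rad/s]
}

def push_recovery_reward(com_xy_offset, torso_tilt, joint_velocities):
    """Weighted sum of kernels; with weights summing to 1 the reward lies in (0, 1]."""
    errors_sq = {
        "com_xy": sum(c * c for c in com_xy_offset),
        "torso_tilt": torso_tilt ** 2,
        "joint_vel": sum(v * v for v in joint_velocities),
    }
    return sum(w * kernel(errors_sq[name], s) for name, (w, s) in TERMS.items())
```

Bounded kernels keep any single term from dominating the return after a strong push, which is one common reason for this choice over raw quadratic penalties.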

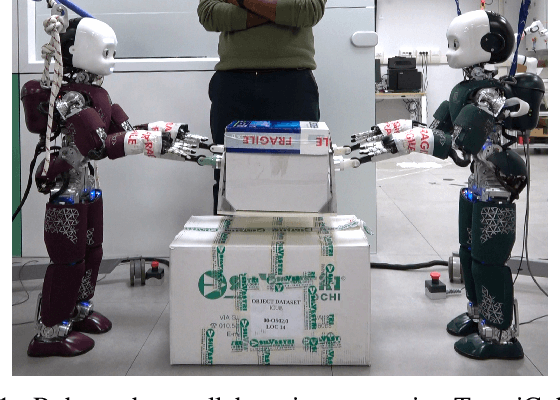

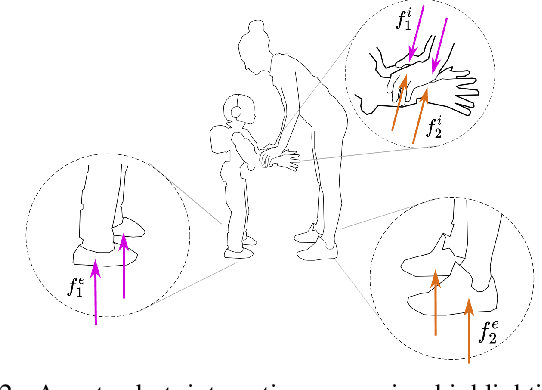

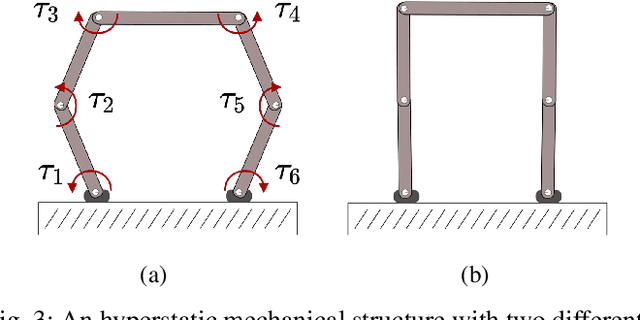

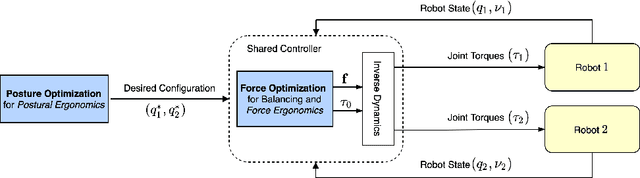

Shared Control of Robot-Robot Collaborative Lifting with Agent Postural and Force Ergonomic Optimization

Apr 28, 2021

Abstract: Humans show specialized strategies for efficient collaboration. Transferring similar strategies to humanoid robots can improve their capability to interact with other agents, paving the way to complex collaborative scenarios with multiple agents acting on a shared environment. In this paper, we present a control framework for robot-robot collaborative lifting. The proposed shared controller takes into account the joint action of both robots through a centralized controller that communicates with them and solves the whole-system optimization. Efficient collaboration is ensured by taking into account the ergonomic requirements of the robots through the optimization of posture and contact forces. The framework is validated in an experimental scenario with two iCub humanoid robots performing different payload lifting sequences.
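The abstract describes a centralized optimization that distributes effort between the robots. As a deliberately reduced illustration, not the paper's whole-body formulation, consider only the vertical lifting forces: minimizing the weighted effort Σ w_i f_i² subject to Σ f_i = m g gives, from the Lagrangian, f_i = (1/w_i) / Σ_j (1/w_j) · m g. The weights stand in for hypothetical per-robot ergonomic costs.

```python
def share_load(payload_mass, weights, g=9.81):
    """Distribute the payload weight among agents.

    Minimizes sum(w_i * f_i**2) subject to sum(f_i) == payload_mass * g.
    A larger w_i (higher ergonomic cost for agent i) yields a smaller share.
    """
    if any(w <= 0 for w in weights):
        raise ValueError("weights must be positive")
    total = payload_mass * g
    inverse = [1.0 / w for w in weights]
    s = sum(inverse)
    return [total * c / s for c in inverse]

equal = share_load(2.0, [1.0, 1.0])   # both robots carry half the weight
uneven = share_load(3.0, [1.0, 2.0])  # the second robot carries half as much as the first
```

A full formulation would add postures, contact wrenches, and inequality constraints, which generally requires a numerical QP solver rather than this closed form.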

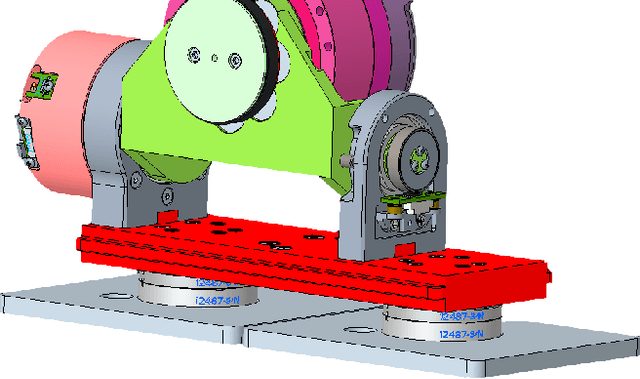

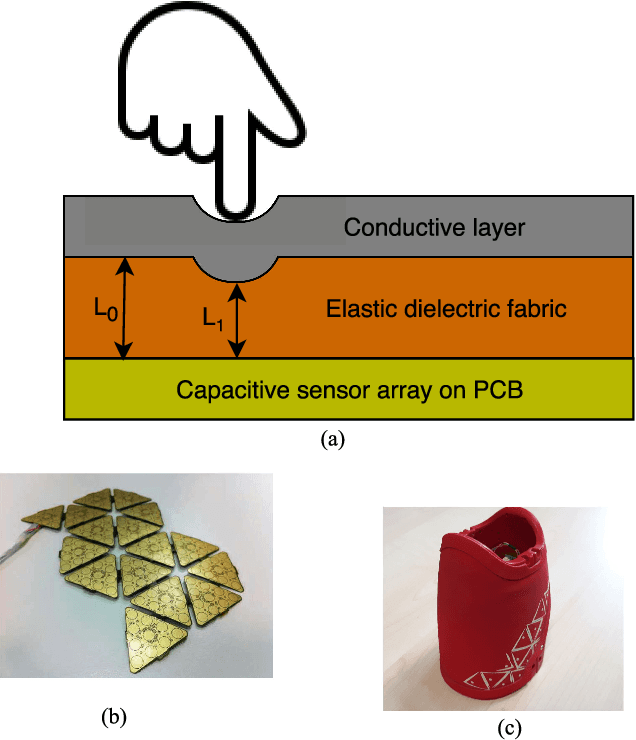

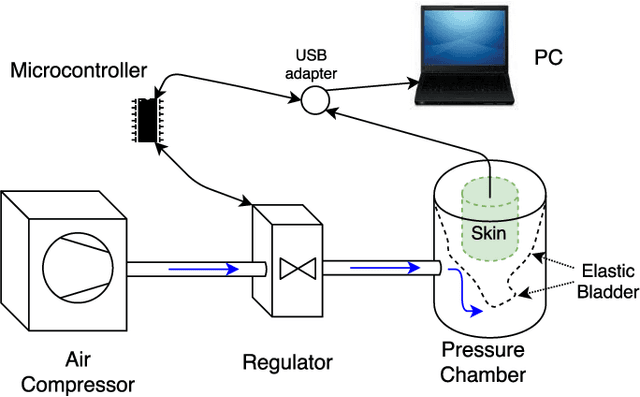

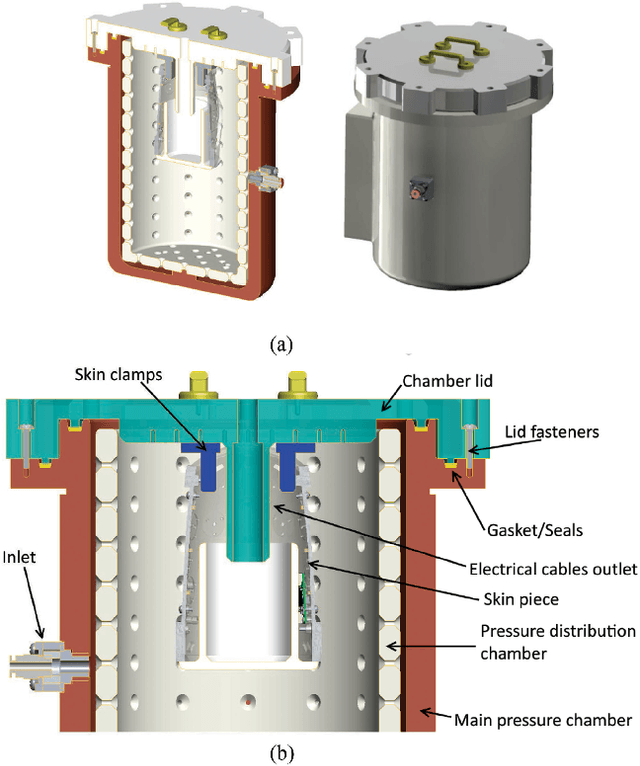

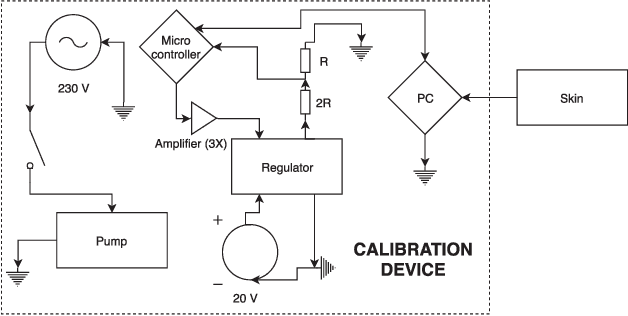

A Plenum-Based Calibration Device for Tactile Sensor Arrays

Mar 23, 2021

Abstract: In modern robotic applications, tactile sensor arrays (i.e., artificial skins) are an emerging solution for determining the locations of contacts between a robot and an external agent. Localizing the point of contact is useful, but determining the force applied on the skin opens up many additional possibilities. This additional feature usually requires time-consuming calibration procedures to relate the sensor readings to the applied forces. This letter presents a novel device that enables the calibration of tactile sensor arrays in a fast and simple way. The key idea is to design a plenum chamber into which the skin is inserted; the tactile sensors are then calibrated by relating the air pressure to the sensor readings. This general concept is tested experimentally by calibrating the skin of the iCub robot. The calibration device is validated by placing masses of known weight on the artificial skin and comparing the applied force with the one estimated by the sensors.

* 8 pages, 18 figures
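Inside the plenum, every taxel is loaded by the same known air pressure. A minimal sketch under assumptions of our own (an affine response per taxel, and a force on a taxel equal to the pressure times an assumed effective taxel area): fit reading = gain · pressure + offset by ordinary least squares, then invert the fit to turn readings into forces. The function names, the affine model, and the area value are hypothetical.

```python
def fit_linear(pressures, readings):
    """Ordinary least squares for reading = gain * pressure + offset."""
    n = len(pressures)
    if n < 2:
        raise ValueError("need at least two pressure levels")
    mean_p = sum(pressures) / n
    mean_r = sum(readings) / n
    sxx = sum((p - mean_p) ** 2 for p in pressures)
    sxy = sum((p - mean_p) * (r - mean_r) for p, r in zip(pressures, readings))
    gain = sxy / sxx
    return gain, mean_r - gain * mean_p

def reading_to_force(reading, gain, offset, taxel_area):
    """Invert the fit: pressure = (reading - offset) / gain, then force = pressure * area."""
    return (reading - offset) / gain * taxel_area

# Synthetic taxel: raw reading = 5 + 0.002 * pressure [Pa].
gain, offset = fit_linear([0.0, 1000.0, 2000.0, 3000.0], [5.0, 7.0, 9.0, 11.0])
force = reading_to_force(9.0, gain, offset, taxel_area=1e-4)  # 2000 Pa over 1 cm^2 = 0.2 N
```

A validation in the spirit of the abstract would then compare such force estimates with m g for a mass of known weight resting on the skin.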

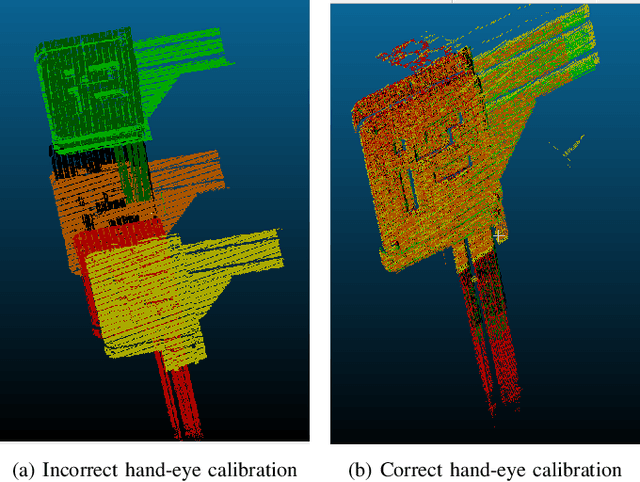

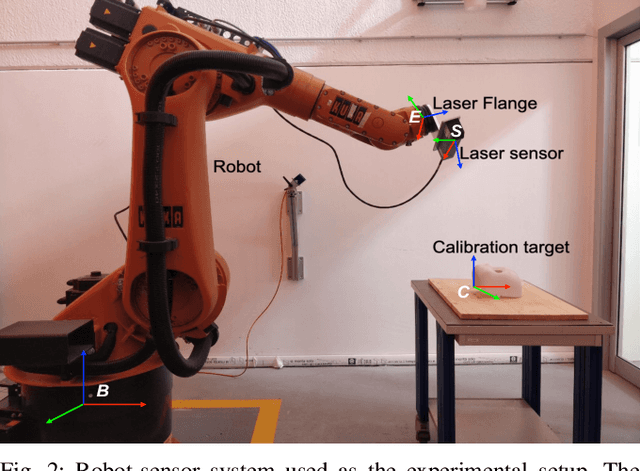

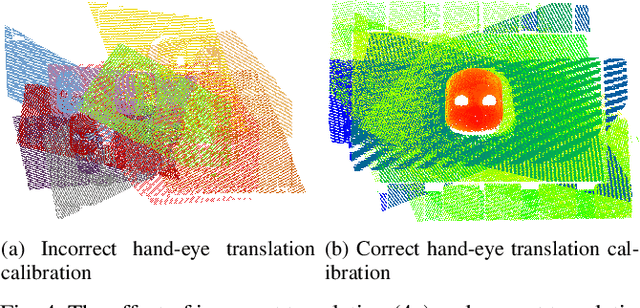

In Situ Translational Hand-Eye Calibration of Laser Profile Sensors using Arbitrary Objects

Mar 22, 2021

Abstract: Hand-eye calibration of laser profile sensors is the process of extracting the homogeneous transformation between the laser profile sensor frame and the end-effector frame of a robot in order to express the data extracted by the sensor in the robot's global coordinate system. For laser profile scanners, this is a challenging procedure, as they provide data only in two dimensions and state-of-the-art calibration procedures require the use of specialised calibration targets. This paper presents a novel method to extract the translation part of the hand-eye calibration matrix, with the rotation part known a priori, in a target-agnostic way. Our methodology is applicable to any 2D image or 3D object as a calibration target and can also be performed in situ in the final application. The method is experimentally validated on a real robot-sensor setup with 2D and 3D targets.
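The abstract does not detail the target-agnostic procedure. To illustrate only the simpler, classic sub-problem of a known rotation R_x and an unknown translation t_x, the sketch below assumes that the same physical point is observed from several end-effector poses (R_i, t_i), so that p_w = R_i (R_x s_i + t_x) + t_i for every pose. Subtracting pose pairs eliminates the unknown world point p_w, and the stacked system (R_i − R_j) t_x = (t_j + R_j R_x s_j) − (t_i + R_i R_x s_i) is solved by least squares. The single-point assumption and all names are ours, not the paper's.

```python
import math

def rot(axis, angle):
    """Elementary rotation about 'x', 'y' or 'z'."""
    c, s = math.cos(angle), math.sin(angle)
    if axis == "x":
        return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    if axis == "y":
        return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(A, v):
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def transpose(M):
    return [[M[j][i] for j in range(3)] for i in range(3)]

def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def solve3(M, b):
    """Cramer's rule for a 3x3 system M x = b."""
    d = det3(M)
    if abs(d) < 1e-12:
        raise ValueError("poses do not constrain the translation (rank-deficient)")
    x = []
    for col in range(3):
        Mc = [row[:] for row in M]
        for r in range(3):
            Mc[r][col] = b[r]
        x.append(det3(Mc) / d)
    return x

def estimate_translation(R_x, poses, sensor_points):
    """Least-squares t_x from all pose pairs; poses are (R_i, t_i), sensor points are s_i."""
    known = []  # world-frame terms that do not depend on t_x: t_i + R_i R_x s_i
    for (R, t), s in zip(poses, sensor_points):
        v = matvec(matmul(R, R_x), s)
        known.append([t[k] + v[k] for k in range(3)])
    AtA = [[0.0] * 3 for _ in range(3)]
    Atb = [0.0] * 3
    for i in range(len(poses)):
        for j in range(i + 1, len(poses)):
            A = [[poses[i][0][r][c] - poses[j][0][r][c] for c in range(3)] for r in range(3)]
            b = [known[j][r] - known[i][r] for r in range(3)]
            for r in range(3):  # accumulate the normal equations A^T A t = A^T b
                Atb[r] += sum(A[k][r] * b[k] for k in range(3))
                for c in range(3):
                    AtA[r][c] += sum(A[k][r] * A[k][c] for k in range(3))
    return solve3(AtA, Atb)
```

The end-effector rotations must differ about more than one axis: a single difference R_i − R_j has rank 2, so at least two distinct relative rotation axes are needed for the normal equations to be invertible.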
