Chaojian Li

Celine

FracTrain: Fractionally Squeezing Bit Savings Both Temporally and Spatially for Efficient DNN Training

Dec 24, 2020

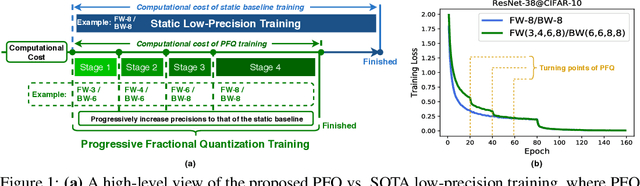

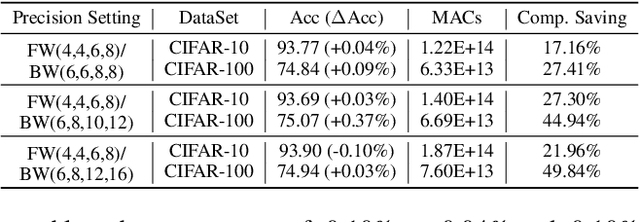

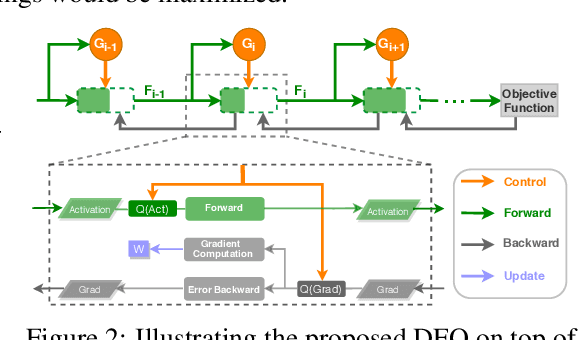

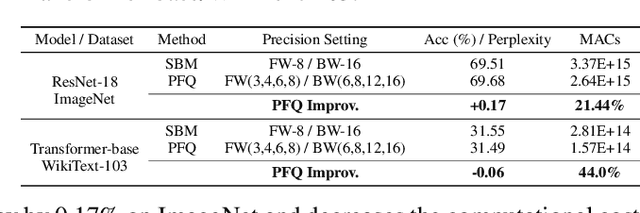

Abstract:Recent breakthroughs in deep neural networks (DNNs) have fueled a tremendous demand for intelligent edge devices featuring on-site learning, while the practical realization of such systems remains a challenge due to the limited resources available at the edge and the required massive training costs for state-of-the-art (SOTA) DNNs. As reducing precision is one of the most effective knobs for boosting training time/energy efficiency, there has been a growing interest in low-precision DNN training. In this paper, we explore from an orthogonal direction: how to fractionally squeeze out more training cost savings from the most redundant bit level, progressively along the training trajectory and dynamically per input. Specifically, we propose FracTrain that integrates (i) progressive fractional quantization which gradually increases the precision of activations, weights, and gradients that will not reach the precision of SOTA static quantized DNN training until the final training stage, and (ii) dynamic fractional quantization which assigns precisions to both the activations and gradients of each layer in an input-adaptive manner, for only "fractionally" updating layer parameters. Extensive simulations and ablation studies (six models, four datasets, and three training settings including standard, adaptation, and fine-tuning) validate the effectiveness of FracTrain in reducing computational cost and hardware-quantified energy/latency of DNN training while achieving a comparable or better (-0.12%~+1.87%) accuracy. For example, when training ResNet-74 on CIFAR-10, FracTrain achieves 77.6% and 53.5% computational cost and training latency savings, respectively, compared with the best SOTA baseline, while achieving a comparable (-0.07%) accuracy. Our codes are available at: https://github.com/RICE-EIC/FracTrain.

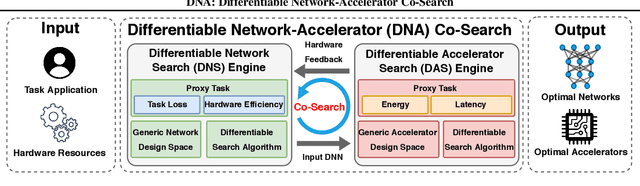

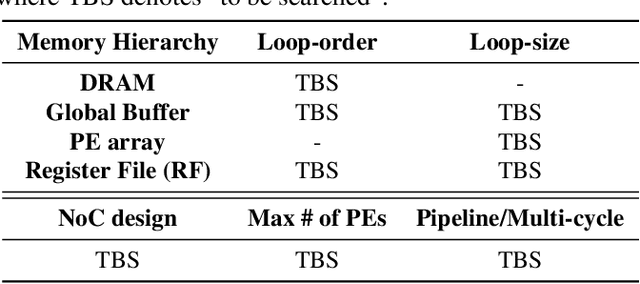

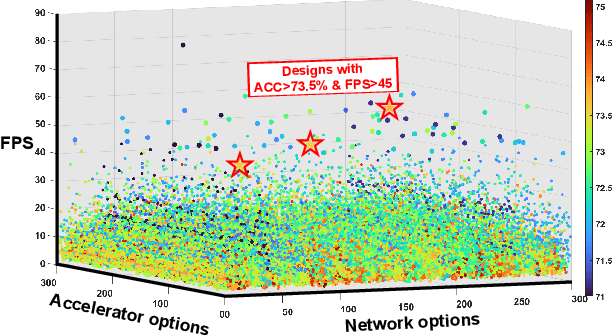

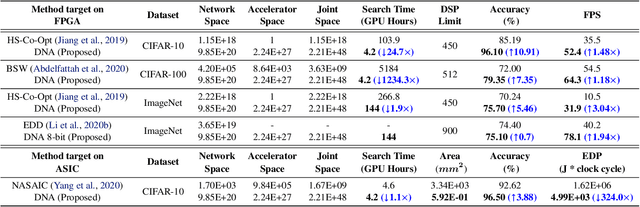

DNA: Differentiable Network-Accelerator Co-Search

Oct 28, 2020

Abstract:Powerful yet complex deep neural networks (DNNs) have fueled a booming demand for efficient DNN solutions to bring DNN-powered intelligence into numerous applications. Jointly optimizing the networks and their accelerators are promising in providing optimal performance. However, the great potential of such solutions have yet to be unleashed due to the challenge of simultaneously exploring the vast and entangled, yet different design spaces of the networks and their accelerators. To this end, we propose DNA, a Differentiable Network-Accelerator co-search framework for automatically searching for matched networks and accelerators to maximize both the task accuracy and acceleration efficiency. Specifically, DNA integrates two enablers: (1) a generic design space for DNN accelerators that is applicable to both FPGA- and ASIC-based DNN accelerators and compatible with DNN frameworks such as PyTorch to enable algorithmic exploration for more efficient DNNs and their accelerators; and (2) a joint DNN network and accelerator co-search algorithm that enables simultaneously searching for optimal DNN structures and their accelerators' micro-architectures and mapping methods to maximize both the task accuracy and acceleration efficiency. Experiments and ablation studies based on FPGA measurements and ASIC synthesis show that the matched networks and accelerators generated by DNA consistently outperform state-of-the-art (SOTA) DNNs and DNN accelerators (e.g., 3.04x better FPS with a 5.46% higher accuracy on ImageNet), while requiring notably reduced search time (up to 1234.3x) over SOTA co-exploration methods, when evaluated over ten SOTA baselines on three datasets. All codes will be released upon acceptance.

ShiftAddNet: A Hardware-Inspired Deep Network

Oct 24, 2020

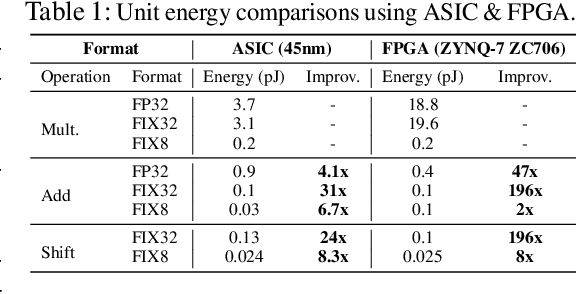

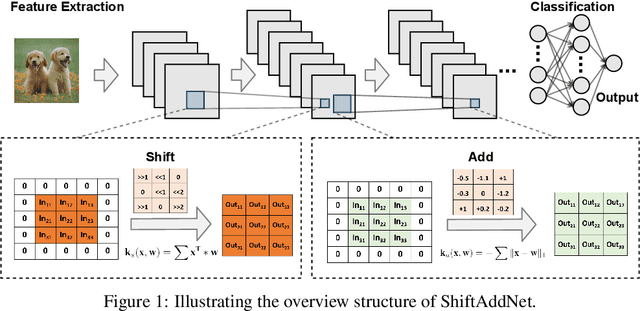

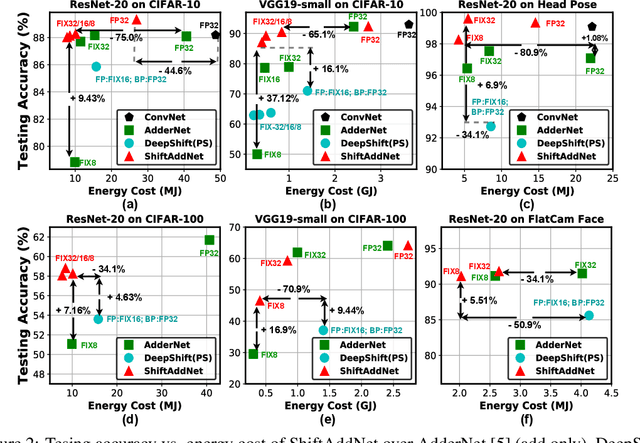

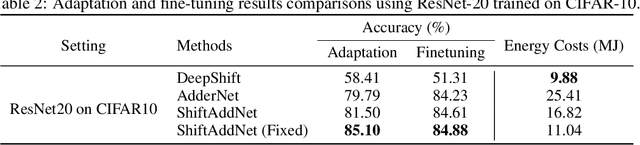

Abstract:Multiplication (e.g., convolution) is arguably a cornerstone of modern deep neural networks (DNNs). However, intensive multiplications cause expensive resource costs that challenge DNNs' deployment on resource-constrained edge devices, driving several attempts for multiplication-less deep networks. This paper presented ShiftAddNet, whose main inspiration is drawn from a common practice in energy-efficient hardware implementation, that is, multiplication can be instead performed with additions and logical bit-shifts. We leverage this idea to explicitly parameterize deep networks in this way, yielding a new type of deep network that involves only bit-shift and additive weight layers. This hardware-inspired ShiftAddNet immediately leads to both energy-efficient inference and training, without compromising the expressive capacity compared to standard DNNs. The two complementary operation types (bit-shift and add) additionally enable finer-grained control of the model's learning capacity, leading to more flexible trade-off between accuracy and (training) efficiency, as well as improved robustness to quantization and pruning. We conduct extensive experiments and ablation studies, all backed up by our FPGA-based ShiftAddNet implementation and energy measurements. Compared to existing DNNs or other multiplication-less models, ShiftAddNet aggressively reduces over 80% hardware-quantified energy cost of DNNs training and inference, while offering comparable or better accuracies. Codes and pre-trained models are available at https://github.com/RICE-EIC/ShiftAddNet.

SmartExchange: Trading Higher-cost Memory Storage/Access for Lower-cost Computation

May 08, 2020

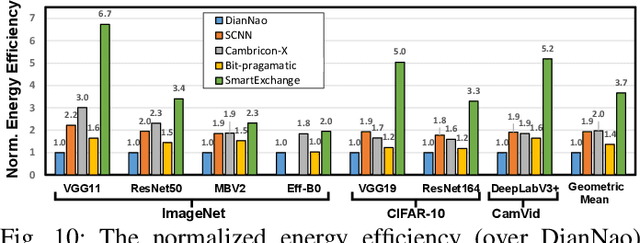

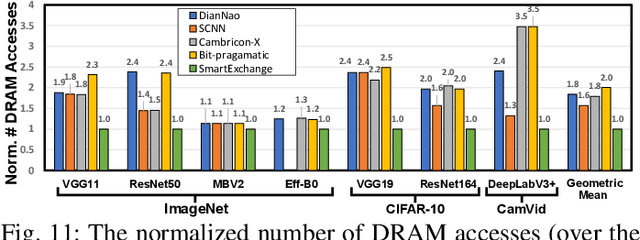

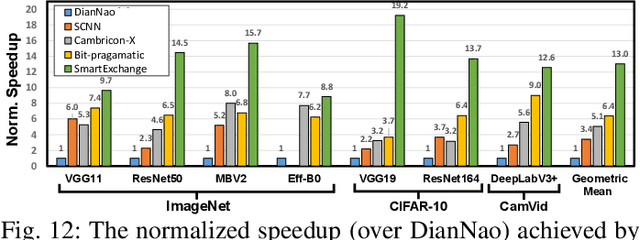

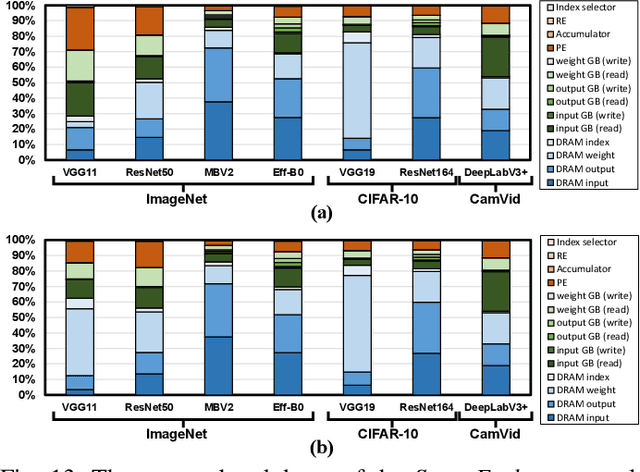

Abstract:We present SmartExchange, an algorithm-hardware co-design framework to trade higher-cost memory storage/access for lower-cost computation, for energy-efficient inference of deep neural networks (DNNs). We develop a novel algorithm to enforce a specially favorable DNN weight structure, where each layerwise weight matrix can be stored as the product of a small basis matrix and a large sparse coefficient matrix whose non-zero elements are all power-of-2. To our best knowledge, this algorithm is the first formulation that integrates three mainstream model compression ideas: sparsification or pruning, decomposition, and quantization, into one unified framework. The resulting sparse and readily-quantized DNN thus enjoys greatly reduced energy consumption in data movement as well as weight storage. On top of that, we further design a dedicated accelerator to fully utilize the SmartExchange-enforced weights to improve both energy efficiency and latency performance. Extensive experiments show that 1) on the algorithm level, SmartExchange outperforms state-of-the-art compression techniques, including merely sparsification or pruning, decomposition, and quantization, in various ablation studies based on nine DNN models and four datasets; and 2) on the hardware level, the proposed SmartExchange based accelerator can improve the energy efficiency by up to 6.7$\times$ and the speedup by up to 19.2$\times$ over four state-of-the-art DNN accelerators, when benchmarked on seven DNN models (including four standard DNNs, two compact DNN models, and one segmentation model) and three datasets.

A New MRAM-based Process In-Memory Accelerator for Efficient Neural Network Training with Floating Point Precision

Mar 02, 2020

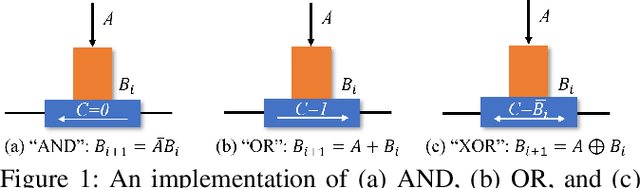

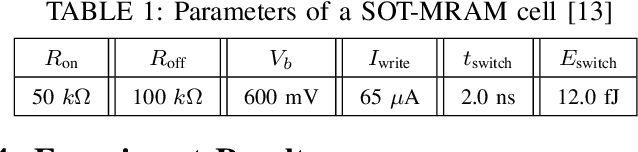

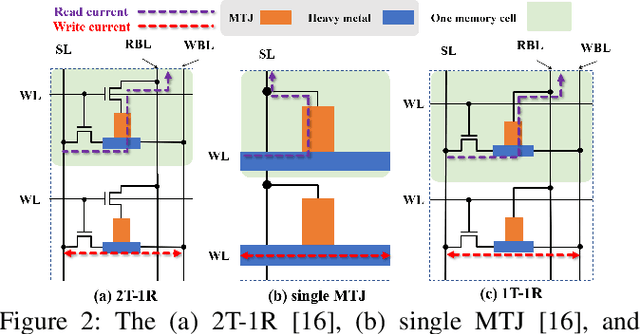

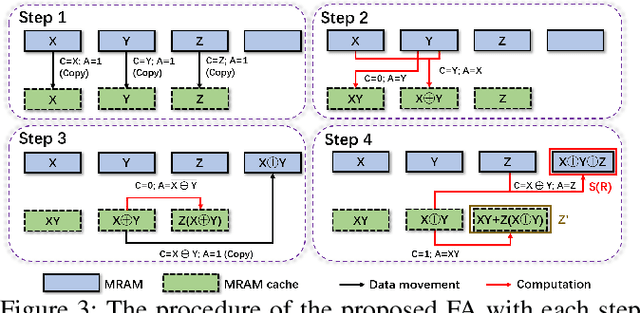

Abstract:The excellent performance of modern deep neural networks (DNNs) comes at an often prohibitive training cost, limiting the rapid development of DNN innovations and raising various environmental concerns. To reduce the dominant data movement cost of training, process in-memory (PIM) has emerged as a promising solution as it alleviates the need to access DNN weights. However, state-of-the-art PIM DNN training accelerators employ either analog/mixed signal computing which has limited precision or digital computing based on a memory technology that supports limited logic functions and thus requires complicated procedure to realize floating point computation. In this paper, we propose a spin orbit torque magnetic random access memory (SOT-MRAM) based digital PIM accelerator that supports floating point precision. Specifically, this new accelerator features an innovative (1) SOT-MRAM cell, (2) full addition design, and (3) floating point computation. Experiment results show that the proposed SOT-MRAM PIM based DNN training accelerator can achieve 3.3x, 1.8x, and 2.5x improvement in terms of energy, latency, and area, respectively, compared with a state-of-the-art PIM based DNN training accelerator.

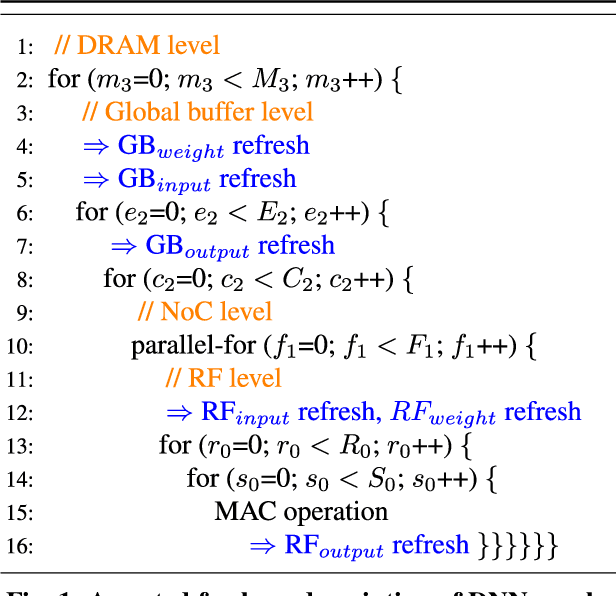

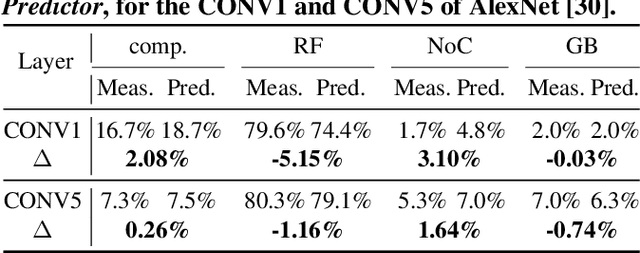

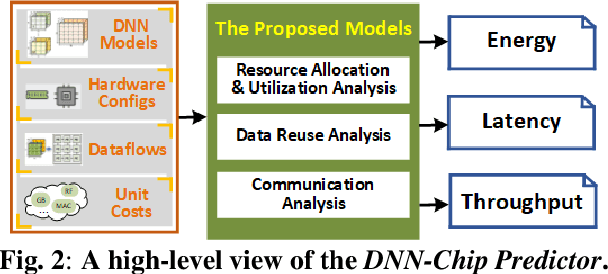

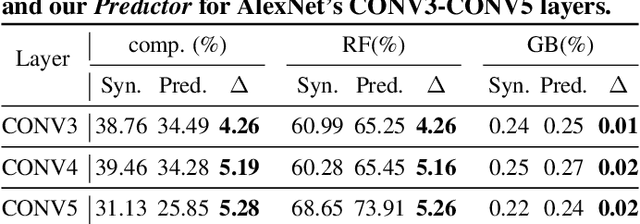

DNN-Chip Predictor: An Analytical Performance Predictor for DNN Accelerators with Various Dataflows and Hardware Architectures

Feb 26, 2020

Abstract:The recent breakthroughs in deep neural networks (DNNs) have spurred a tremendously increased demand for DNN accelerators. However, designing DNN accelerators is non-trivial as it often takes months/years and requires cross-disciplinary knowledge. To enable fast and effective DNN accelerator development, we propose DNN-Chip Predictor, an analytical performance predictor which can accurately predict DNN accelerators' energy, throughput, and latency prior to their actual implementation. Our Predictor features two highlights: (1) its analytical performance formulation of DNN ASIC/FPGA accelerators facilitates fast design space exploration and optimization; and (2) it supports DNN accelerators with different algorithm-to-hardware mapping methods (i.e., dataflows) and hardware architectures. Experiment results based on 2 DNN models and 3 different ASIC/FPGA implementations show that our DNN-Chip Predictor's predicted performance differs from those of chip measurements of FPGA/ASIC implementation by no more than 17.66% when using different DNN models, hardware architectures, and dataflows. We will release code upon acceptance.

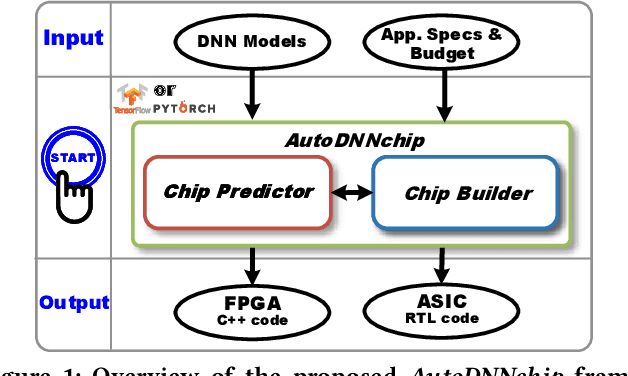

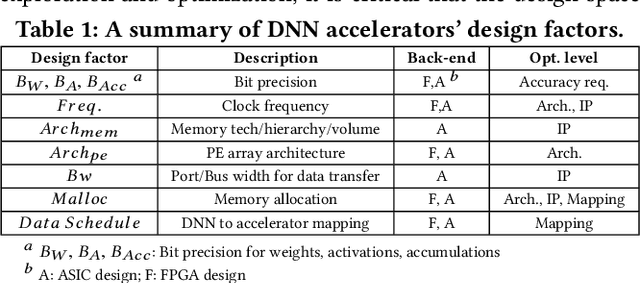

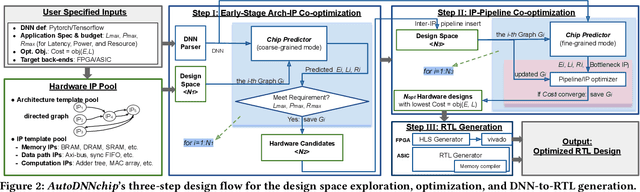

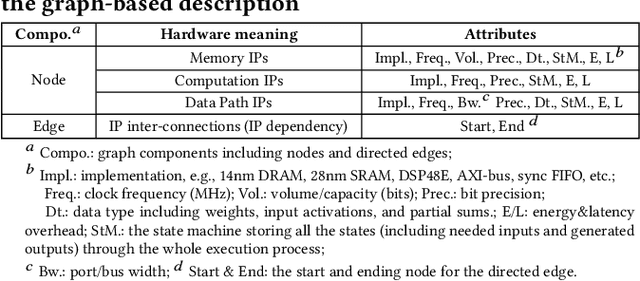

AutoDNNchip: An Automated DNN Chip Predictor and Builder for Both FPGAs and ASICs

Jan 06, 2020

Abstract:Recent breakthroughs in Deep Neural Networks (DNNs) have fueled a growing demand for DNN chips. However, designing DNN chips is non-trivial because: (1) mainstream DNNs have millions of parameters and operations; (2) the large design space due to the numerous design choices of dataflows, processing elements, memory hierarchy, etc.; and (3) an algorithm/hardware co-design is needed to allow the same DNN functionality to have a different decomposition, which would require different hardware IPs to meet the application specifications. Therefore, DNN chips take a long time to design and require cross-disciplinary experts. To enable fast and effective DNN chip design, we propose AutoDNNchip - a DNN chip generator that can automatically generate both FPGA- and ASIC-based DNN chip implementation given DNNs from machine learning frameworks (e.g., PyTorch) for a designated application and dataset. Specifically, AutoDNNchip consists of two integrated enablers: (1) a Chip Predictor, built on top of a graph-based accelerator representation, which can accurately and efficiently predict a DNN accelerator's energy, throughput, and area based on the DNN model parameters, hardware configuration, technology-based IPs, and platform constraints; and (2) a Chip Builder, which can automatically explore the design space of DNN chips (including IP selection, block configuration, resource balancing, etc.), optimize chip design via the Chip Predictor, and then generate optimized synthesizable RTL to achieve the target design metrics. Experimental results show that our Chip Predictor's predicted performance differs from real-measured ones by < 10% when validated using 15 DNN models and 4 platforms (edge-FPGA/TPU/GPU and ASIC). Furthermore, accelerators generated by our AutoDNNchip can achieve better (up to 3.86X improvement) performance than that of expert-crafted state-of-the-art accelerators.

Drawing early-bird tickets: Towards more efficient training of deep networks

Sep 26, 2019

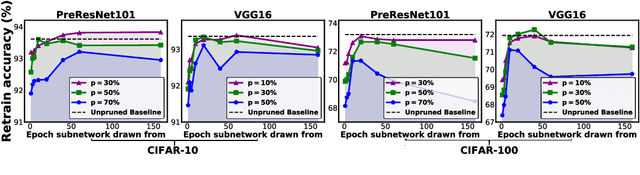

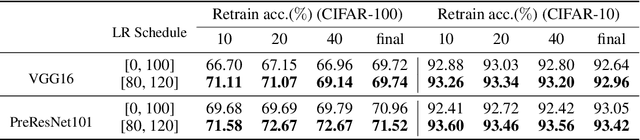

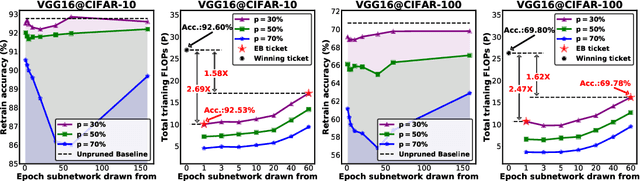

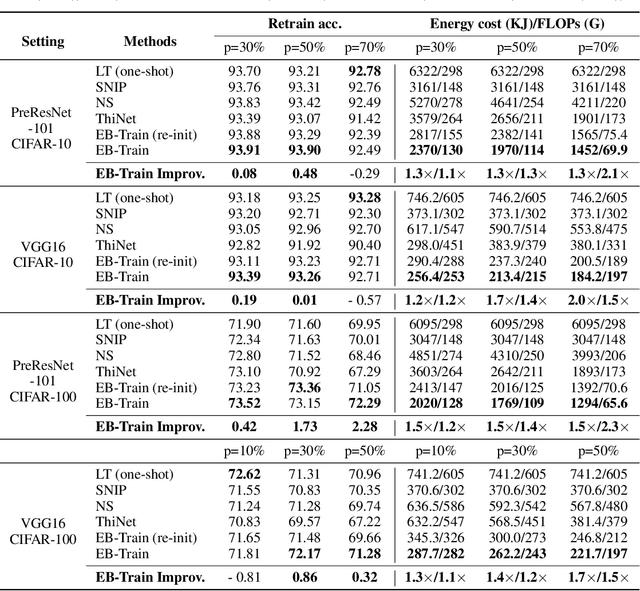

Abstract:(Frankle & Carbin, 2019) shows that there exist winning tickets (small but critical subnetworks) for dense, randomly initialized networks, that can be trained alone to achieve comparable accuracies to the latter in a similar number of iterations. However, the identification of these winning tickets still requires the costly train-prune-retrain process, limiting their practical benefits. In this paper, we discover for the first time that the winning tickets can be identified at the very early training stage, which we term as early-bird (EB) tickets, via low-cost training schemes (e.g., early stopping and low-precision training) at large learning rates. Our finding of EB tickets is consistent with recently reported observations that the key connectivity patterns of neural networks emerge early. Furthermore, we propose a mask distance metric that can be used to identify EB tickets with low computational overhead, without needing to know the true winning tickets that emerge after the full training. Finally, we leverage the existence of EB tickets and the proposed mask distance to develop efficient training methods, which are achieved by first identifying EB tickets via low-cost schemes, and then continuing to train merely the EB tickets towards the target accuracy. Experiments based on various deep networks and datasets validate: 1) the existence of EB tickets, and the effectiveness of mask distance in efficiently identifying them; and 2) that the proposed efficient training via EB tickets can achieve up to 4.7x energy savings while maintaining comparable or even better accuracy, demonstrating a promising and easily adopted method for tackling cost-prohibitive deep network training.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge