Ang Li

PolyMPCNet: Towards ReLU-free Neural Architecture Search in Two-party Computation Based Private Inference

Sep 20, 2022

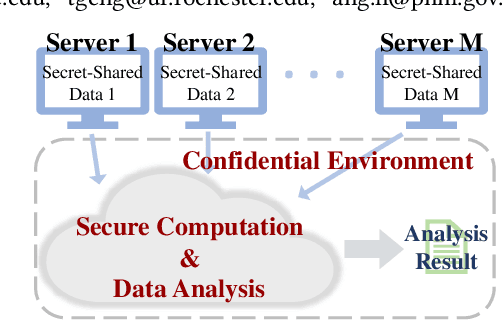

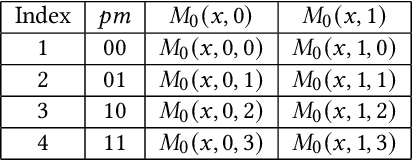

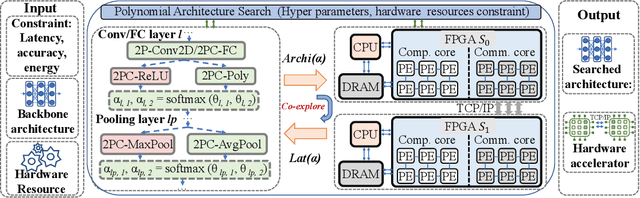

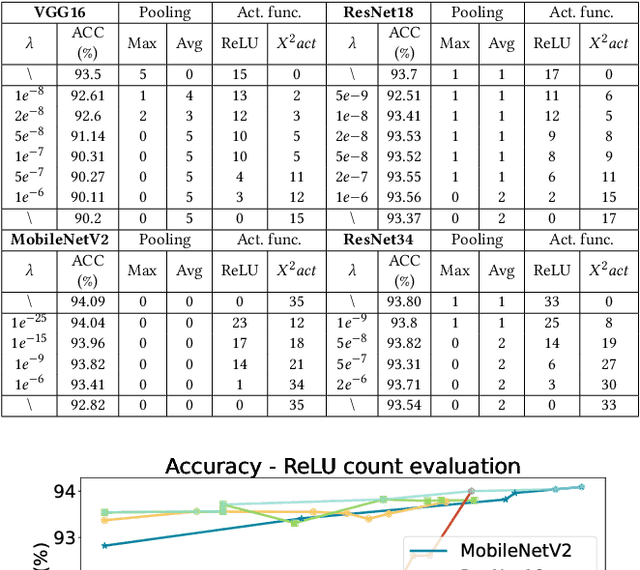

Abstract: The rapid growth and deployment of deep learning (DL) has raised privacy and security concerns. To mitigate these issues, secure multi-party computation (MPC) has been proposed to enable privacy-preserving DL computation. In practice, MPC protocols often incur very high computation and communication overhead, which may prevent their adoption in large-scale systems. Two orthogonal research trends have attracted enormous interest in addressing the energy efficiency of secure deep learning: overhead reduction of the MPC comparison protocol, and hardware acceleration. However, they either achieve a low reduction ratio and suffer from high latency due to limited computation and communication savings, or are power-hungry because existing works mainly focus on general computing platforms such as CPUs and GPUs. In this work, as a first attempt, we develop a systematic framework, PolyMPCNet, for joint overhead reduction of the MPC comparison protocol and hardware acceleration, integrating the hardware latency of the cryptographic building block into the DNN loss function to achieve high energy efficiency, accuracy, and security guarantees. Instead of heuristically checking the model sensitivity after a DNN is well-trained (by deleting or dropping some non-polynomial operators), our key design principle is to enforce exactly what is assumed in the DNN design: training a DNN that is both hardware-efficient and secure, while escaping local minima and saddle points and maintaining high accuracy. More specifically, we propose a straight-through polynomial activation initialization method for a cryptographic-hardware-friendly trainable polynomial activation function to replace the expensive 2P-ReLU operator. We develop a cryptographic hardware scheduler and the corresponding performance model for the Field Programmable Gate Array (FPGA) platform.
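To make the idea of a trainable polynomial activation concrete, here is a minimal sketch in plain Python. It is not the PolyMPCNet implementation: the degree (2), the class name `PolyActivation`, and the identity-like starting coefficients (a = 0, b = 1, c = 0) are all assumptions chosen for illustration, since the abstract does not spell out the initialization. The point is only that such an activation needs multiplications and additions, with no comparison.

```python
class PolyActivation:
    """Hypothetical trainable activation f(x) = a*x^2 + b*x + c.

    Degree 2 and the default coefficients are assumptions for this sketch;
    the defaults make the function start out as the identity map.
    """

    def __init__(self, a=0.0, b=1.0, c=0.0):
        self.a, self.b, self.c = a, b, c

    def forward(self, x):
        # Only multiply and add: no comparison, unlike ReLU's max(0, x).
        return self.a * x * x + self.b * x + self.c

    def coeff_grads(self, x, upstream=1.0):
        # Gradients of the output w.r.t. (a, b, c), scaled by the upstream gradient.
        return (upstream * x * x, upstream * x, upstream)

    def input_grad(self, x, upstream=1.0):
        # Gradient of the output w.r.t. the input x.
        return upstream * (2.0 * self.a * x + self.b)


act = PolyActivation()                 # starts as the identity under the assumed init
curved = PolyActivation(a=0.5, b=1.0)  # after training, a may move away from zero
```

With the assumed defaults, `act.forward(x)` returns `x` unchanged, so swapping the activation in does not perturb the network at the start of training; the coefficients can then be updated using `coeff_grads`.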

Empowering GNNs with Fine-grained Communication-Computation Pipelining on Multi-GPU Platforms

Sep 14, 2022

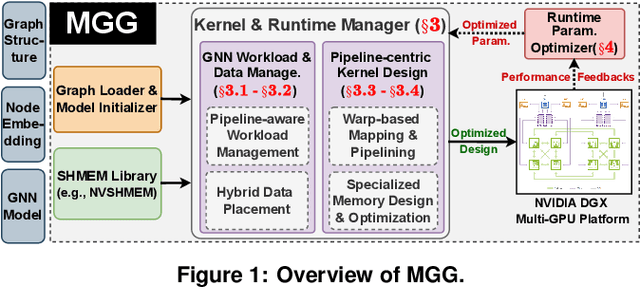

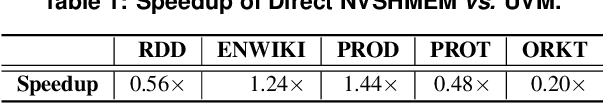

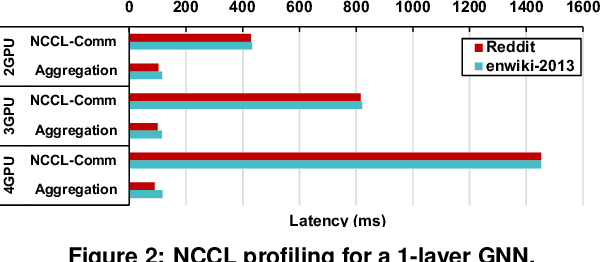

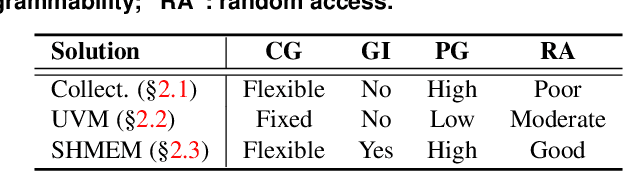

Abstract: The increasing size of input graphs for graph neural networks (GNNs) highlights the demand for multi-GPU platforms. However, existing multi-GPU GNN solutions suffer from inferior performance due to imbalanced computation and inefficient communication. To this end, we propose MGG, a novel system design that accelerates GNNs on multi-GPU platforms via a GPU-centric software pipeline. MGG explores the potential of hiding remote memory access latency in GNN workloads through fine-grained computation-communication pipelining. Specifically, MGG introduces a pipeline-aware workload management strategy and a hybrid data layout design to facilitate communication-computation overlapping. MGG implements an optimized pipeline-centric kernel, which includes workload interleaving and warp-based mapping for efficient GPU kernel operation pipelining, as well as specialized memory designs and optimizations for better data access performance. In addition, MGG incorporates lightweight analytical modeling and optimization heuristics to dynamically improve GNN execution performance for different settings at runtime. Comprehensive experiments demonstrate that MGG outperforms state-of-the-art multi-GPU systems across various GNN settings: on average 3.65X faster than multi-GPU systems with a unified virtual memory design and on average 7.38X faster than the DGCL framework.

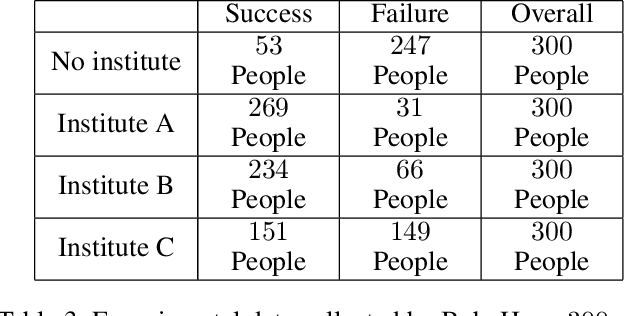

Unit Selection with Nonbinary Treatment and Effect

Aug 20, 2022

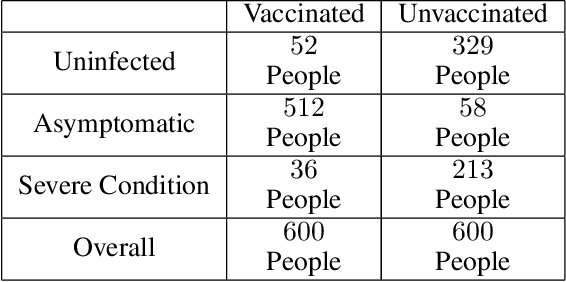

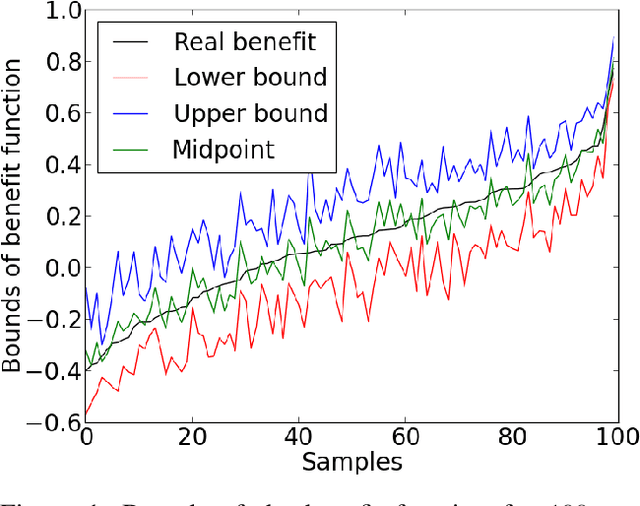

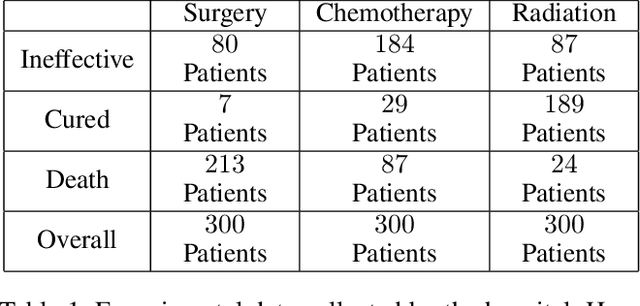

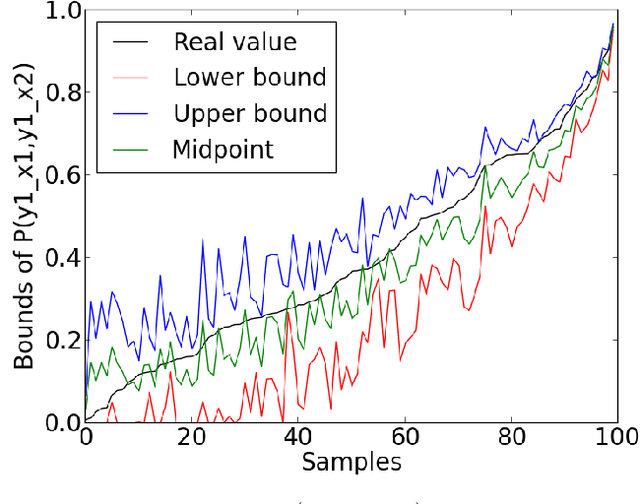

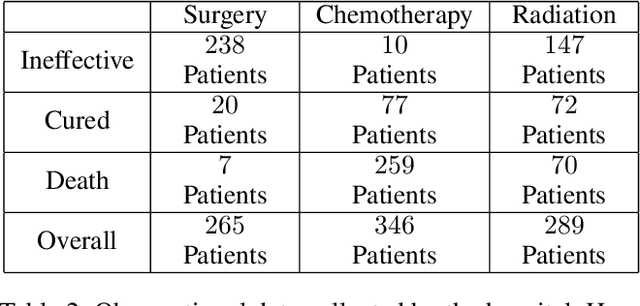

Abstract: The unit selection problem aims to identify a set of individuals who are most likely to exhibit a desired mode of behavior, for example, selecting individuals who would respond one way if encouraged and a different way if not encouraged. Using a combination of experimental and observational data, Li and Pearl derived tight bounds on the "benefit function", which is the payoff/cost associated with selecting an individual with given characteristics. This paper extends the benefit function to a general form in which the treatment and effect are not restricted to binary values. We propose an algorithm to test the identifiability of the nonbinary benefit function and an algorithm to compute the bounds of the nonbinary benefit function using experimental and observational data.

Probabilities of Causation with Nonbinary Treatment and Effect

Aug 19, 2022

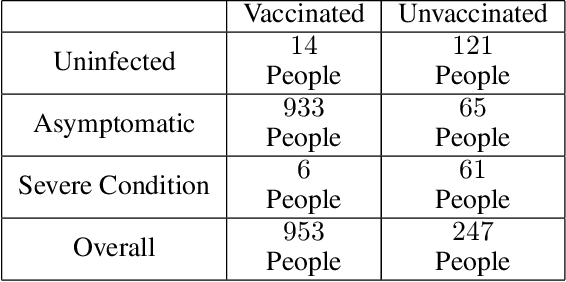

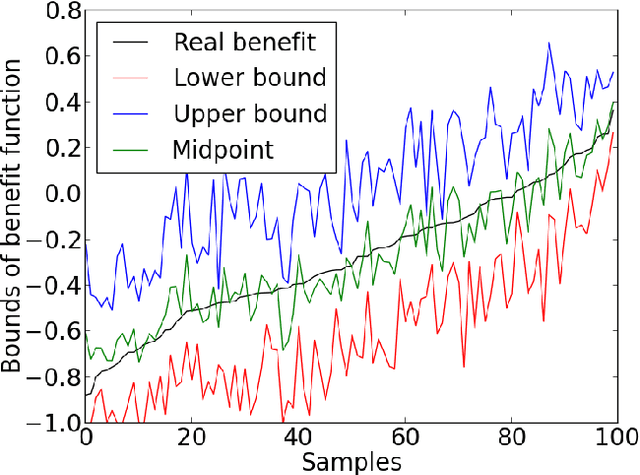

Abstract: This paper deals with the problem of estimating the probabilities of causation when treatment and effect are not binary. Tian and Pearl derived sharp bounds for the probability of necessity and sufficiency (PNS), the probability of sufficiency (PS), and the probability of necessity (PN) using experimental and observational data. In this paper, we provide theoretical bounds for all types of probabilities of causation with multivalued treatments and effects. We further discuss examples where our bounds guide practical decisions and use simulation studies to evaluate how informative the bounds are for various combinations of data.
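As background for the binary case that this paper generalizes, the sketch below computes the PNS bounds attributed to Tian and Pearl, in the form they are commonly stated. It covers only binary treatment and effect, not the nonbinary bounds the paper derives. The function name, argument names, and the numbers in the usage lines are illustrative assumptions.

```python
def pns_bounds(p_y_do_x, p_y_do_xp, p_xy, p_xyp, p_xpy, p_xpyp):
    """Bounds on PNS for binary treatment X and binary outcome Y.

    Experimental inputs:  p_y_do_x = P(y | do(x)),  p_y_do_xp = P(y | do(x')).
    Observational inputs: p_xy = P(x, y), p_xyp = P(x, y'),
                          p_xpy = P(x', y), p_xpyp = P(x', y').
    """
    p_y = p_xy + p_xpy  # marginal P(y) from the observational joint
    lower = max(
        0.0,
        p_y_do_x - p_y_do_xp,
        p_y - p_y_do_xp,
        p_y_do_x - p_y,
    )
    upper = min(
        p_y_do_x,
        1.0 - p_y_do_xp,                         # P(y' | do(x'))
        p_xy + p_xpyp,
        p_y_do_x - p_y_do_xp + p_xyp + p_xpy,
    )
    return lower, upper


# Made-up, mutually consistent numbers purely for illustration.
lo, hi = pns_bounds(0.7, 0.2, p_xy=0.35, p_xyp=0.15, p_xpy=0.10, p_xpyp=0.40)
```

For these made-up inputs the interval is approximately [0.5, 0.7]. The lower bound here comes from the experimental term P(y | do(x)) - P(y | do(x')), and the upper bound from P(y | do(x)).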

A Length Adaptive Algorithm-Hardware Co-design of Transformer on FPGA Through Sparse Attention and Dynamic Pipelining

Aug 07, 2022

Abstract: Transformers have been considered among the most important deep learning models since 2018, in part because they establish state-of-the-art (SOTA) records and could potentially replace existing Deep Neural Networks (DNNs). Despite these remarkable successes, the prolonged turnaround time of Transformer models is a widely recognized roadblock. The variety of sequence lengths imposes additional computing overhead, because inputs need to be zero-padded to the maximum sentence length in the batch to suit parallel computing platforms. This paper targets the field-programmable gate array (FPGA) and proposes a coherent sequence-length-adaptive algorithm-hardware co-design for Transformer acceleration. In particular, we develop a hardware-friendly sparse attention operator and a length-aware hardware resource scheduling algorithm. The proposed sparse attention operator brings the complexity of attention-based models down to linear complexity and alleviates off-chip memory traffic. The proposed length-aware hardware resource scheduling algorithm dynamically allocates hardware resources to fill the pipeline slots and eliminate bubbles for NLP tasks. Experiments show that our design has very small accuracy loss and achieves 80.2× and 2.6× speedup compared to CPU and GPU implementations, and 4× higher energy efficiency than a state-of-the-art GPU accelerator optimized via CUBLAS GEMM.
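The padding overhead mentioned in the abstract can be illustrated with a small back-of-the-envelope calculation. The sketch below is not taken from the paper: the function name and the example batch are assumptions, and dense attention cost is modeled simply as the square of the sequence length.

```python
def padding_waste(lengths):
    """Fraction of work spent on padding when a batch is padded to its longest sequence.

    Returns (token_waste, attention_waste), where attention cost is modeled
    as length squared (dense self-attention), an assumption of this sketch.
    """
    max_len = max(lengths)
    n = len(lengths)

    padded_tokens = n * max_len
    real_tokens = sum(lengths)
    token_waste = 1.0 - real_tokens / padded_tokens

    padded_attention = n * max_len * max_len
    real_attention = sum(length * length for length in lengths)
    attention_waste = 1.0 - real_attention / padded_attention

    return token_waste, attention_waste


# Hypothetical batch of four sentences with very different lengths.
token_waste, attention_waste = padding_waste([8, 16, 32, 64])
```

For this hypothetical batch, about 53% of the token slots and about 67% of the dense attention work are spent on padding, which is the kind of overhead a length-adaptive design aims to avoid.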

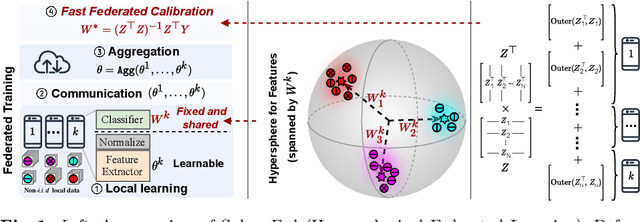

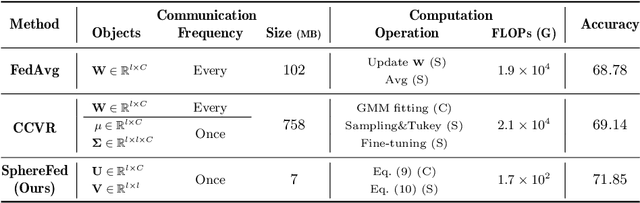

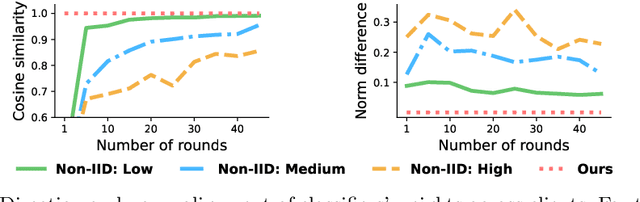

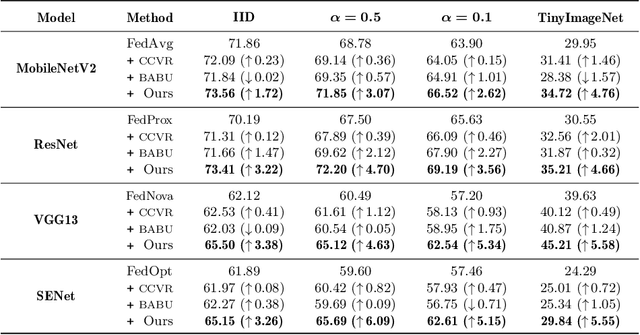

SphereFed: Hyperspherical Federated Learning

Jul 19, 2022

Abstract: Federated Learning aims at training a global model from multiple decentralized devices (i.e., clients) without exchanging their private local data. A key challenge is handling non-i.i.d. (not independent and identically distributed) data across clients, which may induce disparities in their local features. We introduce the Hyperspherical Federated Learning (SphereFed) framework to address the non-i.i.d. issue by constraining the learned representations of data points to lie on a unit hypersphere shared by clients. Specifically, all clients learn their local representations by minimizing the loss with respect to a fixed classifier whose weights span the unit hypersphere. After federated training has improved the global model, this classifier is further calibrated with a closed-form solution obtained by minimizing a mean squared loss. We show that the calibration solution can be computed efficiently and in a distributed manner without direct access to local data. Extensive experiments indicate that our SphereFed approach improves the accuracy of multiple existing federated learning algorithms by a considerable margin (up to 6% on challenging datasets) with enhanced computation and communication efficiency across datasets and model architectures.
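One standard way a least-squares classifier can be fitted without pooling raw data is for each client to share only the summary matrices X^T X and X^T Y, which the server adds up and solves. The sketch below illustrates that idea in plain Python. Whether SphereFed computes its calibration exactly this way is an assumption; the function names, the small ridge term, and the toy data are all hypothetical.

```python
def gram_stats(features, targets):
    """Per-client summaries: A = X^T X (d x d) and B = X^T Y (d x k)."""
    d, k = len(features[0]), len(targets[0])
    A = [[sum(x[i] * x[j] for x in features) for j in range(d)] for i in range(d)]
    B = [[sum(x[i] * y[c] for x, y in zip(features, targets)) for c in range(k)]
         for i in range(d)]
    return A, B


def mat_add(M, N):
    """Element-wise sum of two equally shaped matrices."""
    return [[a + b for a, b in zip(row_m, row_n)] for row_m, row_n in zip(M, N)]


def solve(A, B, ridge=1e-8):
    """Solve (A + ridge*I) W = B by Gauss-Jordan elimination with partial pivoting."""
    d = len(A)
    M = [[A[i][j] + (ridge if i == j else 0.0) for j in range(d)] + list(B[i])
         for i in range(d)]
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(d):
            if r != col:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[d:] for row in M]


# Toy data: unit-norm 2-D features with one-hot targets, split across two clients.
client1 = ([[1.0, 0.0], [0.6, 0.8]], [[1.0, 0.0], [0.0, 1.0]])
client2 = ([[0.0, 1.0], [0.8, 0.6]], [[0.0, 1.0], [1.0, 0.0]])

A1, B1 = gram_stats(*client1)
A2, B2 = gram_stats(*client2)
W_federated = solve(mat_add(A1, A2), mat_add(B1, B2))   # server sees only summaries

# Reference: the same fit computed on pooled raw data.
W_pooled = solve(*gram_stats(client1[0] + client2[0], client1[1] + client2[1]))
```

Because the summaries are sums over data points, adding them across clients gives the same matrices as pooling the data, so `W_federated` matches `W_pooled` up to floating-point rounding.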

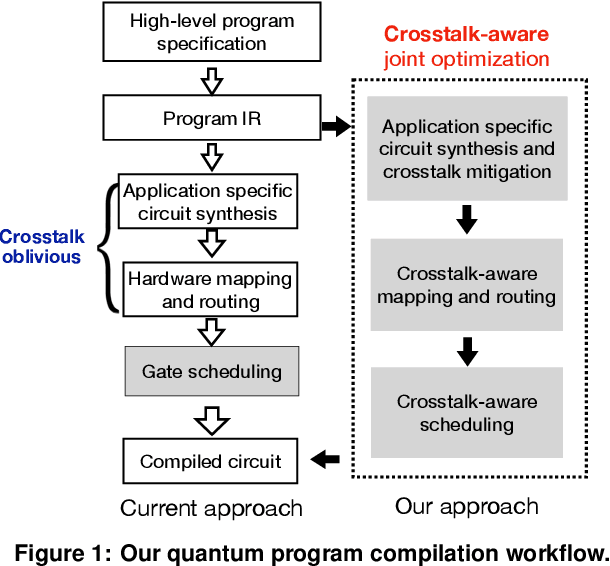

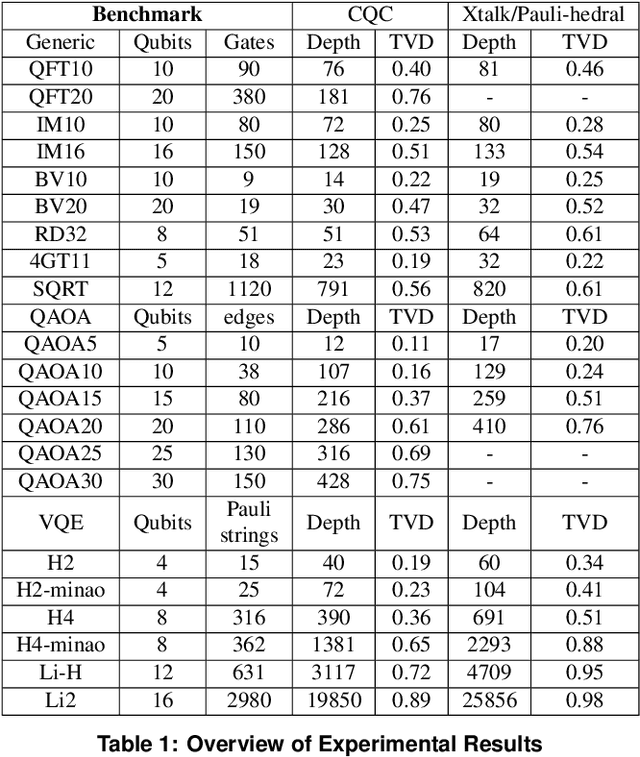

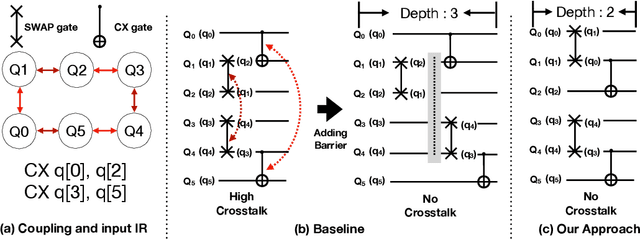

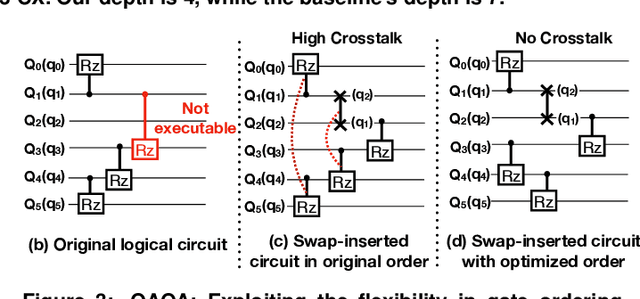

A Synergistic Compilation Workflow for Tackling Crosstalk in Quantum Machines

Jul 19, 2022

Abstract: Near-term quantum systems tend to be noisy. Crosstalk noise has been recognized as one of several major types of noise in superconducting Noisy Intermediate-Scale Quantum (NISQ) devices. Crosstalk arises from the concurrent execution of two-qubit gates, such as CX, on nearby qubits. It may significantly raise the error rate of gates compared to running them individually. Crosstalk can be mitigated through scheduling or hardware tuning. Prior studies, however, manage crosstalk at a very late phase in the compilation process, usually after hardware mapping is done. This may miss significant opportunities to optimize algorithm logic, routing, and crosstalk at the same time. In this paper, we push the envelope by considering all these factors simultaneously at a very early compilation stage. We propose a crosstalk-aware quantum program compilation framework called CQC that can enhance crosstalk mitigation while achieving satisfactory circuit depth. Moreover, we identify opportunities for application-specific crosstalk mitigation in the translation from intermediate representation to circuit, for instance, the CX ladder construction in variational quantum eigensolvers (VQE). Evaluations through simulation and on real IBM-Q devices show that our framework can significantly reduce the error rate by up to 6×, with only ~60% circuit depth compared to state-of-the-art gate scheduling approaches. In particular, for VQE, we demonstrate 49% circuit depth reduction with 9.6% fidelity improvement over prior art on the H4 molecule using IBMQ Guadalupe. Our CQC framework will be released on GitHub.

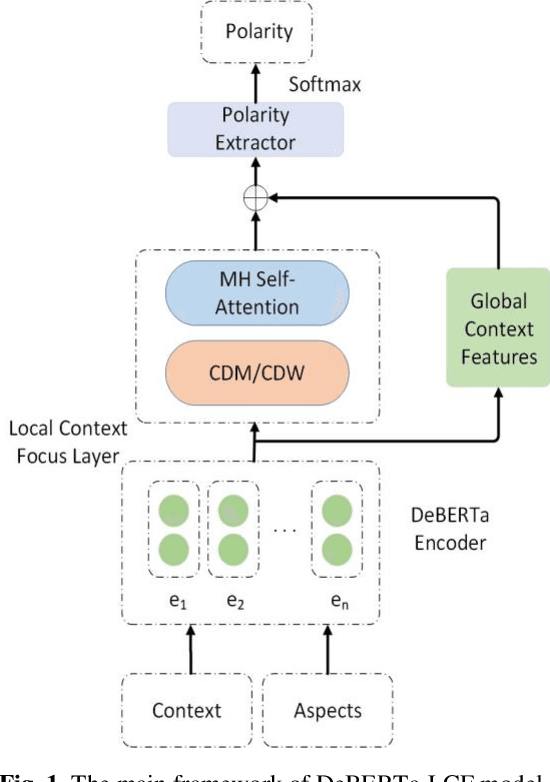

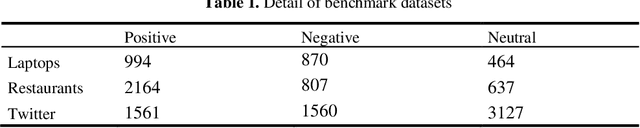

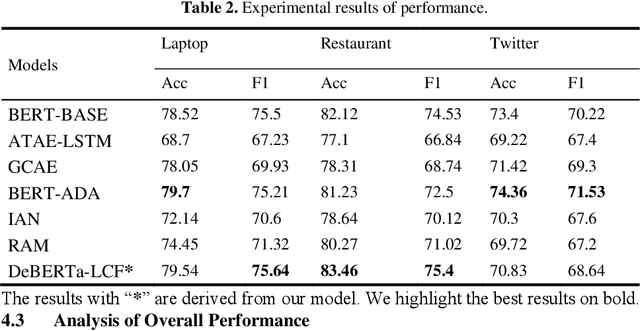

Aspect-Based Sentiment Analysis using Local Context Focus Mechanism with DeBERTa

Jul 07, 2022

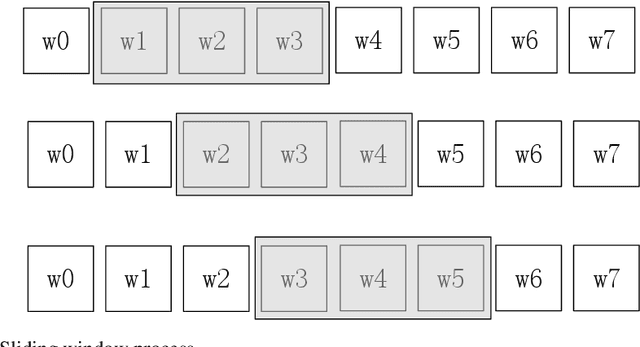

Abstract: Text sentiment analysis, also known as opinion mining, is the computational study of people's views, evaluations, attitudes, and emotions toward entities. It can be divided into text-level, sentence-level, and aspect-level sentiment analysis. Aspect-Based Sentiment Analysis (ABSA) is a fine-grained task in the field of sentiment analysis that aims to predict the polarity of aspects. Research on pre-trained neural models has significantly improved the performance of many natural language processing tasks. In recent years, pre-trained models (PTMs) have been applied to ABSA, which raises the question of whether PTMs contain sufficient syntactic information for ABSA. In this paper, we explore the recent DeBERTa model (Decoding-enhanced BERT with disentangled attention) to solve the ABSA problem. DeBERTa is a Transformer-based neural language model that uses self-supervised learning to pre-train on a large raw text corpus. Based on the Local Context Focus (LCF) mechanism, and by integrating the DeBERTa model, we propose a multi-task learning model for aspect-based sentiment analysis. Experimental results on the most commonly used laptop and restaurant datasets of SemEval-2014 and on the ACL Twitter dataset show that the LCF mechanism with DeBERTa yields a significant improvement.

A Rare Topic Discovery Model for Short Texts Based on Co-occurrence word Network

Jun 30, 2022

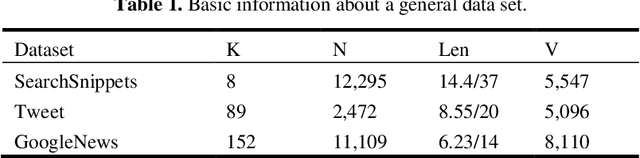

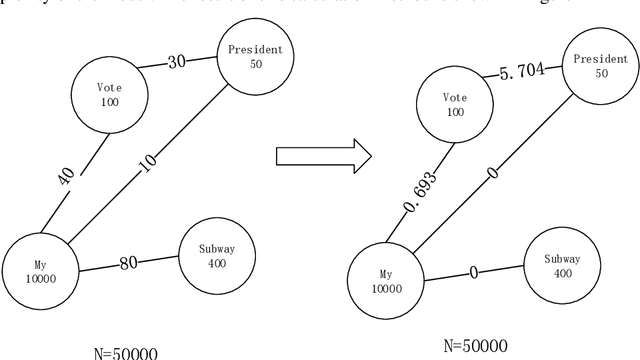

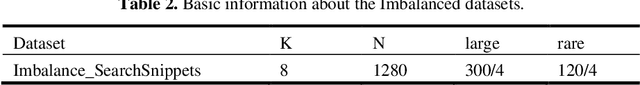

Abstract: We provide a simple and general solution for discovering scarce topics in unbalanced short-text datasets: a word co-occurrence network-based model, CWIBTD, which simultaneously addresses the sparsity and imbalance of short-text topics and attenuates the effect of occasional pairwise word co-occurrences, allowing the model to focus more on discovering scarce topics. Unlike previous approaches, CWIBTD uses co-occurrence word networks to model the topic distribution of each word, which improves the semantic density of the data space, and it remains sensitive to rare topics by improving the way node activity is calculated and by normalizing scarce topics and large topics to some extent. In addition, using the same Gibbs sampling as LDA makes CWIBTD easy to extend to various application scenarios. Extensive experimental validation on the unbalanced short-text dataset confirms the superiority of CWIBTD over the baseline approach in discovering rare topics. Our model can be used for early and accurate discovery of emerging topics or unexpected events on social platforms.

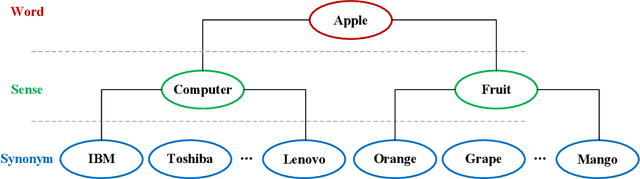

Chinese Word Sense Embedding with SememeWSD and Synonym Set

Jun 29, 2022

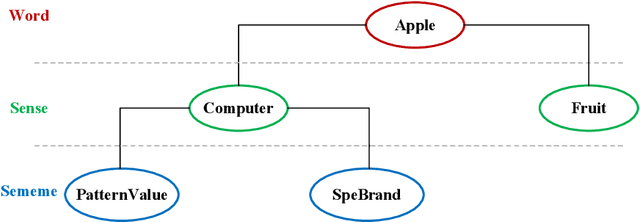

Abstract: Word embedding is a fundamental natural language processing task that learns feature representations of words. However, most word embedding methods assign only one vector to a word, even though polysemous words have multiple senses. To address this limitation, we propose the SememeWSD Synonym (SWSDS) model, which assigns a different vector to every sense of a polysemous word with the help of word sense disambiguation (WSD) and the synonym sets in OpenHowNet. We use the SememeWSD model, an unsupervised word sense disambiguation model based on OpenHowNet, to perform word sense disambiguation and annotate each polysemous word with a sense ID. Then, we obtain the top 10 synonyms of the word sense from OpenHowNet and calculate the average vector of the synonyms as the vector of the word sense. In experiments, we evaluate the SWSDS model on semantic similarity calculation with Gensim's wmdistance method, where it improves accuracy. We also examine the SememeWSD model with different BERT models to find the most effective one.
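The averaging step described in the abstract can be sketched in a few lines of plain Python. This is an illustration only: the function name, the toy English vocabulary, and the choice to skip synonyms that have no embedding are assumptions, and the synonym list is supplied by hand rather than retrieved from OpenHowNet.

```python
def sense_vector(synonyms, embeddings, top_k=10):
    """Average the embeddings of the first top_k synonyms of one word sense.

    synonyms:   ranked list of synonym strings for the sense (hypothetical input).
    embeddings: dict mapping a word to its vector (list of floats).
    Synonyms missing from `embeddings` are skipped, an assumption of this sketch.
    """
    vectors = [embeddings[w] for w in synonyms[:top_k] if w in embeddings]
    if not vectors:
        raise ValueError("no synonym has an embedding")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


# Toy 2-D embeddings and a hand-written synonym list for one sense of "bank".
toy_embeddings = {"lender": [1.0, 0.0], "treasury": [0.0, 1.0]}
bank_finance = sense_vector(["lender", "treasury", "unknown_word"], toy_embeddings)
```

Here the two known synonyms are averaged into a single vector for the financial sense, while a different synonym list (for example, for the riverside sense) would produce a different vector for the same surface word.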
