Grammatical Error Detection

Grammatical error detection (GED) is the task of detecting errors in text, such as spelling, punctuation, grammatical, and word choice errors. GED is a key component of grammatical error correction (GEC) systems.
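
As an illustration of how detection can be framed, the sketch below derives token-level error labels by aligning a sentence with its corrected version and flagging the source tokens that changed. It is not taken from any paper listed here; the function name `ged_labels` and the convention of flagging the following token when a word is missing are assumptions made for this example.

```python
from difflib import SequenceMatcher


def ged_labels(source_tokens, corrected_tokens):
    """Return one 0/1 label per source token (1 = token is part of an error)."""
    labels = [0] * len(source_tokens)
    matcher = SequenceMatcher(a=source_tokens, b=corrected_tokens, autojunk=False)
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            # Source tokens that were rewritten or removed in the correction.
            for i in range(i1, i2):
                labels[i] = 1
        elif tag == "insert" and labels:
            # A word is missing in the source: flag the token that follows the
            # gap (or the last token if the gap is at the end). Assumed convention.
            labels[min(i1, len(labels) - 1)] = 1
    return labels


print(ged_labels("He go to school yesterday".split(),
                 "He went to school yesterday".split()))
```

Labels of this kind are what a token classifier would be trained to predict; real annotation schemes may also distinguish error types rather than using a single binary flag.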

Papers and Code

IsoSignVid2Aud: Sign Language Video to Audio Conversion without Text Intermediaries

Oct 09, 2025

Sign language to spoken language audio translation is important to connect the hearing- and speech-challenged humans with others. We consider sign language videos with isolated sign sequences rather than continuous grammatical signing. Such videos are useful in educational applications and sign prompt interfaces. Towards this, we propose IsoSignVid2Aud, a novel end-to-end framework that translates sign language videos with a sequence of possibly non-grammatic continuous signs to speech without requiring intermediate text representation, providing immediate communication benefits while avoiding the latency and cascading errors inherent in multi-stage translation systems. Our approach combines an I3D-based feature extraction module with a specialized feature transformation network and an audio generation pipeline, utilizing a novel Non-Maximal Suppression (NMS) algorithm for the temporal detection of signs in non-grammatic continuous sequences. Experimental results demonstrate competitive performance on ASL-Citizen-1500 and WLASL-100 datasets with Top-1 accuracies of 72.01% and 78.67%, respectively, and audio quality metrics (PESQ: 2.67, STOI: 0.73) indicating intelligible speech output. Code is available at: https://github.com/BheeshmSharma/IsoSignVid2Aud_AIMLsystems-2025.
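
The abstract does not describe how its NMS variant works, so the following is only a sketch of the standard greedy form of temporal non-maximum suppression, not the paper's algorithm. It assumes each candidate sign is a hypothetical `(start, end, score)` tuple and that an IoU threshold of 0.5 separates duplicates from distinct detections.

```python
def temporal_iou(a, b):
    """Intersection-over-union of two time segments given as (start, end, ...)."""
    intersection = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - intersection
    return intersection / union if union > 0 else 0.0


def temporal_nms(segments, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring segment, drop overlapping rivals."""
    by_score = sorted(segments, key=lambda s: s[2], reverse=True)
    kept = []
    for segment in by_score:
        # Keep a segment only if it does not overlap too much with a kept one.
        if all(temporal_iou(segment, k) <= iou_threshold for k in kept):
            kept.append(segment)
    # Return detections in temporal order.
    return sorted(kept, key=lambda s: s[0])


candidates = [(0, 10, 0.9), (1, 11, 0.8), (20, 30, 0.7)]
print(temporal_nms(candidates))
```

In this example the second candidate overlaps the first almost entirely and has a lower score, so it is suppressed, leaving one detection per sign.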

MetaLogic: Robustness Evaluation of Text-to-Image Models via Logically Equivalent Prompts

Oct 01, 2025

Recent advances in text-to-image (T2I) models, especially diffusion-based architectures, have significantly improved the visual quality of generated images. However, these models continue to struggle with a critical limitation: maintaining semantic consistency when input prompts undergo minor linguistic variations. Despite being logically equivalent, such prompt pairs often yield misaligned or semantically inconsistent images, exposing a lack of robustness in reasoning and generalisation. To address this, we propose MetaLogic, a novel evaluation framework that detects T2I misalignment without relying on ground truth images. MetaLogic leverages metamorphic testing, generating image pairs from prompts that differ grammatically but are semantically identical. By directly comparing these image pairs, the framework identifies inconsistencies that signal failures in preserving the intended meaning, effectively diagnosing robustness issues in the model's logic understanding. Unlike existing evaluation methods that compare a generated image to a single prompt, MetaLogic evaluates semantic equivalence between paired images, offering a scalable, ground-truth-free approach to identifying alignment failures. It categorises these alignment errors (e.g., entity omission, duplication, positional misalignment) and surfaces counterexamples that can be used for model debugging and refinement. We evaluate MetaLogic across multiple state-of-the-art T2I models and reveal consistent robustness failures across a range of logical constructs. We find that even the SOTA text-to-image models like Flux.dev and DALLE-3 demonstrate a 59 percent and 71 percent misalignment rate, respectively. Our results show that MetaLogic is not only efficient and scalable, but also effective in uncovering fine-grained logical inconsistencies that are overlooked by existing evaluation metrics.

A Representation Level Analysis of NMT Model Robustness to Grammatical Errors

May 27, 2025

Understanding robustness is essential for building reliable NLP systems. Unfortunately, in the context of machine translation, previous work mainly focused on documenting robustness failures or improving robustness. In contrast, we study robustness from a model representation perspective by looking at internal model representations of ungrammatical inputs and how they evolve through model layers. For this purpose, we perform Grammatical Error Detection (GED) probing and representational similarity analysis. Our findings indicate that the encoder first detects the grammatical error, then corrects it by moving its representation toward the correct form. To understand what contributes to this process, we turn to the attention mechanism where we identify what we term Robustness Heads. We find that Robustness Heads attend to interpretable linguistic units when responding to grammatical errors, and that when we fine-tune models for robustness, they tend to rely more on Robustness Heads for updating the ungrammatical word representation.

Detecting Spelling and Grammatical Anomalies in Russian Poetry Texts

May 07, 2025

The quality of natural language texts in fine-tuning datasets plays a critical role in the performance of generative models, particularly in computational creativity tasks such as poem or song lyric generation. Fluency defects in generated poems significantly reduce their value. However, training texts are often sourced from internet-based platforms without stringent quality control, posing a challenge for data engineers to manage defect levels effectively. To address this issue, we propose the use of automated linguistic anomaly detection to identify and filter out low-quality texts from training datasets for creative models. In this paper, we present a comprehensive comparison of unsupervised and supervised text anomaly detection approaches, utilizing both synthetic and human-labeled datasets. We also introduce the RUPOR dataset, a collection of Russian-language human-labeled poems designed for cross-sentence grammatical error detection, and provide the full evaluation code. Our work aims to empower the community with tools and insights to improve the quality of training datasets for generative models in creative domains.

ARWI: Arabic Write and Improve

Apr 16, 2025

Although Arabic is spoken by over 400 million people, advanced Arabic writing assistance tools remain limited. To address this gap, we present ARWI, a new writing assistant that helps learners improve essay writing in Modern Standard Arabic. ARWI is the first publicly available Arabic writing assistant to include a prompt database for different proficiency levels, an Arabic text editor, state-of-the-art grammatical error detection and correction, and automated essay scoring aligned with the Common European Framework of Reference standards for language attainment. Moreover, ARWI can be used to gather a growing auto-annotated corpus, facilitating further research on Arabic grammar correction and essay scoring, as well as profiling patterns of errors made by native speakers and non-native learners. A preliminary user study shows that ARWI provides actionable feedback, helping learners identify grammatical gaps, assess language proficiency, and guide improvement.

Scaling and Prompting for Improved End-to-End Spoken Grammatical Error Correction

May 27, 2025

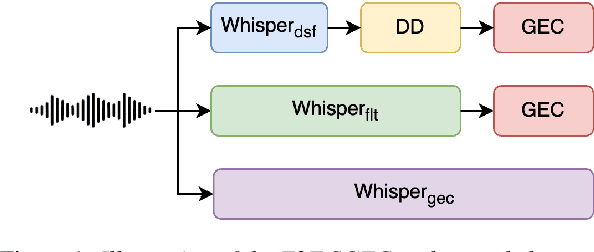

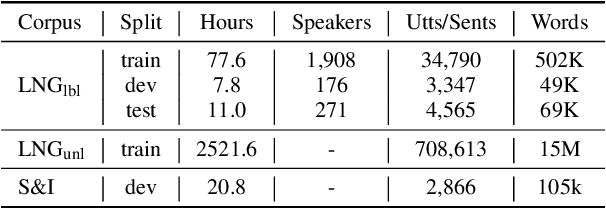

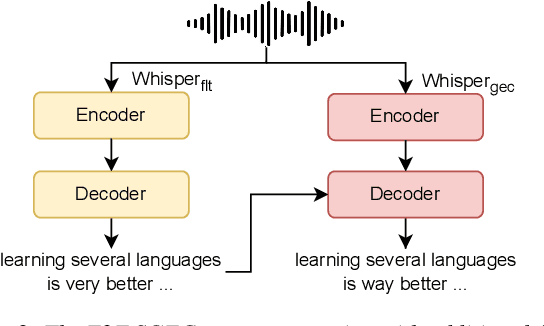

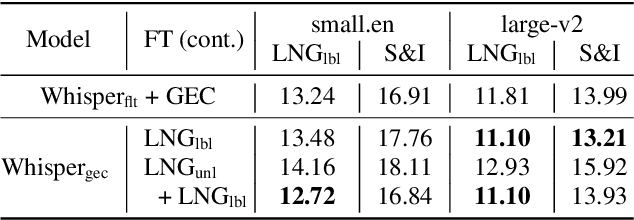

Spoken Grammatical Error Correction (SGEC) and Feedback (SGECF) are crucial for second language learners, teachers and test takers. Traditional SGEC systems rely on a cascaded pipeline consisting of an ASR, a module for disfluency detection (DD) and removal and one for GEC. With the rise of end-to-end (E2E) speech foundation models, we investigate their effectiveness in SGEC and feedback generation. This work introduces a pseudo-labelling process to address the challenge of limited labelled data, expanding the training data size from 77 hours to approximately 2500 hours, leading to improved performance. Additionally, we prompt an E2E Whisper-based SGEC model with fluent transcriptions, showing a slight improvement in SGEC performance, with more significant gains in feedback generation. Finally, we assess the impact of increasing model size, revealing that while pseudo-labelled data does not yield performance gain for a larger Whisper model, training with prompts proves beneficial.

Bangla Grammatical Error Detection Leveraging Transformer-based Token Classification

Nov 13, 2024

Bangla is the seventh most spoken language in the world by total number of speakers, and yet the development of an automated grammar checker for this language is an understudied problem. Bangla grammatical error detection is the task of detecting sub-strings of a Bangla text that contain grammatical, punctuation, or spelling errors, which is crucial for developing an automated Bangla typing assistant. Our approach involves framing the task as a token classification problem and utilizing state-of-the-art transformer-based models. We then combine the output of these models and apply rule-based post-processing to generate a more reliable and comprehensive result. Our system is evaluated on a dataset consisting of over 25,000 texts from various sources. Our best model achieves a Levenshtein distance score of 1.04. Finally, we provide a detailed analysis of different components of our system.
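
The abstract reports a Levenshtein distance score but does not say how it is aggregated over the dataset. For reference, the sketch below computes the standard character-level Levenshtein (edit) distance between two strings; applying it to a predicted and a reference annotation, and averaging over texts, would be an assumption rather than the paper's documented procedure.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn string a into string b."""
    # previous[j] holds the distance between the processed prefix of a and b[:j].
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]  # distance from a[:i] to the empty string
        for j, char_b in enumerate(b, start=1):
            substitution = previous[j - 1] + (char_a != char_b)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


print(levenshtein("kitten", "sitting"))  # 3
```

Lower values mean the two strings are closer, so a score near 1 would indicate outputs that differ from the reference by about one character edit on average under that assumed aggregation.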

Enhancing Grammatical Error Detection using BERT with Cleaned Lang-8 Dataset

Nov 23, 2024

This paper presents an improved LLM-based model for Grammatical Error Detection (GED), which is a very challenging and equally important problem for many applications. The traditional approach to GED involved hand-designed features, but recently, Neural Networks (NN) have automated the discovery of these features, improving performance in GED. Traditional rule-based systems have an F1 score of 0.50-0.60, and earlier machine learning models, including decision trees and simple neural networks, give an F1 score of 0.65-0.75. Previous deep learning models, for example, Bi-LSTM, have reported F1 scores in the range from 0.80 to 0.90. In our study, we have fine-tuned various transformer models using the Lang8 dataset rigorously cleaned by us. In our experiments, the BERT-base-uncased model gave an impressive performance with an F1 score of 0.91 and accuracy of 98.49% on training data and 90.53% on testing data, also showcasing the importance of data cleaning. Increasing model size using BERT-large-uncased or RoBERTa-large did not give any noticeable improvements in performance or advantage for this task, underscoring that larger models are not always better. Our results clearly show how far rigorous data cleaning and simple transformer-based models can go toward significantly improving the quality of GED.

Loss-Aware Curriculum Learning for Chinese Grammatical Error Correction

Dec 31, 2024

Chinese grammatical error correction (CGEC) aims to detect and correct errors in the input Chinese sentences. Recently, Pre-trained Language Models (PLMs) have been employed to improve the performance. However, current approaches ignore that correction difficulty varies across different instances and treat these samples equally, which makes model learning harder. To address this problem, we propose a multi-granularity Curriculum Learning (CL) framework. Specifically, we first calculate the correction difficulty of these samples and feed them into the model from easy to hard batch by batch. Then Instance-Level CL is employed to help the model optimize in the appropriate direction automatically by regulating the loss function. Extensive experimental results and comprehensive analyses of various datasets prove the effectiveness of our method.
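
The easy-to-hard batching step can be sketched as follows. The abstract does not define its difficulty measure (the title suggests it is loss-based), so this example substitutes a simple stand-in: one minus the character-level similarity between the erroneous sentence and its correction. The names `correction_difficulty` and `curriculum_batches` are hypothetical, and the instance-level loss regulation is not shown.

```python
from difflib import SequenceMatcher


def correction_difficulty(source, target):
    """Stand-in difficulty: 0.0 when no edit is needed, up to 1.0 when
    source and target share nothing. Not the paper's measure."""
    return 1.0 - SequenceMatcher(None, source, target, autojunk=False).ratio()


def curriculum_batches(pairs, batch_size):
    """Order (source, target) pairs from easy to hard, then split into batches."""
    ordered = sorted(pairs, key=lambda pair: correction_difficulty(*pair))
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


pairs = [("abcd", "wxyz"), ("abcd", "abcd"), ("abcd", "abcf")]
for batch in curriculum_batches(pairs, batch_size=2):
    print(batch)
```

A training loop would then consume the batches in the returned order, so the model sees near-identical pairs before heavily edited ones.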

GECTurk WEB: An Explainable Online Platform for Turkish Grammatical Error Detection and Correction

Oct 16, 2024

Sophisticated grammatical error detection/correction tools are available for a small set of languages such as English and Chinese. However, it is not straightforward -- if not impossible -- to adapt them to morphologically rich languages with complex writing rules like Turkish, which has more than 80 million speakers. Even though several tools exist for Turkish, they primarily focus on spelling errors rather than grammatical errors and lack features such as web interfaces, error explanations and feedback mechanisms. To fill this gap, we introduce GECTurk WEB, a light, open-source, and flexible web-based system that can detect and correct the most common forms of Turkish writing errors, such as the misuse of diacritics, compound and foreign words, pronouns, and light verbs, along with spelling mistakes. Our system provides native speakers and second language learners with an easily accessible tool to detect/correct such mistakes and also to learn from their mistakes by showing the explanation for the violated rule(s). The proposed system achieves a system usability score of 88.3, and is shown to help learn/remember a grammatical rule (confirmed by 80% of the participants). GECTurk WEB is available both as an offline tool at https://github.com/GGLAB-KU/gecturkweb and online at www.gecturk.net.
