Zhiqiang Guo

GSE: Evaluating Sticker Visual Semantic Similarity via a General Sticker Encoder

Nov 07, 2025

Abstract: Stickers have become a popular form of visual communication, yet understanding their semantic relationships remains challenging because their content is highly diverse and symbolic. In this work, we formally define the Sticker Semantic Similarity task and introduce Triple-S, the first benchmark for this task, consisting of 905 human-annotated positive and negative sticker pairs. Through extensive evaluation, we show that existing pretrained vision and multimodal models struggle to capture nuanced sticker semantics. To address this, we propose the General Sticker Encoder (GSE), a lightweight and versatile model that learns robust sticker embeddings using both Triple-S and additional datasets. GSE achieves superior performance on unseen stickers and demonstrates strong results on downstream tasks such as emotion classification and sticker-to-sticker retrieval. By releasing both Triple-S and GSE, we provide standardized evaluation tools and robust embeddings, enabling future research in sticker understanding, retrieval, and multimodal content generation. The Triple-S benchmark and GSE have been publicly released.
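One way to picture the Sticker Semantic Similarity task is as scoring a pair of sticker embeddings and thresholding the score into a positive or negative label. The sketch below is illustrative only: the abstract does not state which similarity function or decision rule is used, so cosine similarity, the `threshold` value, and the function names are all assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        # Define similarity with a zero vector as 0 to avoid dividing by zero.
        return 0.0
    return dot / (norm_a * norm_b)


def is_similar_pair(emb_a, emb_b, threshold=0.5):
    """Label a sticker pair as positive when similarity reaches the threshold.

    The threshold is a hypothetical value chosen for illustration.
    """
    return cosine_similarity(emb_a, emb_b) >= threshold
```

In practice the embeddings would come from an encoder such as GSE, and a threshold like this would typically be tuned on held-out annotated pairs.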

Short Video Segment-level User Dynamic Interests Modeling in Personalized Recommendation

Apr 05, 2025

Abstract: The rapid growth of short videos has necessitated effective recommender systems to match users with content tailored to their evolving preferences. Current video recommendation models primarily treat each video as a whole, overlooking how user preferences vary across specific video segments. In contrast, our research focuses on segment-level user interest modeling, which is crucial for understanding how users' preferences evolve during video browsing. To capture users' dynamic segment interests, we propose a model that integrates a hybrid representation module, a multi-modal user-video encoder, and a segment interest decoder. Our model addresses the challenges of capturing dynamic interest patterns, missing segment-level labels, and fusing different modalities, achieving precise segment-level interest prediction. We present two downstream tasks to evaluate the effectiveness of our segment interest modeling approach: video-skip prediction and short video recommendation. Our experiments on real-world short video datasets with diverse modalities show promising results on both tasks. These results demonstrate that segment-level interest modeling brings a deeper understanding of user engagement and enhances video recommendations. We also release a dataset that includes segment-level video data and diverse user behaviors, enabling further research in segment-level interest modeling. This work offers a new perspective on understanding user segment-level preferences, with the potential for more personalized and engaging short video experiences.

A 106K Multi-Topic Multilingual Conversational User Dataset with Emoticons

Feb 26, 2025

Abstract: Instant messaging has become a predominant form of communication, with texts and emoticons enabling users to express emotions and ideas efficiently. Emoticons, in particular, have gained significant traction as a medium for conveying sentiments and information, leading to the growing importance of emoticon retrieval and recommendation systems. However, one of the key challenges in this area has been the absence of datasets that capture both the temporal dynamics and user-specific interactions with emoticons, limiting the progress of personalized user modeling and recommendation approaches. To address this, we introduce the emoticon dataset, a comprehensive resource that includes time-based data along with anonymous user identifiers across different conversations. As the largest publicly accessible emoticon dataset to date, it comprises 22K unique users, 370K emoticons, and 8.3M messages. The data was collected from a widely-used messaging platform across 67 conversations and 720 hours of crawling. Strict privacy and safety checks were applied to ensure the integrity of both text and image data. Spanning across 10 distinct domains, the emoticon dataset provides rich insights into temporal, multilingual, and cross-domain behaviors, which were previously unavailable in other emoticon-based datasets. Our in-depth experiments, both quantitative and qualitative, demonstrate the dataset's potential in modeling user behavior and personalized recommendation systems, opening up new possibilities for research in personalized retrieval and conversational AI. The dataset is freely accessible.

Multimodal Difference Learning for Sequential Recommendation

Dec 11, 2024

Abstract: Sequential recommendation has drawn significant attention for modeling a user's historical behaviors to predict the next item. With the booming development of multimodal data (e.g., image, text) on internet platforms, sequential recommendation also benefits from the incorporation of multimodal data. Most methods introduce modal features of items as side information and simply concatenate them to learn unified user interests. Nevertheless, these methods are limited in modeling multimodal differences. We argue that user interests and item relationships vary across different modalities. To address this problem, we propose a novel Multimodal Difference Learning framework for Sequential Recommendation, MDSRec for brevity. Specifically, we first explore the differences in item relationships by constructing modal-aware item relation graphs with behavior signals to enhance item representations. Then, to capture the differences in user interests across modalities, we design an interest-centralized attention mechanism to independently model user sequence representations in different modalities. Finally, we fuse the user embeddings from multiple modalities to achieve accurate item recommendation. Experimental results on five real-world datasets demonstrate the superiority of MDSRec over state-of-the-art baselines and the efficacy of multimodal difference learning.

PerSRV: Personalized Sticker Retrieval with Vision-Language Model

Oct 29, 2024

Abstract: Instant messaging is a popular means of daily communication, allowing users to send text and stickers. As the saying goes, "a picture is worth a thousand words", so developing an effective sticker retrieval technique is crucial for enhancing user experience. However, existing sticker retrieval methods rely on labeled data to interpret stickers, and general-purpose Vision-Language Models (VLMs) often struggle to capture the unique semantics of stickers. Additionally, relevance-based sticker retrieval methods lack personalization, creating a gap between diverse user expectations and retrieval results. To address these issues, we propose the Personalized Sticker Retrieval with Vision-Language Model framework, namely PerSRV, structured into offline calculation and online processing modules. The online retrieval part follows the paradigm of relevance recall and personalized ranking, supported by the offline pre-calculation parts, which are the sticker semantic understanding, utility evaluation, and personalization modules. Firstly, for sticker-level semantic understanding, we apply supervised fine-tuning to LLaVA-1.5-7B to generate human-like sticker semantics, complemented by textual content extracted from figures and historical interaction queries. Secondly, we investigate three crowd-sourcing metrics for sticker utility evaluation. Thirdly, we cluster style centroids based on users' historical interactions to achieve personal preference modeling. Finally, we evaluate our proposed PerSRV method on a public sticker retrieval dataset from WeChat, containing 543,098 candidates and 12,568 interactions. Experimental results show that PerSRV significantly outperforms existing methods in multi-modal sticker retrieval. Additionally, our fine-tuned VLM delivers notable improvements in sticker semantic understanding.

StepTool: A Step-grained Reinforcement Learning Framework for Tool Learning in LLMs

Oct 10, 2024

Abstract: Despite having powerful reasoning and inference capabilities, Large Language Models (LLMs) still need external tools to retrieve real-time information or acquire domain-specific expertise when solving complex tasks; learning to use such tools is referred to as tool learning. Existing tool learning methods primarily rely on tuning with expert trajectories, focusing on token-sequence learning from a linguistic perspective. However, there are several challenges: 1) imitating static trajectories limits their ability to generalize to new tasks; 2) even expert trajectories can be suboptimal, and better solution paths may exist. In this work, we introduce StepTool, a novel step-grained reinforcement learning framework to improve tool learning in LLMs. It consists of two components: Step-grained Reward Shaping, which assigns rewards at each tool interaction based on tool invocation success and its contribution to the task, and Step-grained Optimization, which uses policy gradient methods to optimize the model in a multi-step manner. Experimental results demonstrate that StepTool significantly outperforms existing methods in multi-step, tool-based tasks, providing a robust solution for complex task environments. Code is available at https://github.com/yuyq18/StepTool.
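The two components named in this abstract can be illustrated with a toy example: a per-step reward that combines invocation success with task contribution, followed by discounted returns of the kind a policy gradient method would use to weight each step. This is a minimal sketch under stated assumptions, not the StepTool implementation: the linear reward form, the weights, the discount factor `gamma`, and every name below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ToolStep:
    call_succeeded: bool  # whether the tool invocation returned without error
    contribution: float   # hypothetical score in [0, 1] for how much the step helped


def step_reward(step, success_weight=0.5, contribution_weight=0.5):
    """Reward for one tool interaction (assumed linear combination)."""
    return (success_weight * float(step.call_succeeded)
            + contribution_weight * step.contribution)


def discounted_returns(rewards, gamma=0.9):
    """Return-to-go for every step, computed from the last step backwards."""
    returns = []
    running = 0.0
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def policy_gradient_loss(log_probs, returns):
    """REINFORCE-style surrogate loss: negative return-weighted log-likelihood."""
    return -sum(lp * g for lp, g in zip(log_probs, returns))
```

Because each step gets its own return, a failed or unhelpful tool call lowers the weight on that specific action rather than on the whole trajectory.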

DualVAE: Dual Disentangled Variational AutoEncoder for Recommendation

Jan 10, 2024

Abstract: Learning precise representations of users and items to fit observed interaction data is the fundamental task of collaborative filtering. Existing studies usually infer entangled representations to fit such interaction data, neglecting to model the diverse matching relationships between users and items behind their interactions, leading to limited performance and weak interpretability. To address this problem, we propose a Dual Disentangled Variational AutoEncoder (DualVAE) for collaborative recommendation, which combines disentangled representation learning with variational inference to facilitate the generation of implicit interaction data. Specifically, we first implement the disentangling concept by unifying an attention-aware dual disentanglement and a disentangled variational autoencoder to infer the disentangled latent representations of users and items. Further, to encourage the correspondence and independence of disentangled representations of users and items, we design a neighborhood-enhanced representation constraint with a customized contrastive mechanism to improve the representation quality. Extensive experiments on three real-world benchmarks show that our proposed model significantly outperforms several recent state-of-the-art baselines. Further empirical results also illustrate the interpretability of the disentangled representations learned by DualVAE.

LGMRec: Local and Global Graph Learning for Multimodal Recommendation

Dec 27, 2023

Abstract: Multimodal recommendation has gradually become the infrastructure of online media platforms, enabling them to provide personalized service to users through joint modeling of users' historical behaviors (e.g., purchases, clicks) and various item modalities (e.g., visual and textual). The majority of existing studies focus on utilizing modal features or modality-related graph structure to learn local user interests. Nevertheless, these approaches encounter two limitations: (1) shared updates of user ID embeddings couple collaborative and multimodal signals; (2) robust global user interests, which could alleviate the sparse interaction problem faced by local interest modeling, remain unexplored. To address these issues, we propose a novel Local and Global Graph Learning-guided Multimodal Recommender (LGMRec), which jointly models local and global user interests. Specifically, we present a local graph embedding module to independently learn collaborative-related and modality-related embeddings of users and items with local topological relations. Moreover, a global hypergraph embedding module is designed to capture global user and item embeddings by modeling insightful global dependency relations. The global embeddings acquired within the hypergraph embedding space can then be combined with the two decoupled local embeddings to improve the accuracy and robustness of recommendations. Extensive experiments conducted on three benchmark datasets demonstrate the superiority of LGMRec over various state-of-the-art recommendation baselines, showcasing its effectiveness in modeling both local and global user interests.

Heterogeneous Graph Collaborative Filtering using Textual Information

Oct 04, 2020

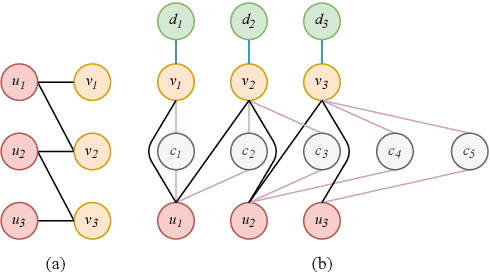

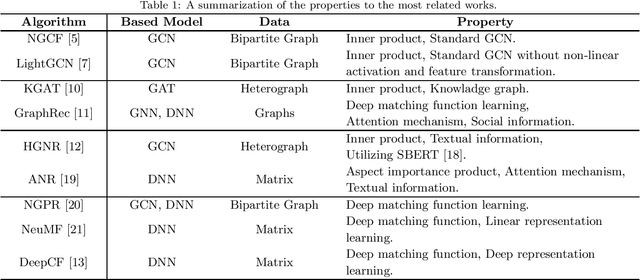

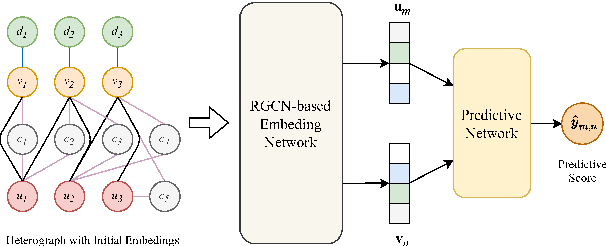

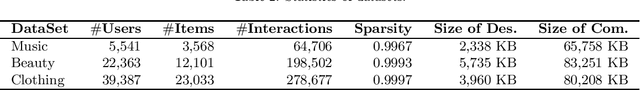

Abstract: Due to the development of graph neural network models such as the graph convolutional network (GCN), graph-based representation learning methods have made great progress in recommender systems. However, data sparsity remains a challenging problem for graph-based methods. Recent works try to solve this problem by utilizing side information. In this paper, we introduce easily accessible textual information to alleviate the negative effects of data sparsity. Specifically, to incorporate rich textual knowledge, we utilize a pre-trained context-aware natural language processing model to initialize the embeddings of text nodes. Through GCN-based node information propagation on the constructed heterogeneous graph, the embeddings of users and items are enriched by the textual knowledge. The matching function used by most graph-based representation learning methods is the inner product; this linear operation cannot fit complex semantics well. We design a predictive network that combines graph-based representation learning with matching function learning, and demonstrate that this predictive architecture yields significant improvements. Extensive experiments are conducted on three public datasets, and the results verify the superior performance of our method over several baselines.
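The contrast this abstract draws between an inner-product matching function and a learned one can be shown in a few lines. The sketch below is only an illustration of the general idea: the abstract does not describe the predictive network's architecture, so the one-hidden-layer MLP over concatenated embeddings, the ReLU activation, and all names and weights are assumptions.

```python
def inner_product(user_emb, item_emb):
    """Linear matching function: dot product of user and item embeddings."""
    return sum(u * v for u, v in zip(user_emb, item_emb))


def relu(x):
    return max(0.0, x)


def mlp_match(user_emb, item_emb, w_hidden, b_hidden, w_out, b_out):
    """Learned matching function: a one-hidden-layer MLP on [user ; item].

    w_hidden is a list of weight rows (one per hidden unit); in a real model
    these parameters would be trained jointly with the embeddings.
    """
    x = list(user_emb) + list(item_emb)
    hidden = [
        relu(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(w_hidden, b_hidden)
    ]
    return sum(w * h for w, h in zip(w_out, hidden)) + b_out
```

The nonlinearity lets the learned function score user-item pairs in ways a single dot product cannot express, which is the motivation the abstract gives for matching function learning.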

A Text-based Deep Reinforcement Learning Framework for Interactive Recommendation

Apr 14, 2020

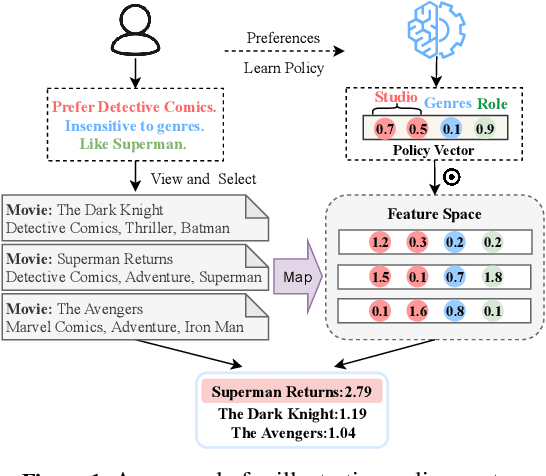

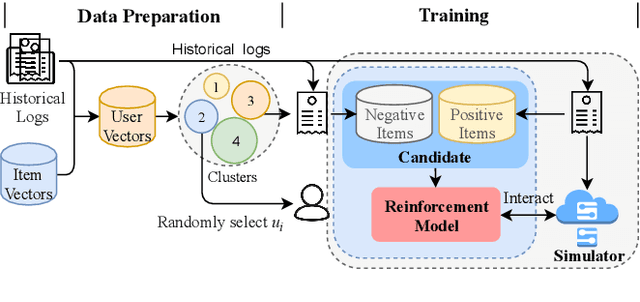

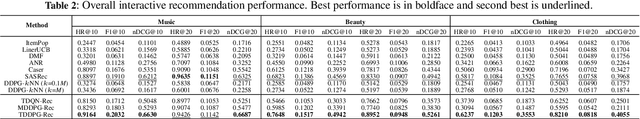

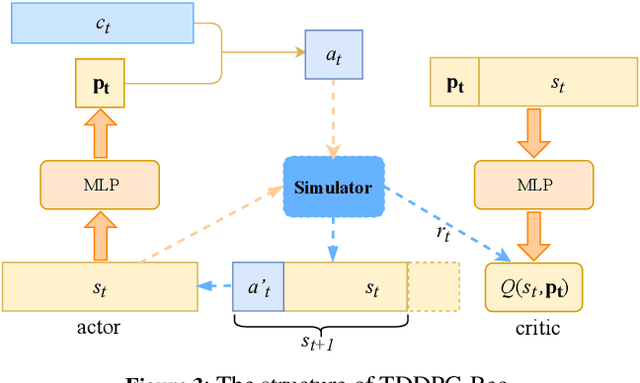

Abstract: Because it learns from dynamic interactions and plans for long-run performance, reinforcement learning (RL) has recently received much attention in interactive recommender systems (IRSs). IRSs usually face the large discrete action space problem, which makes most existing RL-based recommendation methods inefficient. Moreover, data sparsity is another challenging problem that most IRSs are confronted with. While textual information such as reviews and descriptions is less sensitive to sparsity, existing RL-based recommendation methods either neglect textual information or are not suitable for incorporating it. To address these two problems, we propose a Text-based Deep Deterministic Policy Gradient framework (TDDPG-Rec) for IRSs. Specifically, we leverage textual information to map items and users into a feature space, which greatly alleviates the sparsity problem. Moreover, we design an effective method to construct an action candidate set. Using the policy vector dynamically learned by TDDPG-Rec, which expresses the user's preference, we can select actions from the candidate set effectively. Through experiments on three public datasets, we demonstrate that TDDPG-Rec achieves state-of-the-art performance over several baselines in a time-efficient manner.
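Selecting actions from a candidate set with a policy vector can be sketched as ranking candidate items by how well their feature vectors align with that vector. This is a hedged illustration, not the TDDPG-Rec procedure: scoring by inner product, the top-`k` cutoff, and the function and parameter names are assumptions, and how the candidate set is constructed is not covered here.

```python
def select_actions(policy_vector, candidates, k=2):
    """Return the ids of the k candidates that best match the policy vector.

    candidates maps an item id to its feature vector (same length as
    policy_vector). Scoring by inner product is an assumption.
    """
    def score(features):
        return sum(p * f for p, f in zip(policy_vector, features))

    ranked = sorted(candidates.items(), key=lambda kv: score(kv[1]), reverse=True)
    return [item_id for item_id, _ in ranked[:k]]


# Toy usage with made-up item features.
example_candidates = {"a": [0.1, 0.9], "b": [0.9, 0.1], "c": [0.5, 0.5]}
top_items = select_actions([1.0, 0.0], example_candidates, k=2)
```

Restricting the ranking to a candidate set keeps the per-step cost proportional to the set size rather than to the full item catalogue, which is how such a scheme addresses the large discrete action space.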
