Yuan Zhuang

Combining Graph Neural Networks with Expert Knowledge for Smart Contract Vulnerability Detection

Jul 24, 2021

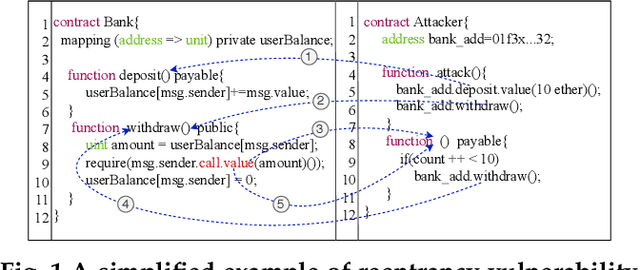

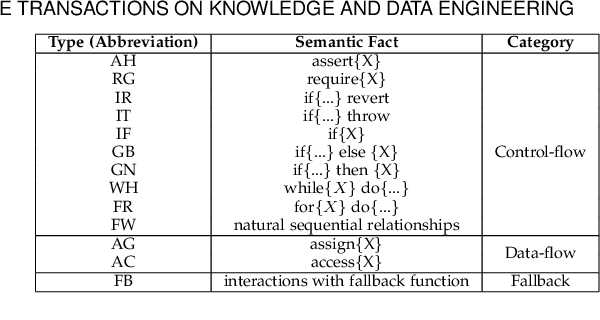

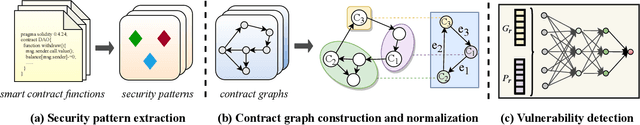

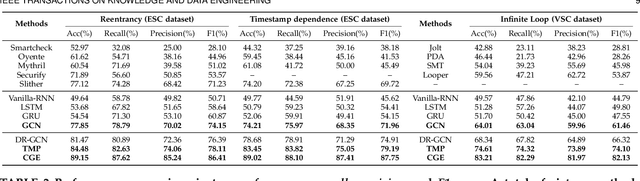

Abstract: Smart contract vulnerability detection has drawn extensive attention in recent years due to the substantial losses caused by hacker attacks. Existing efforts for contract security analysis rely heavily on rigid rules defined by experts, which are labor-intensive and non-scalable. More importantly, expert-defined rules tend to be error-prone and carry the inherent risk of being circumvented by crafty attackers. Recent research focuses on symbolic execution and formal analysis of smart contracts for vulnerability detection, but has yet to achieve a precise and scalable solution. Although several methods have been proposed to detect vulnerabilities in smart contracts, little work has combined expert-defined security patterns with deep neural networks. In this paper, we explore using graph neural networks and expert knowledge for smart contract vulnerability detection. Specifically, we cast the rich control- and data-flow semantics of the source code into a contract graph. To highlight the critical nodes in the graph, we further design a node elimination phase to normalize the graph. Then, we propose a novel temporal message propagation network to extract the graph feature from the normalized graph, and combine the graph feature with designed expert patterns to yield a final detection system. Extensive experiments are conducted on all the smart contracts that have source code on the Ethereum and VNT Chain platforms. Empirical results show significant accuracy improvements over the state-of-the-art methods on three types of vulnerabilities, where the detection accuracy of our method reaches 89.15%, 89.02%, and 83.21% for reentrancy, timestamp dependence, and infinite loop vulnerabilities, respectively.

Signal Acquisition of Luojia-1A Low Earth Orbit Navigation Augmentation System with Software Defined Receiver

May 31, 2021

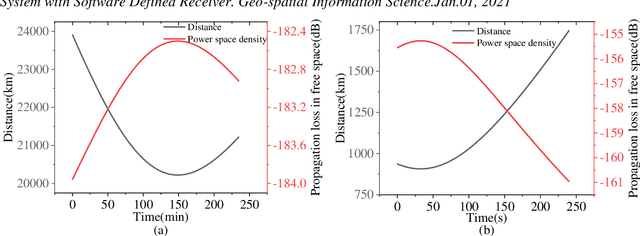

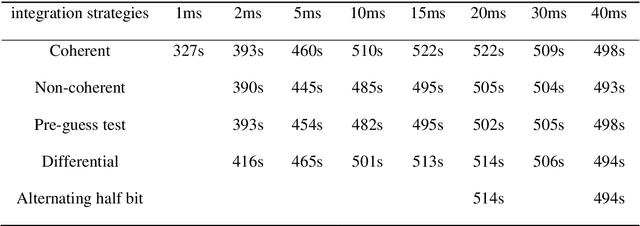

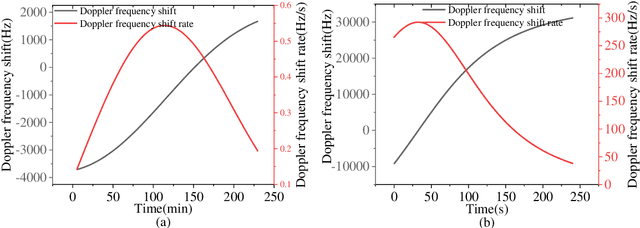

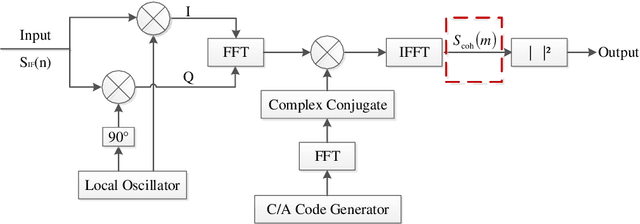

Abstract: Low earth orbit (LEO) satellite navigation signals can be used as signals of opportunity during a global navigation satellite system (GNSS) outage, or as a means of enhancing traditional GNSS positioning algorithms. Whichever service mode is used, signal acquisition is the prerequisite for providing an enhanced LEO navigation service. Compared with medium earth orbit satellites, LEO satellites have a shorter transit time. Thus, it is of great significance to expand the time range over which the LEO signal can be successfully acquired. Previous studies on LEO signal acquisition are based on simulation data, so acquisition research based on real data is very important. In this work, two signal characteristics of LEO satellites, the power space density in free space and the Doppler shift, are studied individually. Unified symbol definitions are given for several integration algorithms based on the parallel search signal acquisition algorithm. To verify these algorithms for LEO signal acquisition, a software-defined receiver (SDR) is developed. The performance of the integration algorithms in expanding the successful acquisition time range is verified with real data collected from the Luojia-1A satellite. The experimental results show that the integration strategy can expand the successful acquisition time range, but that the range does not expand indefinitely as the integration duration grows.
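As a rough illustration of why integrating over several code periods helps acquisition, the sketch below sums squared circular correlations across consecutive blocks (non-coherent integration) and picks the strongest code phase. It is not the paper's receiver: the random spreading code, the noise level, the function names, and the assumption that Doppler has already been removed are all hypothetical, and the direct correlation stands in for the FFT-based correlation that a parallel search would normally use.

```python
import random


def circular_correlation(block, code):
    """Correlate one block of samples against every cyclic shift of the code."""
    n = len(code)
    return [
        sum(block[i] * code[(i - shift) % n] for i in range(n))
        for shift in range(n)
    ]


def noncoherent_acquire(samples, code, num_blocks):
    """Sum squared correlation values over several code periods.

    Returns the code phase with the largest accumulated power and the
    full power profile. Doppler is assumed to be already wiped off.
    """
    n = len(code)
    power = [0.0] * n
    for b in range(num_blocks):
        block = samples[b * n:(b + 1) * n]
        for shift, value in enumerate(circular_correlation(block, code)):
            power[shift] += value * value
    best_shift = max(range(n), key=power.__getitem__)
    return best_shift, power


# Synthetic demo: a hypothetical random +/-1 code, delayed and buried in noise.
rng = random.Random(0)
code_length = 63
code = [rng.choice((-1.0, 1.0)) for _ in range(code_length)]
true_delay = 17
num_blocks = 4
received = [
    code[(i - true_delay) % code_length] + rng.gauss(0.0, 0.5)
    for i in range(code_length * num_blocks)
]

estimated_delay, power = noncoherent_acquire(received, code, num_blocks)
print("estimated code phase:", estimated_delay)
```

Summing power rather than complex amplitudes makes the accumulation insensitive to phase changes between blocks, which is one reason a longer integration cannot keep improving acquisition indefinitely once other effects dominate.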

Consistent Right-Invariant Fixed-Lag Smoother with Application to Visual Inertial SLAM

Feb 17, 2021

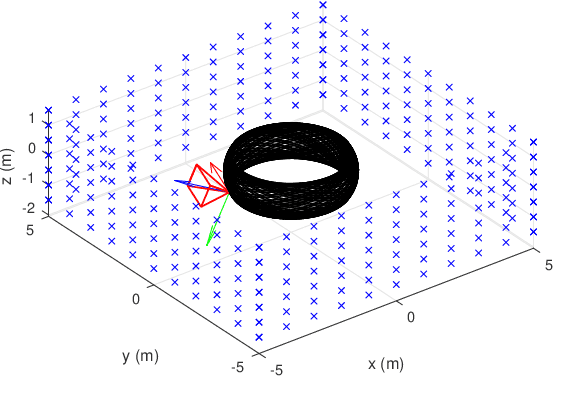

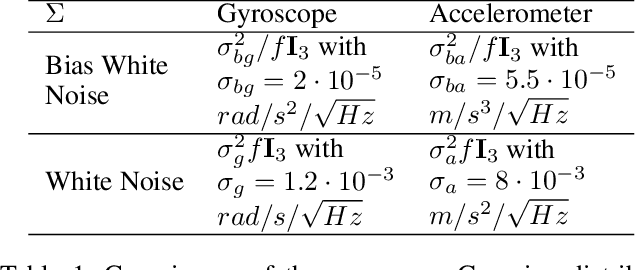

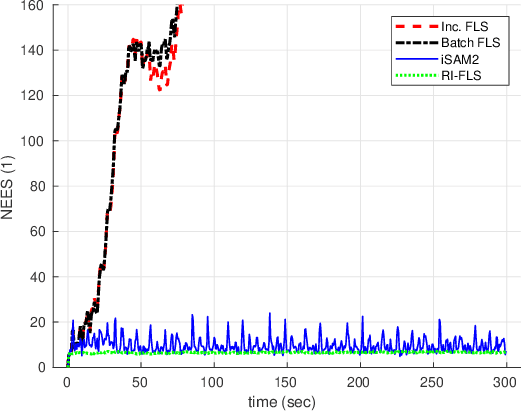

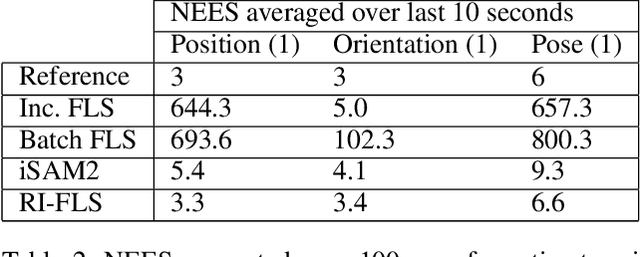

Abstract: State estimation problems that use relative observations routinely arise in the navigation of unmanned aerial vehicles, autonomous ground vehicles, etc., whose proper operation relies on accurate state estimates and reliable covariances. These problems have inherent unobservable directions. Traditional causal estimators, however, usually gain spurious information along the unobservable directions, leading to overconfident covariances that are inconsistent with the actual estimator errors. The consistency problem of fixed-lag smoothers (FLSs) has only been attacked by the first estimate Jacobian (FEJ) technique because of the complexity of analyzing their observability properties. But the FEJ has several drawbacks hampering its wide adoption. To ensure the consistency of an FLS, this paper introduces the right invariant error formulation into the FLS framework. To our knowledge, we are the first to analyze the observability of an FLS with the right invariant error. Our main contributions are twofold. First, to bypass the complexity of analysis with the classic observability matrix, we show that observability analysis of FLSs can be done equivalently on the linearized system. Second, we prove that the inconsistency issue in the traditional FLS can be elegantly solved by the right invariant error formulation without artificially correcting Jacobians. By applying the proposed FLS to the monocular visual inertial simultaneous localization and mapping (SLAM) problem, we confirm in simulation that the method estimates covariance consistently, similarly to a batch smoother, and that on real data it achieves accuracy comparable to traditional FLSs.

A Versatile Keyframe-Based Structureless Filter for Visual Inertial Odometry

Jan 02, 2021

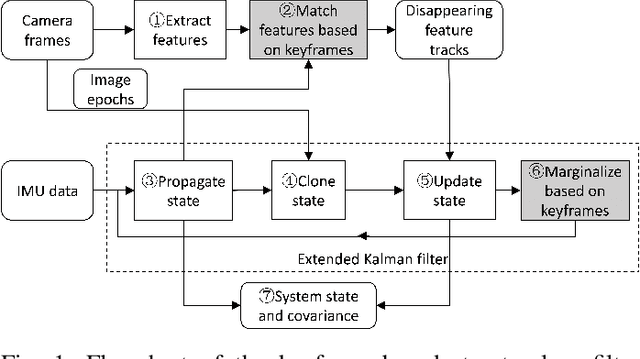

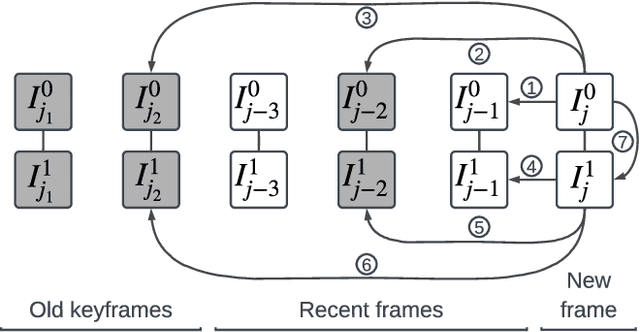

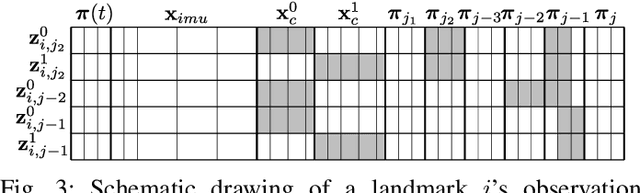

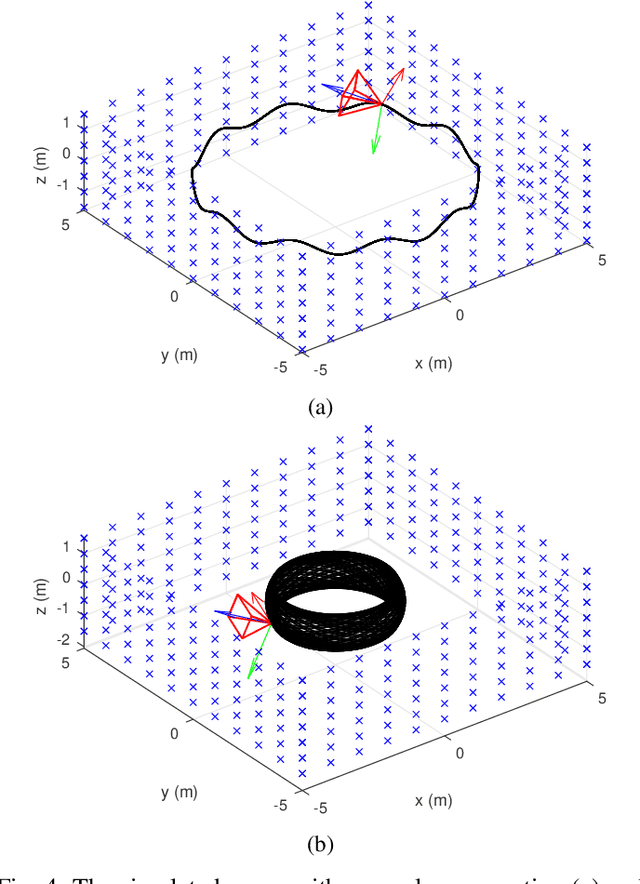

Abstract: Motion estimation by fusing data from at least a camera and an Inertial Measurement Unit (IMU) enables many applications in robotics. However, among the multitude of Visual Inertial Odometry (VIO) methods, few efficiently estimate device motion with consistent covariance and calibrate sensor parameters online to handle data from consumer sensors. This paper addresses the gap with a Keyframe-based Structureless Filter (KSF). For efficiency, landmarks are not included in the filter's state vector. For robustness, KSF associates feature observations and manages state variables using the concept of keyframes. For flexibility, KSF supports anytime calibration of IMU systematic errors, as well as extrinsic, intrinsic, and temporal parameters of each camera. Estimator consistency and observability of sensor parameters were analyzed by simulation. Sensitivity to design options, e.g., feature matching method and camera count, was studied with the EuRoC benchmark. Sensor parameter estimation was evaluated on raw TUM VI sequences and smartphone data. Moreover, pose estimation accuracy was evaluated on EuRoC and TUM VI sequences versus recent VIO methods. These tests confirm that KSF reliably calibrates sensor parameters when the data contain adequate motion, and consistently estimates motion with accuracy rivaling recent VIO methods. Our implementation runs at 42 Hz with stereo camera images on a consumer laptop.

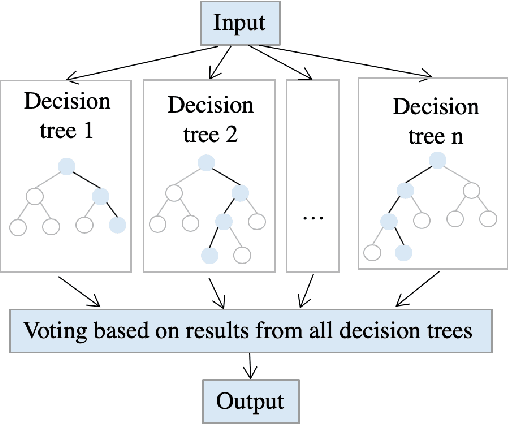

Evolutionary Architecture Search for Graph Neural Networks

Sep 21, 2020

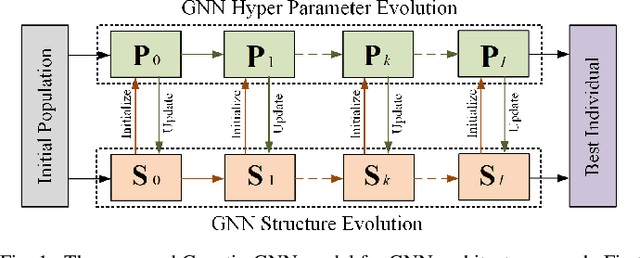

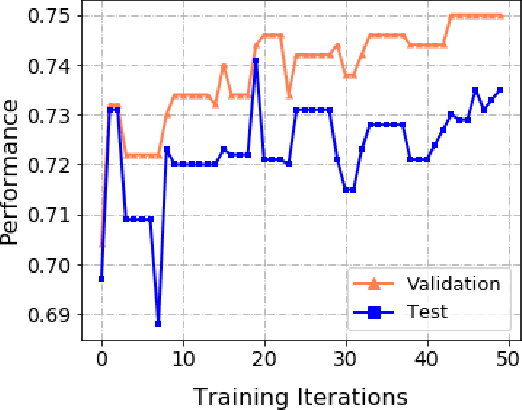

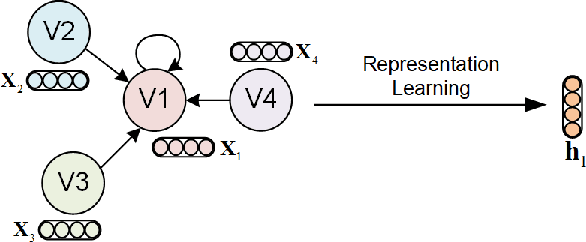

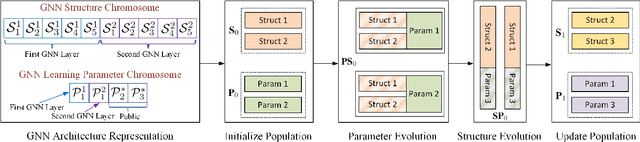

Abstract: Automated machine learning (AutoML) has seen a resurgence in interest with the boom of deep learning over the past decade. In particular, Neural Architecture Search (NAS) has received significant attention throughout the AutoML research community, and has pushed forward the state of the art in a number of neural models that address grid-like data such as texts and images. However, very little work has been done on Graph Neural Networks (GNNs) learning on unstructured network data. Given the huge number of choices and combinations of components such as aggregator and activation function, determining a suitable GNN structure for a specific problem normally requires tremendous expert knowledge and laborious trials. In addition, a slight variation in hyperparameters such as learning rate and dropout rate can dramatically hurt the learning capacity of a GNN. In this paper, we propose a novel AutoML framework through the evolution of individual models in a large GNN architecture space involving both neural structures and learning parameters. Instead of optimizing only the model structures with fixed parameter settings as in existing work, an alternating evolution process is performed between GNN structures and learning parameters to dynamically find the best fit for each other. To the best of our knowledge, this is the first work to introduce and evaluate evolutionary architecture search for GNN models. Experiments and validations demonstrate that evolutionary NAS is capable of matching existing state-of-the-art reinforcement learning approaches for both semi-supervised transductive and inductive node representation learning and classification.
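The alternating evolution idea can be sketched as follows, under heavy simplification. The search spaces, the `toy_fitness` scoring function (a stand-in for training and validating a GNN), and the selection scheme are all assumptions made for illustration and are not taken from the paper. Even generations mutate only structure genes, and odd generations mutate only learning parameters.

```python
import random

# Hypothetical search spaces; the paper's actual spaces are not given in the abstract.
STRUCTURE_SPACE = {
    "aggregator": ["mean", "sum", "max"],
    "activation": ["relu", "tanh", "elu"],
}
PARAM_SPACE = {
    "learning_rate": [0.1, 0.01, 0.001],
    "dropout": [0.0, 0.3, 0.6],
}


def toy_fitness(individual):
    """Placeholder for validation accuracy; a real run would train a GNN here."""
    score = {"mean": 0.3, "sum": 0.2, "max": 0.1}[individual["aggregator"]]
    score += {"relu": 0.3, "tanh": 0.1, "elu": 0.2}[individual["activation"]]
    score += {0.1: 0.0, 0.01: 0.2, 0.001: 0.1}[individual["learning_rate"]]
    score += {0.0: 0.0, 0.3: 0.2, 0.6: 0.1}[individual["dropout"]]
    return score


def mutate(individual, space, rng):
    """Return a copy with one gene from `space` resampled."""
    child = dict(individual)
    gene = rng.choice(sorted(space))
    child[gene] = rng.choice(space[gene])
    return child


def evolve_phase(population, space, rng):
    """One generation: mutate only genes in `space`, then keep the fittest."""
    children = [mutate(ind, space, rng) for ind in population]
    ranked = sorted(population + children, key=toy_fitness, reverse=True)
    return ranked[:len(population)]


def alternating_evolution(generations, pop_size, seed=0):
    """Alternate between evolving structures and evolving learning parameters."""
    rng = random.Random(seed)
    full_space = {**STRUCTURE_SPACE, **PARAM_SPACE}
    population = [
        {gene: rng.choice(values) for gene, values in full_space.items()}
        for _ in range(pop_size)
    ]
    history = [max(toy_fitness(ind) for ind in population)]
    for gen in range(generations):
        space = STRUCTURE_SPACE if gen % 2 == 0 else PARAM_SPACE
        population = evolve_phase(population, space, rng)
        history.append(toy_fitness(population[0]))
    return population[0], history


best, history = alternating_evolution(generations=10, pop_size=6)
print(best, history[-1])
```

Because parents compete with their children for survival, the best fitness never decreases from one generation to the next in this sketch.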

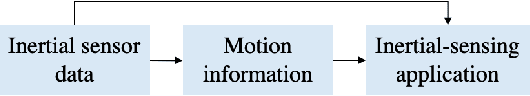

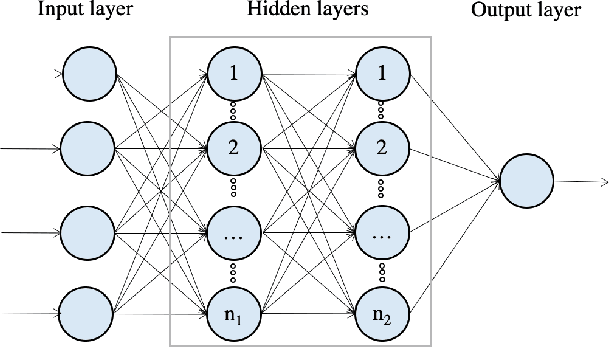

Inertial Sensing Meets Artificial Intelligence: Opportunity or Challenge?

Jul 13, 2020

Abstract: The inertial navigation system (INS) has been widely used to provide self-contained and continuous motion estimation in intelligent transportation systems. Recently, the emergence of chip-level inertial sensors has expanded the relevant applications from positioning, navigation, and mobile mapping to location-based services, unmanned systems, and transportation big data. Meanwhile, benefiting from the emergence of big data and the improvement of algorithms and computing power, artificial intelligence (AI) has become a widely accepted tool that has been successfully applied in various fields. This article reviews the research on using AI technology to enhance inertial sensing from various aspects, including sensor design and selection, calibration and error modeling, navigation and motion-sensing algorithms, multi-sensor information fusion, system evaluation, and practical application. Based on over 30 representative articles selected from nearly 300 related publications, this article summarizes the state of the art, advantages, and challenges of each aspect. Finally, it summarizes nine advantages and nine challenges of AI-enhanced inertial sensing and then points out future research directions.

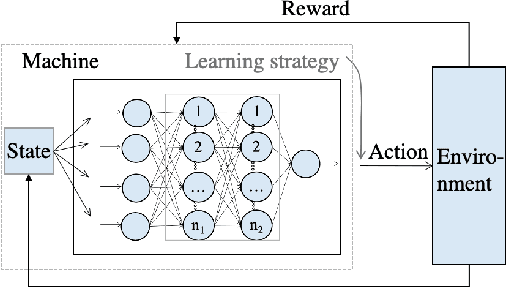

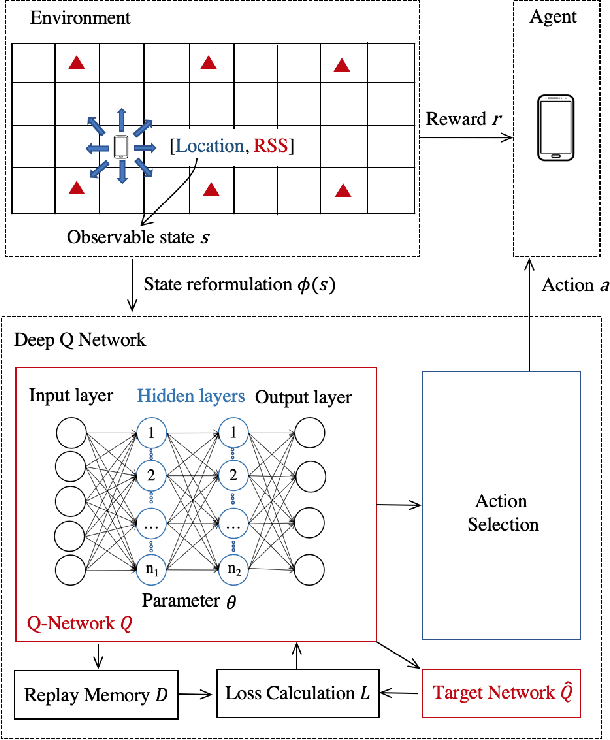

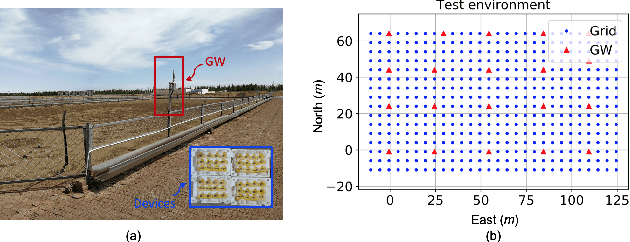

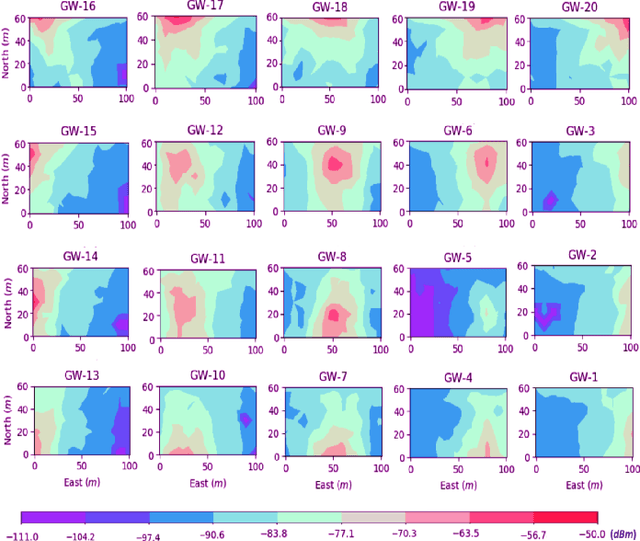

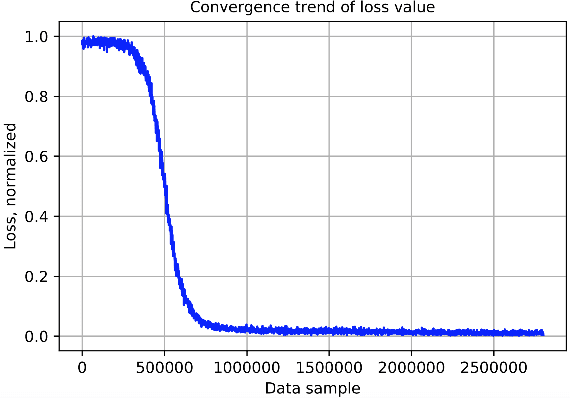

Deep Reinforcement Learning (DRL): Another Perspective for Unsupervised Wireless Localization

Apr 09, 2020

Abstract: Location is key to spatializing internet-of-things (IoT) data. However, it is challenging to use low-cost IoT devices for robust unsupervised localization (i.e., localization without training data that have known location labels). Thus, this paper proposes a deep reinforcement learning (DRL) based unsupervised wireless-localization method. The main contributions are as follows. (1) This paper proposes an approach to model a continuous wireless-localization process as a Markov decision process (MDP) and process it within a DRL framework. (2) To alleviate the challenge of obtaining rewards when using unlabeled data (e.g., daily-life crowdsourced data), this paper presents a reward-setting mechanism, which extracts robust landmark data from unlabeled wireless received signal strengths (RSS). (3) To ease requirements for model re-training when using DRL for localization, this paper uses RSS measurements together with agent location to construct DRL inputs. The proposed method was tested using field data from multiple Bluetooth 5 smart ear tags in a pasture. The experimental verification process also reflected the advantages and challenges of using DRL in wireless localization.
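One way to picture the MDP formulation is the toy sketch below. The state pairs RSS measurements with the agent's location, as the abstract describes, but everything else is an assumption made for illustration: the action set, the RSS threshold, the landmark radius, and the reward values are hypothetical and are not the paper's reward-setting mechanism.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    rss: tuple       # received signal strengths in dBm, one per anchor (hypothetical layout)
    position: tuple  # agent's current (x, y) location estimate in metres


# Hypothetical discrete action set: unit moves on a grid.
ACTIONS = {
    "stay": (0.0, 0.0),
    "north": (0.0, 1.0),
    "south": (0.0, -1.0),
    "east": (1.0, 0.0),
    "west": (-1.0, 0.0),
}


def landmark_reward(state, landmarks, rss_threshold=-50.0, radius=1.5):
    """Reward only when a strong RSS reading marks proximity to a landmark.

    A strong reading from anchor k is treated as evidence that the device is
    near landmark k; the agent is rewarded if its estimate agrees and
    penalized otherwise. Without a strong reading there is no reward signal.
    """
    for anchor_index, rss in enumerate(state.rss):
        if rss >= rss_threshold:
            lx, ly = landmarks[anchor_index]
            distance = math.hypot(state.position[0] - lx, state.position[1] - ly)
            return 1.0 if distance <= radius else -1.0
    return 0.0


def step(state, action, next_rss, landmarks):
    """Apply an action, observe new RSS, and return (next_state, reward)."""
    dx, dy = ACTIONS[action]
    next_state = State(
        rss=tuple(next_rss),
        position=(state.position[0] + dx, state.position[1] + dy),
    )
    return next_state, landmark_reward(next_state, landmarks)


# Usage: two anchors acting as landmarks; the first one is heard strongly.
landmarks = [(1.0, 0.0), (10.0, 10.0)]
start = State(rss=(-80.0, -75.0), position=(0.0, 0.0))
toward_state, toward_reward = step(start, "east", (-45.0, -90.0), landmarks)
away_state, away_reward = step(start, "west", (-45.0, -90.0), landmarks)
_, quiet_reward = step(start, "east", (-80.0, -90.0), landmarks)
print(toward_reward, away_reward, quiet_reward)
```

Keeping the agent location inside the state, next to the RSS values, mirrors the abstract's point that the DRL input is built from both.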
