Yuan Yan Tang

A Survey on Small Sample Imbalance Problem: Metrics, Feature Analysis, and Solutions

Apr 21, 2025

Abstract:The small sample imbalance (S&I) problem is a major challenge in machine learning and data analysis. It is characterized by a small number of samples and an imbalanced class distribution, which leads to poor model performance. In addition, indistinct inter-class feature distributions further complicate classification tasks. Existing methods often rely on algorithmic heuristics without sufficiently analyzing the underlying data characteristics. We argue that a detailed analysis from the data perspective is essential before developing an appropriate solution. Therefore, this paper proposes a systematic analytical framework for the S&I problem. We first summarize imbalance metrics and complexity analysis methods, highlighting the need for interpretable benchmarks to characterize S&I problems. Second, we review recent solutions for conventional, complexity-based, and extreme S&I problems, revealing methodological differences in handling various data distributions. Our summary finds that resampling remains a widely adopted solution. However, we conduct experiments on binary and multiclass datasets, revealing that classifier performance differences significantly exceed the improvements achieved through resampling. Finally, this paper highlights open questions and discusses future trends.
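As a rough illustration of what an imbalance metric can look like, the sketch below computes the commonly used imbalance ratio (largest class count divided by smallest class count). The abstract does not say which metrics the survey covers, so the choice of this metric and the function name `imbalance_ratio` are assumptions made for illustration only.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Return (largest class count) / (smallest class count).

    A value of 1.0 means perfectly balanced classes; larger values
    mean stronger imbalance. Hypothetical helper, not from the paper.
    """
    counts = Counter(labels)
    if len(counts) < 2:
        raise ValueError("need at least two classes to measure imbalance")
    return max(counts.values()) / min(counts.values())


# A small, imbalanced toy dataset: 45 negatives and 5 positives.
toy_labels = ["neg"] * 45 + ["pos"] * 5
print(imbalance_ratio(toy_labels))
```

For multiclass data this ratio only compares the two extreme classes, which is one reason a survey might pair it with complexity measures.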

ColorVein: Colorful Cancelable Vein Biometrics

Apr 19, 2025

Abstract:Vein recognition technologies have become one of the primary solutions for high-security identification systems. However, the issue of biometric information leakage can still pose a serious threat to user privacy and anonymity. Currently, there is no cancelable biometric template generation scheme specifically designed for vein biometrics. Therefore, this paper proposes an innovative cancelable vein biometric generation scheme: ColorVein. Unlike previous cancelable template generation schemes, ColorVein does not destroy the original biometric features and introduces additional color information to grayscale vein images. This method significantly enhances the information density of vein images by transforming static grayscale information into dynamically controllable color representations through interactive colorization. ColorVein allows users/administrators to define a controllable pseudo-random color space for grayscale vein images by editing the position, number, and color of hint points, thereby generating protected cancelable templates. Additionally, we propose a new secure center loss to optimize the training process of the protected feature extraction model, effectively increasing the feature distance between enrolled users and any potential impostors. Finally, we evaluate ColorVein's performance on all types of vein biometrics, including recognition performance, unlinkability, irreversibility, and revocability, and conduct security and privacy analyses. ColorVein achieves competitive performance compared with state-of-the-art methods.

Underwater Organism Color Enhancement via Color Code Decomposition, Adaptation and Interpolation

Sep 29, 2024

Abstract:Underwater images often suffer from quality degradation due to absorption and scattering effects. Most existing underwater image enhancement algorithms produce a single, fixed-color image, limiting user flexibility and application. To address this limitation, we propose a method called ColorCode, which enhances underwater images while offering a range of controllable color outputs. Our approach involves recovering an underwater image to a reference enhanced image through supervised training and decomposing it into color and content codes via self-reconstruction and cross-reconstruction. The color code is explicitly constrained to follow a Gaussian distribution, allowing for efficient sampling and interpolation during inference. ColorCode offers three key features: 1) color enhancement, producing an enhanced image with a fixed color; 2) color adaptation, enabling controllable adjustments of long-wavelength color components using guidance images; and 3) color interpolation, allowing for the smooth generation of multiple colors through continuous sampling of the color code. Quantitative and visual evaluations on popular and challenging benchmark datasets demonstrate the superiority of ColorCode over existing methods in providing diverse, controllable, and color-realistic enhancement results. The source code is available at https://github.com/Xiaofeng-life/ColorCode.
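The sampling and interpolation of a Gaussian color code can be pictured with a minimal sketch. It draws two codes from a standard normal distribution and linearly interpolates between them. The code dimension, the use of linear interpolation, and the function names are assumptions; the decoder that would turn each code into an image is not shown and is not reproduced from the ColorCode repository.

```python
import random


def sample_color_code(dim, rng):
    """Draw one color code from a standard normal (assumed prior)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def interpolate_codes(code_a, code_b, steps):
    """Return `steps` codes moving linearly from code_a to code_b."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for k in range(steps):
        t = k / (steps - 1)  # t runs from 0.0 to 1.0 inclusive
        path.append([(1 - t) * a + t * b for a, b in zip(code_a, code_b)])
    return path


rng = random.Random(0)
start = sample_color_code(4, rng)   # hypothetical 4-dimensional code
end = sample_color_code(4, rng)
for code in interpolate_codes(start, end, 5):
    print([round(v, 3) for v in code])
```

Each interpolated code would be passed, together with a fixed content code, to the decoder to obtain one color variant of the same scene.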

Improving Fast Adversarial Training via Self-Knowledge Guidance

Sep 26, 2024

Abstract:Adversarial training has achieved remarkable advancements in defending against adversarial attacks. Among them, fast adversarial training (FAT) is gaining attention for its ability to achieve competitive robustness with fewer computing resources. Existing FAT methods typically employ a uniform strategy that optimizes all training data equally without considering the influence of different examples, which leads to an imbalanced optimization. However, this imbalance remains unexplored in the field of FAT. In this paper, we conduct a comprehensive study of the imbalance issue in FAT and observe an obvious disparity in per-class performance. This disparity can be viewed in terms of the alignment between clean and robust accuracy. Based on the analysis, we mainly attribute the observed misalignment and disparity to the imbalanced optimization in FAT, which motivates us to optimize different training data adaptively to enhance robustness. Specifically, we take disparity and misalignment into consideration. First, we introduce self-knowledge guided regularization, which assigns differentiated regularization weights to each class based on its training state, alleviating class disparity. Additionally, we propose self-knowledge guided label relaxation, which adjusts label relaxation according to the training accuracy, alleviating the misalignment and improving robustness. By combining these methods, we formulate the Self-Knowledge Guided FAT (SKG-FAT), leveraging naturally generated knowledge during training to enhance the adversarial robustness without compromising training efficiency. Extensive experiments on four standard datasets demonstrate that the SKG-FAT improves the robustness and preserves competitive clean accuracy, outperforming the state-of-the-art methods.

Unrevealed Threats: A Comprehensive Study of the Adversarial Robustness of Underwater Image Enhancement Models

Sep 10, 2024

Abstract:Learning-based methods for underwater image enhancement (UWIE) have undergone extensive exploration. However, learning-based models are usually vulnerable to adversarial examples, and UWIE models are no exception. To the best of our knowledge, there is no comprehensive study on the adversarial robustness of UWIE models, which indicates that UWIE models are potentially under the threat of adversarial attacks. In this paper, we propose a general adversarial attack protocol. We make a first attempt to conduct adversarial attacks on five well-designed UWIE models on three common underwater image benchmark datasets. Considering the scattering and absorption of light in the underwater environment, there exists a strong correlation between color correction and underwater image enhancement. On the basis of that, we also design two effective UWIE-oriented adversarial attack methods, Pixel Attack and Color Shift Attack, targeting different color spaces. The results show that the five models exhibit varying degrees of vulnerability to adversarial attacks, and that well-designed small perturbations on degraded images are capable of preventing UWIE models from generating enhanced results. Further, we conduct adversarial training on these models and successfully mitigate the effectiveness of adversarial attacks. In summary, we reveal the adversarial vulnerability of UWIE models and propose a new evaluation dimension of UWIE models.

Illumination Controllable Dehazing Network based on Unsupervised Retinex Embedding

Jun 09, 2023

Abstract:On the one hand, the dehazing task is an ill-posed problem, which means that no unique solution exists. On the other hand, the dehazing task should take into account the subjective factor, which is to give the user selectable dehazed images rather than a single result. Therefore, this paper proposes a multi-output dehazing network by introducing illumination controllable ability, called IC-Dehazing. The proposed IC-Dehazing can change the illumination intensity by adjusting the factor of the illumination controllable module, which is realized based on the interpretable Retinex theory. Moreover, the backbone dehazing network of IC-Dehazing consists of a Transformer with double decoders for high-quality image restoration. Further, the prior-based loss function and unsupervised training strategy enable IC-Dehazing to complete the parameter learning process without the need for paired data. To demonstrate the effectiveness of the proposed IC-Dehazing, quantitative and qualitative experiments are conducted on image dehazing, semantic segmentation, and object detection tasks. Code is available at https://github.com/Xiaofeng-life/ICDehazing.
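To make the Retinex idea concrete, the sketch below uses the classical decomposition of an image into reflectance times illumination and rescales the illumination by a user-chosen factor. This is only the textbook Retinex relation on toy grayscale values in [0, 1]; the learned illumination controllable module of IC-Dehazing is not reproduced here, and the function name `relight` and the multiplicative form of the factor are assumptions.

```python
def relight(reflectance, illumination, factor):
    """Recompose an image as reflectance * (factor * illumination).

    Inputs are 2D lists of floats in [0, 1]; the output is clipped
    back to [0, 1]. Hypothetical helper for illustration only.
    """
    out = []
    for r_row, l_row in zip(reflectance, illumination):
        row = []
        for r, l in zip(r_row, l_row):
            value = r * l * factor
            row.append(min(1.0, max(0.0, value)))  # clip to valid range
        out.append(row)
    return out


reflectance = [[0.5, 0.8], [0.2, 0.9]]
illumination = [[0.5, 1.0], [0.5, 0.5]]
for factor in (0.5, 1.0, 2.0):  # darker, unchanged, brighter
    print(factor, relight(reflectance, illumination, factor))
```

Sweeping the factor yields a family of outputs from the same reflectance, which is the sense in which a single input can give the user several selectable results.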

Adversarial Attack and Defense for Dehazing Networks

Mar 30, 2023

Abstract:Single image dehazing has been widely explored. However, as far as we know, no comprehensive study has been conducted on the robustness of well-trained dehazing models. Therefore, there is no evidence that dehazing networks can resist malicious attacks. In this paper, we focus on designing a group of attack methods based on first-order gradients to verify the robustness of existing dehazing algorithms. By analyzing the general goal of the image dehazing task, five attack methods are proposed: prediction, noise, mask, ground-truth, and input attacks. The corresponding experiments are conducted on six datasets with different scales. Further, a defense strategy based on adversarial training is adopted to reduce the negative effects caused by malicious attacks. In summary, this paper defines a new challenging problem for the image dehazing area, which we call adversarial attack on dehazing networks (AADN). Code is available at https://github.com/guijiejie/AADN.
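A first-order gradient attack can be illustrated with a single FGSM-style step on a toy linear model. This is a generic sketch, not one of the five AADN attacks: the linear model, the squared-error loss, and all names below are assumptions chosen so the gradient can be written by hand without a deep learning framework.

```python
def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def squared_error(x, w, target):
    """Loss of the toy model: (w . x - target)^2."""
    pred = sum(wi * xi for wi, xi in zip(w, x))
    return (pred - target) ** 2


def fgsm_step(x, w, target, eps):
    """Perturb x by eps in the direction that increases the loss.

    For loss = (w . x - target)^2 the input gradient is
    2 * (w . x - target) * w, so only its sign is needed.
    """
    pred = sum(wi * xi for wi, xi in zip(w, x))
    grad = [2.0 * (pred - target) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


x = [1.0, 2.0]
w = [1.0, -1.0]
x_adv = fgsm_step(x, w, target=0.0, eps=0.5)
print(x_adv, squared_error(x, w, 0.0), squared_error(x_adv, w, 0.0))
```

In a dehazing setting the toy model would be replaced by the network and the loss by whatever the attack targets (for example, the distance to the clean prediction), with gradients obtained by automatic differentiation.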

AlignVE: Visual Entailment Recognition Based on Alignment Relations

Nov 16, 2022

Abstract:Visual entailment (VE) is the task of recognizing whether the semantics of a hypothesis text can be inferred from a given premise image, and is one of the recently emerged vision and language understanding tasks. Currently, most existing VE approaches are derived from visual question answering methods. They recognize visual entailment by quantifying the similarity between the hypothesis and premise in the content semantic features of the two modalities. Such approaches, however, ignore VE's unique nature of relation inference between the premise and hypothesis. Therefore, in this paper, a new architecture called AlignVE is proposed to solve the visual entailment problem with a relation interaction method. It models the relation between the premise and hypothesis as an alignment matrix. Then it introduces a pooling operation to get feature vectors with a fixed size. Finally, it goes through the fully-connected layer and normalization layer to complete the classification. Experiments show that our alignment-based architecture reaches 72.45% accuracy on the SNLI-VE dataset, outperforming previous content-based models under the same settings.
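The alignment-then-pooling pipeline can be sketched on toy vectors. The abstract does not specify how the alignment matrix is scored or which pooling is used, so the dot-product scoring and the global max/mean pooling below are assumptions, as are the function names; the fully-connected and normalization layers are omitted.

```python
def alignment_matrix(premise_feats, hypothesis_feats):
    """Score every (image region, text token) pair with a dot product.

    Returns a matrix with one row per premise feature and one column
    per hypothesis feature. Dot-product scoring is an assumption.
    """
    return [
        [sum(p * h for p, h in zip(p_vec, h_vec)) for h_vec in hypothesis_feats]
        for p_vec in premise_feats
    ]


def pool_fixed(matrix):
    """Reduce a matrix of any shape to a fixed-size vector [max, mean]."""
    flat = [v for row in matrix for v in row]
    return [max(flat), sum(flat) / len(flat)]


premise = [[1.0, 0.0], [0.0, 2.0]]        # two hypothetical image regions
hypothesis = [[1.0, 1.0], [0.0, 1.0]]     # two hypothetical text tokens
matrix = alignment_matrix(premise, hypothesis)
print(matrix)
print(pool_fixed(matrix))
```

The pooled vector has the same length regardless of how many regions or tokens are present, which is what allows a fixed-size classifier to follow it.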

PFENet++: Boosting Few-shot Semantic Segmentation with the Noise-filtered Context-aware Prior Mask

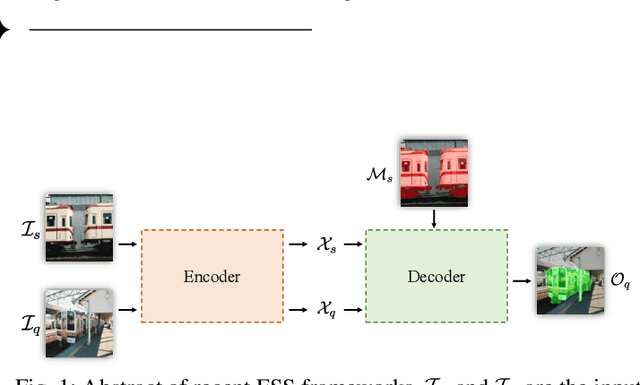

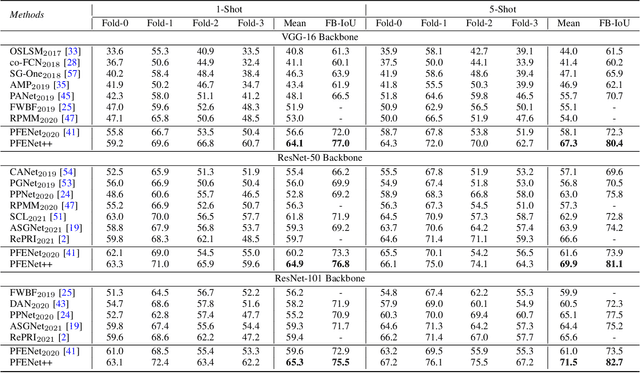

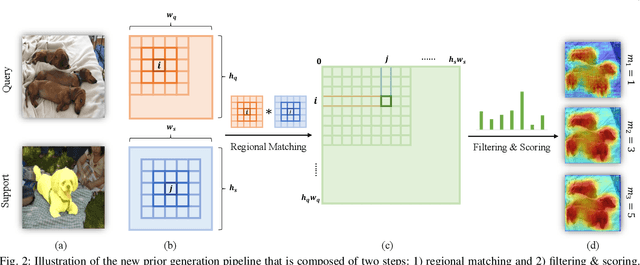

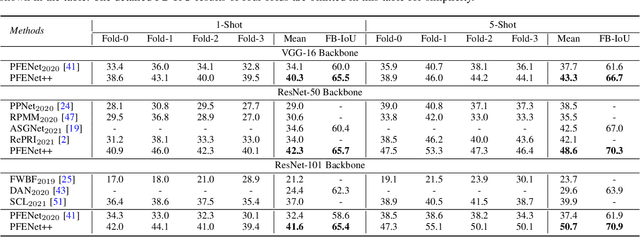

Sep 28, 2021

Abstract:In this work, we revisit the prior mask guidance proposed in "Prior Guided Feature Enrichment Network for Few-Shot Segmentation". The prior mask serves as an indicator that highlights the regions of interest of unseen categories, and it is effective in achieving better performance on different frameworks of recent studies. However, the current method directly takes the maximum element-to-element correspondence between the query and support features to indicate the probability of belonging to the target class; thus, the broader contextual information is seldom exploited during the prior mask generation. To address this issue, first, we propose the Context-aware Prior Mask (CAPM) that leverages additional nearby semantic cues for better locating the objects in query images. Second, since the maximum correlation value is vulnerable to noisy features, we take one step further by incorporating a lightweight Noise Suppression Module (NSM) to screen out the unnecessary responses, yielding high-quality masks for providing the prior knowledge. Both contributions are experimentally shown to have substantial practical merit, and the new model named PFENet++ significantly outperforms the baseline PFENet as well as all other competitors on three challenging benchmarks: PASCAL-5$^i$, COCO-20$^i$ and FSS-1000. The new state-of-the-art performance is achieved without compromising the efficiency, manifesting the potential for being a new strong baseline in few-shot semantic segmentation. Our code will be available at https://github.com/dvlab-research/PFENet++.
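The baseline prior mask described above, a maximum over element-to-element correspondences, can be sketched as follows. Cosine similarity as the correspondence measure and the final min-max normalization are assumptions about the baseline; the CAPM and NSM components proposed in the paper are not implemented here.

```python
import math


def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)


def prior_mask(query_feats, support_feats, eps=1e-7):
    """For each query location, keep its best match among support
    foreground features, then rescale the scores to roughly [0, 1]."""
    raw = [max(cosine(q, s) for s in support_feats) for q in query_feats]
    lo, hi = min(raw), max(raw)
    return [(r - lo) / (hi - lo + eps) for r in raw]


# Three hypothetical query locations and two support foreground features.
query = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
support = [[2.0, 0.0], [3.0, 0.1]]
print([round(v, 3) for v in prior_mask(query, support)])
```

Because each score depends on a single best-matching support feature, one noisy feature can dominate the result, which is the weakness the abstract attributes to the maximum correlation value.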

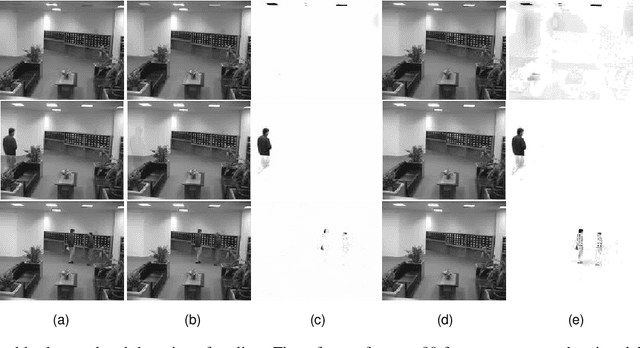

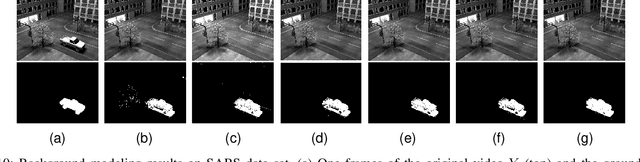

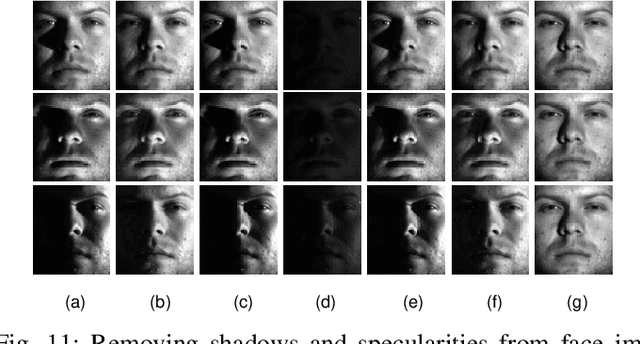

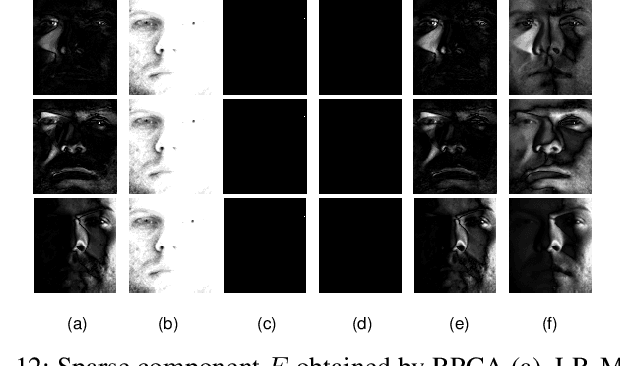

Low-Rank Matrix Recovery from Noisy via an MDL Framework-based Atomic Norm

Sep 17, 2020

Abstract:The recovery of the underlying low-rank structure of clean data corrupted with sparse noise/outliers is attracting increasing interest. However, in many low-level vision problems, the exact target rank of the underlying structure and the particular locations and values of the sparse outliers are not known. Thus, conventional methods cannot separate the low-rank and sparse components completely, especially in the presence of gross outliers or deficient observations. Therefore, in this study, we employ the Minimum Description Length (MDL) principle and atomic norm for low-rank matrix recovery to overcome these limitations. First, we employ the atomic norm to find all the candidate atoms of the low-rank and sparse terms, and then we minimize the description length of the model in order to select the appropriate atoms of the low-rank and sparse matrices, respectively. Our experimental analyses show that the proposed approach can obtain a higher success rate than the state-of-the-art methods even when the number of observations is limited or the corruption ratio is high. Experimental results on synthetic data and real sensing applications (high dynamic range imaging, background modeling, removing shadows and specularities) demonstrate the effectiveness, robustness and efficiency of the proposed method.
