Ying Fu

EventHDR: from Event to High-Speed HDR Videos and Beyond

Sep 25, 2024

Abstract: Event cameras are innovative neuromorphic sensors that asynchronously capture the scene dynamics. Due to the event-triggering mechanism, such cameras record event streams with much shorter response latency and higher intensity sensitivity compared to conventional cameras. On the basis of these features, previous works have attempted to reconstruct high dynamic range (HDR) videos from events, but have either suffered from unrealistic artifacts or failed to provide sufficiently high frame rates. In this paper, we present a recurrent convolutional neural network that reconstructs high-speed HDR videos from event sequences, with key-frame guidance to prevent potential error accumulation caused by the sparse event data. Additionally, to address the problem of severely limited real data, we develop a new optical system to collect a real-world dataset with paired high-speed HDR videos and event streams, facilitating future research in this field. Our dataset provides the first real paired dataset for event-to-HDR reconstruction, avoiding potential inaccuracies from simulation strategies. Experimental results demonstrate that our method can generate high-quality, high-speed HDR videos. We further explore the potential of our work in cross-camera reconstruction and downstream computer vision tasks, including object detection, panoramic segmentation, optical flow estimation, and monocular depth estimation under HDR scenarios.

Frequency-aware Feature Fusion for Dense Image Prediction

Aug 23, 2024

Abstract: Dense image prediction tasks demand features with strong category information and precise spatial boundary details at high resolution. To achieve this, modern hierarchical models often utilize feature fusion, directly adding upsampled coarse features from deep layers and high-resolution features from lower levels. In this paper, we observe rapid variations in fused feature values within objects, resulting in intra-category inconsistency due to disturbed high-frequency features. Additionally, blurred boundaries in fused features lack accurate high frequency, leading to boundary displacement. Building upon these observations, we propose Frequency-Aware Feature Fusion (FreqFusion), integrating an Adaptive Low-Pass Filter (ALPF) generator, an offset generator, and an Adaptive High-Pass Filter (AHPF) generator. The ALPF generator predicts spatially-variant low-pass filters to attenuate high-frequency components within objects, reducing intra-class inconsistency during upsampling. The offset generator refines large inconsistent features and thin boundaries by replacing inconsistent features with more consistent ones through resampling, while the AHPF generator enhances high-frequency detailed boundary information lost during downsampling. Comprehensive visualization and quantitative analysis demonstrate that FreqFusion effectively improves feature consistency and sharpens object boundaries. Extensive experiments across various dense prediction tasks confirm its effectiveness. The code is made publicly available at https://github.com/Linwei-Chen/FreqFusion.

FCDFusion: a Fast, Low Color Deviation Method for Fusing Visible and Infrared Image Pairs

Aug 02, 2024

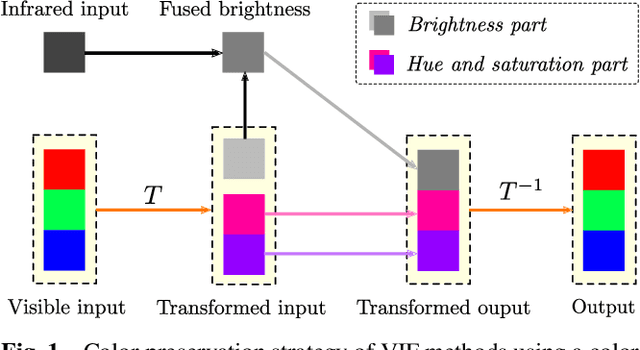

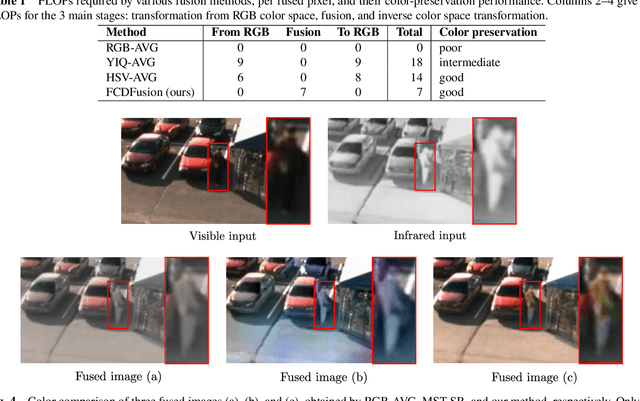

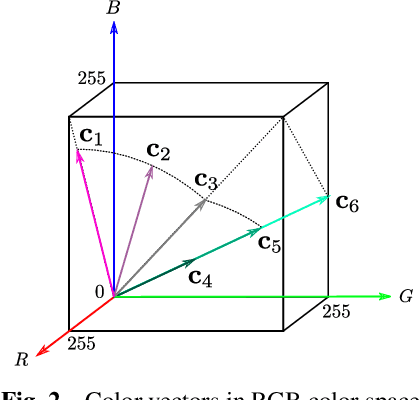

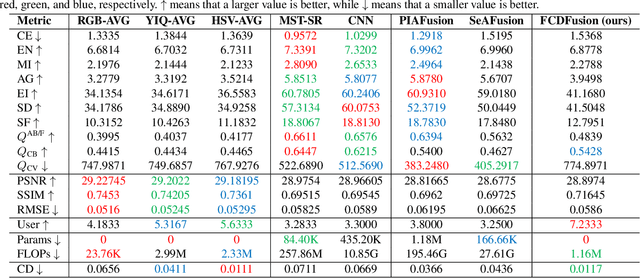

Abstract: Visible and infrared image fusion (VIF) aims to combine information from visible and infrared images into a single fused image. Previous VIF methods usually employ a color space transformation to keep the hue and saturation from the original visible image. However, for fast VIF methods, this operation accounts for the majority of the calculation and is the bottleneck preventing faster processing. In this paper, we propose a fast fusion method, FCDFusion, with little color deviation. It preserves color information without color space transformations, by directly operating in RGB color space. It incorporates gamma correction at little extra cost, allowing color and contrast to be rapidly improved. We regard the fusion process as a scaling operation on 3D color vectors, greatly simplifying the calculations. A theoretical analysis and experiments show that our method can achieve satisfactory results in only 7 FLOPs per pixel. Compared to state-of-the-art fast, color-preserving methods using HSV color space, our method provides higher contrast at only half of the computational cost. We further propose a new metric, color deviation, to measure the ability of a VIF method to preserve color. It is specifically designed for VIF tasks with color visible-light images, and overcomes deficiencies of existing VIF metrics used for this purpose. Our code is available at https://github.com/HeasonLee/FCDFusion.
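The idea of treating fusion as a scaling operation on 3D color vectors can be illustrated with a minimal sketch. This is not the FCDFusion formula: the scale factor `0.5 + ir` and the function names are assumptions chosen only for illustration, and gamma correction is omitted. The sketch shows why multiplying an RGB vector by a single scalar keeps the channel ratios (and therefore the hue) unchanged, as long as no channel is clipped.

```python
def scale_pixel(rgb, k):
    """Multiply an RGB vector (floats in [0, 1]) by a scalar k, clipping at 1."""
    return tuple(min(1.0, c * k) for c in rgb)


def chromaticity(rgb):
    """Return each channel's share of the total intensity."""
    total = sum(rgb)
    if total == 0:
        return (0.0, 0.0, 0.0)
    return tuple(c / total for c in rgb)


def fuse_pixel(rgb, ir):
    """Brighten or darken a visible pixel according to an infrared intensity.

    The scale factor is hypothetical (an assumption for this sketch):
    ir = 0.5 leaves the pixel unchanged, larger values brighten it.
    """
    k = 0.5 + ir
    return scale_pixel(rgb, k)


visible = (0.2, 0.4, 0.1)
fused = fuse_pixel(visible, ir=1.0)
# Channel ratios are the same before and after scaling (no clipping here).
print(chromaticity(visible))
print(chromaticity(fused))
```

Because only one multiplication per channel is needed once the scale factor is known, no conversion to and from another color space is required in this kind of scheme.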

PerlDiff: Controllable Street View Synthesis Using Perspective-Layout Diffusion Models

Jul 08, 2024

Abstract: Controllable generation is considered a potentially vital approach to address the challenge of annotating 3D data, and the precision of such controllable generation becomes particularly imperative in the context of data production for autonomous driving. Existing methods focus on the integration of diverse generative information into controlling inputs, utilizing frameworks such as GLIGEN or ControlNet, to produce commendable outcomes in controllable generation. However, such approaches intrinsically restrict generation performance to the learning capacities of predefined network architectures. In this paper, we explore the integration of controlling information and introduce PerlDiff (Perspective-Layout Diffusion Models), a method for effective street view image generation that fully leverages perspective 3D geometric information. Our PerlDiff employs 3D geometric priors to guide the generation of street view images with precise object-level control within the network learning process, resulting in a more robust and controllable output. Moreover, it demonstrates superior controllability compared to alternative layout control methods. Empirical results confirm that our PerlDiff markedly enhances the precision of generation on the NuScenes and KITTI datasets. Our code and models are publicly available at https://github.com/LabShuHangGU/PerlDiff.

Technique Report of CVPR 2024 PBDL Challenges

Jun 15, 2024

Abstract: The intersection of physics-based vision and deep learning presents an exciting frontier for advancing computer vision technologies. By leveraging the principles of physics to inform and enhance deep learning models, we can develop more robust and accurate vision systems. Physics-based vision aims to invert the processes to recover scene properties such as shape, reflectance, light distribution, and medium properties from images. In recent years, deep learning has shown promising improvements for various vision tasks, and when combined with physics-based vision, these approaches can enhance the robustness and accuracy of vision systems. This technical report summarizes the outcomes of the Physics-Based Vision Meets Deep Learning (PBDL) 2024 challenge, held at the CVPR 2024 workshop. The challenge consisted of eight tracks, focusing on Low-Light Enhancement and Detection as well as High Dynamic Range (HDR) Imaging. This report details the objectives, methodologies, and results of each track, highlighting the top-performing solutions and their innovative approaches.

Multi-Object Tracking in the Dark

May 10, 2024

Abstract: Low-light scenes are prevalent in real-world applications (e.g., autonomous driving and surveillance at night). Recently, multi-object tracking in various practical use cases has received much attention, but multi-object tracking in dark scenes is rarely considered. In this paper, we focus on multi-object tracking in dark scenes. To address the lack of datasets, we first build a Low-light Multi-Object Tracking (LMOT) dataset. LMOT provides well-aligned low-light video pairs captured by our dual-camera system, and high-quality multi-object tracking annotations for all videos. Then, we propose a low-light multi-object tracking method, termed LTrack. We introduce the adaptive low-pass downsample module to enhance low-frequency components of images outside the sensor noise. The degradation suppression learning strategy enables the model to learn invariant information under noise disturbance and image quality degradation. These components improve the robustness of multi-object tracking in dark scenes. We conducted a comprehensive analysis of our LMOT dataset and the proposed LTrack. Experimental results demonstrate the superiority of the proposed method and its competitiveness in real night low-light scenes. Dataset and Code: https://github.com/ying-fu/LMOT

Transformer based Pluralistic Image Completion with Reduced Information Loss

Apr 15, 2024

Abstract: Transformer based methods have achieved great success in image inpainting recently. However, we find that these solutions regard each pixel as a token, thus suffering from an information loss issue from two aspects: 1) They downsample the input image into much lower resolutions for efficiency. 2) They quantize $256^3$ RGB values to a small number (such as 512) of quantized color values. The indices of quantized pixels are used as tokens for the inputs and prediction targets of the transformer. To mitigate these issues, we propose a new transformer based framework called "PUT". Specifically, to avoid input downsampling while maintaining computation efficiency, we design a patch-based auto-encoder P-VQVAE. The encoder converts the masked image into non-overlapped patch tokens and the decoder recovers the masked regions from the inpainted tokens while keeping the unmasked regions unchanged. To eliminate the information loss caused by input quantization, an Un-quantized Transformer is applied. It directly takes features from the P-VQVAE encoder as input without any quantization and only regards the quantized tokens as prediction targets. Furthermore, to make the inpainting process more controllable, we introduce semantic and structural conditions as extra guidance. Extensive experiments show that our method greatly outperforms existing transformer based methods on image fidelity and achieves much higher diversity and better fidelity than state-of-the-art pluralistic inpainting methods on complex large-scale datasets (e.g., ImageNet). Code is available at https://github.com/liuqk3/PUT.

Aligning LLMs for FL-free Program Repair

Apr 13, 2024

Abstract: Large language models (LLMs) have achieved decent results on automated program repair (APR). However, the next token prediction training objective of decoder-only LLMs (e.g., GPT-4) is misaligned with the masked span prediction objective of current infilling-style methods, which impedes LLMs from fully leveraging pre-trained knowledge for program repair. In addition, while some LLMs are capable of locating and repairing bugs end-to-end when using the related artifacts (e.g., test cases) as input, existing methods regard them as separate tasks and ask LLMs to generate patches at fixed locations. This restriction hinders LLMs from exploring potential patches beyond the given locations. In this paper, we investigate a new approach to adapt LLMs to program repair. Our core insight is that LLMs' APR capability can be greatly improved by simply aligning the output to their training objective and allowing them to refine the whole program without first performing fault localization. Based on this insight, we designed D4C, a straightforward prompting framework for APR. D4C can repair 180 bugs correctly in Defects4J, with each patch being sampled only 10 times. This surpasses the SOTA APR methods with perfect fault localization by 10% and reduces the patch sampling number by 90%. Our findings reveal that (1) objective alignment is crucial for fully exploiting LLMs' pre-trained capability, and (2) replacing the traditional localize-then-repair workflow with direct debugging is more effective for LLM-based APR methods. Thus, we believe this paper introduces a new mindset for harnessing LLMs in APR.

Binarized Low-light Raw Video Enhancement

Mar 29, 2024

Abstract: Recently, deep neural networks have achieved excellent performance on low-light raw video enhancement. However, they often come with high computational complexity and large memory costs, which hinder their applications on resource-limited devices. In this paper, we explore the feasibility of applying the extremely compact binary neural network (BNN) to low-light raw video enhancement. Nevertheless, there are two main issues with binarizing video enhancement models. One is how to fuse the temporal information to improve low-light denoising without complex modules. The other is how to narrow the performance gap between binary convolutions and full-precision ones. To address the first issue, we introduce a spatial-temporal shift operation, which is easy to binarize and effective. The temporal shift efficiently aggregates the features of neighbor frames and the spatial shift handles the misalignment caused by the large motion in videos. For the second issue, we present a distribution-aware binary convolution, which captures the distribution characteristics of real-valued input and incorporates them into plain binary convolutions to alleviate the degradation in performance. Extensive quantitative and qualitative experiments have shown that our high-efficiency binarized low-light raw video enhancement method can attain promising performance.
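A temporal shift of the kind mentioned above can be sketched in a few lines. The version below is a generic channel-wise temporal shift, not necessarily the operator used in the paper: the function name, the `fold` parameter, and the zero padding at the sequence ends are assumptions made for illustration. The point it shows is that a shift only moves values between frames and has no learned weights, which is why such an operation adds no parameters that would need binarizing.

```python
def temporal_shift(frames, fold):
    """Mix information across neighboring frames by shifting channels in time.

    frames: list of T frames, each a list of C channel values.
    fold:   number of channels shifted in each direction (hypothetical knob).

    Channels [0, fold) are taken from the previous frame,
    channels [fold, 2 * fold) from the next frame,
    and the remaining channels are left in place.
    Positions with no source frame are filled with zeros.
    """
    num_frames = len(frames)
    num_channels = len(frames[0])
    shifted = [[0.0] * num_channels for _ in range(num_frames)]
    for t in range(num_frames):
        for c in range(num_channels):
            if c < fold:
                src = t - 1          # borrow from the previous frame
            elif c < 2 * fold:
                src = t + 1          # borrow from the next frame
            else:
                src = t              # keep the current frame's value
            if 0 <= src < num_frames:
                shifted[t][c] = frames[src][c]
    return shifted


features = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # 3 frames, 3 channels each
print(temporal_shift(features, fold=1))
```

After the shift, each frame's feature vector contains values from its neighbors, so a following per-frame convolution sees temporal context without any dedicated fusion module.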

Infrared Small Target Detection with Scale and Location Sensitivity

Mar 28, 2024

Abstract: Recently, infrared small target detection (IRSTD) has been dominated by deep-learning-based methods. However, these methods mainly focus on the design of complex model structures to extract discriminative features, leaving the loss functions for IRSTD under-explored. For example, the widely used Intersection over Union (IoU) and Dice losses lack sensitivity to the scales and locations of targets, limiting the detection performance of detectors. In this paper, we focus on boosting detection performance with a more effective loss but a simpler model structure. Specifically, we first propose a novel Scale and Location Sensitive (SLS) loss to handle the limitations of existing losses: 1) for scale sensitivity, we compute a weight for the IoU loss based on target scales to help the detector distinguish targets with different scales; 2) for location sensitivity, we introduce a penalty term based on the center points of targets to help the detector localize targets more precisely. Then, we add a simple Multi-Scale Head to the plain U-Net (MSHNet). By applying SLS loss to each scale of the predictions, our MSHNet outperforms existing state-of-the-art methods by a large margin. In addition, the detection performance of existing detectors can be further improved when trained with our SLS loss, demonstrating the effectiveness and generalization of our SLS loss. The code is available at https://github.com/ying-fu/MSHNet.
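The two ingredients described above, a scale-dependent weight on the IoU term and a penalty on the distance between target centers, can be sketched as follows. This is an illustrative loss in the same spirit, not the SLS loss defined in the paper: the area-ratio weight, the centroid-distance penalty normalized by the image diagonal, and all function names are assumptions made for this example.

```python
import math


def area(mask):
    """Sum of all values in a 2D mask (list of rows of floats in [0, 1])."""
    return sum(sum(row) for row in mask)


def centroid(mask):
    """Intensity-weighted center (row, col) of a mask, or None if it is empty."""
    total = area(mask)
    if total == 0:
        return None
    cy = sum(i * v for i, row in enumerate(mask) for v in row) / total
    cx = sum(j * v for row in mask for j, v in enumerate(row)) / total
    return (cy, cx)


def soft_iou(pred, gt):
    """Soft intersection over union between two masks of the same shape."""
    inter = sum(p * g for pr, gr in zip(pred, gt) for p, g in zip(pr, gr))
    union = area(pred) + area(gt) - inter
    return inter / union if union > 0 else 1.0


def scale_location_loss(pred, gt):
    """Hypothetical scale- and location-aware loss (an assumption, see lead-in)."""
    a_pred, a_gt = area(pred), area(gt)
    # Scale weight: 1 when predicted and true areas match, smaller otherwise.
    big = max(a_pred, a_gt)
    weight = min(a_pred, a_gt) / big if big > 0 else 1.0
    scale_term = 1.0 - weight * soft_iou(pred, gt)

    # Location penalty: centroid distance normalized by the image diagonal.
    c_pred, c_gt = centroid(pred), centroid(gt)
    if c_pred is None or c_gt is None:
        location_term = 0.0 if (c_pred is None and c_gt is None) else 1.0
    else:
        diagonal = math.hypot(len(gt), len(gt[0]))
        location_term = math.hypot(c_pred[0] - c_gt[0], c_pred[1] - c_gt[1]) / diagonal
    return scale_term + location_term


target = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
misplaced = [[1, 0, 0], [0, 0, 0], [0, 0, 0]]
print(scale_location_loss(target, target))
print(scale_location_loss(misplaced, target))
```

A plain IoU loss would score every non-overlapping prediction identically, whereas the location term here still distinguishes a near miss from a distant one, which is the kind of sensitivity the abstract argues small-target detection needs.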
