Xiaobing Li

TISDiSS: A Training-Time and Inference-Time Scalable Framework for Discriminative Source Separation

Sep 19, 2025

Abstract:Source separation is a fundamental task in speech, music, and audio processing, and it also provides cleaner and larger data for training generative models. However, improving separation performance in practice often depends on increasingly large networks, inflating training and deployment costs. Motivated by recent advances in inference-time scaling for generative modeling, we propose Training-Time and Inference-Time Scalable Discriminative Source Separation (TISDiSS), a unified framework that integrates early-split multi-loss supervision, shared-parameter design, and dynamic inference repetitions. TISDiSS enables flexible speed-performance trade-offs by adjusting inference depth without retraining additional models. We further provide systematic analyses of architectural and training choices and show that training with more inference repetitions improves shallow-inference performance, benefiting low-latency applications. Experiments on standard speech separation benchmarks demonstrate state-of-the-art performance with a reduced parameter count, establishing TISDiSS as a scalable and practical framework for adaptive source separation.

ELGAR: Expressive Cello Performance Motion Generation for Audio Rendition

May 07, 2025

Abstract:The art of instrument performance stands as a vivid manifestation of human creativity and emotion. Nonetheless, generating instrument performance motions is a highly challenging task, as it requires not only capturing intricate movements but also reconstructing the complex dynamics of the performer-instrument interaction. While existing works primarily focus on modeling partial body motions, we propose Expressive ceLlo performance motion Generation for Audio Rendition (ELGAR), a state-of-the-art diffusion-based framework for whole-body fine-grained instrument performance motion generation solely from audio. To emphasize the interactive nature of the instrument performance, we introduce Hand Interactive Contact Loss (HICL) and Bow Interactive Contact Loss (BICL), which effectively guarantee the authenticity of the interplay. Moreover, to better evaluate whether the generated motions align with the semantic context of the music audio, we design novel metrics specifically for string instrument performance motion generation, including finger-contact distance, bow-string distance, and bowing score. Extensive evaluations and ablation studies are conducted to validate the efficacy of the proposed methods. In addition, we put forward a motion generation dataset SPD-GEN, collated and normalized from the MoCap dataset SPD. As demonstrated, ELGAR has shown great potential in generating instrument performance motions with complicated and fast interactions, which will promote further development in areas such as animation, music education, interactive art creation, etc.

NotaGen: Advancing Musicality in Symbolic Music Generation with Large Language Model Training Paradigms

Feb 26, 2025

Abstract:We introduce NotaGen, a symbolic music generation model aiming to explore the potential of producing high-quality classical sheet music. Inspired by the success of Large Language Models (LLMs), NotaGen adopts pre-training, fine-tuning, and reinforcement learning paradigms (henceforth referred to as the LLM training paradigms). It is pre-trained on 1.6M pieces of music, and then fine-tuned on approximately 9K high-quality classical compositions conditioned on "period-composer-instrumentation" prompts. For reinforcement learning, we propose the CLaMP-DPO method, which further enhances generation quality and controllability without requiring human annotations or predefined rewards. Our experiments demonstrate the efficacy of CLaMP-DPO in symbolic music generation models with different architectures and encoding schemes. Furthermore, subjective A/B tests show that NotaGen outperforms baseline models against human compositions, greatly advancing musical aesthetics in symbolic music generation. The project homepage is https://electricalexis.github.io/notagen-demo.

CLaMP 2: Multimodal Music Information Retrieval Across 101 Languages Using Large Language Models

Oct 17, 2024

Abstract:Challenges in managing linguistic diversity and integrating various musical modalities are faced by current music information retrieval systems. These limitations reduce their effectiveness in a global, multimodal music environment. To address these issues, we introduce CLaMP 2, a system compatible with 101 languages that supports both ABC notation (a text-based musical notation format) and MIDI (Musical Instrument Digital Interface) for music information retrieval. CLaMP 2, pre-trained on 1.5 million ABC-MIDI-text triplets, includes a multilingual text encoder and a multimodal music encoder aligned via contrastive learning. By leveraging large language models, we obtain refined and consistent multilingual descriptions at scale, significantly reducing textual noise and balancing language distribution. Our experiments show that CLaMP 2 achieves state-of-the-art results in both multilingual semantic search and music classification across modalities, thus establishing a new standard for inclusive and global music information retrieval.

MelodyT5: A Unified Score-to-Score Transformer for Symbolic Music Processing

Jul 02, 2024

Abstract:In the domain of symbolic music research, the progress of developing scalable systems has been notably hindered by the scarcity of available training data and the demand for models tailored to specific tasks. To address these issues, we propose MelodyT5, a novel unified framework that leverages an encoder-decoder architecture tailored for symbolic music processing in ABC notation. This framework challenges the conventional task-specific approach, considering various symbolic music tasks as score-to-score transformations. Consequently, it integrates seven melody-centric tasks, from generation to harmonization and segmentation, within a single model. Pre-trained on MelodyHub, a newly curated collection featuring over 261K unique melodies encoded in ABC notation and encompassing more than one million task instances, MelodyT5 demonstrates superior performance in symbolic music processing via multi-task transfer learning. Our findings highlight the efficacy of multi-task transfer learning in symbolic music processing, particularly for data-scarce tasks, challenging the prevailing task-specific paradigms and offering a comprehensive dataset and framework for future explorations in this domain.

Beyond Language Models: Byte Models are Digital World Simulators

Feb 29, 2024

Abstract:Traditional deep learning often overlooks bytes, the basic units of the digital world, where all forms of information and operations are encoded and manipulated in binary format. Inspired by the success of next token prediction in natural language processing, we introduce bGPT, a model with next byte prediction to simulate the digital world. bGPT matches specialized models in performance across various modalities, including text, audio, and images, and offers new possibilities for predicting, simulating, and diagnosing algorithm or hardware behaviour. It has almost flawlessly replicated the process of converting symbolic music data, achieving a low error rate of 0.0011 bits per byte in converting ABC notation to MIDI format. In addition, bGPT demonstrates exceptional capabilities in simulating CPU behaviour, with an accuracy exceeding 99.99% in executing various operations. Leveraging next byte prediction, models like bGPT can directly learn from vast binary data, effectively simulating the intricate patterns of the digital world.

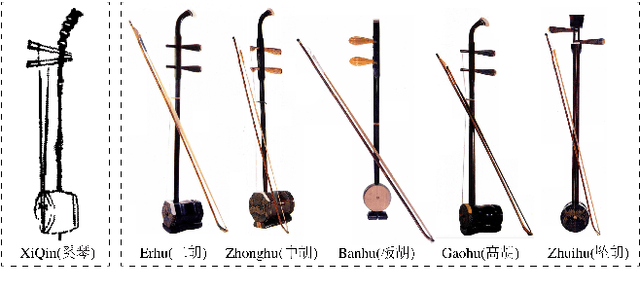

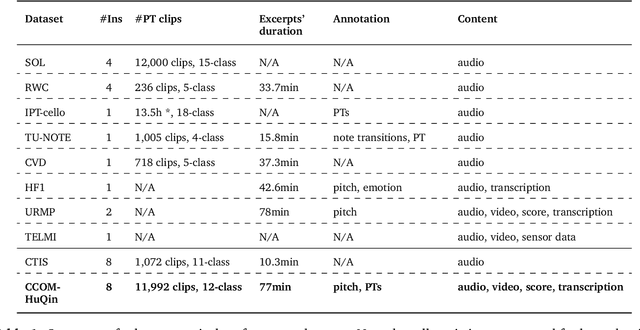

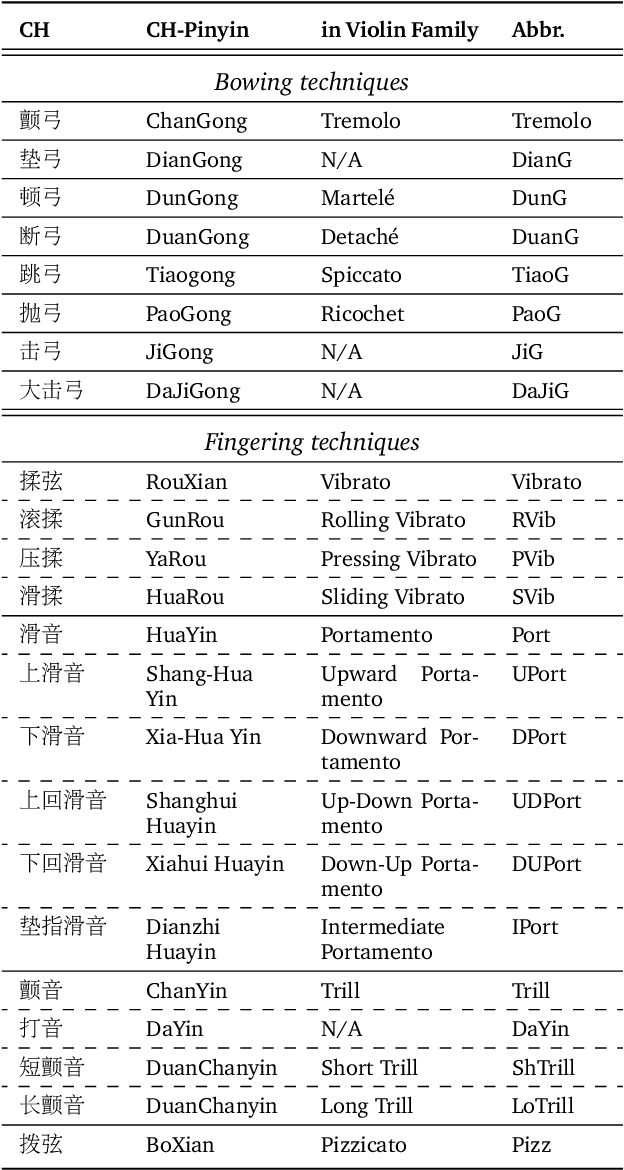

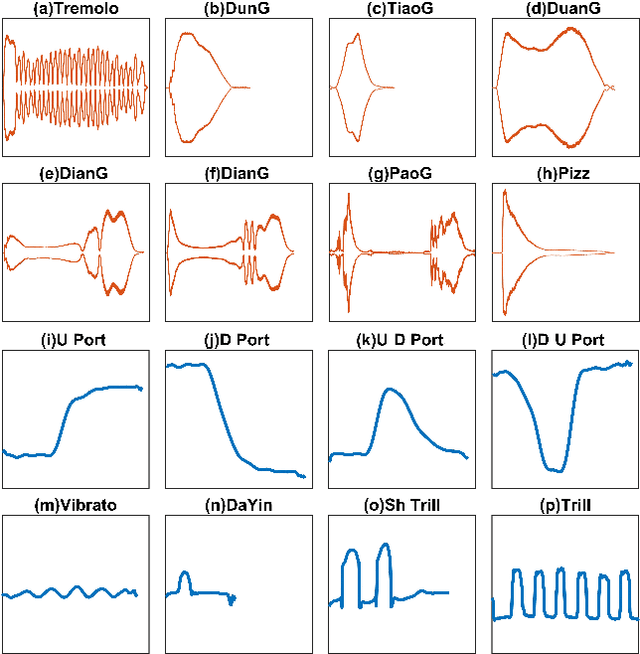

CCOM-HuQin: an Annotated Multimodal Chinese Fiddle Performance Dataset

Sep 14, 2022

Abstract:HuQin is a family of traditional Chinese bowed string instruments. Playing techniques (PTs) embodied in various playing styles add abundant emotional coloring and aesthetic feelings to HuQin performance. The complex applied techniques make HuQin music a challenging source for fundamental MIR tasks such as pitch analysis, transcription and score-audio alignment. In this paper, we present a multimodal performance dataset of HuQin music that contains audio-visual recordings of 11,992 single PT clips and 57 annotated musical pieces of classical excerpts. We systematically describe the HuQin PT taxonomy based on musicological theory and practical use cases. Then we introduce the dataset creation methodology and highlight the annotation principles featuring PTs. We analyze the statistics in different aspects to demonstrate the variety of PTs played in HuQin subcategories and perform preliminary experiments to show the potential applications of the dataset in various MIR tasks and cross-cultural music studies. Finally, we propose future work to be extended on the dataset.

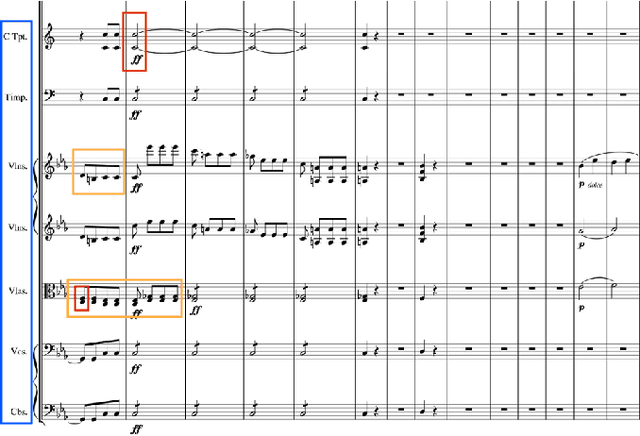

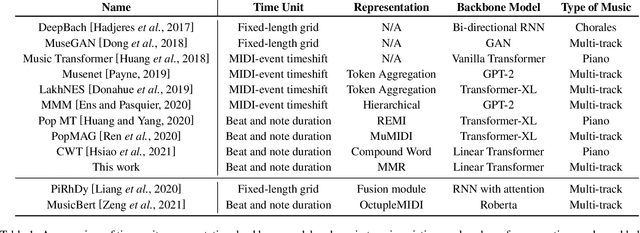

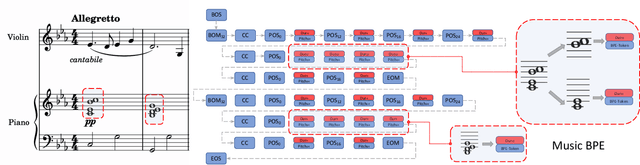

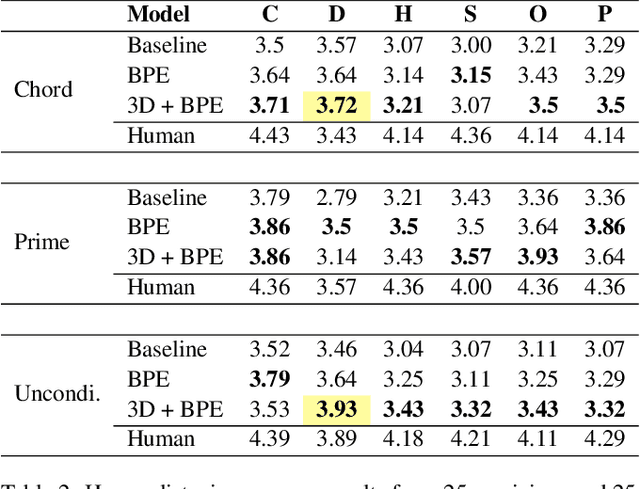

Symphony Generation with Permutation Invariant Language Model

May 10, 2022

Abstract:In this work, we present a symbolic symphony music generation solution, SymphonyNet, based on a permutation invariant language model. To bridge the gap between text generation and symphony generation tasks, we propose a novel Multi-track Multi-instrument Repeatable (MMR) representation with particular 3-D positional embedding and a modified Byte Pair Encoding algorithm (Music BPE) for music tokens. A novel linear transformer decoder architecture is introduced as a backbone for modeling extra-long sequences of symphony tokens. Meanwhile, we train the decoder to learn automatic orchestration as a joint task by masking instrument information from the input. We also introduce a large-scale symbolic symphony dataset for the advancement of symphony generation research. Our empirical results show that our proposed approach can generate coherent, novel, complex and harmonious symphonies compared to human compositions, making it a pioneering solution for multi-track multi-instrument symbolic music generation.

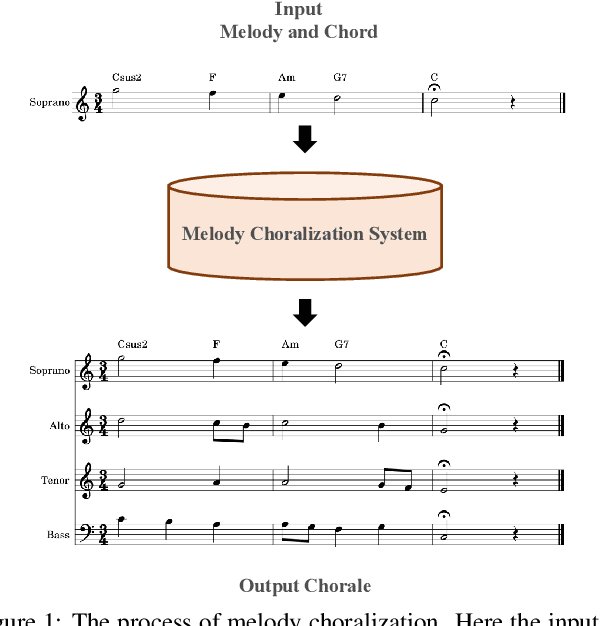

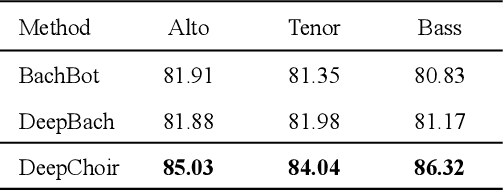

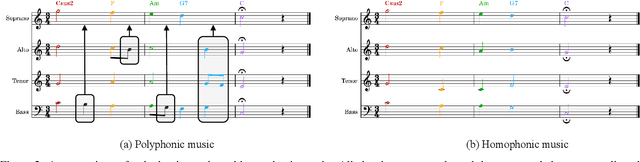

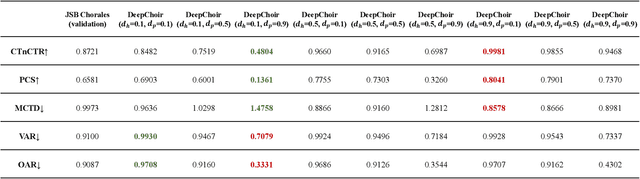

Chord-Conditioned Melody Choralization with Controllable Harmonicity and Polyphonicity

Feb 17, 2022

Abstract:Melody choralization, i.e. generating a four-part chorale based on a user-given melody, has long been closely associated with J.S. Bach chorales. Previous neural network-based systems rarely focus on chorale generation conditioned on a chord progression, and none of them realised controllable melody choralization. To enable neural networks to learn the general principles of counterpoint from Bach's chorales, we first design a music representation that encodes chord symbols for chord conditioning. We then propose DeepChoir, a melody choralization system, which can generate a four-part chorale for a given melody conditioned on a chord progression. Furthermore, with the improved density sampling, a user can control the extent of harmonicity and polyphonicity for the chorale generated by DeepChoir. Experimental results reveal the effectiveness of our data representation and the controllability of DeepChoir over harmonicity and polyphonicity. The code and generated samples (chorales, folk songs and a symphony) of DeepChoir, along with the dataset we use, are now available at https://github.com/sander-wood/deepchoir.

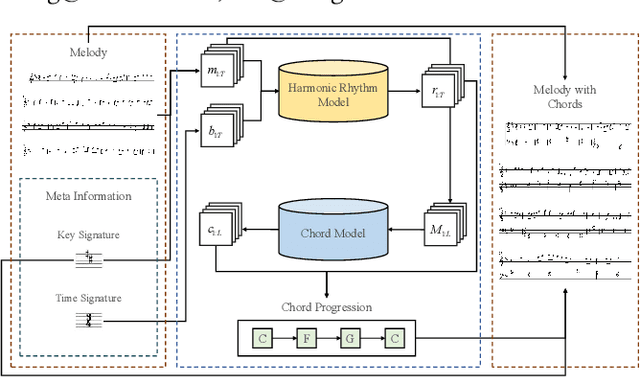

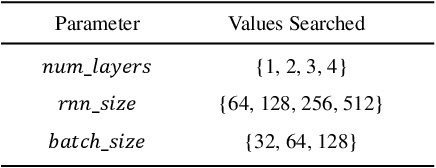

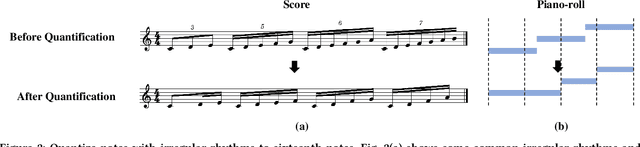

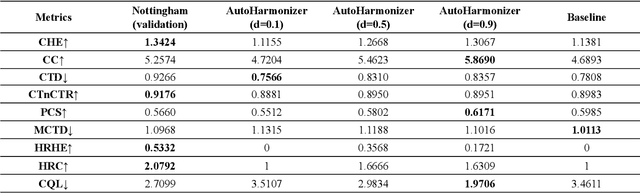

Melody Harmonization with Controllable Harmonic Rhythm

Dec 21, 2021

Abstract:Melody harmonization, namely generating a chord progression for a user-given melody, remains a challenging task to this day. Although previous neural network-based systems can effectively generate an appropriate chord progression for a melody, few studies focus on controllable melody harmonization, and none of them can generate flexible harmonic rhythms. To achieve harmonic rhythm-controllable melody harmonization, we propose AutoHarmonizer, a neural network-based melody harmonization system that can generate denser or sparser chord progressions with the use of a new sampling method for controllable generation proposed in this paper. This system mainly consists of two parts: a harmonic rhythm model provides coarse-grained chord onset information, while a chord model generates specific pitches for chords based on the given melody and the corresponding harmonic rhythm sequence previously generated. To evaluate the performance of AutoHarmonizer, we use nine metrics to compare the chord progressions from humans, the system proposed in this paper and the baseline. Experimental results show that AutoHarmonizer not only generates harmonic rhythms comparable to the human level, but also generates chords with overall better quality than the baseline at different settings. In addition, we use AutoHarmonizer to harmonize the Session Dataset (which was originally chordless), and ended up with 40,925 traditional Irish folk songs with harmonies, named the Session Lead Sheet Dataset, which is the largest lead sheet dataset to date.
