Witold Pedrycz

Theoretical Convergence of SMOTE-Generated Samples

Jan 05, 2026

Abstract:Imbalanced data affects a wide range of machine learning applications, from healthcare to network security. As SMOTE is one of the most popular approaches to addressing this issue, it is imperative to validate it not only empirically but also theoretically. In this paper, we provide a rigorous theoretical analysis of SMOTE's convergence properties. Concretely, we prove that the synthetic random variable Z converges in probability to the underlying random variable X. We further prove a stronger convergence in mean when X is compact. Finally, we show that lower values of the nearest neighbor rank lead to faster convergence, offering actionable guidance to practitioners. The theoretical results are supported by numerical experiments using both real-life and synthetic data. Our work provides a foundational understanding that enhances data augmentation techniques beyond imbalanced data scenarios.
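The role of the nearest neighbor rank can be illustrated with a minimal sketch. It assumes the common SMOTE interpolation rule Z = X + λ(X_nn − X) with λ drawn uniformly from [0, 1], and, as a simplification for illustration, it always interpolates toward exactly the k-th nearest neighbor rather than a randomly chosen one of the k nearest. The function name `smote_sample` is hypothetical and is not taken from the paper.

```python
import numpy as np

def smote_sample(X, k, rng):
    """Generate one synthetic point per row of X by interpolating
    toward that row's k-th nearest neighbor (k >= 1)."""
    # Pairwise Euclidean distances between all samples.
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    # After sorting, column 0 is the point itself (distance 0, assuming
    # no duplicate rows), so column k holds the k-th nearest neighbor.
    neighbor_idx = np.argsort(dists, axis=1)[:, k]
    # One interpolation weight per sample, uniform on [0, 1].
    lam = rng.uniform(0.0, 1.0, size=(X.shape[0], 1))
    return X + lam * (X[neighbor_idx] - X)

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
for k in (1, 5, 10):
    Z = smote_sample(X, k, rng)
    # Average displacement of the synthetic point from its seed point.
    print(k, np.linalg.norm(Z - X, axis=1).mean())
```

Because the k-th neighbor distance is non-decreasing in k, the synthetic points in this sketch tend to stay closer to their seed points for small k, which is consistent with the abstract's guidance on lower neighbor ranks.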

GuardFed: A Trustworthy Federated Learning Framework Against Dual-Facet Attacks

Nov 12, 2025

Abstract:Federated learning (FL) enables privacy-preserving collaborative model training but remains vulnerable to adversarial behaviors that compromise model utility or fairness across sensitive groups. While extensive studies have examined attacks targeting either objective, strategies that simultaneously degrade both utility and fairness remain largely unexplored. To bridge this gap, we introduce the Dual-Facet Attack (DFA), a novel threat model that concurrently undermines predictive accuracy and group fairness. Two variants, Synchronous DFA (S-DFA) and Split DFA (Sp-DFA), are further proposed to capture distinct real-world collusion scenarios. Experimental results show that existing robust FL defenses, including hybrid aggregation schemes, fail to resist DFAs effectively. To counter these threats, we propose GuardFed, a self-adaptive defense framework that maintains a fairness-aware reference model using a small amount of clean server data augmented with synthetic samples. In each training round, GuardFed computes a dual-perspective trust score for every client by jointly evaluating its utility deviation and fairness degradation, thereby enabling selective aggregation of trustworthy updates. Extensive experiments on real-world datasets demonstrate that GuardFed consistently preserves both accuracy and fairness under diverse non-IID and adversarial conditions, achieving state-of-the-art performance compared with existing robust FL methods.

Multivariate Time series Anomaly Detection: A Framework of Hidden Markov Models

Nov 11, 2025

Abstract:In this study, we develop an approach to multivariate time series anomaly detection focused on the transformation of multivariate time series to univariate time series. Several transformation techniques involving Fuzzy C-Means (FCM) clustering and fuzzy integral are studied. In the sequel, a Hidden Markov Model (HMM), one of the commonly encountered statistical methods, is engaged here to detect anomalies in multivariate time series. We construct HMM-based anomaly detectors and in this context compare several transformation methods. A suite of experimental studies along with some comparative analysis is reported.

* 25 pages, 8 figures, 6 tables

Clustering-based Anomaly Detection in Multivariate Time Series Data

Nov 11, 2025

Abstract:Multivariate time series data come as a collection of time series describing different aspects of a certain temporal phenomenon. Anomaly detection in this type of data constitutes a challenging problem yet with numerous applications in science and engineering because anomaly scores come from the simultaneous consideration of the temporal and variable relationships. In this paper, we propose a clustering-based approach to detect anomalies concerning the amplitude and the shape of multivariate time series. First, we use a sliding window to generate a set of multivariate subsequences and thereafter apply an extended fuzzy clustering to reveal a structure present within the generated multivariate subsequences. Finally, a reconstruction criterion is employed to reconstruct the multivariate subsequences with the optimal cluster centers and the partition matrix. We construct a confidence index to quantify a level of anomaly detected in the series and apply Particle Swarm Optimization as an optimization vehicle for the problem of anomaly detection. Experimental studies completed on several synthetic and six real-world datasets suggest that the proposed methods can detect the anomalies in multivariate time series. With the help of available clusters revealed by the extended fuzzy clustering, the proposed framework can detect anomalies in the multivariate time series and is suitable for identifying anomalous amplitude and shape patterns in various application domains such as health care, weather data analysis, finance, and disease outbreak detection.

* 33 pages, 20 figures
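The pipeline described in this abstract (sliding window, fuzzy clustering, reconstruction) can be sketched roughly as follows. This is only an illustration under stated assumptions: it uses standard Fuzzy C-Means rather than the extended fuzzy clustering of the paper, it omits the Particle Swarm Optimization step and the confidence index, and it uses the plain reconstruction error as a stand-in anomaly score. All function names and parameter values are hypothetical.

```python
import numpy as np

def sliding_windows(series, width, step=1):
    """Cut a (T, d) multivariate series into flattened subsequences."""
    starts = range(0, series.shape[0] - width + 1, step)
    return np.stack([series[s:s + width].ravel() for s in starts])

def memberships(X, V, m=2.0):
    """Standard FCM partition matrix of shape (n, c) for prototypes V."""
    d = np.linalg.norm(X[:, None, :] - V[None, :, :], axis=2) + 1e-12
    inv = d ** (-2.0 / (m - 1.0))
    return inv / inv.sum(axis=1, keepdims=True)

def fcm(X, c, m=2.0, iters=100, seed=0):
    """Plain Fuzzy C-Means; returns the (c, D) prototype matrix."""
    rng = np.random.default_rng(seed)
    V = X[rng.choice(X.shape[0], size=c, replace=False)]
    for _ in range(iters):
        U = memberships(X, V, m) ** m
        V = (U.T @ X) / U.sum(axis=0)[:, None]
    return V

def reconstruction_error(X, V, m=2.0):
    """Rebuild each subsequence from prototypes and memberships,
    then return the Euclidean error per subsequence."""
    U = memberships(X, V, m) ** m
    X_hat = (U @ V) / U.sum(axis=1, keepdims=True)
    return np.linalg.norm(X - X_hat, axis=1)

# Two-channel periodic series with one injected amplitude spike.
t = np.arange(300)
series = np.column_stack([np.sin(2 * np.pi * t / 50),
                          np.cos(2 * np.pi * t / 50)])
series[150, 0] += 15.0
W = sliding_windows(series, width=10)
scores = reconstruction_error(W, fcm(W, c=3))
print("most anomalous window starts at", int(np.argmax(scores)))
```

Subsequences that the cluster structure cannot reproduce well receive a large error, which is the intuition behind using a reconstruction criterion for amplitude and shape anomalies.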

An Integrated Fusion Framework for Ensemble Learning Leveraging Gradient Boosting and Fuzzy Rule-Based Models

Nov 11, 2025

Abstract:The integration of different learning paradigms has long been a focus of machine learning research, aimed at overcoming the inherent limitations of individual methods. Fuzzy rule-based models excel in interpretability and have seen widespread application across diverse fields. However, they face challenges such as complex design specifications and scalability issues with large datasets. The fusion of different techniques and strategies, particularly Gradient Boosting, with Fuzzy Rule-Based Models offers a robust solution to these challenges. This paper proposes an Integrated Fusion Framework that merges the strengths of both paradigms to enhance model performance and interpretability. At each iteration, a Fuzzy Rule-Based Model is constructed and controlled by a dynamic factor to optimize its contribution to the overall ensemble. This control factor serves multiple purposes: it prevents model dominance, encourages diversity, acts as a regularization parameter, and provides a mechanism for dynamic tuning based on model performance, thus mitigating the risk of overfitting. Additionally, the framework incorporates a sample-based correction mechanism that allows for adaptive adjustments based on feedback from a validation set. Experimental results substantiate the efficacy of the presented gradient boosting framework for fuzzy rule-based models, demonstrating performance enhancement, especially in terms of mitigating overfitting and complexity typically associated with many rules. By leveraging an optimal factor to govern the contribution of each model, the framework improves performance, maintains interpretability, and simplifies the maintenance and update of the models.

HyColor: An Efficient Heuristic Algorithm for Graph Coloring

Jun 09, 2025

Abstract:The graph coloring problem (GCP) is a classic combinatorial optimization problem that aims to find the minimum number of colors assigned to vertices of a graph such that no two adjacent vertices receive the same color. GCP has been extensively studied by researchers from various fields, including mathematics, computer science, and biological science. Due to the NP-hard nature, many heuristic algorithms have been proposed to solve GCP. However, existing GCP algorithms focus on either small hard graphs or large-scale sparse graphs (with up to 10^7 vertices). This paper presents an efficient hybrid heuristic algorithm for GCP, named HyColor, which excels in handling large-scale sparse graphs while achieving impressive results on small dense graphs. The efficiency of HyColor comes from the following three aspects: a local decision strategy to improve the lower bound on the chromatic number; a graph-reduction strategy to reduce the working graph; and a k-core and mixed degree-based greedy heuristic for efficiently coloring graphs. HyColor is evaluated against three state-of-the-art GCP algorithms across four benchmarks, comprising three large-scale sparse graph benchmarks and one small dense graph benchmark, totaling 209 instances. The results demonstrate that HyColor consistently outperforms existing heuristic algorithms in both solution accuracy and computational efficiency for the majority of instances. Notably, HyColor achieved the best solutions in 194 instances (over 93%), with 34 of these solutions significantly surpassing those of other algorithms. Furthermore, HyColor successfully determined the chromatic number and achieved optimal coloring in 128 instances.
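The idea of combining k-core information with vertex degree in a greedy coloring pass can be illustrated with a generic sketch. This is not HyColor itself: the local decision strategy for the lower bound and the graph-reduction step are omitted, and the ordering rule (core number first, degree as tie-breaker) is an assumption chosen for illustration. The graph is assumed to be given as a dictionary mapping each vertex to the set of its neighbors.

```python
def core_numbers(adj):
    """Core number of every vertex, obtained by repeatedly
    peeling a vertex of minimum remaining degree."""
    degree = {v: len(nbrs) for v, nbrs in adj.items()}
    remaining = set(adj)
    core = {}
    current = 0
    while remaining:
        v = min(remaining, key=degree.__getitem__)
        current = max(current, degree[v])
        core[v] = current
        remaining.remove(v)
        for u in adj[v]:
            if u in remaining:
                degree[u] -= 1
    return core

def greedy_coloring(adj):
    """Color vertices greedily, visiting high-core, high-degree
    vertices first and giving each the smallest free color."""
    core = core_numbers(adj)
    order = sorted(adj, key=lambda v: (core[v], len(adj[v])), reverse=True)
    color = {}
    for v in order:
        used = {color[u] for u in adj[v] if u in color}
        c = 0
        while c in used:
            c += 1
        color[v] = c
    return color

# Triangle 0-1-2 with a pendant vertex 3 attached to vertex 2.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
coloring = greedy_coloring(adj)
print(coloring, "colors used:", len(set(coloring.values())))
```

The peeling loop here is quadratic in the number of vertices; a bucket-based implementation would be needed for graphs at the scale mentioned in the abstract.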

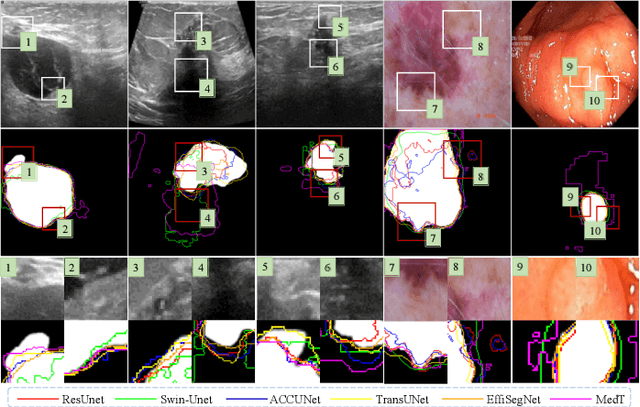

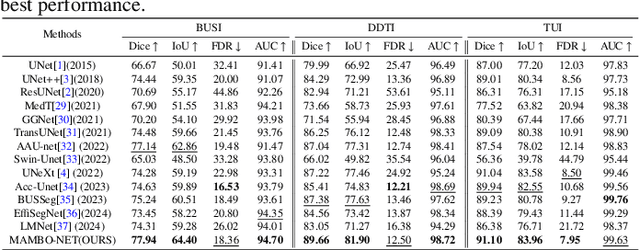

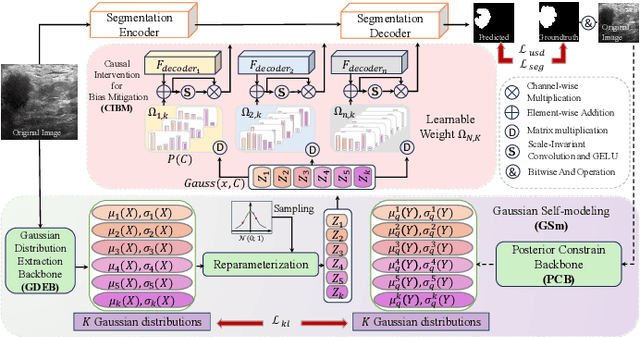

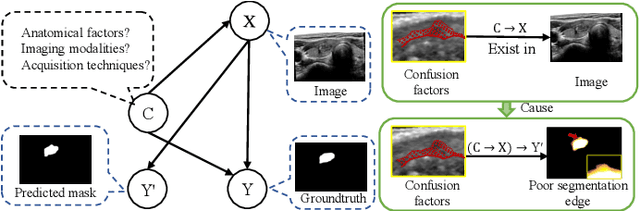

MAMBO-NET: Multi-Causal Aware Modeling Backdoor-Intervention Optimization for Medical Image Segmentation Network

May 28, 2025

Abstract:Medical image segmentation methods generally assume that the process from medical image to segmentation is unbiased, and use neural networks to establish conditional probability models to complete the segmentation task. This assumption does not consider confusion factors, which can affect medical images, such as complex anatomical variations and imaging modality limitations. Confusion factors obfuscate the relevance and causality of medical image segmentation, leading to unsatisfactory segmentation results. To address this issue, we propose a multi-causal aware modeling backdoor-intervention optimization (MAMBO-NET) network for medical image segmentation. Drawing insights from causal inference, MAMBO-NET utilizes self-modeling with multi-Gaussian distributions to fit the confusion factors and introduce causal intervention into the segmentation process. Moreover, we design appropriate posterior probability constraints to effectively train the distributions of confusion factors. For the distributions to effectively guide the segmentation and mitigate and eliminate the impact of confusion factors on the segmentation, we introduce classical backdoor intervention techniques and analyze their feasibility in the segmentation task. To evaluate the effectiveness of our approach, we conducted extensive experiments on five medical image datasets. The results demonstrate that our method significantly reduces the influence of confusion factors, leading to enhanced segmentation accuracy.
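For orientation, the classical backdoor adjustment that the abstract refers to can be written as below. The symbols are assumptions introduced here, not notation from the paper: X denotes the medical image, Y the segmentation, and Z a confusion factor that satisfies the backdoor criterion with respect to (X, Y).

$$
P\big(Y \mid \mathrm{do}(X)\big) \;=\; \sum_{z} P\big(Y \mid X, Z = z\big)\, P(Z = z)
$$

For a continuous Z the sum becomes an integral over a density p(z). One way to read "multi-Gaussian distributions" is as a mixture of the form below, with mixture weights \pi_k summing to one; this reading is an assumption, since the abstract does not give the exact parameterization.

$$
p(z) \;=\; \sum_{k=1}^{K} \pi_k \, \mathcal{N}\big(z;\, \mu_k, \Sigma_k\big)
$$

The contrast with the ordinary conditional model P(Y | X) is that the adjustment averages over the marginal P(Z) rather than P(Z | X), which removes the dependence between the image and the confusion factor.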

Margin-aware Fuzzy Rough Feature Selection: Bridging Uncertainty Characterization and Pattern Classification

May 21, 2025

Abstract:Fuzzy rough feature selection (FRFS) is an effective means of addressing the curse of dimensionality in high-dimensional data. By removing redundant and irrelevant features, FRFS helps mitigate classifier overfitting, enhance generalization performance, and lessen computational overhead. However, most existing FRFS algorithms primarily focus on reducing uncertainty in pattern classification, neglecting that lower uncertainty does not necessarily result in improved classification performance, despite it commonly being regarded as a key indicator of feature selection effectiveness in the FRFS literature. To bridge uncertainty characterization and pattern classification, we propose a Margin-aware Fuzzy Rough Feature Selection (MAFRFS) framework that considers both the compactness and separation of label classes. MAFRFS effectively reduces uncertainty in pattern classification tasks, while guiding the feature selection towards more separable and discriminative label class structures. Extensive experiments on 15 public datasets demonstrate that MAFRFS is highly scalable and more effective than FRFS. The algorithms developed using MAFRFS outperform six state-of-the-art feature selection algorithms.

LGBQPC: Local Granular-Ball Quality Peaks Clustering

May 16, 2025

Abstract:The density peaks clustering (DPC) algorithm has attracted considerable attention for its ability to detect arbitrarily shaped clusters based on a simple yet effective assumption. Recent advancements integrating granular-ball (GB) computing with DPC have led to the GB-based DPC (GBDPC) algorithm, which improves computational efficiency. However, GBDPC demonstrates limitations when handling complex clustering tasks, particularly those involving data with complex manifold structures or non-uniform density distributions. To overcome these challenges, this paper proposes the local GB quality peaks clustering (LGBQPC) algorithm, which offers comprehensive improvements to GBDPC in both GB generation and clustering processes based on the principle of justifiable granularity (POJG). Firstly, an improved GB generation method, termed GB-POJG+, is developed, which systematically refines the original GB-POJG in four key aspects: the objective function, termination criterion for GB division, definition of abnormal GB, and granularity level adaptation strategy. GB-POJG+ simplifies parameter configuration by requiring only a single penalty coefficient and ensures high-quality GB generation while maintaining the number of generated GBs within an acceptable range. In the clustering phase, two key innovations are introduced based on the GB k-nearest neighbor graph: relative GB quality for density estimation and geodesic distance for GB distance metric. These modifications substantially improve the performance of GBDPC on datasets with complex manifold structures or non-uniform density distributions. Extensive numerical experiments on 40 benchmark datasets, including both synthetic and publicly available datasets, validate the superior performance of the proposed LGBQPC algorithm.
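As background for the DPC assumption mentioned in this abstract (cluster centers are points of high local density that lie far from any denser point), the following is a minimal sketch of classical point-level DPC. It does not implement granular balls, GB-POJG+, relative GB quality, or geodesic distances; the Gaussian-kernel density, the cutoff `d_c`, and the function name are assumptions made for illustration.

```python
import numpy as np

def density_peaks(X, d_c, n_clusters):
    """Classical density peaks clustering on raw points."""
    n = X.shape[0]
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    # Local density with a Gaussian kernel; subtract the self term.
    rho = np.exp(-(dists / d_c) ** 2).sum(axis=1) - 1.0
    order = np.argsort(-rho)  # indices from densest to sparsest
    delta = np.zeros(n)       # distance to nearest denser point
    parent = np.full(n, -1)   # index of that nearest denser point
    delta[order[0]] = dists[order[0]].max()
    for rank in range(1, n):
        i = order[rank]
        denser = order[:rank]
        j = denser[np.argmin(dists[i, denser])]
        delta[i] = dists[i, j]
        parent[i] = j
    # Centers are the points with the largest rho * delta.
    centers = np.argsort(-(rho * delta))[:n_clusters]
    labels = np.full(n, -1)
    labels[centers] = np.arange(n_clusters)
    # Visit points from dense to sparse so each parent is labeled first.
    for i in order:
        if labels[i] == -1:
            labels[i] = labels[parent[i]]
    return labels

rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 0.3, size=(30, 2)),
               rng.normal(5.0, 0.3, size=(30, 2))])
labels = density_peaks(X, d_c=0.5, n_clusters=2)
print(labels)
```

The single global cutoff `d_c` is one reason plain DPC can struggle with non-uniform densities, which is the kind of limitation the abstract says the granular-ball variants aim to address.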

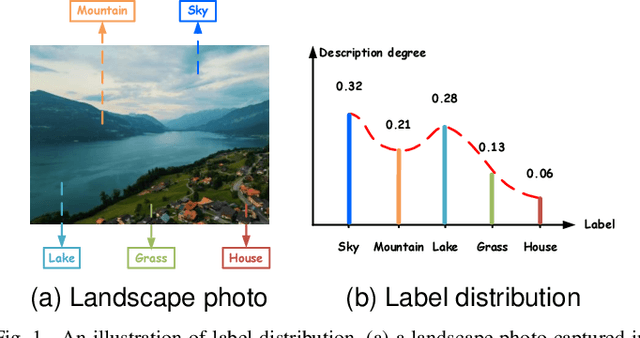

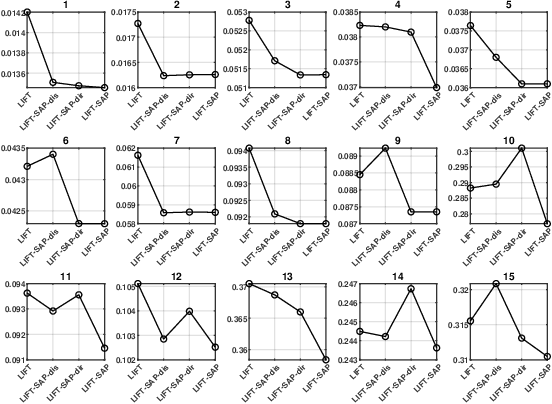

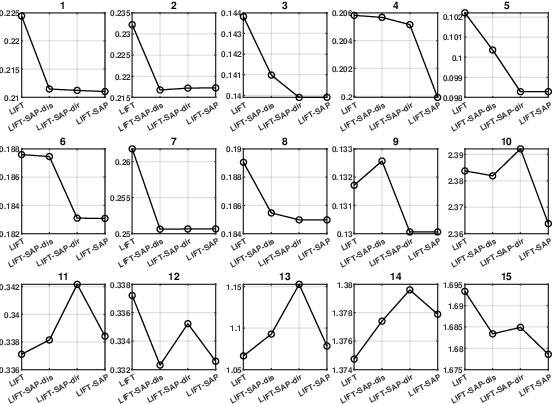

Rethinking Label-specific Features for Label Distribution Learning

Apr 27, 2025

Abstract:Label distribution learning (LDL) is an emerging learning paradigm designed to capture the relative importance of labels for each instance. Label-specific features (LSFs), constructed by LIFT, have proven effective for learning tasks with label ambiguity by leveraging clustering-based prototypes for each label to re-characterize instances. However, directly introducing LIFT into LDL tasks can be suboptimal, as the prototypes it collects primarily reflect intra-cluster relationships while neglecting interactions among distinct clusters. Additionally, constructing LSFs using multi-perspective information, rather than relying solely on Euclidean distance, provides a more robust and comprehensive representation of instances, mitigating noise and bias that may arise from a single distance perspective. To address these limitations, we introduce Structural Anchor Points (SAPs) to capture inter-cluster interactions. This leads to a novel LSFs construction strategy, LIFT-SAP, which enhances LIFT by integrating both distance and direction information of each instance relative to SAPs. Furthermore, we propose a novel LDL algorithm, Label Distribution Learning via Label-specifIc FeaTure with SAPs (LDL-LIFT-SAP), which unifies multiple label description degrees predicted from different LSF spaces into a cohesive label distribution. Extensive experiments on 15 real-world datasets demonstrate the effectiveness of LIFT-SAP over LIFT, as well as the superiority of LDL-LIFT-SAP compared to seven other well-established algorithms.
