Modeling Paragraph-Level Vision-Language Semantic Alignment for Multi-Modal Summarization

Aug 24, 2022 · Xinnian Liang, Chenhao Cui, Shuangzhi Wu, Jiali Zeng, Yufan Jiang, Zhoujun Li

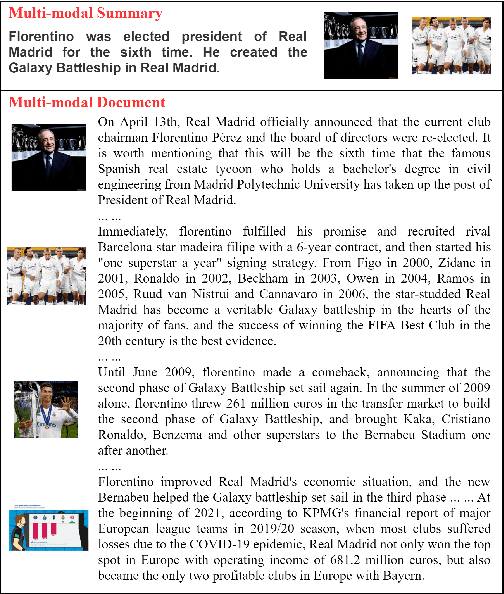

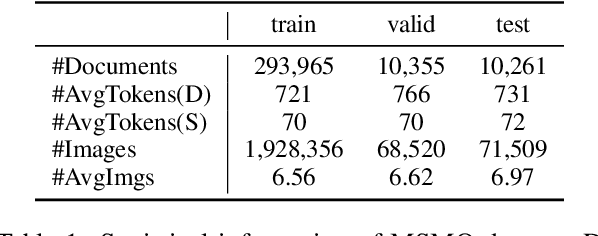

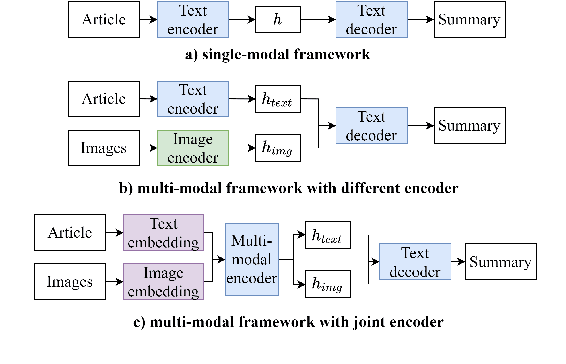

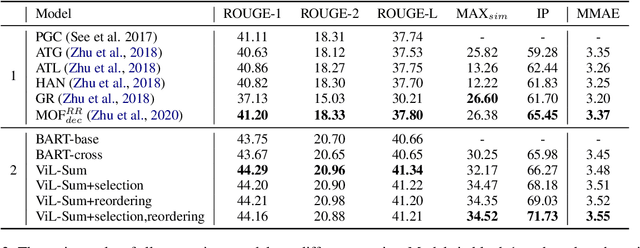

Most current multi-modal summarization methods follow a cascaded design: an off-the-shelf object detector first extracts visual features, and these features are then fused with language representations to generate the summary with an encoder-decoder model. This cascaded approach cannot capture the semantic alignments between images and paragraphs, which are crucial to a precise summary. In this paper, we propose ViL-Sum to jointly model paragraph-level **Vi**sion-**L**anguage Semantic Alignment and Multi-Modal **Sum**marization. The core of ViL-Sum is a joint multi-modal encoder with two well-designed tasks, image reordering and image selection. The joint multi-modal encoder captures the interactions between modalities, where the reordering task guides the model to learn paragraph-level semantic alignment and the selection task guides the model to select summary-related images for the final summary. Experimental results show that our proposed ViL-Sum significantly outperforms current state-of-the-art methods. In further analysis, we find that the two well-designed tasks and the joint multi-modal encoder can effectively guide the model to learn reasonable paragraph-image and summary-image relations.

An Efficient Coarse-to-Fine Facet-Aware Unsupervised Summarization Framework based on Semantic Blocks

Aug 17, 2022 · Xinnian Liang, Jing Li, Shuangzhi Wu, Jiali Zeng, Yufan Jiang, Mu Li, Zhoujun Li

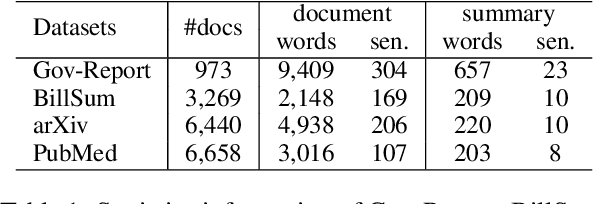

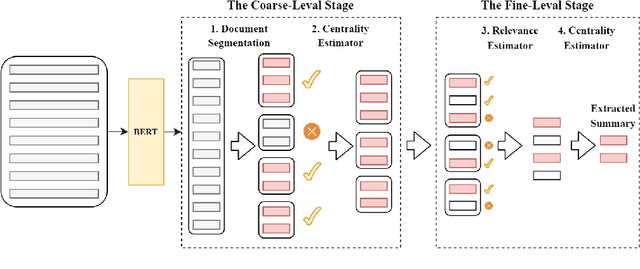

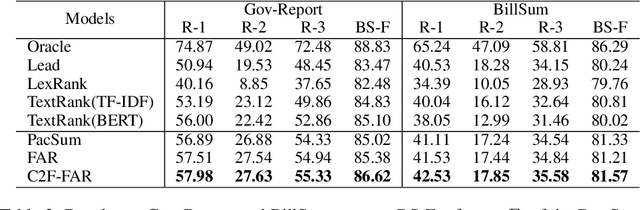

Unsupervised summarization methods have achieved remarkable results by incorporating representations from pre-trained language models. However, existing methods fail to achieve efficiency and effectiveness at the same time when the input document is extremely long. To tackle this problem, we propose an efficient Coarse-to-Fine Facet-Aware Ranking (C2F-FAR) framework for unsupervised long document summarization, which is based on the semantic block. A semantic block is a run of consecutive sentences in the document that describe the same facet. Specifically, we convert the one-step ranking method into a hierarchical, multi-granularity, two-stage ranking. In the coarse-level stage, we propose a new segmentation algorithm to split the document into facet-aware semantic blocks and then filter out insignificant blocks. In the fine-level stage, we select salient sentences in each block and then extract the final summary from the selected sentences. We evaluate our framework on four long document summarization datasets: Gov-Report, BillSum, arXiv, and PubMed. Our C2F-FAR achieves new state-of-the-art unsupervised summarization results on Gov-Report and BillSum. In addition, our method is 4 to 28 times faster than previous methods (https://github.com/xnliang98/c2f-far).
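The two-stage idea in the abstract above can be sketched roughly as follows. This is a minimal illustration, not the C2F-FAR implementation: word-overlap (Jaccard) similarity stands in for pre-trained language model representations, and the segmentation threshold, the centrality score, and the names `segment` and `summarize` are all assumptions made for this example.

```python
def jaccard(a, b):
    """Word-overlap similarity; a stand-in for embedding similarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def segment(sentences, threshold=0.2):
    """Coarse stage, step 1: group consecutive similar sentences into blocks."""
    if not sentences:
        return []
    blocks = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if jaccard(prev, cur) >= threshold:
            blocks[-1].append(cur)   # same facet: extend the current block
        else:
            blocks.append([cur])     # facet change: start a new block
    return blocks


def centrality(i, items):
    """Sum of similarities between item i and every other item."""
    return sum(jaccard(items[i], items[j]) for j in range(len(items)) if j != i)


def summarize(sentences, keep_blocks=2, per_block=1):
    blocks = segment(sentences)
    texts = [" ".join(block) for block in blocks]
    # Coarse stage, step 2: keep only the most central blocks.
    ranked = sorted(range(len(blocks)),
                    key=lambda i: centrality(i, texts), reverse=True)
    kept = sorted(ranked[:keep_blocks])  # restore document order
    # Fine stage: pick the most central sentences inside each kept block.
    summary = []
    for i in kept:
        block = blocks[i]
        order = sorted(range(len(block)),
                       key=lambda k: centrality(k, block), reverse=True)
        summary.extend(block[k] for k in sorted(order[:per_block]))
    return summary


sentences = [
    "the bill funds rural schools",
    "the bill funds rural clinics",
    "weather was sunny today",
    "weather was rainy yesterday",
]
blocks = segment(sentences)
summary = summarize(sentences, keep_blocks=1, per_block=1)
```

Ranking blocks first and sentences second means the pairwise comparisons run over a handful of blocks and then over short blocks, rather than over every sentence pair in a long document, which is the intuition behind the speed-up the abstract reports.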

UM4: Unified Multilingual Multiple Teacher-Student Model for Zero-Resource Neural Machine Translation

Jul 11, 2022 · Jian Yang, Yuwei Yin, Shuming Ma, Dongdong Zhang, Shuangzhi Wu, Hongcheng Guo, Zhoujun Li, Furu Wei

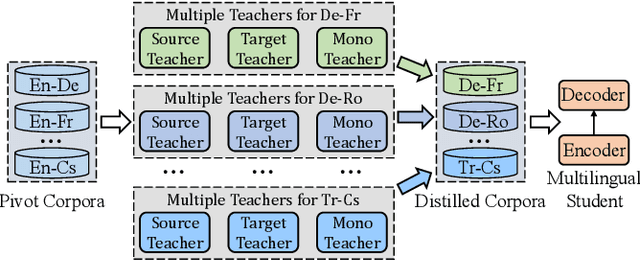

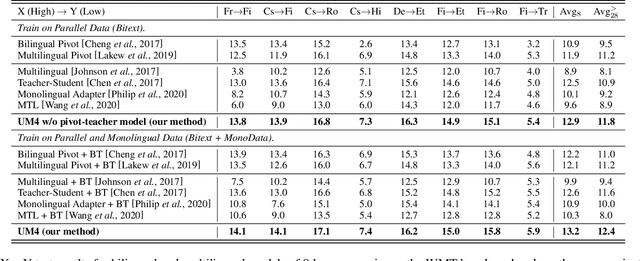

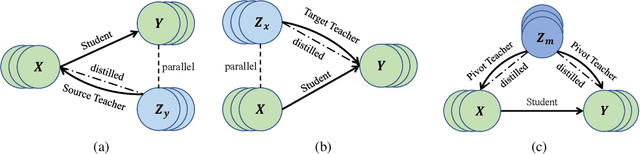

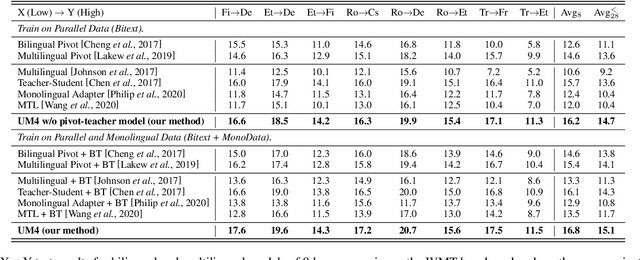

Most translation tasks among languages belong to the zero-resource translation problem, where parallel corpora are unavailable. Compared with two-pass pivot translation, multilingual neural machine translation (MNMT) enables one-pass translation using a shared semantic space for all languages, but it often underperforms the pivot-based method. In this paper, we propose a novel method named Unified Multilingual Multiple teacher-student Model for NMT (UM4). Our method unifies source-teacher, target-teacher, and pivot-teacher models to guide the student model for zero-resource translation. The source teacher and target teacher force the student to learn direct source-to-target translation through distilled knowledge on both the source and target sides. The monolingual corpus is further leveraged by the pivot-teacher model to enhance the student model. Experimental results demonstrate that our model, covering 72 directions, significantly outperforms previous methods on the WMT benchmark.

Modeling Multi-Granularity Hierarchical Features for Relation Extraction

Apr 09, 2022 · Xinnian Liang, Shuangzhi Wu, Mu Li, Zhoujun Li

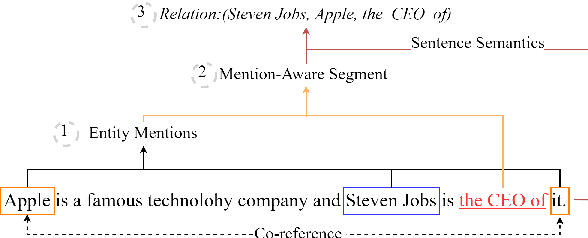

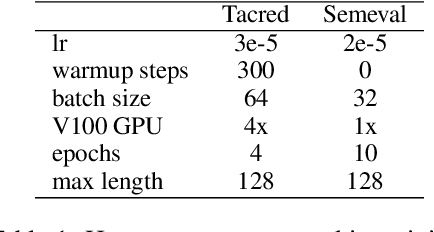

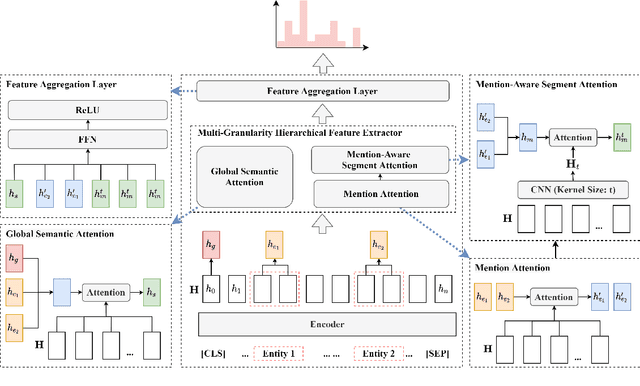

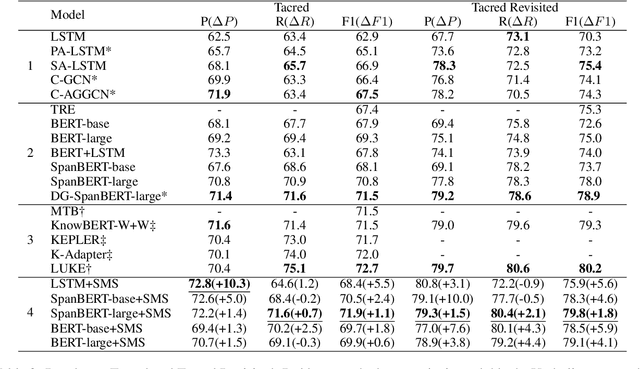

Relation extraction (RE) is a key task in Natural Language Processing (NLP), which aims to extract relations between entity pairs from given texts. Recently, RE has achieved remarkable progress with the development of deep neural networks. Most existing research focuses on constructing explicit structured features using external knowledge such as knowledge graphs and dependency trees. In this paper, we propose a novel method to extract multi-granularity features based solely on the original input sentences. We show that effective structured features can be attained even without external knowledge. Three kinds of features based on the input sentences are fully exploited: entity mention level, segment level, and sentence level. All three are jointly and hierarchically modeled. We evaluate our method on three public benchmarks: SemEval 2010 Task 8, Tacred, and Tacred Revisited. To verify its effectiveness, we apply our method to different encoders such as LSTM and BERT. Experimental results show that our method significantly outperforms existing state-of-the-art models, even those that use external knowledge. Extensive analyses demonstrate that the performance of our model comes from capturing multi-granularity features and modeling their hierarchical structure. Code and data are available at https://github.com/xnliang98/sms.

Task-guided Disentangled Tuning for Pretrained Language Models

Mar 22, 2022 · Jiali Zeng, Yufan Jiang, Shuangzhi Wu, Yongjing Yin, Mu Li

Pretrained language models (PLMs) trained on large-scale unlabeled corpora are typically fine-tuned on task-specific downstream datasets, an approach that has produced state-of-the-art results on various NLP tasks. However, the data discrepancy in domain and scale makes fine-tuning fail to efficiently capture task-specific patterns, especially in the low-data regime. To address this issue, we propose Task-guided Disentangled Tuning (TDT) for PLMs, which enhances the generalization of representations by disentangling task-relevant signals from the entangled representations. For a given task, we introduce a learnable confidence model to detect indicative guidance from context, and further propose a disentangled regularization to mitigate the over-reliance problem. Experimental results on the GLUE and CLUE benchmarks show that TDT gives consistently better results than fine-tuning with different PLMs, and extensive analysis demonstrates the effectiveness and robustness of our method. Code is available at https://github.com/lemon0830/TDT.

Learning Confidence for Transformer-based Neural Machine Translation

Mar 22, 2022 · Yu Lu, Jiali Zeng, Jiajun Zhang, Shuangzhi Wu, Mu Li

Confidence estimation aims to quantify the confidence of the model prediction, providing an expectation of success. A well-calibrated confidence estimate enables accurate failure prediction and proper risk measurement when given noisy samples and out-of-distribution data in real-world settings. However, this task remains a severe challenge for neural machine translation (NMT), where probabilities from the softmax distribution fail to indicate when the model is probably mistaken. To address this problem, we propose learning an unsupervised confidence estimate jointly with the training of the NMT model. We interpret confidence as how many hints the NMT model needs to make a correct prediction: more hints indicate lower confidence. Specifically, the NMT model is given the option to ask for hints to improve translation accuracy at the cost of a slight penalty. We then approximate its level of confidence by counting the number of hints the model uses. We demonstrate that our learned confidence estimate achieves high accuracy on extensive sentence-level and word-level quality estimation tasks. Analytical results verify that our confidence estimate can correctly assess underlying risk in two real-world scenarios: (1) discovering noisy samples and (2) detecting out-of-domain data. We further propose a novel confidence-based instance-specific label smoothing approach based on our learned confidence estimate, which outperforms standard label smoothing.
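One common way to realize "asking for hints at a slight penalty" is to blend the predicted distribution with the ground-truth label and charge a cost for each unit of blending. The sketch below uses that formulation as an assumption; the abstract does not give the loss, so the interpolation rule, the `-log(confidence)` penalty, the `penalty` weight, and the function name `hinted_loss` are illustrative only.

```python
import math


def hinted_loss(probs, target, confidence, penalty=0.1):
    """Loss for one token when the model may lean on the true label.

    probs      : predicted distribution over the vocabulary
    target     : index of the correct token
    confidence : value in (0, 1]; lower means more hints were requested
    penalty    : assumed weight on the cost of asking for hints
    """
    # Blend the prediction with the one-hot ground truth ("the hint").
    mixed = [
        confidence * p + (1.0 - confidence) * (1.0 if i == target else 0.0)
        for i, p in enumerate(probs)
    ]
    nll = -math.log(mixed[target])       # translation loss on the hinted output
    hint_cost = -math.log(confidence)    # zero when no hint is used
    return nll + penalty * hint_cost


probs = [0.7, 0.2, 0.1]
no_hint_correct = hinted_loss(probs, target=0, confidence=1.0)
no_hint_wrong = hinted_loss(probs, target=2, confidence=1.0)
hinted_wrong = hinted_loss(probs, target=2, confidence=0.5)
```

Under this assumed loss, a model that is about to be wrong lowers its total loss by requesting hints, while a model that is already right gains little and only pays the penalty, so the learned `confidence` value tracks how likely the prediction is to be correct.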

One Model, Multiple Tasks: Pathways for Natural Language Understanding

Mar 07, 2022 · Duyu Tang, Fan Zhang, Yong Dai, Cong Zhou, Shuangzhi Wu, Shuming Shi

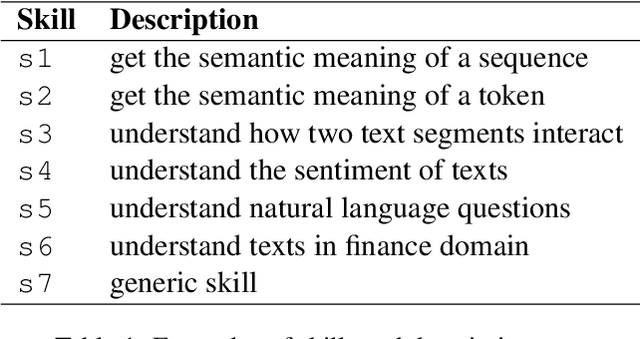

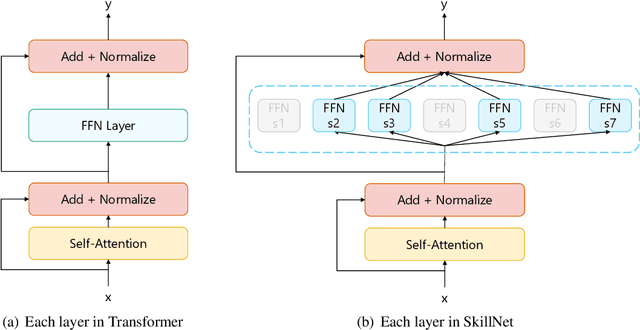

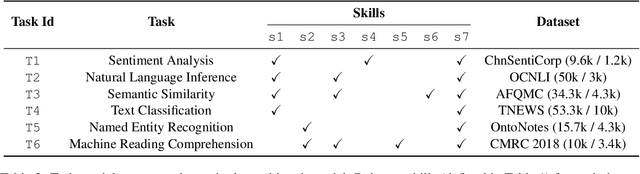

This paper presents a Pathways approach to handle many tasks at once. Our approach is general-purpose and sparse. Unlike prevailing single-purpose models that overspecialize on individual tasks and learn from scratch when extended to new tasks, our approach is general-purpose, with the ability to stitch together existing skills to learn new tasks more effectively. Unlike traditional dense models that always activate all the model parameters, our approach is sparsely activated: only relevant parts of the model (like pathways through the network) are activated. We take natural language understanding as a case study and define a set of skills like *the skill of understanding the sentiment of text* and *the skill of understanding natural language questions*. These skills can be reused and combined to support many different tasks and situations. We develop our system using Transformer as the backbone. For each skill, we implement skill-specific feed-forward networks, which are activated only if the skill is relevant to the task. An appealing feature of our model is that it not only supports sparsely activated fine-tuning, but also allows us to pretrain skills in the same sparse way with masked language modeling and next sentence prediction. We call this model **SkillNet**. We have three major findings. First, with only one model checkpoint, SkillNet performs better than task-specific fine-tuning and two multi-task learning baselines (i.e., a dense model and a Mixture-of-Experts model) on six tasks. Second, sparsely activated pre-training further improves the overall performance. Third, SkillNet significantly outperforms baseline systems when extended to new tasks.

Pretraining without Wordpieces: Learning Over a Vocabulary of Millions of Words

Feb 24, 2022 · Zhangyin Feng, Duyu Tang, Cong Zhou, Junwei Liao, Shuangzhi Wu, Xiaocheng Feng, Bing Qin, Yunbo Cao, Shuming Shi

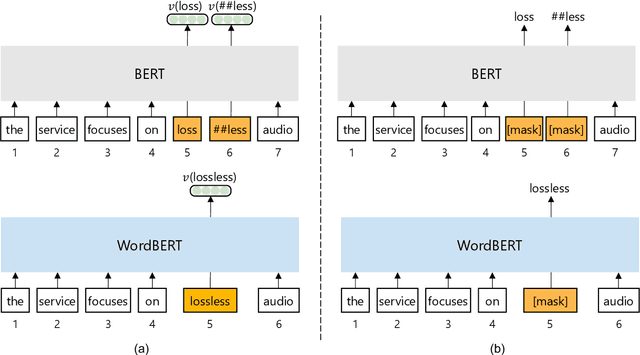

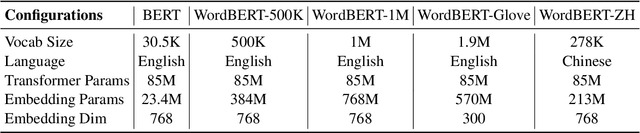

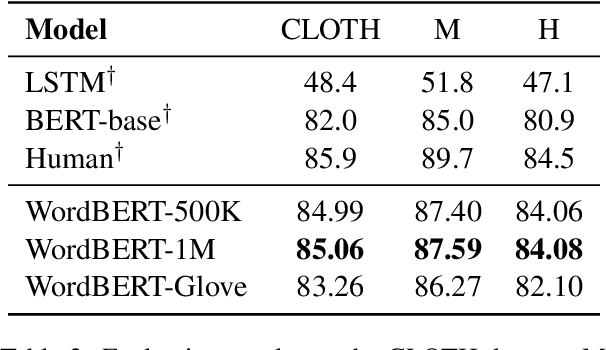

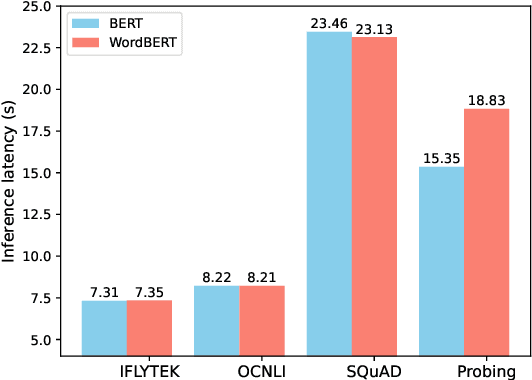

The standard BERT adopts subword-based tokenization, which may break a word into two or more wordpieces (e.g., converting "lossless" to "loss" and "less"). This causes difficulties in the following situations: (1) what is the best way to obtain the contextual vector of a word that is divided into multiple wordpieces? (2) how can a word be predicted via a cloze test without knowing the number of wordpieces in advance? In this work, we explore the possibility of developing a BERT-style pretrained model over a vocabulary of words instead of wordpieces. We call this word-level BERT model WordBERT. We train models with different vocabulary sizes, initialization configurations, and languages. Results show that, compared to the standard wordpiece-based BERT, WordBERT makes significant improvements on cloze tests and machine reading comprehension. On many other natural language understanding tasks, including POS tagging, chunking, and NER, WordBERT consistently performs better than BERT. Model analysis indicates that the major advantage of WordBERT over BERT lies in its understanding of low-frequency and rare words. Furthermore, since the pipeline is language-independent, we train WordBERT for Chinese and obtain significant gains on five natural language understanding datasets. Lastly, the analysis of inference speed shows that WordBERT has a time cost comparable to BERT on natural language understanding tasks.

Unsupervised Keyphrase Extraction by Jointly Modeling Local and Global Context

Sep 15, 2021 · Xinnian Liang, Shuangzhi Wu, Mu Li, Zhoujun Li

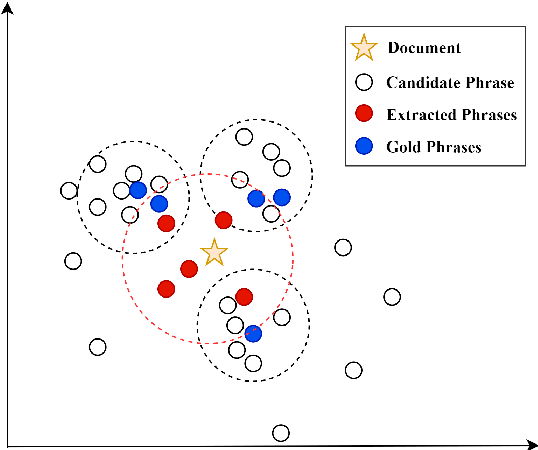

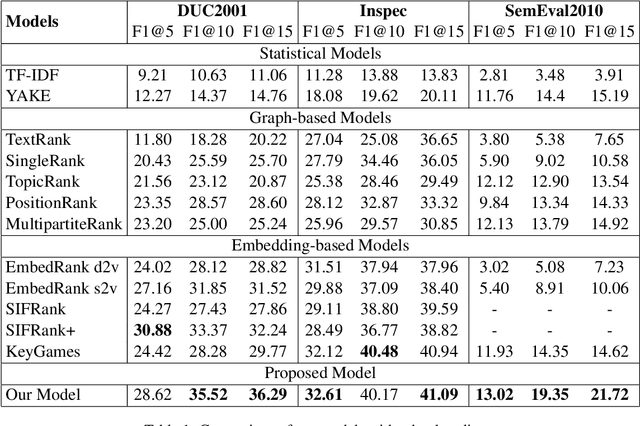

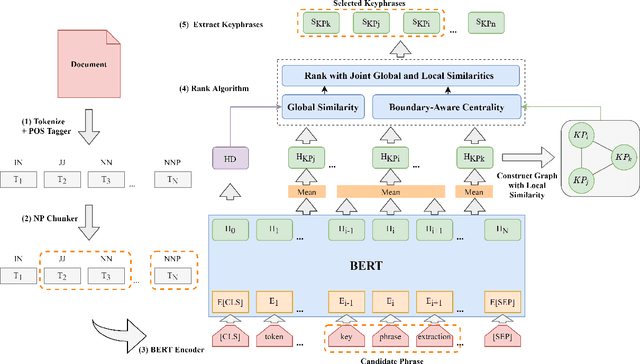

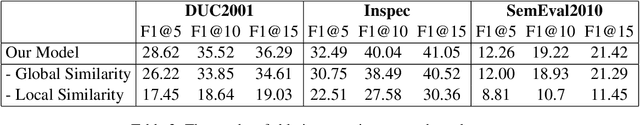

Embedding-based methods are widely used for unsupervised keyphrase extraction (UKE) tasks. Generally, these methods simply calculate similarities between phrase embeddings and the document embedding, which is insufficient to capture the different contexts needed for a more effective UKE model. In this paper, we propose a novel method for UKE in which local and global contexts are jointly modeled. From the global view, we calculate the similarity between a given phrase and the whole document in the vector space, as traditional embedding-based models do. From the local view, we first build a graph structure based on the document, where phrases are regarded as vertices and the edges are similarities between vertices. Then, we propose a new centrality computation method to capture local salient information based on the graph structure. Finally, we combine the modeling of global and local context for ranking. We evaluate our models on three public benchmarks (Inspec, DUC 2001, SemEval 2010) and compare them with existing state-of-the-art models. The results show that our model outperforms most models while generalizing better to input documents of different domains and lengths. An additional ablation study shows that both the local and global information are crucial for unsupervised keyphrase extraction tasks.
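The global and local views described above can be illustrated with a small sketch. It is not the paper's method: plain degree centrality stands in for the new centrality computation the abstract mentions, multiplying the two scores is an assumed combination rule, and the toy two-dimensional embeddings and the name `rank_phrases` are made up for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_phrases(phrase_vecs, doc_vec):
    """Rank candidate phrases by combining global and local relevance."""
    names = list(phrase_vecs)
    # Global view: similarity between each phrase and the whole document.
    global_score = {p: cosine(phrase_vecs[p], doc_vec) for p in names}
    # Local view: phrases are graph vertices, edge weights are similarities;
    # degree centrality is used here as a simple stand-in.
    local_score = {
        p: sum(cosine(phrase_vecs[p], phrase_vecs[q]) for q in names if q != p)
        for p in names
    }
    # Assumed combination rule: product of the two scores.
    combined = {p: global_score[p] * local_score[p] for p in names}
    return sorted(names, key=combined.get, reverse=True)


# Toy embeddings, invented for illustration only.
phrase_vecs = {
    "neural network": [1.0, 0.1],
    "deep learning": [0.9, 0.2],
    "coffee break": [0.0, 1.0],
}
doc_vec = [1.0, 0.2]
ranking = rank_phrases(phrase_vecs, doc_vec)
```

In this toy setup the off-topic phrase scores low on both views, so it lands at the bottom of `ranking`; a phrase that is close to the document but isolated from the other candidates would be demoted by the local term alone.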

Improving Machine Reading Comprehension with Single-choice Decision and Transfer Learning

Nov 06, 2020 · Yufan Jiang, Shuangzhi Wu, Jing Gong, Yahui Cheng, Peng Meng, Weiliang Lin, Zhibo Chen, Mu Li

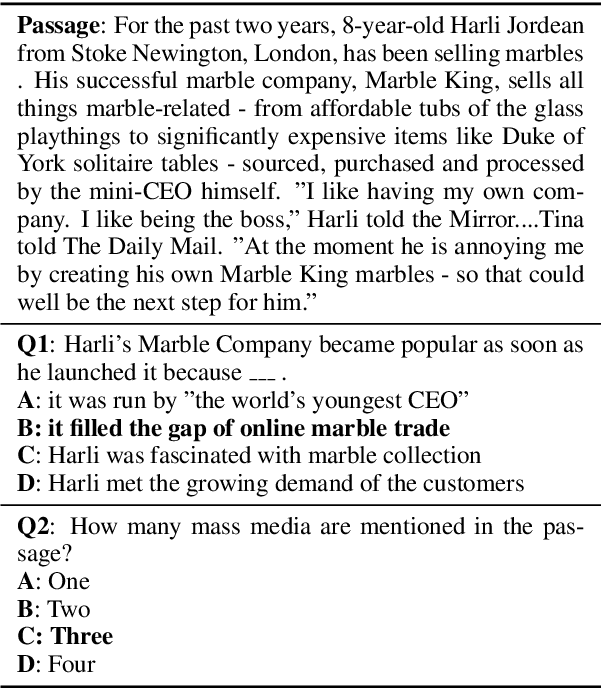

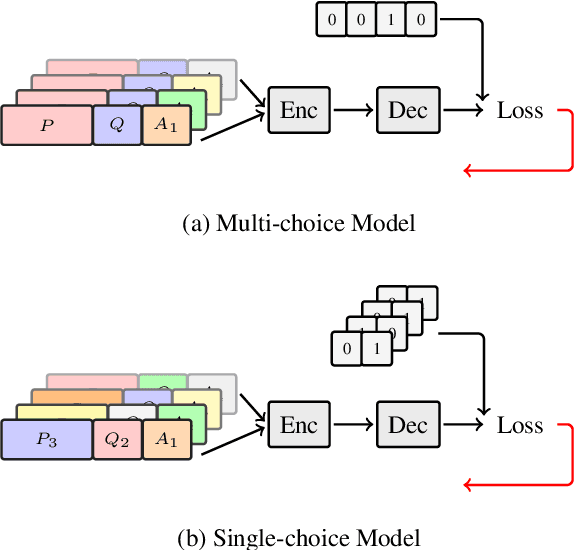

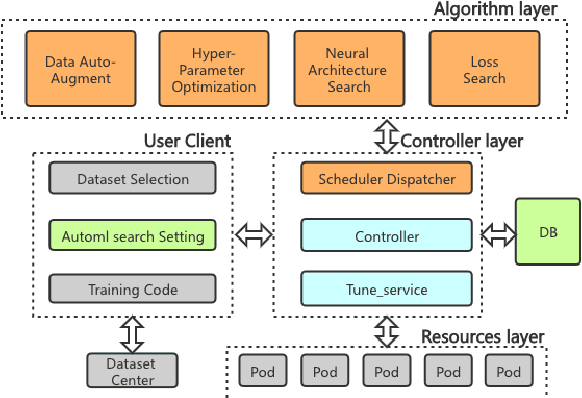

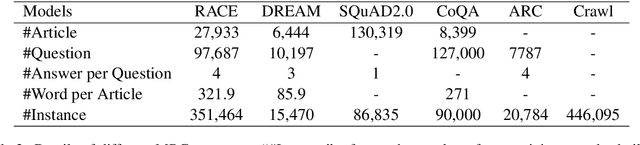

Multi-choice Machine Reading Comprehension (MMRC) aims to select the correct answer from a set of options based on a given passage and question. Because of the task-specific nature of MMRC, it is non-trivial to transfer knowledge from other MRC tasks such as SQuAD and Dream. In this paper, we simply recast multi-choice as single-choice by training a binary classifier to distinguish whether a given answer is correct. We then select the option with the highest confidence score. We build our model on ALBERT-xxlarge and evaluate it on the RACE dataset. During training, we adopt an AutoML strategy to tune the parameters. Experimental results show that the single-choice formulation is better than the multi-choice one. In addition, by transferring knowledge from other kinds of MRC tasks, our model achieves new state-of-the-art results in both single and ensemble settings.
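The reformulation described above can be sketched in a few lines. This is an illustration under stated assumptions: `toy_score` is a hypothetical word-overlap stand-in for the trained binary classifier (the paper uses ALBERT-xxlarge), and the function names and the toy passage are invented for the example.

```python
def to_single_choice(passage, question, options, answer_index):
    """Turn one multi-choice item into one binary example per option."""
    return [
        ((passage, question, option), int(i == answer_index))
        for i, option in enumerate(options)
    ]


def predict(passage, question, options, score):
    """Score each option independently and return the most confident index."""
    confidences = [score(passage, question, option) for option in options]
    return max(range(len(options)), key=confidences.__getitem__)


def toy_score(passage, question, option):
    """Hypothetical classifier: fraction of option words found in the passage."""
    words = option.lower().split()
    passage_words = set(passage.lower().split())
    return sum(w in passage_words for w in words) / len(words)


passage = "the cat sat on the mat near the door"
question = "where did the cat sit"
options = ["on the mat", "under the table", "in the garden"]

examples = to_single_choice(passage, question, options, answer_index=0)
labels = [label for _, label in examples]
best = predict(passage, question, options, toy_score)
```

Because each (passage, question, option) triple becomes an ordinary binary classification example, training data from other MRC tasks can be cast into the same shape, which is what makes the knowledge transfer mentioned in the abstract straightforward.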
