Signal Processing Grand Challenge 2023 -- e-Prevention: Sleep Behavior as an Indicator of Relapses in Psychotic Patients

Apr 17, 2023 · Kleanthis Avramidis, Kranti Adsul, Digbalay Bose, Shrikanth Narayanan

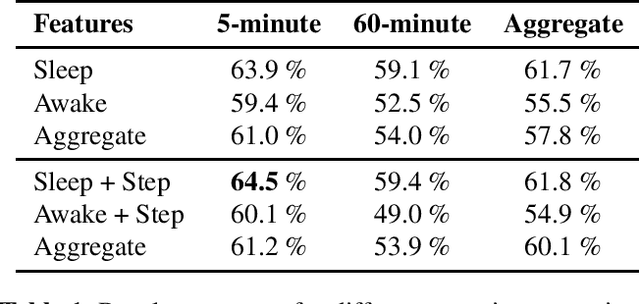

This paper presents the approach and results of USC SAIL's submission to the Signal Processing Grand Challenge 2023 - e-Prevention (Task 2), on detecting relapses in psychotic patients. Relapse prediction has proven to be challenging, primarily due to the heterogeneity of symptoms and responses to treatment between individuals. We address these challenges by investigating the use of sleep behavior features to estimate relapse days as outliers in an unsupervised machine learning setting. We extract informative features from human activity and heart rate data collected in the wild, and evaluate various combinations of feature types and time resolutions. We found that short-time sleep behavior features outperformed both their awake counterparts and features computed over larger time intervals. Our submission was ranked 3rd on the task's official leaderboard, demonstrating the potential of such features as an objective and non-invasive predictor of psychotic relapses.
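The idea of treating relapse days as outliers can be illustrated with a small sketch. The abstract does not describe the model used in the submission, so the code below swaps in a generic robust outlier rule (a modified z-score based on the median and the median absolute deviation). The function name `robust_outlier_days`, the threshold of 3.5, and the heart rate values are all hypothetical and chosen only for illustration.

```python
from statistics import median


def robust_outlier_days(values, threshold=3.5):
    """Return indices of days whose feature value is a robust outlier.

    Uses the modified z-score 0.6745 * |x - median| / MAD.
    This is an illustrative stand-in, not the method from the paper.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # All values (nearly) identical: nothing can be flagged.
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical nightly mean heart rate during sleep (bpm), one value per day.
sleep_hr = [58, 60, 59, 61, 57, 60, 59, 78, 58, 60]
flagged = robust_outlier_days(sleep_hr)
print(flagged)
```

In this toy series only the day with a sleeping heart rate of 78 bpm is flagged. A real system would work on multivariate features at several time resolutions, as the abstract describes.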

Designing and Evaluating Speech Emotion Recognition Systems: A reality check case study with IEMOCAP

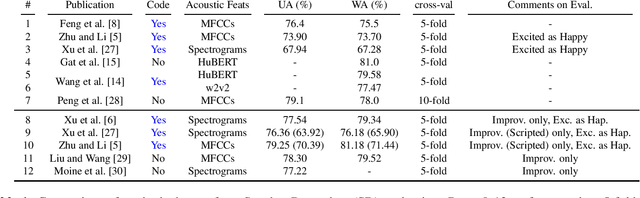

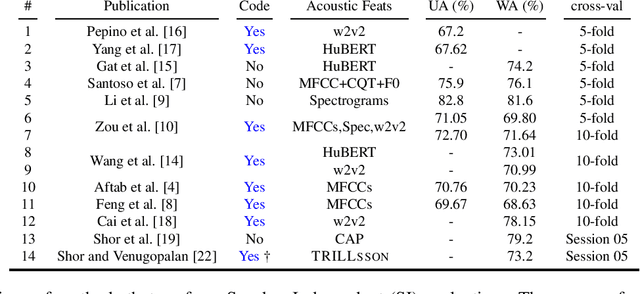

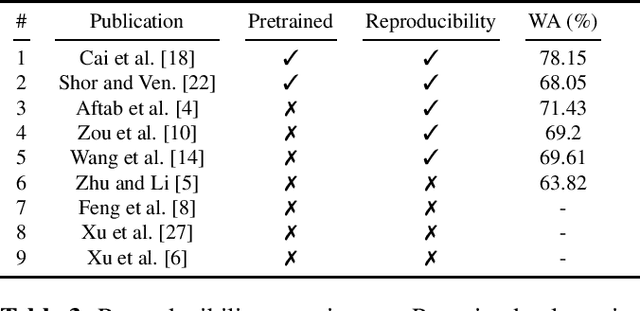

Apr 03, 2023 · Nikolaos Antoniou, Athanasios Katsamanis, Theodoros Giannakopoulos, Shrikanth Narayanan

There is an imminent need for guidelines and standard test sets to allow direct and fair comparisons of speech emotion recognition (SER) systems. While resources such as the Interactive Emotional Dyadic Motion Capture (IEMOCAP) database have emerged as widely adopted reference corpora for researchers to develop and test models for SER, published work reveals a wide range of assumptions and variety in its use that challenge reproducibility and generalization. Based on a critical review of the latest advances in SER using IEMOCAP as the use case, our work aims at two contributions. First, using an analysis of the recent literature, including assumptions made and metrics used therein, we provide a set of SER evaluation guidelines. Second, using recent publications with open-sourced implementations, we focus on reproducibility assessment in SER.

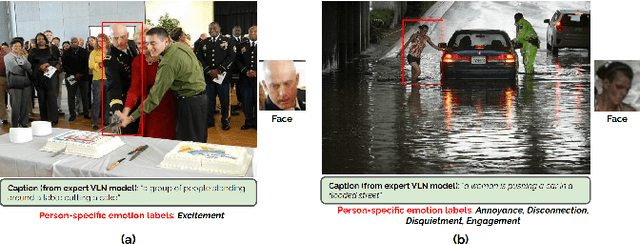

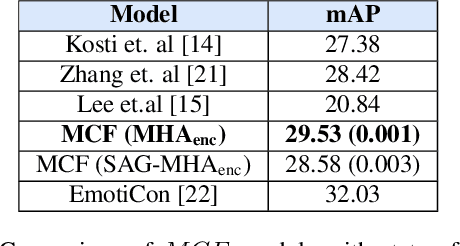

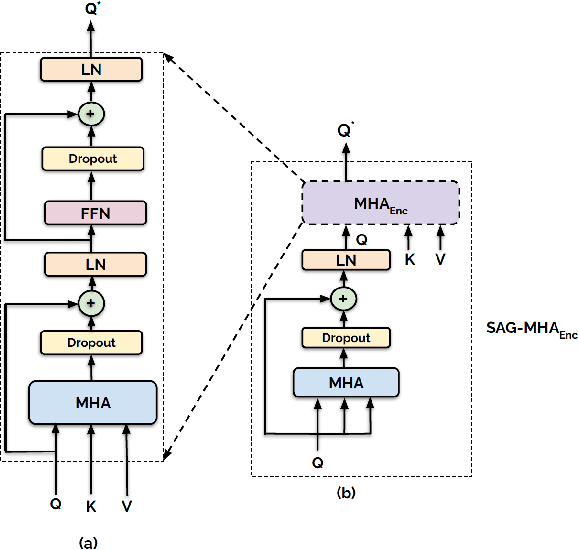

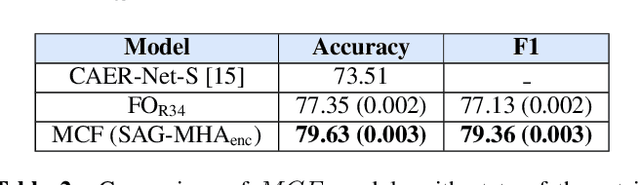

Contextually-rich human affect perception using multimodal scene information

Mar 13, 2023 · Digbalay Bose, Rajat Hebbar, Krishna Somandepalli, Shrikanth Narayanan

The process of human affect understanding involves the ability to infer person-specific emotional states from various sources including images, speech, and language. Affect perception from images has predominantly focused on expressions extracted from salient face crops. However, emotions perceived by humans rely on multiple contextual cues including social settings, foreground interactions, and ambient visual scenes. In this work, we leverage pretrained vision-language (VLN) models to extract descriptions of foreground context from images. Further, we propose a multimodal context fusion (MCF) module to combine foreground cues with the visual scene and person-based contextual information for emotion prediction. We show the effectiveness of our proposed modular design on two datasets associated with natural scenes and TV shows.

A dataset for Audio-Visual Sound Event Detection in Movies

Feb 14, 2023 · Rajat Hebbar, Digbalay Bose, Krishna Somandepalli, Veena Vijai, Shrikanth Narayanan

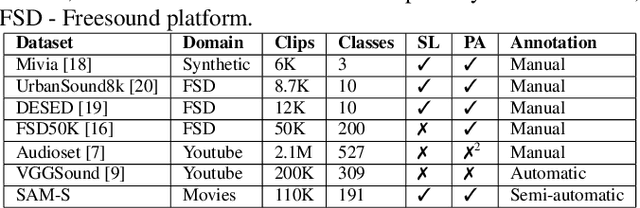

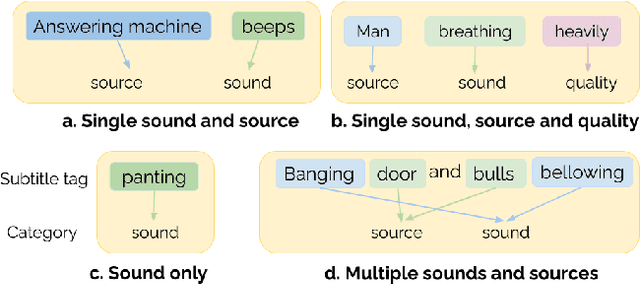

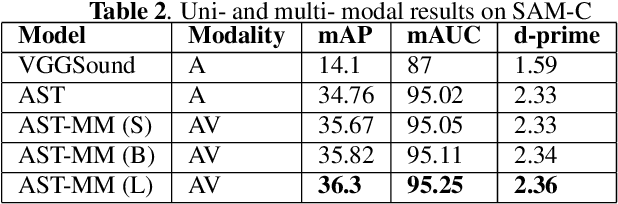

Audio event detection is a widely studied audio processing task, with applications ranging from self-driving cars to healthcare. In-the-wild datasets such as AudioSet have propelled research in this field. However, many efforts typically involve manual annotation and verification, which is expensive to perform at scale. Movies depict various real-life and fictional scenarios, which makes them a rich resource for mining a wide range of audio events. In this work, we present a dataset of audio events called Subtitle-Aligned Movie Sounds (SAM-S). We use publicly available closed-caption transcripts to automatically mine over 110K audio events from 430 movies. We identify three dimensions to categorize audio events (sound, source, and quality) and present the steps involved to produce a final taxonomy of 245 sounds. We discuss the choices involved in generating the taxonomy, and also highlight the human-centered nature of sounds in our dataset. We establish a baseline performance for audio-only sound classification of 34.76% mean average precision and show that incorporating visual information can further improve the performance by about 5%. Data and code are made available for research at https://github.com/usc-sail/mica-subtitle-aligned-movie-sounds
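For readers unfamiliar with the reported metric, the following is a minimal sketch of how mean average precision can be computed for a multi-class sound tagger. It is not taken from the SAM-S code base. The function names and the toy labels and scores are hypothetical, and the AP definition used here (mean of the precision values at each rank where a positive item appears) is one common variant.

```python
def average_precision(labels, scores):
    """Average precision for one class.

    labels: 0/1 ground truth per clip; scores: model confidence per clip.
    AP is the mean of precision@k over the ranks k that hold a positive.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precisions = []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(per_class):
    """Unweighted mean of per-class AP; per_class is a list of (labels, scores)."""
    return sum(average_precision(l, s) for l, s in per_class) / len(per_class)


# Two hypothetical sound classes with made-up labels and scores.
class_a = ([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])
class_b = ([0, 1, 1], [0.9, 0.6, 0.3])
map_score = mean_average_precision([class_a, class_b])
print(round(map_score, 4))
```

In the toy data, class A has an AP of about 0.83 and class B about 0.58, so the mean lands near 0.71.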

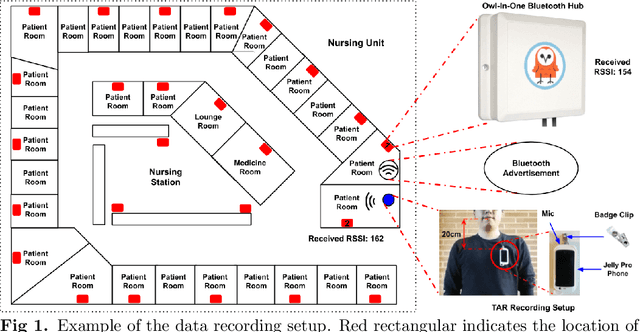

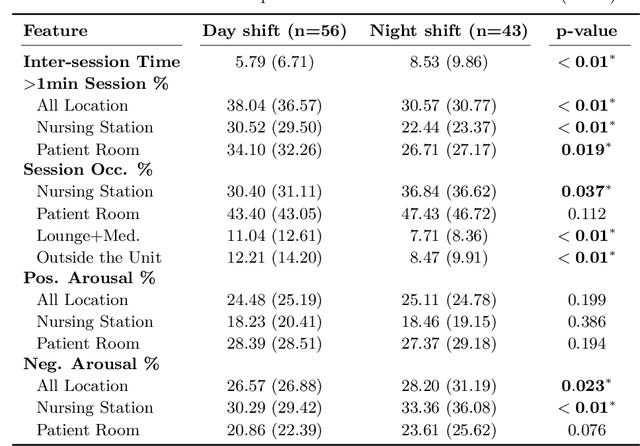

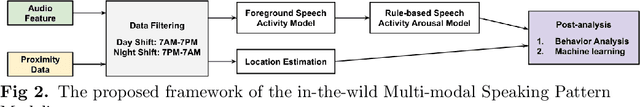

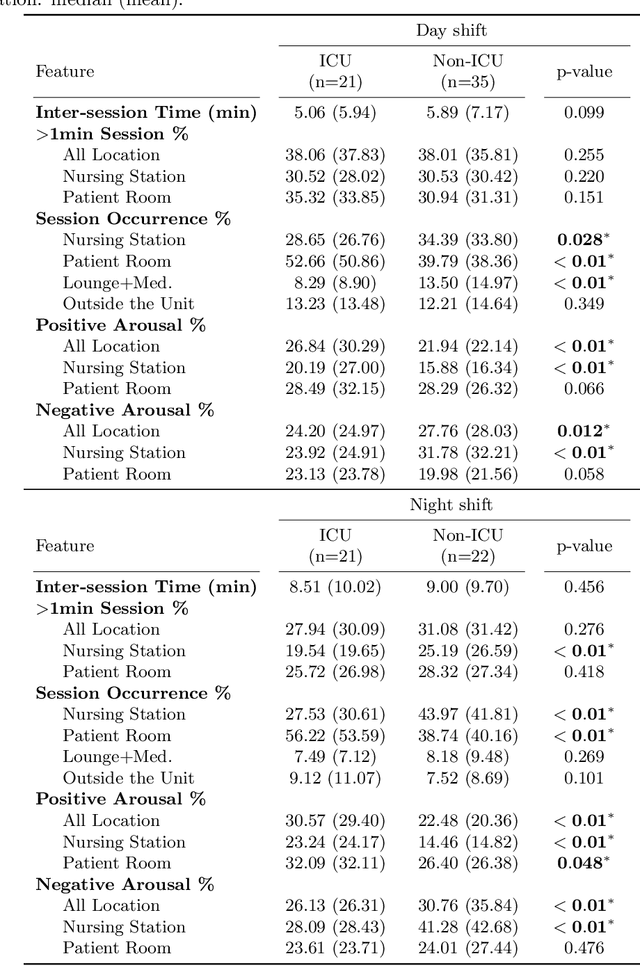

Exploring Workplace Behaviors through Speaking Patterns using Large-scale Multimodal Wearable Recordings: A Study of Healthcare Providers

Dec 18, 2022 · Tiantian Feng, Shrikanth Narayanan

Interpersonal spoken communication is central to human interaction and the exchange of information. Such interactive processes involve not only speech and spoken language but also non-verbal cues such as hand gestures, facial expressions, and nonverbal vocalization, which are used to express feelings and provide feedback. These multimodal communication signals carry a variety of information about people: traits like gender and age, as well as physical and psychological states and behavior. This work uses wearable multimodal sensors to investigate interpersonal communication behaviors, focusing on speaking patterns among healthcare providers, particularly nurses. We analyze longitudinal data collected from 99 nurses in a large hospital setting over ten weeks. The results indicate that speaking patterns differ across shift schedules and working units. Moreover, results show that speaking patterns combined with physiological measures can be used to predict affect measures and life satisfaction scores. The implementation of this work can be accessed at https://github.com/usc-sail/tiles-audio-arousal.

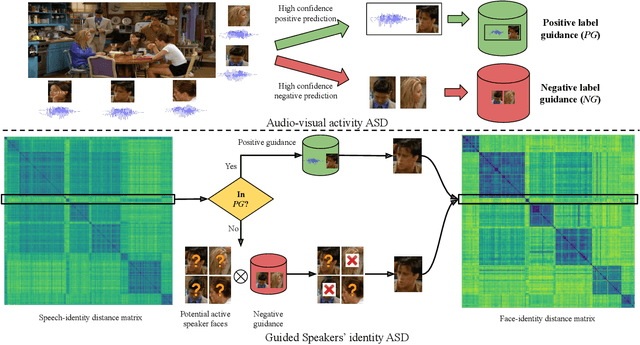

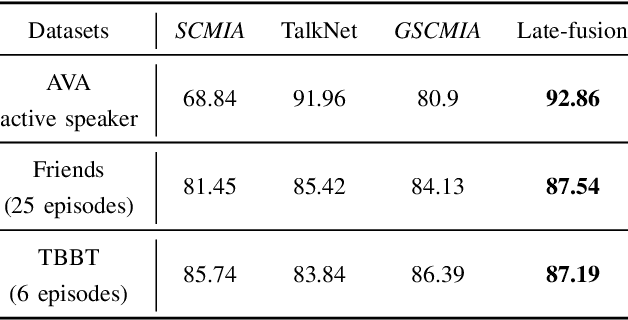

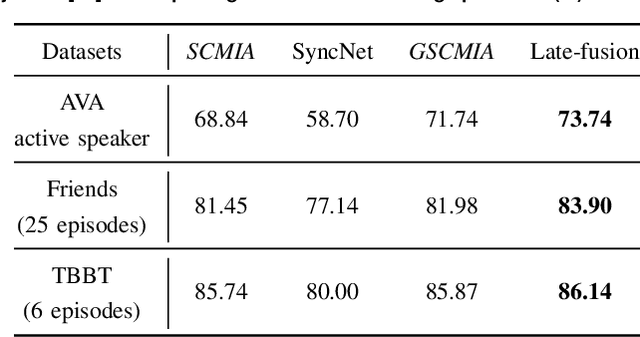

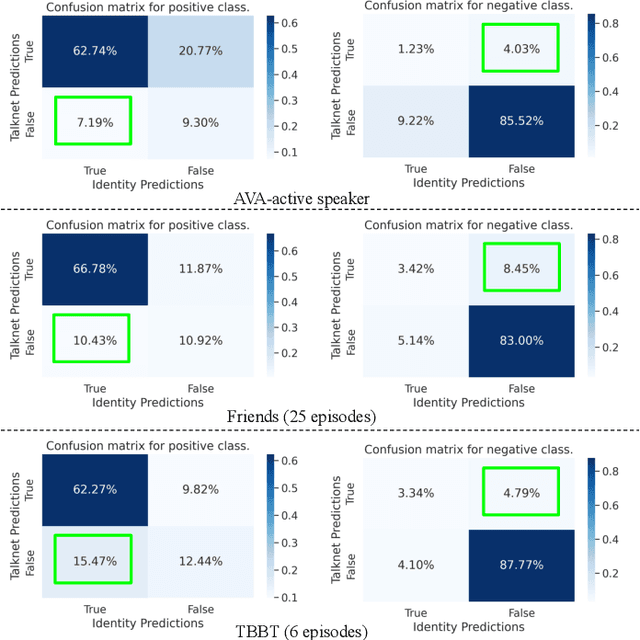

Audio-Visual Activity Guided Cross-Modal Identity Association for Active Speaker Detection

Dec 01, 2022 · Rahul Sharma, Shrikanth Narayanan

Active speaker detection in videos addresses associating a source face, visible in the video frames, with the underlying speech in the audio modality. The two primary sources of information to derive such a speech-face relationship are i) visual activity and its interaction with the speech signal and ii) co-occurrences of speakers' identities across modalities in the form of face and speech. The two approaches have their limitations: the audio-visual activity models get confused with other frequently occurring vocal activities, such as laughing and chewing, while the speakers' identity-based methods are limited to videos having enough disambiguating information to establish a speech-face association. Since the two approaches are independent, we investigate their complementary nature in this work. We propose a novel unsupervised framework to guide the speakers' cross-modal identity association with the audio-visual activity for active speaker detection. Through experiments on entertainment media videos from two benchmark datasets, the AVA active speaker (movies) and Visual Person Clustering Dataset (TV shows), we show that a simple late fusion of the two approaches enhances the active speaker detection performance.
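The late fusion mentioned above can be pictured with a small sketch. The abstract does not state the fusion rule, so the weighted average below is an assumption, as are the function names, the weight of 0.5, and the per-face scores, which are invented for illustration.

```python
def late_fusion(activity_scores, identity_scores, weight=0.5):
    """Combine per-face scores from two independent models.

    weight is the share given to the audio-visual activity model;
    the rest goes to the cross-modal identity model (assumed rule).
    """
    if len(activity_scores) != len(identity_scores):
        raise ValueError("both models must score the same set of faces")
    return [
        weight * a + (1.0 - weight) * b
        for a, b in zip(activity_scores, identity_scores)
    ]


def pick_active_speaker(scores):
    """Index of the face with the highest score."""
    return max(range(len(scores)), key=scores.__getitem__)


# Three hypothetical faces visible in one frame.
activity = [0.6, 0.7, 0.1]   # face 1 may be laughing or chewing
identity = [0.9, 0.2, 0.3]   # voice matches face 0 best
fused = late_fusion(activity, identity)
speaker = pick_active_speaker(fused)
print(speaker)
```

Here the activity model alone would pick face 1, while the fused scores pick face 0, which is the kind of complementary behavior the abstract refers to.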

Can Knowledge of End-to-End Text-to-Speech Models Improve Neural MIDI-to-Audio Synthesis Systems?

Nov 25, 2022 · Xuan Shi, Erica Cooper, Xin Wang, Junichi Yamagishi, Shrikanth Narayanan

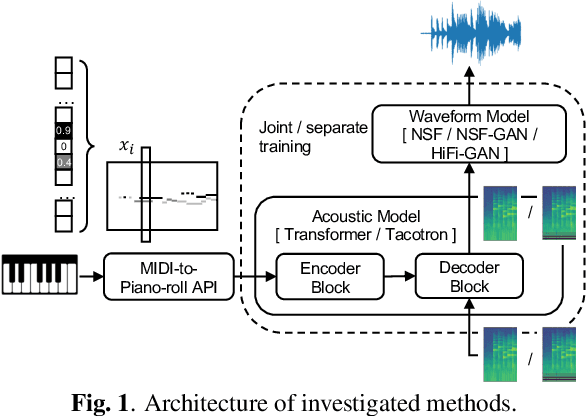

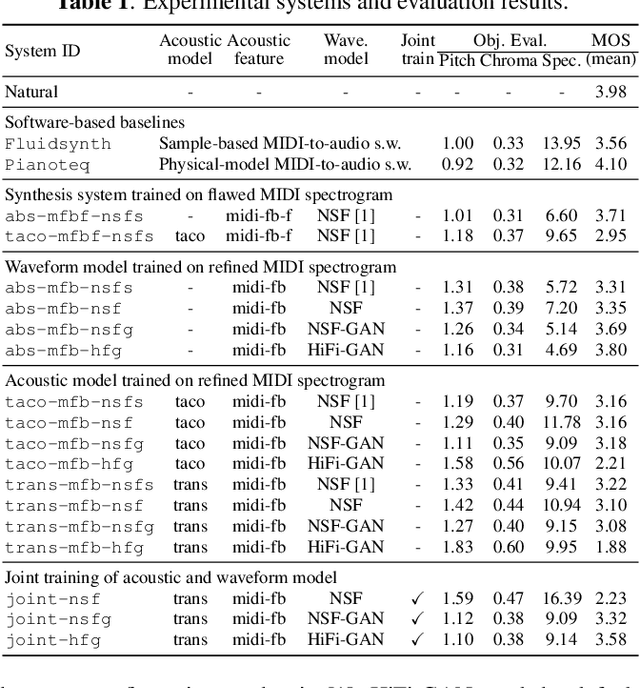

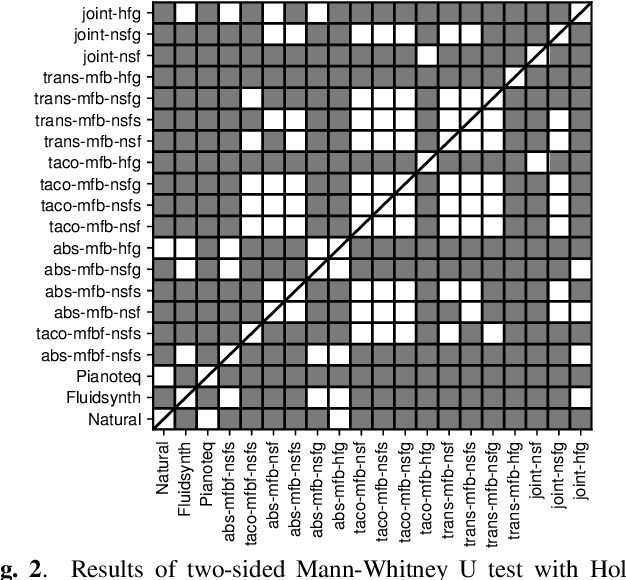

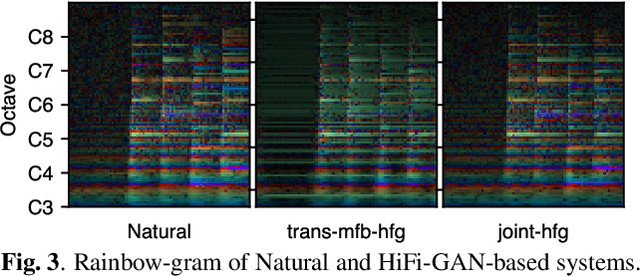

Given the similarity between music and speech synthesis from symbolic input and the rapid development of text-to-speech (TTS) techniques, it is worthwhile to explore ways to improve MIDI-to-audio performance by borrowing from TTS techniques. In this study, we analyze the shortcomings of a TTS-based MIDI-to-audio system and improve it in terms of feature computation, model selection, and training strategy, aiming to synthesize highly natural-sounding audio. Moreover, we conducted an extensive model evaluation through listening tests, pitch measurement, and spectrogram analysis. This work not only demonstrates synthesis of highly natural music but also offers a thorough analytical approach and useful outcomes for the community. Our code and pre-trained models are open sourced at https://github.com/nii-yamagishilab/midi-to-audio.

A Context-Aware Computational Approach for Measuring Vocal Entrainment in Dyadic Conversations

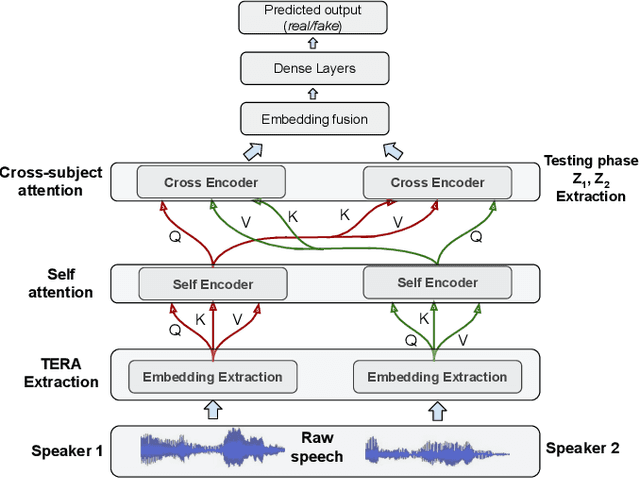

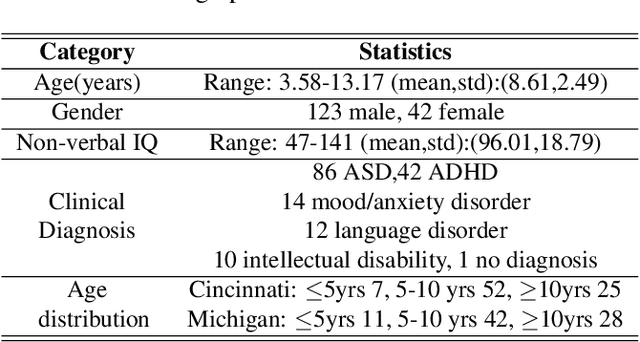

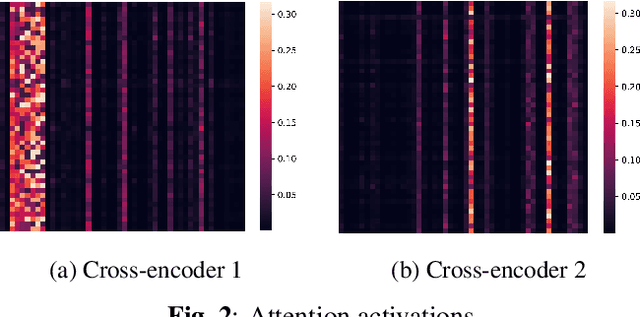

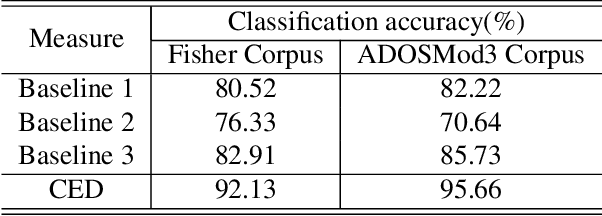

Nov 07, 2022 · Rimita Lahiri, Md Nasir, Catherine Lord, So Hyun Kim, Shrikanth Narayanan

Vocal entrainment is a social adaptation mechanism in human interaction, knowledge of which can offer useful insights into an individual's cognitive-behavioral characteristics. We propose a context-aware approach for measuring vocal entrainment in dyadic conversations. We use conformers (a combination of convolutional network and transformer) for capturing both short-term and long-term conversational context to model entrainment patterns in interactions across different domains. Specifically, we use cross-subject attention layers to learn intra- as well as inter-personal signals from dyadic conversations. We first validate the proposed method based on classification experiments to distinguish between real (consistent) and fake (inconsistent/shuffled) conversations. Experimental results on interactions involving individuals with Autism Spectrum Disorder also show evidence of a statistically significant association between the introduced entrainment measure and clinical scores relevant to symptoms, including across gender and age groups.

Using Emotion Embeddings to Transfer Knowledge Between Emotions, Languages, and Annotation Formats

Oct 31, 2022 · Georgios Chochlakis, Gireesh Mahajan, Sabyasachee Baruah, Keith Burghardt, Kristina Lerman, Shrikanth Narayanan

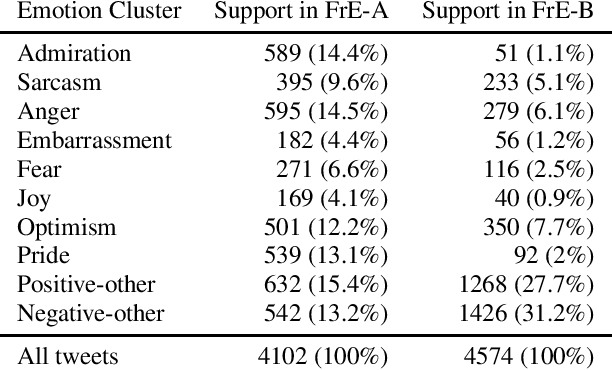

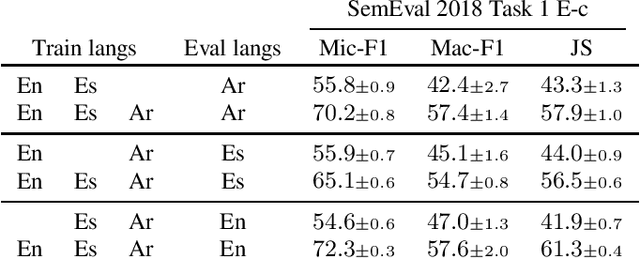

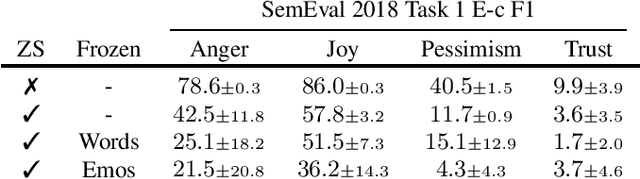

The need for emotional inference from text continues to diversify as more and more disciplines integrate emotions into their theories and applications. These needs include inferring different emotion types, handling multiple languages, and supporting different annotation formats. A shared model between different configurations would enable the sharing of knowledge and a decrease in training costs, and would simplify the process of deploying emotion recognition models in novel environments. In this work, we study how we can build a single model that can transition between these different configurations by leveraging multilingual models and Demux, a transformer-based model whose input includes the emotions of interest, enabling us to dynamically change the emotions predicted by the model. Demux also produces emotion embeddings, and performing operations on them allows us to transition to clusters of emotions by pooling the embeddings of each cluster. We show that Demux can simultaneously transfer knowledge in a zero-shot manner to a new language, to a novel annotation format, and to unseen emotions. Code is available at https://github.com/gchochla/Demux-MEmo.
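The step of pooling emotion embeddings into cluster embeddings can be sketched as follows. The abstract only says the embeddings are pooled, so the use of mean pooling is an assumption, and the function name `pool_cluster`, the two-dimensional vectors, and the cluster membership are hypothetical. This is not code from the Demux-MEmo repository.

```python
def pool_cluster(embeddings, members):
    """Mean-pool the embeddings of the emotions that belong to one cluster.

    embeddings: dict mapping emotion name -> list of floats (same length).
    members: names of the emotions in the cluster.
    """
    vectors = [embeddings[name] for name in members]
    dim = len(vectors[0])
    return [sum(vec[i] for vec in vectors) / len(vectors) for i in range(dim)]


# Toy per-emotion embeddings (real ones would come from the model).
emotion_embeddings = {
    "joy": [1.0, 0.0],
    "optimism": [0.0, 1.0],
    "anger": [-1.0, 0.0],
}

# A hypothetical "positive" cluster built from two fine-grained emotions.
positive = pool_cluster(emotion_embeddings, ["joy", "optimism"])
print(positive)
```

A cluster embedding built this way could then be scored like any single-emotion embedding, which is one way to move from fine-grained labels to a coarser annotation format.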

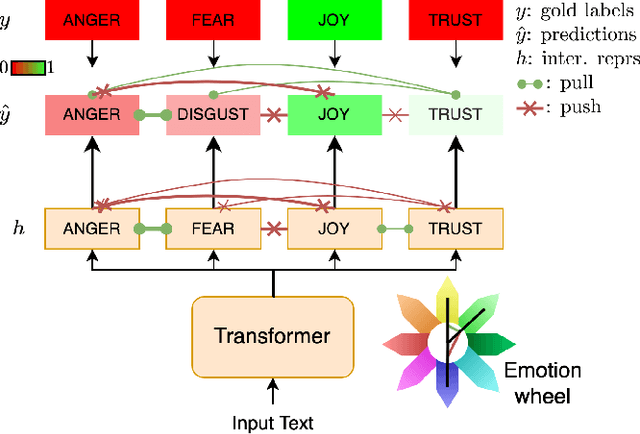

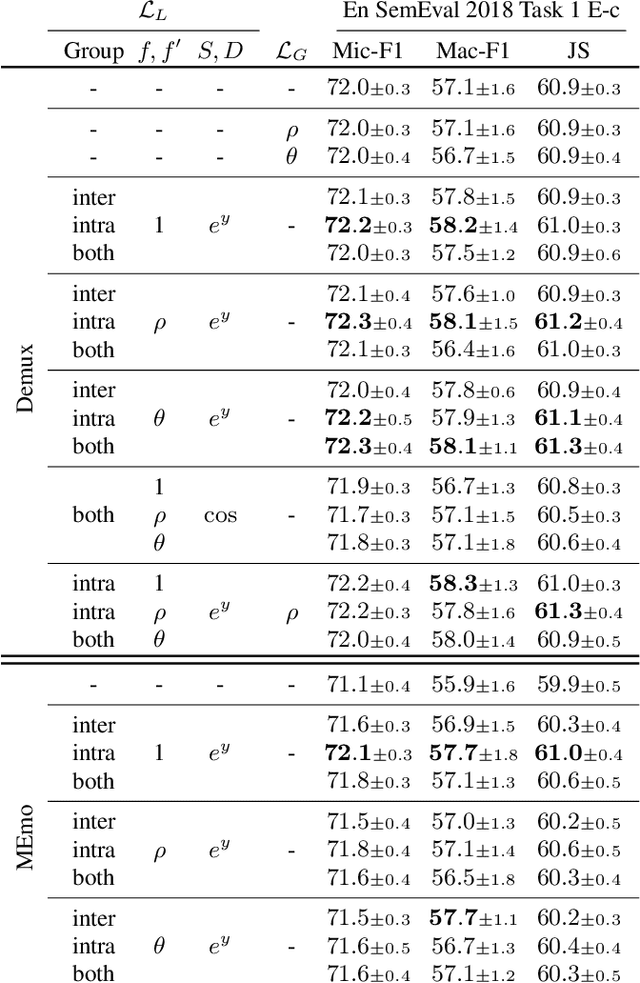

Leveraging Label Correlations in a Multi-label Setting: A Case Study in Emotion

Oct 28, 2022 · Georgios Chochlakis, Gireesh Mahajan, Sabyasachee Baruah, Keith Burghardt, Kristina Lerman, Shrikanth Narayanan

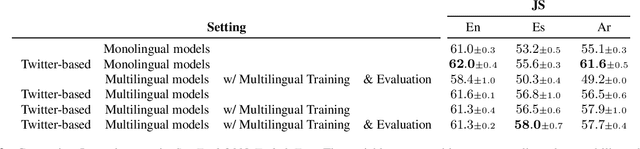

Detecting emotions expressed in text has become critical to a range of fields. In this work, we investigate ways to exploit label correlations in multi-label emotion recognition models to improve emotion detection. First, we develop two modeling approaches to the problem in order to capture word associations of the emotion words themselves, by either including the emotions in the input, or by leveraging Masked Language Modeling (MLM). Second, we integrate pairwise constraints of emotion representations as regularization terms alongside the classification loss of the models. We split these terms into two categories, local and global. The former dynamically change based on the gold labels, while the latter remain static during training. We demonstrate state-of-the-art performance across Spanish, English, and Arabic in SemEval 2018 Task 1 E-c using monolingual BERT-based models. On top of better performance, we also demonstrate improved robustness. Code is available at https://github.com/gchochla/Demux-MEmo.
