A Review of Robot Learning for Manipulation: Challenges, Representations, and Algorithms

Jul 11, 2019 · Oliver Kroemer, Scott Niekum, George Konidaris

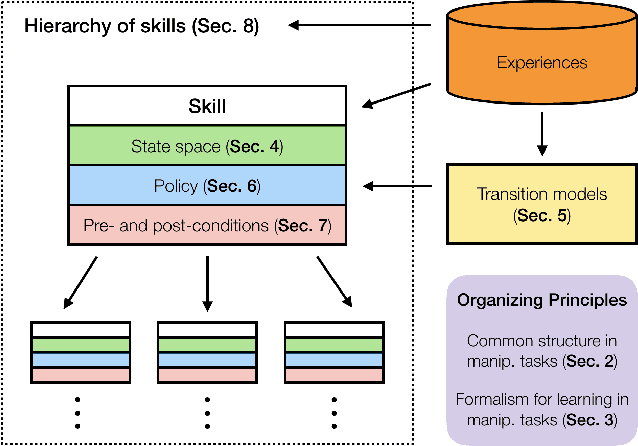

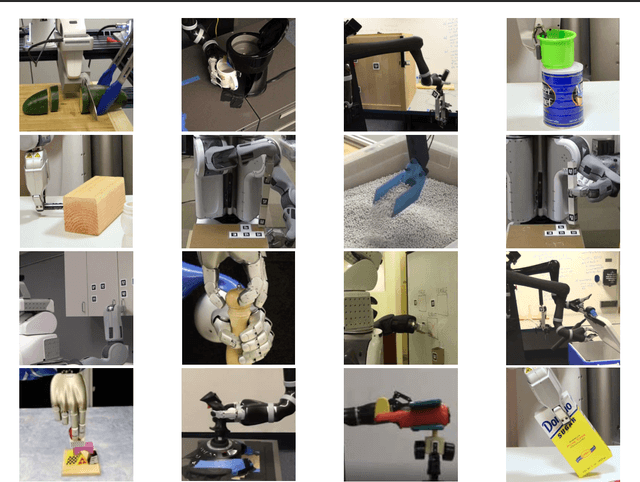

A key challenge in intelligent robotics is creating robots that are capable of directly interacting with the world around them to achieve their goals. The last decade has seen substantial growth in research on the problem of robot manipulation, driven by the increasing availability of affordable robot arms and grippers. Learning will be central to such autonomous systems, as the real world contains too much variation for a robot to expect to have, in advance, an accurate model of its environment, the objects in it, or the skills required to manipulate them. We aim to survey a representative subset of that research which uses machine learning for manipulation. We describe a formalization of the robot manipulation learning problem that synthesizes existing research into a single coherent framework and highlight the many remaining research opportunities and challenges.

Ranking-Based Reward Extrapolation without Rankings

Jul 09, 2019 · Daniel S. Brown, Wonjoon Goo, Scott Niekum

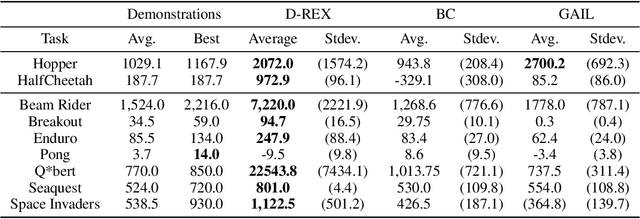

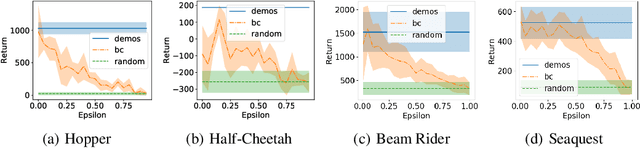

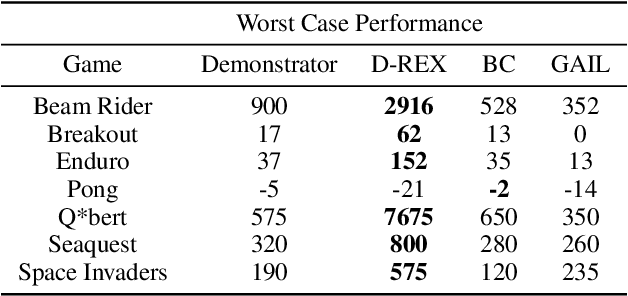

The performance of imitation learning is typically upper-bounded by the performance of the demonstrator. Recent empirical results show that imitation learning via ranked demonstrations allows for better-than-demonstrator performance; however, ranked demonstrations may be difficult to obtain, and little is known theoretically about when such methods can be expected to outperform the demonstrator. To address these issues, we first contribute a sufficient condition for when better-than-demonstrator performance is possible and discuss why ranked demonstrations can contribute to better-than-demonstrator performance. Building on this theory, we then introduce Disturbance-based Reward Extrapolation (D-REX), a ranking-based imitation learning method that injects noise into a policy learned through behavioral cloning to automatically generate ranked demonstrations. By generating rankings automatically, ranking-based imitation learning can be applied in traditional imitation learning settings where only unlabeled demonstrations are available. We empirically validate our approach on standard MuJoCo and Atari benchmarks and show that D-REX can utilize automatic rankings to significantly surpass the performance of the demonstrator and outperform standard imitation learning approaches. D-REX is the first imitation learning approach to achieve significant extrapolation beyond the demonstrator's performance without additional side-information or supervision, such as rewards or human preferences.
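The noise-injection idea described above can be illustrated with a minimal sketch. It assumes epsilon-greedy noise, a toy 1-D chain environment, and the helper names `noisy_action`, `rollout`, and `generate_ranked_rollouts`; none of these come from the abstract, and the ranking rule (more noise is assumed to mean worse behavior) is stated here as an assumption rather than as the paper's exact procedure.

```python
import random


def noisy_action(policy, state, actions, epsilon, rng):
    """With probability epsilon take a uniformly random action, else follow the policy."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return policy(state)


def rollout(policy, step, start_state, actions, epsilon, horizon, rng):
    """Collect one trajectory of states under the noise-injected policy."""
    state = start_state
    trajectory = [state]
    for _ in range(horizon):
        state = step(state, noisy_action(policy, state, actions, epsilon, rng))
        trajectory.append(state)
    return trajectory


def generate_ranked_rollouts(policy, step, start_state, actions,
                             noise_levels, horizon, seed=0):
    """Return (epsilon, trajectory) pairs ordered from assumed-worst to assumed-best.

    Assumption: more injected noise means worse behavior, so the ranking
    is read directly off the noise level, with no reward labels involved.
    """
    rng = random.Random(seed)
    rollouts = [
        (eps, rollout(policy, step, start_state, actions, eps, horizon, rng))
        for eps in noise_levels
    ]
    return sorted(rollouts, key=lambda pair: pair[0], reverse=True)


# Toy stand-in for a behaviorally cloned policy: always move right on a chain.
def cloned_policy(state):
    return 1


def chain_step(state, action):
    return state + action


ranked = generate_ranked_rollouts(
    cloned_policy, chain_step, start_state=0, actions=[-1, 1],
    noise_levels=[0.0, 0.5, 1.0], horizon=20,
)
```

The resulting ordered list could then serve as input to any ranking-based reward learner; how the rankings are consumed downstream is outside this sketch.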

Hypothesis-Driven Skill Discovery for Hierarchical Deep Reinforcement Learning

May 27, 2019 · Caleb Chuck, Supawit Chockchowwat, Scott Niekum

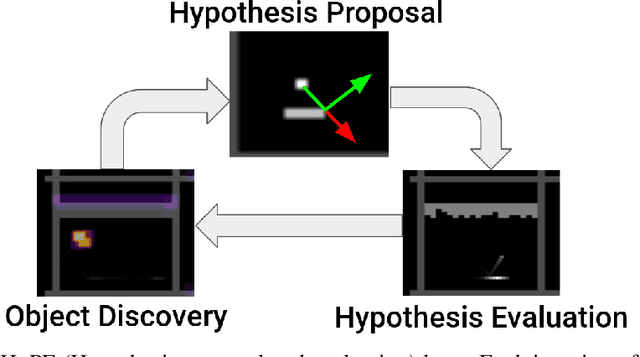

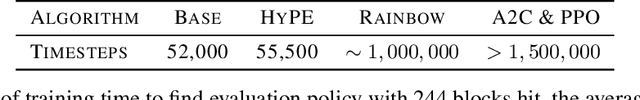

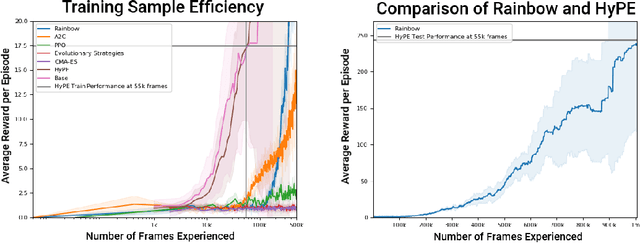

Deep reinforcement learning encompasses many versatile tools for designing learning agents that can perform well on a variety of high-dimensional visual tasks, ranging from video games to robotic manipulation. However, these methods typically suffer from poor sample efficiency, partially because they strive to be largely problem-agnostic. In this work, we demonstrate the utility of a different approach that is extremely sample efficient, but limited to object-centric tasks that (approximately) obey basic physical laws. Specifically, we propose the Hypothesis Proposal and Evaluation (HyPE) algorithm, which utilizes a small set of intuitive assumptions about the behavior of objects in the physical world (or in games that mimic physics) to automatically define and learn hierarchical skills in a highly efficient manner. HyPE does this by discovering objects from raw pixel data, generating hypotheses about the controllability of observed changes in object state, and learning a hierarchy of skills that can test these hypotheses and control increasingly complex interactions with objects. We demonstrate that HyPE can dramatically improve sample efficiency when learning a high-quality pixels-to-actions policy; in the popular benchmark task, Breakout, HyPE learns an order of magnitude faster than common baseline reinforcement learning and evolutionary strategies for policy learning.

Extrapolating Beyond Suboptimal Demonstrations via Inverse Reinforcement Learning from Observations

May 14, 2019 · Daniel S. Brown, Wonjoon Goo, Prabhat Nagarajan, Scott Niekum

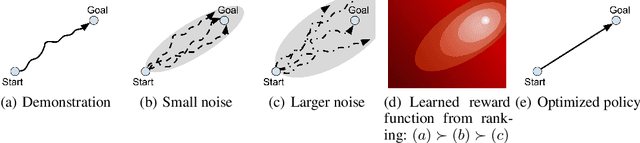

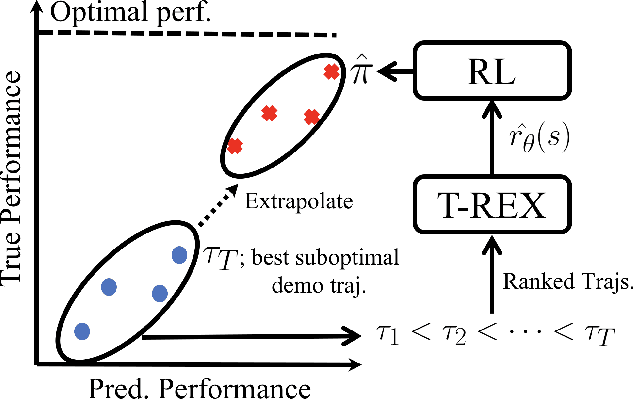

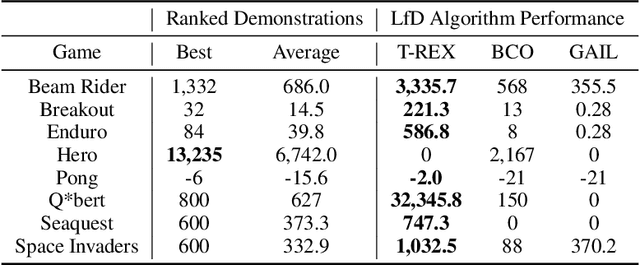

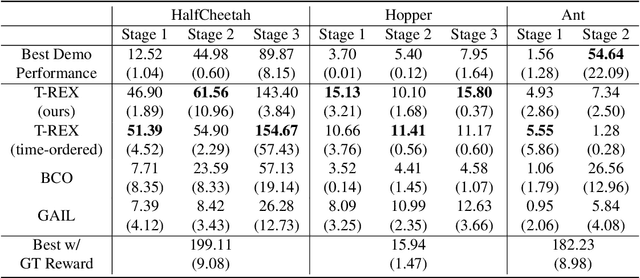

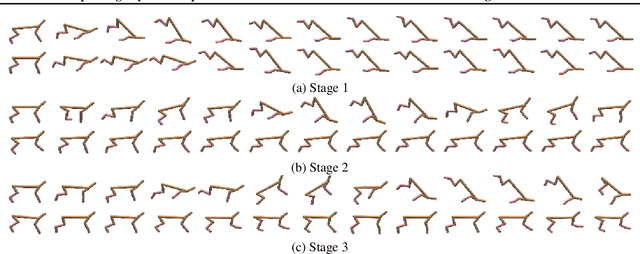

A critical flaw of existing inverse reinforcement learning (IRL) methods is their inability to significantly outperform the demonstrator. This is because IRL typically seeks a reward function that makes the demonstrator appear near-optimal, rather than inferring the underlying intentions of the demonstrator that may have been poorly executed in practice. In this paper, we introduce a novel reward-learning-from-observation algorithm, Trajectory-ranked Reward EXtrapolation (T-REX), that extrapolates beyond a set of (approximately) ranked demonstrations in order to infer high-quality reward functions from a set of potentially poor demonstrations. When combined with deep reinforcement learning, T-REX outperforms state-of-the-art imitation learning and IRL methods on multiple Atari and MuJoCo benchmark tasks and achieves performance that is often more than twice the performance of the best demonstration. We also demonstrate that T-REX is robust to ranking noise and can accurately extrapolate intention by simply watching a learner noisily improve at a task over time.
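One common way to learn a reward function from ranked trajectories is a pairwise (Bradley-Terry style) loss on predicted returns. The abstract does not state the training objective, so the sketch below is an illustrative assumption; `reward_fn`, `predicted_return`, and `pairwise_ranking_loss` are hypothetical names.

```python
import math


def predicted_return(reward_fn, trajectory):
    """Sum the learned per-observation reward over a trajectory."""
    return sum(reward_fn(obs) for obs in trajectory)


def pairwise_ranking_loss(reward_fn, worse, better):
    """Negative log-probability that `better` outranks `worse`.

    Assumed model: P(better > worse) = exp(R_b) / (exp(R_w) + exp(R_b)),
    where R_w and R_b are the predicted returns of the two trajectories.
    """
    r_worse = predicted_return(reward_fn, worse)
    r_better = predicted_return(reward_fn, better)
    # Stable log-sum-exp of the two predicted returns.
    m = max(r_worse, r_better)
    log_z = m + math.log(math.exp(r_worse - m) + math.exp(r_better - m))
    return log_z - r_better


# Toy check with a reward that simply echoes a scalar observation.
identity_reward = lambda obs: obs
low = [0.0, 0.0, 1.0]
high = [1.0, 1.0, 1.0]
loss_consistent = pairwise_ranking_loss(identity_reward, low, high)
loss_inverted = pairwise_ranking_loss(identity_reward, high, low)
```

Minimizing a loss of this kind rewards a reward function for agreeing with the ranking without ever requiring the top-ranked demonstration to look optimal, which is what leaves room for extrapolation.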

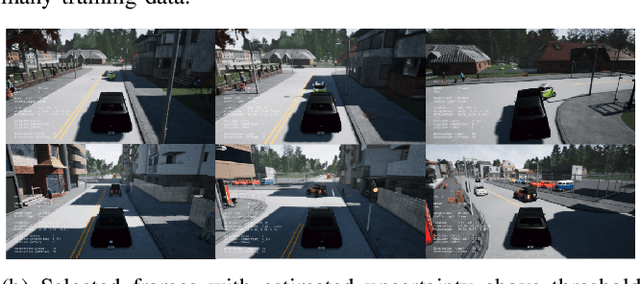

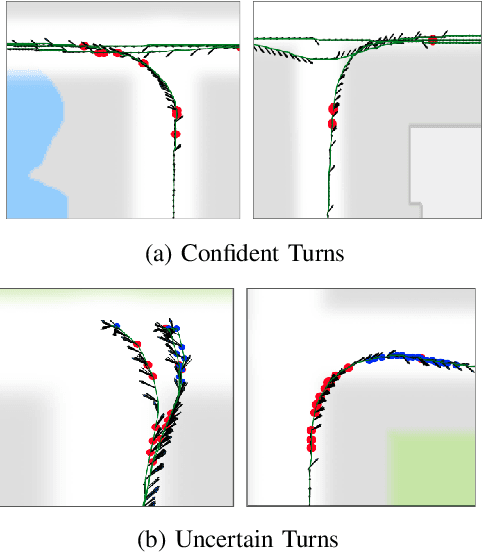

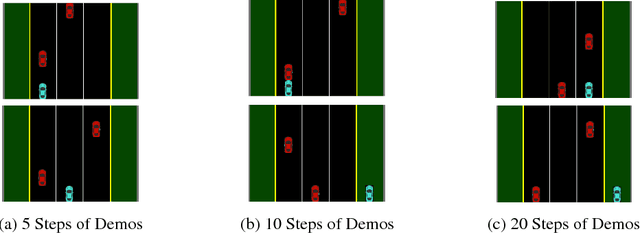

Uncertainty-Aware Data Aggregation for Deep Imitation Learning

May 07, 2019 · Yuchen Cui, David Isele, Scott Niekum, Kikuo Fujimura

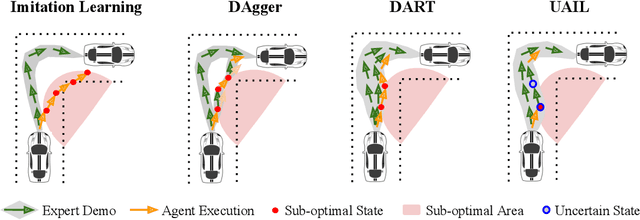

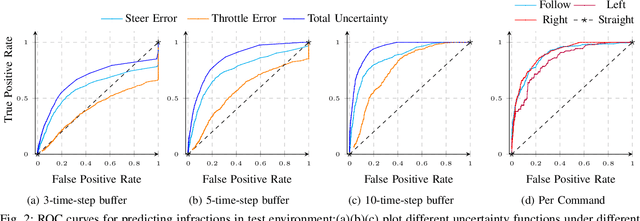

Estimating statistical uncertainties allows autonomous agents to communicate their confidence during task execution and is important for applications in safety-critical domains such as autonomous driving. In this work, we present the uncertainty-aware imitation learning (UAIL) algorithm for improving end-to-end control systems via data aggregation. UAIL applies Monte Carlo Dropout to estimate uncertainty in the control output of end-to-end systems, using states where it is uncertain to selectively acquire new training data. In contrast to prior data aggregation algorithms that force human experts to visit sub-optimal states at random, UAIL can anticipate its own mistakes and switch control to the expert in order to prevent visiting a series of sub-optimal states. Our experimental results from simulated driving tasks demonstrate that our proposed uncertainty estimation method can be leveraged to reliably predict infractions. Our analysis shows that UAIL outperforms existing data aggregation algorithms on a series of benchmark tasks.
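The Monte Carlo Dropout step can be sketched on a toy linear controller: run several stochastic forward passes with dropout left on, and treat the spread of the outputs as the uncertainty estimate. The model, the threshold rule, and the names `dropout_forward`, `mc_dropout_estimate`, and `choose_controller` are assumptions made for illustration, not details taken from the abstract.

```python
import random
import statistics


def dropout_forward(weights, features, drop_prob, rng):
    """One stochastic forward pass of a linear controller with input dropout."""
    keep = 1.0 - drop_prob
    total = 0.0
    for w, x in zip(weights, features):
        if rng.random() < keep:
            total += w * x / keep  # inverted dropout keeps the expected output unchanged
    return total


def mc_dropout_estimate(weights, features, drop_prob, n_samples, rng):
    """Return the mean and variance of the output over repeated dropout passes."""
    samples = [dropout_forward(weights, features, drop_prob, rng)
               for _ in range(n_samples)]
    return statistics.fmean(samples), statistics.pvariance(samples)


def choose_controller(variance, threshold):
    """Hand control to the expert when the learner's output is too uncertain."""
    return "expert" if variance > threshold else "learner"


rng = random.Random(0)
weights = [0.5, -1.0, 2.0]
features = [1.0, 2.0, 0.5]
mean_det, var_det = mc_dropout_estimate(weights, features, 0.0, 50, rng)
mean_mc, var_mc = mc_dropout_estimate(weights, features, 0.5, 200, rng)
```

States where `choose_controller` returns `"expert"` are natural candidates for collecting new expert labels, which is the selective data-aggregation idea in the paragraph above.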

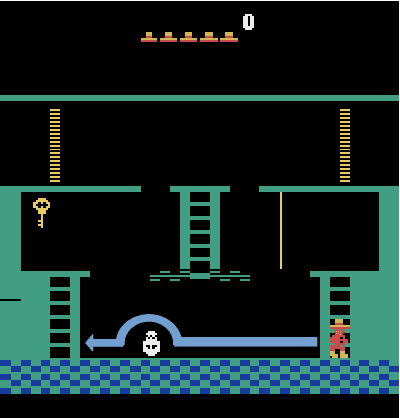

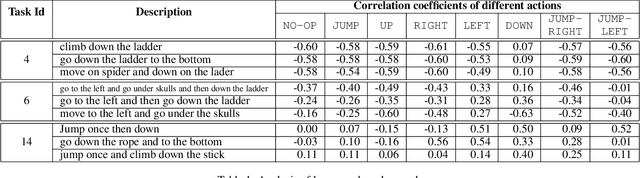

Using Natural Language for Reward Shaping in Reinforcement Learning

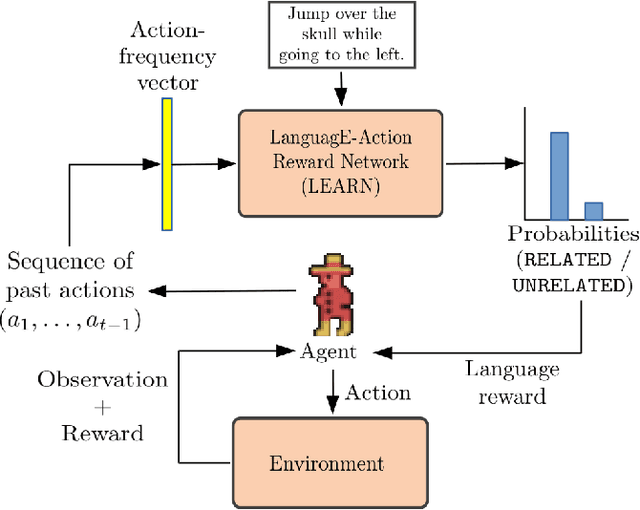

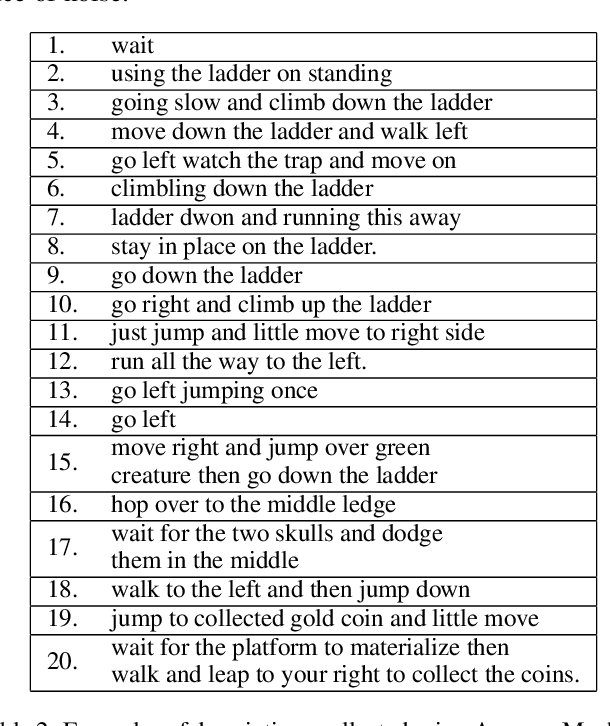

Mar 05, 2019Prasoon Goyal, Scott Niekum, Raymond J. Mooney

Recent reinforcement learning (RL) approaches have shown strong performance in complex domains such as Atari games, but are often highly sample inefficient. A common approach to reduce interaction time with the environment is to use reward shaping, which involves carefully designing reward functions that provide the agent intermediate rewards for progress towards the goal. However, designing appropriate shaping rewards is known to be difficult as well as time-consuming. In this work, we address this problem by using natural language instructions to perform reward shaping. We propose the LanguagE-Action Reward Network (LEARN), a framework that maps free-form natural language instructions to intermediate rewards based on actions taken by the agent. These intermediate language-based rewards can seamlessly be integrated into any standard reinforcement learning algorithm. We experiment with Montezuma's Revenge from the Arcade Learning Environment, a popular benchmark in RL. Our experiments on a diverse set of 15 tasks demonstrate that, for the same number of interactions with the environment, language-based rewards lead to successful completion of the task 60% more often on average, compared to learning without language.
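How an intermediate language-based reward might be folded into the environment reward can be sketched with potential-based shaping, a standard shaping form. The keyword-overlap `potential` below is a deliberately crude, hypothetical stand-in for a learned language-action model, and the names `potential` and `shaped_reward`, along with the `gamma` and `weight` parameters, are assumptions rather than details from the abstract.

```python
def potential(instruction, action_history):
    """Hypothetical relatedness score: fraction of past actions named in the instruction."""
    if not action_history:
        return 0.0
    words = set(instruction.lower().split())
    return sum(action in words for action in action_history) / len(action_history)


def shaped_reward(env_reward, instruction, history_before, action,
                  gamma=0.99, weight=1.0):
    """Environment reward plus a potential-based language shaping term.

    Shaping term: gamma * phi(history + action) - phi(history).
    """
    phi_before = potential(instruction, history_before)
    phi_after = potential(instruction, history_before + [action])
    return env_reward + weight * (gamma * phi_after - phi_before)


instruction = "climb down the ladder"
r_related = shaped_reward(0.0, instruction, [], "down")
r_unrelated = shaped_reward(0.0, instruction, [], "jump")
```

Because the shaping term is simply added to the environment reward, the underlying RL algorithm does not need to change, which is one reading of the "seamlessly integrated" claim above.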

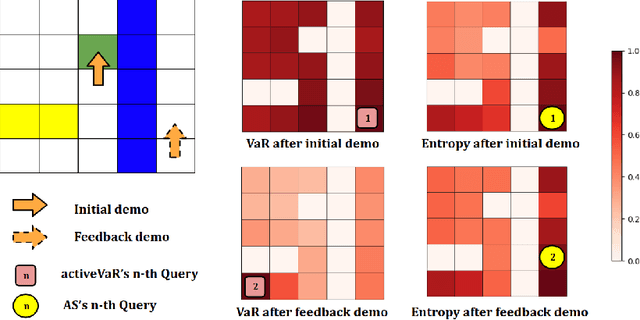

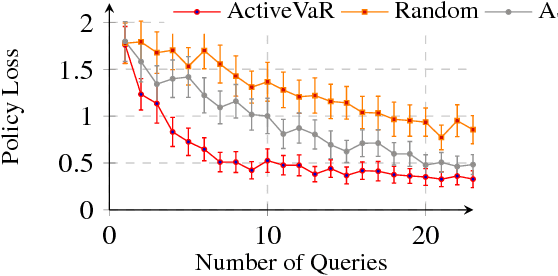

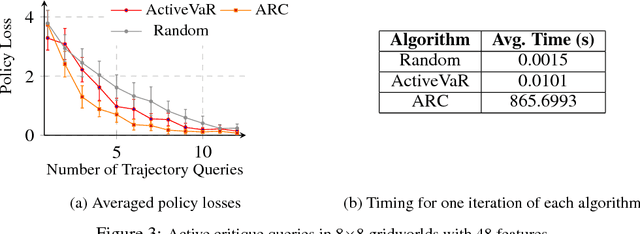

Risk-Aware Active Inverse Reinforcement Learning

Jan 08, 2019 · Daniel S. Brown, Yuchen Cui, Scott Niekum

Active learning from demonstration allows a robot to query a human for specific types of input to achieve efficient learning. Existing work has explored a variety of active query strategies; however, to our knowledge, none of these strategies directly minimize the performance risk of the policy the robot is learning. Utilizing recent advances in performance bounds for inverse reinforcement learning, we propose a risk-aware active inverse reinforcement learning algorithm that focuses active queries on areas of the state space with the potential for large generalization error. We show that risk-aware active learning outperforms standard active IRL approaches on gridworld, simulated driving, and table setting tasks, while also providing a performance-based stopping criterion that allows a robot to know when it has received enough demonstrations to safely perform a task.
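The query-selection and stopping logic can be sketched as follows, assuming the robot already holds, for each state, a set of policy-loss samples drawn under different reward hypotheses. The use of an empirical Value-at-Risk quantile as the risk measure, the nearest-rank quantile rule, and the names `value_at_risk`, `select_query`, and `should_stop` are illustrative assumptions, not details given in the abstract.

```python
import math


def value_at_risk(losses, alpha):
    """Empirical alpha-quantile of sampled losses (nearest-rank rule)."""
    ordered = sorted(losses)
    index = max(0, math.ceil(alpha * len(ordered)) - 1)
    return ordered[index]


def select_query(loss_samples_by_state, alpha=0.95):
    """Pick the state whose sampled policy loss has the highest risk."""
    risks = {state: value_at_risk(samples, alpha)
             for state, samples in loss_samples_by_state.items()}
    riskiest = max(risks, key=risks.get)
    return riskiest, risks[riskiest]


def should_stop(loss_samples_by_state, alpha, tolerance):
    """Stop requesting demonstrations once the worst-case risk is within tolerance."""
    _, worst_risk = select_query(loss_samples_by_state, alpha)
    return worst_risk <= tolerance


# Hypothetical loss samples for three states of a small gridworld.
samples = {
    "s0": [0.0, 0.1, 0.1, 0.2],
    "s1": [0.0, 0.0, 0.9, 1.0],
    "s2": [0.3, 0.3, 0.3, 0.3],
}
query_state, query_risk = select_query(samples, alpha=0.95)
```

Here `s1` is selected even though its typical loss is low, because a few reward hypotheses make the current policy perform badly there; that is the sense in which the query targets potential generalization error rather than average error.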

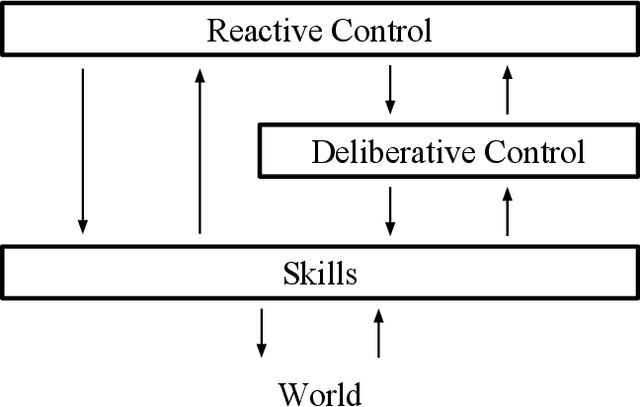

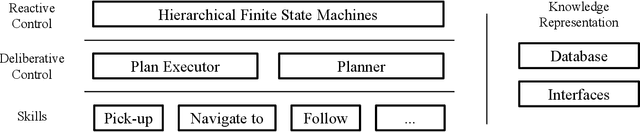

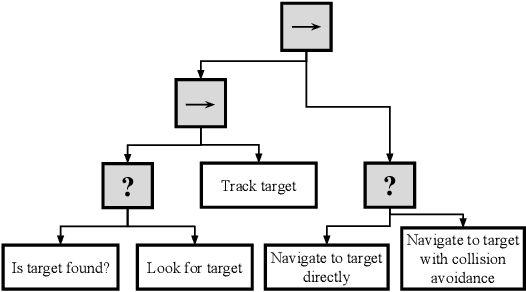

LAAIR: A Layered Architecture for Autonomous Interactive Robots

Nov 09, 2018 · Yuqian Jiang, Nick Walker, Minkyu Kim, Nicolas Brissonneau, Daniel S. Brown, Justin W. Hart, Scott Niekum, Luis Sentis, Peter Stone

When developing general purpose robots, the overarching software architecture can greatly affect the ease of accomplishing various tasks. Initial efforts to create unified robot systems in the 1990s led to hybrid architectures, emphasizing a hierarchy in which deliberative plans direct the use of reactive skills. However, since that time there has been significant progress in the low-level skills available to robots, including manipulation and perception, making it newly feasible to accomplish many more tasks in real-world domains. There is thus renewed optimism that robots will be able to perform a wide array of tasks while maintaining responsiveness to human operators. However, the top layer in traditional hybrid architectures, designed to achieve long-term goals, can make it difficult to react quickly to human interactions during goal-driven execution. To mitigate this difficulty, we propose a novel architecture that supports such transitions by adding a top-level reactive module which has flexible access to both reactive skills and a deliberative control module. To validate this architecture, we present a case study of its application on a domestic service robot platform.
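The layering described above can be pictured with a small sketch in which a top-level reactive module sits above both a set of reactive skills and a deliberative planner, so that a human event can preempt a running plan and the plan resumes afterwards. All class names, skill names, and the preemption rule are hypothetical and chosen only for illustration; the abstract does not specify the interfaces.

```python
class DeliberativeModule:
    """Hypothetical planner: maps a long-term goal to an ordered list of skill names."""

    def __init__(self, plans):
        self.plans = plans

    def plan(self, goal):
        return list(self.plans.get(goal, []))


class TopLevelReactiveModule:
    """Top layer with direct access to both the reactive skills and the planner."""

    def __init__(self, skills, deliberative):
        self.skills = skills
        self.deliberative = deliberative
        self.pending = []

    def set_goal(self, goal):
        self.pending = self.deliberative.plan(goal)

    def tick(self, human_event=None):
        """Run one skill; a known human event preempts the plan for this tick."""
        if human_event is not None and human_event in self.skills:
            return self.skills[human_event]()
        if self.pending:
            return self.skills[self.pending.pop(0)]()
        return "idle"


skills = {
    "navigate": lambda: "navigating",
    "grasp": lambda: "grasping",
    "greet": lambda: "greeting human",
}
planner = DeliberativeModule({"fetch cup": ["navigate", "grasp"]})
robot = TopLevelReactiveModule(skills, planner)
robot.set_goal("fetch cup")
trace = [robot.tick(), robot.tick(human_event="greet"), robot.tick(), robot.tick()]
```

In this toy run the greeting interrupts the fetch plan for a single tick without discarding it, which mirrors the goal of staying responsive to people during goal-driven execution.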
