Qiuhong Ke

Unified Multi-View Orthonormal Non-Negative Graph Based Clustering Framework

Nov 03, 2022

Abstract:Spectral clustering is an effective methodology for unsupervised learning. Most traditional spectral clustering algorithms involve a separate two-step procedure and apply the transformed new representations for the final clustering results. Recently, much progress has been made to utilize the non-negative feature property in real-world data and to jointly learn the representation and clustering results. However, to our knowledge, no previous work considers a unified model that incorporates the important multi-view information with those properties, which severely limits the performance of existing methods. In this paper, we formulate a novel clustering model, which exploits the non-negative feature property and, more importantly, incorporates the multi-view information into a unified joint learning framework: the unified multi-view orthonormal non-negative graph based clustering framework (Umv-ONGC). Then, we derive an effective three-stage iterative solution for the proposed model and provide analytic solutions for the three sub-problems from the three stages. We also explore, for the first time, the multi-model non-negative graph-based approach to clustering data based on deep features. Extensive experiments on three benchmark data sets demonstrate the effectiveness of the proposed method.

Adaptive Local-Component-aware Graph Convolutional Network for One-shot Skeleton-based Action Recognition

Sep 21, 2022

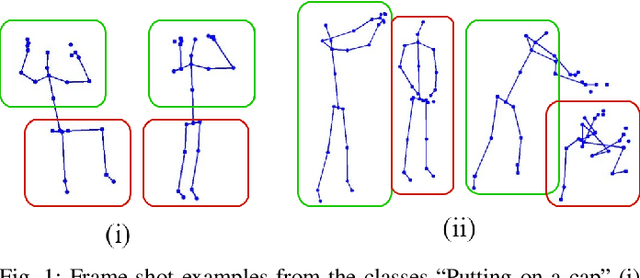

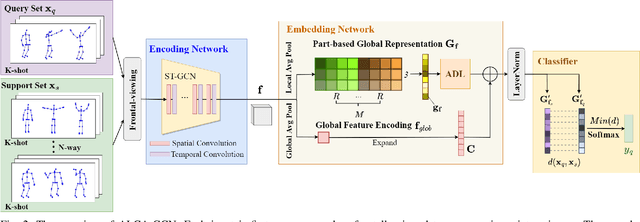

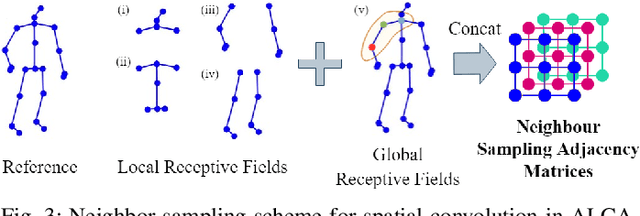

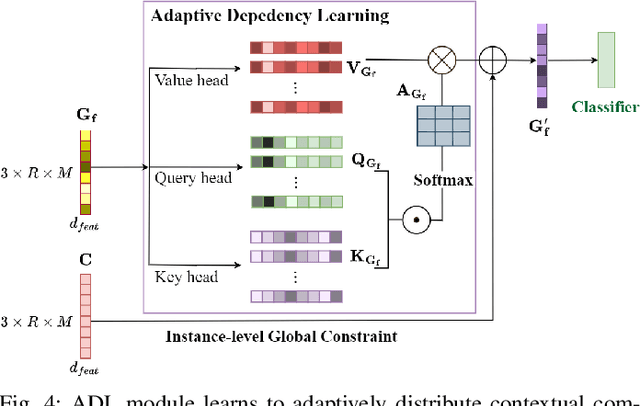

Abstract:Skeleton-based action recognition receives increasing attention because the skeleton representations reduce the amount of training data by eliminating visual information irrelevant to actions. To further improve the sample efficiency, meta-learning-based one-shot learning solutions were developed for skeleton-based action recognition. These methods find the nearest neighbor according to the similarity between instance-level global average embeddings. However, such a measurement holds unstable representativity due to inadequate generalized learning on local invariant and noisy features, while intuitively, more fine-grained recognition usually relies on determining key local body movements. To address this limitation, we present the Adaptive Local-Component-aware Graph Convolutional Network, which replaces the comparison metric with a focused sum of similarity measurements on aligned local embeddings of action-critical spatial/temporal segments. Comprehensive one-shot experiments on the public benchmark of NTU-RGB+D 120 indicate that our method provides a stronger representation than the global embedding and helps our model reach state-of-the-art performance.
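The contrast drawn in this abstract, between comparing one global average embedding and summing similarities over aligned local embeddings, can be illustrated with a toy sketch. This is not the authors' network: the function names, the fixed `weights`, and the two-part "skeletons" below are all assumptions made for illustration, and the real method learns its embeddings and its focus with a graph convolutional network.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def global_embedding(parts):
    """Average the per-part embeddings into a single instance-level vector."""
    n = len(parts)
    return [sum(col) / n for col in zip(*parts)]

def global_similarity(query, support):
    """Baseline metric: compare the two global average embeddings."""
    return cosine(global_embedding(query), global_embedding(support))

def local_similarity(query, support, weights):
    """Weighted sum of per-part similarities; parts are assumed aligned by index."""
    return sum(w * cosine(q, s) for w, q, s in zip(weights, query, support))

def classify(query, supports, score):
    """One-shot nearest neighbor: return the label whose single example scores highest."""
    return max(supports, key=lambda label: score(query, supports[label]))

# Hypothetical data: each sample is a list of two local (body-part) embeddings.
query = [[1.0, 0.0], [0.0, 1.0]]
supports = {
    "A": [[1.0, 0.0], [0.0, 1.0]],  # same local pattern as the query
    "B": [[0.0, 1.0], [1.0, 0.0]],  # parts swapped, but the same global average
}
weights = [0.5, 0.5]  # assumed fixed here; a learned focus would replace this
```

In this toy data the two support samples have identical global averages, so `global_similarity` cannot separate them, while `classify(query, supports, lambda q, s: local_similarity(q, s, weights))` picks the sample whose local parts actually match.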

Dynamic Spatio-Temporal Specialization Learning for Fine-Grained Action Recognition

Sep 03, 2022

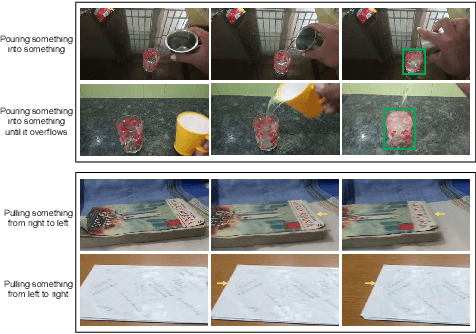

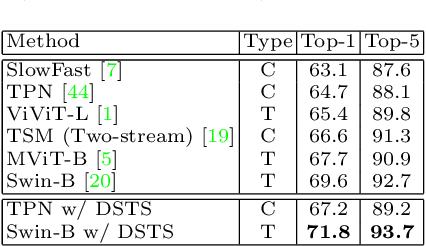

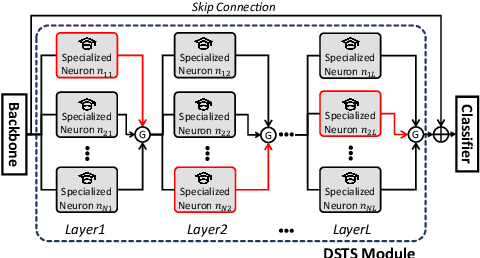

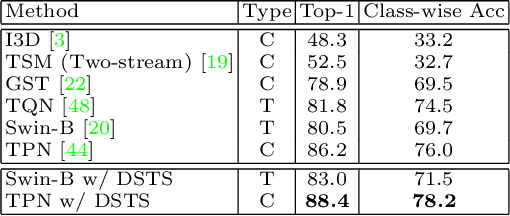

Abstract:The goal of fine-grained action recognition is to successfully discriminate between action categories with subtle differences. To tackle this, we derive inspiration from the human visual system which contains specialized regions in the brain that are dedicated towards handling specific tasks. We design a novel Dynamic Spatio-Temporal Specialization (DSTS) module, which consists of specialized neurons that are only activated for a subset of samples that are highly similar. During training, the loss forces the specialized neurons to learn discriminative fine-grained differences to distinguish between these similar samples, improving fine-grained recognition. Moreover, a spatio-temporal specialization method further optimizes the architectures of the specialized neurons to capture either more spatial or temporal fine-grained information, to better tackle the large range of spatio-temporal variations in the videos. Lastly, we design an Upstream-Downstream Learning algorithm to optimize our model's dynamic decisions during training, improving the performance of our DSTS module. We obtain state-of-the-art performance on two widely-used fine-grained action recognition datasets.

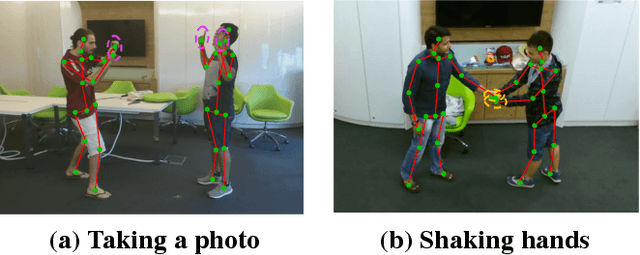

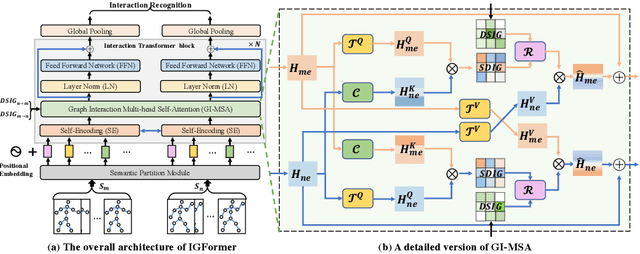

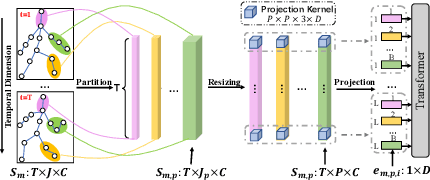

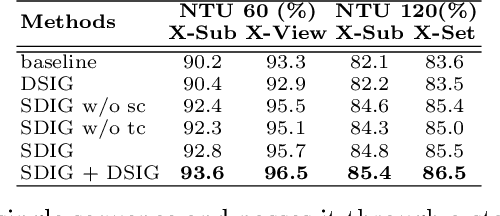

IGFormer: Interaction Graph Transformer for Skeleton-based Human Interaction Recognition

Jul 25, 2022

Abstract:Human interaction recognition is very important in many applications. One crucial cue in recognizing an interaction is the interactive body parts. In this work, we propose a novel Interaction Graph Transformer (IGFormer) network for skeleton-based interaction recognition via modeling the interactive body parts as graphs. More specifically, the proposed IGFormer constructs interaction graphs according to the semantic and distance correlations between the interactive body parts, and enhances the representation of each person by aggregating the information of the interactive body parts based on the learned graphs. Furthermore, we propose a Semantic Partition Module to transform each human skeleton sequence into a Body-Part-Time sequence to better capture the spatial and temporal information of the skeleton sequence for learning the graphs. Extensive experiments on three benchmark datasets demonstrate that our model outperforms the state-of-the-art by a significant margin.

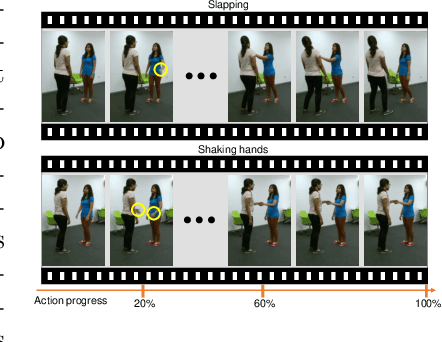

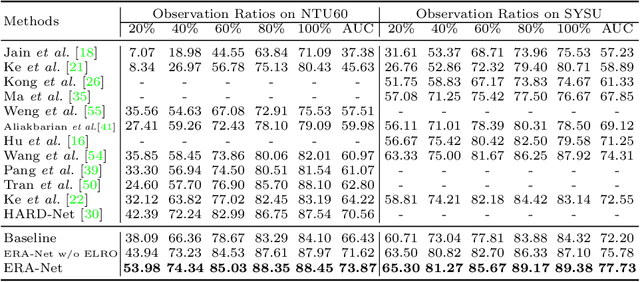

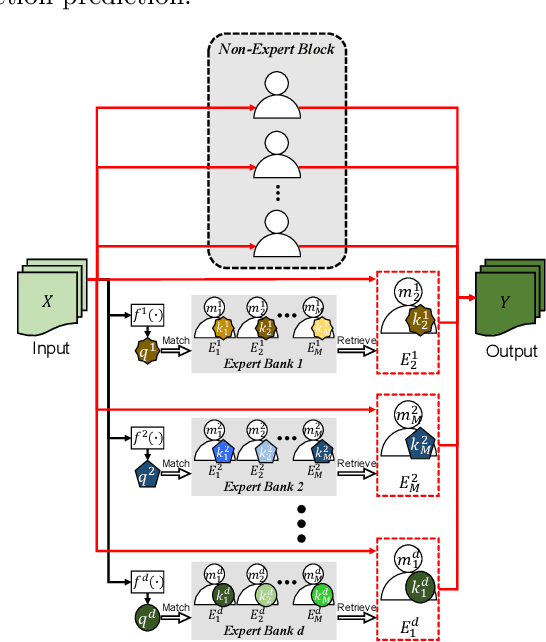

ERA: Expert Retrieval and Assembly for Early Action Prediction

Jul 22, 2022

Abstract:Early action prediction aims to successfully predict the class label of an action before it is completely performed. This is a challenging task because the beginning stages of different actions can be very similar, with only minor subtle differences for discrimination. In this paper, we propose a novel Expert Retrieval and Assembly (ERA) module that retrieves and assembles a set of experts most specialized at using discriminative subtle differences, to distinguish an input sample from other highly similar samples. To encourage our model to effectively use subtle differences for early action prediction, we push experts to discriminate exclusively between samples that are highly similar, forcing these experts to learn to use subtle differences that exist between those samples. Additionally, we design an effective Expert Learning Rate Optimization method that balances the experts' optimization and leads to better performance. We evaluate our ERA module on four public action datasets and achieve state-of-the-art performance.

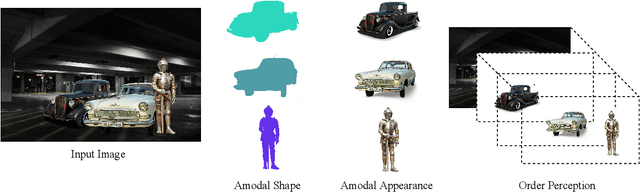

Image Amodal Completion: A Survey

Jul 05, 2022

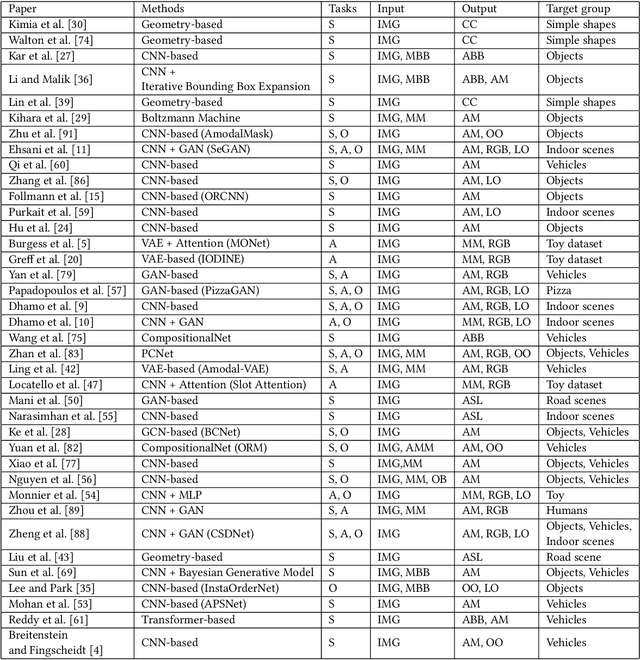

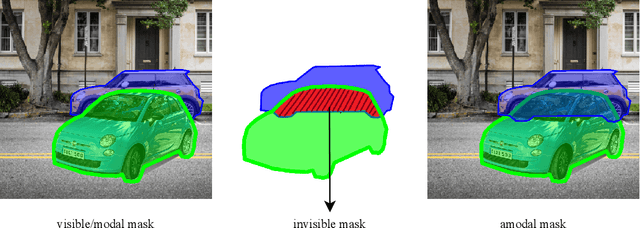

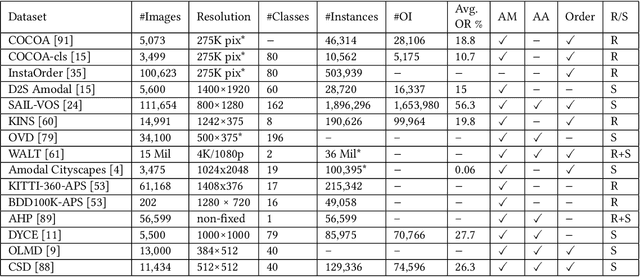

Abstract:Existing computer vision systems can compete with humans in understanding the visible parts of objects, but still fall far short of humans when it comes to depicting the invisible parts of partially occluded objects. Image amodal completion aims to equip computers with human-like amodal completion functions to understand an intact object despite it being partially occluded. The main purpose of this survey is to provide an intuitive understanding of the research hotspots, key technologies and future trends in the field of image amodal completion. Firstly, we present a comprehensive review of the latest literature in this emerging field, exploring three key tasks in image amodal completion, including amodal shape completion, amodal appearance completion, and order perception. Then we examine popular datasets related to image amodal completion along with their common data collection methods and evaluation metrics. Finally, we discuss real-world applications and future research directions for image amodal completion, facilitating the reader's understanding of the challenges of existing technologies and upcoming research trends.

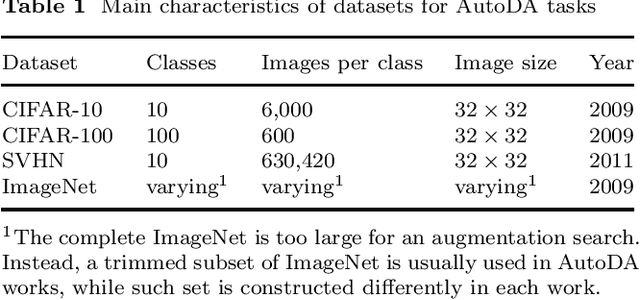

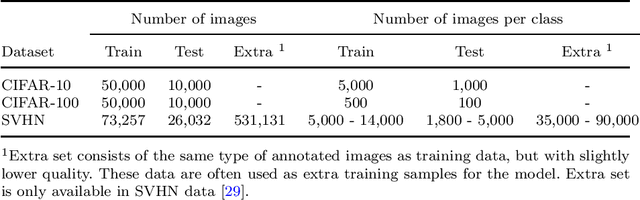

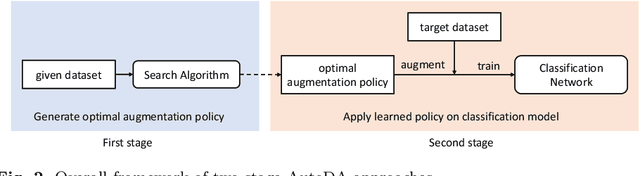

A Survey of Automated Data Augmentation Algorithms for Deep Learning-based Image Classification Tasks

Jun 14, 2022

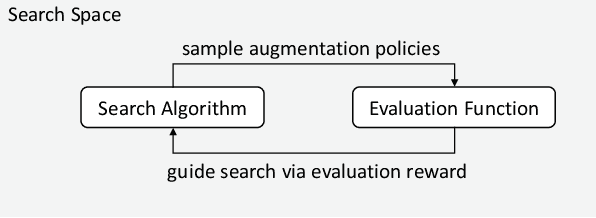

Abstract:In recent years, deep learning has been one of the most popular techniques in the computer vision community. As a data-driven technique, a deep model requires enormous amounts of accurately labelled training data, which is often inaccessible in many real-world applications. A data-space solution is Data Augmentation (DA), which can artificially generate new images out of original samples. Image augmentation strategies can vary by dataset, as different data types might require different augmentations to facilitate model training. However, the design of DA policies has been largely decided by human experts with domain knowledge, which is considered to be highly subjective and error-prone. To mitigate this problem, a novel direction is to automatically learn the image augmentation policies from the given dataset using Automated Data Augmentation (AutoDA) techniques. The goal of AutoDA models is to find the optimal DA policies that can maximize the model performance gains. This survey discusses the underlying reasons for the emergence of AutoDA technology from the perspective of image classification. We identify three key components of a standard AutoDA model: a search space, a search algorithm and an evaluation function. Based on their architecture, we provide a systematic taxonomy of existing image AutoDA approaches. This paper presents the major works in the AutoDA field, discussing their pros and cons, and proposing several potential directions for future improvements.
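The three components named in this abstract (a search space, a search algorithm and an evaluation function) can be sketched in a few lines. The sketch below uses plain random search as the search algorithm, which is only one possible choice and not taken from the survey. The operation names, magnitudes and the scoring formula in `evaluate` are hypothetical placeholders; a real evaluation function would train a model with the candidate policy and report validation performance.

```python
import random

# Search space (illustrative): a policy is one (operation, magnitude) pair.
OPERATIONS = ["rotate", "flip", "color_jitter"]
MAGNITUDES = [0.1, 0.3, 0.5, 0.7, 0.9]

def sample_policy(rng):
    """Draw one candidate policy uniformly from the search space."""
    return (rng.choice(OPERATIONS), rng.choice(MAGNITUDES))

def evaluate(policy):
    """Placeholder evaluation function returning a made-up score.

    In practice this step would train a model with the policy applied
    and return its validation accuracy.
    """
    op, mag = policy
    bonus = {"rotate": 0.02, "flip": 0.01, "color_jitter": 0.03}[op]
    return 0.9 + bonus - abs(mag - 0.5) * 0.05

def random_search(n_trials, seed=0):
    """Search algorithm: keep the best-scoring policy among random samples."""
    rng = random.Random(seed)
    best_policy, best_score = None, float("-inf")
    for _ in range(n_trials):
        policy = sample_policy(rng)
        score = evaluate(policy)
        if score > best_score:
            best_policy, best_score = policy, score
    return best_policy, best_score
```

Calling `random_search(50)` returns the best `(operation, magnitude)` pair found and its placeholder score. Swapping in a different search algorithm only requires replacing `random_search`, while `sample_policy` and `evaluate` stay the same.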

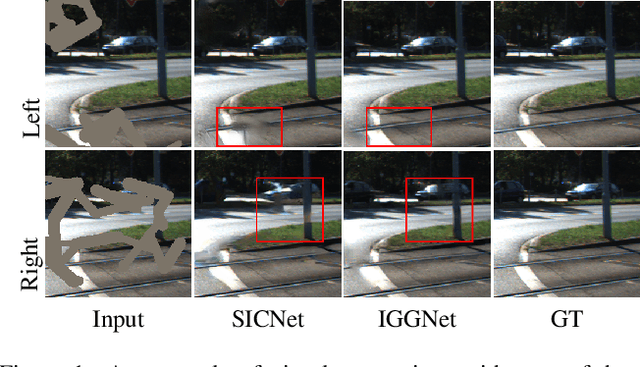

Iterative Geometry-Aware Cross Guidance Network for Stereo Image Inpainting

May 11, 2022

Abstract:Currently, single image inpainting has achieved promising results based on deep convolutional neural networks. However, inpainting on stereo images with missing regions has not been explored thoroughly, which is also a significant but different problem. One crucial requirement for stereo image inpainting is stereo consistency. To achieve it, we propose an Iterative Geometry-Aware Cross Guidance Network (IGGNet). The IGGNet contains two key ingredients, i.e., a Geometry-Aware Attention (GAA) module and an Iterative Cross Guidance (ICG) strategy. The GAA module relies on the epipolar geometry cues and learns the geometry-aware guidance from one view to another, which is beneficial to make the corresponding regions in two views consistent. However, learning guidance from co-existing missing regions is challenging. To address this issue, the ICG strategy is proposed, which can alternately narrow down the missing regions of the two views in an iterative manner. Experimental results demonstrate that our proposed network outperforms the latest stereo image inpainting model and state-of-the-art single image inpainting models.

Spatial-Temporal Transformer for 3D Point Cloud Sequences

Oct 19, 2021

Abstract:Effective learning of spatial-temporal information within a point cloud sequence is highly important for many down-stream tasks such as 4D semantic segmentation and 3D action recognition. In this paper, we propose a novel framework named Point Spatial-Temporal Transformer (PST2) to learn spatial-temporal representations from dynamic 3D point cloud sequences. Our PST2 consists of two major modules: a Spatio-Temporal Self-Attention (STSA) module and a Resolution Embedding (RE) module. Our STSA module is introduced to capture the spatial-temporal context information across adjacent frames, while the RE module is proposed to aggregate features across neighbors to enhance the resolution of feature maps. We test the effectiveness of our PST2 with two different tasks on point cloud sequences, i.e., 4D semantic segmentation and 3D action recognition. Extensive experiments on three benchmarks show that our PST2 outperforms existing methods on all datasets. The effectiveness of our STSA and RE modules has also been justified with ablation experiments.

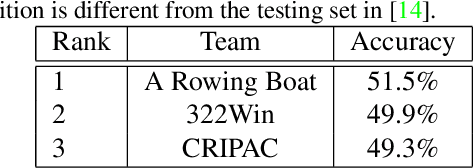

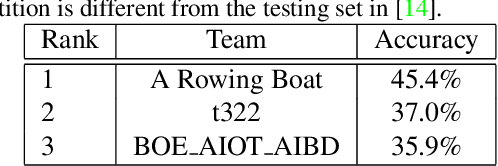

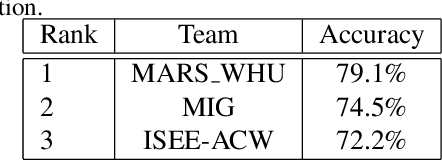

The Multi-Modal Video Reasoning and Analyzing Competition

Aug 18, 2021

Abstract:In this paper, we introduce the Multi-Modal Video Reasoning and Analyzing Competition (MMVRAC) workshop in conjunction with ICCV 2021. This competition is composed of four different tracks, namely, video question answering, skeleton-based action recognition, fisheye video-based action recognition, and person re-identification, which are based on two datasets: SUTD-TrafficQA and UAV-Human. We summarize the top-performing methods submitted by the participants in this competition and show their results achieved in the competition.
