Matthew Barth

Dual LiDAR-Based Traffic Movement Count Estimation at a Signalized Intersection: Deployment, Data Collection, and Preliminary Analysis

Jul 17, 2025

Abstract:Traffic Movement Count (TMC) at intersections is crucial for optimizing signal timings, assessing the performance of existing traffic control measures, and proposing efficient lane configurations to minimize delays, reduce congestion, and promote safety. Traditionally, methods such as manual counting, loop detectors, pneumatic road tubes, and camera-based recognition have been used for TMC estimation. Although generally reliable, camera-based TMC estimation is prone to inaccuracies under poor lighting conditions, such as in harsh weather and at nighttime. In contrast, Light Detection and Ranging (LiDAR) technology has been gaining popularity due to reduced costs and its expanding use in 3D object detection, tracking, and related applications. This paper presents the authors' endeavor to develop, deploy, and evaluate a dual-LiDAR system at an intersection in the city of Rialto, California, for TMC estimation. The 3D bounding box detections from the two LiDARs are used to classify vehicle counts based on traffic directions, vehicle movements, and vehicle classes. This work discusses the estimated TMC results and provides insights into the observed trends and irregularities. Potential improvements are also discussed that could enhance not only TMC estimation, but also trajectory forecasting and intent prediction at intersections.

The Role of Integrity Monitoring in Connected and Automated Vehicles: Current State-of-Practice and Future Directions

Feb 07, 2025

Abstract:Connected and Automated Vehicle (CAV) research has gained traction in the last decade due to significant advancements in perception, navigation, communication, and control functions. Accurate and reliable position information is needed to meet the requirements of CAV applications, especially when safety is concerned. With the advent of various perception sensors (e.g., camera and LiDAR), the vehicular positioning system has improved in both accuracy and robustness. Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) based cooperative positioning can improve the accuracy of the position estimates, but the integrity risks involved in multi-sensor fusion in a cooperative environment have not yet been fully explored. This paper reviews existing research in the field of positioning Integrity Monitoring (IM) and identifies various research gaps. Particular attention has been placed on identifying research that highlights cooperative IM methods. This analysis helps pave the way for the development of new IM frameworks for cooperative positioning solutions in the future.

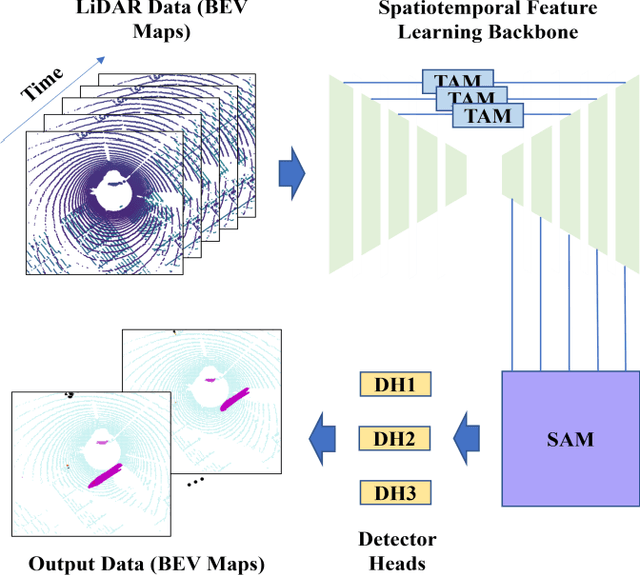

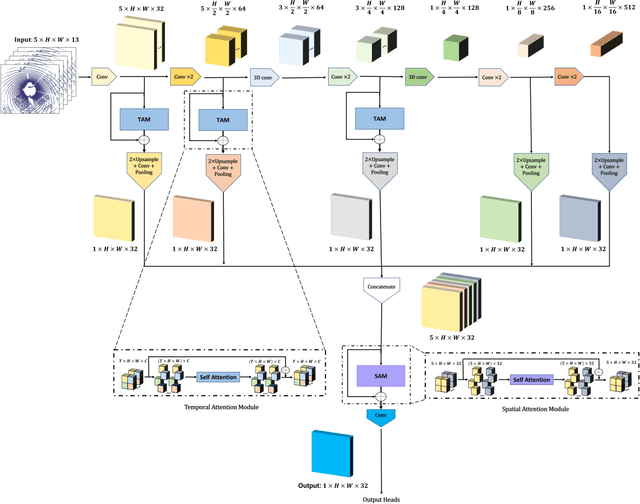

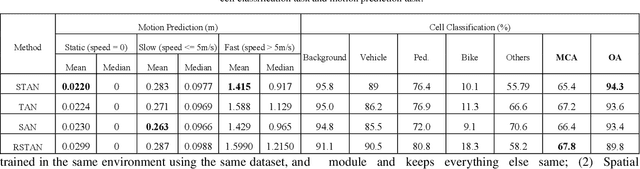

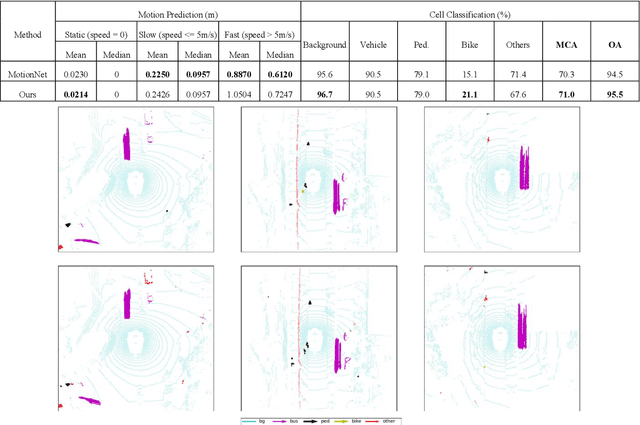

Spatiotemporal Transformer Attention Network for 3D Voxel Level Joint Segmentation and Motion Prediction in Point Cloud

Feb 28, 2022

Abstract:Environment perception, including detection, classification, tracking, and motion prediction, is a key enabler for automated driving systems and intelligent transportation applications. Fueled by the advances in sensing technologies and machine learning techniques, LiDAR-based sensing systems have become a promising solution. The current challenges of this solution are how to effectively combine different perception tasks into a single backbone and how to efficiently learn the spatiotemporal features directly from point cloud sequences. In this research, we propose a novel spatiotemporal attention network based on a transformer self-attention mechanism for joint semantic segmentation and motion prediction within a point cloud at the voxel level. The network is trained to simultaneously output the voxel-level class and predicted motion by learning directly from a sequence of point cloud datasets. The proposed backbone includes both a temporal attention module (TAM) and a spatial attention module (SAM) to learn and extract the complex spatiotemporal features. This approach has been evaluated with the nuScenes dataset, and promising performance has been achieved.

End-to-End Vision-Based Adaptive Cruise Control Using Deep Reinforcement Learning

Jan 24, 2020

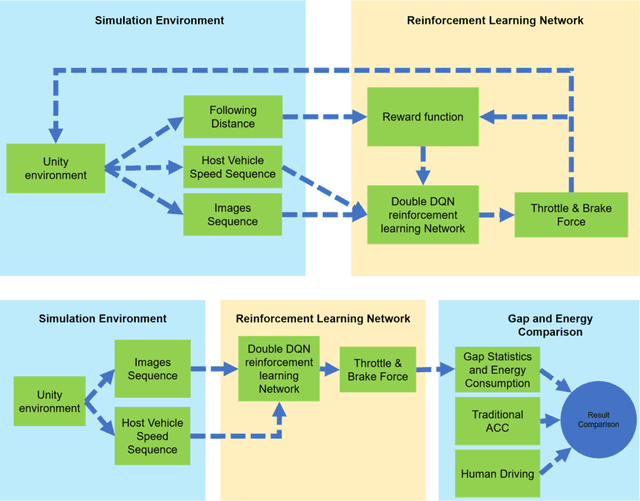

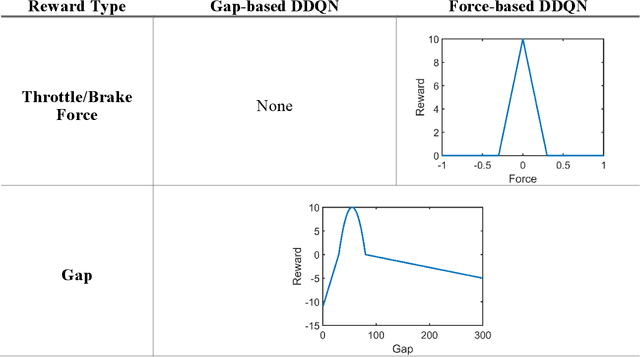

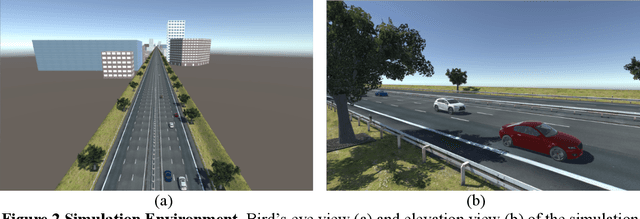

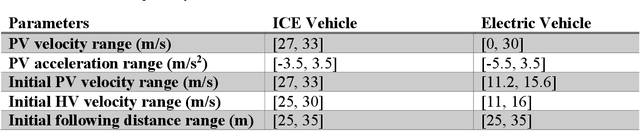

Abstract:This paper presents a deep reinforcement learning method, Double Deep Q-networks, for designing an end-to-end vision-based adaptive cruise control (ACC) system. A simulation environment of a highway scene was set up in Unity, a game engine that provides both physical models of vehicles and feature data for training and testing. Well-designed reward functions associated with the following distance and throttle/brake force were implemented in the reinforcement learning model for both internal combustion engine (ICE) vehicles and electric vehicles (EV) to perform adaptive cruise control. The gap statistics and total energy consumption are evaluated for different vehicle types to explore the relationship between reward functions and powertrain characteristics. Compared with traditional radar-based ACC systems or human-in-the-loop simulation, the proposed vision-based ACC system can generate either a better gap-regulated trajectory or a smoother speed trajectory, depending on the preset reward function. The proposed system adapts well to different speed trajectories of the preceding vehicle and operates in real time.

Vision-Based Lane-Changing Behavior Detection Using Deep Residual Neural Network

Nov 08, 2019

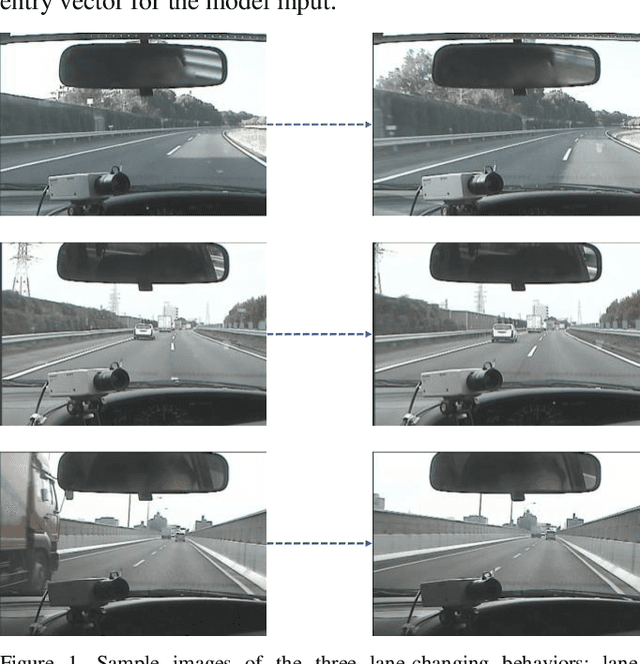

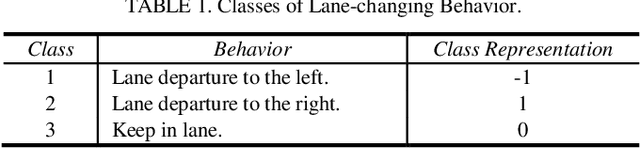

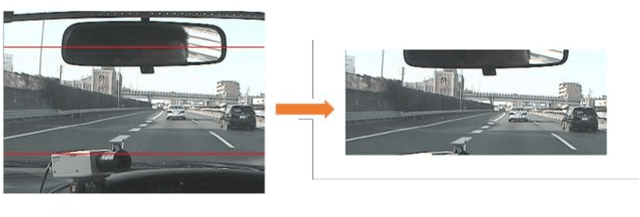

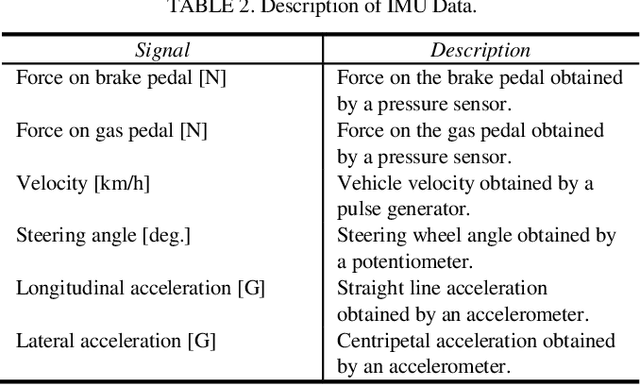

Abstract:Accurate lane localization and lane change detection are crucial in advanced driver assistance systems and autonomous driving systems for safer and more efficient trajectory planning. Conventional localization devices such as the Global Positioning System only provide road-level resolution for car navigation, which is insufficient for lane-level decision making. The state-of-the-art technique for lane localization is to use Light Detection and Ranging (LiDAR) sensors to correct the global localization error and achieve centimeter-level accuracy, but the real-time implementation and widespread adoption of LiDAR are still limited by its computational burden and current cost. As a cost-effective alternative, vision-based lane change detection has been highly regarded for affordable autonomous vehicles to support lane-level localization. A deep learning-based computer vision system is developed to detect lane change behavior during highway driving, using images captured by a front-view camera mounted on the vehicle and data from the inertial measurement unit. Testing results on real-world driving data have shown that the proposed method is robust, works in real time, and could achieve around 87% lane change detection accuracy. Compared to the average human reaction to visual stimuli, the proposed computer vision system works 9 times faster, which makes it capable of helping make life-saving decisions in time.

Data-Driven Multi-step Demand Prediction for Ride-hailing Services Using Convolutional Neural Network

Nov 08, 2019

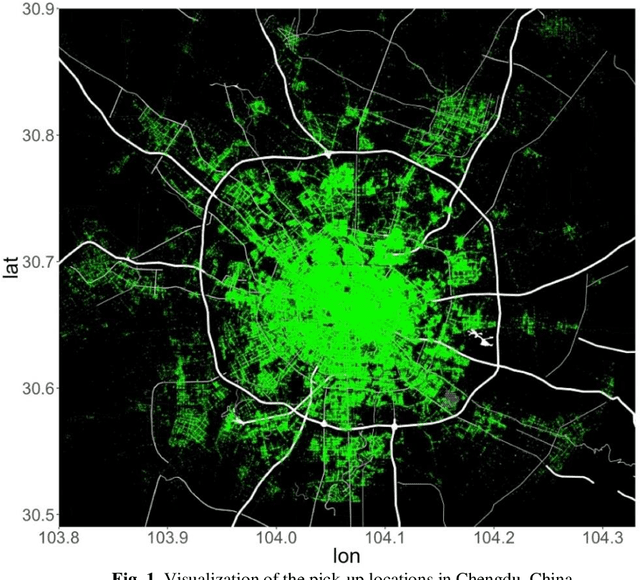

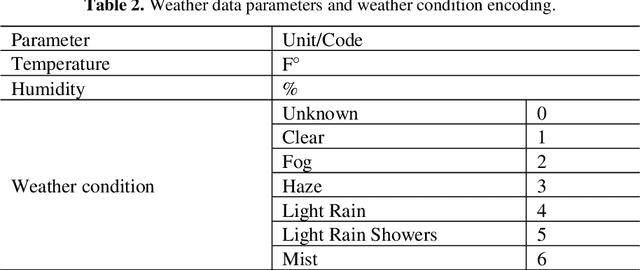

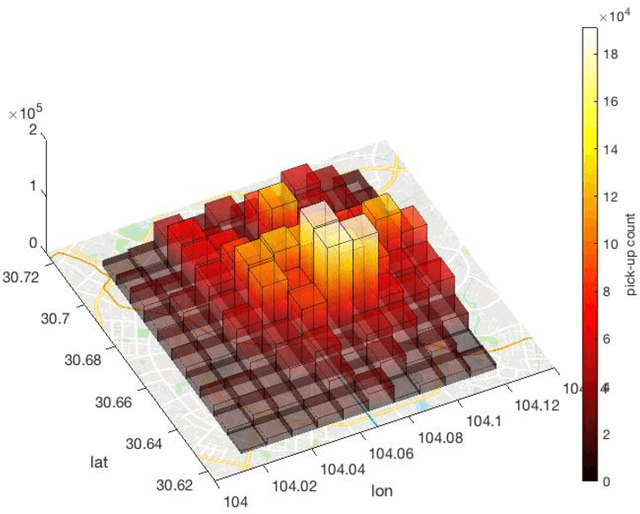

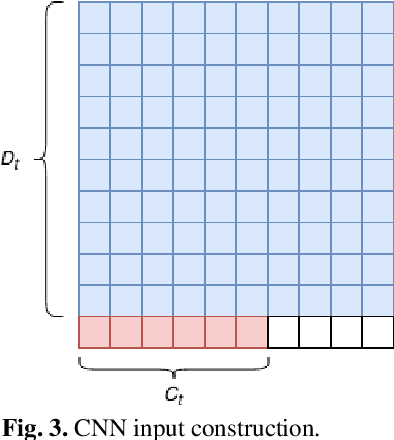

Abstract:Ride-hailing services are growing rapidly and becoming one of the most disruptive technologies in the transportation realm. Accurate prediction of ride-hailing trip demand not only enables cities to better understand people's activity patterns, but also helps ride-hailing companies and drivers make informed decisions to reduce deadheading vehicle miles traveled, traffic congestion, and energy consumption. In this study, a convolutional neural network (CNN)-based deep learning model is proposed for multi-step ride-hailing demand prediction using the trip request data in Chengdu, China, offered by DiDi Chuxing. The CNN model is capable of accurately predicting the ride-hailing pick-up demand in each 1-km by 1-km zone in the city of Chengdu for every 10 minutes. Compared with another deep learning model based on long short-term memory, the CNN model is 30% faster in training and prediction. The proposed model can also be easily extended to make multi-step predictions, which would benefit on-demand shared autonomous vehicle applications and fleet operators in terms of supply-demand rebalancing. The prediction error attenuation analysis shows that the accuracy stays acceptable as the model predicts more steps.

Challenges in Partially-Automated Roadway Feature Mapping Using Mobile Laser Scanning and Vehicle Trajectory Data

Feb 09, 2019

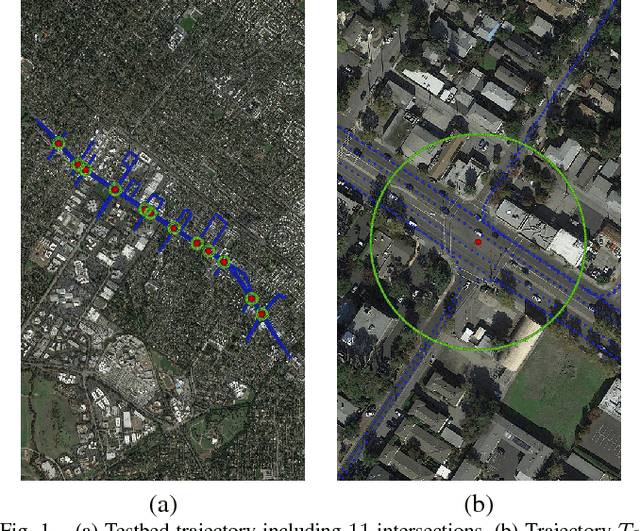

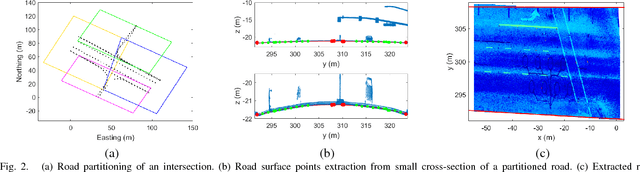

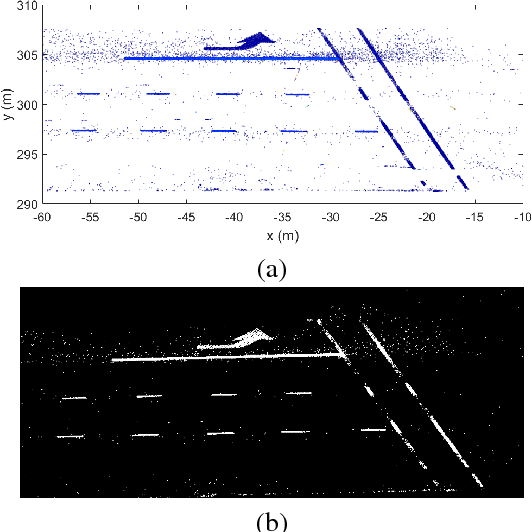

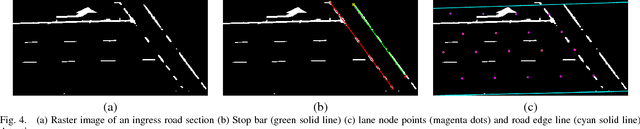

Abstract:Connected vehicle and driver's assistance applications are greatly facilitated by Enhanced Digital Maps (EDMs) that represent roadway features (e.g., lane edges or centerlines, stop bars). Due to the large number of signalized intersections and miles of roadway, manual development of EDMs on a global basis is not feasible. Mobile Terrestrial Laser Scanning (MTLS) is the preferred data acquisition method to provide data for automated EDM development. Such systems provide an MTLS trajectory and a point cloud for the roadway environment. The challenge is to automatically convert these data into an EDM. This article presents a new processing and feature extraction method, experimental demonstration providing SAE-J2735 map messages for eleven example intersections, and a discussion of the results that points out remaining challenges and suggests directions for future research.

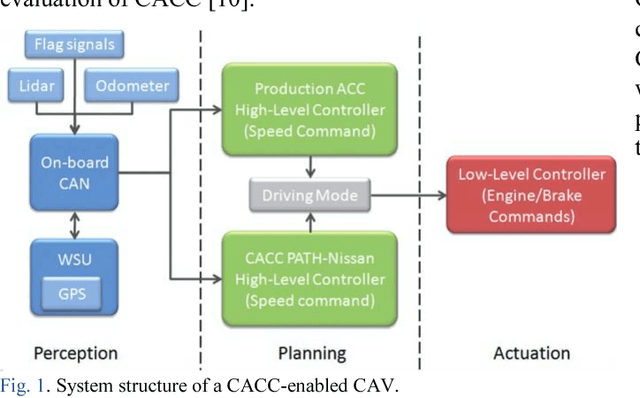

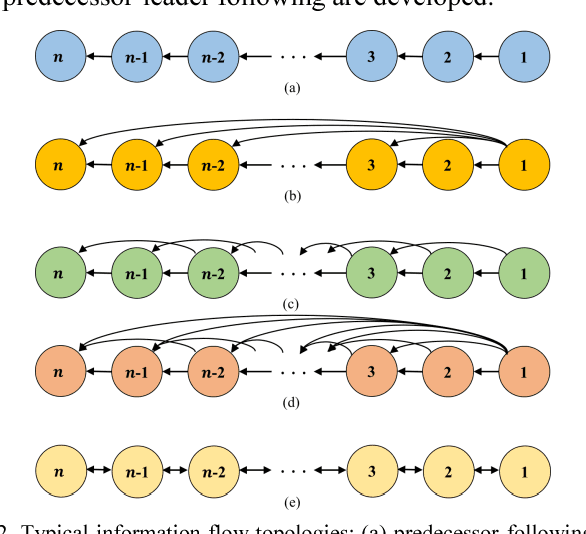

A Review on Cooperative Adaptive Cruise Control (CACC) Systems: Architectures, Controls, and Applications

Sep 08, 2018

Abstract:Connected and automated vehicles (CAVs) have the potential to address the safety, mobility, and sustainability issues of our current transportation systems. Cooperative adaptive cruise control (CACC), for example, is one promising technology that allows CAVs to be driven in a cooperative manner and introduces system-wide benefits. In this paper, we review the progress achieved by researchers worldwide regarding different aspects of CACC systems. Literature on CACC system architectures is reviewed, which explains how this system works from a higher level. Different control methodologies and their related issues are reviewed to introduce CACC systems from a lower level. Applications of CACC technology are demonstrated with detailed literature, which draws an overall landscape of CACC, points out current opportunities and challenges, and anticipates its development in the near future.
