Saswat Priyadarshi Nayak

Dual LiDAR-Based Traffic Movement Count Estimation at a Signalized Intersection: Deployment, Data Collection, and Preliminary Analysis

Jul 17, 2025

Abstract: Traffic Movement Count (TMC) at intersections is crucial for optimizing signal timings, assessing the performance of existing traffic control measures, and proposing efficient lane configurations to minimize delays, reduce congestion, and promote safety. Traditionally, methods such as manual counting, loop detectors, pneumatic road tubes, and camera-based recognition have been used for TMC estimation. Although generally reliable, camera-based TMC estimation is prone to inaccuracies in poor lighting, such as during harsh weather and at night. In contrast, Light Detection and Ranging (LiDAR) technology has been gaining popularity due to reduced costs and its expanding use in 3D object detection, tracking, and related applications. This paper presents the authors' endeavor to develop, deploy, and evaluate a dual-LiDAR system at an intersection in the city of Rialto, California, for TMC estimation. The 3D bounding box detections from the two LiDARs are used to classify vehicle counts based on traffic directions, vehicle movements, and vehicle classes. This work discusses the estimated TMC results and provides insights into the observed trends and irregularities. Potential improvements are also discussed that could enhance not only TMC estimation, but also trajectory forecasting and intent prediction at intersections.
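As a rough illustration of how tracked detections could be tallied by direction, movement, and vehicle class, the sketch below assigns each track an entry and exit leg from its first and last positions. The `Track` type, the four-leg layout centered at the origin, and the quadrant-based leg assignment are all assumptions made for this example; the abstract does not describe the authors' actual procedure.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical four-leg intersection, legs listed clockwise.
LEGS = ["N", "E", "S", "W"]
# A vehicle entering from the north leg is travelling southbound, and so on.
DIRECTION = {"N": "SB", "E": "WB", "S": "NB", "W": "EB"}
# Clockwise offset from entry leg to exit leg -> movement type.
MOVEMENT = {0: "u-turn", 1: "left", 2: "through", 3: "right"}


@dataclass
class Track:
    """Hypothetical tracked vehicle: class label plus (x, y) centers in metres.

    Assumes the intersection center is the origin, +x is east, +y is north.
    """
    vehicle_class: str
    points: List[Tuple[float, float]]


def leg_of(point: Tuple[float, float]) -> str:
    """Assign a point to the leg whose axis it lies closest to."""
    x, y = point
    if abs(y) >= abs(x):
        return "N" if y > 0 else "S"
    return "E" if x > 0 else "W"


def classify(track: Track) -> Tuple[str, str, str]:
    """Return (direction, movement, vehicle_class) for one track."""
    entry = leg_of(track.points[0])
    exit_ = leg_of(track.points[-1])
    offset = (LEGS.index(exit_) - LEGS.index(entry)) % 4
    return DIRECTION[entry], MOVEMENT[offset], track.vehicle_class


def count_movements(tracks: List[Track]) -> Counter:
    """Tally tracks by (direction, movement, vehicle_class)."""
    return Counter(classify(t) for t in tracks)
```

A real deployment would more likely use surveyed entry and exit zones than quadrants, and would need to handle fragmented or partial tracks, which this sketch ignores.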

The Role of Integrity Monitoring in Connected and Automated Vehicles: Current State-of-Practice and Future Directions

Feb 07, 2025

Abstract: Connected and Automated Vehicle (CAV) research has gained traction in the last decade due to significant advancements in perception, navigation, communication, and control functions. Accurate and reliable position information is needed to meet the requirements of CAV applications, especially when safety is concerned. With the advent of various perception sensors (e.g., camera and LiDAR), the vehicular positioning system has improved in both accuracy and robustness. Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) based cooperative positioning can improve the accuracy of the position estimates, but the integrity risks involved in multi-sensor fusion in a cooperative environment have not yet been fully explored. This paper reviews existing research in the field of positioning Integrity Monitoring (IM) and identifies various research gaps. Particular attention is paid to identifying research that highlights cooperative IM methods. This analysis helps pave the way for the development of new IM frameworks for cooperative positioning solutions in the future.

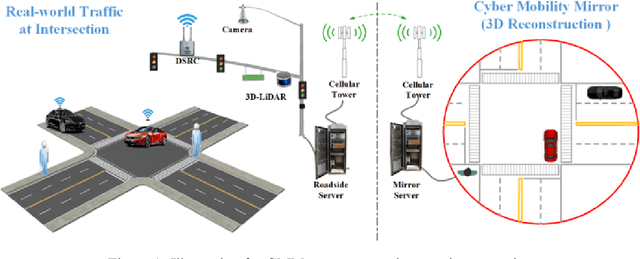

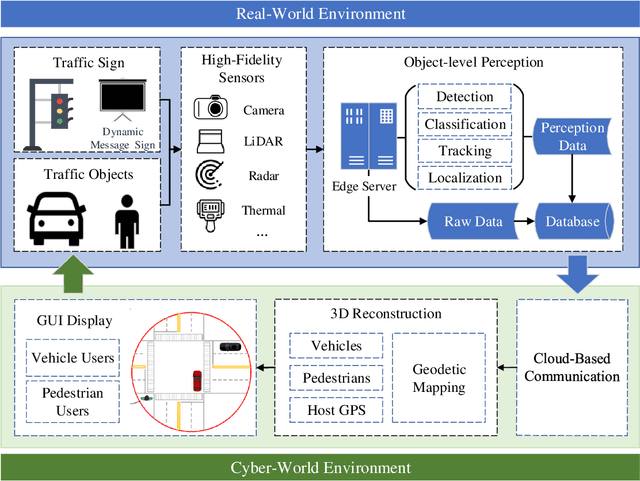

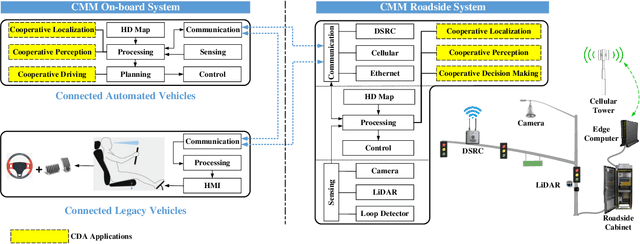

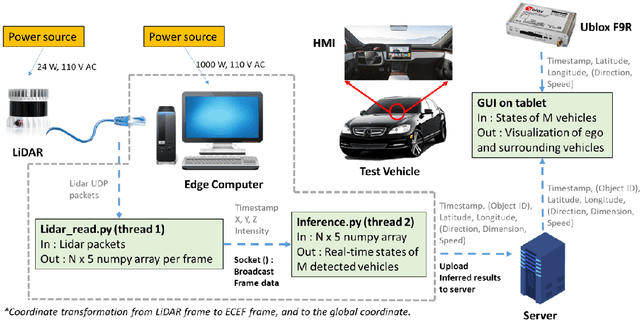

Cyber Mobility Mirror: A Deep Learning-based Real-World Object Perception Platform Using Roadside LiDAR

Apr 07, 2022

Abstract: Object perception plays a fundamental role in Cooperative Driving Automation (CDA), which is regarded as a revolutionary promoter for next-generation transportation systems. However, vehicle-based perception may suffer from limited sensing range and occlusion, as well as low penetration rates in connectivity. In this paper, we propose Cyber Mobility Mirror (CMM), a next-generation real-time traffic surveillance system for 3D object perception and reconstruction, to explore the potential of roadside sensors for enabling CDA in the real world. The CMM system consists of six main components: 1) the data pre-processor to retrieve and preprocess the raw data; 2) the roadside 3D object detector to generate 3D detection results; 3) the multi-object tracker to identify detected objects; 4) the global locator to map positioning information from the LiDAR coordinate frame to geographic coordinates using coordinate transformation; 5) the cloud-based communicator to transmit perception information from roadside sensors to equipped vehicles; and 6) the onboard advisor to reconstruct and display the real-time traffic conditions via a Graphical User Interface (GUI). In this study, a field-operational system is deployed at a real-world intersection, University Avenue and Iowa Avenue in Riverside, California, to assess the feasibility and performance of our CMM system. Results from field tests demonstrate that our CMM prototype system can provide satisfactory perception performance with 96.99% precision and 83.62% recall. High-fidelity real-time traffic conditions (at the object level) can be geo-localized with an average error of 0.14 m and displayed on the GUI of the equipped vehicle at a frequency of 3-4 Hz.
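To make the global-locator step more concrete, the sketch below shows one simple way a point in a roadside LiDAR frame could be mapped to latitude and longitude: rotate into a local east/north frame using the sensor's yaw, then apply a small-offset flat-earth approximation around the sensor's surveyed position. The function name, the planar 2D treatment, and the yaw convention are assumptions for illustration only; the abstract does not specify which transformation the CMM system uses.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS-84 equatorial radius, used as a sphere radius here


def lidar_to_geographic(x, y, sensor_lat, sensor_lon, yaw_deg):
    """Map a LiDAR-frame point (metres) to approximate (lat, lon) in degrees.

    Assumptions (hypothetical, for illustration):
      - the LiDAR is level, so only a planar rotation is needed;
      - yaw_deg is the counterclockwise angle from east to the LiDAR x-axis;
      - offsets are small enough for a flat-earth approximation.
    """
    yaw = math.radians(yaw_deg)
    # Rotate the LiDAR-frame offset into east/north components.
    east = x * math.cos(yaw) - y * math.sin(yaw)
    north = x * math.sin(yaw) + y * math.cos(yaw)
    # Convert metre offsets to angular offsets around the sensor position.
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(
        east / (EARTH_RADIUS_M * math.cos(math.radians(sensor_lat)))
    )
    return sensor_lat + dlat, sensor_lon + dlon
```

For a sensor covering a single intersection, the flat-earth error is far below the reported 0.14 m localization error, but a production system would more plausibly rely on a calibrated 3D transform and a geodesy library.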
