Guoyuan Wu

Dual LiDAR-Based Traffic Movement Count Estimation at a Signalized Intersection: Deployment, Data Collection, and Preliminary Analysis

Jul 17, 2025

Abstract: Traffic Movement Count (TMC) at intersections is crucial for optimizing signal timing, assessing the performance of existing traffic control measures, and proposing efficient lane configurations that minimize delays, reduce congestion, and promote safety. Traditionally, methods such as manual counting, loop detectors, pneumatic road tubes, and camera-based recognition have been used for TMC estimation. Although generally reliable, camera-based TMC estimation is prone to inaccuracies under poor lighting conditions, such as during harsh weather and at night. In contrast, Light Detection and Ranging (LiDAR) technology has gained popularity in recent years owing to reduced costs and its expanding use in 3D object detection, tracking, and related applications. This paper presents the authors' effort to develop, deploy, and evaluate a dual-LiDAR system at an intersection in the city of Rialto, California, for TMC estimation. The 3D bounding box detections from the two LiDARs are used to classify vehicle counts by traffic direction, vehicle movement, and vehicle class. This work discusses the estimated TMC results and provides insights into the observed trends and irregularities. Potential improvements are also discussed that could enhance not only TMC estimation but also trajectory forecasting and intent prediction at intersections.
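As a rough illustration of what counting by direction and movement involves, the sketch below turns tracked vehicle centroids into a small turning-movement table. It is not the authors' pipeline: the x-east/y-north frame, the use of the first and last track segments, and the 30° and 150° heading thresholds are all illustrative assumptions, and vehicle class is omitted.

```python
import math
from collections import Counter

def heading_deg(p, q):
    """Heading of the vector p -> q in degrees, counterclockwise from +x (east)."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0]))

def approach_bound(track):
    """Travel direction on entry ('EB', 'NB', 'WB', 'SB'), assuming x = east, y = north."""
    ang = heading_deg(track[0], track[1]) % 360.0
    return ["EB", "NB", "WB", "SB"][int(((ang + 45.0) % 360.0) // 90.0)]

def classify_movement(track, through_tol=30.0, uturn_min=150.0):
    """Label a track of (x, y) centroids as 'left', 'through', 'right', or 'u-turn'.

    Hypothetical rule: compare entry and exit headings. A counterclockwise
    (positive) change is a left turn in this x-east / y-north frame.
    """
    if len(track) < 3:
        raise ValueError("a track needs at least 3 points")
    delta = heading_deg(track[-2], track[-1]) - heading_deg(track[0], track[1])
    delta = (delta + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    if abs(delta) >= uturn_min:
        return "u-turn"
    if abs(delta) <= through_tol:
        return "through"
    return "left" if delta > 0 else "right"

def turning_movement_counts(tracks):
    """Count tracks per (approach, movement) pair, i.e. a basic TMC table."""
    return Counter((approach_bound(t), classify_movement(t)) for t in tracks)

tracks = [
    [(0, -20), (0, -10), (0, 0), (0, 10)],    # northbound, continues north
    [(0, -20), (0, -10), (-5, 0), (-15, 0)],  # northbound, exits westward
    [(-20, 0), (-10, 0), (0, -5), (0, -15)],  # eastbound, exits southward
]
print(turning_movement_counts(tracks))
```

Real LiDAR tracks are noisy, so a practical version would smooth headings over several frames or match entry and exit zones instead of relying on single segments.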

PDB: Not All Drivers Are the Same -- A Personalized Dataset for Understanding Driving Behavior

Mar 09, 2025

Abstract: Driving behavior is inherently personal, influenced by individual habits, decision-making styles, and physiological states. However, most existing datasets treat all drivers as homogeneous, overlooking driver-specific variability. To address this gap, we introduce the Personalized Driving Behavior (PDB) dataset, a multi-modal dataset designed to capture personalization in driving behavior under naturalistic driving conditions. Unlike conventional datasets, PDB minimizes external influences by maintaining consistent routes, vehicles, and lighting conditions across sessions. It includes data from a 128-line LiDAR, front-facing camera video, GNSS, a 9-axis IMU, CAN bus data (throttle, brake, steering angle), and driver-specific signals such as facial video and heart rate. The dataset features 12 participants, approximately 270,000 LiDAR frames, 1.6 million images, and 6.6 TB of raw sensor data. The processed trajectory dataset consists of 1,669 segments, each spanning 10 seconds and sampled at 0.2-second intervals. By explicitly capturing drivers' behavior, PDB serves as a unique resource for human factors analysis, driver identification, and personalized mobility applications, contributing to the development of human-centric intelligent transportation systems.
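The segment format (10-second windows at 0.2-second spacing) implies 50 samples per segment if each window is treated as half-open. A minimal sketch of producing such segments from an irregularly sampled signal, assuming linear interpolation and non-overlapping windows (neither is specified in the abstract):

```python
import bisect

DT = 0.2          # sampling interval in seconds, as stated in the abstract
SEG_STEPS = 50    # assumption: 10 s / 0.2 s = 50 samples per segment

def resample(times, values, dt=DT):
    """Linearly interpolate (times, values) onto a uniform grid starting at times[0].

    Returns a list of (t, value) pairs. For 2-D trajectories, call once for x and once for y.
    """
    if len(times) < 2 or len(times) != len(values):
        raise ValueError("need matching times/values with at least 2 samples")
    n = int(round((times[-1] - times[0]) / dt)) + 1
    out = []
    for k in range(n):
        t = times[0] + k * dt
        # Last original sample at or before t, clamped so that i + 1 is a valid index.
        i = min(max(bisect.bisect_right(times, t) - 1, 0), len(times) - 2)
        w = (t - times[i]) / (times[i + 1] - times[i])
        out.append((t, values[i] + w * (values[i + 1] - values[i])))
    return out

def segment(samples, steps=SEG_STEPS):
    """Split resampled samples into non-overlapping fixed-length windows; drop the remainder."""
    return [samples[s:s + steps] for s in range(0, len(samples) - steps + 1, steps)]
```

Whether the original segments overlap, or include both endpoints (51 samples), would need to be checked against the dataset documentation.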

Investigating Personalized Driving Behaviors in Dilemma Zones: Analysis and Prediction of Stop-or-Go Decisions

May 06, 2024

Abstract: Dilemma zones at signalized intersections present a common but unsolved challenge for both drivers and traffic operators. The onset of a yellow light prompts varied responses from different drivers: some may brake abruptly, compromising ride comfort, while others may accelerate, increasing the risk of red-light violations and potential safety hazards. Such diversity in drivers' stop-or-go decisions may result not only from surrounding traffic conditions but also from personalized driving behaviors. Identifying personalized driving behaviors and integrating them into advanced driver assistance systems (ADAS) to mitigate the dilemma zone problem is therefore an intriguing research question. In this study, we employ a game engine-based (i.e., CARLA-enabled) driving simulator to collect high-resolution vehicle trajectories, incoming traffic signal phase and timing information, and stop-or-go decisions from four subject drivers in various scenarios. This approach allows us to analyze personalized driving behaviors in dilemma zones and to develop a Personalized Transformer Encoder that predicts individual drivers' stop-or-go decisions. The results show that the Personalized Transformer Encoder improves the accuracy of predicting driver decisions in the dilemma zone by 3.7% to 12.6% compared to the Generic Transformer Encoder, and by 16.8% to 21.6% compared to a binary logistic regression model.
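For context, the classic kinematic definition of the (Type I) dilemma zone, usually traced to Gazis, Herman, and Maradudin, compares the distance a driver needs to stop with the distance from which the vehicle can still clear the intersection before the yellow ends. The sketch below encodes that textbook model, not the study's Personalized Transformer Encoder; the 1.0 s reaction time and 3.0 m/s² deceleration are assumed defaults, and going is assumed to happen at constant speed.

```python
def dilemma_zone(v, yellow, width, veh_len, reaction=1.0, decel=3.0):
    """Bounds (x_clear, x_stop), in metres upstream of the stop line, of the Type I dilemma zone.

    v: approach speed (m/s); yellow: yellow interval (s); width: intersection width (m);
    veh_len: vehicle length (m); reaction: perception-reaction time (s, assumed);
    decel: comfortable deceleration (m/s^2, assumed).
    """
    x_stop = v * reaction + v * v / (2.0 * decel)  # minimum distance needed to stop comfortably
    x_clear = v * yellow - (width + veh_len)       # maximum distance from which the vehicle clears in time
    return x_clear, x_stop

def feasible_actions(x, v, yellow, width, veh_len, reaction=1.0, decel=3.0):
    """Return (can_stop, can_go) for a vehicle x metres upstream of the stop line at yellow onset."""
    x_clear, x_stop = dilemma_zone(v, yellow, width, veh_len, reaction, decel)
    return x >= x_stop, x <= x_clear
```

With v = 20 m/s, a 3 s yellow, a 20 m intersection, and a 5 m vehicle, the vehicle can stop comfortably only from about 86.7 m or more and can clear only from 35 m or less, so at 50 m neither action is feasible. A fixed-parameter model like this treats all drivers alike, which is the limitation a personalized approach targets.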

Feature Corrective Transfer Learning: End-to-End Solutions to Object Detection in Non-Ideal Visual Conditions

Apr 19, 2024

Abstract: A significant challenge in object detection is system performance under non-ideal imaging conditions, such as rain, fog, low illumination, or raw Bayer images that lack image signal processor (ISP) processing. Our study introduces "Feature Corrective Transfer Learning", a novel approach that leverages transfer learning and a bespoke loss function to enable end-to-end object detection in these challenging scenarios without converting non-ideal images into their RGB counterparts. In our methodology, we first train a comprehensive model on a pristine RGB image dataset. Non-ideal images are then processed by comparing their feature maps against those of the initial ideal RGB model. This comparison employs the Extended Area Novel Structural Discrepancy Loss (EANSDL), a novel loss function designed to quantify similarities and integrate them into the detection loss. This approach refines the model's ability to detect objects across varying conditions through direct feature map correction, which is the essence of Feature Corrective Transfer Learning. Experimental validation on variants of the KITTI dataset demonstrates a significant improvement in mean Average Precision (mAP): a 3.8-8.1% relative improvement in detection under non-ideal conditions compared to the baseline model, and a narrowed performance gap of within 1.3% of the mAP@[0.5:0.95] achieved under ideal conditions by the standard Faster R-CNN algorithm.
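The training objective described above combines a detection loss with a term that penalizes differences between feature maps computed from non-ideal inputs and those of the ideal RGB model. A minimal sketch of that combination, using plain mean squared error as a stand-in because EANSDL's exact form is not given in the abstract; the function names, the per-level summation, the weight, and the assumption that the teacher sees the paired RGB image are all hypothetical:

```python
def mse_discrepancy(feat_a, feat_b):
    """Mean squared difference between two equally shaped C x H x W feature maps (nested lists).

    Stand-in only: this is NOT EANSDL, whose definition is not given here.
    """
    flat_a = [v for ch in feat_a for row in ch for v in row]
    flat_b = [v for ch in feat_b for row in ch for v in row]
    if len(flat_a) != len(flat_b) or not flat_a:
        raise ValueError("feature maps must be non-empty and the same size")
    return sum((a - b) ** 2 for a, b in zip(flat_a, flat_b)) / len(flat_a)

def corrective_loss(det_loss, student_feats, teacher_feats, weight=1.0):
    """Detection loss plus a weighted feature discrepancy summed over feature levels.

    student_feats: maps from the model being trained, computed on non-ideal inputs.
    teacher_feats: maps from the frozen ideal-RGB model (assumed: on the paired RGB image);
    in a real framework these would be detached from the gradient graph.
    """
    if len(student_feats) != len(teacher_feats):
        raise ValueError("need one teacher map per student map")
    return det_loss + weight * sum(
        mse_discrepancy(s, t) for s, t in zip(student_feats, teacher_feats))
```

Keeping the teacher frozen means the correction term only pulls the non-ideal branch toward the ideal features, never the reverse.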

KI-GAN: Knowledge-Informed Generative Adversarial Networks for Enhanced Multi-Vehicle Trajectory Forecasting at Signalized Intersections

Apr 19, 2024

Abstract: Reliable prediction of vehicle trajectories at signalized intersections is crucial for urban traffic management and autonomous driving systems. However, it presents unique challenges due to the complex roadway layout at intersections, the involvement of traffic signal controls, and interactions among different types of road users. To address these issues, we present a novel model called the Knowledge-Informed Generative Adversarial Network (KI-GAN), which integrates both traffic signal information and multi-vehicle interactions to predict vehicle trajectories accurately. Additionally, we propose a specialized attention pooling method that accounts for vehicle orientation and proximity at intersections. On the SinD dataset, our KI-GAN model achieves an Average Displacement Error (ADE) of 0.05 and a Final Displacement Error (FDE) of 0.12 for a 6-second observation and 6-second prediction cycle. When the prediction window is extended to 9 seconds, the ADE and FDE values are 0.11 and 0.26, respectively. These results demonstrate the effectiveness of the proposed KI-GAN model for vehicle trajectory prediction in complex scenarios at signalized intersections and represent a significant advancement in the field.
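ADE and FDE, the metrics reported above, are conventionally the mean Euclidean distance between predicted and ground-truth positions over the prediction horizon and the distance at the final step, respectively. A minimal sketch for the single- and multi-agent cases:

```python
import math

def ade_fde(pred, truth):
    """ADE and FDE for one agent: pred and truth are equal-length lists of (x, y) positions."""
    if not pred or len(pred) != len(truth):
        raise ValueError("trajectories must be non-empty and the same length")
    d = [math.hypot(px - tx, py - ty) for (px, py), (tx, ty) in zip(pred, truth)]
    return sum(d) / len(d), d[-1]

def ade_fde_multi(preds, truths):
    """Average per-agent ADE and FDE over all agents in a scene."""
    pairs = [ade_fde(p, t) for p, t in zip(preds, truths)]
    return (sum(a for a, _ in pairs) / len(pairs),
            sum(f for _, f in pairs) / len(pairs))
```

The abstract does not state the units of the reported values or whether they are best-of-K scores, which GAN-based forecasters commonly report; this sketch evaluates one deterministic prediction per agent.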

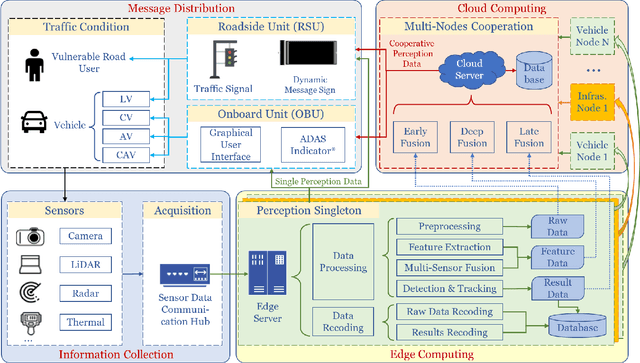

Cooperverse: A Mobile-Edge-Cloud Framework for Universal Cooperative Perception with Mixed Connectivity and Automation

Feb 06, 2023

Abstract: Cooperative perception (CP) is attracting increasing attention and is regarded as the core foundation for cooperative driving automation, a potential key solution to the safety, mobility, and sustainability issues of contemporary transportation systems. However, research on CP is still at an early stage, and a systematic problem formulation of CP, which should serve as the essential guideline for designing CP systems under real-world conditions, is still missing. In this paper, we formulate a universal CP system as an optimization problem and propose a mobile-edge-cloud framework called Cooperverse. This system addresses CP in an environment with mixed connectivity and automation. A Dynamic Feature Sharing (DFS) methodology is introduced to support this CP system under certain constraints, and a Random Priority Filtering (RPF) method is proposed to conduct DFS with high performance. Experiments conducted on a high-fidelity CP platform show that the Cooperverse framework is effective for dynamic node engagement, that the proposed DFS methodology improves system CP performance by 14.5%, and that the RPF method reduces the communication cost for mobile nodes by 90% with only a 1.7% drop in average precision.
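The abstract does not describe how Random Priority Filtering works. Purely as a hypothetical reading of the name, the sketch below assigns each shareable feature chunk a random priority and keeps chunks in that order while they fit a per-node byte budget; the chunk granularity and the budget rule are assumptions, not the Cooperverse method.

```python
import random

def random_priority_filter(chunks, budget_bytes, rng=None):
    """Select feature chunks to transmit under a byte budget.

    chunks: list of (chunk_id, size_bytes). Hypothetical reading of RPF: give each
    chunk a random priority, then greedily keep chunks in priority order while
    they still fit in the remaining budget.
    """
    rng = rng or random.Random()
    ranked = sorted(chunks, key=lambda _chunk: rng.random())  # random priority per chunk
    kept, used = [], 0
    for chunk_id, size in ranked:
        if used + size <= budget_bytes:
            kept.append(chunk_id)
            used += size
    return kept
```

Under this reading, the fraction of bytes actually sent is the sum of kept chunk sizes divided by the total, which is the kind of quantity a communication-cost reduction would be measured against.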

VINet: Lightweight, Scalable, and Heterogeneous Cooperative Perception for 3D Object Detection

Dec 14, 2022

Abstract: Drawing on the latest advances in Artificial Intelligence (AI), the computer vision community is witnessing an unprecedented evolution in all kinds of perception tasks, particularly object detection. Based on multiple spatially separated perception nodes, Cooperative Perception (CP) has emerged to significantly advance the perception capabilities of automated driving. However, current cooperative object detection methods focus mainly on ego-vehicle efficiency without considering the practical issue of system-wide costs. In this paper, we introduce VINet, a unified deep learning-based CP network for scalable, lightweight, and heterogeneous cooperative 3D object detection. VINet is the first CP method designed from the standpoint of large-scale system-level implementation and can be divided into three main phases: 1) Global Pre-Processing and Lightweight Feature Extraction, which prepare the data in a global format and extract features for cooperation in a lightweight manner; 2) Two-Stream Fusion, which fuses the features from scalable and heterogeneous perception nodes; and 3) Central Feature Backbone and 3D Detection Head, which further process the fused features and generate cooperative detection results. A cooperative perception platform is designed and developed for CP dataset acquisition, and several baselines are compared in the experiments. The experimental analysis shows that VINet achieves remarkable improvements for pedestrians and cars with 2x lower system-wide computational cost and 12x lower system-wide communication cost.
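Fusing data from spatially separated nodes first requires expressing each node's data in a common frame. A minimal sketch of that alignment step for point clouds, assuming each node's pose is known as a position plus yaw (roll and pitch ignored); how VINet's Global Pre-Processing actually performs this is not described in the abstract:

```python
import math

def to_global(points, pose):
    """Map (x, y, z) points from a node's local sensor frame into a shared global frame.

    pose = (tx, ty, tz, yaw): the node's position in the global frame and its heading
    in radians. Only yaw is modelled; roll and pitch are assumed to be zero.
    """
    tx, ty, tz, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]

def merge_nodes(node_points, node_poses):
    """Concatenate point clouds from several nodes after aligning them to the global frame."""
    merged = []
    for pts, pose in zip(node_points, node_poses):
        merged.extend(to_global(pts, pose))
    return merged
```

Once every node shares a frame, features extracted per node can be placed on the same spatial grid, which is what makes cell-by-cell fusion across heterogeneous nodes possible.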

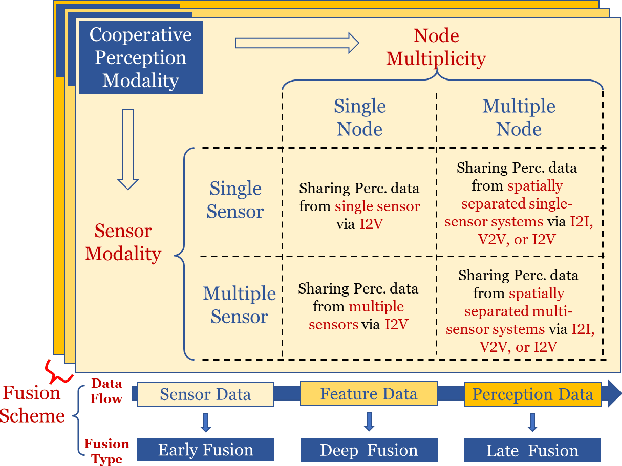

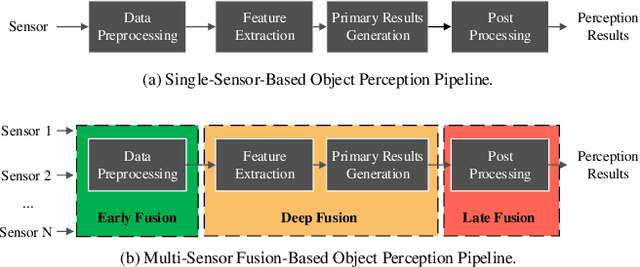

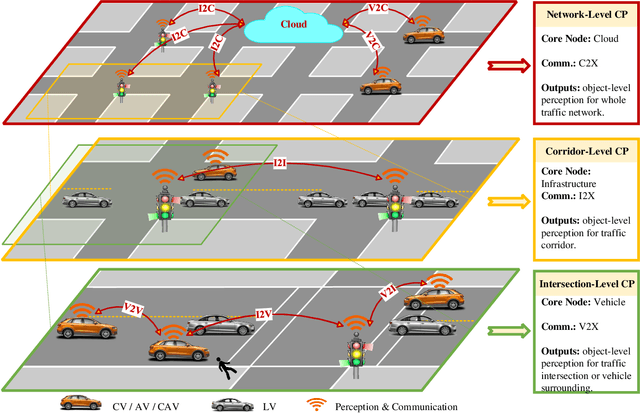

A Survey and Framework of Cooperative Perception: From Heterogeneous Singleton to Hierarchical Cooperation

Aug 22, 2022

Abstract: Perceiving the environment is one of the most fundamental keys to enabling Cooperative Driving Automation (CDA), which is regarded as a revolutionary solution to the safety, mobility, and sustainability issues of contemporary transportation systems. Although an unprecedented evolution is now happening in computer vision for object perception, state-of-the-art perception methods still struggle with sophisticated real-world traffic environments due to the inevitable physical occlusion and limited receptive field of single-vehicle systems. Based on multiple spatially separated perception nodes, Cooperative Perception (CP) has emerged to break through this perception bottleneck for driving automation. In this paper, we comprehensively review and analyze the research progress on CP and, to the best of our knowledge, propose a unified CP framework for the first time. Architectures and a taxonomy of CP systems based on different types of sensors are reviewed to give a high-level description of the workflow and the different structures of CP systems. Node structure, sensor modality, and fusion schemes are reviewed and analyzed with comprehensive literature to provide detailed explanations of specific methods. A Hierarchical CP framework is proposed, followed by a review of existing datasets and simulators to sketch an overall landscape of CP. Finally, a discussion highlights current opportunities, open challenges, and anticipated future trends.
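Among the fusion schemes such a survey covers, late fusion is the simplest to illustrate: each node shares its own detections, and duplicates of the same object are merged. A minimal sketch using greedy non-maximum suppression over axis-aligned bird's-eye-view boxes, assuming all boxes are already in one global frame; real systems typically use rotated 3D boxes, and the 0.5 IoU threshold is an assumed default:

```python
def box_area(b):
    """Area of an axis-aligned box (x1, y1, x2, y2)."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])

def iou(a, b):
    """Intersection over union of two axis-aligned BEV boxes (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0

def late_fusion(per_node_dets, iou_thresh=0.5):
    """Merge (box, score) detections from several nodes via greedy NMS."""
    pooled = sorted((d for dets in per_node_dets for d in dets),
                    key=lambda d: d[1], reverse=True)
    kept = []
    for box, score in pooled:
        # Keep a detection only if it does not overlap a higher-scoring kept one.
        if all(iou(box, k_box) < iou_thresh for k_box, _ in kept):
            kept.append((box, score))
    return kept
```

Late fusion is cheap to transmit (only boxes), but it cannot recover objects that no single node detects on its own, which is the motivation for the earlier-stage and feature-level schemes the survey also reviews.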

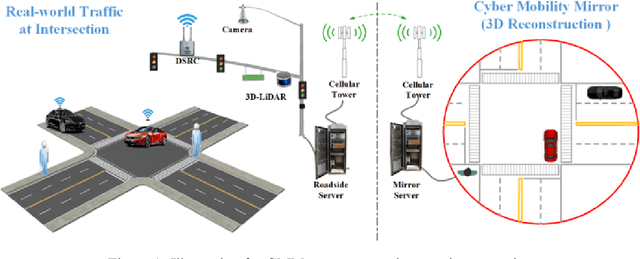

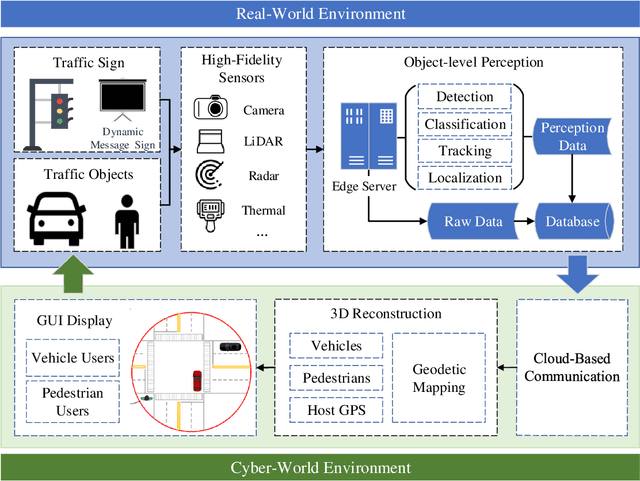

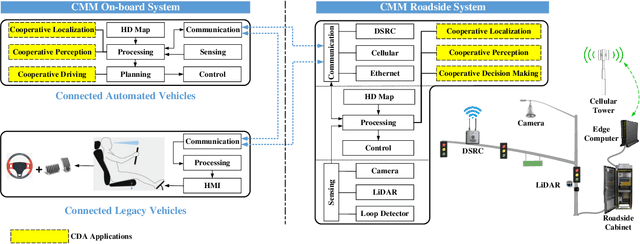

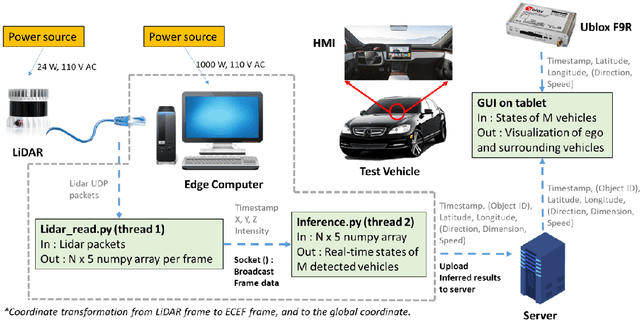

Cyber Mobility Mirror: A Deep Learning-based Real-World Object Perception Platform Using Roadside LiDAR

Apr 07, 2022

Abstract: Object perception plays a fundamental role in Cooperative Driving Automation (CDA), which is regarded as a revolutionary enabler of next-generation transportation systems. However, vehicle-based perception may suffer from limited sensing range, occlusion, and low penetration rates of connectivity. In this paper, we propose Cyber Mobility Mirror (CMM), a next-generation real-time traffic surveillance system for 3D object perception and reconstruction, to explore the potential of roadside sensors for enabling CDA in the real world. The CMM system consists of six main components: 1) the data pre-processor, which retrieves and preprocesses the raw data; 2) the roadside 3D object detector, which generates 3D detection results; 3) the multi-object tracker, which identifies and tracks detected objects; 4) the global locator, which maps positioning information from the LiDAR coordinate system to geographic coordinates using coordinate transformation; 5) the cloud-based communicator, which transmits perception information from roadside sensors to equipped vehicles; and 6) the onboard advisor, which reconstructs and displays real-time traffic conditions via a Graphical User Interface (GUI). In this study, a field-operational system is deployed at a real-world intersection, University Avenue and Iowa Avenue in Riverside, California, to assess the feasibility and performance of our CMM system. Results from field tests demonstrate that our CMM prototype system provides satisfactory perception performance with 96.99% precision and 83.62% recall. High-fidelity real-time traffic conditions (at the object level) can be geo-localized with an average error of 0.14 m and displayed on the GUI of the equipped vehicle at a frequency of 3-4 Hz.
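The global locator maps LiDAR coordinates to geographic coordinates. The exact transformation used in CMM is not given in the abstract; a minimal sketch, assuming a surveyed LiDAR origin, a known yaw of the LiDAR x-axis relative to local east, and a flat-earth (local tangent plane) approximation that is only reasonable close to the origin:

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS-84 semi-major axis

def lidar_to_geo(x, y, origin_lat, origin_lon, yaw):
    """Approximate (lat, lon) in degrees of a point at (x, y) metres in the LiDAR frame.

    yaw: counterclockwise rotation (radians) from local east to the LiDAR x-axis.
    Height (z) is ignored; this is a small-offset approximation, not a full geodetic transform.
    """
    east = math.cos(yaw) * x - math.sin(yaw) * y
    north = math.sin(yaw) * x + math.cos(yaw) * y
    lat = origin_lat + math.degrees(north / EARTH_RADIUS_M)
    lon = origin_lon + math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(origin_lat))))
    return lat, lon
```

For intersection-scale distances the flat-earth error is small compared with detection noise, but a production system would typically use a proper geodetic library and a calibrated sensor pose.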

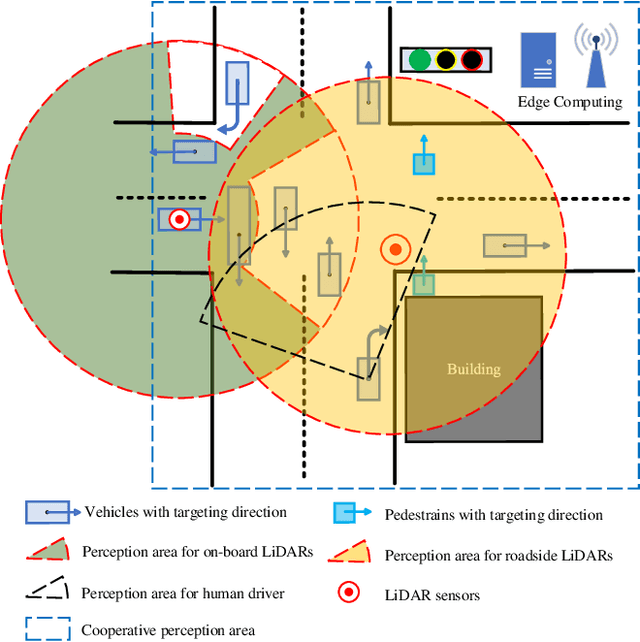

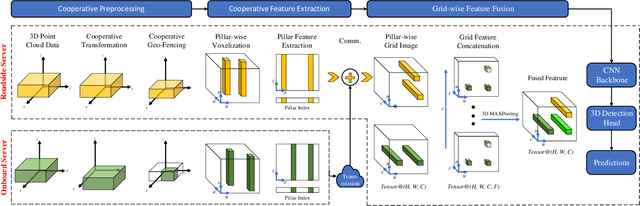

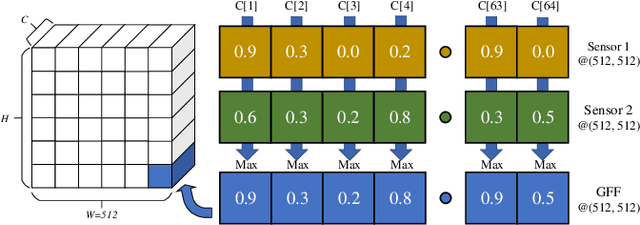

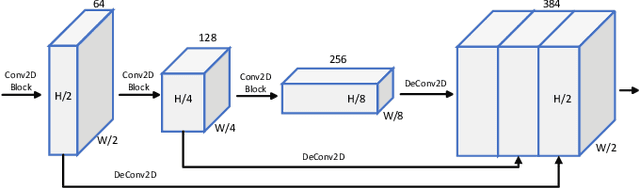

PillarGrid: Deep Learning-based Cooperative Perception for 3D Object Detection from Onboard-Roadside LiDAR

Mar 19, 2022

Abstract: 3D object detection plays a fundamental role in enabling autonomous driving, which is regarded as a key to overcoming the bottlenecks of contemporary transportation systems in terms of safety, mobility, and sustainability. Most state-of-the-art (SOTA) methods for object detection from point clouds are developed for a single onboard LiDAR, whose performance is inevitably limited by range and occlusion, especially in dense traffic scenarios. In this paper, we propose PillarGrid, a novel cooperative perception method that fuses information from multiple 3D LiDARs (both onboard and roadside) to enhance situational awareness for connected and automated vehicles (CAVs). PillarGrid consists of four main phases: 1) cooperative preprocessing of point clouds, 2) pillar-wise voxelization and feature extraction, 3) grid-wise deep fusion of features from multiple sensors, and 4) convolutional neural network (CNN)-based augmented 3D object detection. A novel cooperative perception platform is developed for model training and testing. Extensive experiments show that PillarGrid outperforms SOTA single-LiDAR-based 3D object detection methods by a large margin in both accuracy and range.
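Phases 2 and 3 above can be illustrated with a toy version: points from each LiDAR (assumed already in a shared frame) are grouped into vertical pillars on a bird's-eye-view grid, each pillar gets a feature, and the two grids are fused cell by cell. PillarGrid learns pillar features with a neural network and fuses them with a learned module; the hand-crafted (point count, max height) feature and the element-wise max below are stand-ins, and the grid extent and 0.5 m cell size are assumed.

```python
from collections import defaultdict

def pillarize(points, cell=0.5, x_range=(0.0, 50.0), y_range=(-25.0, 25.0)):
    """Group (x, y, z) points into vertical pillars on a BEV grid; returns {(i, j): [points]}."""
    pillars = defaultdict(list)
    for x, y, z in points:
        if x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]:
            i = int((x - x_range[0]) // cell)
            j = int((y - y_range[0]) // cell)
            pillars[(i, j)].append((x, y, z))
    return pillars

def pillar_features(pillars):
    """Toy per-pillar feature: (point count, max height). A learned encoder would replace this."""
    return {k: (len(v), max(p[2] for p in v)) for k, v in pillars.items()}

def grid_max_fusion(grid_a, grid_b):
    """Fuse two BEV feature grids cell by cell with an element-wise max."""
    fused = dict(grid_a)
    for k, f in grid_b.items():
        fused[k] = tuple(max(u, v) for u, v in zip(fused[k], f)) if k in fused else f
    return fused

onboard = [(1.0, 0.1, 0.5), (1.2, 0.2, 1.5), (10.0, 0.0, 0.3)]
roadside = [(1.1, 0.3, 2.0), (20.0, 5.0, 1.0)]
fused = grid_max_fusion(pillar_features(pillarize(onboard)),
                        pillar_features(pillarize(roadside)))
print(fused)
```

Element-wise max keeps the strongest evidence from either sensor in each cell, so an object seen only by the roadside LiDAR still appears in the fused grid; both sensors must use the same grid definition for the cells to line up.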
