Ludovic Righetti

NYU Tandon School of Engineering

Learning to Explore in Motion and Interaction Tasks

Aug 10, 2019

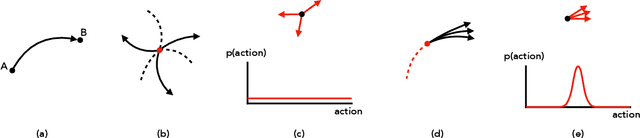

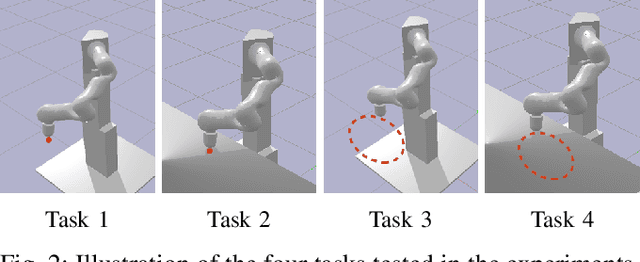

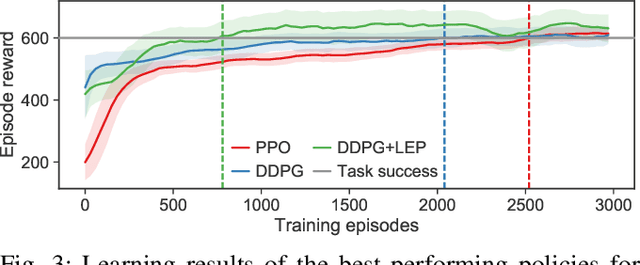

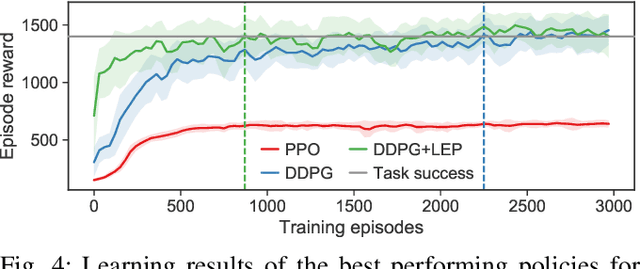

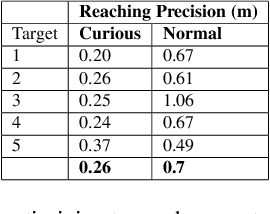

Abstract: Model-free reinforcement learning suffers from the high sampling complexity inherent to robotic manipulation or locomotion tasks. Most successful approaches typically use random sampling strategies, which lead to slow policy convergence. In this paper, we present a novel approach for efficient exploration that leverages previously learned tasks. We exploit the fact that the same system is used across many tasks and build a generative model for exploration based on data from previously solved tasks to improve learning new tasks. The approach also enables continuous learning of improved exploration strategies as novel tasks are learned. Extensive simulations on a robot manipulator performing a variety of motion and contact interaction tasks demonstrate the capabilities of the approach. In particular, our experiments suggest that the exploration strategy can more than double learning speed, especially when rewards are sparse. Moreover, the algorithm is robust to task variations and parameter tuning, making it beneficial for complex robotic problems.

Learning Variable Impedance Control for Contact Sensitive Tasks

Jul 17, 2019

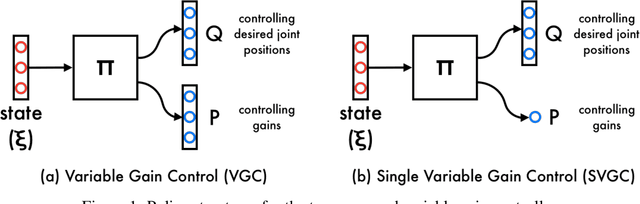

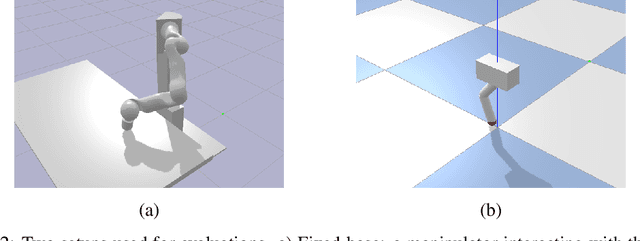

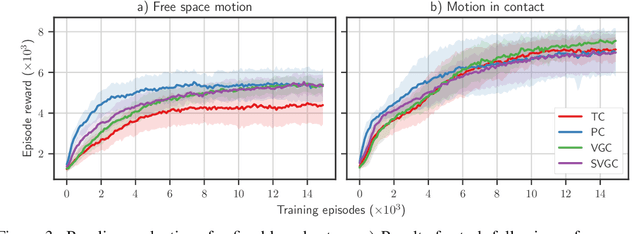

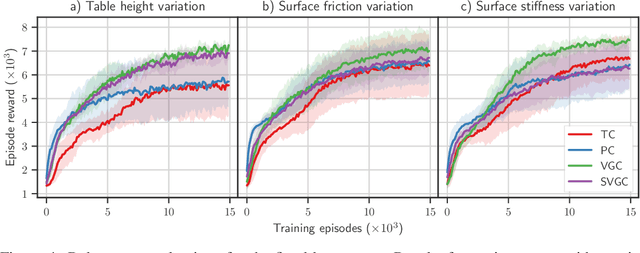

Abstract: Reinforcement learning algorithms have shown great success in solving different problems ranging from playing video games to robotics. However, they struggle to solve delicate robotic problems, especially those involving contact interactions. Though in principle a policy outputting joint torques should be able to learn these tasks, in practice such policies have difficulty solving the problem robustly without any structure in the action space. In this paper, we investigate how the choice of action space can give robust performance in the presence of contact uncertainties. We propose to learn a policy that outputs impedance and desired position in joint space as a function of system states without imposing any other structure on the problem. We compare the performance of this approach to torque and position control policies under different contact uncertainties. Extensive simulation results on two different systems, a hopper (floating-base) with intermittent contacts and a manipulator (fixed-base) wiping a table, show that our proposed approach outperforms policies outputting torque or position in terms of both learning rate and robustness to environment uncertainty.
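To make the action space described above concrete, here is a minimal sketch of how a policy output containing desired joint positions and impedance gains could be turned into joint torques. The function names, the softplus parametrization of the stiffness, and the critical-damping rule are assumptions made for illustration; the abstract does not specify how the paper parametrizes or bounds the gains.

```python
import numpy as np


def impedance_torque(q, qd, q_des, stiffness, damping):
    """Joint-space impedance law: tau = K * (q_des - q) - D * qd (per joint)."""
    q, qd, q_des = (np.asarray(x, dtype=float) for x in (q, qd, q_des))
    stiffness = np.asarray(stiffness, dtype=float)
    damping = np.asarray(damping, dtype=float)
    return stiffness * (q_des - q) - damping * qd


def split_policy_output(action, n_joints):
    """Split a raw action vector into desired positions and positive gains.

    Hypothetical layout: the first n_joints entries are desired positions,
    the next n_joints entries are unconstrained stiffness parameters.
    """
    action = np.asarray(action, dtype=float)
    q_des = action[:n_joints]
    # Softplus keeps the stiffness strictly positive (an assumption).
    stiffness = np.logaddexp(0.0, action[n_joints:2 * n_joints])
    # Damping tied to stiffness as if each joint had unit inertia (an assumption).
    damping = 2.0 * np.sqrt(stiffness)
    return q_des, stiffness, damping
```

A torque policy would output `tau` directly and a position policy would output only `q_des` with fixed gains; in this sketch the policy chooses both the target and how stiffly to track it at every step.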

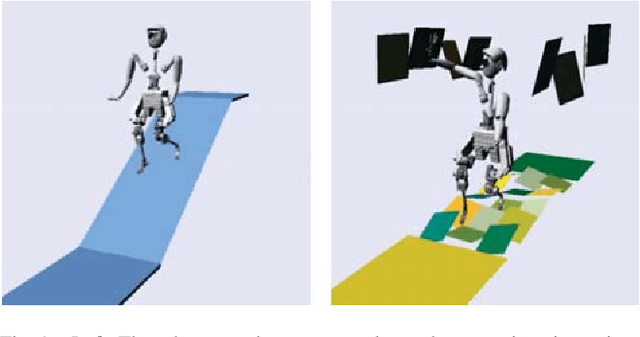

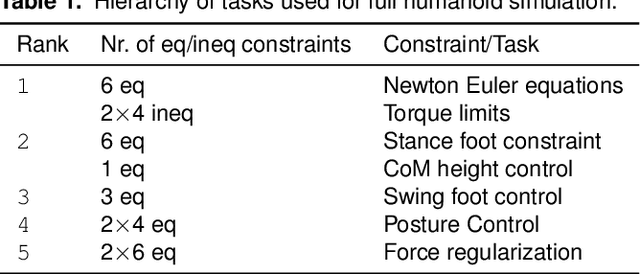

Robust Humanoid Locomotion Using Trajectory Optimization and Sample-Efficient Learning

Jul 10, 2019

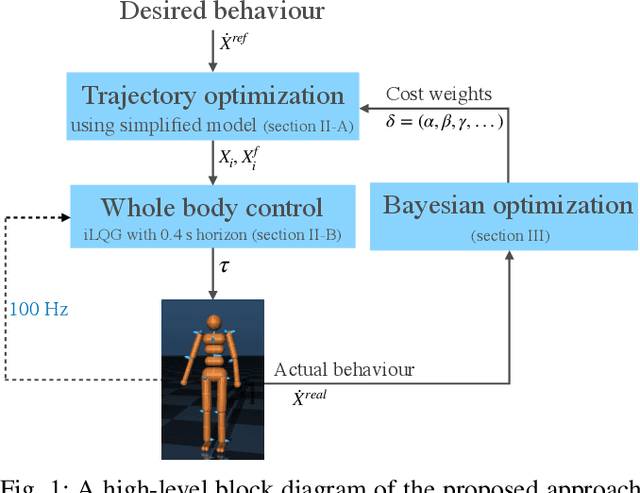

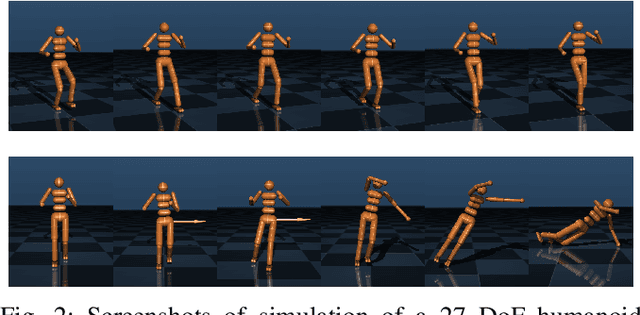

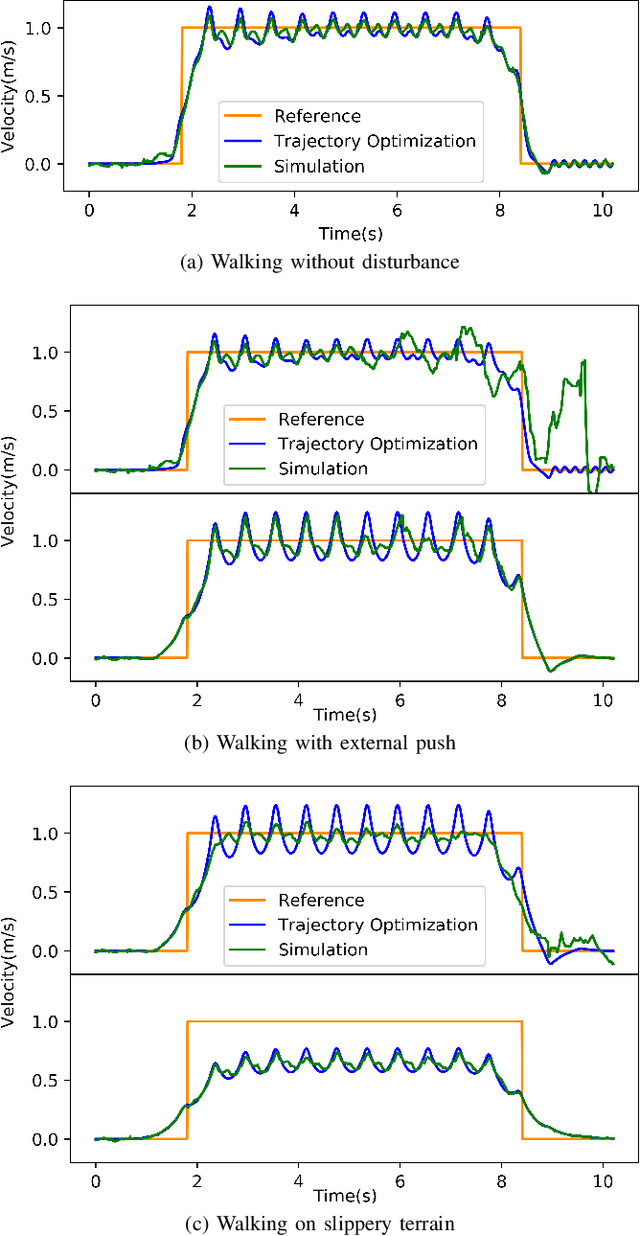

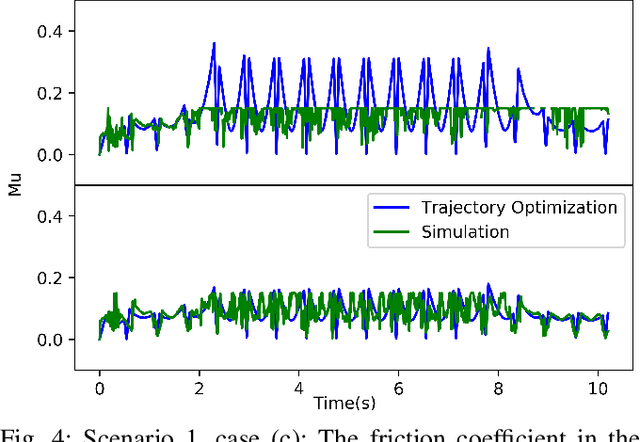

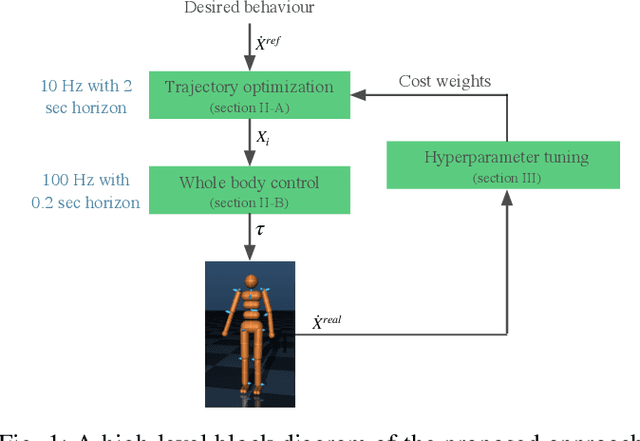

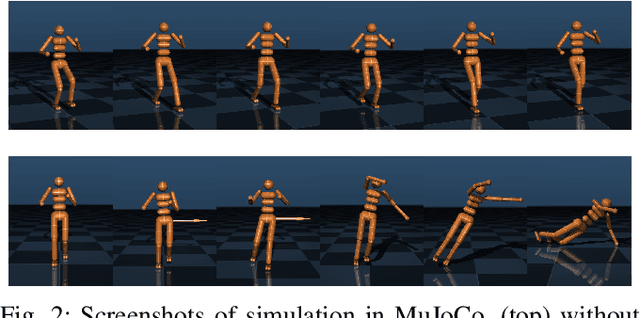

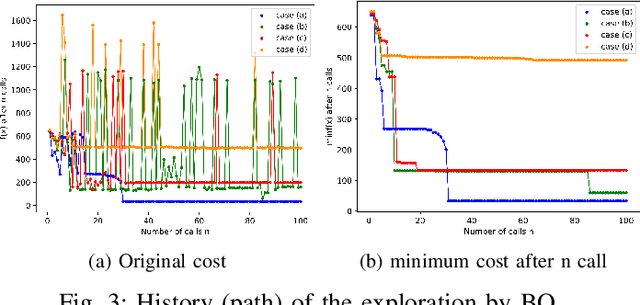

Abstract: Trajectory optimization (TO) is one of the most powerful tools for generating feasible motions for humanoid robots. However, including uncertainties and stochasticity in the TO problem to generate robust motions can easily lead to intractable problems. Furthermore, since the models used in TO always have some level of abstraction, it can be hard to find a realistic set of uncertainties in the model space. In this paper, we leverage a sample-efficient learning technique (Bayesian optimization) to robustify TO for humanoid locomotion. The main idea is to use data from full-body simulations to make the TO stage robust by tuning the cost weights. To this end, we split the TO problem into two stages. The first stage solves a convex optimization problem for generating center of mass (CoM) trajectories based on simplified linear dynamics. The second stage employs iterative Linear-Quadratic Gaussian (iLQG) as a whole-body controller to generate full-body control inputs. We then use Bayesian optimization to find the first-stage cost weights that yield robust performance in simulation or experiment in the presence of different disturbances and uncertainties. The results show that the proposed approach is able to generate robust motions for different sets of disturbances and uncertainties.
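The outer loop described above, in which Bayesian optimization proposes cost weights and each proposal is scored by a simulation, can be sketched as follows. This is a toy illustration under stated assumptions, not the paper's implementation: there is a single scalar weight in [0, 1], the `objective` callable is a stand-in for running the full-body simulation and returning a cost, and the Gaussian process (RBF kernel, unit prior variance) and expected-improvement acquisition are generic textbook choices. All names are hypothetical.

```python
import numpy as np
from math import erf, pi, sqrt


def rbf(a, b, length=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)


def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """Posterior mean and std of a zero-mean GP at the query points."""
    K = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    Ks = rbf(x_train, x_query)
    mean = Ks.T @ np.linalg.solve(K, y_train)
    var = 1.0 - np.sum(Ks * np.linalg.solve(K, Ks), axis=0)
    return mean, np.sqrt(np.maximum(var, 1e-12))


def expected_improvement(mean, std, best):
    """Expected improvement over the best observed cost (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.vectorize(erf)(z / sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / sqrt(2.0 * pi)
    return (best - mean) * cdf + std * pdf


def bayes_opt(objective, n_init=3, n_iter=10, seed=0):
    """Minimize objective(w) over w in [0, 1] with a GP surrogate."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)        # candidate weights
    x = rng.uniform(0.0, 1.0, n_init)        # initial random weights
    y = np.array([objective(w) for w in x])  # one "simulation" per weight
    for _ in range(n_iter):
        mean, std = gp_posterior(x, y, grid)
        w_next = grid[np.argmax(expected_improvement(mean, std, y.min()))]
        x = np.append(x, w_next)
        y = np.append(y, objective(w_next))
    return float(x[np.argmin(y)]), float(y.min())
```

For example, `bayes_opt(lambda w: (w - 0.3) ** 2)` runs thirteen evaluations of the toy cost and returns the best weight found together with its cost. In the setting of the abstract, each evaluation would instead run the two-stage TO with the proposed weights and score the resulting motion under disturbances, which is why a sample-efficient search matters.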

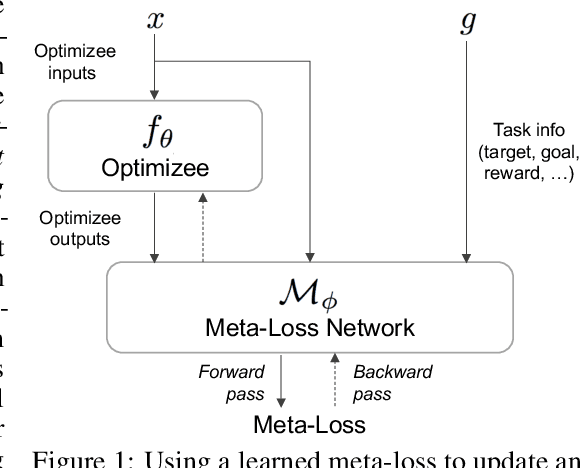

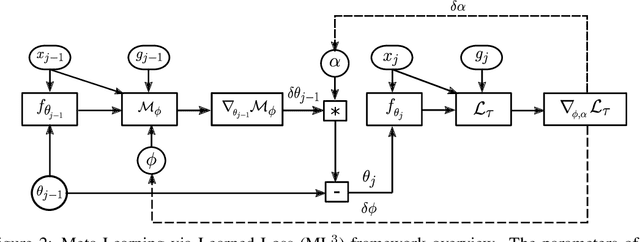

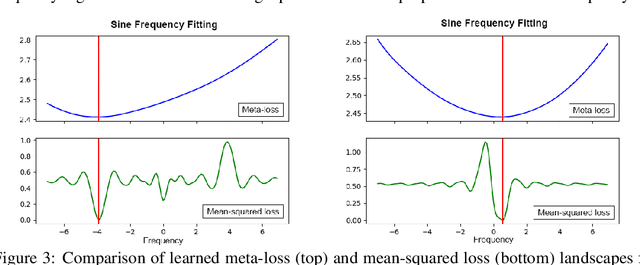

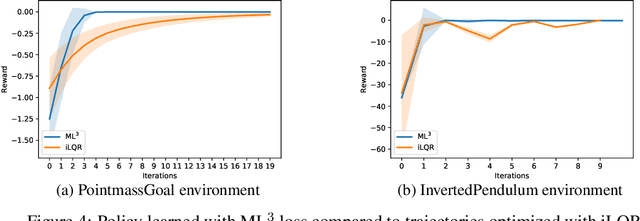

Meta-Learning via Learned Loss

Jun 12, 2019

Abstract: We present a meta-learning approach based on learning an adaptive, high-dimensional loss function that can generalize across multiple tasks and different model architectures. We develop a fully differentiable pipeline for learning a loss function targeted at maximizing the performance of an optimizee trained using this loss function. We observe that the loss landscape produced by our learned loss significantly improves upon the original task-specific loss. We evaluate our method on supervised and reinforcement learning tasks. Furthermore, we show that our pipeline is able to operate in sparse reward and self-supervised reinforcement learning scenarios.

Trajectory Optimization for Robust Humanoid Locomotion with Sample-Efficient Learning

Jun 09, 2019

Abstract: Trajectory optimization (TO) is one of the most powerful tools for generating feasible motions for humanoid robots. However, including uncertainties and stochasticity in the TO problem to generate robust motions can easily lead to an intractable problem. Furthermore, since the models used in TO always have some level of abstraction, it is hard to find a realistic set of uncertainties in the space of the abstract model. In this paper, we aim at leveraging a sample-efficient learning technique (Bayesian optimization) to robustify trajectory optimization for humanoid locomotion. The main idea is to use Bayesian optimization to find the set of cost weights that best trades off performance against robustness, using only a few realistic simulations or experiments. The results show that the proposed approach is able to generate robust motions for different sets of disturbances and uncertainties.

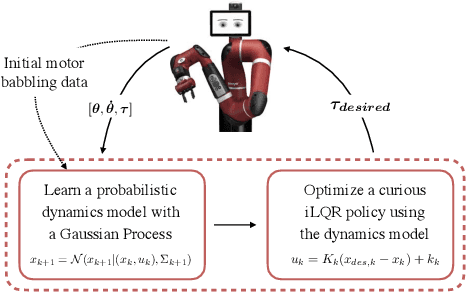

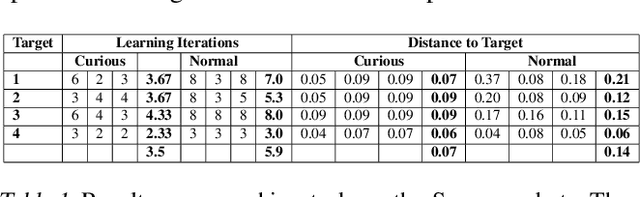

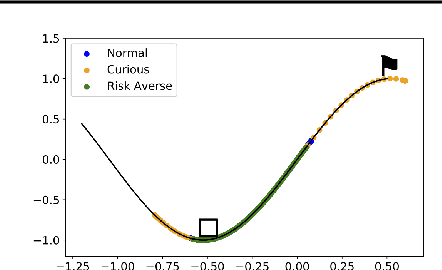

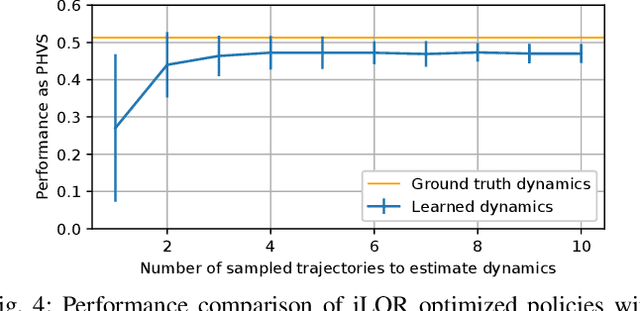

Curious iLQR: Resolving Uncertainty in Model-based RL

Apr 15, 2019

Abstract: Curiosity as a means to explore during reinforcement learning problems has recently become very popular. However, very little progress has been made in utilizing curiosity for learning control. In this work, we propose a model-based reinforcement learning (MBRL) framework that combines Bayesian modeling of the system dynamics with curious iLQR, a risk-seeking iterative LQR approach. During trajectory optimization, the curious iLQR attempts to minimize both the task-dependent cost and the uncertainty in the dynamics model. We scale this approach to reaching tasks on 7-DoF manipulators, in both simulation and real-robot experiments. Our experiments consistently show that MBRL with curious iLQR more easily overcomes bad initial dynamics models and reaches desired joint configurations more reliably and with fewer system rollouts.

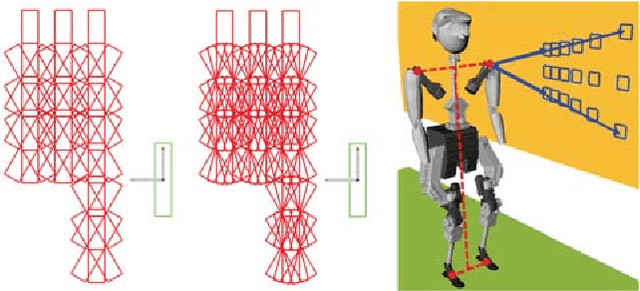

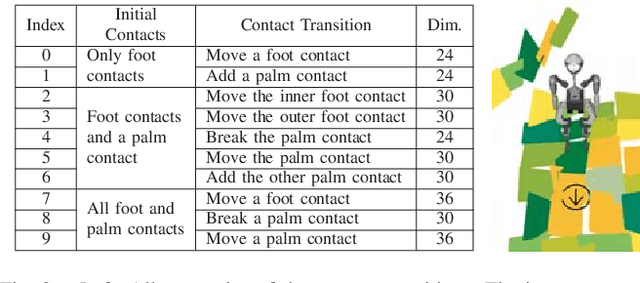

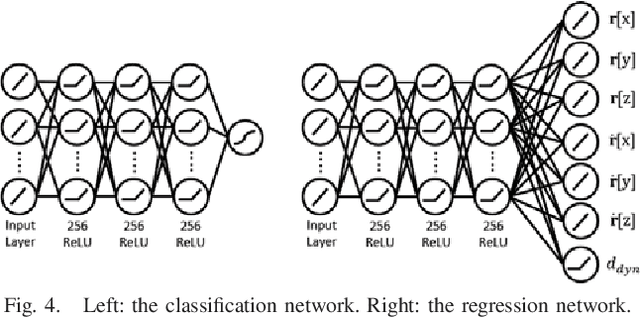

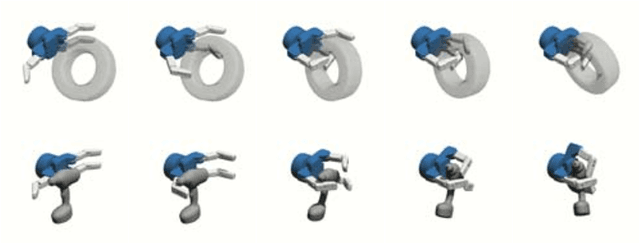

Efficient Humanoid Contact Planning using Learned Centroidal Dynamics Prediction

Mar 01, 2019

Abstract: Humanoid robots dynamically navigate an environment by interacting with it via contact wrenches exerted at intermittent contact poses. Therefore, it is important to consider dynamics when planning a contact sequence. Traditional contact planning approaches assume a quasi-static balance criterion to reduce the computational challenges of selecting a contact sequence over rough terrain. This, however, limits the applicability of the approach when dynamic motions are required, such as when walking down a steep slope or crossing a wide gap. Recent methods overcome this limitation with the help of efficient mixed-integer convex programming solvers capable of synthesizing dynamic contact sequences. Nevertheless, their exponential-time complexity limits their applicability to short time horizon contact sequences within small environments. In this paper, we go beyond current approaches by learning a prediction of the dynamic evolution of the robot centroidal momenta, which can then be used for quickly generating dynamically robust contact sequences for robots with arms and legs using a search-based contact planner. We demonstrate the efficiency and quality of the results of the proposed approach in a set of dynamically challenging scenarios.

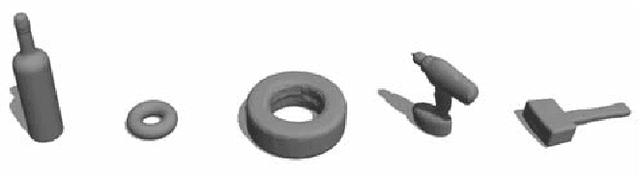

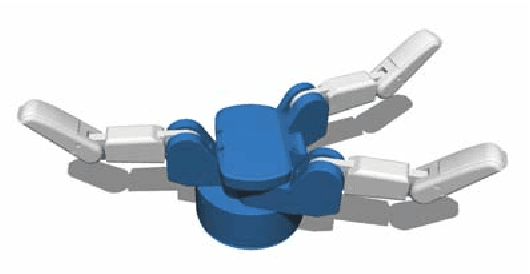

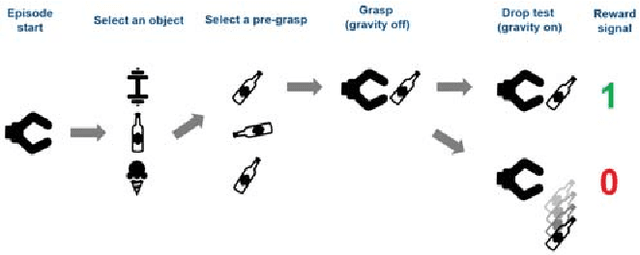

Leveraging Contact Forces for Learning to Grasp

Sep 19, 2018

Abstract: Grasping objects under uncertainty remains an open problem in robotics research. This uncertainty is often due to noisy or partial observations of the object pose or shape. To enable a robot to react appropriately to unforeseen effects, it is crucial that it continuously takes sensor feedback into account. While visual feedback is important for inferring a grasp pose and reaching for an object, contact feedback offers valuable information during manipulation and grasp acquisition. In this paper, we use model-free deep reinforcement learning to synthesize control policies that exploit contact sensing to generate robust grasping under uncertainty. We demonstrate our approach on a multi-fingered hand that exhibits more complex finger coordination than the commonly used two-fingered grippers. We conduct extensive experiments in order to assess the performance of the learned policies, with and without contact sensing. While it is possible to learn grasping policies without contact sensing, our results suggest that contact feedback allows for a significant improvement of grasping robustness under object pose uncertainty and for objects with a complex shape.

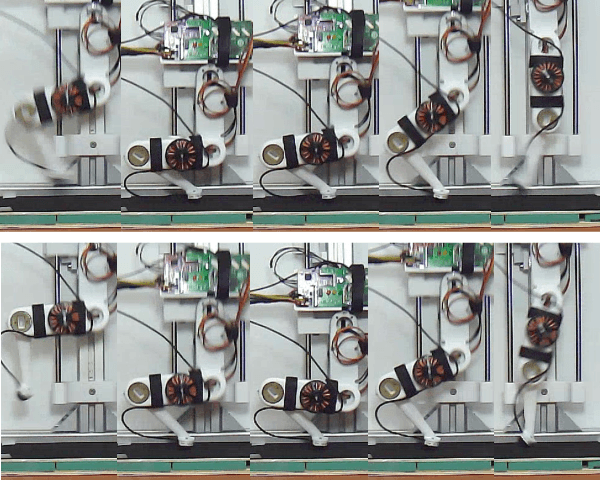

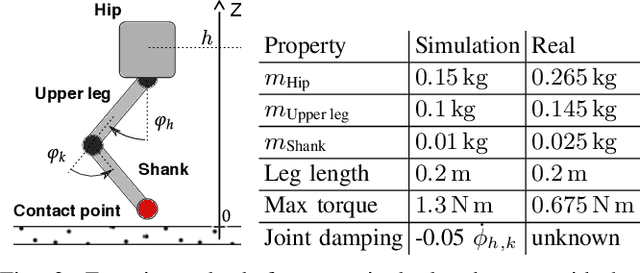

Learning a Structured Neural Network Policy for a Hopping Task

Aug 06, 2018

Abstract: In this work, we present a method for learning a reactive policy for a simple dynamic locomotion task involving hard impact and switching contacts, where we assume the contact location and contact timing to be unknown. To learn such a policy, we use optimal control to optimize a local controller for a fixed environment and contacts. We learn the contact-rich dynamics for our underactuated systems along these trajectories in a sample-efficient manner. We use the optimized policies to learn the reactive policy in the form of a neural network. Using a new neural network architecture, we are able to preserve more information from the local policy and make its output interpretable in terms of desired trajectories, feedforward commands, and gains. Extensive simulations demonstrate the robustness of the approach to changing environments, outperforming model-free policy gradient methods on the same tasks in simulation. Finally, we show that the learned policy can be robustly transferred to a real robot.

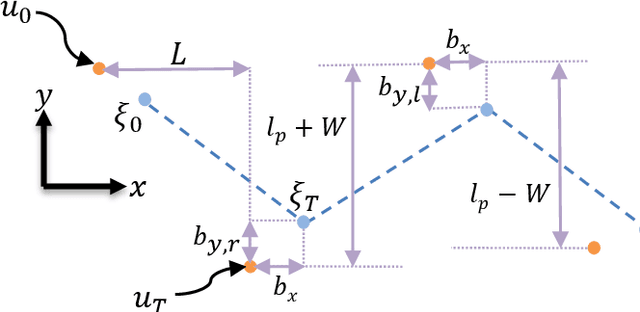

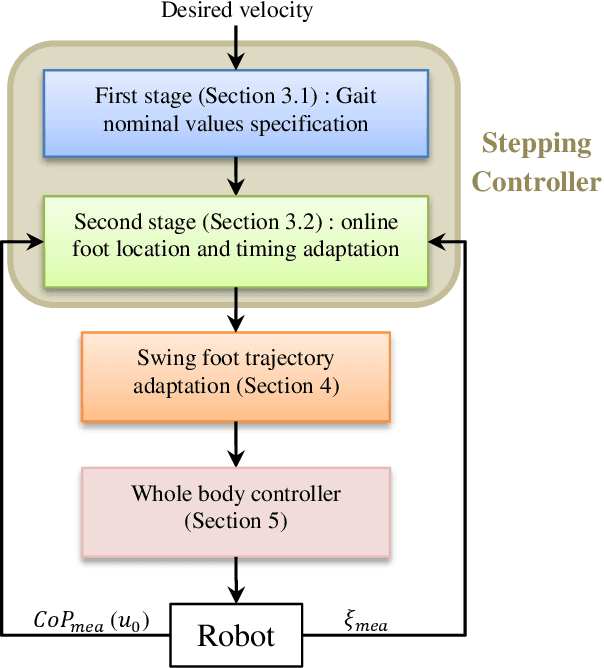

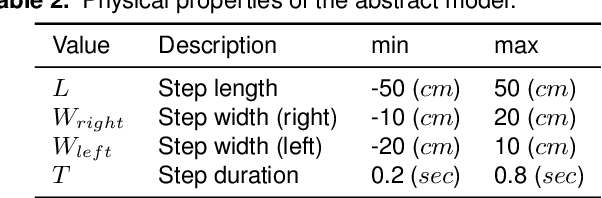

Walking Control Based on Step Timing Adaptation

Jul 23, 2018

Abstract: Step adjustment for biped robots has been shown to improve gait robustness; however, the adaptation of step timing is often neglected in control strategies because it gives rise to non-convex problems when optimized over several steps. In this paper, we argue that it is not necessary to optimize walking over several steps to guarantee stability and that it is sufficient to merely select the next step timing and location. From this insight, we propose a novel walking pattern generator with linear constraints that optimally selects step location and timing at every control cycle. The resulting controller is computationally simple, yet guarantees that any viable state will remain viable in the future. We propose a swing foot adaptation strategy and show how the approach can be used with an inverse dynamics controller without any explicit control of the center of mass or the foot center of pressure. This is particularly useful for biped robots with limited control authority on their foot center of pressure, such as robots with point feet and robots with passive ankles. Extensive simulations on a humanoid robot with passive ankles subject to external pushes and foot slippage demonstrate the capabilities of the approach in cases where the foot center of pressure cannot be controlled and emphasize the importance of step timing adaptation to stabilize walking.
