Kostas E. Bekris

An Open-Source, Reproducible Tensegrity Robot that can Navigate Among Obstacles

Nov 08, 2025

Abstract:Tensegrity robots, composed of rigid struts and elastic tendons, provide impact resistance, low mass, and adaptability to unstructured terrain. Their compliance and complex, coupled dynamics, however, present modeling and control challenges, hindering path planning and obstacle avoidance. This paper presents a complete, open-source, and reproducible system that enables navigation for a 3-bar tensegrity robot. The system comprises: (i) an inexpensive, open-source hardware design, and (ii) an integrated, open-source software stack for physics-based modeling, system identification, state estimation, path planning, and control. All hardware and software are publicly available at https://sites.google.com/view/tensegrity-navigation/. The proposed system tracks the robot's pose and executes collision-free paths to a specified goal among known obstacle locations. System robustness is demonstrated through experiments involving unmodeled environmental challenges, including a vertical drop, an incline, and granular media, culminating in an outdoor field demonstration. To validate reproducibility, experiments were conducted using robot instances at two different laboratories. This work provides the robotics community with a complete navigation system for a compliant, impact-resistant, and shape-morphing robot. This system is intended to serve as a springboard for advancing the navigation capabilities of other unconventional robotic platforms.

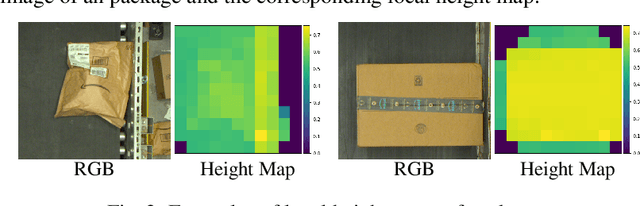

Demonstrating Multi-Suction Item Picking at Scale via Multi-Modal Learning of Pick Success

Jun 12, 2025

Abstract:This work demonstrates how autonomously learning aspects of robotic operation from sparsely-labeled, real-world data of deployed, engineered solutions at industrial scale can yield solutions with improved performance. Specifically, it focuses on multi-suction robot picking and performs a comprehensive study on the application of multi-modal visual encoders for predicting the success of candidate robotic picks. Picking diverse items from unstructured piles is an important and challenging task for robot manipulation in real-world settings, such as warehouses. Methods for picking from clutter must work for an open set of items while simultaneously meeting latency constraints to achieve high throughput. The demonstrated approach utilizes multiple input modalities, such as RGB, depth and semantic segmentation, to estimate the quality of candidate multi-suction picks. The strategy is trained from real-world item picking data, using a combination of multimodal pretraining and finetuning. The manuscript provides a comprehensive experimental evaluation performed over a large item-picking dataset, an item-picking dataset targeted to include partial occlusions, and a package-picking dataset, which focuses on containers, such as boxes and envelopes, instead of unpackaged items. The evaluation measures performance for different item configurations, pick scenes, and object types. Ablations help to understand the effects of in-domain pretraining, the impact of different modalities and the importance of finetuning. These ablations reveal both the importance of training over multiple modalities and the ability of models to learn the relationship between modalities during pretraining, so that only a subset of them can be used as input during finetuning and inference.

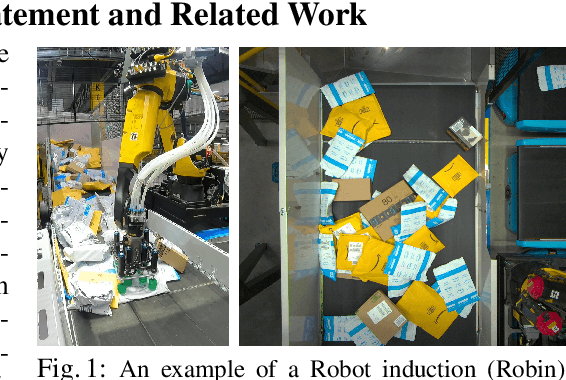

Learning to Optimize Package Picking for Large-Scale, Real-World Robot Induction

Jun 11, 2025

Abstract:Warehouse automation plays a pivotal role in enhancing operational efficiency, minimizing costs, and improving resilience to workforce variability. While prior research has demonstrated the potential of machine learning (ML) models to increase picking success rates in large-scale robotic fleets by prioritizing high-probability picks and packages, these efforts primarily focused on predicting success probabilities for picks sampled using heuristic methods. Limited attention has been given, however, to leveraging data-driven approaches to directly optimize sampled picks for better performance at scale. In this study, we propose an ML-based framework that predicts transform adjustments and improves the selection of suction cups for multi-suction end effectors for sampled picks to enhance their success probabilities. The framework was integrated and evaluated in test workcells that resemble the operations of Amazon Robotics' Robot Induction (Robin) fleet, which is used for package manipulation. Evaluated on over 2 million picks, the proposed method achieves a 20% reduction in pick failure rates compared to a heuristic-based pick sampling baseline, demonstrating its effectiveness in large-scale warehouse automation scenarios.

PROBE: Proprioceptive Obstacle Detection and Estimation while Navigating in Clutter

May 17, 2025

Abstract:In critical applications, including search-and-rescue in degraded environments, blockages can be prevalent and prevent the effective deployment of certain sensing modalities, particularly vision, due to occlusion and the constrained range of view of onboard camera sensors. To enable robots to tackle these challenges, we propose a new approach, Proprioceptive Obstacle Detection and Estimation while navigating in clutter (PROBE), which instead relies only on the robot's proprioception to infer the presence or absence of occluded rectangular obstacles while predicting their dimensions and poses in SE(2). The proposed approach is a Transformer neural network that receives as input a history of applied torques and sensed whole-body movements of the robot and returns a parameterized representation of the obstacles in the environment. The effectiveness of PROBE is evaluated on simulated environments in Isaac Gym and with a real Unitree Go1 quadruped robot.

Integrating Model-based Control and RL for Sim2Real Transfer of Tight Insertion Policies

May 17, 2025

Abstract:Object insertion under tight tolerances ($< 1$ mm) is an important but challenging assembly task as even small errors can result in undesirable contacts. Recent efforts focused on Reinforcement Learning (RL), which often depends on careful definition of dense reward functions. This work proposes an effective strategy for such tasks that integrates traditional model-based control with RL to achieve improved insertion accuracy. The policy is trained exclusively in simulation and is zero-shot transferred to the real system. It employs a potential field-based controller to acquire a model-based policy for inserting a plug into a socket given full observability in simulation. This policy is then integrated with residual RL, which is trained in simulation given only a sparse, goal-reaching reward. A curriculum scheme over observation noise and action magnitude is used for training the residual RL policy. Both policy components use as input the SE(3) poses of both the plug and the socket and return the plug's SE(3) pose transform, which is executed by a robotic arm using a controller. The integrated policy is deployed on the real system without further training or fine-tuning, given a visual SE(3) object tracker. The proposed solution and alternatives are evaluated across a variety of objects and conditions in simulation and reality. The proposed approach outperforms recent RL-based methods in this domain and prior efforts with hybrid policies. Ablations highlight the impact of each component of the approach.
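
As a rough illustration of how a potential-field base action and a residual correction could be combined, the following minimal sketch may help. It makes simplifying assumptions: only translation is handled (the abstract describes policies over full SE(3) poses), the residual is passed in as a plain vector rather than produced by a trained RL policy, and the names `potential_field_action`, `combined_action`, `gain`, `max_step`, and `residual_scale` are hypothetical and not taken from the paper.

```python
import math


def potential_field_action(plug_xyz, socket_xyz, gain=1.0, max_step=0.005):
    """Step along the negative gradient of the attractive potential
    0.5 * gain * ||plug - socket||^2, clipped to max_step (metres)."""
    step = [gain * (s - p) for p, s in zip(plug_xyz, socket_xyz)]
    norm = math.sqrt(sum(c * c for c in step))
    if norm > max_step:
        # Keep the direction, limit the magnitude.
        step = [c * max_step / norm for c in step]
    return step


def combined_action(base, residual, residual_scale=0.2):
    """Add a scaled residual correction to the model-based action.
    residual_scale is a hypothetical knob standing in for the
    action-magnitude curriculum mentioned in the abstract."""
    return [b + residual_scale * r for b, r in zip(base, residual)]


if __name__ == "__main__":
    base = potential_field_action((0.0, 0.0, 0.1), (0.0, 0.0, 0.0))
    print(combined_action(base, (0.001, 0.0, 0.0)))
```

In this sketch the model-based term always pulls the plug toward the socket, so the residual only has to learn small corrections, which is one plausible reason a sparse reward can suffice.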

Kinodynamic Trajectory Following with STELA: Simultaneous Trajectory Estimation & Local Adaptation

Apr 28, 2025

Abstract:State estimation and control are often addressed separately, leading to unsafe execution due to sensing noise, execution errors, and discrepancies between the planning model and reality. Simultaneous control and trajectory estimation using probabilistic graphical models has been proposed as a unified solution to these challenges. Previous work, however, relies heavily on appropriate Gaussian priors and is limited to holonomic robots with linear time-varying models. The current research extends graphical optimization methods to vehicles with arbitrary dynamical models via Simultaneous Trajectory Estimation and Local Adaptation (STELA). The overall approach initializes feasible trajectories using a kinodynamic, sampling-based motion planner. Then, it simultaneously: (i) estimates the past trajectory based on noisy observations, and (ii) adapts the controls to be executed to minimize deviations from the planned, feasible trajectory, while avoiding collisions. The proposed factor graph representation of trajectories in STELA can be applied to any dynamical system given access to first or second-order state update equations, and introduces the duration of execution between two states in the trajectory discretization as an optimization variable. These features provide both generalization and flexibility in trajectory following. To target computational efficiency, the proposed strategy additionally performs incremental updates of the factor graph using the iSAM algorithm and introduces a time-window mechanism. This mechanism allows the factor graph to be dynamically updated to operate over a limited history and forward horizon of the planned trajectory. This enables online updates of controls at a minimum of 10Hz. Experiments demonstrate that STELA achieves at least comparable performance to previous frameworks on idealized vehicles with linear dynamics.[...]
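
The time-window idea can be illustrated with a small sketch that selects which discretized trajectory states stay active around the current one. This is only an assumption about how such a window might be indexed: it does not build a factor graph or call iSAM, and the function name `window_indices` and its parameters `history` and `horizon` are hypothetical.

```python
def window_indices(num_states, current_idx, history, horizon):
    """Return (start, end), with end exclusive, for the trajectory states
    kept in the active window: up to `history` past states, the current
    state, and up to `horizon` future states, clamped to the trajectory."""
    if not 0 <= current_idx < num_states:
        raise ValueError("current_idx must index a state of the trajectory")
    start = max(0, current_idx - history)
    end = min(num_states, current_idx + horizon + 1)
    return start, end


if __name__ == "__main__":
    # A 100-state plan, currently at state 10, keeping 5 past and 20 future states.
    print(window_indices(100, 10, history=5, horizon=20))
```

Bounding the window keeps the size of each optimization step roughly constant regardless of the total plan length, which is what makes a fixed update rate plausible.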

The State of Robot Motion Generation

Oct 16, 2024

Abstract:This paper reviews the large spectrum of methods for generating robot motion proposed over the 50 years of robotics research culminating in recent developments. It crosses the boundaries of methodologies, typically not surveyed together, from those that operate over explicit models to those that learn implicit ones. The paper discusses the current state-of-the-art as well as properties of varying methodologies, highlighting opportunities for integration.

${\tt KRAFT}$: Sampling-Based Kinodynamic Replanning and Feedback Control over Approximate, Identified Models of Vehicular Systems

Sep 17, 2024

Abstract:This paper aims to increase the safety and reliability of executing trajectories planned for robots with non-trivial dynamics given a light-weight, approximate dynamics model. Scenarios include mobile robots navigating through workspaces with imperfectly modeled surfaces and unknown friction. The proposed approach, Kinodynamic Replanning over Approximate Models with Feedback Tracking (KRAFT), integrates: (i) replanning via an asymptotically optimal sampling-based kinodynamic tree planner, with (ii) trajectory following via feedback control, and (iii) a safety mechanism to reduce collision due to second-order dynamics. The planning and control components use a rough dynamics model expressed analytically via differential equations, which is tuned via system identification (SysID) in a training environment but not the deployed one. This allows the process to be fast and achieve long-horizon reasoning during each replanning cycle. At the same time, the model still includes gaps with reality, even after SysID, in new environments. Experiments demonstrate the limitations of kinematic path planning and path tracking approaches, highlighting the importance of: (a) closing the feedback-loop also at the planning level; and (b) long-horizon reasoning, for safe and efficient trajectory execution given inaccurate models.

${\tt MORALS}$: Analysis of High-Dimensional Robot Controllers via Topological Tools in a Latent Space

Oct 05, 2023

Abstract:Estimating the region of attraction (${\tt RoA}$) for a robotic system's controller is essential for safe application and controller composition. Many existing methods require access to a closed-form expression, which limits applicability to data-driven controllers. Methods that operate only over trajectory rollouts tend to be data-hungry. In prior work, we have demonstrated that topological tools based on Morse Graphs offer data-efficient ${\tt RoA}$ estimation without needing an analytical model. They struggle, however, with high-dimensional systems as they operate over a discretization of the state space. This paper presents ${\it Mo}$rse Graph-aided discovery of ${\it R}$egions of ${\it A}$ttraction in a learned ${\it L}$atent ${\it S}$pace (${\tt MORALS}$). The approach combines autoencoding neural networks with Morse Graphs. ${\tt MORALS}$ shows promising predictive capabilities in estimating attractors and their ${\tt RoA}$s for data-driven controllers operating over high-dimensional systems, including a 67-dim humanoid robot and a 96-dim 3-fingered manipulator. It first projects the dynamics of the controlled system into a learned latent space. Then, it constructs a reduced form of Morse Graphs representing the bistability of the underlying dynamics, i.e., detecting when the controller results in a desired versus an undesired behavior. The evaluation on high-dimensional robotic datasets indicates the data efficiency of the approach in ${\tt RoA}$ estimation.

Roadmaps with Gaps over Controllers: Achieving Efficiency in Planning under Dynamics

Oct 05, 2023

Abstract:This paper aims to improve the computational efficiency of motion planning for mobile robots with non-trivial dynamics by taking advantage of learned controllers. It adopts a decoupled strategy, where a system-specific controller is first trained offline in an empty environment to deal with the system's dynamics. For an environment, the proposed approach constructs offline a data structure, a "Roadmap with Gaps," to approximately learn how to solve planning queries in this environment using the learned controller. Its nodes correspond to local regions and edges correspond to applications of the learned control policy that approximately connect these regions. Gaps arise due to the controller not perfectly connecting pairs of individual states along edges. Online, given a query, a tree sampling-based motion planner uses the roadmap so that the tree's expansion is informed towards the goal region. The tree expansion selects local subgoals given a wavefront on the roadmap that guides towards the goal. When the controller cannot reach a subgoal region, the planner resorts to random exploration to maintain probabilistic completeness and asymptotic optimality. The experimental evaluation shows that the approach significantly improves the computational efficiency of motion planning on various benchmarks, including physics-based vehicular models on uneven and varying friction terrains as well as a quadrotor under air pressure effects.
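
The wavefront-guided subgoal selection can be sketched as a breadth-first search from the goal node over the roadmap. This is a minimal illustration, not the paper's implementation: it assumes an undirected roadmap stored as an adjacency dictionary with unit edge costs, and the names `wavefront` and `next_subgoal` are hypothetical. Returning `None` is an assumed convention signalling that the caller should fall back to random exploration.

```python
from collections import deque


def wavefront(roadmap, goal):
    """Breadth-first search from the goal: maps each reachable roadmap
    node to its hop count to the goal (unit edge costs assumed)."""
    dist = {goal: 0}
    queue = deque([goal])
    while queue:
        node = queue.popleft()
        for nbr in roadmap[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    return dist


def next_subgoal(roadmap, dist, current):
    """Pick the neighbour of `current` with the smallest wavefront value,
    or None if no neighbour is connected to the goal."""
    candidates = [n for n in roadmap[current] if n in dist]
    if not candidates:
        return None
    return min(candidates, key=lambda n: dist[n])


if __name__ == "__main__":
    roadmap = {
        "A": ["B", "C"],
        "B": ["A", "D"],
        "C": ["A", "D"],
        "D": ["B", "C", "G"],
        "G": ["D"],
    }
    dist = wavefront(roadmap, "G")
    print(next_subgoal(roadmap, dist, "A"))
```

If the roadmap edges were directed, as controller applications may well be, the search from the goal would need to follow reversed edges instead.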
