Khuyagbaatar Batsuren

Evaluating Subword Tokenization: Alien Subword Composition and OOV Generalization Challenge

Apr 20, 2024

Abstract: The popular subword tokenizers of current language models, such as Byte-Pair Encoding (BPE), are known not to respect morpheme boundaries, which affects the downstream performance of the models. While many improved tokenization algorithms have been proposed, their evaluation and cross-comparison is still an open problem. As a solution, we propose a combined intrinsic-extrinsic evaluation framework for subword tokenization. Intrinsic evaluation is based on our new UniMorph Labeller tool that classifies subword tokenization as either morphological or alien. Extrinsic evaluation, in turn, is performed via the Out-of-Vocabulary Generalization Challenge 1.0 benchmark, which consists of three newly specified downstream text classification tasks. Our empirical findings show that the accuracy of UniMorph Labeller is 98%, and that, in all language models studied (including ALBERT, BERT, RoBERTa, and DeBERTa), alien tokenization leads to poorer generalizations compared to morphological tokenization for semantic compositionality of word meanings.
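The morphological/alien distinction in the abstract above can be illustrated with a minimal sketch. It is not the UniMorph Labeller itself: the function names `boundaries` and `label_composition` are hypothetical, and the rule used here (a tokenization is "morphological" when every subword boundary coincides with a morpheme boundary, "alien" otherwise) is an assumption made for illustration.

```python
def boundaries(segments):
    """Return the internal character offsets at which one segment ends."""
    offsets, pos = set(), 0
    for seg in segments[:-1]:  # the end of the last segment is the word end
        pos += len(seg)
        offsets.add(pos)
    return offsets


def label_composition(subwords, morphemes):
    """Label a subword tokenization against a surface morpheme segmentation.

    Assumed rule (illustrative only): 'morphological' if every subword
    boundary is also a morpheme boundary, otherwise 'alien'. A word kept
    as a single token has no internal boundaries and is therefore
    labelled 'morphological' under this rule.
    """
    if "".join(subwords) != "".join(morphemes):
        raise ValueError("subwords and morphemes must spell the same word")
    if boundaries(subwords) <= boundaries(morphemes):
        return "morphological"
    return "alien"


# Hypothetical tokenizations of "unbelievable" (morphemes: un + believ + able)
gold = ["un", "believ", "able"]
print(label_composition(["un", "believ", "able"], gold))
print(label_composition(["unb", "eliev", "able"], gold))
```

The subset check tolerates tokenizers that merge adjacent morphemes into one subword; a stricter variant could require the two boundary sets to be equal.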

State-of-the-art generalisation research in NLP: a taxonomy and review

Oct 10, 2022

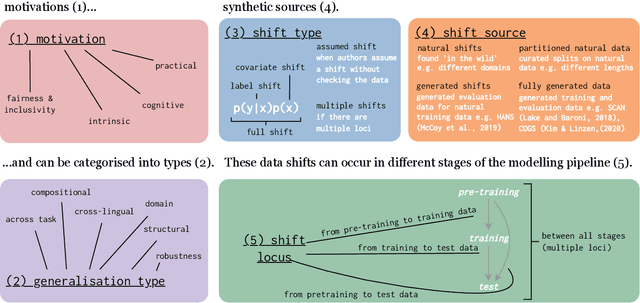

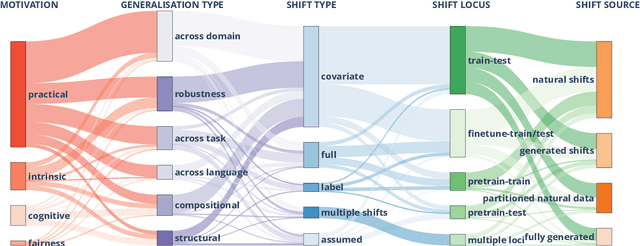

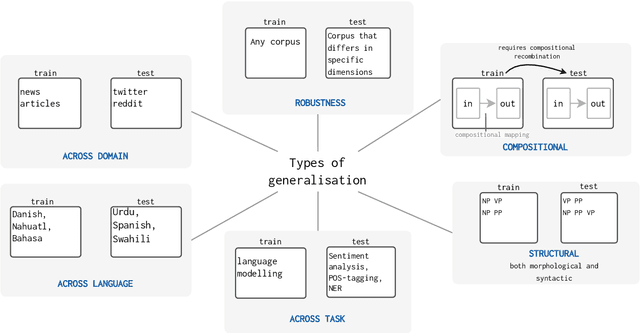

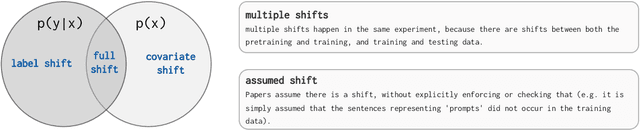

Abstract: The ability to generalise well is one of the primary desiderata of natural language processing (NLP). Yet, what 'good generalisation' entails and how it should be evaluated is not well understood, nor are there any common standards to evaluate it. In this paper, we aim to lay the groundwork to improve both of these issues. We present a taxonomy for characterising and understanding generalisation research in NLP, we use that taxonomy to present a comprehensive map of published generalisation studies, and we make recommendations for which areas might deserve attention in the future. Our taxonomy is based on an extensive literature review of generalisation research, and contains five axes along which studies can differ: their main motivation, the type of generalisation they aim to solve, the type of data shift they consider, the source by which this data shift is obtained, and the locus of the shift within the modelling pipeline. We use our taxonomy to classify over 400 previous papers that test generalisation, for a total of more than 600 individual experiments. Considering the results of this review, we present an in-depth analysis of the current state of generalisation research in NLP, and make recommendations for the future. Along with this paper, we release a webpage where the results of our review can be dynamically explored, and which we intend to update as new NLP generalisation studies are published. With this work, we aim to make steps towards making state-of-the-art generalisation testing the new status quo in NLP.

Text Characterization Toolkit

Oct 04, 2022

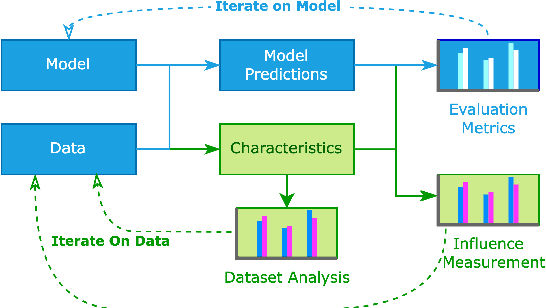

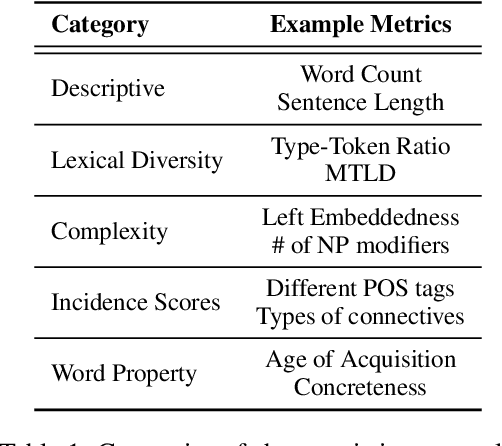

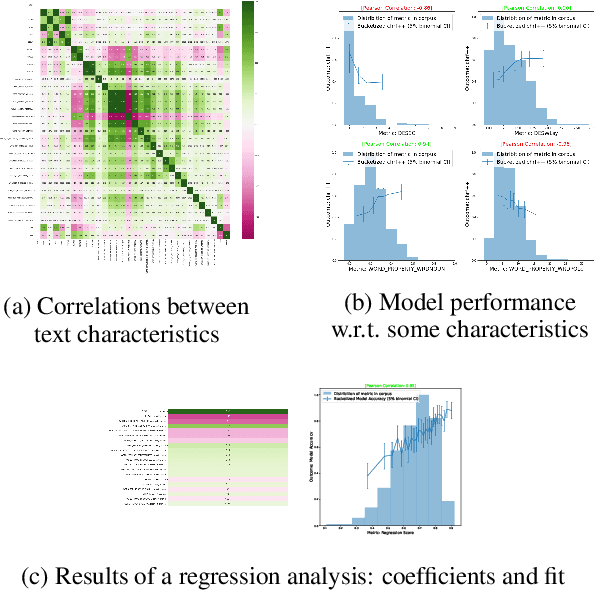

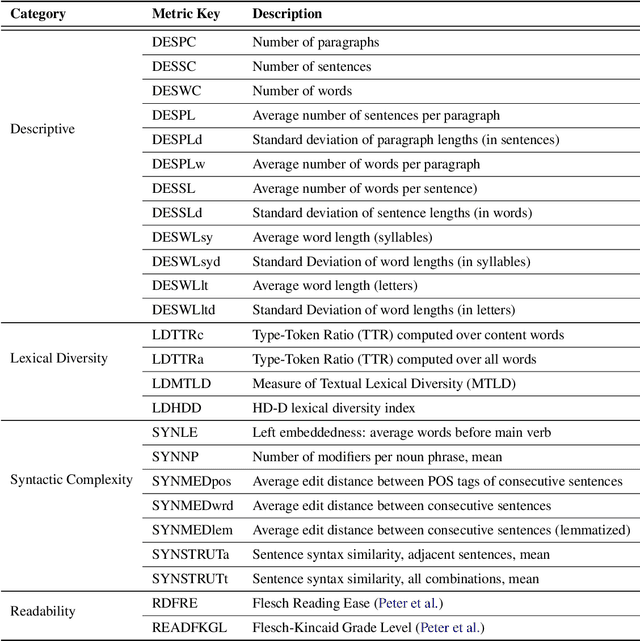

Abstract: In NLP, models are usually evaluated by reporting single-number performance scores on a number of readily available benchmarks, without much deeper analysis. Here, we argue that - especially given the well-known fact that benchmarks often contain biases, artefacts, and spurious correlations - deeper results analysis should become the de-facto standard when presenting new models or benchmarks. We present a tool that researchers can use to study properties of the dataset and the influence of those properties on their models' behaviour. Our Text Characterization Toolkit includes both an easy-to-use annotation tool, as well as off-the-shelf scripts that can be used for specific analyses. We also present use-cases from three different domains: we use the tool to predict what are difficult examples for given well-known trained models and identify (potentially harmful) biases and heuristics that are present in a dataset.

The SIGMORPHON 2022 Shared Task on Morpheme Segmentation

Jun 15, 2022

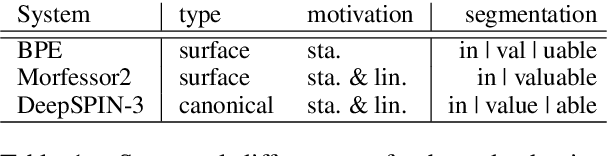

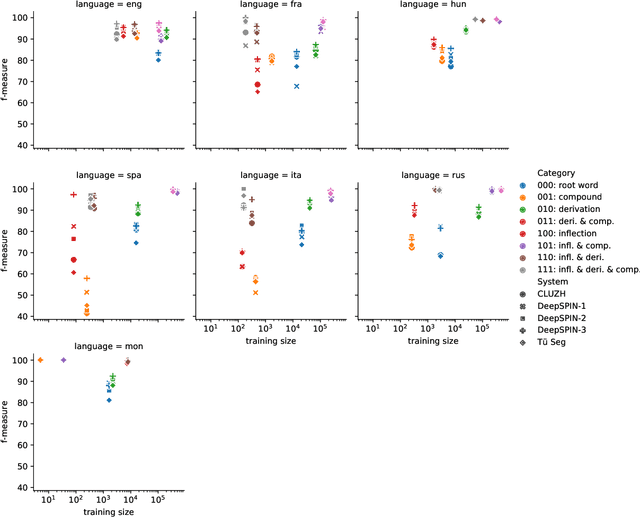

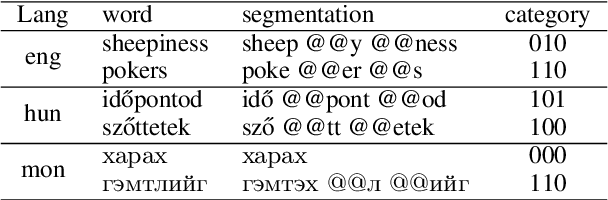

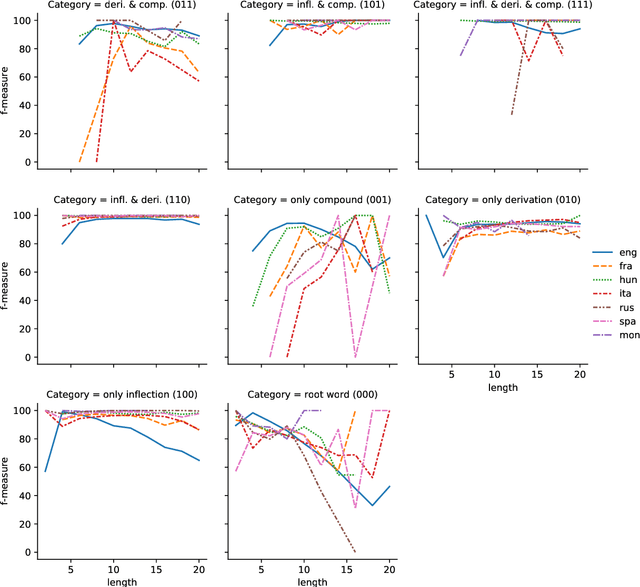

Abstract: The SIGMORPHON 2022 shared task on morpheme segmentation challenged systems to decompose a word into a sequence of morphemes and covered most types of morphology: compounds, derivations, and inflections. Subtask 1, word-level morpheme segmentation, covered 5 million words in 9 languages (Czech, English, Spanish, Hungarian, French, Italian, Russian, Latin, Mongolian) and received 13 system submissions from 7 teams; the best system averaged 97.29% F1 score across all languages, ranging from English (93.84%) to Latin (99.38%). Subtask 2, sentence-level morpheme segmentation, covered 18,735 sentences in 3 languages (Czech, English, Mongolian), received 10 system submissions from 3 teams, and the best systems outperformed all three state-of-the-art subword tokenization methods (BPE, ULM, Morfessor2) by 30.71% absolute. To facilitate error analysis and support any type of future studies, we released all system predictions, the evaluation script, and all gold standard datasets.

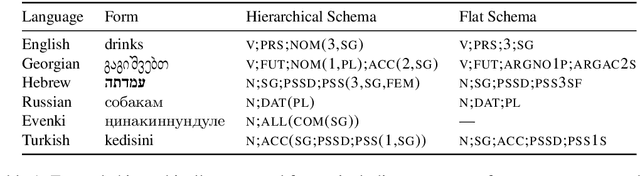

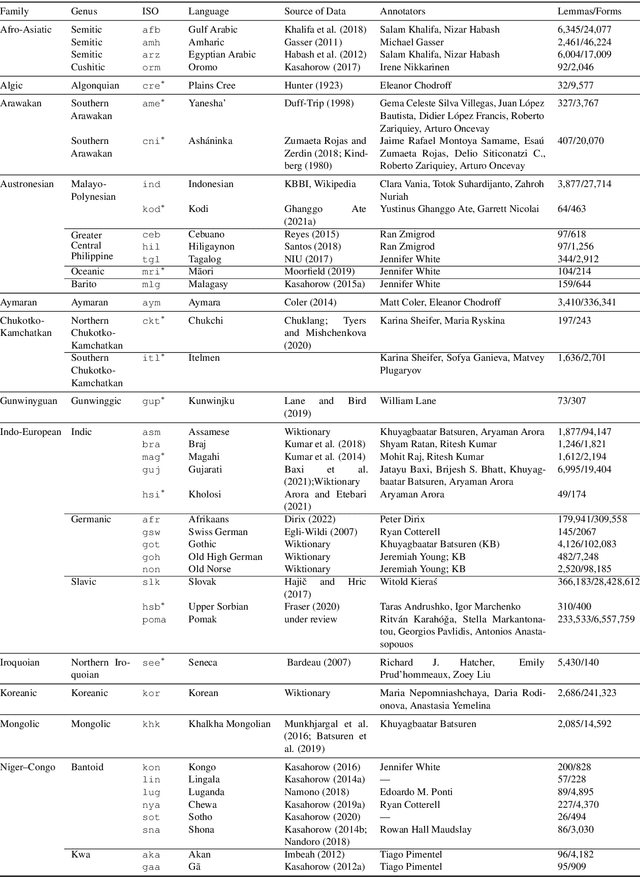

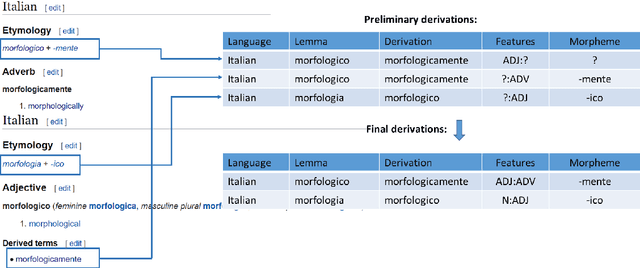

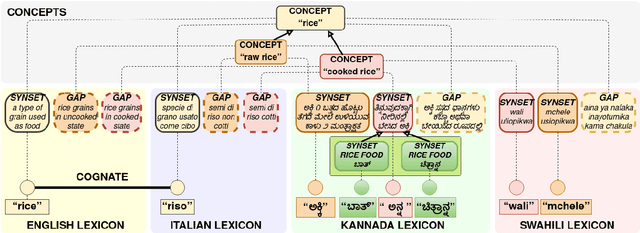

UniMorph 4.0: Universal Morphology

May 10, 2022

Abstract: The Universal Morphology (UniMorph) project is a collaborative effort providing broad-coverage instantiated normalized morphological inflection tables for hundreds of diverse world languages. The project comprises two major thrusts: a language-independent feature schema for rich morphological annotation and a type-level resource of annotated data in diverse languages realizing that schema. This paper presents the expansions and improvements made on several fronts over the last couple of years (since McCarthy et al. (2020)). Collaborative efforts by numerous linguists have added 67 new languages, including 30 endangered languages. We have implemented several improvements to the extraction pipeline to tackle some issues, e.g. missing gender and macron information. We have also amended the schema to use a hierarchical structure that is needed for morphological phenomena like multiple-argument agreement and case stacking, while adding some missing morphological features to make the schema more inclusive. In light of the last UniMorph release, we also augmented the database with morpheme segmentation for 16 languages. Lastly, this new release makes a push towards inclusion of derivational morphology in UniMorph by enriching the data and annotation schema with instances representing derivational processes from MorphyNet.

Using Linguistic Typology to Enrich Multilingual Lexicons: the Case of Lexical Gaps in Kinship

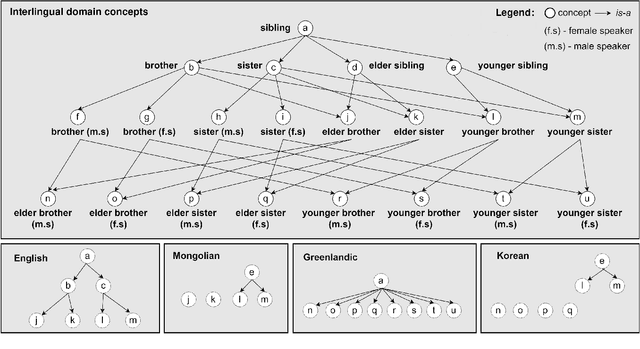

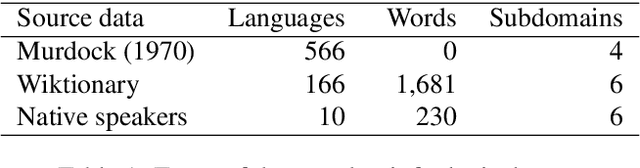

Apr 11, 2022

Abstract: This paper describes a method to enrich lexical resources with content relating to linguistic diversity, based on knowledge from the field of lexical typology. We capture the phenomenon of diversity through the notions of lexical gap and language-specific word and use a systematic method to infer gaps semi-automatically on a large scale. As a first result obtained for the domain of kinship terminology, known to be very diverse throughout the world, we publish a lexico-semantic resource consisting of 198 domain concepts, 1,911 words, and 37,370 gaps covering 699 languages. We see potential in the use of resources such as ours for the improvement of a variety of cross-lingual NLP tasks, which we demonstrate through a downstream application for the evaluation of machine translation systems.

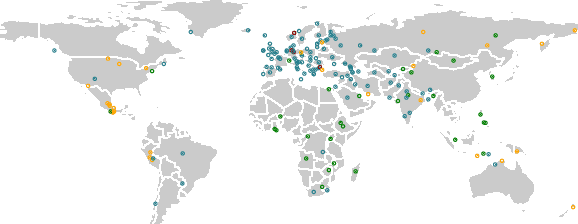

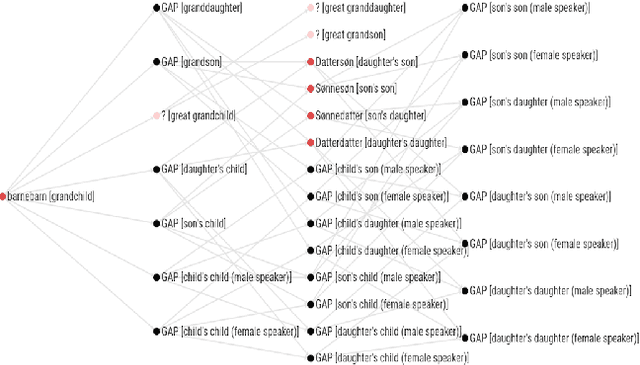

Language Diversity: Visible to Humans, Exploitable by Machines

Mar 09, 2022

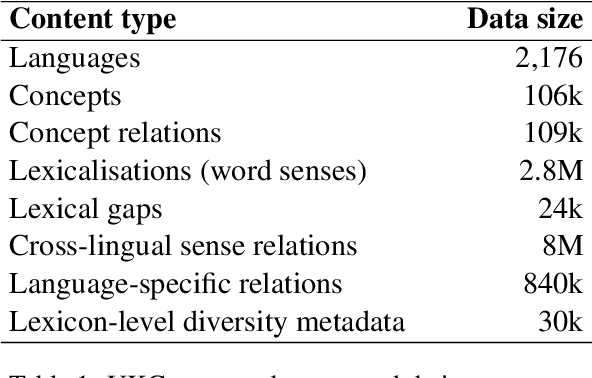

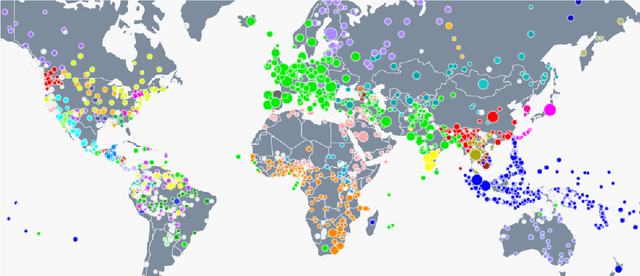

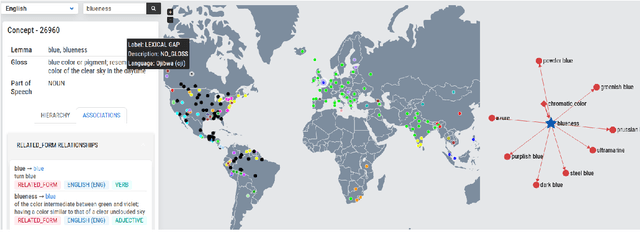

Abstract: The Universal Knowledge Core (UKC) is a large multilingual lexical database with a focus on language diversity and covering over a thousand languages. The aim of the database, as well as its tools and data catalogue, is to make the somewhat abstract notion of diversity visually understandable for humans and formally exploitable by machines. The UKC website lets users explore millions of individual words and their meanings, but also phenomena of cross-lingual convergence and divergence, such as shared interlingual meanings, lexicon similarities, cognate clusters, or lexical gaps. The UKC LiveLanguage Catalogue, in turn, provides access to the underlying lexical data in a computer-processable form, ready to be reused in cross-lingual applications.

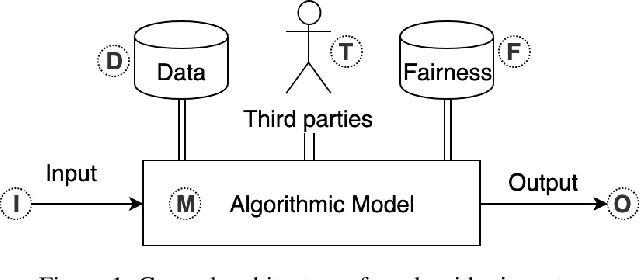

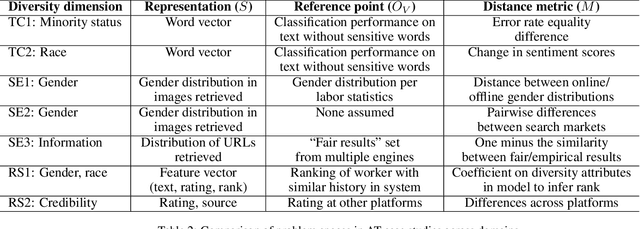

Towards Algorithmic Transparency: A Diversity Perspective

Apr 12, 2021

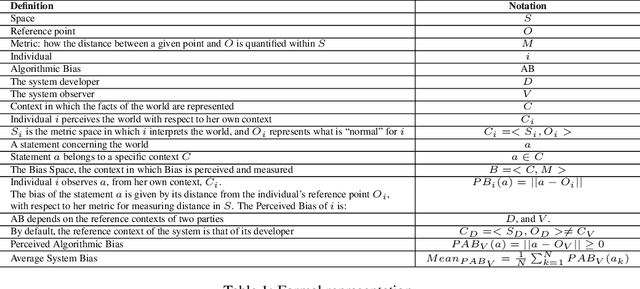

Abstract: As the role of algorithmic systems and processes increases in society, so does the risk of bias, which can result in discrimination against individuals and social groups. Research on algorithmic bias has exploded in recent years, highlighting both the problems of bias, and the potential solutions, in terms of algorithmic transparency (AT). Transparency is important for facilitating fairness management as well as explainability in algorithms; however, the concept of diversity, and its relationship to bias and transparency, has been largely left out of the discussion. We reflect on the relationship between diversity and bias, arguing that diversity drives the need for transparency. Using a perspective-taking lens, which takes diversity as a given, we propose a conceptual framework to characterize the problem and solution spaces of AT, to aid its application in algorithmic systems. Example cases from three research domains are described using our framework.
