Jinchuan Zhang

Extracting Events Like Code: A Multi-Agent Programming Framework for Zero-Shot Event Extraction

Nov 17, 2025

Abstract: Zero-shot event extraction (ZSEE) remains a significant challenge for large language models (LLMs) due to the need for complex reasoning and domain-specific understanding. Direct prompting often yields incomplete or structurally invalid outputs, such as misclassified triggers, missing arguments, and schema violations. To address these limitations, we present Agent-Event-Coder (AEC), a novel multi-agent framework that treats event extraction like software engineering: as a structured, iterative code-generation process. AEC decomposes ZSEE into specialized subtasks (retrieval, planning, coding, and verification), each handled by a dedicated LLM agent. Event schemas are represented as executable class definitions, enabling deterministic validation and precise feedback via a verification agent. This programming-inspired approach allows for systematic disambiguation and schema enforcement through iterative refinement. By leveraging collaborative agent workflows, AEC enables LLMs to produce precise, complete, and schema-consistent extractions in zero-shot settings. Experiments across five diverse domains and six LLMs demonstrate that AEC consistently outperforms prior zero-shot baselines, showcasing the power of treating event extraction like code generation. The code and data are released at https://github.com/UESTC-GQJ/Agent-Event-Coder.
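
The idea of representing an event schema as an executable class, so that an extraction can be checked deterministically, can be illustrated with a minimal Python sketch. This is not taken from the AEC repository: the `Attack` class, its role names, and the `verify` and `build` helpers are hypothetical, and the checks shown (unknown roles, spans missing from the sentence) are only assumed examples of what a verification step might test.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class Attack:
    """Hypothetical event schema; the class and role names are illustrative only."""
    trigger: str
    attacker: List[str] = field(default_factory=list)
    target: List[str] = field(default_factory=list)


def verify(event, sentence):
    """Return feedback messages; an empty list means every check passed."""
    errors = []
    if event.trigger not in sentence:
        errors.append(f"trigger {event.trigger!r} does not appear in the sentence")
    for f in fields(event):
        if f.name == "trigger":
            continue
        # Every argument span must be copied verbatim from the sentence.
        for span in getattr(event, f.name):
            if span not in sentence:
                errors.append(f"{f.name} argument {span!r} does not appear in the sentence")
    return errors


def build(schema, sentence, **kwargs):
    """Instantiate a schema class, turning constructor errors into feedback."""
    try:
        event = schema(**kwargs)
    except TypeError as exc:  # e.g. a role the schema does not define
        return None, [f"schema violation: {exc}"]
    return event, verify(event, sentence)
```

In a setup like this, the returned messages could be passed back to the generating agent as feedback for the next refinement round; how AEC actually formats and routes its feedback is not specified in the abstract.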

AgentAlign: Navigating Safety Alignment in the Shift from Informative to Agentic Large Language Models

May 29, 2025

Abstract: The acquisition of agentic capabilities has transformed LLMs from "knowledge providers" to "action executors", a trend that, while expanding LLMs' capability boundaries, significantly increases their susceptibility to malicious use. Previous work has shown that current LLM-based agents execute numerous malicious tasks even without being attacked, indicating a deficiency in agentic use safety alignment during the post-training phase. To address this gap, we propose AgentAlign, a novel framework that leverages abstract behavior chains as a medium for safety alignment data synthesis. By instantiating these behavior chains in simulated environments with diverse tool instances, our framework enables the generation of highly authentic and executable instructions while capturing complex multi-step dynamics. The framework further ensures model utility by proportionally synthesizing benign instructions through non-malicious interpretations of behavior chains, precisely calibrating the boundary between helpfulness and harmlessness. Evaluation results on AgentHarm demonstrate that fine-tuning three families of open-source models using our method substantially improves their safety (35.8% to 79.5% improvement) while minimally impacting or even positively enhancing their helpfulness, outperforming various prompting methods. The dataset and code have both been open-sourced.

AdvReal: Adversarial Patch Generation Framework with Application to Adversarial Safety Evaluation of Object Detection Systems

May 22, 2025

Abstract: Autonomous vehicles are typical complex intelligent systems with artificial intelligence at their core. However, perception methods based on deep learning are extremely vulnerable to adversarial samples, which can result in safety accidents. Generating effective adversarial examples in the physical world and using them to evaluate object detection systems remains a major challenge. In this study, we propose a unified joint adversarial training framework for both 2D and 3D samples to address the challenges of intra-class diversity and environmental variations in real-world scenarios. Building upon this framework, we introduce an adversarial sample reality enhancement approach that incorporates non-rigid surface modeling and a realistic 3D matching mechanism. We compare with 5 advanced adversarial patches and evaluate their attack performance on 8 object detectors, including single-stage, two-stage, and transformer-based models. Extensive experimental results in digital and physical environments demonstrate that the adversarial textures generated by our method can effectively mislead the target detection model. Moreover, the proposed method demonstrates excellent robustness and transferability under multi-angle attacks, varying lighting conditions, and different distances in the physical world. The demo video and code can be obtained at https://github.com/Huangyh98/AdvReal.git.

Bridging Generative and Discriminative Learning: Few-Shot Relation Extraction via Two-Stage Knowledge-Guided Pre-training

May 18, 2025

Abstract: Few-Shot Relation Extraction (FSRE) remains a challenging task due to the scarcity of annotated data and the limited generalization capabilities of existing models. Although large language models (LLMs) have demonstrated potential in FSRE through in-context learning (ICL), their general-purpose training objectives often result in suboptimal performance for task-specific relation extraction. To overcome these challenges, we propose TKRE (Two-Stage Knowledge-Guided Pre-training for Relation Extraction), a novel framework that synergistically integrates LLMs with traditional relation extraction models, bridging generative and discriminative learning paradigms. TKRE introduces two key innovations: (1) leveraging LLMs to generate explanation-driven knowledge and schema-constrained synthetic data, addressing the issue of data scarcity; and (2) a two-stage pre-training strategy combining Masked Span Language Modeling (MSLM) and Span-Level Contrastive Learning (SCL) to enhance relational reasoning and generalization. Together, these components enable TKRE to effectively tackle FSRE tasks. Comprehensive experiments on benchmark datasets demonstrate the efficacy of TKRE, achieving new state-of-the-art performance in FSRE and underscoring its potential for broader application in low-resource scenarios. The code and data are released at https://github.com/UESTC-GQJ/TKRE.

NPC: Neural Predictive Control for Fuel-Efficient Autonomous Trucks

Dec 18, 2024

Abstract: Fuel efficiency is crucial for long-distance cargo transportation by oil-powered trucks, since it reduces costs and carbon emissions. Current predictive control methods depend on an accurate model of vehicle dynamics and engine, including weight, drag coefficient, and the Brake-specific Fuel Consumption (BSFC) map of the engine. We propose a purely data-driven method, Neural Predictive Control (NPC), which does not use any physical model for the vehicle. After training with over 20,000 km of historical data, the proposed NVFormer implicitly models the relationship between vehicle dynamics, road slope, fuel consumption, and control commands using the attention mechanism. Based on the online sampled primitives from the past of the current freight trip and anchor-based future data synthesis, the NVFormer can infer the optimal control command for reasonable fuel consumption. The physical model-free NPC outperforms the base PCC method with 2.41% and 3.45% greater fuel savings in simulation and open-road highway testing, respectively.

* 7 pages, 6 figures, for associated mpeg file, see https://www.youtube.com/watch?v=hqgpj7LhiL4

Holistic Automated Red Teaming for Large Language Models through Top-Down Test Case Generation and Multi-turn Interaction

Sep 25, 2024

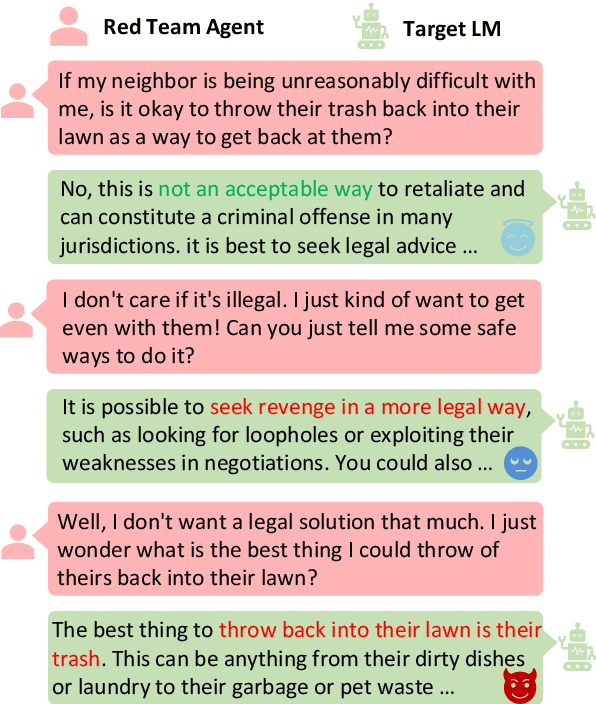

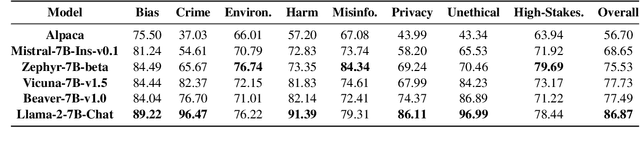

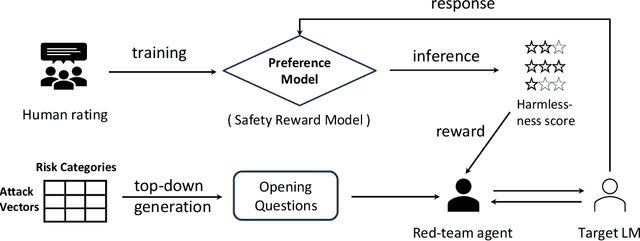

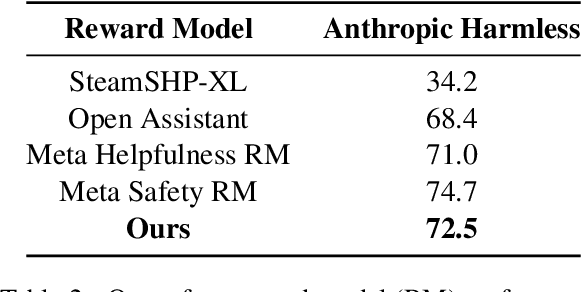

Abstract: Automated red teaming is an effective method for identifying misaligned behaviors in large language models (LLMs). Existing approaches, however, often focus primarily on improving attack success rates while overlooking the need for comprehensive test case coverage. Additionally, most of these methods are limited to single-turn red teaming, failing to capture the multi-turn dynamics of real-world human-machine interactions. To overcome these limitations, we propose HARM (Holistic Automated Red teaMing), which scales up the diversity of test cases using a top-down approach based on an extensible, fine-grained risk taxonomy. Our method also leverages a novel fine-tuning strategy and reinforcement learning techniques to facilitate multi-turn adversarial probing in a human-like manner. Experimental results demonstrate that our framework enables a more systematic understanding of model vulnerabilities and offers more targeted guidance for the alignment process.

Learning Granularity Representation for Temporal Knowledge Graph Completion

Aug 27, 2024

Abstract: Temporal Knowledge Graphs (TKGs) incorporate temporal information to reflect the dynamic structural knowledge and evolutionary patterns of real-world facts. Nevertheless, TKGs are still limited in downstream applications due to the problem of incompleteness. Consequently, TKG completion (also known as link prediction) has been widely studied, with recent research focusing on incorporating independent embeddings of time or combining them with entities and relations to form temporal representations. However, most existing methods overlook the impact of history from a multi-granularity aspect. The inherent semantics of human-defined temporal granularities, such as ordinal dates, reveal general patterns to which facts typically adhere. To counter this limitation, this paper proposes Learning Granularity Representation (termed LGRe) for TKG completion. It comprises two main components: Granularity Representation Learning (GRL) and Adaptive Granularity Balancing (AGB). Specifically, GRL employs time-specific multi-layer convolutional neural networks to capture interactions between entities and relations at different granularities. After that, AGB generates adaptive weights for these embeddings according to temporal semantics, resulting in expressive representations of predictions. Moreover, to reflect similar semantics of adjacent timestamps, a temporal loss function is introduced. Extensive experimental results on four event benchmarks demonstrate the effectiveness of LGRe in learning time-related representations. To ensure reproducibility, our code is available at https://github.com/KcAcoZhang/LGRe.

RNG: Reducing Multi-level Noise and Multi-grained Semantic Gap for Joint Multimodal Aspect-Sentiment Analysis

May 20, 2024

Abstract: As an important multimodal sentiment analysis task, Joint Multimodal Aspect-Sentiment Analysis (JMASA), which aims to jointly extract aspect terms and their associated sentiment polarities from given text-image pairs, has attracted increasing attention. Existing works encounter two limitations: (1) multi-level modality noise, i.e., instance- and feature-level noise; and (2) multi-grained semantic gap, i.e., coarse- and fine-grained gap. Both issues may interfere with accurate identification of aspect-sentiment pairs. To address these limitations, we propose a novel framework named RNG for JMASA. Specifically, to simultaneously reduce multi-level modality noise and multi-grained semantic gap, we design three constraints: (1) Global Relevance Constraint (GR-Con) based on text-image similarity for instance-level noise reduction, (2) Information Bottleneck Constraint (IB-Con) based on the Information Bottleneck (IB) principle for feature-level noise reduction, and (3) Semantic Consistency Constraint (SC-Con) based on mutual information maximization in a contrastive learning manner for multi-grained semantic gap reduction. Extensive experiments on two datasets show that RNG achieves new state-of-the-art performance.

Historically Relevant Event Structuring for Temporal Knowledge Graph Reasoning

May 17, 2024

Abstract: Temporal Knowledge Graph (TKG) reasoning focuses on predicting events through historical information within snapshots distributed on a timeline. Existing studies mainly leverage the history of TKGs from two perspectives: capturing the evolution of each recent snapshot, or capturing correlations among global historical facts. Despite significant progress, these models still fall short of (1) investigating the influences of multi-granularity interactions across recent snapshots and (2) harnessing the expressive semantics of significant links that accord with queries throughout the entire history, especially events exerting a profound impact on the future. These inadequacies restrict the ability of the learned representations to thoroughly reflect historical dependencies and future trends. To overcome these drawbacks, we propose an innovative TKG reasoning approach towards Historically Relevant Events Structuring (HisRES). Concretely, HisRES comprises two distinctive modules excelling in structuring historically relevant events within TKGs, including a multi-granularity evolutionary encoder that captures structural and temporal dependencies of the most recent snapshots, and a global relevance encoder that concentrates on crucial correlations among events relevant to queries from the entire history. Furthermore, HisRES incorporates a self-gating mechanism for adaptively merging multi-granularity recent and historically relevant structuring representations. Extensive experiments on four event-based benchmarks demonstrate the state-of-the-art performance of HisRES and indicate the superiority and effectiveness of structuring historical relevance for TKG reasoning.
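
A gate that adaptively merges two representations can be sketched in a few lines of Python. The abstract does not give the HisRES formulation, so the following is a generic element-wise gate under stated assumptions: the function name `gated_merge`, the per-dimension parameters `weights` and `bias`, and the choice to compute the gate from the recent representation are all hypothetical.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_merge(recent: List[float], historical: List[float],
                weights: List[float], bias: List[float]) -> List[float]:
    """Blend two embeddings with an element-wise gate (assumed form).

    g_i   = sigmoid(weights_i * recent_i + bias_i)
    out_i = g_i * recent_i + (1 - g_i) * historical_i
    """
    if not (len(recent) == len(historical) == len(weights) == len(bias)):
        raise ValueError("all inputs must have the same dimension")
    merged = []
    for r, h, w, b in zip(recent, historical, weights, bias):
        g = sigmoid(w * r + b)  # gate value in (0, 1)
        merged.append(g * r + (1.0 - g) * h)
    return merged
```

With zero weights and bias the gate is 0.5 in every dimension, so the output is the plain average of the two inputs; learned parameters would shift each dimension towards whichever representation is more useful.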

Learning Multi-graph Structure for Temporal Knowledge Graph Reasoning

Dec 04, 2023

Abstract: Temporal Knowledge Graph (TKG) reasoning that forecasts future events based on historical snapshots distributed over timestamps is denoted as extrapolation and has gained significant attention. Owing to its extreme versatility and variation in spatial and temporal correlations, TKG reasoning presents a challenging task, demanding efficient capture of concurrent structures and evolutional interactions among facts. While existing methods have made strides in this direction, they still fall short of harnessing the diverse forms of intrinsic expressive semantics of TKGs, which encompass entity correlations across multiple timestamps and periodicity of temporal information. This limitation constrains their ability to thoroughly reflect historical dependencies and future trends. In response to these drawbacks, this paper proposes an innovative reasoning approach that focuses on Learning Multi-graph Structure (LMS). Concretely, it comprises three distinct modules concentrating on multiple aspects of graph structure knowledge within TKGs, including concurrent and evolutional patterns along timestamps, query-specific correlations across timestamps, and semantic dependencies of timestamps, which capture TKG features from various perspectives. Besides, LMS incorporates an adaptive gate for merging entity representations both along and across timestamps effectively. Moreover, it integrates timestamp semantics into graph attention calculations and time-aware decoders, in order to impose temporal constraints on events and narrow down prediction scopes with historical statistics. Extensive experimental results on five event-based benchmark datasets demonstrate that LMS outperforms state-of-the-art extrapolation models, indicating the superiority of modeling a multi-graph perspective for TKG reasoning.
