Jianxin Li

Beihang University

Deep Learning Schema-based Event Extraction: Literature Review and Current Trends

Jul 22, 2021

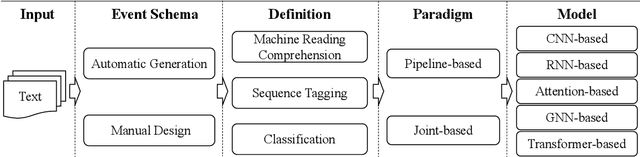

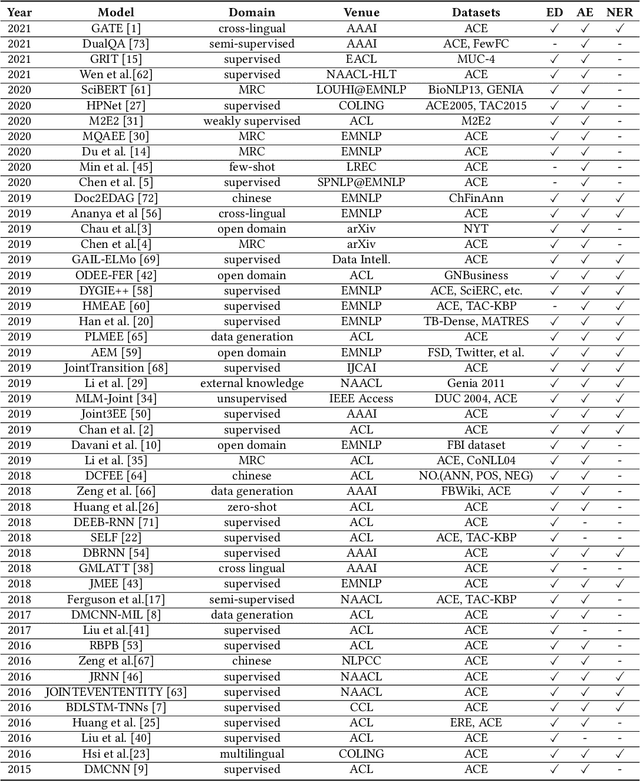

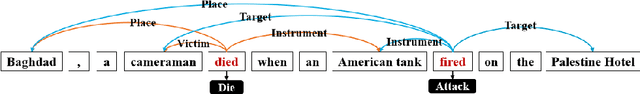

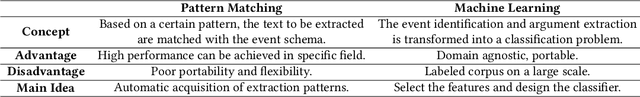

Abstract: Schema-based event extraction is a critical technique for quickly grasping the essential content of events. With the rapid development of deep learning, deep learning-based event extraction has become a research hotspot. Numerous methods, datasets, and evaluation metrics have been proposed in the literature, raising the need for a comprehensive and updated survey. This paper fills the gap by reviewing the state-of-the-art approaches, focusing on deep learning-based models. We summarize the task definition, paradigm, and models of schema-based event extraction and then discuss each of these in detail. We introduce benchmark datasets that support tests of predictions and evaluation metrics. A comprehensive comparison between different techniques is also provided in this survey. Finally, we conclude by summarizing future research directions for the area.

Reinforcement Learning-based Dialogue Guided Event Extraction to Exploit Argument Relations

Jun 23, 2021

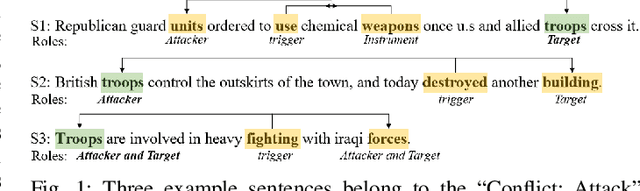

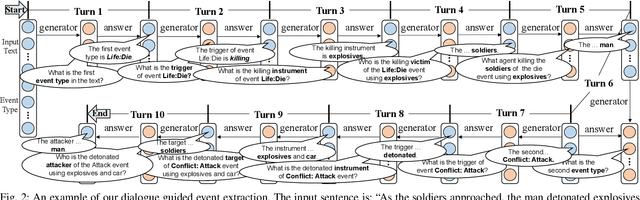

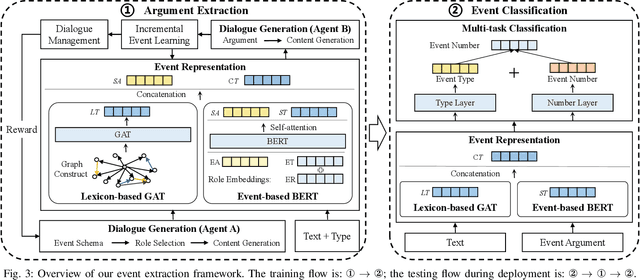

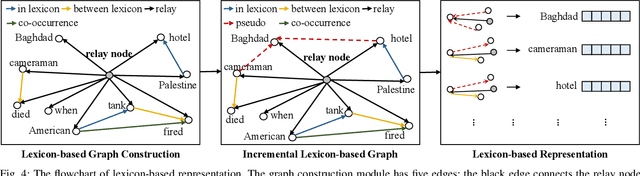

Abstract: Event extraction is a fundamental task in natural language processing. Finding the roles of event arguments, such as event participants, is essential for event extraction. However, doing so for real-life event descriptions is challenging because an argument's role often varies across contexts. While the relationships and interactions between multiple arguments are useful for settling the argument roles, such information is largely ignored by existing approaches. This paper presents a better approach for event extraction by explicitly utilizing the relationships of event arguments. We achieve this through a carefully designed task-oriented dialogue system. To model the argument relations, we employ reinforcement learning and incremental learning to extract multiple arguments via a multi-turn, iterative process. Our approach leverages knowledge of the already extracted arguments of the same sentence to determine the role of arguments that would be difficult to decide individually. It then uses the newly obtained information to improve the decisions of previously extracted arguments. This two-way feedback process allows us to exploit the argument relations to effectively settle argument roles, leading to better sentence understanding and event extraction. Experimental results show that our approach consistently outperforms seven state-of-the-art event extraction methods on event classification, argument role classification, and argument identification.

RoSearch: Search for Robust Student Architectures When Distilling Pre-trained Language Models

Jun 07, 2021

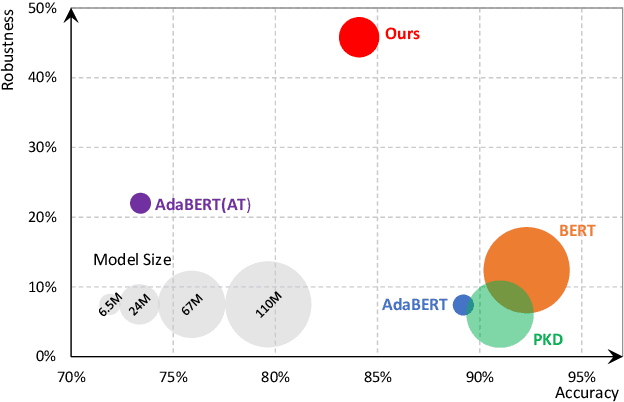

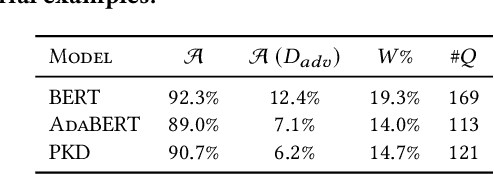

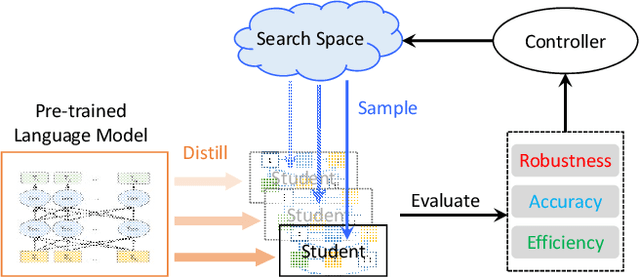

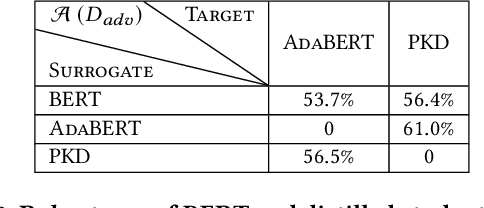

Abstract: Pre-trained language models achieve outstanding performance in NLP tasks. Various knowledge distillation methods have been proposed to reduce the heavy computation and storage requirements of pre-trained language models. However, from our observations, student models acquired by knowledge distillation are vulnerable to adversarial attacks, which limits their usage in security-sensitive scenarios. To overcome these security problems, RoSearch is proposed as a comprehensive framework to search for student models with better adversarial robustness when performing knowledge distillation. A directed acyclic graph based search space is built, and an evolutionary search strategy is used to guide the search. Each searched architecture is trained by knowledge distillation on a pre-trained language model and then evaluated under a robustness-, accuracy- and efficiency-aware metric as environmental fitness. Experimental results show that RoSearch can improve the robustness of student models from 7% to 18% up to 45.8% to 47.8% on different datasets, with a weight compression ratio comparable to existing distillation methods (a 4.6× to 6.5× improvement over the teacher model BERT_BASE) and a low accuracy drop. In addition, we summarize the relationship between student architecture and robustness through statistics of the searched models.

Attend and Select: A Segment Attention based Selection Mechanism for Microblog Hashtag Generation

Jun 06, 2021

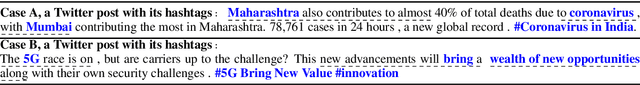

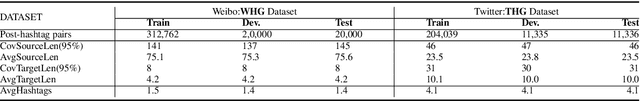

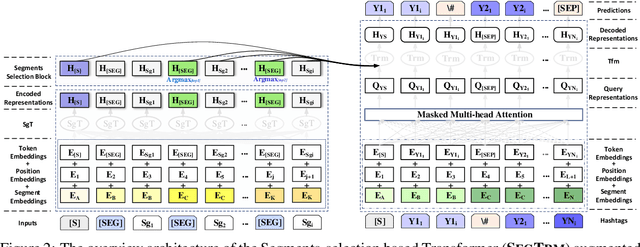

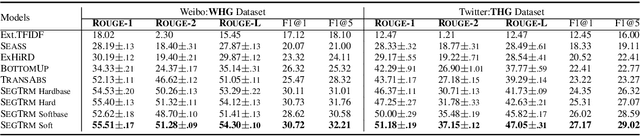

Abstract: Automatic microblog hashtag generation can help us understand or process the critical content of microblog posts better and faster. Conventional sequence-to-sequence generation methods can produce phrase-level hashtags and have achieved remarkable performance on this task. However, they cannot filter out secondary information and are not good at capturing the discontinuous semantics among crucial tokens. A hashtag is formed by tokens or phrases that may originate from various fragmentary segments of the original text. In this work, we propose an end-to-end Transformer-based generation model that consists of three phases: encoding, segment selection, and decoding. The model transforms discontinuous semantic segments from the source text into a sequence of hashtags. Specifically, we introduce a novel Segments Selection Mechanism (SSM) for the Transformer to obtain segmental representations tailored to phrase-level hashtag generation. In addition, we introduce two large-scale hashtag generation datasets, newly collected from Chinese Weibo and English Twitter. Extensive evaluations on the two datasets show our approach's superiority, with significant improvements over extraction and generation baselines. The code and datasets are available at https://github.com/OpenSUM/HashtagGen.

Automated Timeline Length Selection for Flexible Timeline Summarization

May 29, 2021

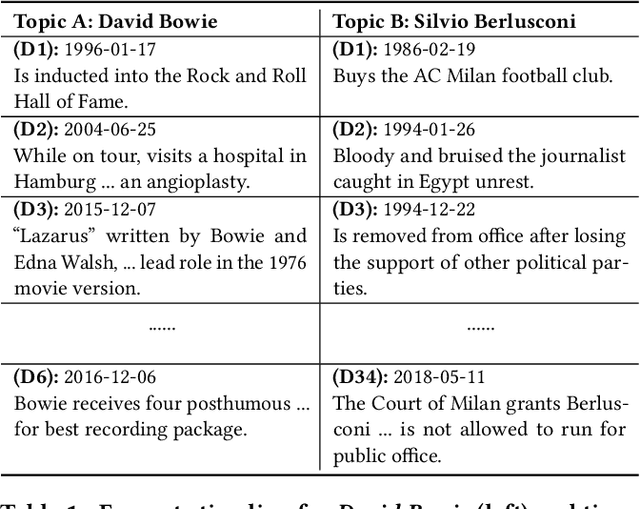

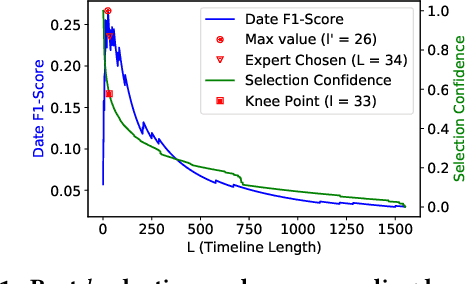

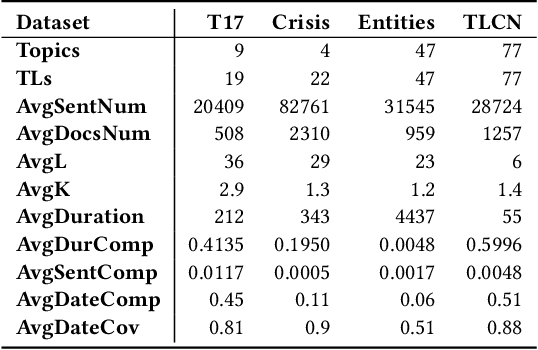

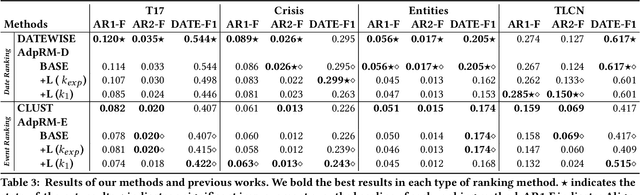

Abstract: By producing summaries for long-running events, timeline summarization (TLS) underpins many information retrieval tasks. Successful TLS requires identifying an appropriate set of key dates (the timeline length) to cover. However, doing so is challenging because the right length can change from one topic to another. Existing TLS solutions rely on either an event-agnostic fixed length or an expert-supplied setting. Neither strategy is desirable for real-life TLS scenarios. A fixed, event-agnostic setting ignores the diversity of events and their development and hence can lead to low-quality TLS. Relying on expert-crafted settings is neither scalable nor sustainable for processing many dynamically changing events. This paper presents a better TLS approach for automatically and dynamically determining the TLS timeline length. We achieve this by employing the established elbow method from the machine learning community to automatically find the minimum number of dates within the time series needed to generate concise and informative summaries. We applied our approach to four TLS datasets in English and Chinese and compared it against three prior methods. Experimental results show that our approach delivers comparable or even better summaries than state-of-the-art TLS methods, and it achieves this without expert involvement.
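The abstract does not say which elbow criterion the authors use, so the following is only a minimal sketch of one common variant: pick the point on a decreasing curve that lies farthest from the straight line joining its first and last points. The function name `elbow_index` and the sample scores are hypothetical and are not taken from the paper.

```python
from math import hypot


def elbow_index(values):
    """Return the index of the point farthest from the chord that joins
    the first and last points of the curve (a common elbow heuristic)."""
    n = len(values)
    if n < 3:
        return 0  # too few points to form an elbow
    # Chord from (0, values[0]) to (n - 1, values[-1]).
    dx = n - 1
    dy = values[-1] - values[0]
    norm = hypot(dx, dy)
    best_i, best_d = 0, -1.0
    for i, y in enumerate(values):
        # Perpendicular distance from (i, y) to the chord.
        d = abs(dy * i - dx * (y - values[0])) / norm
        if d > best_d:
            best_i, best_d = i, d
    return best_i


# Hypothetical per-length scores: entry i is the score for i + 1 dates.
scores = [100, 60, 30, 12, 10, 9, 8]
chosen_length = elbow_index(scores) + 1
```

With these made-up scores the curve flattens after the fourth entry, so the heuristic selects a timeline of four dates. The result depends on how the two axes are scaled, which is one reason to treat this as an illustration rather than the paper's procedure.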

Noised Consistency Training for Text Summarization

May 28, 2021

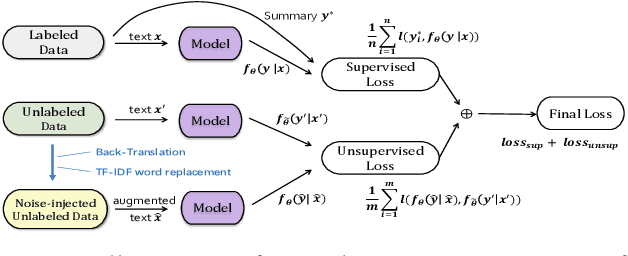

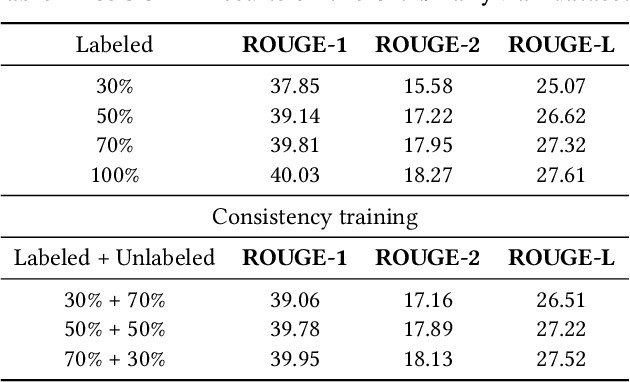

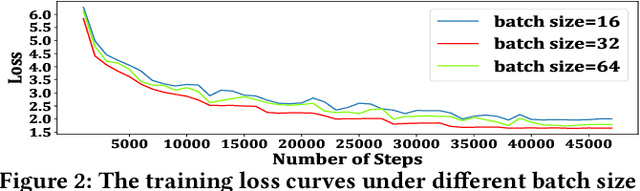

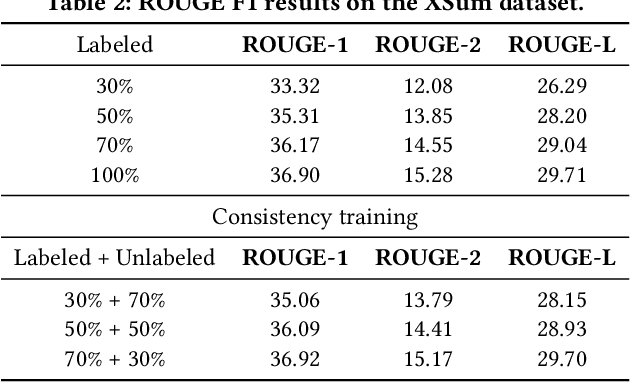

Abstract: Neural abstractive summarization methods often require large quantities of labeled training data. However, labeling large amounts of summarization data is often prohibitive due to time, financial, and expertise constraints, which has limited the usefulness of summarization systems in practical applications. In this paper, we argue that this limitation can be overcome by a semi-supervised approach: consistency training, which leverages large amounts of unlabeled data to improve the performance of supervised learning over a small corpus. Consistency regularization in semi-supervised learning regularizes model predictions to be invariant to small noise applied to input articles. By adding a noised unlabeled corpus to help regularize consistency training, this framework obtains comparable performance without using the full dataset. In particular, we have verified that leveraging large amounts of unlabeled data decently improves the performance of supervised learning over an insufficient labeled dataset.
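As a rough illustration of consistency regularization in general, the sketch below combines a supervised cross-entropy term with a KL-divergence term that penalizes disagreement between predictions on a clean input and on a noised copy. It works on toy categorical logits rather than a summarization model, and the function names and the `weight` parameter are assumptions, not details from the paper.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same support."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def consistency_loss(sup_logits, label, clean_logits, noised_logits, weight=1.0):
    """Supervised cross-entropy on a labeled example plus a weighted
    consistency term on an unlabeled example and its noised version."""
    cross_entropy = -math.log(softmax(sup_logits)[label])
    consistency = kl_divergence(softmax(clean_logits), softmax(noised_logits))
    return cross_entropy + weight * consistency


# If noise does not change the prediction, only the supervised term remains.
loss = consistency_loss([0.0, 0.0], 0, [1.0, 2.0], [1.0, 2.0])
```

The unlabeled term needs no reference summary, which is what lets a large unlabeled corpus contribute to training in this kind of setup.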

Graph Entropy Guided Node Embedding Dimension Selection for Graph Neural Networks

May 24, 2021

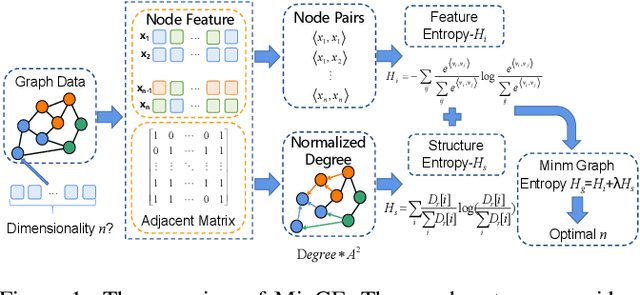

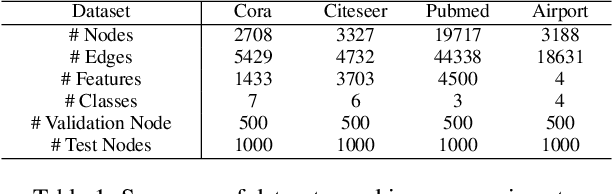

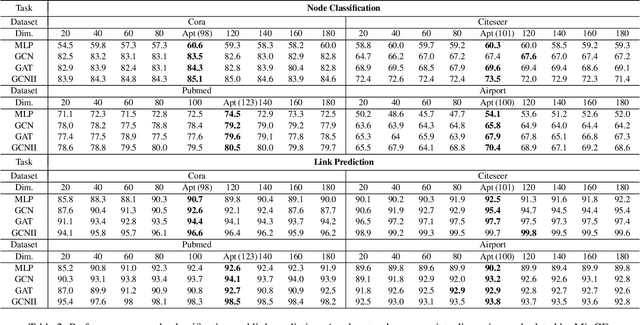

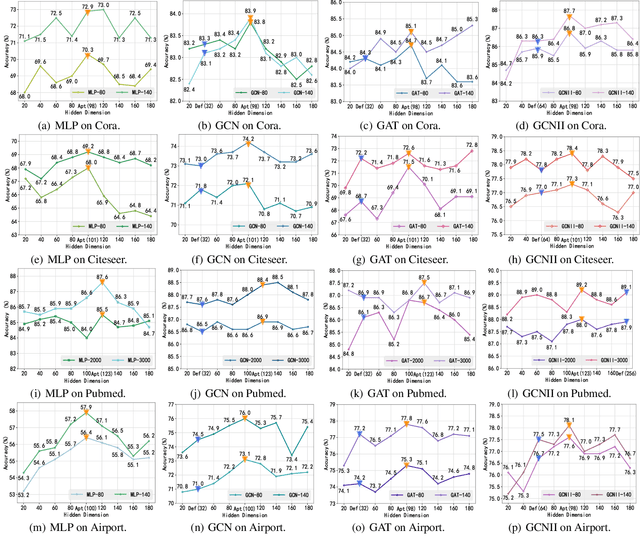

Abstract: Graph representation learning has achieved great success in many areas, including e-commerce, chemistry, and biology. However, the fundamental problem of choosing the appropriate node embedding dimension for a given graph remains unsolved. The commonly used strategies for Node Embedding Dimension Selection (NEDS), based on grid search or empirical knowledge, suffer from heavy computation and poor model performance. In this paper, we revisit NEDS from the perspective of the minimum entropy principle. We then propose a novel Minimum Graph Entropy (MinGE) algorithm for NEDS with graph data. Specifically, MinGE considers both feature entropy and structure entropy on graphs, which are carefully designed according to the characteristics of the rich information in them. The feature entropy, which assumes the embeddings of adjacent nodes to be more similar, connects node features and link topology on graphs. The structure entropy takes the normalized degree as its basic unit to further measure the higher-order structure of graphs. Based on them, we design MinGE to directly calculate the ideal node embedding dimension for any graph. Finally, comprehensive experiments with popular Graph Neural Networks (GNNs) on benchmark datasets demonstrate the effectiveness and generalizability of our proposed MinGE.
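The abstract does not give MinGE's formulas. To make the idea of an entropy built from normalized degrees concrete, here is a minimal sketch of a plain first-order Shannon entropy over the normalized degree distribution. It is not the paper's higher-order structure entropy, and the function name and the adjacency-dictionary input format are assumptions.

```python
import math


def degree_entropy(adjacency):
    """Shannon entropy of the normalized degree distribution, where
    `adjacency` maps each node to a collection of its neighbors."""
    degrees = [len(neighbors) for neighbors in adjacency.values()]
    total = sum(degrees)
    if total == 0:
        return 0.0  # a graph without edges carries no degree information
    entropy = 0.0
    for d in degrees:
        if d > 0:
            p = d / total  # normalized degree of this node
            entropy -= p * math.log(p)
    return entropy


# A 4-cycle (uniform degrees) versus a star with one hub and three leaves.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
star = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
```

Under this simple measure the ring, whose degrees are uniform, has higher entropy than the star, whose degree mass is concentrated on the hub.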

A Robust and Generalized Framework for Adversarial Graph Embedding

May 22, 2021

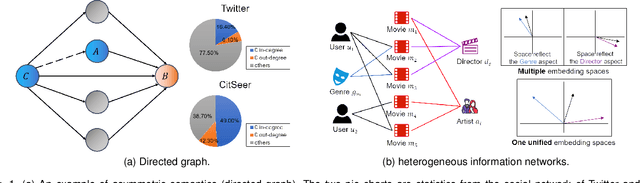

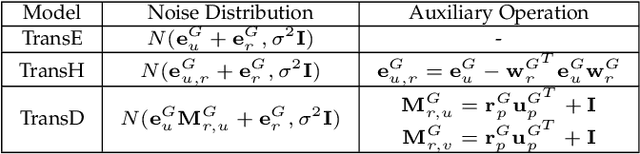

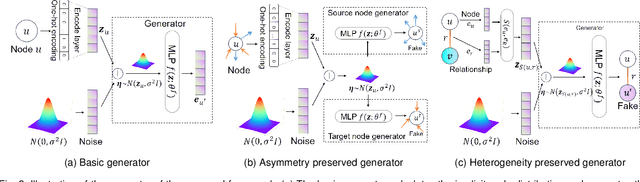

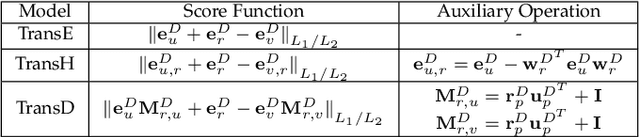

Abstract: Graph embedding is essential for graph mining tasks. With the prevalence of graph data in real-world applications, many methods have been proposed in recent years to learn high-quality graph embedding vectors for various types of graphs. However, most existing methods randomly select negative samples from the original graph to enhance the training data without considering the noise. In addition, most of these methods focus only on the explicit graph structures and cannot fully capture the complex semantics of edges, such as various relationships or asymmetry. To address these issues, and inspired by generative adversarial networks, we propose a robust and generalized framework for adversarial graph embedding, named AGE. AGE generates fake neighbor nodes as enhanced negative samples from the implicit distribution, and enables the discriminator and generator to jointly learn each node's robust and generalized representation. Based on this framework, we propose three models to handle three types of graph data and derive the corresponding optimization algorithms: UG-AGE and DG-AGE for undirected and directed homogeneous graphs, respectively, and HIN-AGE for heterogeneous information networks. Extensive experiments show that our methods consistently and significantly outperform existing state-of-the-art methods across multiple graph mining tasks, including link prediction, node classification, and graph reconstruction.

Federated Knowledge Graphs Embedding

May 17, 2021

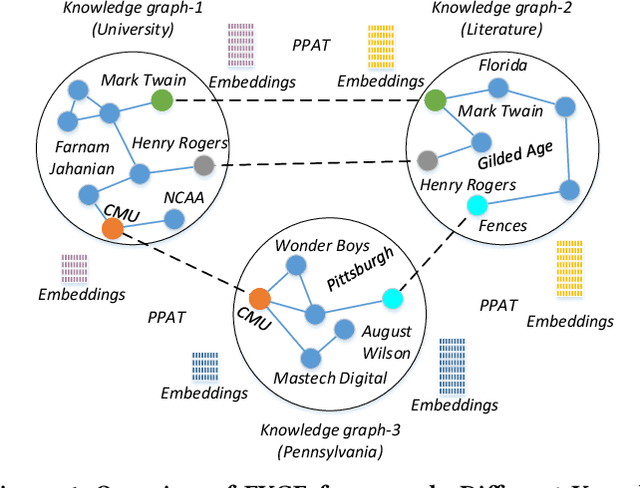

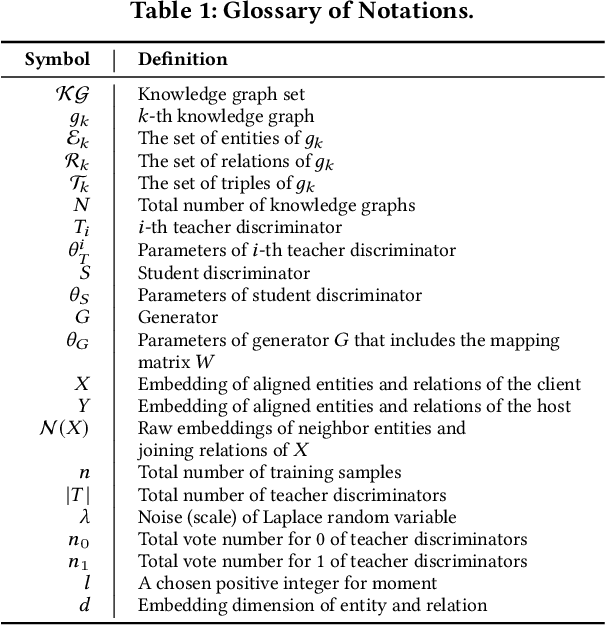

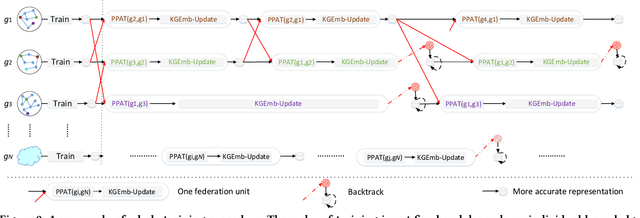

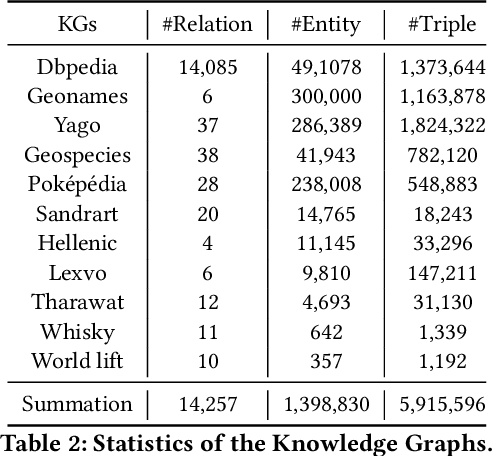

Abstract: In this paper, we propose a novel decentralized, scalable learning framework, Federated Knowledge Graphs Embedding (FKGE), in which embeddings from different knowledge graphs can be learned in an asynchronous and peer-to-peer manner while preserving privacy. FKGE exploits adversarial generation between pairs of knowledge graphs to translate identical entities and relations of different domains into nearby embedding spaces. To protect the privacy of the training data, FKGE further implements a privacy-preserving neural network structure to guarantee no raw data leakage. We conduct extensive experiments to evaluate FKGE on 11 knowledge graphs, demonstrating a significant and consistent improvement in model quality, with increases of at most 17.85% and 7.90% in performance on triple classification and link prediction tasks.

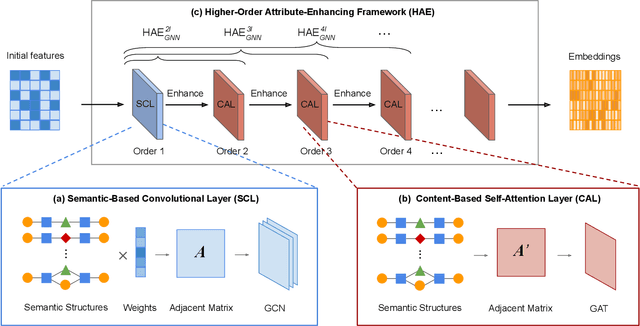

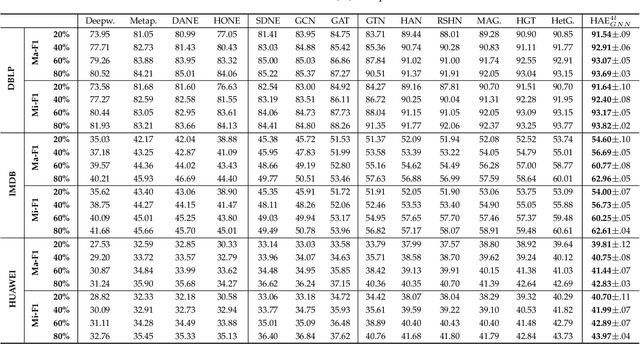

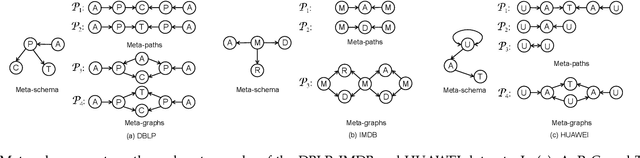

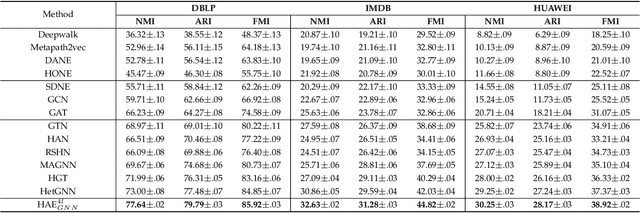

Higher-Order Attribute-Enhancing Heterogeneous Graph Neural Networks

Apr 16, 2021

Abstract: Graph neural networks (GNNs) have been widely used in deep learning on graphs. They can learn effective node representations that achieve superior performance in graph analysis tasks such as node classification and node clustering. However, most methods ignore the heterogeneity in real-world graphs. Methods designed for heterogeneous graphs, on the other hand, fail to learn complex semantic representations because they only use meta-paths instead of meta-graphs. Furthermore, they cannot fully capture the content-based correlations between nodes, as they either do not use the self-attention mechanism or only use it to consider the immediate neighbors of each node, ignoring the higher-order neighbors. We propose a novel Higher-order Attribute-Enhancing (HAE) framework that enhances node embedding in a layer-by-layer manner. Under the HAE framework, we propose a Higher-order Attribute-Enhancing Graph Neural Network (HAEGNN) for heterogeneous network representation learning. HAEGNN simultaneously incorporates meta-paths and meta-graphs for rich, heterogeneous semantics, and leverages the self-attention mechanism to explore content-based node interactions. The unique higher-order architecture of HAEGNN allows examining the first-order as well as higher-order neighborhoods. Moreover, HAEGNN shows good explainability, as it learns the importance of different meta-paths and meta-graphs. HAEGNN is also memory-efficient because it avoids per-meta-path matrix calculation. Experimental results not only show HAEGNN's superior performance against state-of-the-art methods in node classification, node clustering, and visualization, but also demonstrate its advantages in memory efficiency and explainability.
