Huamin Qu

SirenLess: reveal the intention behind news

Jan 08, 2020

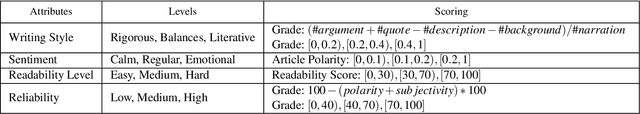

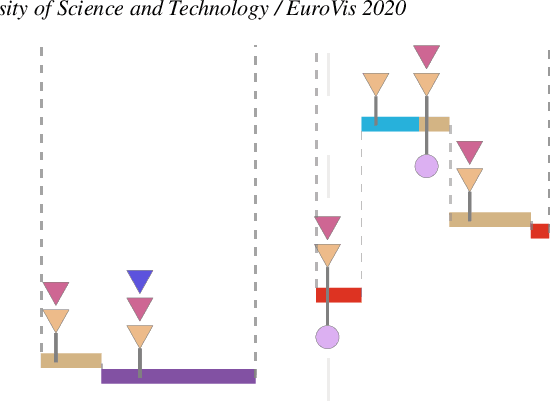

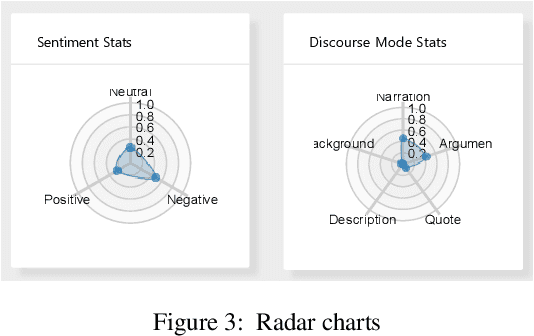

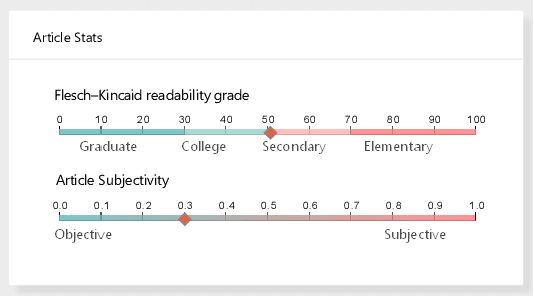

Abstract:News articles tend to be increasingly misleading nowadays, preventing readers from making subjective judgments towards certain events. While some machine learning approaches have been proposed to detect misleading news, most of them are black boxes that provide limited help for humans in decision making. In this paper, we present SirenLess, a visual analytical system for misleading news detection by linguistic features. The system features article explorer, a novel interactive tool that integrates news metadata and linguistic features to reveal semantic structures of news articles and facilitate textual analysis. We use SirenLess to analyze 18 news articles from different sources and summarize some helpful patterns for misleading news detection. A user study with journalism professionals and university students is conducted to confirm the usefulness and effectiveness of our system.

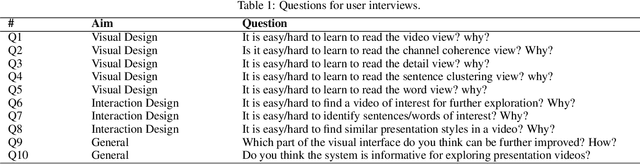

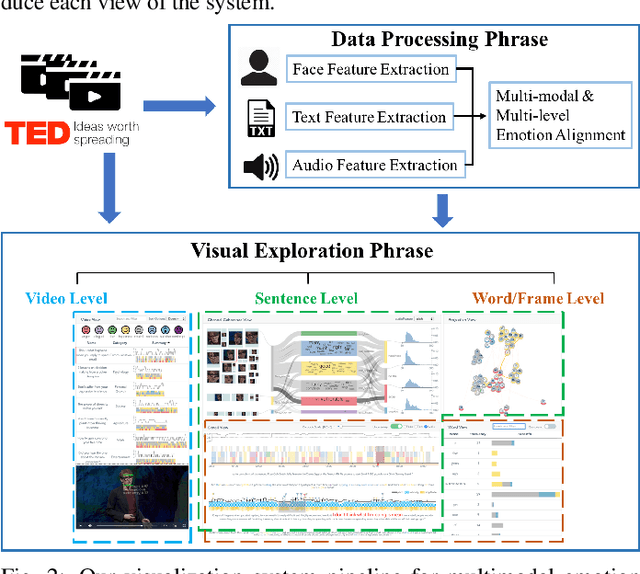

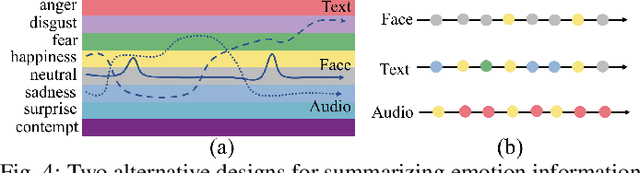

EmoCo: Visual Analysis of Emotion Coherence in Presentation Videos

Jul 29, 2019

Abstract:Emotions play a key role in human communication and public presentations. Human emotions are usually expressed through multiple modalities. Therefore, exploring multimodal emotions and their coherence is of great value for understanding emotional expressions in presentations and improving presentation skills. However, manually watching and studying presentation videos is often tedious and time-consuming. There is a lack of tool support to help conduct an efficient and in-depth multi-level analysis. Thus, in this paper, we introduce EmoCo, an interactive visual analytics system to facilitate efficient analysis of emotion coherence across facial, text, and audio modalities in presentation videos. Our visualization system features a channel coherence view and a sentence clustering view that together enable users to obtain a quick overview of emotion coherence and its temporal evolution. In addition, a detail view and word view enable detailed exploration and comparison from the sentence level and word level, respectively. We thoroughly evaluate the proposed system and visualization techniques through two usage scenarios based on TED Talk videos and interviews with two domain experts. The results demonstrate the effectiveness of our system in gaining insights into emotion coherence in presentations.

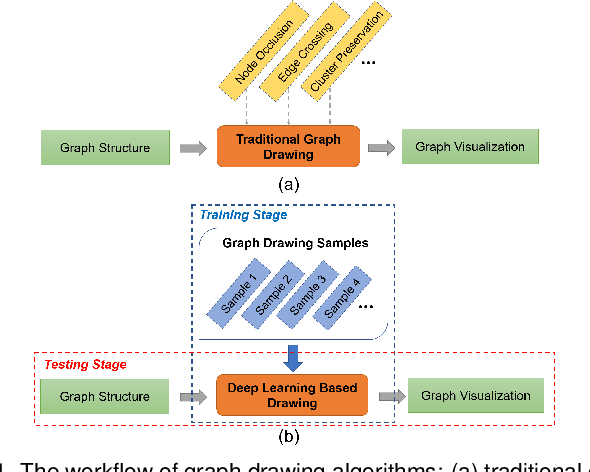

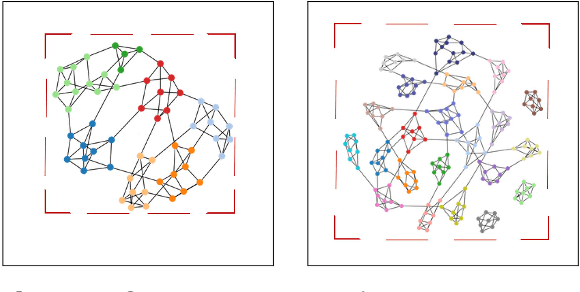

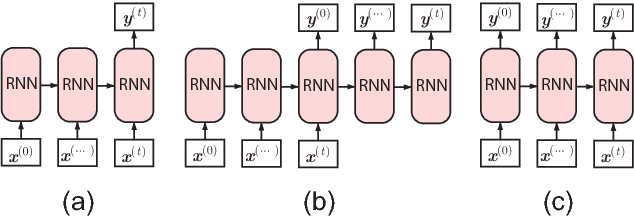

DeepDrawing: A Deep Learning Approach to Graph Drawing

Jul 27, 2019

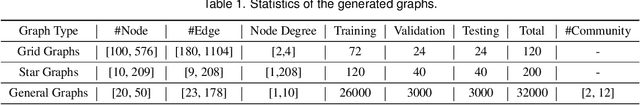

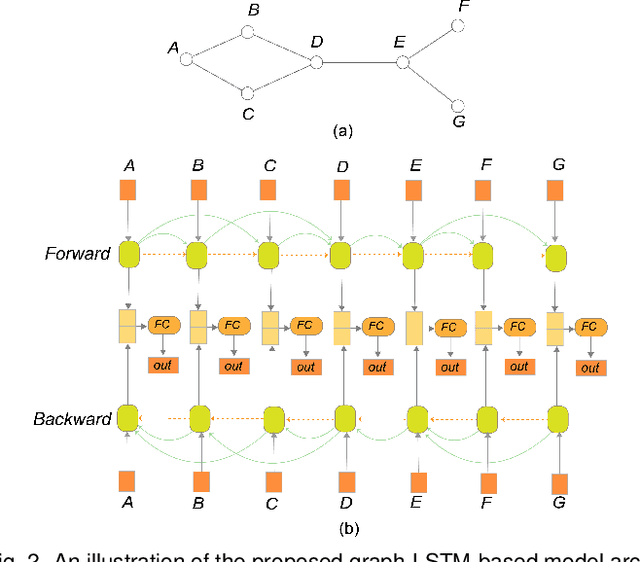

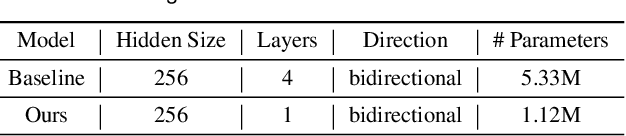

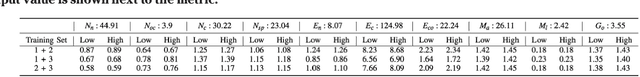

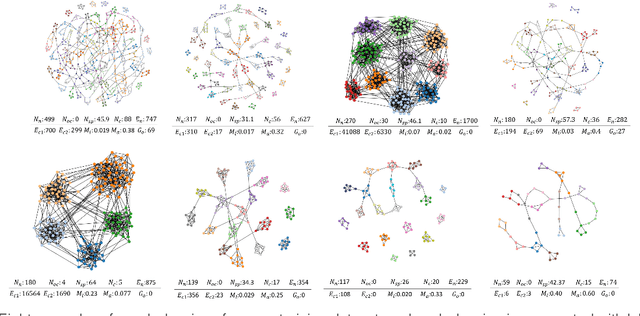

Abstract:Node-link diagrams are widely used to facilitate network explorations. However, when using a graph drawing technique to visualize networks, users often need to tune different algorithm-specific parameters iteratively by comparing the corresponding drawing results in order to achieve a desired visual effect. This trial and error process is often tedious and time-consuming, especially for non-expert users. Inspired by the powerful data modelling and prediction capabilities of deep learning techniques, we explore the possibility of applying deep learning techniques to graph drawing. Specifically, we propose using a graph-LSTM-based approach to directly map network structures to graph drawings. Given a set of layout examples as the training dataset, we train the proposed graph-LSTM-based model to capture their layout characteristics. Then, the trained model is used to generate graph drawings in a similar style for new networks. We evaluated the proposed approach on two special types of layouts (i.e., grid layouts and star layouts) and two general types of layouts (i.e., ForceAtlas2 and PivotMDS) in both qualitative and quantitative ways. The results provide support for the effectiveness of our approach. We also conducted a time cost assessment on the drawings of small graphs with 20 to 50 nodes. We further report the lessons we learned and discuss the limitations and future work.

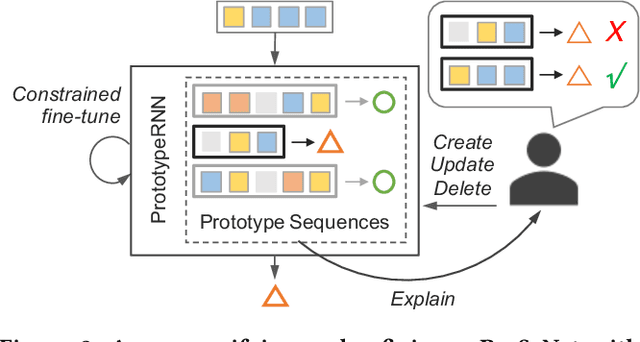

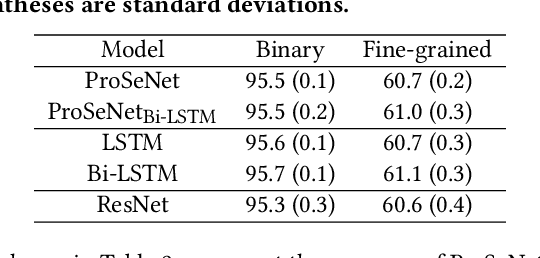

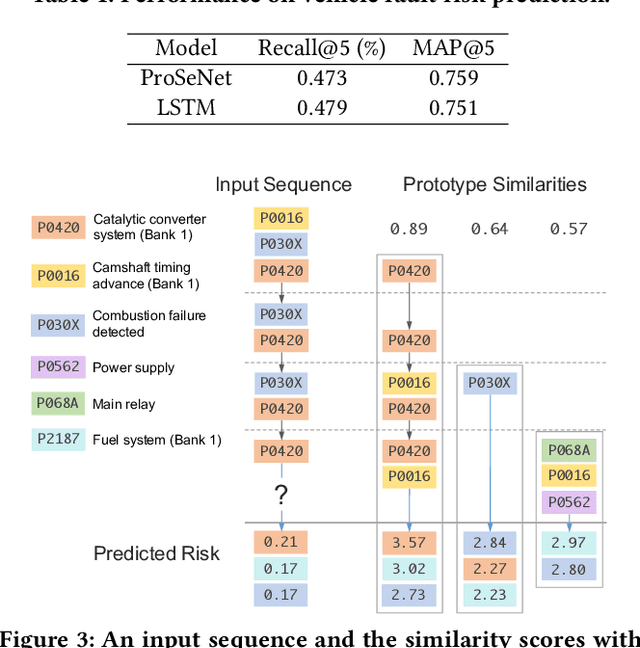

Interpretable and Steerable Sequence Learning via Prototypes

Jul 23, 2019

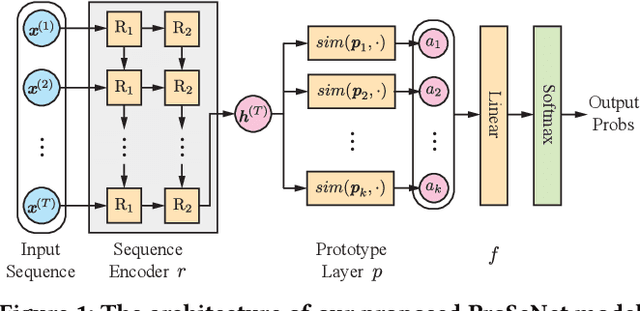

Abstract:One of the major challenges in machine learning nowadays is to provide predictions with not only high accuracy but also user-friendly explanations. Although in recent years we have witnessed increasingly popular use of deep neural networks for sequence modeling, it is still challenging to explain the rationales behind the model outputs, which is essential for building trust and supporting the domain experts to validate, critique and refine the model. We propose ProSeNet, an interpretable and steerable deep sequence model with natural explanations derived from case-based reasoning. The prediction is obtained by comparing the inputs to a few prototypes, which are exemplar cases in the problem domain. For better interpretability, we define several criteria for constructing the prototypes, including simplicity, diversity, and sparsity and propose the learning objective and the optimization procedure. ProSeNet also provides a user-friendly approach to model steering: domain experts without any knowledge on the underlying model or parameters can easily incorporate their intuition and experience by manually refining the prototypes. We conduct experiments on a wide range of real-world applications, including predictive diagnostics for automobiles, ECG, and protein sequence classification and sentiment analysis on texts. The result shows that ProSeNet can achieve accuracy on par with state-of-the-art deep learning models. We also evaluate the interpretability of the results with concrete case studies. Finally, through user study on Amazon Mechanical Turk (MTurk), we demonstrate that the model selects high-quality prototypes which align well with human knowledge and can be interactively refined for better interpretability without loss of performance.

* Accepted as a full paper at KDD 2019 on May 8, 2019
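
The prototype comparison described in the abstract can be illustrated with a minimal sketch. It assumes the input sequence has already been encoded into a fixed-length vector, that similarity is `exp(-squared distance)`, and that a linear layer plus softmax turns similarities into class probabilities. The names `similarity`, `predict`, `prototypes`, and `weights` are hypothetical and the values are toy data, not taken from the paper.

```python
import math

def similarity(embedding, prototype):
    # Assumed similarity: exp of the negative squared Euclidean distance.
    sq_dist = sum((e - p) ** 2 for e, p in zip(embedding, prototype))
    return math.exp(-sq_dist)

def predict(embedding, prototypes, weights):
    # Compare the encoded input against every prototype.
    sims = [similarity(embedding, p) for p in prototypes]
    # weights[c][k] is the (hypothetical) contribution of prototype k to class c.
    logits = [sum(w * s for w, s in zip(row, sims)) for row in weights]
    # Numerically stable softmax over the class logits.
    top = max(logits)
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [x / total for x in exps], sims

# Toy data: two prototypes, each tied to one class.
prototypes = [[0.0, 0.0], [1.0, 1.0]]
weights = [[1.0, 0.0], [0.0, 1.0]]
probs, sims = predict([0.9, 1.1], prototypes, weights)
```

Because the returned similarities show which prototype the input most resembles, they double as a case-based explanation of the prediction.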

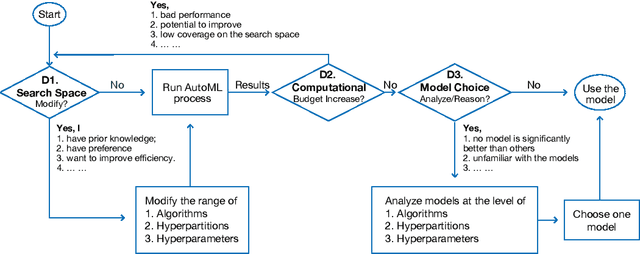

ATMSeer: Increasing Transparency and Controllability in Automated Machine Learning

Feb 13, 2019

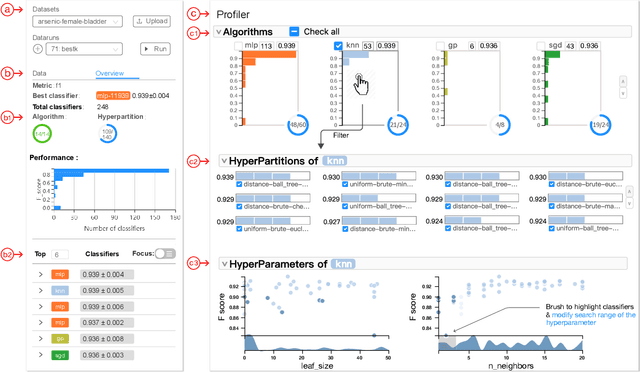

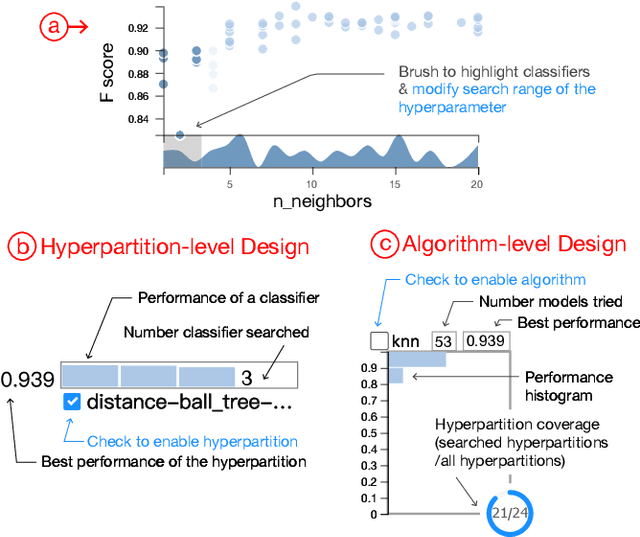

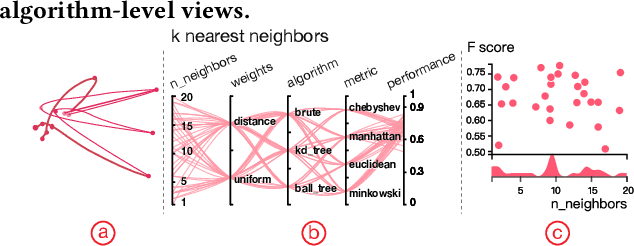

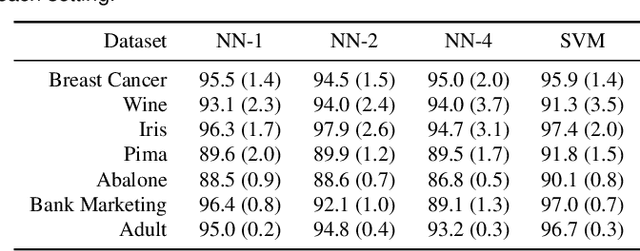

Abstract:To relieve the pain of manually selecting machine learning algorithms and tuning hyperparameters, automated machine learning (AutoML) methods have been developed to automatically search for good models. Due to the huge model search space, it is impossible to try all models. Users tend to distrust automatic results and increase the search budget as much as they can, thereby undermining the efficiency of AutoML. To address these issues, we design and implement ATMSeer, an interactive visualization tool that supports users in refining the search space of AutoML and analyzing the results. To guide the design of ATMSeer, we derive a workflow of using AutoML based on interviews with machine learning experts. A multi-granularity visualization is proposed to enable users to monitor the AutoML process, analyze the searched models, and refine the search space in real time. We demonstrate the utility and usability of ATMSeer through two case studies, expert interviews, and a user study with 13 end users.

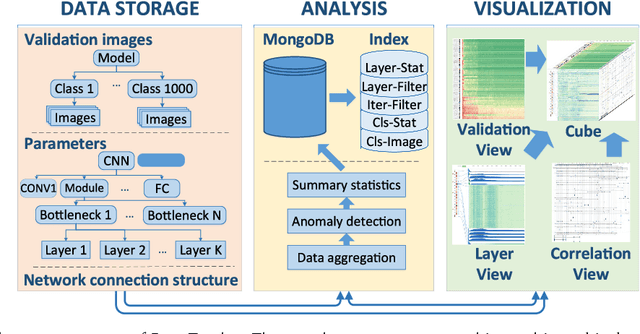

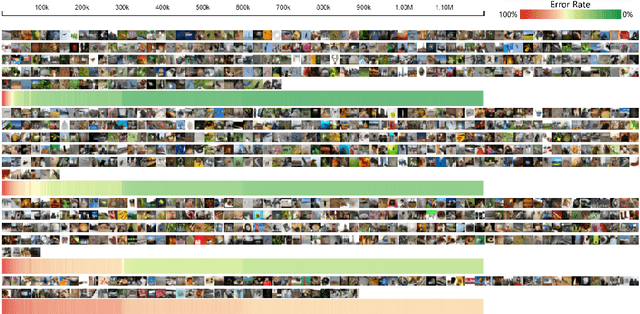

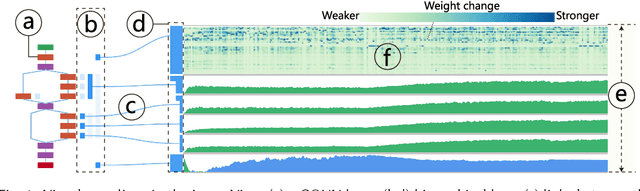

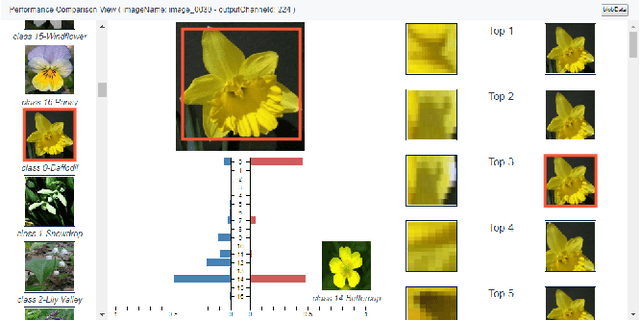

DeepTracker: Visualizing the Training Process of Convolutional Neural Networks

Aug 26, 2018

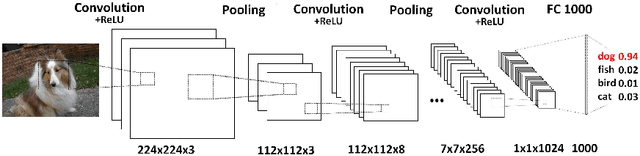

Abstract:Deep convolutional neural networks (CNNs) have achieved remarkable success in various fields. However, training an excellent CNN is practically a trial-and-error process that consumes a tremendous amount of time and computer resources. To accelerate the training process and reduce the number of trials, experts need to understand what has occurred in the training process and why the resulting CNN behaves as such. However, current popular training platforms, such as TensorFlow, only provide very little and general information, such as training/validation errors, which is far from enough to serve this purpose. To bridge this gap and help domain experts with their training tasks in a practical environment, we propose a visual analytics system, DeepTracker, to facilitate the exploration of the rich dynamics of CNN training processes and to identify the unusual patterns that are hidden behind the huge amount of training log. Specifically, we combine a hierarchical index mechanism and a set of hierarchical small multiples to help experts explore the entire training log from different levels of detail. We also introduce a novel cube-style visualization to reveal the complex correlations among multiple types of heterogeneous training data including neuron weights, validation images, and training iterations. Three case studies are conducted to demonstrate how DeepTracker provides its users with valuable knowledge in an industry-level CNN training process, namely in our case, training ResNet-50 on the ImageNet dataset. We show that our method can be easily applied to other state-of-the-art "very deep" CNN models.

Evaluating the Readability of Force Directed Graph Layouts: A Deep Learning Approach

Aug 02, 2018

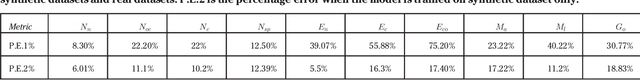

Abstract:Existing graph layout algorithms are usually not able to optimize all the aesthetic properties desired in a graph layout. To evaluate how well the desired visual features are reflected in a graph layout, many readability metrics have been proposed in the past decades. However, the calculation of these readability metrics often requires access to the node and edge coordinates and is usually computationally inefficient, especially for dense graphs. Importantly, when the node and edge coordinates are not accessible, it becomes impossible to evaluate the graph layouts quantitatively. In this paper, we present a novel deep learning-based approach to evaluate the readability of graph layouts by directly using graph images. A convolutional neural network architecture is proposed and trained on a benchmark dataset of graph images, which is composed of synthetically-generated graphs and graphs created by sampling from real large networks. Multiple representative readability metrics (including edge crossing, node spread, and group overlap) are considered in the proposed approach. We quantitatively compare our approach to traditional methods and qualitatively evaluate our approach using a case study and visualizing convolutional layers. This work is a first step towards using deep learning based methods to evaluate images from the visualization field quantitatively.
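
To make concrete why coordinate-based readability metrics are costly, here is a minimal sketch of one such metric, edge crossing count, computed the traditional way from node coordinates. It tests every pair of edges, so the cost grows quadratically with the number of edges. The function names and the toy layout are hypothetical; this is not the paper's CNN-based method, only the kind of baseline it is compared against.

```python
from itertools import combinations

def orient(a, b, c):
    # Sign of the cross product (b - a) x (c - a): which side of line ab point c lies on.
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def segments_cross(p1, p2, p3, p4):
    # True only for proper crossings (endpoints strictly on opposite sides).
    d1 = orient(p3, p4, p1)
    d2 = orient(p3, p4, p2)
    d3 = orient(p1, p2, p3)
    d4 = orient(p1, p2, p4)
    return d1 * d2 < 0 and d3 * d4 < 0

def count_edge_crossings(pos, edges):
    count = 0
    for (a, b), (c, d) in combinations(edges, 2):
        if len({a, b, c, d}) < 4:
            continue  # edges that share a node are not counted as crossing
        if segments_cross(pos[a], pos[b], pos[c], pos[d]):
            count += 1
    return count

# Toy layout: the two diagonals of a unit square cross once.
pos = {"a": (0, 0), "b": (1, 1), "c": (0, 1), "d": (1, 0)}
edges = [("a", "b"), ("c", "d"), ("a", "c")]
crossings = count_edge_crossings(pos, edges)
```

Note that this computation needs `pos`, which is exactly the information that is unavailable when only a rendered image of the layout exists.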

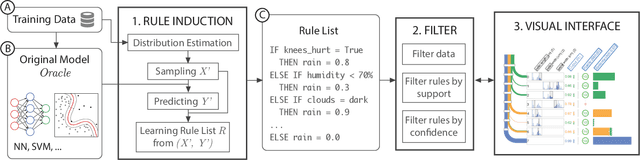

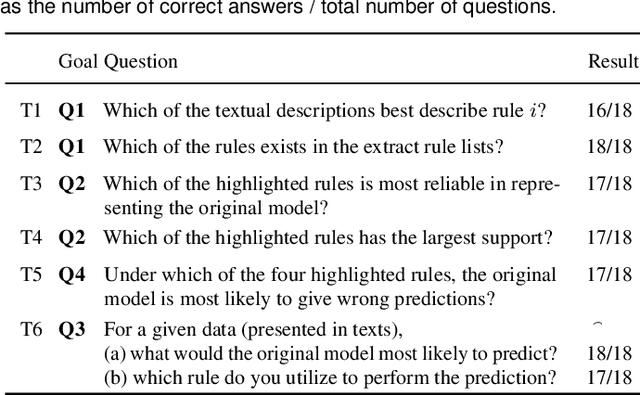

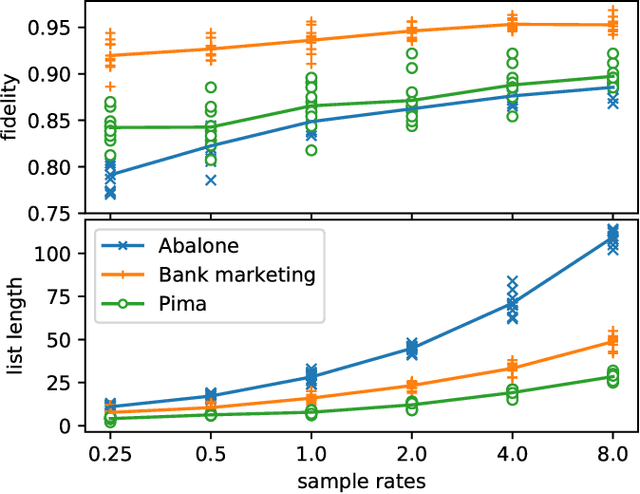

RuleMatrix: Visualizing and Understanding Classifiers with Rules

Jul 17, 2018

Abstract:With the growing adoption of machine learning techniques, there is a surge of research interest towards making machine learning systems more transparent and interpretable. Various visualizations have been developed to help model developers understand, diagnose, and refine machine learning models. However, a large number of potential but neglected users are the domain experts with little knowledge of machine learning but are expected to work with machine learning systems. In this paper, we present an interactive visualization technique to help users with little expertise in machine learning to understand, explore and validate predictive models. By viewing the model as a black box, we extract a standardized rule-based knowledge representation from its input-output behavior. We design RuleMatrix, a matrix-based visualization of rules to help users navigate and verify the rules and the black-box model. We evaluate the effectiveness of RuleMatrix via two use cases and a usability study.

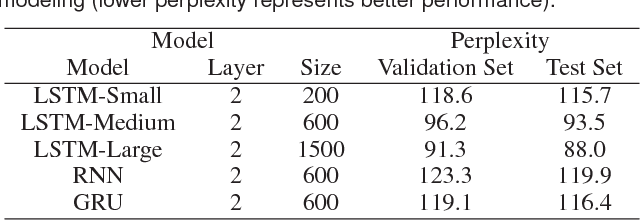

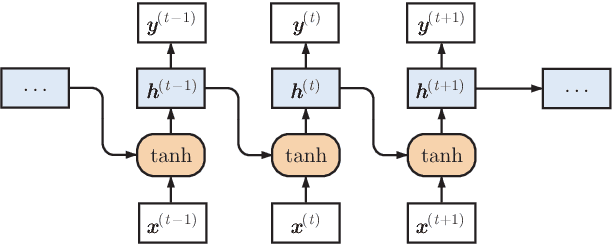

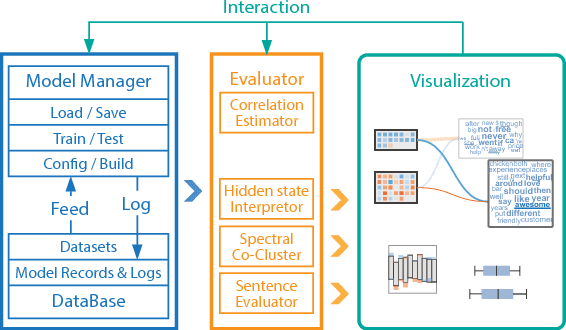

Understanding Hidden Memories of Recurrent Neural Networks

Oct 30, 2017

Abstract:Recurrent neural networks (RNNs) have been successfully applied to various natural language processing (NLP) tasks and achieved better results than conventional methods. However, the lack of understanding of the mechanisms behind their effectiveness limits further improvements on their architectures. In this paper, we present a visual analytics method for understanding and comparing RNN models for NLP tasks. We propose a technique to explain the function of individual hidden state units based on their expected response to input texts. We then co-cluster hidden state units and words based on the expected response and visualize co-clustering results as memory chips and word clouds to provide more structured knowledge on RNNs' hidden states. We also propose a glyph-based sequence visualization based on aggregate information to analyze the behavior of an RNN's hidden state at the sentence-level. The usability and effectiveness of our method are demonstrated through case studies and reviews from domain experts.
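
As a rough illustration of the "expected response" idea, the sketch below averages, for each word, the change it causes in the hidden state. It assumes the response to a word is the difference between the hidden state after and before reading it, and that the initial hidden state is zero; both are assumptions made for this example. The function name and the toy hidden states are hypothetical.

```python
from collections import defaultdict

def expected_response(tokens, hidden_states):
    # hidden_states[t] is the hidden vector after reading tokens[t].
    dim = len(hidden_states[0])
    sums = defaultdict(lambda: [0.0] * dim)
    counts = defaultdict(int)
    prev = [0.0] * dim  # assumed zero initial hidden state
    for tok, h in zip(tokens, hidden_states):
        for i in range(dim):
            sums[tok][i] += h[i] - prev[i]  # change caused by this token
        counts[tok] += 1
        prev = h
    # Average the accumulated changes per word.
    return {tok: [s / counts[tok] for s in sums[tok]] for tok in sums}

# Toy data: a three-token sequence with two hidden units.
tokens = ["good", "bad", "good"]
hidden_states = [[1.0, 0.0], [0.0, 0.5], [1.0, 0.5]]
resp = expected_response(tokens, hidden_states)
```

Per-word response vectors of this kind could then feed a co-clustering of hidden units and words, as the abstract describes.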

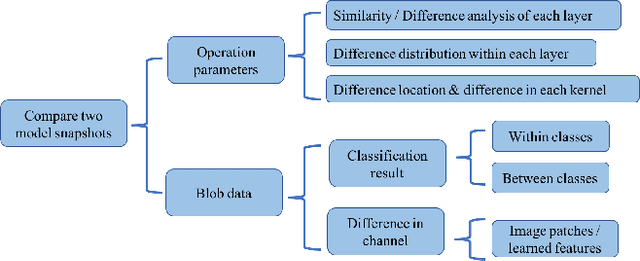

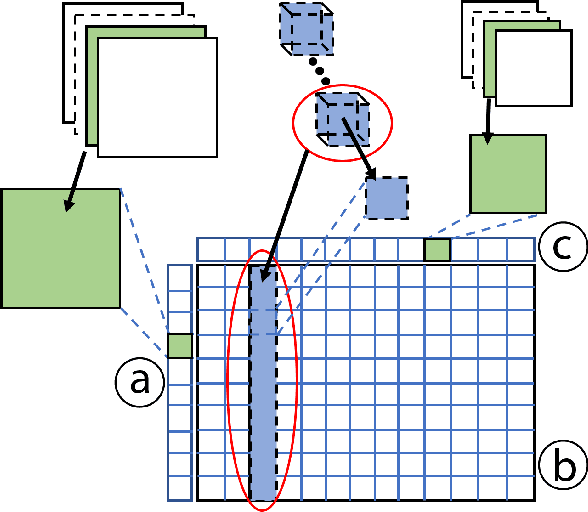

CNNComparator: Comparative Analytics of Convolutional Neural Networks

Oct 15, 2017

Abstract:Convolutional neural networks (CNNs) are widely used in many image recognition tasks due to their extraordinary performance. However, training a good CNN model can still be a challenging task. In a training process, a CNN model typically learns a large number of parameters over time, which usually results in different performance. Often, it is difficult to explore the relationships between the learned parameters and the model performance due to a large number of parameters and different random initializations. In this paper, we present a visual analytics approach to compare two different snapshots of a trained CNN model taken after different numbers of epochs, so as to provide some insight into the design or the training of a better CNN model. Our system compares snapshots by exploring the differences in operation parameters and the corresponding blob data at different levels. A case study has been conducted to demonstrate the effectiveness of our system.
