Xumeng Chen

AIConfigurator: Lightning-Fast Configuration Optimization for Multi-Framework LLM Serving

Jan 09, 2026

Abstract: Optimizing Large Language Model (LLM) inference in production systems is increasingly difficult due to dynamic workloads, stringent latency/throughput targets, and a rapidly expanding configuration space. This complexity spans not only distributed parallelism strategies (tensor/pipeline/expert) but also framework-specific runtime parameters, such as CUDA graph enablement, the KV-cache memory fraction, and maximum token capacity, all of which strongly affect performance. The diversity of modern inference frameworks (e.g., TRT-LLM, vLLM, SGLang), each employing distinct kernels and execution policies, makes manual tuning both framework-specific and computationally prohibitive. We present AIConfigurator, a unified performance-modeling system that enables rapid, framework-agnostic inference configuration search without requiring GPU-based profiling. AIConfigurator combines (1) a methodology that decomposes inference into analytically modelable primitives (GEMM, attention, communication, and memory operations) while capturing framework-specific scheduling dynamics; (2) a calibrated kernel-level performance database for these primitives across a wide range of hardware platforms and popular open-weights models (GPT-OSS, Qwen, DeepSeek, Llama, Mistral); and (3) an abstraction layer that automatically resolves optimal launch parameters for the target backend and integrates into production-grade orchestration systems. Evaluation on production LLM serving workloads demonstrates that AIConfigurator identifies serving configurations that improve performance by up to 40% for dense models (e.g., Qwen3-32B) and 50% for MoE architectures (e.g., DeepSeek-V3), while completing searches within 30 seconds on average. This enables rapid exploration of vast design spaces, from cluster topology down to engine-specific flags.
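The general idea of searching configurations against a table of primitive costs, rather than profiling on GPUs, can be illustrated with a toy sketch. It is not AIConfigurator's implementation or API: the table `PRIMITIVE_MS`, the functions `step_latency_ms` and `search`, and every number below are hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical per-primitive latencies in milliseconds, keyed by
# (primitive, tensor-parallel degree). The values are invented; a real
# database would hold calibrated kernel measurements.
PRIMITIVE_MS = {
    ("gemm", 1): 8.0, ("gemm", 2): 4.2, ("gemm", 4): 2.3,
    ("attention", 1): 3.0, ("attention", 2): 1.6, ("attention", 4): 0.9,
    ("communication", 1): 0.0, ("communication", 2): 0.7, ("communication", 4): 1.5,
}
PRIMITIVES = ("gemm", "attention", "communication")


def step_latency_ms(tp, cuda_graphs):
    """Estimate one decode step as the sum of primitive costs plus launch overhead."""
    compute = sum(PRIMITIVE_MS[(name, tp)] for name in PRIMITIVES)
    launch_overhead = 0.2 if cuda_graphs else 1.0  # assumed overheads, not measured
    return compute + launch_overhead


def search(num_gpus, batch_size, max_latency_ms):
    """Return the highest-throughput configuration that meets the latency target."""
    best = None
    for tp, cuda_graphs in product((1, 2, 4), (False, True)):
        if num_gpus % tp != 0:
            continue  # tensor-parallel degree must divide the GPU count
        latency = step_latency_ms(tp, cuda_graphs)
        if latency > max_latency_ms:
            continue  # violates the latency target
        replicas = num_gpus // tp
        tokens_per_s = replicas * batch_size * 1000.0 / latency
        if best is None or tokens_per_s > best["tokens_per_s"]:
            best = {"tp": tp, "cuda_graphs": cuda_graphs,
                    "latency_ms": latency, "tokens_per_s": tokens_per_s}
    return best


if __name__ == "__main__":
    print(search(num_gpus=4, batch_size=8, max_latency_ms=7.0))
```

Because each candidate is scored by table lookups and arithmetic, the whole grid is evaluated without running a model, which is the property the abstract attributes to database-driven search.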

SirenLess: reveal the intention behind news

Jan 08, 2020

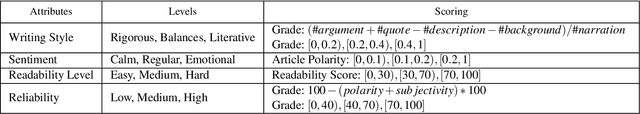

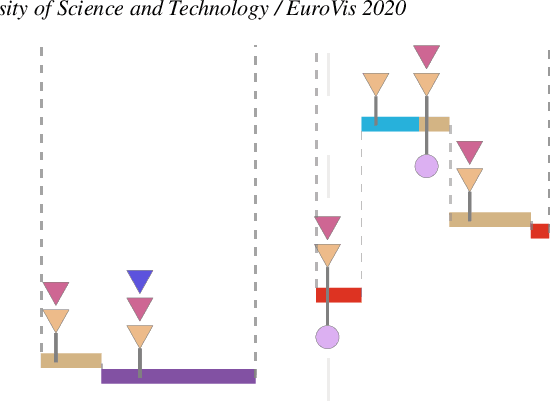

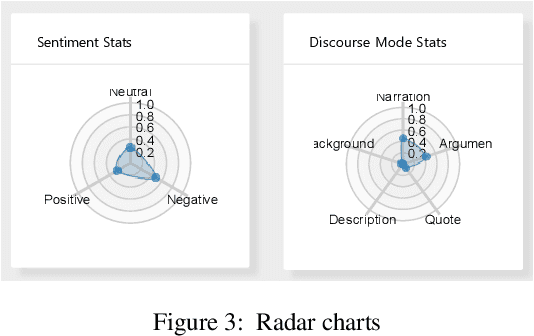

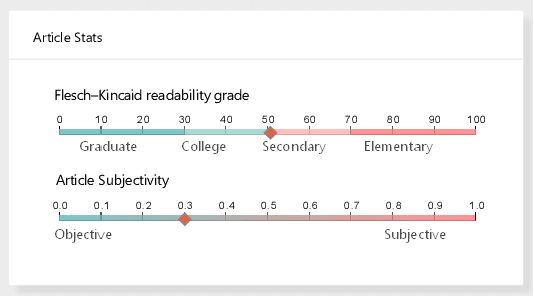

Abstract: News articles tend to be increasingly misleading nowadays, preventing readers from making subjective judgments towards certain events. While some machine learning approaches have been proposed to detect misleading news, most of them are black boxes that provide limited help for humans in decision making. In this paper, we present SirenLess, a visual analytical system for misleading news detection based on linguistic features. The system features article explorer, a novel interactive tool that integrates news metadata and linguistic features to reveal the semantic structures of news articles and facilitate textual analysis. We use SirenLess to analyze 18 news articles from different sources and summarize some helpful patterns for misleading news detection. A user study with journalism professionals and university students was conducted to confirm the usefulness and effectiveness of our system.
