Elena De Momi

Dept. of Electronics, Information, and Bioengineering, Politecnico di Milano, Italy

Autonomous Intraluminal Navigation of a Soft Robot using Deep-Learning-based Visual Servoing

Jul 01, 2022

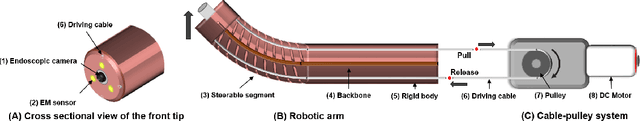

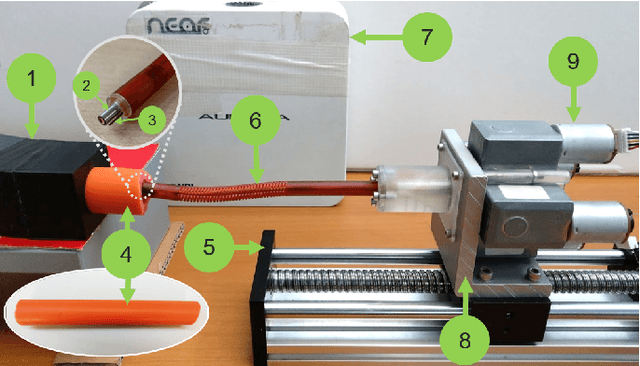

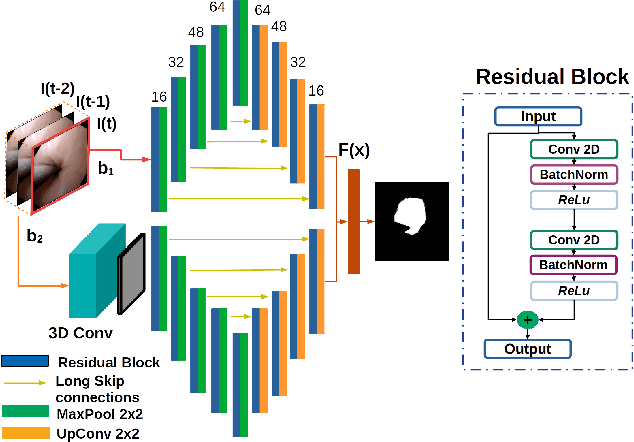

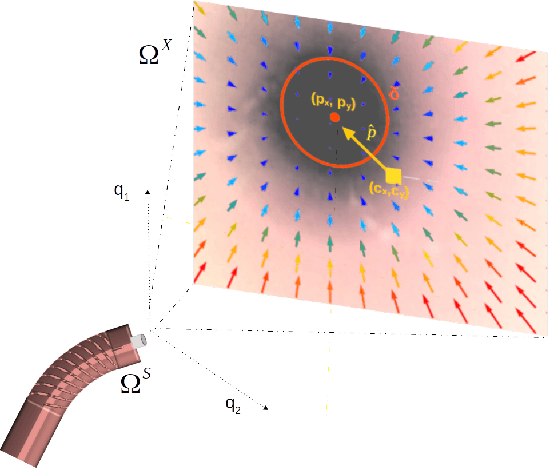

Abstract: Navigation inside luminal organs is an arduous task that requires non-intuitive coordination between the movement of the operator's hand and the information obtained from the endoscopic video. The development of tools to automate certain tasks could alleviate the physical and mental load of doctors during interventions, allowing them to focus on diagnosis and decision-making tasks. In this paper, we present a synergic solution for intraluminal navigation consisting of a 3D printed endoscopic soft robot that can move safely inside luminal structures. Visual servoing based on Convolutional Neural Networks (CNNs) is used to achieve the autonomous navigation task. The CNN is trained with phantoms and in-vivo data to segment the lumen, and a model-less approach is presented to control the movement in constrained environments. The proposed robot is validated in anatomical phantoms in different path configurations. We analyze the movement of the robot using different metrics such as task completion time, smoothness, steady-state error, and mean and maximum error. We show that our method is suitable for navigating safely in hollow environments and in conditions that differ from the ones the network was originally trained on.
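As a rough illustration of the idea in the abstract above, the sketch below turns a binary lumen segmentation mask into a steering command by driving the lumen centroid toward the image centre. The abstract does not specify the control law, so the proportional rule, the function names `lumen_centroid` and `steering_command`, and the `gain` parameter are all assumptions made for illustration, not the authors' method.

```python
def lumen_centroid(mask):
    """Return the (x, y) centroid of non-zero pixels, or None if the mask is empty."""
    total = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                total += 1
                sum_x += x
                sum_y += y
    if total == 0:
        return None
    return sum_x / total, sum_y / total


def steering_command(mask, gain=0.5):
    """Hypothetical proportional law: steer toward the lumen centroid.

    The error is the centroid offset from the image centre, normalised by
    half the image size, so each component lies roughly in [-1, 1].
    """
    height, width = len(mask), len(mask[0])
    centroid = lumen_centroid(mask)
    if centroid is None:
        # No lumen detected: command no motion (an assumed fallback).
        return 0.0, 0.0
    cx, cy = centroid
    error_x = (cx - (width - 1) / 2) / (width / 2)
    error_y = (cy - (height - 1) / 2) / (height / 2)
    return gain * error_x, gain * error_y
```

Because the command depends only on the image-space error, no kinematic model of the robot is needed, which is one plausible reading of "model-less" here.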

FetReg2021: A Challenge on Placental Vessel Segmentation and Registration in Fetoscopy

Jun 30, 2022

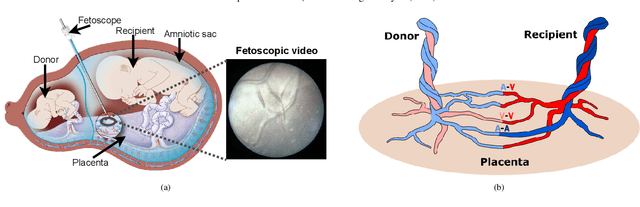

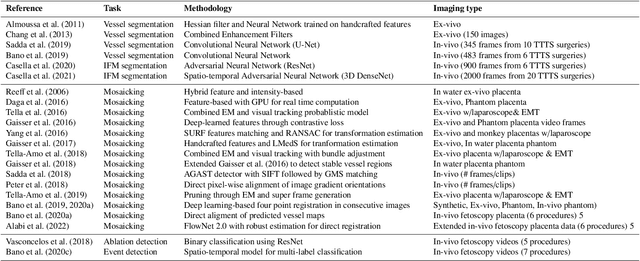

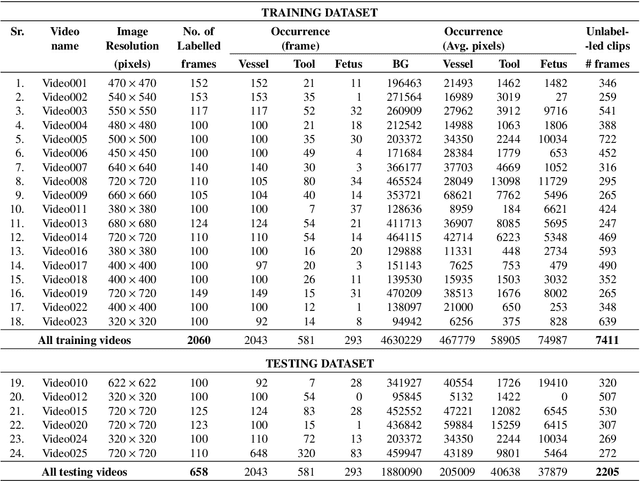

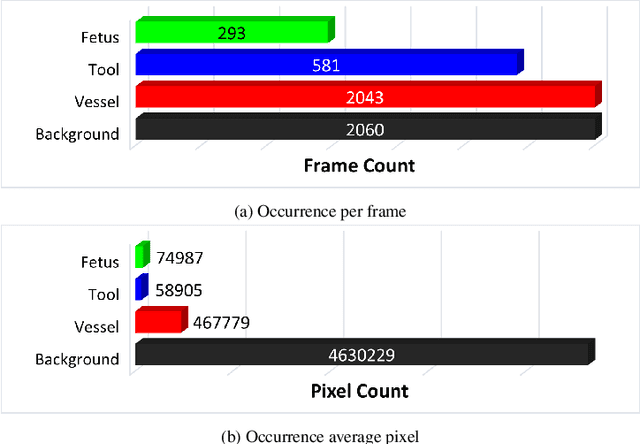

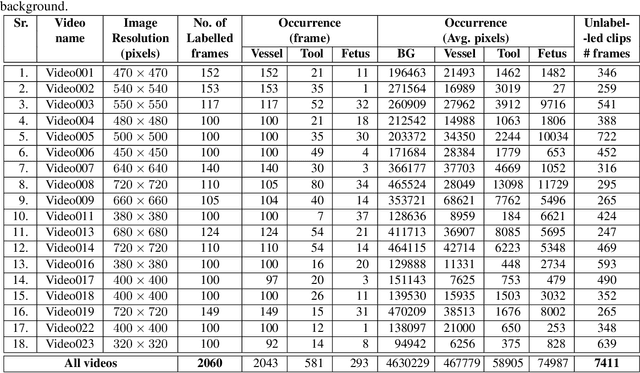

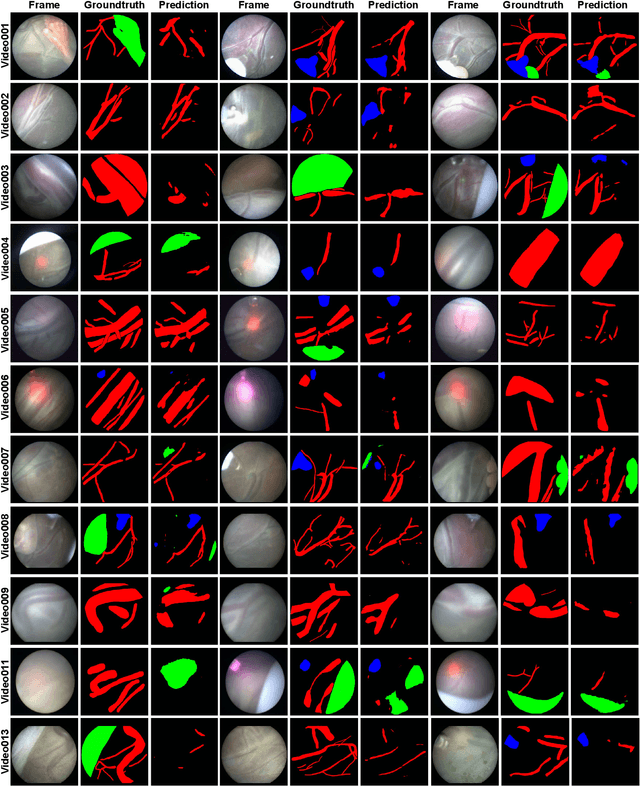

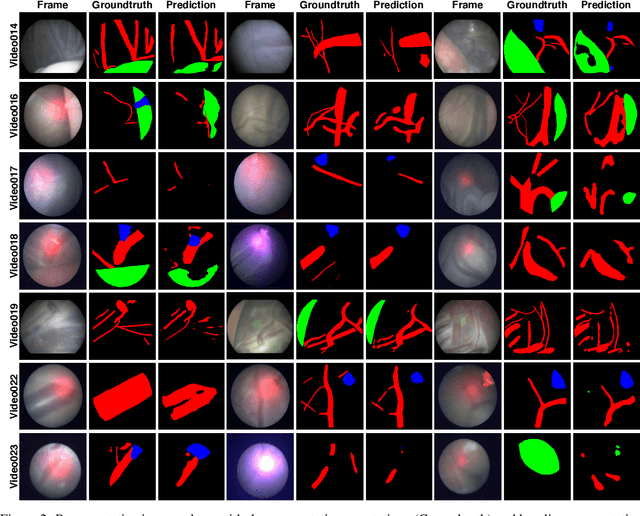

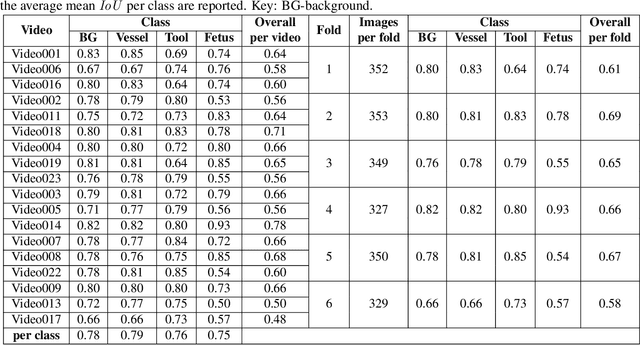

Abstract: Fetoscopy laser photocoagulation is a widely adopted procedure for treating Twin-to-Twin Transfusion Syndrome (TTTS). The procedure involves photocoagulating pathological anastomoses to regulate blood exchange among twins. The procedure is particularly challenging due to the limited field of view, poor manoeuvrability of the fetoscope, poor visibility, and variability in illumination. These challenges may lead to increased surgery time and incomplete ablation. Computer-assisted intervention (CAI) can provide surgeons with decision support and context awareness by identifying key structures in the scene and expanding the fetoscopic field of view through video mosaicking. Research in this domain has been hampered by the lack of high-quality data to design, develop and test CAI algorithms. Through the Fetoscopic Placental Vessel Segmentation and Registration (FetReg2021) challenge, which was organized as part of the MICCAI2021 Endoscopic Vision challenge, we released the first large-scale multicentre TTTS dataset for the development of generalized and robust semantic segmentation and video mosaicking algorithms. For this challenge, we released a dataset of 2060 images, pixel-annotated for vessels, tool, fetus and background classes, from 18 in-vivo TTTS fetoscopy procedures and 18 short video clips. Seven teams participated in this challenge and their model performance was assessed on an unseen test dataset of 658 pixel-annotated images from 6 fetoscopic procedures and 6 short clips. The challenge provided an opportunity for creating generalized solutions for fetoscopic scene understanding and mosaicking. In this paper, we present the findings of the FetReg2021 challenge alongside a detailed literature review for CAI in TTTS fetoscopy. Through this challenge, its analysis and the release of multi-centre fetoscopic data, we provide a benchmark for future research in this field.

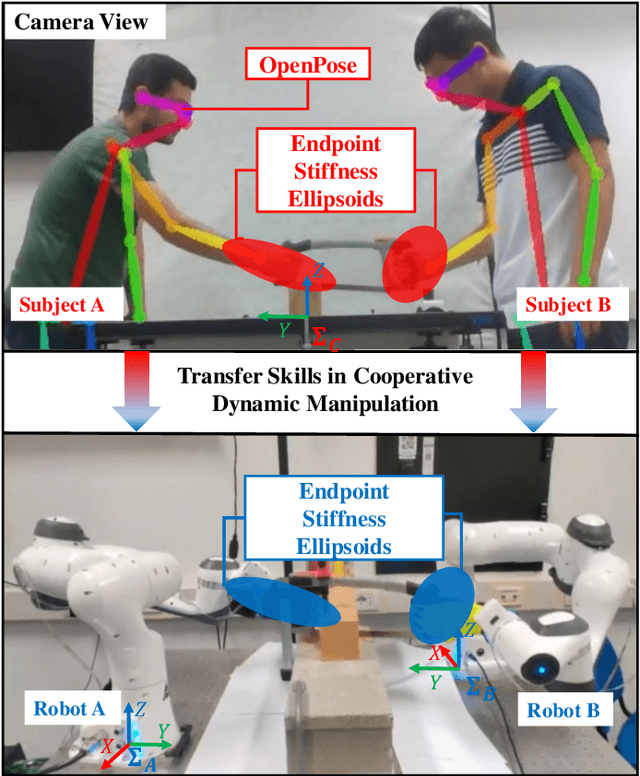

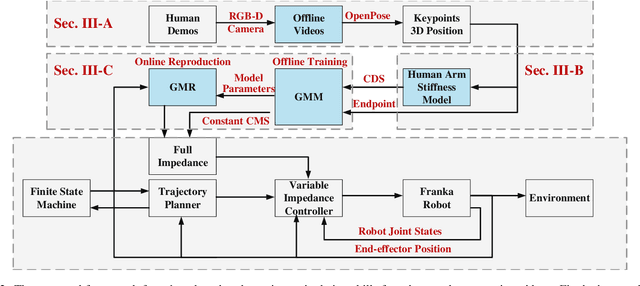

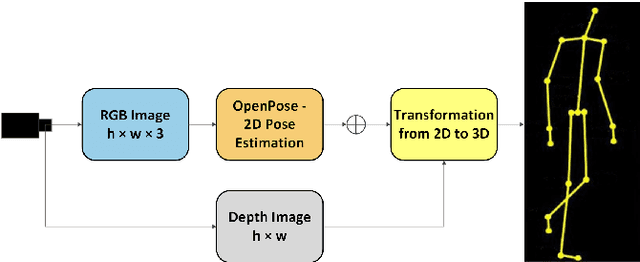

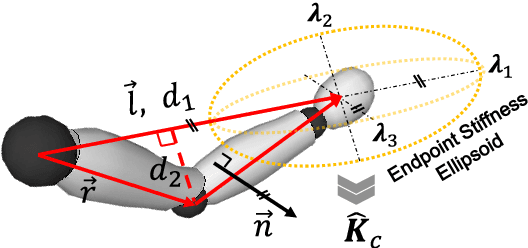

Learning Cooperative Dynamic Manipulation Skills from Human Demonstration Videos

Apr 08, 2022

Abstract: This article proposes a method for learning and robotic replication of dynamic collaborative tasks from offline videos. The objective is to extend the concept of learning from demonstration (LfD) to dynamic scenarios, benefiting from widely available or easily producible offline videos. To achieve this goal, we decode important dynamic information, such as the Configuration Dependent Stiffness (CDS), which reveals the contribution of arm pose to the arm endpoint stiffness, from a three-dimensional human skeleton model. Next, through encoding of the CDS via Gaussian Mixture Model (GMM) and decoding via Gaussian Mixture Regression (GMR), the robot's Cartesian impedance profile is estimated and replicated. We demonstrate the proposed method in a collaborative sawing task with leader-follower structure, considering environmental constraints and dynamic uncertainties. The experimental setup includes two Panda robots, which replicate the leader-follower roles and the impedance profiles extracted from a two-person sawing video.
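The GMM/GMR decoding step mentioned in the abstract above can be sketched for the simplest case of one input and one output variable. The example assumes the mixture has already been fitted; the dictionary keys (`weight`, `mean_x`, `mean_y`, `var_x`, `cov_xy`) and the choice of a scalar input (for instance time or task phase) are illustrative assumptions, not details taken from the paper.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a univariate Gaussian evaluated at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def gmr(x, components):
    """Gaussian Mixture Regression: expected output y given input x.

    Each component is a dict describing a joint 2-D Gaussian over (x, y).
    The result is the responsibility-weighted sum of the per-component
    conditional means E[y | x, k].
    """
    weighted = []
    for c in components:
        # Unnormalised responsibility of component k for this input.
        h = c["weight"] * gaussian_pdf(x, c["mean_x"], c["var_x"])
        # Conditional mean of y given x under component k.
        cond_mean = c["mean_y"] + c["cov_xy"] / c["var_x"] * (x - c["mean_x"])
        weighted.append((h, cond_mean))
    total = sum(h for h, _ in weighted)
    return sum(h * m for h, m in weighted) / total
```

Evaluating `gmr` over a sequence of inputs yields a smooth profile, which is the role GMR plays when a stiffness or impedance profile is regenerated from demonstrations. Inputs far from every component can make `total` underflow to zero, so a practical version would guard that division.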

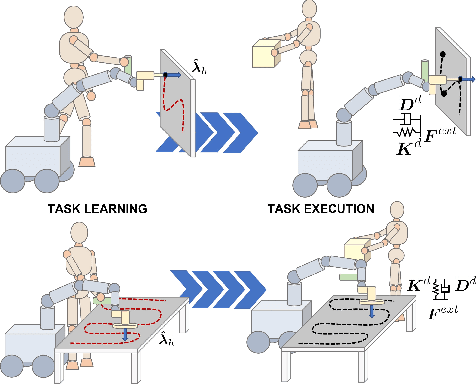

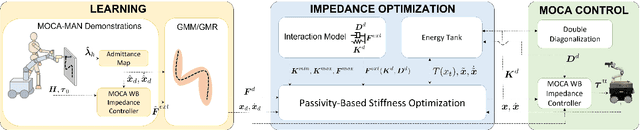

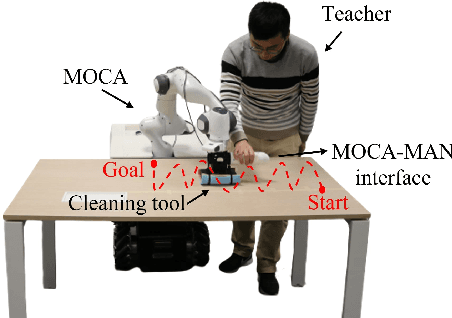

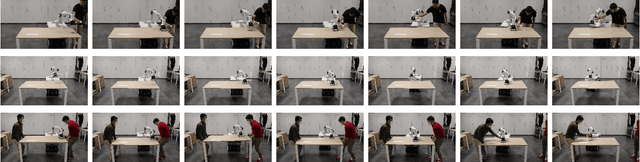

A Hybrid Learning and Optimization Framework to Achieve Physically Interactive Tasks with Mobile Manipulators

Mar 28, 2022

Abstract: This paper proposes a hybrid learning and optimization framework for mobile manipulators for complex and physically interactive tasks. The framework exploits the MOCA-MAN interface to obtain intuitive and simplified human demonstrations and Gaussian Mixture Model/Gaussian Mixture Regression to encode and generate the learned task requirements in terms of position, velocity, and force profiles. Next, using the desired trajectories and force profiles generated by GMM/GMR, the impedance parameters of a Cartesian impedance controller are optimized online through a Quadratic Program augmented with an energy tank to ensure the passivity of the controlled system. Two experiments are conducted to validate the framework, comparing our method with two approaches with constant stiffness (high and low). The results showed that the proposed method outperforms the other two cases in terms of trajectory tracking and generated interaction forces, even in the presence of disturbances such as unexpected end-effector collisions.

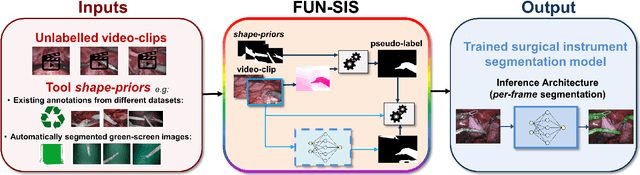

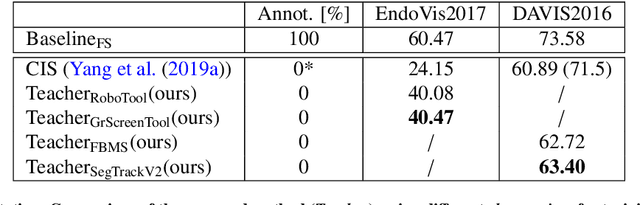

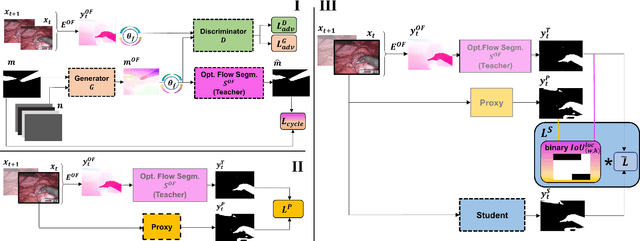

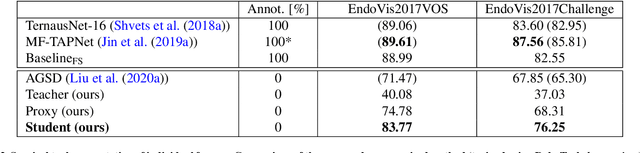

FUN-SIS: a Fully UNsupervised approach for Surgical Instrument Segmentation

Feb 16, 2022

Abstract: Automatic surgical instrument segmentation of endoscopic images is a crucial building block of many computer-assistance applications for minimally invasive surgery. So far, state-of-the-art approaches completely rely on the availability of a ground-truth supervision signal, obtained via manual annotation, which is expensive to collect at large scale. In this paper, we present FUN-SIS, a Fully-UNsupervised approach for binary Surgical Instrument Segmentation. FUN-SIS trains a per-frame segmentation model on completely unlabelled endoscopic videos, by solely relying on implicit motion information and instrument shape-priors. We define shape-priors as realistic segmentation masks of the instruments, not necessarily coming from the same dataset/domain as the videos. The shape-priors can be collected in various and convenient ways, such as recycling existing annotations from other datasets. We leverage them as part of a novel generative-adversarial approach, allowing us to perform unsupervised instrument segmentation of optical-flow images during training. We then use the obtained instrument masks as pseudo-labels in order to train a per-frame segmentation model; to this end, we develop a learning-from-noisy-labels architecture, designed to extract a clean supervision signal from these pseudo-labels, leveraging their peculiar noise properties. We validate the proposed contributions on three surgical datasets, including the MICCAI 2017 EndoVis Robotic Instrument Segmentation Challenge dataset. The obtained fully-unsupervised results for surgical instrument segmentation are almost on par with the ones of fully-supervised state-of-the-art approaches. This suggests the tremendous potential of the proposed method to leverage the great amount of unlabelled data produced in the context of minimally invasive surgery.

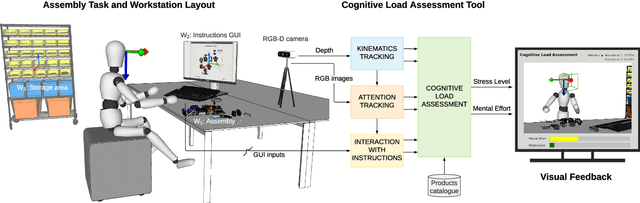

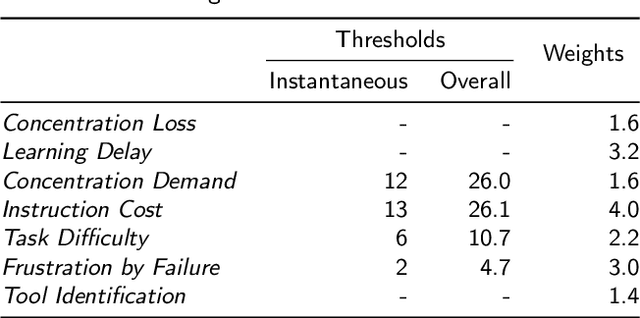

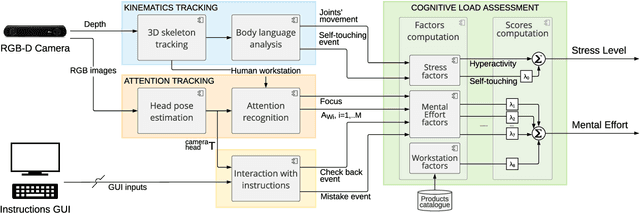

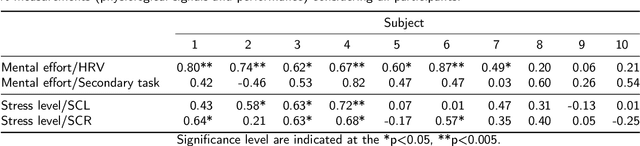

An Online Framework for Cognitive Load Assessment in Assembly Tasks

Sep 08, 2021

Abstract: The ongoing trend towards Industry 4.0 has revolutionised ordinary workplaces, profoundly changing the role played by humans in the production chain. Research on ergonomics in industrial settings mainly focuses on reducing the operator's physical fatigue and discomfort to improve throughput and avoid safety hazards. However, as the production complexity increases, the demand on cognitive resources and the mental workload could compromise the operator's performance and the efficiency of the shop floor workplace. State-of-the-art methods in cognitive science work offline and/or involve bulky equipment that is hard to deploy in industrial settings. This paper presents a novel method for online assessment of cognitive load in manufacturing, primarily assembly, by detecting patterns in human motion directly from the input images of a stereo camera. Head pose estimation and skeleton tracking are exploited to investigate the workers' attention and assess hyperactivity and unforeseen movements. Pilot experiments suggest that our factor assessment tool provides significant insights into workers' mental workload, further confirmed by correlations with physiological and performance measurements. According to data gathered in this study, a vision-based cognitive load assessment has the potential to be integrated into the development of mechatronic systems for improving cognitive ergonomics in manufacturing.

Position-based Dynamics Simulator of Brain Deformations for Path Planning and Intra-Operative Control in Keyhole Neurosurgery

Jun 18, 2021

Abstract: Many tasks in robot-assisted surgery require planning and controlling manipulators' motions that interact with highly deformable objects. This study proposes a realistic, time-bounded simulator based on Position-based Dynamics (PBD) simulation that mimics brain deformations due to catheter insertion for pre-operative path planning and intra-operative guidance in keyhole surgical procedures. It maximizes the probability of success by accounting for uncertainty in deformation models, noisy sensing, and unpredictable actuation. The PBD deformation parameters were initialized on a parallelepiped-shaped simulated phantom to obtain a reasonable starting guess for the brain white matter. They were calibrated by comparing the obtained displacements with deformation data for catheter insertion in a composite hydrogel phantom. Knowing the gray matter brain structures' different behaviors, the parameters were fine-tuned to obtain a generalized human brain model. The brain structures' average displacement was compared with values in the literature. The simulator's numerical model uses a novel approach with respect to the literature, and it has proved to be a close match with real brain deformations through validation using recorded deformation data of in-vivo animal trials with a mean mismatch of $4.73 \pm 2.15\%$. The stability, accuracy, and real-time performance make this model suitable for creating a dynamic environment for KN path planning, pre-operative path planning, and intra-operative guidance.
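For readers unfamiliar with Position-based Dynamics, the sketch below shows the textbook distance-constraint projection between two particles, the basic building block of most PBD solvers. The abstract above does not describe which constraints the simulator uses, so this is a generic illustration under that assumption; the function name and the `stiffness` parameter are hypothetical.

```python
import math


def project_distance_constraint(p1, p2, rest_length, w1=1.0, w2=1.0, stiffness=1.0):
    """Move two particles toward satisfying |p1 - p2| = rest_length.

    w1 and w2 are inverse masses (0 pins a particle in place), and
    stiffness in [0, 1] scales how much of the violation is corrected.
    Returns the corrected positions as new lists.
    """
    delta = [a - b for a, b in zip(p1, p2)]
    dist = math.sqrt(sum(d * d for d in delta))
    if dist == 0.0 or w1 + w2 == 0.0:
        # Degenerate direction or both particles pinned: leave unchanged.
        return list(p1), list(p2)
    violation = dist - rest_length           # constraint value C
    normal = [d / dist for d in delta]       # gradient of C w.r.t. p1
    scale = stiffness * violation / (w1 + w2)
    new_p1 = [a - w1 * scale * n for a, n in zip(p1, normal)]
    new_p2 = [b + w2 * scale * n for b, n in zip(p2, normal)]
    return new_p1, new_p2
```

A full solver would iterate such projections over all constraints several times per time step; deformation parameters like the ones calibrated in the abstract would enter through terms such as `stiffness`.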

FetReg: Placental Vessel Segmentation and Registration in Fetoscopy Challenge Dataset

Jun 16, 2021

Abstract: Fetoscopy laser photocoagulation is a widely used procedure for the treatment of Twin-to-Twin Transfusion Syndrome (TTTS), which occurs in mono-chorionic multiple pregnancies due to placental vascular anastomoses. This procedure is particularly challenging due to limited field of view, poor manoeuvrability of the fetoscope, poor visibility due to fluid turbidity, variability in light source, and unusual position of the placenta. This may lead to increased procedural time and incomplete ablation, resulting in persistent TTTS. Computer-assisted intervention may help overcome these challenges by expanding the fetoscopic field of view through video mosaicking and providing better visualization of the vessel network. However, the research and development in this domain remain limited due to the unavailability of high-quality data to encode the intra- and inter-procedure variability. Through the Fetoscopic Placental Vessel Segmentation and Registration (FetReg) challenge, we present a large-scale multi-centre dataset for the development of generalized and robust semantic segmentation and video mosaicking algorithms for the fetal environment with a focus on creating drift-free mosaics from long duration fetoscopy videos. In this paper, we provide an overview of the FetReg dataset, challenge tasks, evaluation metrics and baseline methods for both segmentation and registration. Baseline results on the FetReg dataset show that our dataset poses interesting challenges, offering a large opportunity for the creation of novel methods and models through a community effort initiative guided by the FetReg challenge.

An Integrated Dynamic Method for Allocating Roles and Planning Tasks for Mixed Human-Robot Teams

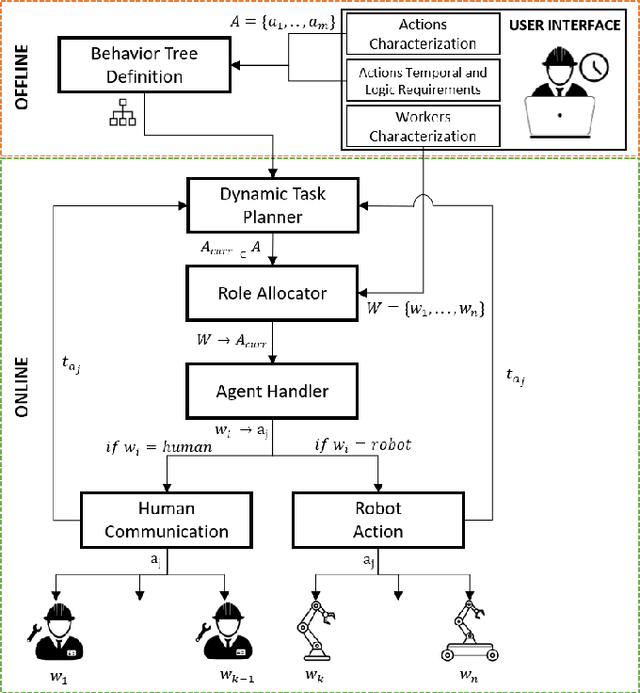

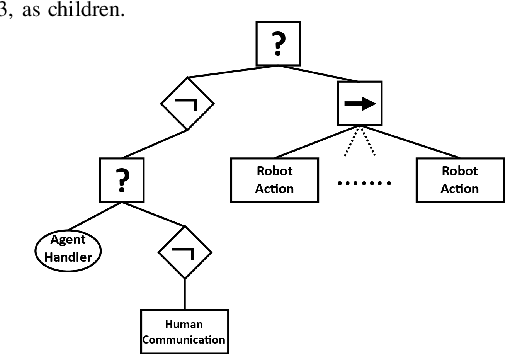

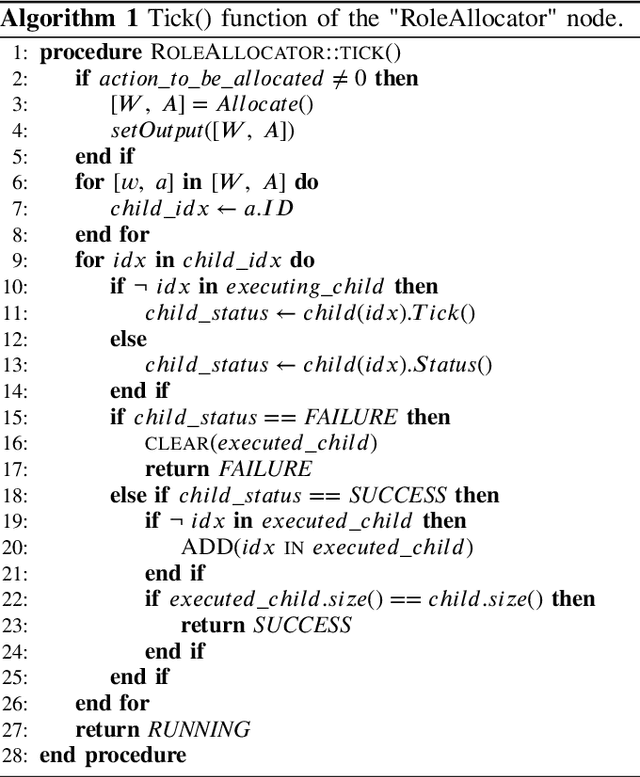

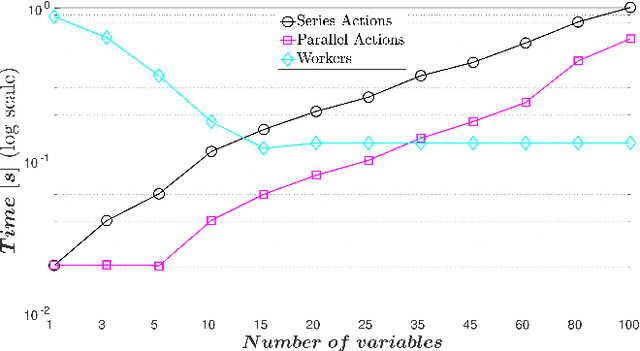

May 25, 2021

Abstract: This paper proposes a novel integrated dynamic method based on Behavior Trees for planning and allocating tasks in mixed human-robot teams, suitable for manufacturing environments. The Behavior Tree formulation allows encoding a single job as a compound of different tasks with temporal and logic constraints. In this way, instead of the well-studied offline centralized optimization problem, the role allocation problem is solved with multiple simplified online optimization sub-problems, without complex and cross-schedule task dependencies. These sub-problems are defined as Mixed-Integer Linear Programs, which, according to the worker-action related costs and the workers' availability, allocate the yet-to-execute tasks among the available workers. To characterize the behavior of the developed method, we opted to perform different simulation experiments in which the results of the action-worker allocation and computational complexity are evaluated. The obtained results, due to the nature of the algorithm and to the possibility of simulating the agents' behavior, should also describe well how the algorithm performs in real experiments.
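To make the allocation sub-problem in the abstract above concrete, the sketch below assigns each pending task to a distinct available worker at minimum total cost. It deliberately uses exhaustive enumeration instead of a Mixed-Integer Linear Program solver, which is only workable for very small instances; the one-task-per-worker restriction, the `allocate` function, and the cost table layout are assumptions for illustration rather than the paper's formulation.

```python
from itertools import permutations


def allocate(tasks, costs, available):
    """Assign each task to a distinct available worker at minimum total cost.

    costs[worker][task] is the cost of that worker performing that task;
    a missing entry means the worker cannot perform the task.
    Returns (plan, total_cost), or (None, None) if no feasible plan exists.
    """
    workers = sorted(available)  # sorted for deterministic tie-breaking
    best_plan, best_cost = None, None
    for chosen in permutations(workers, len(tasks)):
        # Skip assignments that give a task to a worker who cannot do it.
        if any(task not in costs[worker] for worker, task in zip(chosen, tasks)):
            continue
        total = sum(costs[worker][task] for worker, task in zip(chosen, tasks))
        if best_cost is None or total < best_cost:
            best_plan = dict(zip(tasks, chosen))
            best_cost = total
    return best_plan, best_cost
```

In an online setting this function would be re-run whenever a worker becomes available or a new task becomes executable, which mirrors the idea of solving many small sub-problems instead of one offline schedule.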

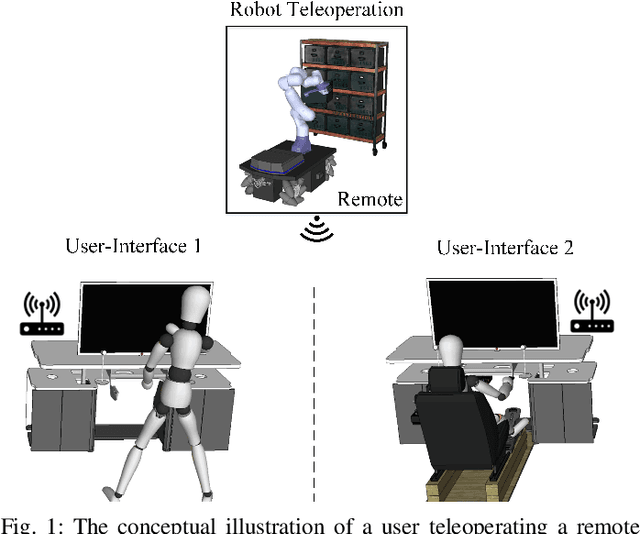

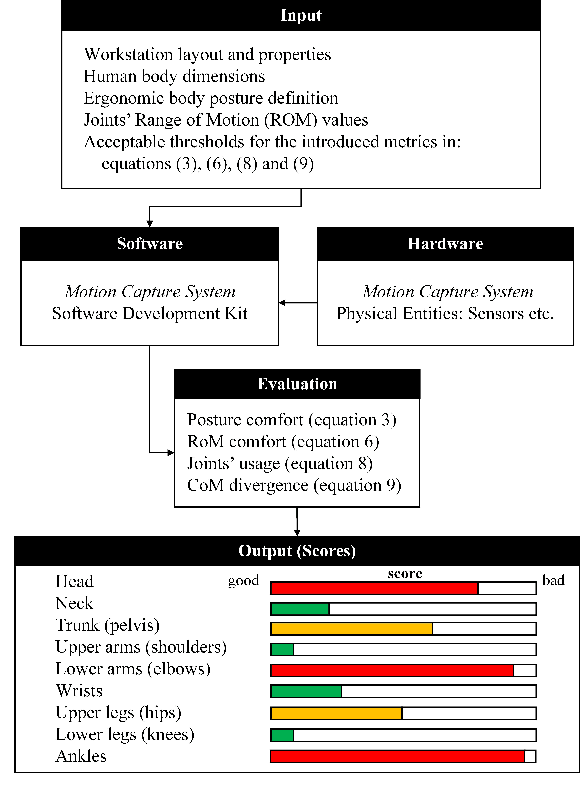

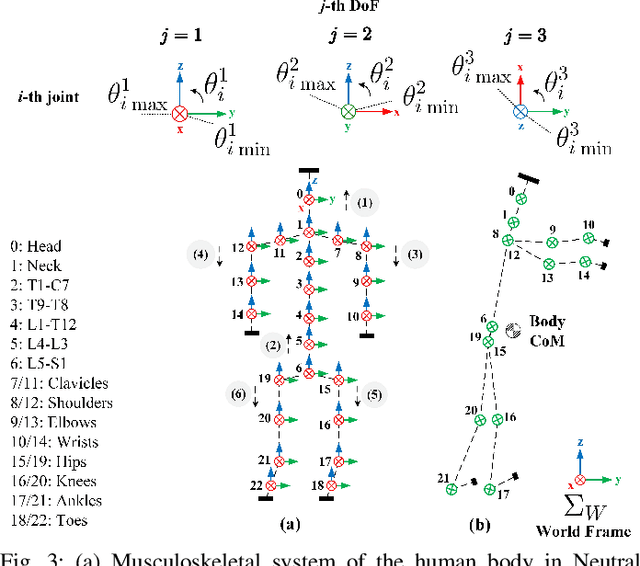

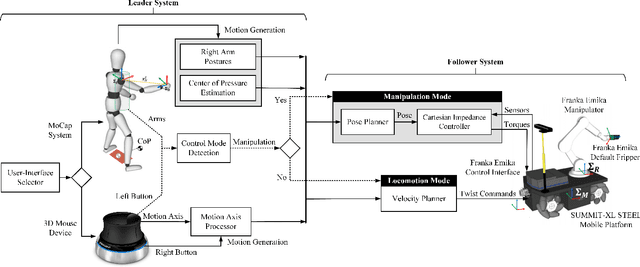

Quantitative Physical Ergonomics Assessment of Teleoperation Interfaces

May 20, 2021

Abstract: Human factors and ergonomics are essential constituents of teleoperation interfaces, which can significantly affect the human operator's performance. Thus, a quantitative evaluation of these elements and the ability to establish reliable comparison bases for different teleoperation interfaces are key to selecting the most suitable one for a particular application. However, most of the works on teleoperation have so far focused on the stability analysis and the transparency improvement of these systems, and do not cover the important usability aspects. In this work, we propose a foundation to build a general framework for the analysis of human factors and ergonomics in employing diverse teleoperation interfaces. The proposed framework will go beyond the traditional subjective analyses of usability by complementing them with online measurements of the human body configurations. As a result, multiple quantitative metrics such as joints' usage, range of motion comfort, center of mass divergence, and posture comfort are introduced. To demonstrate the potential of the proposed framework, two different teleoperation interfaces are considered, and real-world experiments with eleven participants performing a simulated industrial remote pick-and-place task are conducted. The quantitative results of this analysis are provided, and compared with subjective questionnaires, illustrating the effectiveness of the proposed framework.
