Elena De Momi

Dept. of Electronics, Information, and Bioengineering, Politecnico di Milano, Italy

RealSynCol: a high-fidelity synthetic colon dataset for 3D reconstruction applications

Feb 09, 2026

Abstract: Deep learning has the potential to improve colonoscopy by enabling 3D reconstruction of the colon, providing a comprehensive view of mucosal surfaces and lesions, and facilitating the identification of unexplored areas. However, the development of robust methods is limited by the scarcity of large-scale ground truth data. We propose RealSynCol, a highly realistic synthetic dataset designed to replicate the endoscopic environment. Colon geometries extracted from 10 CT scans were imported into a virtual environment that closely mimics intraoperative conditions and rendered with realistic vascular textures. The resulting dataset comprises 28,130 frames, paired with ground truth depth maps, optical flow, 3D meshes, and camera trajectories. A benchmark study was conducted to evaluate the available synthetic colon datasets for the tasks of depth and pose estimation. Results demonstrate that the high realism and variability of RealSynCol significantly enhance generalization performance on clinical images, proving it to be a powerful tool for developing deep learning algorithms to support endoscopic diagnosis.

UnReflectAnything: RGB-Only Highlight Removal by Rendering Synthetic Specular Supervision

Dec 11, 2025

Abstract: Specular highlights distort appearance, obscure texture, and hinder geometric reasoning in both natural and surgical imagery. We present UnReflectAnything, an RGB-only framework that removes highlights from a single image by predicting a highlight map together with a reflection-free diffuse reconstruction. The model uses a frozen vision transformer encoder to extract multi-scale features, a lightweight head to localize specular regions, and a token-level inpainting module that restores corrupted feature patches before producing the final diffuse image. To overcome the lack of paired supervision, we introduce a Virtual Highlight Synthesis pipeline that renders physically plausible specularities using monocular geometry, Fresnel-aware shading, and randomized lighting, which enables training on arbitrary RGB images with correct geometric structure. UnReflectAnything generalizes across natural and surgical domains, where non-Lambertian surfaces and non-uniform lighting create severe highlights, and it achieves performance competitive with state-of-the-art results on several benchmarks. Project Page: https://alberto-rota.github.io/UnReflectAnything/

Self-Supervised Contrastive Embedding Adaptation for Endoscopic Image Matching

Dec 11, 2025

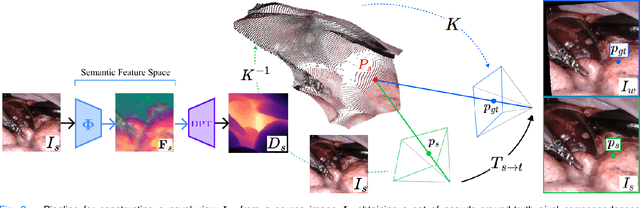

Abstract: Accurate spatial understanding is essential for image-guided surgery, augmented reality integration and context awareness. In minimally invasive procedures, where visual input is the sole intraoperative modality, establishing precise pixel-level correspondences between endoscopic frames is critical for 3D reconstruction, camera tracking, and scene interpretation. However, the surgical domain presents distinct challenges: weak perspective cues, non-Lambertian tissue reflections, and complex, deformable anatomy degrade the performance of conventional computer vision techniques. While Deep Learning models have shown strong performance in natural scenes, their features are not inherently suited for fine-grained matching in surgical images and require targeted adaptation to meet the demands of this domain. This research presents a novel Deep Learning pipeline for establishing feature correspondences in endoscopic image pairs, alongside a self-supervised optimization framework for model training. The proposed methodology leverages a novel-view synthesis pipeline to generate ground-truth inlier correspondences, which are subsequently used to mine triplets within a contrastive learning paradigm. Through this self-supervised approach, we augment the DINOv2 backbone with an additional Transformer layer, specifically optimized to produce embeddings that facilitate direct matching through cosine similarity thresholding. Experimental evaluation demonstrates that our pipeline surpasses state-of-the-art methodologies on the SCARED datasets, with improved matching precision and lower epipolar error compared to related work. The proposed framework constitutes a valuable contribution toward enabling more accurate high-level computer vision applications in surgical endoscopy.
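The matching step named in the abstract above, direct matching through cosine similarity thresholding, can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the nearest-neighbour selection, and the threshold value of 0.8 are all assumptions made for illustration, and the toy 2D vectors stand in for learned embeddings.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

def match_by_threshold(emb_a, emb_b, threshold=0.8):
    """For each embedding in emb_a, pick the most similar embedding in emb_b
    and keep the pair only if its similarity reaches the threshold.
    Both the nearest-neighbour rule and the threshold are hypothetical choices."""
    matches = []
    for i, u in enumerate(emb_a):
        sims = [cosine_similarity(u, v) for v in emb_b]
        j = max(range(len(sims)), key=sims.__getitem__)
        if sims[j] >= threshold:
            matches.append((i, j, sims[j]))
    return matches

# Toy embeddings standing in for per-patch features of two frames.
emb_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
emb_b = [[0.9, 0.1], [-1.0, 0.0]]
matches = match_by_threshold(emb_a, emb_b, threshold=0.8)
for i, j, s in matches:
    print(f"a[{i}] <-> b[{j}] (cosine similarity {s:.3f})")
```

Raising the threshold trades recall for precision: fewer pairs survive, but the surviving ones are more likely to be true correspondences.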

Physiological Measures of the Mental Workload in Users of a Lower Limb Exosuit: A Comparison of Subjective and Objective Metrics

Nov 14, 2025

Abstract: Lower-limb exosuits are particularly relevant for individuals with some degree of mobility impairment, such as post-stroke patients or older adults with reduced movement capabilities. This study aims to investigate the mental workload (MWL) assessment of XoSoft, a lower-limb soft exoskeleton, using and comparing subjective and objective physiological metrics. The NASA-TLX questionnaire, the average percentage change in pupil size (APCPS), and the Baevsky stress index (SI) are compared. The experiments were conducted on 18 healthy subjects while walking and involved mathematical tasks to create a dual-task condition. The results show a complex interaction between task difficulty, exoskeleton activation, and pupillary dynamics, suggesting that the subject might reach a saturated condition under a high mental load. In addition, the data indicate that pupil diameter may be an objective mental workload indicator that correlates with subjective NASA-TLX questionnaires. The discordant indications from the stress index suggest that ocular and cardiac metrics respond differently to various stimuli and dynamics. The study also revealed ocular asymmetry, with the right eye more sensitive to cognitive load.

Impact of a Lower Limb Exosuit Anchor Points on Energetics and Biomechanics

Jul 31, 2025

Abstract: Anchor point placement is a crucial yet often overlooked aspect of exosuit design since it determines how forces interact with the human body. This work analyzes the impact of different anchor point positions on gait kinematics, muscular activation and energetic consumption. A total of six experiments were conducted with 11 subjects wearing the XoSoft exosuit, which assists hip flexion in five configurations. Subjects were instrumented with an IMU-based motion tracking system, EMG sensors, and a mask to measure metabolic consumption. The results show that positioning the knee anchor point on the posterior side while keeping the hip anchor on the anterior part can reduce muscle activation in the hip flexors by up to 10.21% and metabolic expenditure by up to 18.45%. Even though the hip was the only assisted joint, all the configurations also introduced changes in the knee and ankle kinematics. Overall, no single configuration was optimal across all subjects, suggesting that a personalized approach is necessary to transmit the assistance forces optimally. These findings emphasize that anchor point position does indeed have a significant impact on exoskeleton effectiveness and efficiency. However, these optimal positions are subject-specific to the exosuit design, and there is a strong need for future work to tailor musculoskeletal models to individual characteristics and validate these results in clinical populations.

* 12 pages, 10 figures

Implementation and Assessment of an Augmented Training Curriculum for Surgical Robotics

Jul 10, 2025

Abstract: The integration of high-level assistance algorithms in surgical robotics training curricula may be beneficial in establishing a more comprehensive and robust skillset for aspiring surgeons, improving their clinical performance as a consequence. This work presents the development and validation of a haptic-enhanced Virtual Reality simulator for surgical robotics training, featuring 8 surgical tasks that the trainee can interact with thanks to the embedded physics engine. This virtual simulated environment is augmented by the introduction of high-level haptic interfaces for robotic assistance that aim at re-directing the motion of the trainee's hands and wrists toward targets or away from obstacles, and providing a quantitative performance score after the execution of each training exercise. An experimental study shows that the introduction of enhanced robotic assistance into a surgical robotics training curriculum improves performance during the training process and, crucially, promotes the transfer of the acquired skills to an unassisted surgical scenario, like the clinical one.

Intelligent Framework for Human-Robot Collaboration: Safety, Dynamic Ergonomics, and Adaptive Decision-Making

Mar 10, 2025

Abstract: The integration of collaborative robots into industrial environments has improved productivity, but has also highlighted significant challenges related to operator safety and ergonomics. This paper proposes an innovative framework that integrates advanced visual perception technologies, real-time ergonomic monitoring, and Behaviour Tree (BT)-based adaptive decision-making. Unlike traditional methods, which often operate in isolation or statically, our approach combines deep learning models (YOLO11 and SlowOnly), advanced tracking (Unscented Kalman Filter) and dynamic ergonomic assessments (OWAS), offering a modular, scalable and adaptive system. Experimental results show that the framework outperforms previous methods in several aspects: accuracy in detecting postures and actions, adaptivity in managing human-robot interactions, and ability to reduce ergonomic risk through timely robotic interventions. In particular, the visual perception module showed superiority over YOLOv9 and YOLOv8, while real-time ergonomic monitoring eliminated the limitations of static analysis. Adaptive role management, made possible by the Behaviour Tree, provided greater responsiveness than rule-based systems, making the framework suitable for complex industrial scenarios. Our system demonstrated a 92.5% accuracy in grasping intention recognition and successfully classified ergonomic risks with real-time responsiveness (average latency of 0.57 seconds), enabling timely robotic

A Reinforcement Learning Approach to Non-prehensile Manipulation through Sliding

Feb 24, 2025

Abstract: Although robotic applications increasingly demand versatile and dynamic object handling, most existing techniques are predominantly focused on grasp-based manipulation, limiting their applicability in non-prehensile tasks. To address this need, this study introduces a Deep Deterministic Policy Gradient (DDPG) reinforcement learning framework for efficient non-prehensile manipulation, specifically for sliding an object on a surface. The algorithm generates a linear trajectory by precisely controlling the acceleration of a robotic arm rigidly coupled to the horizontal surface, enabling the relative manipulation of an object as it slides on top of the surface. Furthermore, two distinct algorithms have been developed to estimate the frictional forces dynamically during the sliding process. These algorithms provide online friction estimates after each action, which are fed back into the actor model. This feedback mechanism enhances the policy's adaptability and robustness, ensuring more precise control of the platform's acceleration in response to varying surface conditions. The proposed algorithm is validated through simulations and real-world experiments. Results demonstrate that the proposed framework effectively generalizes sliding manipulation across varying distances and, more importantly, adapts to different surfaces with diverse frictional properties. Notably, the trained model exhibits zero-shot sim-to-real transfer capabilities.

PRO-MIND: Proximity and Reactivity Optimisation of robot Motion to tune safety limits, human stress, and productivity in INDustrial settings

Sep 10, 2024

Abstract: Despite impressive advancements of industrial collaborative robots, their potential remains largely untapped due to the difficulty in balancing human safety and comfort with fast production constraints. To help address this challenge, we present PRO-MIND, a novel human-in-the-loop framework that leverages valuable data about the human co-worker to optimise robot trajectories. By estimating human attention and mental effort, our method dynamically adjusts safety zones and enables on-the-fly alterations of the robot path to enhance human comfort and optimal stopping conditions. Moreover, we formulate a multi-objective optimisation to adapt the robot's trajectory execution time and smoothness based on the current human psycho-physical stress, estimated from heart rate variability and frantic movements. These adaptations exploit the properties of B-spline curves to preserve continuity and smoothness, which are crucial factors in improving motion predictability and comfort. Evaluation in two realistic case studies showcases the framework's ability to restrain the operators' workload and stress and to ensure their safety while enhancing human-robot productivity. Further strengths of PRO-MIND include its adaptability to each individual's specific needs and sensitivity to variations in attention, mental effort, and stress during task execution.

DARES: Depth Anything in Robotic Endoscopic Surgery with Self-supervised Vector-LoRA of the Foundation Model

Aug 30, 2024

Abstract: Robotic-assisted surgery (RAS) relies on accurate depth estimation for 3D reconstruction and visualization. While foundation models like Depth Anything Models (DAM) show promise, directly applying them to surgery often yields suboptimal results. Fully fine-tuning on limited surgical data can cause overfitting and catastrophic forgetting, compromising model robustness and generalization. Although Low-Rank Adaptation (LoRA) addresses some adaptation issues, its uniform parameter distribution neglects the inherent feature hierarchy, where earlier layers, learning more general features, require more parameters than later ones. To tackle this issue, we introduce Depth Anything in Robotic Endoscopic Surgery (DARES), a novel approach that employs a new adaptation technique, Vector Low-Rank Adaptation (Vector-LoRA) on the DAM V2 to perform self-supervised monocular depth estimation in RAS scenes. To enhance learning efficiency, we introduce Vector-LoRA by integrating more parameters in earlier layers and gradually decreasing parameters in later layers. We also design a reprojection loss based on the multi-scale SSIM error to enhance depth perception by better tailoring the foundation model to the specific requirements of the surgical environment. The proposed method is validated on the SCARED dataset and demonstrates superior performance over recent state-of-the-art self-supervised monocular depth estimation techniques, achieving an improvement of 13.3% in the absolute relative error metric. The code and pre-trained weights are available at https://github.com/mobarakol/DARES.
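For readers unfamiliar with the metric cited above, the absolute relative error commonly used in depth estimation is the mean of |predicted depth - ground-truth depth| / ground-truth depth over valid pixels. The following is a minimal sketch of that standard definition, not code from the DARES repository; the function name, the rule of skipping pixels with non-positive ground truth, and the sample values are assumptions made for illustration.

```python
def abs_rel_error(pred, gt):
    """Mean absolute relative depth error over pixels with valid ground truth.
    Pixels whose ground-truth depth is not positive are skipped (an assumed
    masking rule; real evaluation protocols define their own valid range)."""
    valid = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not valid:
        raise ValueError("no pixels with valid ground-truth depth")
    return sum(abs(p - g) / g for p, g in valid) / len(valid)

# Hypothetical flattened depth values (arbitrary units); the last pixel has
# no ground truth and is ignored.
gt = [1.0, 2.0, 4.0, 0.0]
pred = [1.1, 1.8, 4.0, 3.0]
err = abs_rel_error(pred, gt)
print(f"AbsRel = {err:.4f}")
```

Because each error is divided by the true depth, the metric penalizes a given absolute error more heavily on near surfaces than on distant ones.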
