Daniele Pucci

Istituto Italiano di Tecnologia, Genova, Italy

Non-Linear Trajectory Optimization for Large Step-Ups: Application to the Humanoid Robot Atlas

Apr 25, 2020

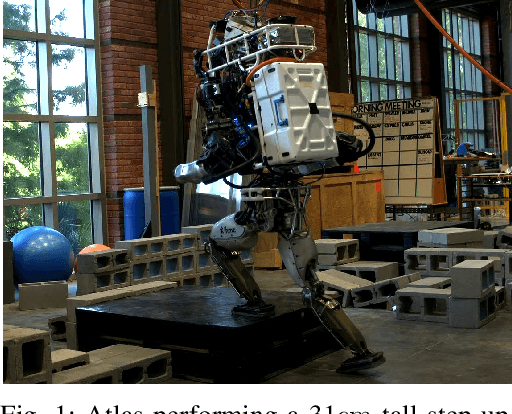

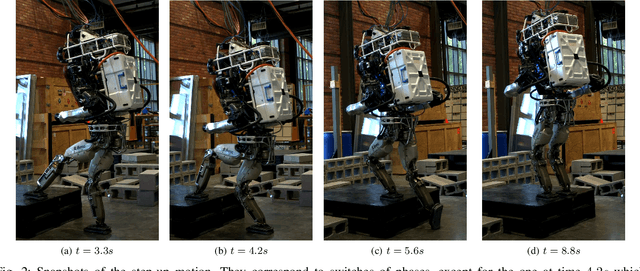

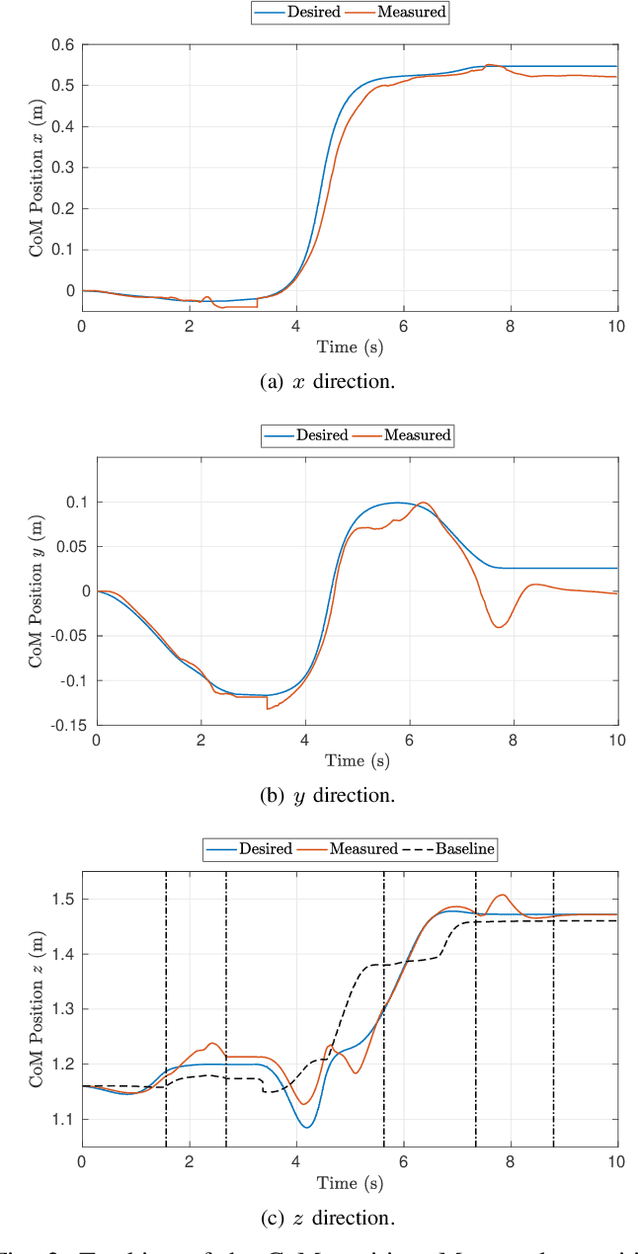

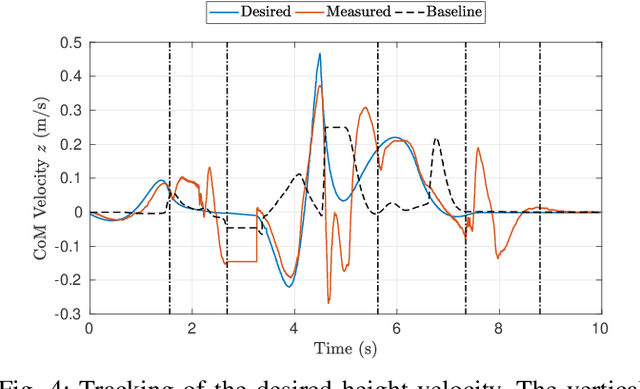

Abstract: Performing large step-ups is a challenging task for a humanoid robot. It requires the robot to perform motions at the limit of its reachable workspace while straining to move its body upon the obstacle. This paper presents a non-linear trajectory optimization method for generating step-up motions. We adopt a simplified model of the centroidal dynamics to generate feasible Center of Mass trajectories aimed at reducing the torques required for the step-up motion. The activation and deactivation of contacts at both feet are considered explicitly. The output of the planner is a Center of Mass trajectory plus an optimal duration for each walking phase. These desired values are stabilized by a whole-body controller that determines a set of desired joint torques. We experimentally demonstrate that by using trajectory optimization techniques, the maximum torque required of the full-size humanoid robot Atlas can be reduced by up to 20% when performing a step-up motion.

Whole-Body Walking Generation using Contact Parametrization: A Non-Linear Trajectory Optimization Approach

Mar 10, 2020

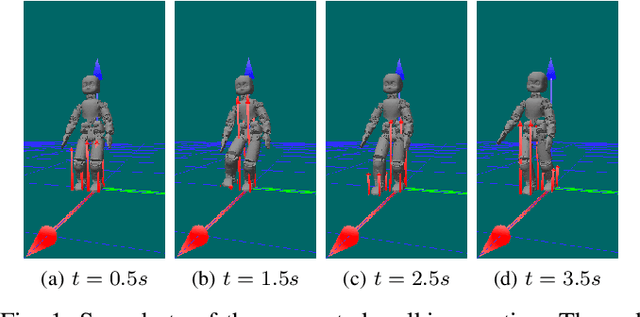

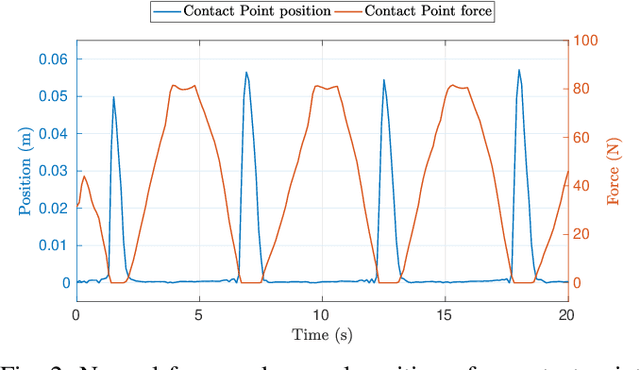

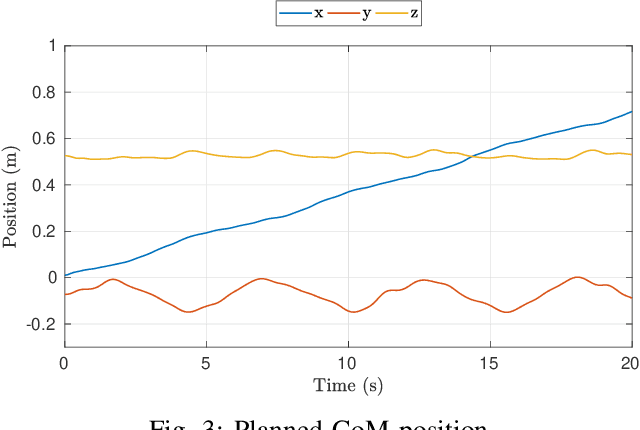

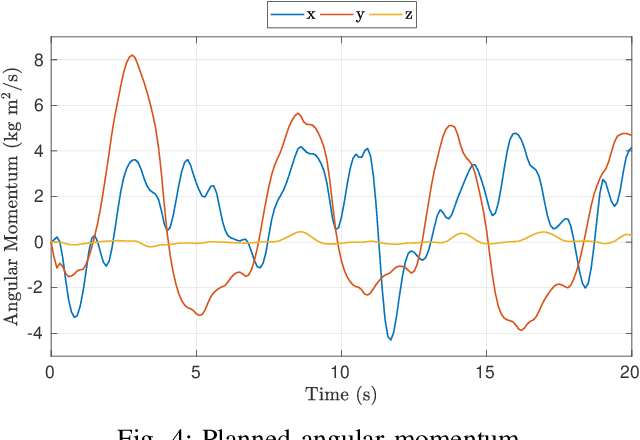

Abstract: In this paper, we describe a planner capable of generating walking trajectories by using the centroidal dynamics and the full kinematics of a humanoid robot model. The interaction between the robot and the walking surface is modeled explicitly through a novel contact parametrization. The approach is complementarity-free and does not need a predefined contact sequence. By solving an optimal control problem we obtain walking trajectories. In particular, through a set of constraints and dynamic equations, we model the robot in contact with the ground. We describe the objective the robot needs to achieve with a set of tasks. The whole optimal control problem is transcribed into an optimization problem via a Direct Multiple Shooting approach and solved with an off-the-shelf solver. We show that it is possible to achieve walking motions automatically by specifying a minimal set of references, such as a constant desired Center of Mass velocity and a reference point on the ground.

Recent Advances in Human-Robot Collaboration Towards Joint Action

Jan 02, 2020

Abstract: Robots have existed as separate entities until now, but the horizons of a symbiotic human-robot partnership are approaching. Despite all the recent technical advances in terms of hardware, robots are still not endowed with desirable relational skills that ensure a social component in their existence. This article draws from our experience as roboticists in Human-Robot Collaboration (HRC) with humanoid robots and presents some of the recent advances made towards realizing intuitive robot behaviors and partner-aware control involving physical interactions.

Gym-Ignition: Reproducible Robotic Simulations for Reinforcement Learning

Dec 02, 2019

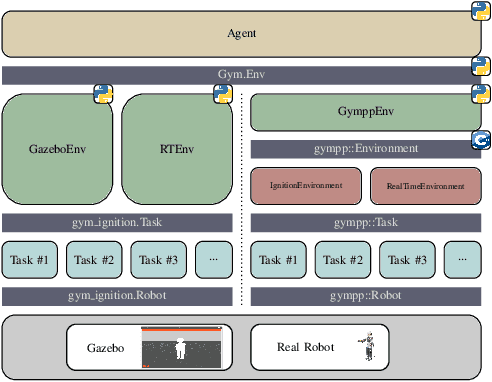

Abstract: This paper presents Gym-Ignition, a new framework to create reproducible robotic environments for reinforcement learning research. It interfaces with the new generation of Gazebo, part of the Ignition Robotics suite, which provides three main improvements for reinforcement learning applications compared to the alternatives: 1) the modular architecture enables using the simulator as a C++ library, simplifying the interconnection with external software; 2) multiple physics and rendering engines are supported as plugins, simplifying their selection during the execution; 3) the new distributed simulation capability allows simulating complex scenarios while sharing the load across multiple workers and machines. The core of Gym-Ignition is a component that contains the Ignition Gazebo simulator and exposes a simple interface for its configuration and execution. We provide a Python package that allows developers to create robotic environments simulated in Ignition Gazebo. Environments expose the common OpenAI Gym interface, making them compatible out-of-the-box with third-party frameworks containing reinforcement learning algorithms. Simulations can be executed in both headless and GUI mode, the physics engine can run in accelerated mode, and instances can be parallelized. Furthermore, the Gym-Ignition software architecture provides abstraction of the Robot and the Task, making environments agnostic to the specific runtime. This abstraction also allows their execution in a real-time setting on actual robotic platforms, even if driven by different middlewares.
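As an illustration of the common OpenAI Gym interface mentioned in the abstract above, the following minimal sketch shows the classic `reset()` / `step()` loop that third-party reinforcement learning frameworks rely on. The `ToyPendulumEnv` class, its dynamics, and the `run_episode` helper are hypothetical stand-ins written for this example; they are not part of Gym-Ignition and do not use its API. The `step()` return signature assumed here is the classic four-tuple `(observation, reward, done, info)`.

```python
import random


class ToyPendulumEnv:
    """Hypothetical stand-in environment (NOT part of Gym-Ignition).

    It only mimics the classic Gym-style interface: reset() and step().
    """

    def __init__(self, max_steps=50, seed=0):
        # A fixed seed makes every rollout reproducible.
        self._rng = random.Random(seed)
        self._max_steps = max_steps
        self._angle = 0.0
        self._steps = 0

    def reset(self):
        # Start each episode from a small random angle.
        self._angle = self._rng.uniform(-0.1, 0.1)
        self._steps = 0
        return self._angle

    def step(self, action):
        # Toy dynamics: the action nudges the angle directly.
        self._angle += 0.05 * action
        self._steps += 1
        reward = -abs(self._angle)  # closer to zero is better
        done = self._steps >= self._max_steps
        return self._angle, reward, done, {}


def run_episode(env, policy):
    """Roll out one episode and return the accumulated reward."""
    observation = env.reset()
    total_reward, done = 0.0, False
    while not done:
        observation, reward, done, _info = env.step(policy(observation))
        total_reward += reward
    return total_reward


if __name__ == "__main__":
    # Simple bang-bang policy that pushes the angle towards zero.
    policy = lambda obs: -1.0 if obs > 0 else 1.0
    print(run_episode(ToyPendulumEnv(seed=42), policy))
```

Because the loop depends only on `reset()` and `step()`, any environment exposing the same interface could be swapped in without changing `run_episode`, which is the kind of compatibility the abstract refers to.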

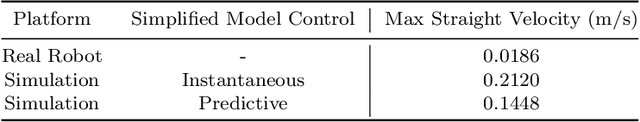

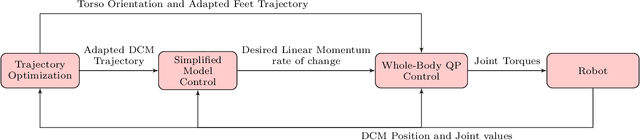

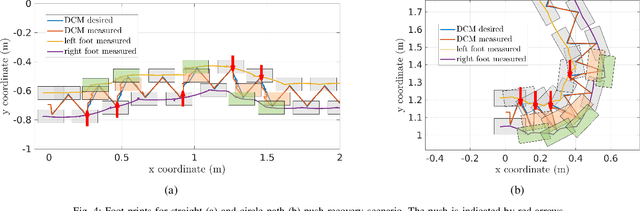

A Benchmarking of DCM Based Architectures for Position, Velocity and Torque Controlled Humanoid Robots

Nov 27, 2019

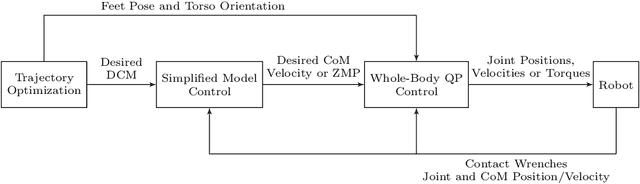

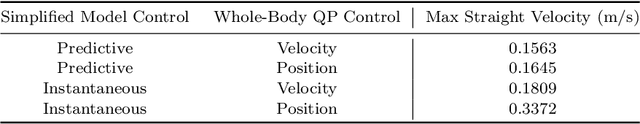

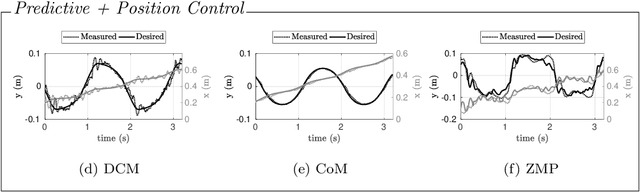

Abstract: This paper contributes towards the benchmarking of control architectures for bipedal robot locomotion. It considers architectures that are based on the Divergent Component of Motion (DCM) and composed of three main layers: trajectory optimization, simplified model control, and whole-body QP control layer. While the first two layers use simplified robot models, the whole-body QP control layer uses a complete robot model to produce either desired position, velocity, or torque inputs at the joint level. This paper then compares two implementations of the simplified model control layer, which are tested with position, velocity, and torque control modes for the whole-body QP control layer. In particular, both an instantaneous and a Receding Horizon controller are presented for the simplified model control layer. We also show that one of the proposed architectures allows the humanoid robot iCub to achieve a forward walking velocity of 0.3372 meters per second, which is the highest walking velocity achieved by the iCub robot.

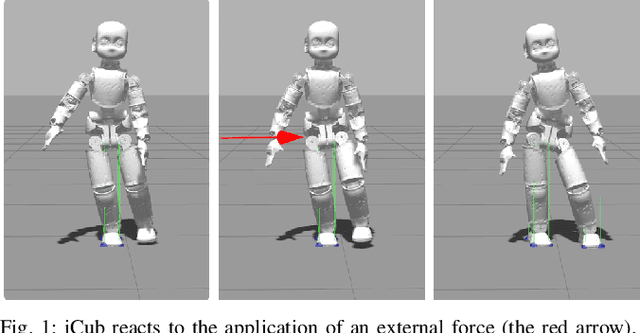

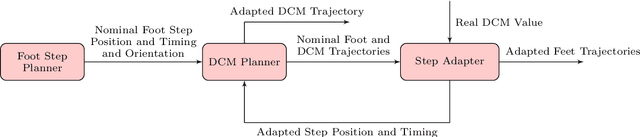

Online DCM Trajectory Generation for Push Recovery of Torque-Controlled Humanoid Robots

Oct 14, 2019

Abstract: We present a computationally efficient method for online planning of bipedal walking trajectories with push recovery. In particular, the proposed methodology fits control architectures where the Divergent-Component-of-Motion (DCM) is planned beforehand, and adds a step adapter to adjust the planned trajectories and achieve push recovery. Assuming that the robot is in a single support state, the step adapter generates new positions and timings for the next step. The step adapter is active in single support phases only, but the proposed torque-control architecture considers double support phases too. The key idea for the design of the step adapter is to impose both initial and final DCM step values using an exponential interpolation of the time varying ZMP trajectory. This allows us to cast the push recovery problem as a Quadratic Programming (QP) one, and to solve it online with state-of-the-art optimisers. The overall approach is validated with simulations of the torque-controlled 33 kg humanoid robot iCub. Results show that the proposed strategy prevents the humanoid robot from falling while walking at 0.28 m/s and pushed with external forces up to 150 Newton for 0.05 seconds.
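To make the role of the DCM boundary values in the abstract above more concrete, here is a minimal sketch based on the standard linear inverted pendulum relation, in which the DCM ξ obeys ξ̇ = ω(ξ − r) for a ZMP position r and ω = sqrt(g / z). The sketch assumes a ZMP held constant over the step, which is a simplification and not the time-varying ZMP interpolation used in the paper; the function names and numerical values are hypothetical.

```python
import math


def dcm_at(xi0, zmp, omega, t):
    """DCM after time t for a constant ZMP (assumption of this sketch).

    Closed-form solution of xi_dot = omega * (xi - zmp).
    """
    return zmp + (xi0 - zmp) * math.exp(omega * t)


def initial_dcm_for_target(xi_end, zmp, omega, duration):
    """Initial DCM that reaches xi_end after `duration` with a constant ZMP."""
    return zmp + (xi_end - zmp) * math.exp(-omega * duration)


if __name__ == "__main__":
    omega = math.sqrt(9.81 / 0.5)  # hypothetical CoM height of 0.5 m
    xi0 = initial_dcm_for_target(xi_end=0.1, zmp=0.0, omega=omega, duration=0.5)
    # Integrating forward from xi0 recovers the desired final DCM.
    print(xi0, dcm_at(xi0, 0.0, omega, 0.5))
```

The two functions are inverses of each other for a fixed step duration, which shows how fixing the initial and final DCM values ties together the ZMP position and the step timing.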

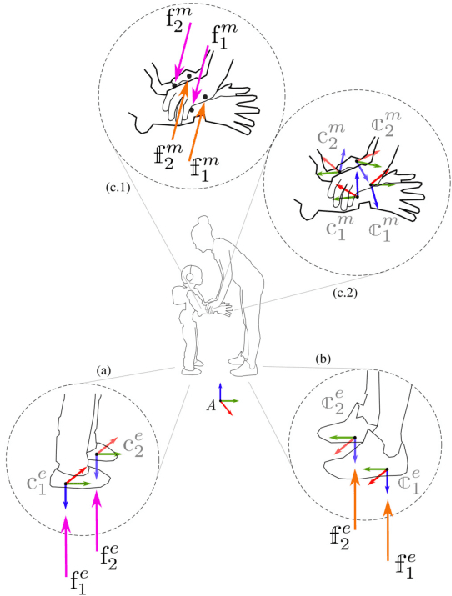

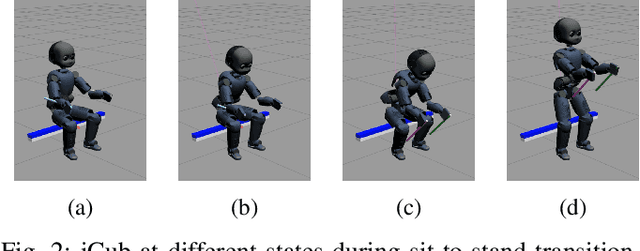

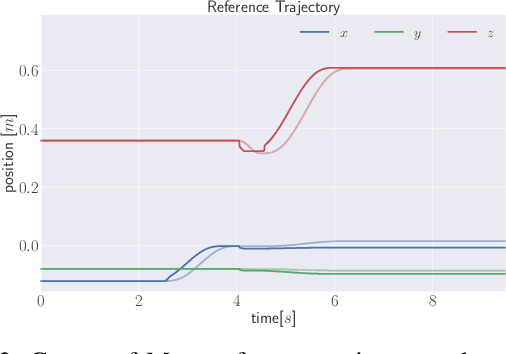

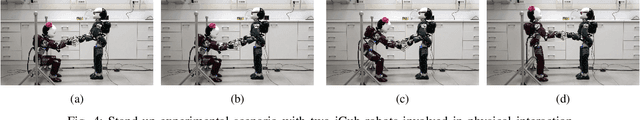

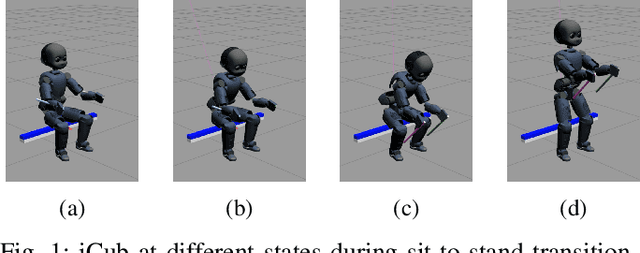

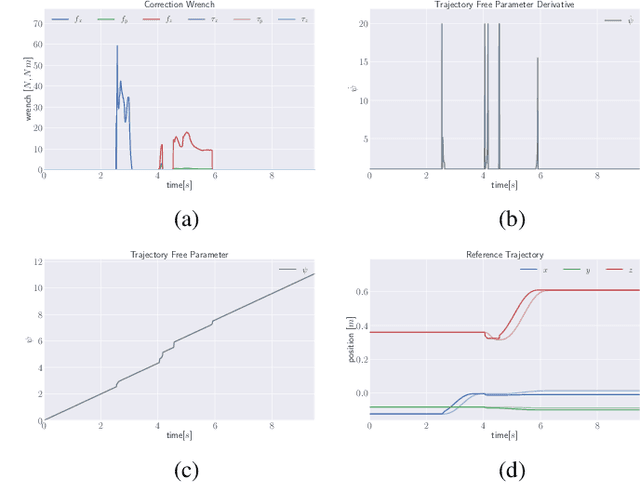

Trajectory Advancement for Robot Stand-up with Human Assistance

Oct 14, 2019

Abstract: Physical interactions are an inevitable part of human-robot collaboration tasks, and rather than exhibiting simple reactive behaviors to human interactions, collaborative robots need to be endowed with intuitive behaviors. This paper proposes a trajectory advancement approach that facilitates advancement along a reference trajectory by leveraging assistance from helpful interaction wrenches present during human-robot collaboration. We validate our approach through experiments in simulation with iCub.

Modeling, Identification and Control of Model Jet Engines for Jet Powered Robotics

Sep 29, 2019

Abstract: The paper contributes towards the modeling, identification, and control of model jet engines. We propose a nonlinear, second-order model in order to capture the model jet engines' governing dynamics. The model structure is identified by applying sparse identification of nonlinear dynamics, and then the parameters of the model are found via gray-box identification procedures. Once the model has been identified, we approach the control of the model jet engine by designing two control laws. The first one is based on the classical Feedback Linearization technique, while the second one is based on Sliding Mode control. The overall methodology has been verified by modeling, identifying and controlling two model jet engines, i.e. P100-RX and P220-RXi developed by JetCat, which provide a maximum thrust of 100 N and 220 N, respectively.

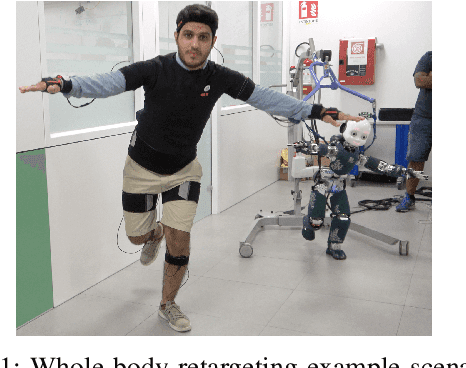

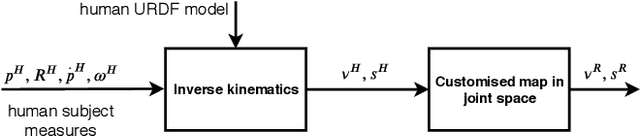

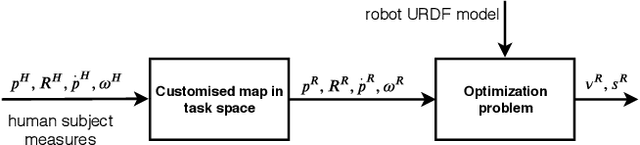

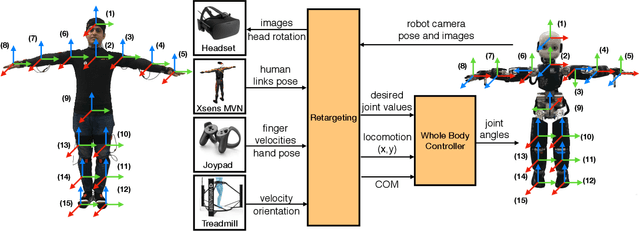

Whole-Body Geometric Retargeting for Humanoid Robots

Sep 22, 2019

Abstract: Humanoid robot teleoperation allows humans to integrate their cognitive capabilities with the apparatus to perform tasks that need high strength, manoeuvrability and dexterity. This paper presents a framework for teleoperation of humanoid robots using a novel approach for motion retargeting through inverse kinematics over the robot model. The proposed method enhances scalability for retargeting, i.e., it allows teleoperating different robots by different human users with minimal changes to the proposed system. Our framework enables an intuitive and natural interaction between the human operator and the humanoid robot at the configuration space level. We validate our approach by demonstrating whole-body retargeting with multiple robot models. Furthermore, we present experimental validation through teleoperation experiments using two state-of-the-art whole-body controllers for humanoid robots.

* Equal author contribution from Kourosh Darvish and Yeshasvi Tirupachuri

Model-Based Real-Time Motion Tracking using Dynamical Inverse Kinematics

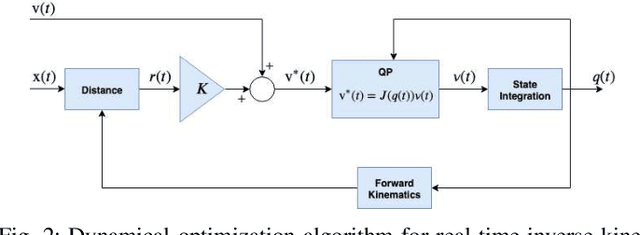

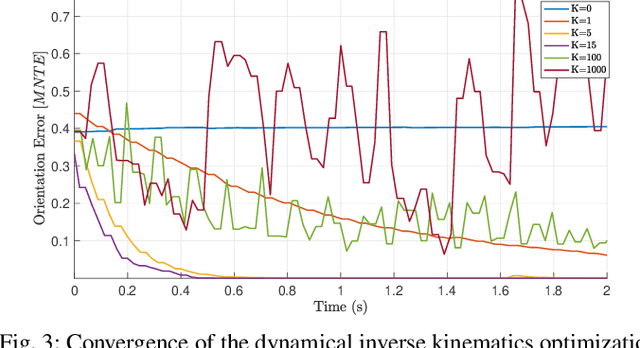

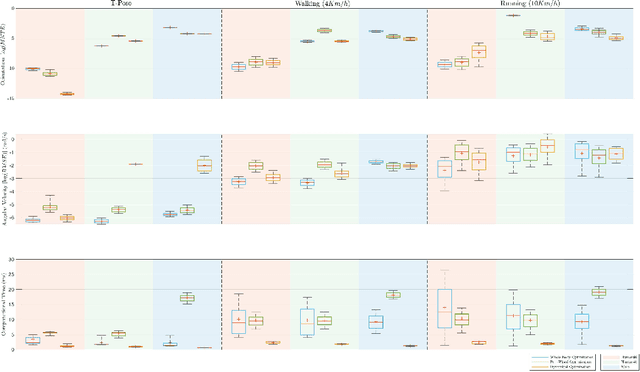

Sep 17, 2019

Abstract: This paper contributes towards the development of motion tracking algorithms for time-critical applications, proposing an infrastructure for dynamically solving the inverse kinematics of human models. We present a method based on the integration of the differential kinematics, for which convergence is proved using Lyapunov analysis. The method is tested in an experimental scenario where the motion of a subject is tracked in static and dynamic configurations, and the inverse kinematics is solved both for human and humanoid models. The architecture is evaluated both in terms of accuracy and computational load, and compared to iterative optimization algorithms.
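The idea of solving inverse kinematics by integrating the differential kinematics can be illustrated with a generic textbook scheme: the joint velocity is chosen as q̇ = J(q)⁻¹ K e, where e is the task-space error, so that for a static target the error obeys ė = −K e and the function V = ½ eᵀe decreases along the solution. The sketch below applies this to a hypothetical two-link planar arm with forward Euler integration. The arm, gain, time step, and function names are assumptions made for this example and are not taken from the paper.

```python
import math

# Hypothetical link lengths of a two-link planar arm.
L1, L2 = 1.0, 1.0


def forward_kinematics(q):
    """End-effector position (x, y) for joint angles q = (q1, q2)."""
    q1, q2 = q
    x = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
    y = L1 * math.sin(q1) + L2 * math.sin(q1 + q2)
    return x, y


def jacobian(q):
    """2x2 Jacobian of the end-effector position w.r.t. the joint angles."""
    q1, q2 = q
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return ((-L1 * s1 - L2 * s12, -L2 * s12),
            (L1 * c1 + L2 * c12, L2 * c12))


def track_target(q0, target, gain=10.0, dt=0.01, steps=1000):
    """Integrate q_dot = J^-1 * gain * error with forward Euler.

    Assumes the arm stays away from singular configurations (det != 0).
    Returns the final joint angles and the final task-space error norm.
    """
    q = list(q0)
    for _ in range(steps):
        x, y = forward_kinematics(q)
        vx, vy = gain * (target[0] - x), gain * (target[1] - y)
        (a, b), (c, d) = jacobian(q)
        det = a * d - b * c
        # Explicit 2x2 inverse applied to the corrective task velocity.
        dq1 = (d * vx - b * vy) / det
        dq2 = (-c * vx + a * vy) / det
        q[0] += dt * dq1
        q[1] += dt * dq2
    x, y = forward_kinematics(q)
    return tuple(q), math.hypot(target[0] - x, target[1] - y)


if __name__ == "__main__":
    q_final, error = track_target((0.3, 0.5), (1.2, 0.8))
    print(q_final, error)
```

Each iteration costs one Jacobian evaluation and one small linear solve, with no inner optimization loop, which is why schemes of this kind suit time-critical tracking.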
