Kernel Exponential Family Estimation via Doubly Dual Embedding

Nov 06, 2018 · Bo Dai, Hanjun Dai, Arthur Gretton, Le Song, Dale Schuurmans, Niao He

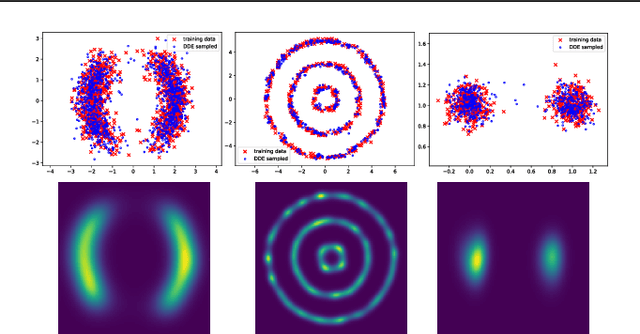

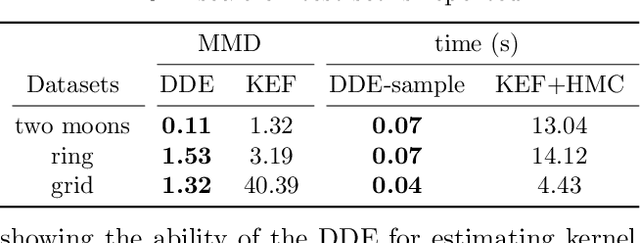

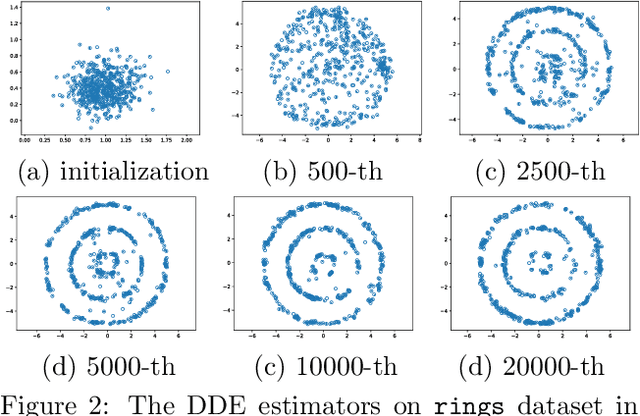

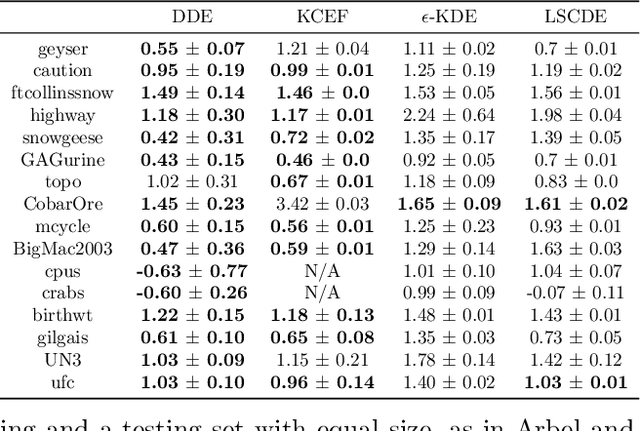

We investigate penalized maximum log-likelihood estimation for exponential family distributions whose natural parameter resides in a reproducing kernel Hilbert space. Key to our approach is a novel technique, doubly dual embedding, that avoids computation of the partition function. This technique also allows the development of a flexible sampling strategy that amortizes the cost of Monte-Carlo sampling in the inference stage. The resulting estimator can be easily generalized to kernel conditional exponential families. We furthermore establish a connection between infinite-dimensional exponential family estimation and MMD-GANs, revealing a new perspective for understanding GANs. Compared to current score matching based estimators, the proposed method improves both memory and time efficiency while enjoying stronger statistical properties, such as fully capturing smoothness in its statistical convergence rate while the score matching estimator appears to saturate. Finally, we show that the proposed estimator can empirically outperform state-of-the-art methods in both kernel exponential family estimation and its conditional extension.

Smoothed Action Value Functions for Learning Gaussian Policies

Jul 25, 2018 · Ofir Nachum, Mohammad Norouzi, George Tucker, Dale Schuurmans

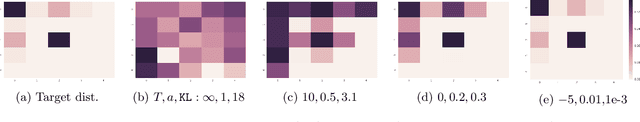

State-action value functions (i.e., Q-values) are ubiquitous in reinforcement learning (RL), giving rise to popular algorithms such as SARSA and Q-learning. We propose a new notion of action value defined by a Gaussian smoothed version of the expected Q-value. We show that such smoothed Q-values still satisfy a Bellman equation, making them learnable from experience sampled from an environment. Moreover, the gradients of expected reward with respect to the mean and covariance of a parameterized Gaussian policy can be recovered from the gradient and Hessian of the smoothed Q-value function. Based on these relationships, we develop new algorithms for training a Gaussian policy directly from a learned smoothed Q-value approximator. The approach is additionally amenable to proximal optimization by augmenting the objective with a penalty on KL-divergence from a previous policy. We find that the ability to learn both a mean and covariance during training leads to significantly improved results on standard continuous control benchmarks.
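The smoothed action value described above can be illustrated with a small Monte Carlo sketch. It assumes a one-dimensional action, a hypothetical quadratic Q-function (`toy_q`), and a hypothetical helper name (`smoothed_q`); none of these come from the paper, and the code only estimates the Gaussian-smoothed value rather than learning it.

```python
import random


def smoothed_q(q, state, mean_action, std, num_samples=10_000, seed=0):
    """Monte Carlo estimate of E[q(state, a)] with a ~ N(mean_action, std^2)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        total += q(state, rng.gauss(mean_action, std))
    return total / num_samples


def toy_q(state, action):
    # Hypothetical Q-function, peaked where the action equals the state.
    return -(action - state) ** 2


# For this quadratic Q, the exact smoothed value is
# -(mean_action - state)^2 - std^2, so smoothing lowers the peak by std^2.
estimate = smoothed_q(toy_q, state=0.0, mean_action=1.0, std=0.5)
```

For the toy quadratic, the estimate should land close to -1.25, which shows how the smoothed value depends on both the mean and the spread of the Gaussian policy.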

Planning and Learning with Stochastic Action Sets

May 07, 2018 · Craig Boutilier, Alon Cohen, Amit Daniely, Avinatan Hassidim, Yishay Mansour, Ofer Meshi, Martin Mladenov, Dale Schuurmans

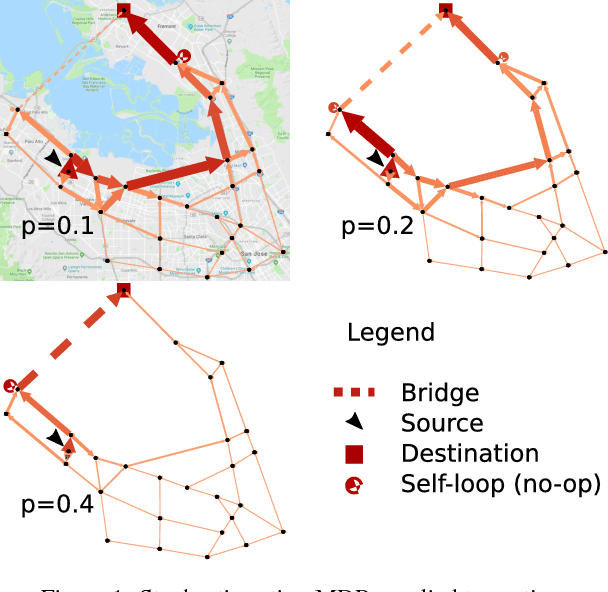

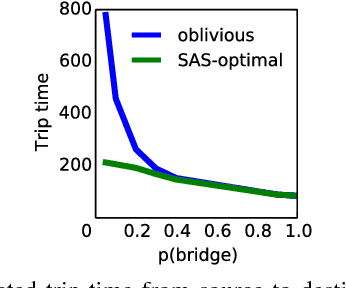

In many practical uses of reinforcement learning (RL) the set of actions available at a given state is a random variable, with realizations governed by an exogenous stochastic process. Somewhat surprisingly, the foundations for such sequential decision processes have been unaddressed. In this work, we formalize and investigate MDPs with stochastic action sets (SAS-MDPs) to provide these foundations. We show that optimal policies and value functions in this model have a structure that admits a compact representation. From an RL perspective, we show that Q-learning with sampled action sets is sound. In model-based settings, we consider two important special cases: when individual actions are available with independent probabilities; and a sampling-based model for unknown distributions. We develop poly-time value and policy iteration methods for both cases; and in the first, we offer a poly-time linear programming solution.
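As a rough illustration of Q-learning with sampled action sets, the sketch below performs one tabular update in which the bootstrap maximum ranges only over the actions that were actually available in the next state. The function name, the dictionary-based table, and the default step size and discount are assumptions made for this example, not details taken from the paper.

```python
def sas_q_update(q_table, state, action, reward, next_state,
                 next_available_actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step restricted to the realized next action set."""
    # Maximize only over actions that were available at next_state;
    # an empty set (e.g. a terminal state) contributes a value of 0.
    best_next = max(
        (q_table.get((next_state, a), 0.0) for a in next_available_actions),
        default=0.0,
    )
    old = q_table.get((state, action), 0.0)
    q_table[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    return q_table[(state, action)]


# Usage: action "a" has the higher value in s1 but was not available this time.
q = {("s1", "a"): 10.0, ("s1", "b"): 2.0}
updated = sas_q_update(q, "s0", "a", 1.0, "s1", ["b"], alpha=0.5, gamma=1.0)
```

In the usage example the update bootstraps from the value of "b" only, because "a" was not in the sampled action set.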

Variational Rejection Sampling

Apr 05, 2018 · Aditya Grover, Ramki Gummadi, Miguel Lazaro-Gredilla, Dale Schuurmans, Stefano Ermon

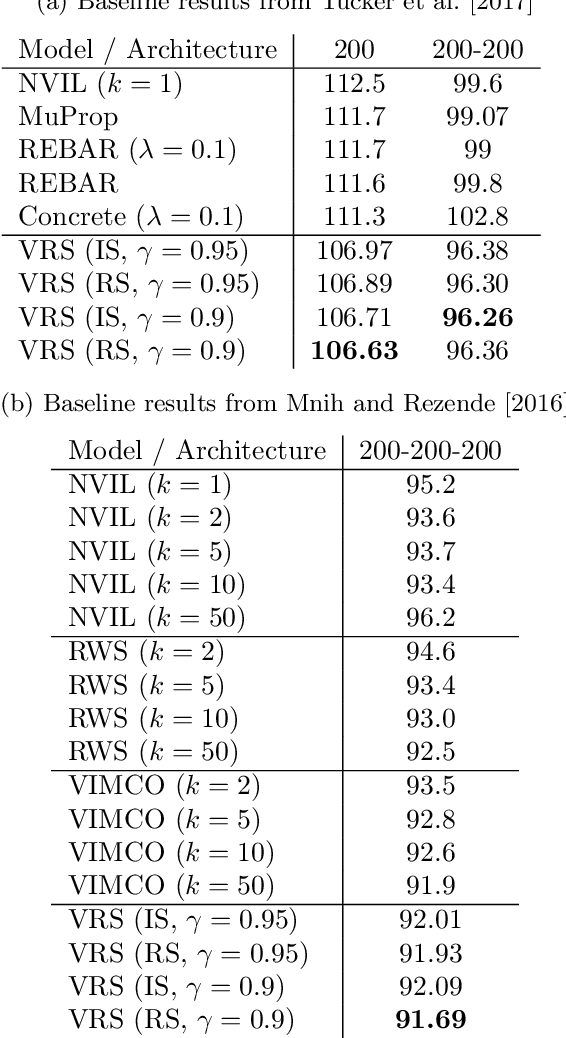

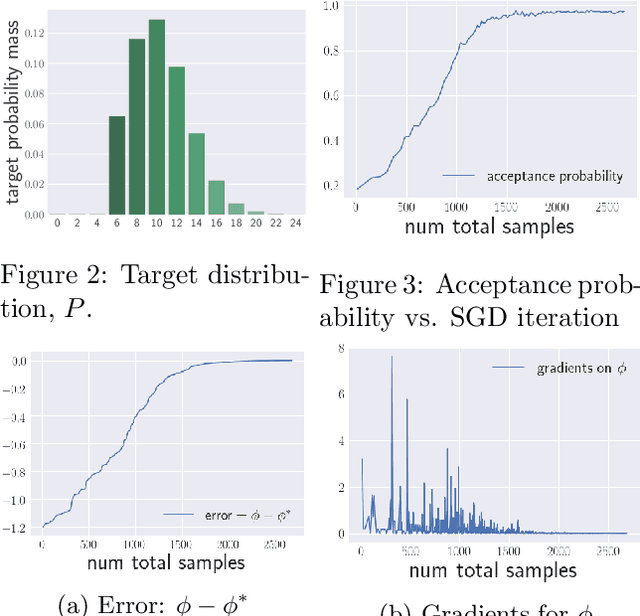

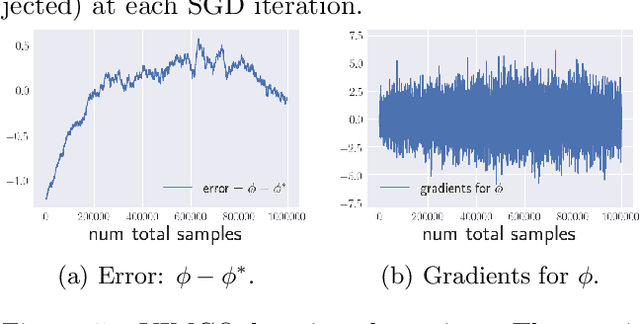

Learning latent variable models with stochastic variational inference is challenging when the approximate posterior is far from the true posterior, due to high variance in the gradient estimates. We propose a novel rejection sampling step that discards samples from the variational posterior which are assigned low likelihoods by the model. Our approach provides an arbitrarily accurate approximation of the true posterior at the expense of extra computation. Using a new gradient estimator for the resulting unnormalized proposal distribution, we achieve average improvements of 3.71 nats and 0.21 nats over state-of-the-art single-sample and multi-sample alternatives respectively for estimating marginal log-likelihoods using sigmoid belief networks on the MNIST dataset.
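The trade-off between accuracy and computation can be seen in a toy sketch. It assumes a one-dimensional unnormalized target, a wide Gaussian proposal, and an acceptance probability of min(1, exp(log p(z) - log q(z) - threshold)); the threshold convention and all names are assumptions of this example and not the paper's estimator, which also involves a gradient estimator that is not shown here.

```python
import math
import random

Q_STD = 2.0  # Standard deviation of the (too wide) proposal; hypothetical value.


def log_p_tilde(z):
    # Unnormalized log-density of the target, a standard normal.
    return -0.5 * z * z


def log_q(z):
    # Normalized log-density of the Gaussian proposal N(0, Q_STD^2).
    return -0.5 * (z / Q_STD) ** 2 - math.log(Q_STD * math.sqrt(2.0 * math.pi))


def sample_with_rejection(threshold, num_samples, rng):
    """Draw from the proposal, discarding samples with a low density ratio."""
    accepted, draws = [], 0
    while len(accepted) < num_samples:
        z = rng.gauss(0.0, Q_STD)
        draws += 1
        log_accept = min(0.0, log_p_tilde(z) - log_q(z) - threshold)
        if rng.random() < math.exp(log_accept):
            accepted.append(z)
    return accepted, draws


def variance(xs):
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)


rng = random.Random(0)
strict, strict_draws = sample_with_rejection(2.0, 5000, rng)   # Many rejections.
loose, loose_draws = sample_with_rejection(-10.0, 5000, rng)   # Almost none.
```

With the strict threshold the accepted samples have variance near 1 (the target) at the cost of roughly three proposal draws per accepted sample; with the loose threshold almost everything is accepted and the variance stays near that of the proposal.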

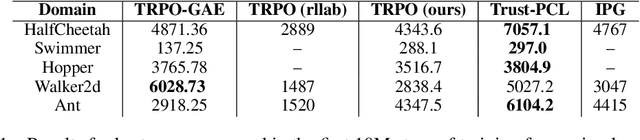

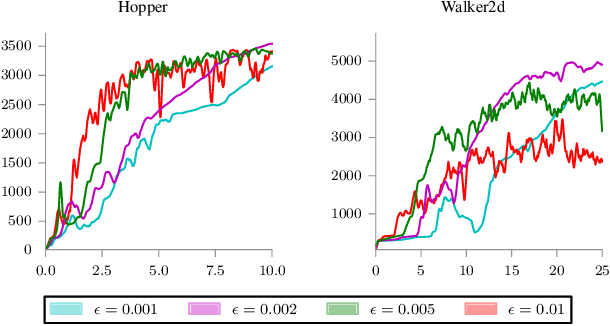

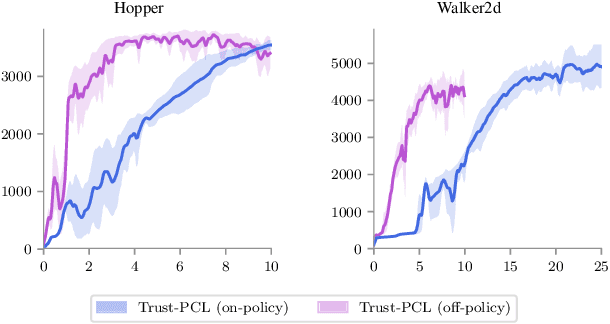

Trust-PCL: An Off-Policy Trust Region Method for Continuous Control

Feb 22, 2018 · Ofir Nachum, Mohammad Norouzi, Kelvin Xu, Dale Schuurmans

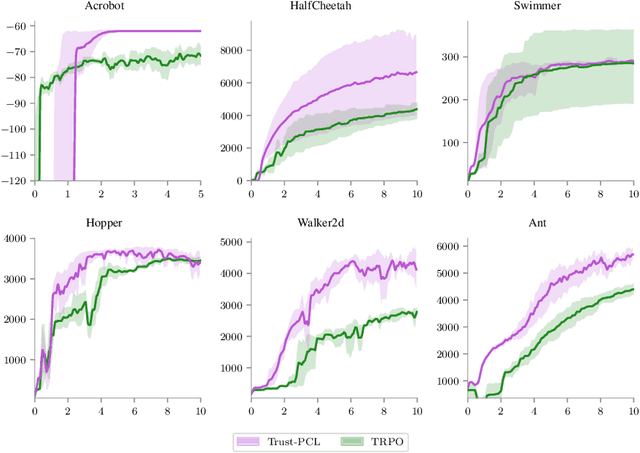

Trust region methods, such as TRPO, are often used to stabilize policy optimization algorithms in reinforcement learning (RL). While current trust region strategies are effective for continuous control, they typically require a prohibitively large amount of on-policy interaction with the environment. To address this problem, we propose an off-policy trust region method, Trust-PCL. The algorithm is the result of observing that the optimal policy and state values of a maximum reward objective with a relative-entropy regularizer satisfy a set of multi-step pathwise consistencies along any path. Thus, Trust-PCL is able to maintain optimization stability while exploiting off-policy data to improve sample efficiency. When evaluated on a number of continuous control tasks, Trust-PCL improves the solution quality and sample efficiency of TRPO.

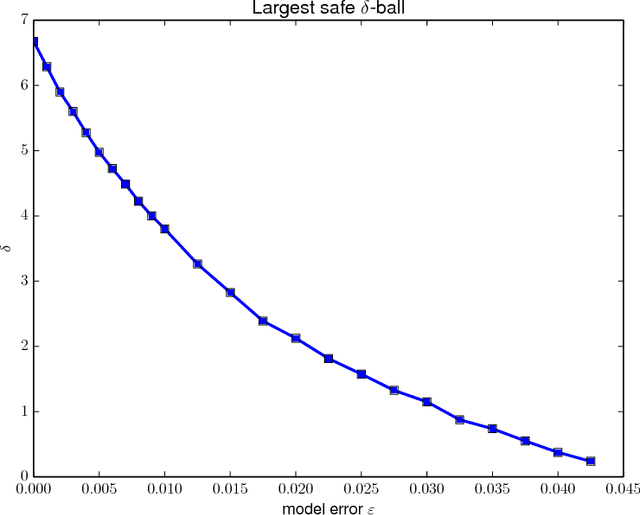

Safe Exploration for Identifying Linear Systems via Robust Optimization

Nov 30, 2017 · Tyler Lu, Martin Zinkevich, Craig Boutilier, Binz Roy, Dale Schuurmans

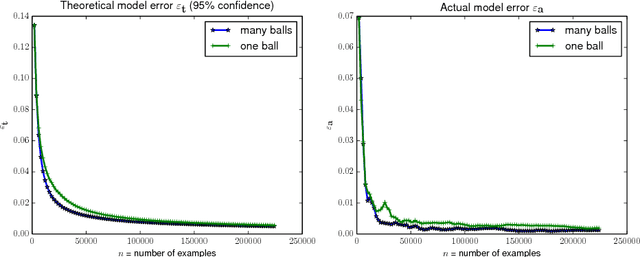

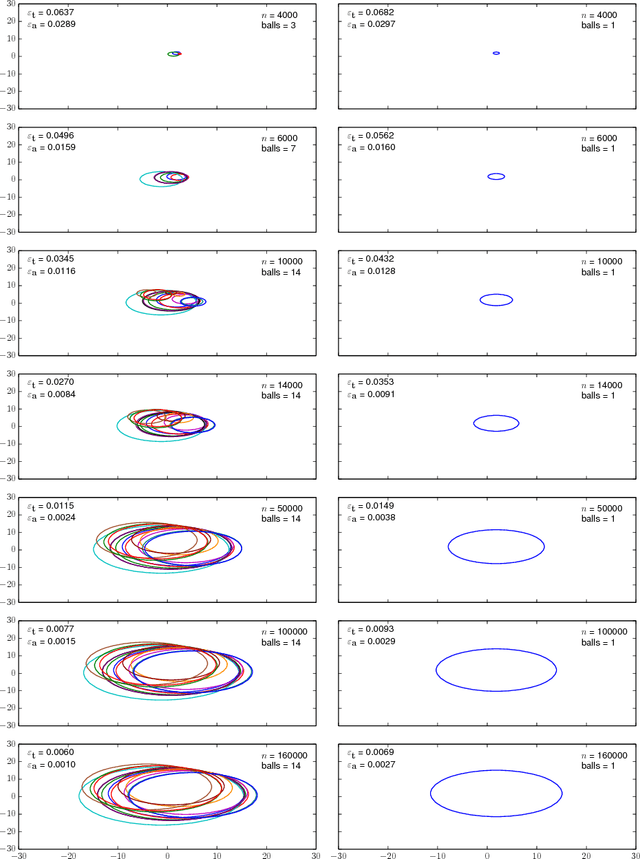

Safely exploring an unknown dynamical system is critical to the deployment of reinforcement learning (RL) in physical systems where failures may have catastrophic consequences. In scenarios where one knows little about the dynamics, diverse transition data covering relevant regions of state-action space is needed to apply either model-based or model-free RL. Motivated by the cooling of Google's data centers, we study how one can safely identify the parameters of a system model with a desired accuracy and confidence level. In particular, we focus on learning an unknown linear system with Gaussian noise assuming only that, initially, a nominal safe action is known. Defining safety as satisfying specific linear constraints on the state space (e.g., requirements on process variables) that must hold over the span of an entire trajectory, and given a Probably Approximately Correct (PAC) style bound on the estimation error of the model parameters, we show how to compute safe regions of action space by gradually growing a ball around the nominal safe action. One can apply any exploration strategy where actions are chosen from such safe regions. Experiments on a stylized model of data center cooling dynamics show how computing proper safe regions can increase the sample efficiency of safe exploration.

Bridging the Gap Between Value and Policy Based Reinforcement Learning

Nov 22, 2017 · Ofir Nachum, Mohammad Norouzi, Kelvin Xu, Dale Schuurmans

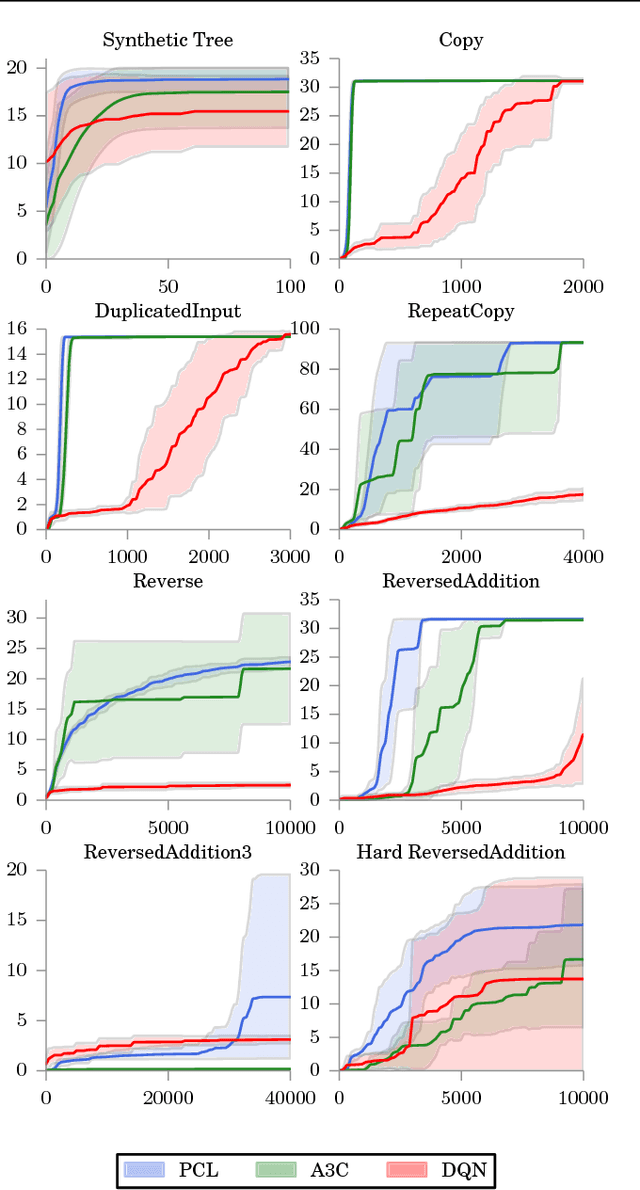

We establish a new connection between value and policy based reinforcement learning (RL) based on a relationship between softmax temporal value consistency and policy optimality under entropy regularization. Specifically, we show that softmax consistent action values correspond to optimal entropy regularized policy probabilities along any action sequence, regardless of provenance. From this observation, we develop a new RL algorithm, Path Consistency Learning (PCL), that minimizes a notion of soft consistency error along multi-step action sequences extracted from both on- and off-policy traces. We examine the behavior of PCL in different scenarios and show that PCL can be interpreted as generalizing both actor-critic and Q-learning algorithms. We subsequently deepen the relationship by showing how a single model can be used to represent both a policy and the corresponding softmax state values, eliminating the need for a separate critic. The experimental evaluation demonstrates that PCL significantly outperforms strong actor-critic and Q-learning baselines across several benchmarks.
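The relationship between softmax-consistent values and the entropy-regularized optimal policy can be checked numerically on a tiny example. The sketch below assumes a hypothetical deterministic MDP, a temperature `TAU`, a discount `GAMMA`, and the single-step form of the consistency, V(s) - GAMMA * V(s') = r - TAU * log pi(a|s); it is an illustration of the identity only, not the PCL training algorithm.

```python
import math

TAU, GAMMA = 0.5, 0.9

# Hypothetical deterministic MDP: TRANSITIONS[state][action] = (reward, next_state).
# State 2 is terminal and keeps value 0.
TRANSITIONS = {
    0: {"left": (1.0, 1), "right": (0.0, 2)},
    1: {"left": (0.5, 2), "right": (2.0, 0)},
}


def soft_optimal_values(num_iters=500):
    """Iterate the softmax (log-sum-exp) Bellman backup to its fixed point."""
    values = {0: 0.0, 1: 0.0, 2: 0.0}
    for _ in range(num_iters):
        for state, actions in TRANSITIONS.items():
            values[state] = TAU * math.log(sum(
                math.exp((reward + GAMMA * values[nxt]) / TAU)
                for reward, nxt in actions.values()
            ))
    return values


def soft_optimal_policy(values, state):
    """Policy probabilities implied by the soft values at one state."""
    return {
        action: math.exp((reward + GAMMA * values[nxt] - values[state]) / TAU)
        for action, (reward, nxt) in TRANSITIONS[state].items()
    }


def consistency_error(values, log_prob, state, action):
    """Single-step soft consistency error for a given log-probability."""
    reward, nxt = TRANSITIONS[state][action]
    return -values[state] + GAMMA * values[nxt] + reward - TAU * log_prob


values = soft_optimal_values()
policy_at_0 = soft_optimal_policy(values, 0)
```

At the fixed point the implied probabilities sum to one and the consistency error vanishes for every action; plugging in a different policy, such as a uniform one, leaves a nonzero error that a learner could minimize.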

Improving Policy Gradient by Exploring Under-appreciated Rewards

Mar 15, 2017 · Ofir Nachum, Mohammad Norouzi, Dale Schuurmans

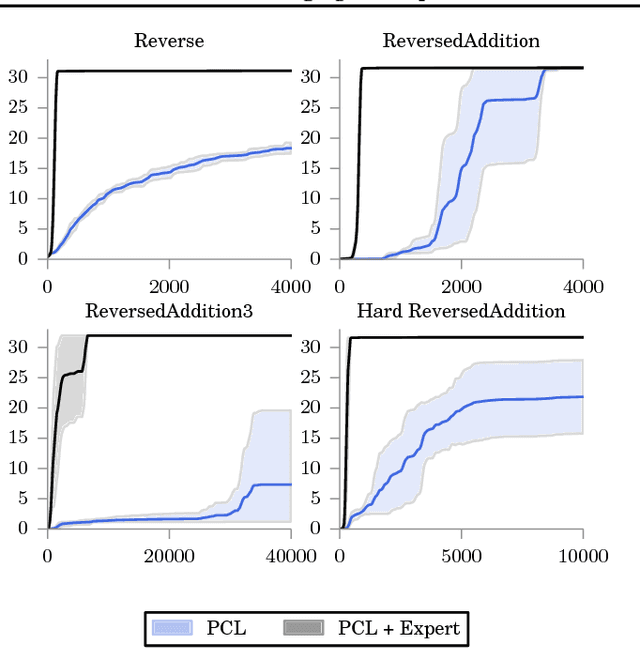

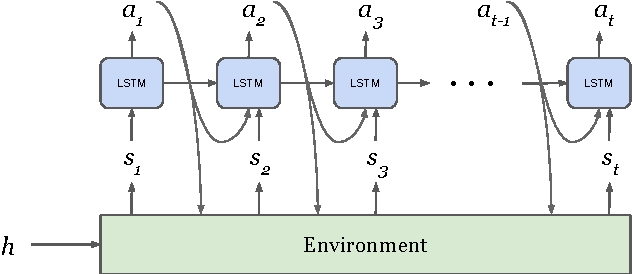

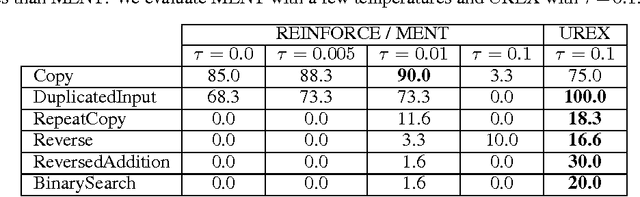

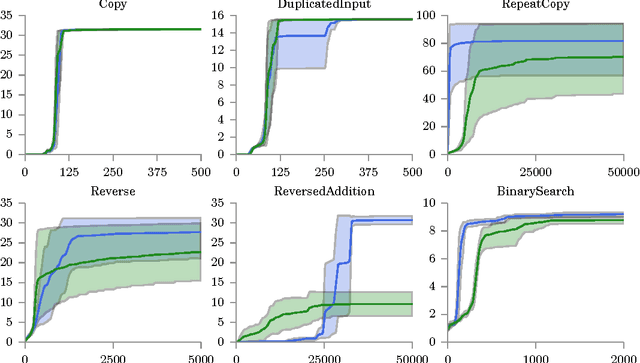

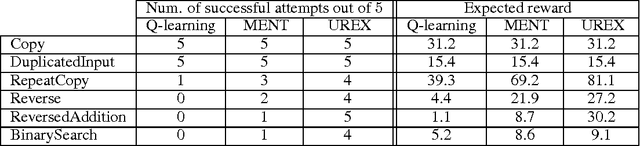

This paper presents a novel form of policy gradient for model-free reinforcement learning (RL) with improved exploration properties. Current policy-based methods use entropy regularization to encourage undirected exploration of the reward landscape, which is ineffective in high dimensional spaces with sparse rewards. We propose a more directed exploration strategy that promotes exploration of under-appreciated reward regions. An action sequence is considered under-appreciated if its log-probability under the current policy under-estimates its resulting reward. The proposed exploration strategy is easy to implement, requiring small modifications to an implementation of the REINFORCE algorithm. We evaluate the approach on a set of algorithmic tasks that have long challenged RL methods. Our approach reduces hyper-parameter sensitivity and demonstrates significant improvements over baseline methods. Our algorithm successfully solves a benchmark multi-digit addition task and generalizes to long sequences. This is, to our knowledge, the first time that a pure RL method has solved addition using only reward feedback.
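One way to make the notion of under-appreciation concrete is to score each sampled action sequence by its scaled reward minus its log-probability and normalize the scores across the sample. The sketch below does that; the function name, the temperature `tau`, and the use of self-normalized softmax weights are assumptions of this example rather than details given in the abstract.

```python
import math


def underappreciation_weights(rewards, log_probs, tau=0.1):
    """Softmax over (reward / tau - log_prob) for a batch of sampled sequences."""
    scores = [r / tau - lp for r, lp in zip(rewards, log_probs)]
    top = max(scores)  # Subtract the maximum for numerical stability.
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Two sequences with equal reward: the one the policy considers less likely
# (log-probability -5.0) is the more under-appreciated and gets more weight.
weights = underappreciation_weights([1.0, 1.0], [-1.0, -5.0], tau=1.0)
```

Sequences with high weight are those whose reward is large relative to how probable the current policy thinks they are, which is where a directed exploration strategy would concentrate its updates.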

Reward Augmented Maximum Likelihood for Neural Structured Prediction

Jan 04, 2017 · Mohammad Norouzi, Samy Bengio, Zhifeng Chen, Navdeep Jaitly, Mike Schuster, Yonghui Wu, Dale Schuurmans

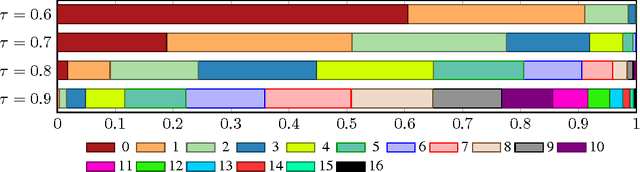

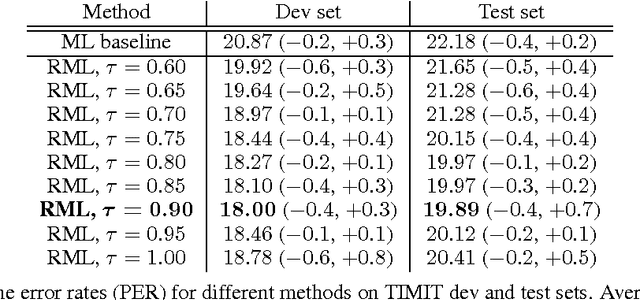

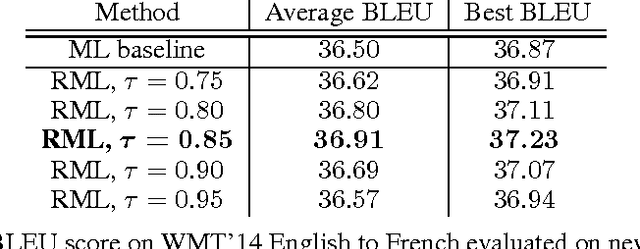

A key problem in structured output prediction is direct optimization of the task reward function that matters for test evaluation. This paper presents a simple and computationally efficient approach to incorporate task reward into a maximum likelihood framework. By establishing a link between the log-likelihood and expected reward objectives, we show that an optimal regularized expected reward is achieved when the conditional distribution of the outputs given the inputs is proportional to their exponentiated scaled rewards. Accordingly, we present a framework to smooth the predictive probability of the outputs using their corresponding rewards. We optimize the conditional log-probability of augmented outputs that are sampled proportionally to their exponentiated scaled rewards. Experiments on neural sequence to sequence models for speech recognition and machine translation show notable improvements over a maximum likelihood baseline by using reward augmented maximum likelihood (RAML), where the rewards are defined as the negative edit distance between the outputs and the ground truth labels.
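The sampling distribution described above, proportional to exponentiated scaled rewards with negative edit distance as the reward, can be sketched over a small candidate set. Restricting the distribution to an explicit list of candidates, the function names, and the temperature `tau` are simplifying assumptions for illustration; the full distribution ranges over all possible outputs.

```python
import math


def edit_distance(a, b):
    """Levenshtein distance between two sequences, using two rolling rows."""
    prev = list(range(len(b) + 1))
    for i, item_a in enumerate(a, start=1):
        curr = [i]
        for j, item_b in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,                       # deletion
                curr[j - 1] + 1,                   # insertion
                prev[j - 1] + (item_a != item_b),  # substitution or match
            ))
        prev = curr
    return prev[-1]


def exponentiated_payoff(candidates, target, tau=1.0):
    """Probabilities proportional to exp(-edit_distance(candidate, target) / tau)."""
    scores = [-edit_distance(c, target) / tau for c in candidates]
    top = max(scores)  # Subtract the maximum for numerical stability.
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = exponentiated_payoff(["cat", "cut", "dog"], target="cat", tau=1.0)
```

Augmented training targets would then be drawn according to `probs`, so outputs close to the ground truth are sampled often and distant ones rarely; lowering `tau` concentrates the mass on the ground truth itself.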

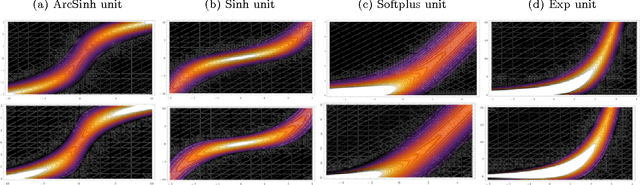

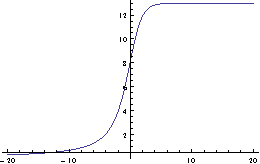

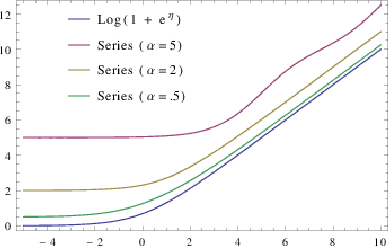

Stochastic Neural Networks with Monotonic Activation Functions

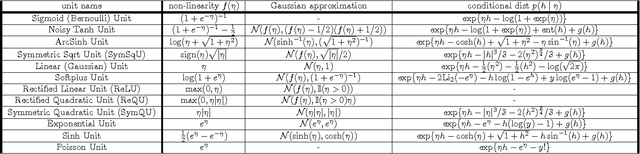

Jul 22, 2016 · Siamak Ravanbakhsh, Barnabas Poczos, Jeff Schneider, Dale Schuurmans, Russell Greiner

We propose a Laplace approximation that creates a stochastic unit from any smooth monotonic activation function, using only Gaussian noise. This paper investigates the application of this stochastic approximation in training a family of Restricted Boltzmann Machines (RBM) that are closely linked to Bregman divergences. This family, which we call exponential family RBM (Exp-RBM), is a subset of the exponential family Harmoniums that expresses family members through a choice of smooth monotonic non-linearity for each neuron. Using contrastive divergence along with our Gaussian approximation, we show that Exp-RBM can learn useful representations using novel stochastic units.
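A stochastic unit built from a smooth, monotonically increasing activation and Gaussian noise might look like the sketch below. It assumes the Gaussian has mean f(eta) and variance f'(eta); that form is an assumption of this example and is not stated in the abstract, and the softplus activation and function names are likewise chosen only for illustration.

```python
import math
import random


def softplus(x):
    return math.log1p(math.exp(x))


def sigmoid(x):
    # Derivative of softplus; non-negative because softplus is increasing.
    return 1.0 / (1.0 + math.exp(-x))


def stochastic_unit(eta, f, f_prime, rng):
    """Sample a unit activation as f(eta) plus Gaussian noise of variance f'(eta)."""
    return f(eta) + math.sqrt(f_prime(eta)) * rng.gauss(0.0, 1.0)


rng = random.Random(0)
samples = [stochastic_unit(1.0, softplus, sigmoid, rng) for _ in range(20_000)]
sample_mean = sum(samples) / len(samples)
```

Under this assumption the samples are centered on the deterministic activation, and the noise shrinks wherever the activation is flat, since the variance follows the slope of the non-linearity.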
