Chaofan Zhang

State Key Laboratory of Management and Control for Complex Systems, Institute of Automation, Chinese Academy of Sciences, China

DexTac: Learning Contact-aware Visuotactile Policies via Hand-by-hand Teaching

Jan 29, 2026

Abstract:For contact-intensive tasks, the ability to generate policies that produce comprehensive tactile-aware motions is essential. However, existing data collection and skill learning systems for dexterous manipulation often suffer from low-dimensional tactile information. To address this limitation, we propose DexTac, a visuo-tactile manipulation learning framework based on kinesthetic teaching. DexTac captures multi-dimensional tactile data, including contact force distributions and spatial contact regions, directly from human demonstrations. By integrating these rich tactile modalities into a policy network, the resulting contact-aware agent enables a dexterous hand to autonomously select and maintain optimal contact regions during complex interactions. We evaluate our framework on a challenging unimanual injection task. Experimental results demonstrate that DexTac achieves a 91.67% success rate. Notably, in high-precision scenarios involving small-scale syringes, our approach outperforms force-only baselines by 31.67%. These results underscore that learning multi-dimensional tactile priors from human demonstrations is critical for achieving robust, human-like dexterous manipulation in contact-rich environments.

VTLA: Vision-Tactile-Language-Action Model with Preference Learning for Insertion Manipulation

May 14, 2025

Abstract:While vision-language models have advanced significantly, their application in language-conditioned robotic manipulation is still underexplored, especially for contact-rich tasks that extend beyond visually dominant pick-and-place scenarios. To bridge this gap, we introduce the Vision-Tactile-Language-Action (VTLA) model, a novel framework that enables robust policy generation in contact-intensive scenarios by effectively integrating visual and tactile inputs through cross-modal language grounding. A low-cost, multi-modal dataset has been constructed in a simulation environment, containing vision-tactile-action-instruction pairs specifically designed for the fingertip insertion task. Furthermore, we introduce Direct Preference Optimization (DPO) to offer regression-like supervision for the VTLA model, effectively bridging the gap between classification-based next-token prediction loss and continuous robotic tasks. Experimental results show that the VTLA model outperforms traditional imitation learning methods (e.g., diffusion policies) and existing multi-modal baselines (TLA/VLA), achieving over 90% success rates on unseen peg shapes. Finally, we conduct real-world peg-in-hole experiments to demonstrate the exceptional Sim2Real performance of the proposed VTLA model. For supplementary videos and results, please visit our project website: https://sites.google.com/view/vtla
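
The DPO objective mentioned in the abstract above can be illustrated with the standard single-pair form of the loss. This is a minimal sketch, not the VTLA training code: the function name `dpo_loss`, the default `beta`, and the idea that the "chosen" sequence is the action-token sequence closer to the ground-truth action are all assumptions made for illustration.

```python
import math


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair of token sequences.

    Inputs are summed log-probabilities of each sequence under the trainable
    policy and under a frozen reference model. How VTLA builds its preference
    pairs is not stated in the abstract; that part is assumed.
    """
    # Implicit reward of each sequence: log-ratio of policy to reference.
    chosen_margin = policy_logp_chosen - ref_logp_chosen
    rejected_margin = policy_logp_rejected - ref_logp_rejected
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(x)) computed in a numerically stable way.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

When the policy matches the reference on both sequences the loss equals log 2, and it falls as the policy shifts probability mass toward the chosen sequence, which is what gives the otherwise classification-style token loss a graded, ranking-based signal.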

CLTP: Contrastive Language-Tactile Pre-training for 3D Contact Geometry Understanding

May 13, 2025

Abstract:Recent advancements in integrating tactile sensing with vision-language models (VLMs) have demonstrated remarkable potential for robotic multimodal perception. However, existing tactile descriptions remain limited to superficial attributes like texture, neglecting critical contact states essential for robotic manipulation. To bridge this gap, we propose CLTP, an intuitive and effective language tactile pretraining framework that aligns tactile 3D point clouds with natural language in various contact scenarios, thus enabling contact-state-aware tactile language understanding for contact-rich manipulation tasks. We first collect a novel dataset of 50k+ tactile 3D point cloud-language pairs, where descriptions explicitly capture multidimensional contact states (e.g., contact location, shape, and force) from the tactile sensor's perspective. CLTP leverages a pre-aligned and frozen vision-language feature space to bridge holistic textual and tactile modalities. Experiments validate its superiority in three downstream tasks: zero-shot 3D classification, contact state classification, and tactile 3D large language model (LLM) interaction. To the best of our knowledge, this is the first study to align tactile and language representations from the contact state perspective for manipulation tasks, providing great potential for tactile-language-action model learning. Code and datasets are open-sourced at https://sites.google.com/view/cltp/.
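
As a rough illustration of what contrastive alignment between tactile and language embeddings involves, the sketch below computes a CLIP-style symmetric InfoNCE loss over a small batch. The abstract does not specify CLTP's exact loss, so the function name `symmetric_contrastive_loss`, the temperature value, and the use of plain Python lists as embeddings are all assumptions; in a setup like the one described, the text embeddings would come from the frozen encoder and only the tactile encoder would be updated.

```python
import math


def _normalize(vec):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def _cross_entropy(logits, target):
    """Cross-entropy of one row of logits against the index of the true match."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[target]


def symmetric_contrastive_loss(tactile_emb, text_emb, temperature=0.07):
    """Symmetric InfoNCE: pair i of tactile_emb matches pair i of text_emb."""
    tactile = [_normalize(v) for v in tactile_emb]
    text = [_normalize(v) for v in text_emb]
    n = len(tactile)
    # Temperature-scaled cosine similarity between every tactile/text pair.
    sim = [[sum(a * b for a, b in zip(tactile[i], text[j])) / temperature
            for j in range(n)] for i in range(n)]
    tactile_to_text = sum(_cross_entropy(sim[i], i) for i in range(n)) / n
    text_to_tactile = sum(
        _cross_entropy([sim[i][j] for i in range(n)], j) for j in range(n)) / n
    return 0.5 * (tactile_to_text + text_to_tactile)
```

The loss is near zero when each tactile embedding is closest to its own description and grows when descriptions are mismatched, which is the property a zero-shot classifier built on the shared space relies on.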

TLA: Tactile-Language-Action Model for Contact-Rich Manipulation

Mar 11, 2025

Abstract:Significant progress has been made in vision-language models. However, language-conditioned robotic manipulation for contact-rich tasks remains underexplored, particularly in terms of tactile sensing. To address this gap, we introduce the Tactile-Language-Action (TLA) model, which effectively processes sequential tactile feedback via cross-modal language grounding to enable robust policy generation in contact-intensive scenarios. In addition, we construct a comprehensive dataset that contains 24k pairs of tactile action instruction data, customized for fingertip peg-in-hole assembly, providing essential resources for TLA training and evaluation. Our results show that TLA significantly outperforms traditional imitation learning methods (e.g., diffusion policy) in terms of effective action generation and action accuracy, while demonstrating strong generalization capabilities by achieving over 85% success rate on previously unseen assembly clearances and peg shapes. We publicly release all data and code in the hope of advancing research in language-conditioned tactile manipulation skill learning. Project website: https://sites.google.com/view/tactile-language-action/

SparseFocus: Learning-based One-shot Autofocus for Microscopy with Sparse Content

Feb 10, 2025

Abstract:Autofocus is necessary for high-throughput and real-time scanning in microscopic imaging. Traditional methods rely on complex hardware or iterative hill-climbing algorithms. Recent learning-based approaches have demonstrated remarkable efficacy in a one-shot setting, avoiding hardware modifications or iterative mechanical lens adjustments. However, in this paper, we highlight a significant challenge: the richness of image content can strongly affect autofocus performance. When the image content is sparse, previous autofocus methods, whether traditional hill-climbing or learning-based, tend to fail. To tackle this, we propose a content-importance-based solution, named SparseFocus, featuring a novel two-stage pipeline. The first stage measures the importance of regions within the image, while the second stage calculates the defocus distance from selected important regions. To validate our approach and benefit the research community, we collect a large-scale dataset comprising millions of labelled defocused images, encompassing dense, sparse, and extremely sparse scenarios. Experimental results show that SparseFocus surpasses existing methods, effectively handling all levels of content sparsity. Moreover, we integrate SparseFocus into our Whole Slide Imaging (WSI) system that performs well in real-world applications. The code and dataset will be made available upon the publication of this paper.
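
The two-stage idea described in the abstract above (score regions, then estimate defocus only from the important ones) can be sketched as follows. This is not the SparseFocus implementation: pixel variance stands in for the learned importance stage, `defocus_fn` is a hypothetical per-patch defocus estimator supplied by the caller, and averaging the selected predictions is an assumed aggregation rule.

```python
def split_into_patches(image, size):
    """Cut a 2D list of pixel values into non-overlapping size x size patches."""
    height, width = len(image), len(image[0])
    return [[row[x:x + size] for row in image[y:y + size]]
            for y in range(0, height - size + 1, size)
            for x in range(0, width - size + 1, size)]


def patch_importance(patch):
    """Stage 1 stand-in: pixel variance as a proxy for content importance."""
    pixels = [p for row in patch for p in row]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def estimate_defocus(image, defocus_fn, size=2, top_k=1):
    """Stage 2: average the defocus predictions of the top_k most important patches."""
    patches = split_into_patches(image, size)
    selected = sorted(patches, key=patch_importance, reverse=True)[:top_k]
    predictions = [defocus_fn(patch) for patch in selected]
    return sum(predictions) / len(predictions)
```

With a sparse image, empty background patches receive a score of zero and never reach the defocus estimator, so they cannot dilute the prediction.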

Real-Time Marker Localization Learning for GelStereo Tactile Sensing

Nov 24, 2022

Abstract:Visuotactile sensing technology is becoming more popular in tactile sensing, but the effectiveness of the existing marker detection and localization methods remains to be further explored. Instead of contour-based blob detection, this paper presents a learning-based marker localization network for GelStereo visuotactile sensing called Marknet. Specifically, Marknet uses a grid regression architecture to incorporate the distribution of the GelStereo markers. Furthermore, a marker rationality evaluator (MRE) is modelled to screen suitable prediction results. The experimental results show that Marknet combined with MRE achieves 93.90% precision for irregular markers in contact areas, which outperforms the traditional contour-based blob detection method by a large margin of 42.32%. Meanwhile, through GPU acceleration, the proposed learning-based marker localization method achieves better real-time performance than the blob detection interface provided by the OpenCV library, which we believe will lead to considerable perceptual sensitivity gains in various robotic manipulation tasks.

Lightweight Object-level Topological Semantic Mapping and Long-term Global Localization based on Graph Matching

Jan 16, 2022

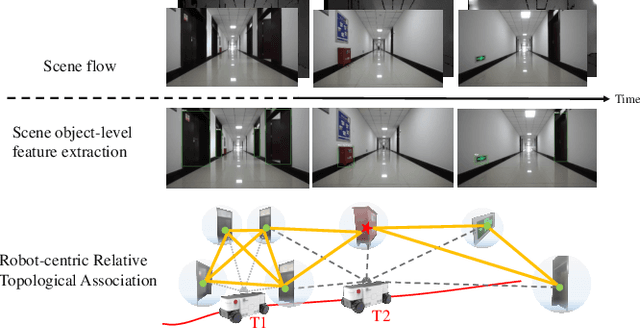

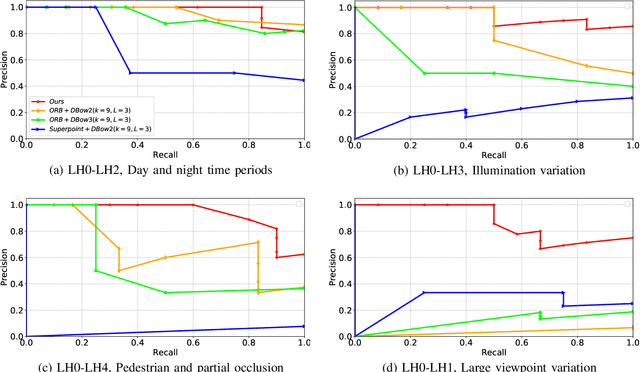

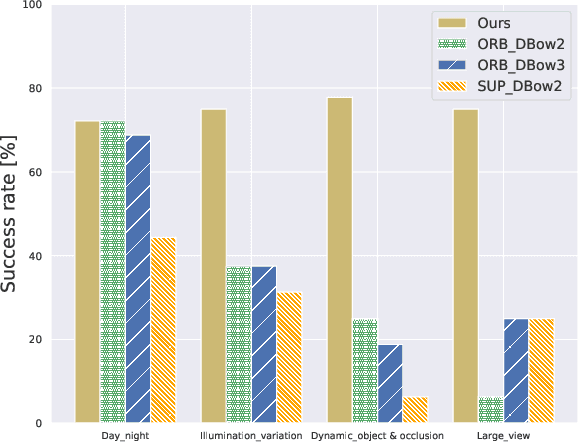

Abstract:Mapping and localization are two essential tasks for mobile robots in real-world applications. However, large-scale and dynamic scenes challenge the accuracy and robustness of most current mature solutions. This situation becomes even worse when computational resources are limited. In this paper, we present a novel lightweight object-level mapping and localization method with high accuracy and robustness. Different from previous methods, our method does not need a pre-built precise geometric map, which greatly reduces the storage burden, especially for large-scale navigation. We use object-level features with both semantic and geometric information to model landmarks in the environment. Particularly, a learning topological primitive is first proposed to efficiently obtain and organize the object-level landmarks. On the basis of this, we use a robot-centric mapping framework to represent the environment as a semantic topology graph and relax the burden of maintaining global consistency at the same time. Besides, a hierarchical memory management mechanism is introduced to improve the efficiency of online mapping with limited computational resources. Based on the proposed map, robust localization is achieved by constructing a novel local semantic scene graph descriptor and performing multi-constraint graph matching to compare scene similarity. Finally, we test our method on a low-cost embedded platform to demonstrate its advantages. Experimental results on a large-scale, multi-session real-world environment show that the proposed method outperforms the state of the art in terms of lightweight design and robustness.
