Changhao Chen

DeepTIO: A Deep Thermal-Inertial Odometry with Visual Hallucination

Sep 16, 2019

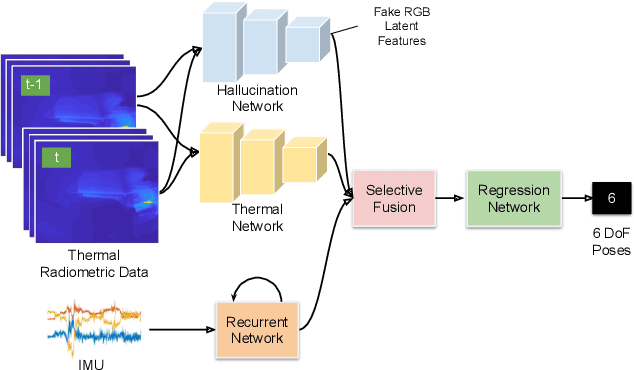

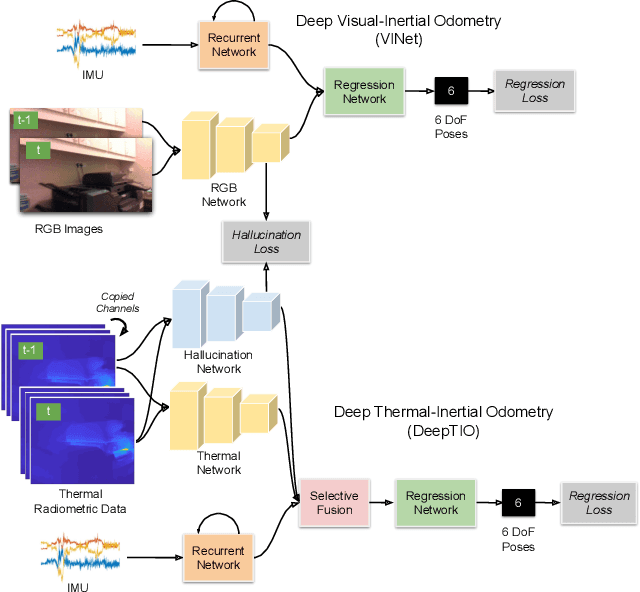

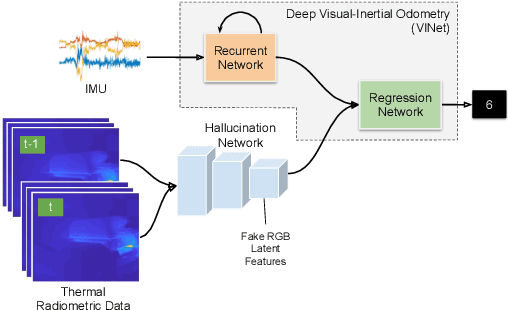

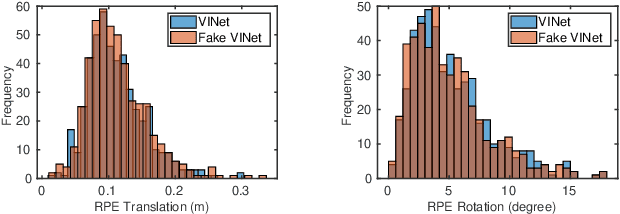

Abstract: Visual odometry shows excellent performance in a wide range of environments. However, in visually-denied scenarios (e.g. heavy smoke or darkness), pose estimates degrade or even fail. Thermal imaging cameras are commonly used for perception and inspection when the environment has low visibility. However, their use in odometry estimation is hampered by the lack of robust visual features. In part, this is a result of the sensor measuring the ambient temperature profile rather than scene appearance and geometry. To overcome these issues, we propose a Deep Neural Network model for thermal-inertial odometry (DeepTIO) by incorporating a visual hallucination network to provide the thermal network with complementary information. The hallucination network is taught to predict fake visual features from thermal images by using the robust Huber loss. We also employ selective fusion to attentively fuse the features from three different modalities, i.e. thermal, hallucination, and inertial features. Extensive experiments are performed on our large-scale hand-held data in benign and smoke-filled environments, showing the efficacy of the proposed model.
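The abstract names the Huber loss as the objective used to teach the hallucination network. The following is a minimal sketch of that loss in plain Python, not the authors' implementation: the function name `huber_loss`, the threshold `delta=1.0`, and the two example feature vectors are assumptions made only for illustration.

```python
def huber_loss(pred, target, delta=1.0):
    """Mean Huber loss between two equal-length feature vectors.

    Quadratic for residuals up to `delta`, linear beyond it, which makes
    it less sensitive to outliers than a squared error.
    """
    total = 0.0
    for p, t in zip(pred, target):
        r = abs(p - t)
        if r <= delta:
            total += 0.5 * r * r
        else:
            total += delta * (r - 0.5 * delta)
    return total / len(pred)


# Hypothetical feature vectors: `visual_features` stands in for features from
# a visual encoder, `hallucinated` for features predicted from a thermal image.
visual_features = [0.2, -1.0, 3.0]
hallucinated = [0.0, -1.5, 0.0]
loss = huber_loss(hallucinated, visual_features)
print(loss)
```

The third residual (3.0) exceeds `delta`, so it contributes linearly rather than quadratically; this is the robustness property the abstract refers to.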

AtLoc: Attention Guided Camera Localization

Sep 08, 2019

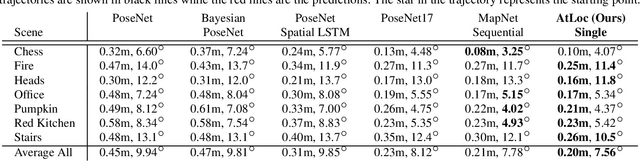

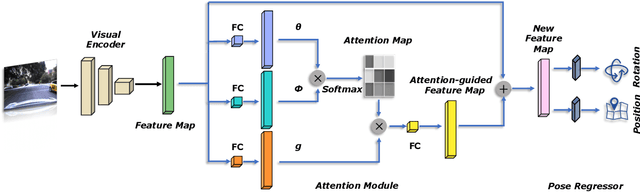

Abstract: Deep learning has achieved impressive results in camera localization, but current single-image techniques typically suffer from a lack of robustness, leading to large outliers. To some extent, this has been tackled by sequential (multi-image) or geometry-constraint approaches, which can learn to reject dynamic objects and illumination conditions to achieve better performance. In this work, we show that attention can be used to force the network to focus on more geometrically robust objects and features, achieving state-of-the-art performance on common benchmarks, even when using only a single image as input. Extensive experimental evidence is provided through public indoor and outdoor datasets. Through visualization of the saliency maps, we demonstrate how the network learns to reject dynamic objects, yielding superior global camera pose regression performance. The source code is available at https://github.com/BingCS/AtLoc.

Autonomous Learning for Face Recognition in the Wild via Ambient Wireless Cues

Aug 14, 2019

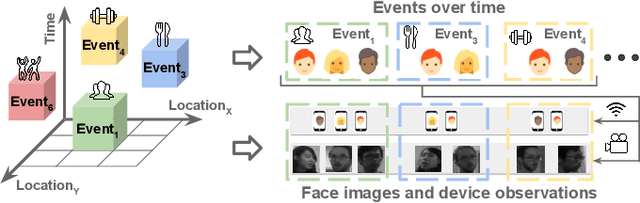

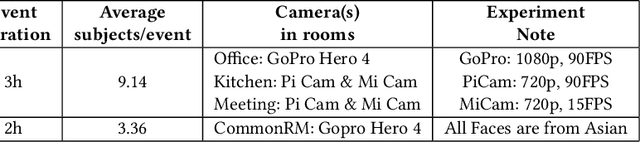

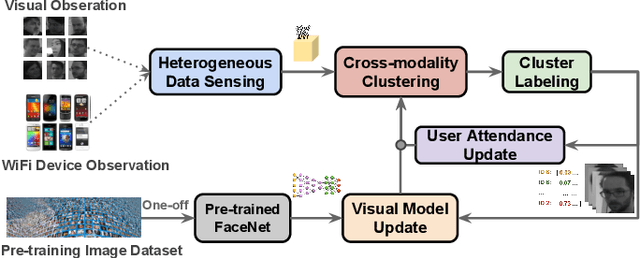

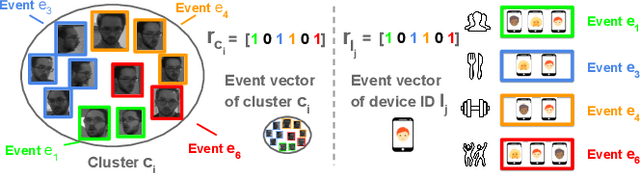

Abstract: Facial recognition is a key enabling component for emerging Internet of Things (IoT) services such as smart homes or responsive offices. Through the use of deep neural networks, facial recognition has achieved excellent performance. However, this is only possible when trained with hundreds of images of each user in different viewing and lighting conditions. Clearly, this level of effort in enrolment and labelling is impossible for wide-spread deployment and adoption. Inspired by the fact that most people carry smart wireless devices with them, e.g. smartphones, we propose to use this wireless identifier as a supervisory label. This allows us to curate a dataset of facial images that are unique to a certain domain e.g. a set of people in a particular office. This custom corpus can then be used to finetune existing pre-trained models e.g. FaceNet. However, due to the vagaries of wireless propagation in buildings, the supervisory labels are noisy and weak. We propose a novel technique, AutoTune, which learns and refines the association between a face and wireless identifier over time, by increasing the inter-cluster separation and minimizing the intra-cluster distance. Through extensive experiments with multiple users on two sites, we demonstrate the ability of AutoTune to design an environment-specific, continually evolving facial recognition system with entirely no user effort.

DynaNet: Neural Kalman Dynamical Model for Motion Estimation and Prediction

Aug 11, 2019

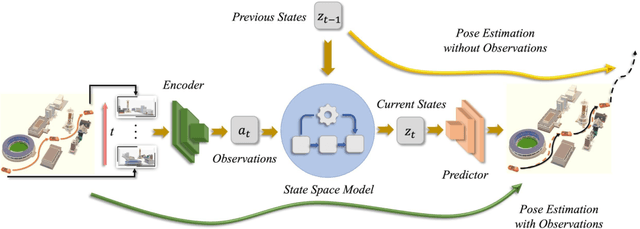

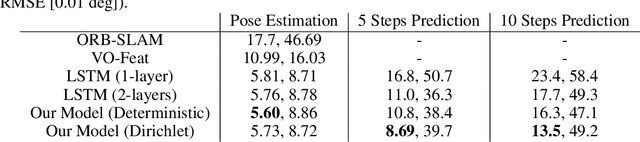

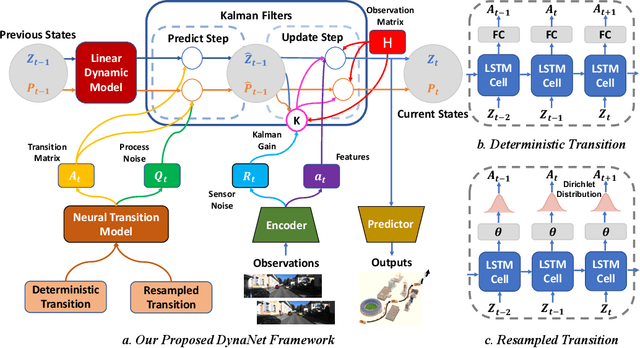

Abstract: Dynamical models estimate and predict the temporal evolution of physical systems. State Space Models (SSMs) in particular represent the system dynamics with many desirable properties, such as being able to model uncertainty in both the model and measurements, and optimal (in the Bayesian sense) recursive formulations e.g. the Kalman Filter. However, they require significant domain knowledge to derive the parametric form and considerable hand-tuning to correctly set all the parameters. Data driven techniques e.g. Recurrent Neural Networks have emerged as compelling alternatives to SSMs with wide success across a number of challenging tasks, in part due to their ability to extract relevant features from rich inputs. However, they lack interpretability and robustness to unseen conditions. In this work, we present DynaNet, a hybrid deep learning and time-varying state-space model which can be trained end-to-end. Our neural Kalman dynamical model allows us to exploit the relative merits of each approach. We demonstrate state-of-the-art estimation and prediction on a number of physically challenging tasks, including visual odometry, sensor fusion for visual-inertial navigation and pendulum control. In addition, we show how DynaNet can indicate failures through investigation of properties such as the rate of innovation (Kalman Gain).
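For readers unfamiliar with the recursive formulation mentioned above, here is a minimal sketch of one predict/update step of a textbook scalar Kalman filter. It is not DynaNet itself: the function name `kalman_step` and the fixed parameters `a`, `h`, `q`, `r` are illustrative assumptions, whereas the abstract describes a time-varying model trained end-to-end.

```python
def kalman_step(x, p, z, a=1.0, h=1.0, q=0.01, r=0.1):
    """One predict/update step of a scalar linear Kalman filter.

    x, p : previous state estimate and its variance
    z    : new measurement
    a, h : state-transition and observation coefficients (assumed fixed here)
    q, r : process and measurement noise variances (assumed fixed here)
    """
    # Predict: propagate the state and its uncertainty through the model.
    x_pred = a * x
    p_pred = a * p * a + q

    # Update: weigh the innovation (measurement residual) by the Kalman gain.
    innovation = z - h * x_pred
    s = h * p_pred * h + r          # innovation variance
    k = p_pred * h / s              # Kalman gain
    x_new = x_pred + k * innovation
    p_new = (1.0 - k * h) * p_pred
    return x_new, p_new, k


# Hypothetical noisy measurements of a roughly constant quantity.
x, p = 0.0, 1.0
for z in [1.1, 0.9, 1.05, 0.98]:
    x, p, k = kalman_step(x, p, z)
    print(f"estimate={x:.3f} variance={p:.3f} gain={k:.3f}")
```

The gain `k` returned at each step is the quantity the abstract points to as a diagnostic: a gain that stays unusually high suggests the filter is leaning on measurements because its model predictions are poor.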

Selective Sensor Fusion for Neural Visual-Inertial Odometry

Mar 04, 2019

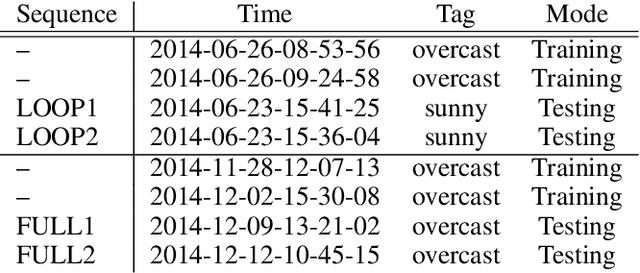

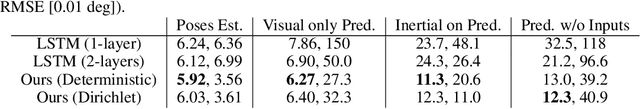

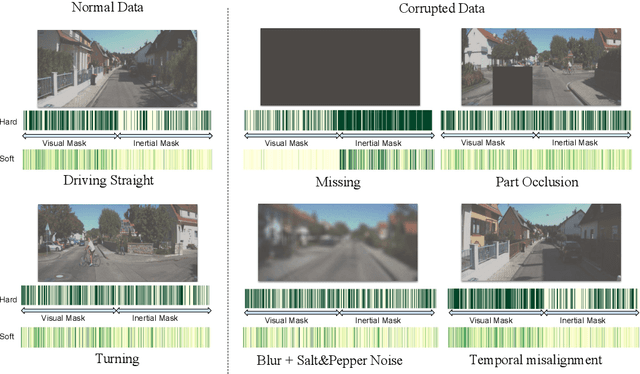

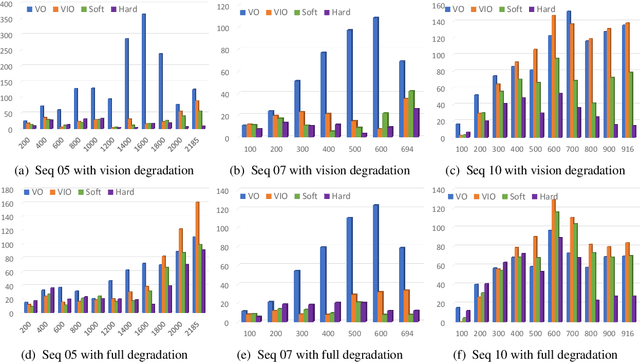

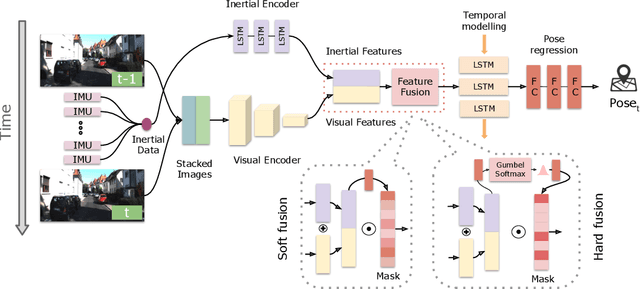

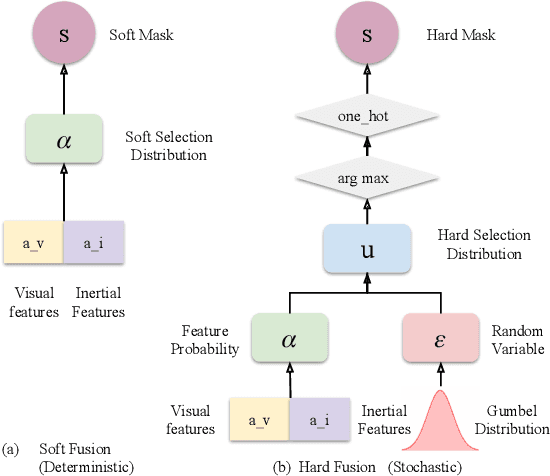

Abstract: Deep learning approaches for Visual-Inertial Odometry (VIO) have proven successful, but they rarely focus on incorporating robust fusion strategies for dealing with imperfect input sensory data. We propose a novel end-to-end selective sensor fusion framework for monocular VIO, which fuses monocular images and inertial measurements in order to estimate the trajectory whilst improving robustness to real-life issues, such as missing and corrupted data or bad sensor synchronization. In particular, we propose two fusion modalities based on different masking strategies: deterministic soft fusion and stochastic hard fusion, and we compare with previously proposed direct fusion baselines. During testing, the network is able to selectively process the features of the available sensor modalities and produce a trajectory at scale. We present a thorough investigation of the performance on three public autonomous driving, Micro Aerial Vehicle (MAV) and hand-held VIO datasets. The results demonstrate the effectiveness of the fusion strategies, which offer better performance compared to direct fusion, particularly in the presence of corrupted data. In addition, we study the interpretability of the fusion networks by visualising the masking layers in different scenarios and with varying data corruption, revealing interesting correlations between the fusion networks and imperfect sensory input data.
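The two masking strategies named in the abstract can be illustrated with a small sketch. It assumes, without confirmation from the abstract, that the mask is produced by a linear map over the concatenated visual and inertial features followed by a sigmoid; soft fusion then scales features by values in (0, 1), while hard fusion samples a binary keep/drop mask. All names, weights, and inputs are hypothetical, and the plain Bernoulli sample stands in for whatever differentiable sampling a trainable version would need.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mask_probabilities(features, weights, bias):
    """Per-feature mask values in (0, 1) from a linear layer plus sigmoid."""
    return [
        sigmoid(sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(weights, bias)
    ]


def soft_fusion(visual, inertial, weights, bias):
    """Deterministic soft fusion: re-weight each feature by a continuous mask."""
    features = visual + inertial  # concatenate the two modalities
    mask = mask_probabilities(features, weights, bias)
    return [m * f for m, f in zip(mask, features)], mask


def hard_fusion(visual, inertial, weights, bias, rng):
    """Stochastic hard fusion: keep or drop each feature via a sampled binary mask."""
    features = visual + inertial
    probs = mask_probabilities(features, weights, bias)
    mask = [1.0 if rng.random() < p else 0.0 for p in probs]
    return [m * f for m, f in zip(mask, features)], mask


# Hypothetical two-dimensional features per modality and identity weights.
visual = [0.5, -1.2]
inertial = [0.3, 0.8]
weights = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
bias = [0.0] * 4

soft_features, soft_mask = soft_fusion(visual, inertial, weights, bias)
hard_features, hard_mask = hard_fusion(visual, inertial, weights, bias, random.Random(0))
print(soft_mask)
print(hard_mask)
```

Inspecting `soft_mask` or `hard_mask` for different inputs is the kind of visualisation of masking layers that the abstract describes for interpretability.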

Learning with Stochastic Guidance for Navigation

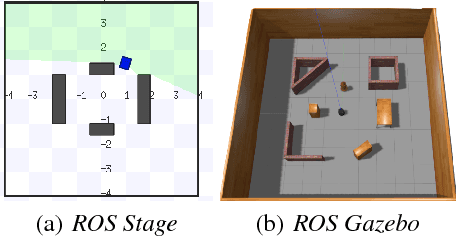

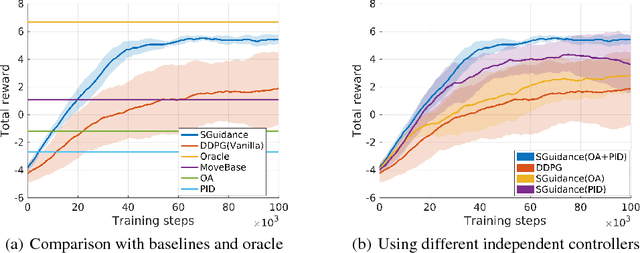

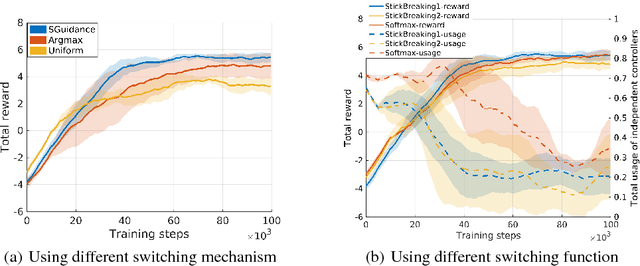

Nov 27, 2018

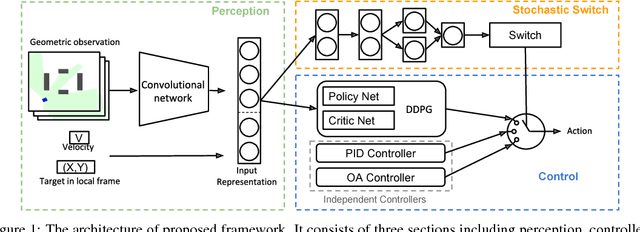

Abstract: Due to the sparse rewards and high degree of environment variation, reinforcement learning approaches such as Deep Deterministic Policy Gradient (DDPG) are plagued by issues of high variance when applied in complex real world environments. We present a new framework for overcoming these issues by incorporating a stochastic switch, allowing an agent to choose between high and low variance policies. The stochastic switch can be jointly trained with the original DDPG in the same framework. In this paper, we demonstrate the power of the framework in a navigation task, where the robot can dynamically choose to learn through exploration, or to use the output of a heuristic controller as guidance. Instead of starting from completely random moves, the navigation capability of a robot can be quickly bootstrapped by several simple independent controllers. The experimental results show that with the aid of stochastic guidance we are able to effectively and efficiently train DDPG navigation policies and achieve significantly better performance than state-of-the-art baseline models.

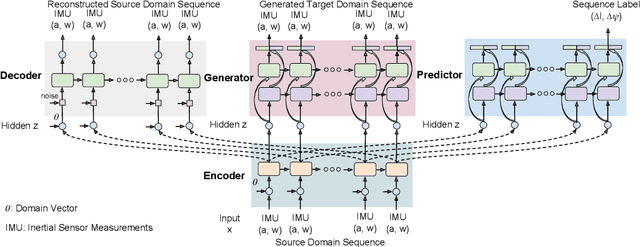

Transferring Physical Motion Between Domains for Neural Inertial Tracking

Oct 04, 2018

Abstract: Inertial information processing plays a pivotal role in ego-motion awareness for mobile agents, as inertial measurements are entirely egocentric and not environment dependent. However, they are affected greatly by changes in sensor placement/orientation or motion dynamics, and it is infeasible to collect labelled data from every domain. To overcome the challenges of domain adaptation on long sensory sequences, we propose a novel framework that extracts domain-invariant features of raw sequences from arbitrary domains, and transforms them to new domains without any paired data. Through the experiments, we demonstrate that it is able to efficiently and effectively convert the raw sequence from a new unlabelled target domain into an accurate inertial trajectory, benefiting from the physical motion knowledge transferred from the labelled source domain. We also conduct real-world experiments to show our framework can reconstruct physically meaningful trajectories from raw IMU measurements obtained with a standard mobile phone in various attachments.

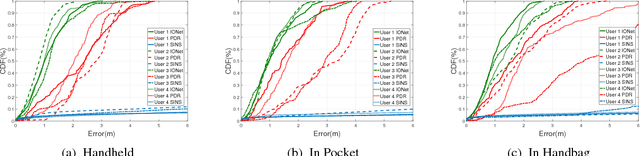

OxIOD: The Dataset for Deep Inertial Odometry

Sep 20, 2018

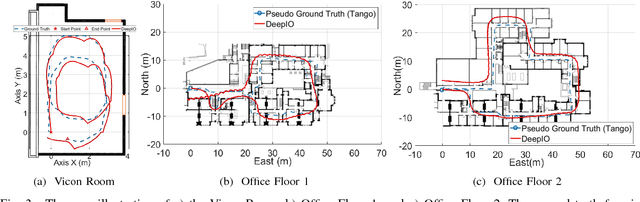

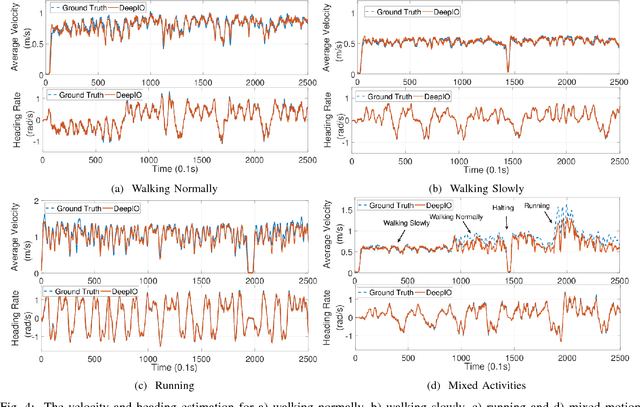

Abstract: Advances in micro-electro-mechanical systems (MEMS) techniques enable inertial measurement units (IMUs) to be small, cheap, energy efficient, and widely used in smartphones, robots, and drones. Exploiting inertial data for accurate and reliable navigation and localization has attracted significant research and industrial interest, as IMU measurements are completely ego-centric and generally environment agnostic. Recent studies have shown that the notorious issue of drift can be significantly alleviated by using deep neural networks (DNNs), e.g. IONet. However, the lack of sufficient labelled data for training and testing various architectures limits the proliferation of adopting DNNs in IMU-based tasks. In this paper, we propose and release the Oxford Inertial Odometry Dataset (OxIOD), a first-of-its-kind data collection for inertial-odometry research, with all sequences having ground-truth labels. Our dataset contains 158 sequences totalling more than 42 km, much larger than previous inertial datasets. Another notable feature of this dataset lies in its diversity, which can reflect the complex motions of phone-based IMUs in everyday usage. The measurements were collected with four different attachments (handheld, in the pocket, in the handbag and on the trolley), four motion modes (halting, walking slowly, walking normally, and running), five different users, four types of off-the-shelf consumer phones, and large-scale localization from office buildings. Deep inertial tracking experiments were conducted to show the effectiveness of our dataset in training deep neural network models and evaluate learning-based and model-based algorithms. The OxIOD Dataset is available at: http://deepio.cs.ox.ac.uk

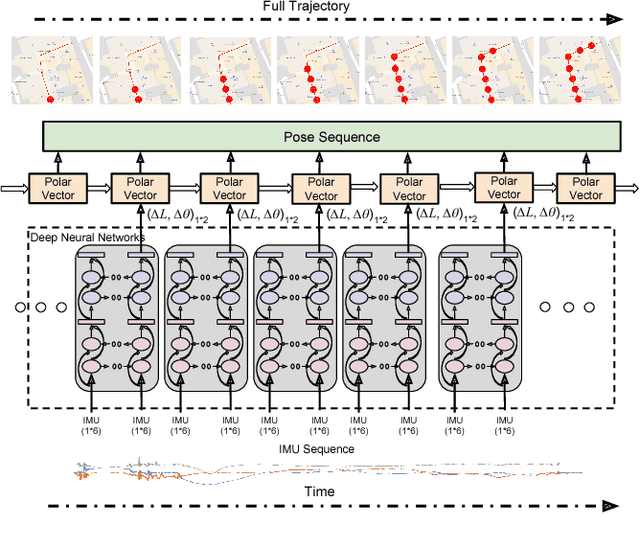

IONet: Learning to Cure the Curse of Drift in Inertial Odometry

Jan 30, 2018

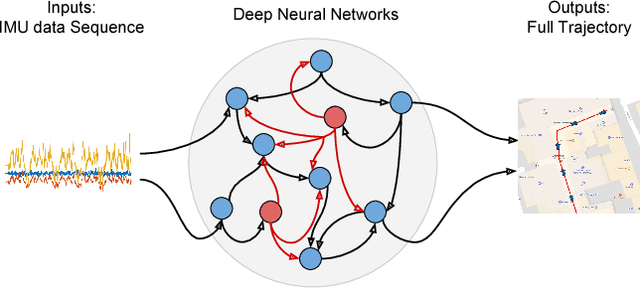

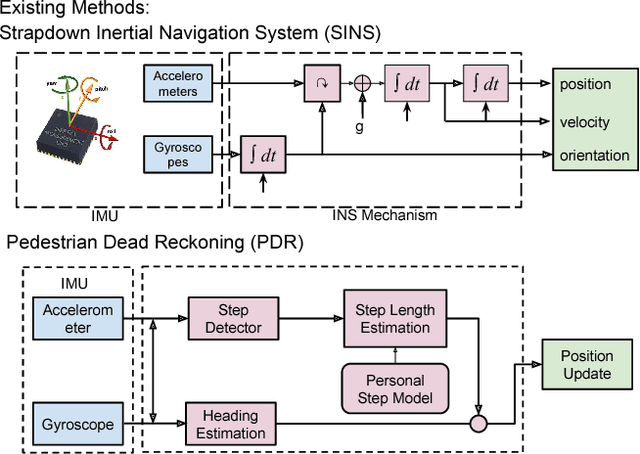

Abstract: Inertial sensors play a pivotal role in indoor localization, which in turn lays the foundation for pervasive personal applications. However, low-cost inertial sensors, as commonly found in smartphones, are plagued by bias and noise, which lead to unbounded growth in error when accelerations are double integrated to obtain displacement. Small errors in state estimation propagate to make odometry virtually unusable in a matter of seconds. We propose to break the cycle of continuous integration, and instead segment inertial data into independent windows. The challenge becomes estimating the latent states of each window, such as velocity and orientation, as these are not directly observable from sensor data. We demonstrate how to formulate this as an optimization problem, and show how deep recurrent neural networks can yield highly accurate trajectories, outperforming state-of-the-art shallow techniques, on a wide range of tests and attachments. In particular, we demonstrate that IONet can generalize to estimate odometry for non-periodic motion, such as a shopping trolley or baby-stroller, an extremely challenging task for existing techniques.
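The drift problem described above can be seen in a few lines of code. This is a sketch under stated assumptions, not part of IONet: a stationary one-axis accelerometer with a constant bias of 0.05 m/s² sampled at 100 Hz (both values hypothetical), integrated twice with a simple Euler scheme.

```python
def double_integrate(accels, dt):
    """Integrate acceleration twice (Euler) and return the position after each sample."""
    velocity = 0.0
    position = 0.0
    positions = []
    for a in accels:
        velocity += a * dt
        position += velocity * dt
        positions.append(position)
    return positions


# Hypothetical stationary sensor: the true acceleration is zero, so every
# reading consists of the bias alone and all resulting displacement is error.
bias = 0.05   # m/s^2, assumed constant
dt = 0.01     # s, i.e. 100 Hz sampling

drift_10s = double_integrate([bias] * 1000, dt)
drift_20s = double_integrate([bias] * 2000, dt)
print(drift_10s[-1], drift_20s[-1])
```

Doubling the integration time roughly quadruples the position error, since a constant bias `b` integrates to about `0.5 * b * t**2`. Resetting the integration at the start of each short window, as the abstract proposes, keeps `t` small and so bounds this term per window.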
