Bing Liu

Jack

Adapting a Language Model While Preserving its General Knowledge

Jan 21, 2023Abstract:Domain-adaptive pre-training (or DA-training for short), also known as post-training, aims to train a pre-trained general-purpose language model (LM) using an unlabeled corpus of a particular domain to adapt the LM so that end-tasks in the domain can give improved performances. However, existing DA-training methods are in some sense blind as they do not explicitly identify what knowledge in the LM should be preserved and what should be changed by the domain corpus. This paper shows that the existing methods are suboptimal and proposes a novel method to perform a more informed adaptation of the knowledge in the LM by (1) soft-masking the attention heads based on their importance to best preserve the general knowledge in the LM and (2) contrasting the representations of the general and the full (both general and domain knowledge) to learn an integrated representation with both general and domain-specific knowledge. Experimental results will demonstrate the effectiveness of the proposed approach.

Joint Spatio-Temporal Modeling for the Semantic Change Detection in Remote Sensing Images

Dec 17, 2022

Abstract:Semantic Change Detection (SCD) refers to the task of simultaneously extracting the changed areas and the semantic categories (before and after the changes) in Remote Sensing Images (RSIs). This is more meaningful than Binary Change Detection (BCD) since it enables detailed change analysis in the observed areas. Previous works established triple-branch Convolutional Neural Network (CNN) architectures as the paradigm for SCD. However, it remains challenging to exploit semantic information with a limited amount of change samples. In this work, we investigate to jointly consider the spatio-temporal dependencies to improve the accuracy of SCD. First, we propose a Semantic Change Transformer (SCanFormer) to explicitly model the 'from-to' semantic transitions between the bi-temporal RSIs. Then, we introduce a semantic learning scheme to leverage the spatio-temporal constraints, which are coherent to the SCD task, to guide the learning of semantic changes. The resulting network (SCanNet) significantly outperforms the baseline method in terms of both detection of critical semantic changes and semantic consistency in the obtained bi-temporal results. It achieves the SOTA accuracy on two benchmark datasets for the SCD.

Dependency-aware Self-training for Entity Alignment

Nov 29, 2022Abstract:Entity Alignment (EA), which aims to detect entity mappings (i.e. equivalent entity pairs) in different Knowledge Graphs (KGs), is critical for KG fusion. Neural EA methods dominate current EA research but still suffer from their reliance on labelled mappings. To solve this problem, a few works have explored boosting the training of EA models with self-training, which adds confidently predicted mappings into the training data iteratively. Though the effectiveness of self-training can be glimpsed in some specific settings, we still have very limited knowledge about it. One reason is the existing works concentrate on devising EA models and only treat self-training as an auxiliary tool. To fill this knowledge gap, we change the perspective to self-training to shed light on it. In addition, the existing self-training strategies have limited impact because they introduce either much False Positive noise or a low quantity of True Positive pseudo mappings. To improve self-training for EA, we propose exploiting the dependencies between entities, a particularity of EA, to suppress the noise without hurting the recall of True Positive mappings. Through extensive experiments, we show that the introduction of dependency makes the self-training strategy for EA reach a new level. The value of self-training in alleviating the reliance on annotation is actually much higher than what has been realised. Furthermore, we suggest future study on smart data annotation to break the ceiling of EA performance.

Guiding Neural Entity Alignment with Compatibility

Nov 29, 2022

Abstract:Entity Alignment (EA) aims to find equivalent entities between two Knowledge Graphs (KGs). While numerous neural EA models have been devised, they are mainly learned using labelled data only. In this work, we argue that different entities within one KG should have compatible counterparts in the other KG due to the potential dependencies among the entities. Making compatible predictions thus should be one of the goals of training an EA model along with fitting the labelled data: this aspect however is neglected in current methods. To power neural EA models with compatibility, we devise a training framework by addressing three problems: (1) how to measure the compatibility of an EA model; (2) how to inject the property of being compatible into an EA model; (3) how to optimise parameters of the compatibility model. Extensive experiments on widely-used datasets demonstrate the advantages of integrating compatibility within EA models. In fact, state-of-the-art neural EA models trained within our framework using just 5\% of the labelled data can achieve comparable effectiveness with supervised training using 20\% of the labelled data.

Continual Learning of Natural Language Processing Tasks: A Survey

Nov 23, 2022Abstract:Continual learning (CL) is an emerging learning paradigm that aims to emulate the human capability of learning and accumulating knowledge continually without forgetting the previously learned knowledge and also transferring the knowledge to new tasks to learn them better. This survey presents a comprehensive review of the recent progress of CL in the NLP field. It covers (1) all CL settings with a taxonomy of existing techniques. Besides dealing with forgetting, it also focuses on (2) knowledge transfer, which is of particular importance to NLP. Both (1) and (2) are not mentioned in the existing survey. Finally, a list of future directions is also discussed.

Lifelong and Continual Learning Dialogue Systems

Nov 12, 2022

Abstract:Dialogue systems, commonly known as chatbots, have gained escalating popularity in recent times due to their wide-spread applications in carrying out chit-chat conversations with users and task-oriented dialogues to accomplish various user tasks. Existing chatbots are usually trained from pre-collected and manually-labeled data and/or written with handcrafted rules. Many also use manually-compiled knowledge bases (KBs). Their ability to understand natural language is still limited, and they tend to produce many errors resulting in poor user satisfaction. Typically, they need to be constantly improved by engineers with more labeled data and more manually compiled knowledge. This book introduces the new paradigm of lifelong learning dialogue systems to endow chatbots the ability to learn continually by themselves through their own self-initiated interactions with their users and working environments to improve themselves. As the systems chat more and more with users or learn more and more from external sources, they become more and more knowledgeable and better and better at conversing. The book presents the latest developments and techniques for building such continual learning dialogue systems that continuously learn new language expressions and lexical and factual knowledge during conversation from users and off conversation from external sources, acquire new training examples during conversation, and learn conversational skills. Apart from these general topics, existing works on continual learning of some specific aspects of dialogue systems are also surveyed. The book concludes with a discussion of open challenges for future research.

A Theoretical Study on Solving Continual Learning

Nov 04, 2022

Abstract:Continual learning (CL) learns a sequence of tasks incrementally. There are two popular CL settings, class incremental learning (CIL) and task incremental learning (TIL). A major challenge of CL is catastrophic forgetting (CF). While a number of techniques are already available to effectively overcome CF for TIL, CIL remains to be highly challenging. So far, little theoretical study has been done to provide a principled guidance on how to solve the CIL problem. This paper performs such a study. It first shows that probabilistically, the CIL problem can be decomposed into two sub-problems: Within-task Prediction (WP) and Task-id Prediction (TP). It further proves that TP is correlated with out-of-distribution (OOD) detection, which connects CIL and OOD detection. The key conclusion of this study is that regardless of whether WP and TP or OOD detection are defined explicitly or implicitly by a CIL algorithm, good WP and good TP or OOD detection are necessary and sufficient for good CIL performances. Additionally, TIL is simply WP. Based on the theoretical result, new CIL methods are also designed, which outperform strong baselines in both CIL and TIL settings by a large margin.

Semantic Novelty Detection and Characterization in Factual Text Involving Named Entities

Oct 31, 2022

Abstract:Much of the existing work on text novelty detection has been studied at the topic level, i.e., identifying whether the topic of a document or a sentence is novel or not. Little work has been done at the fine-grained semantic level (or contextual level). For example, given that we know Elon Musk is the CEO of a technology company, the sentence "Elon Musk acted in the sitcom The Big Bang Theory" is novel and surprising because normally a CEO would not be an actor. Existing topic-based novelty detection methods work poorly on this problem because they do not perform semantic reasoning involving relations between named entities in the text and their background knowledge. This paper proposes an effective model (called PAT-SND) to solve the problem, which can also characterize the novelty. An annotated dataset is also created. Evaluation shows that PAT-SND outperforms 10 baselines by large margins.

Knowledge-Guided Exploration in Deep Reinforcement Learning

Oct 26, 2022

Abstract:This paper proposes a new method to drastically speed up deep reinforcement learning (deep RL) training for problems that have the property of state-action permissibility (SAP). Two types of permissibility are defined under SAP. The first type says that after an action $a_t$ is performed in a state $s_t$ and the agent has reached the new state $s_{t+1}$, the agent can decide whether $a_t$ is permissible or not permissible in $s_t$. The second type says that even without performing $a_t$ in $s_t$, the agent can already decide whether $a_t$ is permissible or not in $s_t$. An action is not permissible in a state if the action can never lead to an optimal solution and thus should not be tried (over and over again). We incorporate the proposed SAP property and encode action permissibility knowledge into two state-of-the-art deep RL algorithms to guide their state-action exploration together with a virtual stopping strategy. Results show that the SAP-based guidance can markedly speed up RL training.

Continual Training of Language Models for Few-Shot Learning

Oct 11, 2022

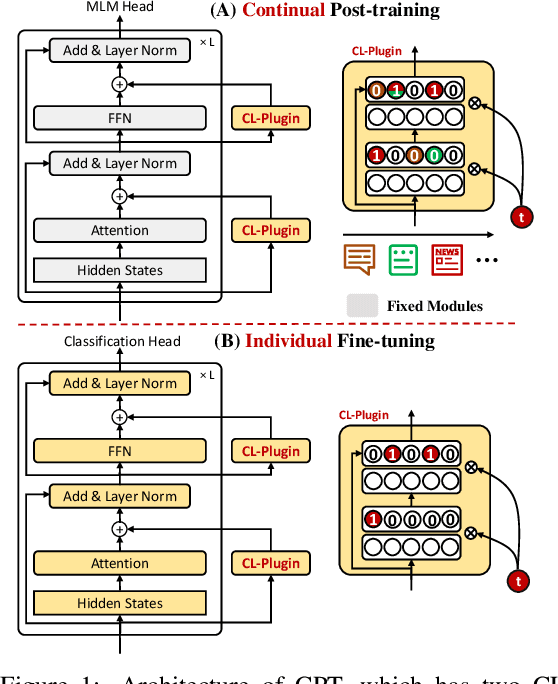

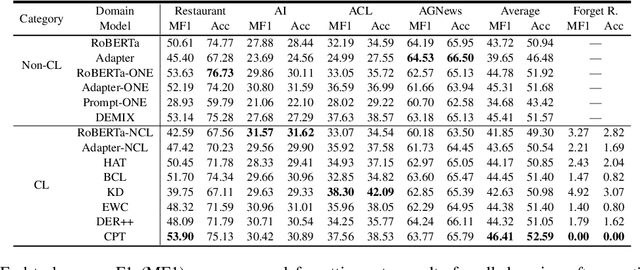

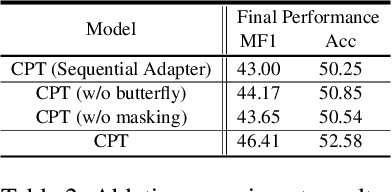

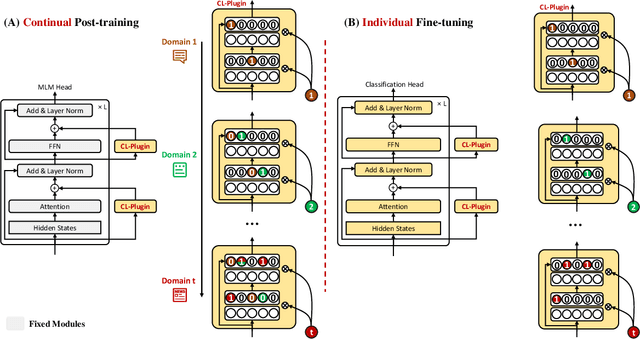

Abstract:Recent work on applying large language models (LMs) achieves impressive performance in many NLP applications. Adapting or posttraining an LM using an unlabeled domain corpus can produce even better performance for end-tasks in the domain. This paper proposes the problem of continually extending an LM by incrementally post-train the LM with a sequence of unlabeled domain corpora to expand its knowledge without forgetting its previous skills. The goal is to improve the few-shot end-task learning in these domains. The resulting system is called CPT (Continual PostTraining), which to our knowledge, is the first continual post-training system. Experimental results verify its effectiveness.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge