Beng Chin Ooi

Skellam Mixture Mechanism: a Novel Approach to Federated Learning with Differential Privacy

Dec 08, 2022

Abstract: Deep neural networks have strong capabilities of memorizing the underlying training data, which can be a serious privacy concern. An effective solution to this problem is to train models with differential privacy (DP), which provides rigorous privacy guarantees by injecting random noise into the gradients. This paper focuses on the scenario where sensitive data are distributed among multiple participants, who jointly train a model through federated learning (FL), using both secure multiparty computation (MPC) to ensure the confidentiality of each gradient update, and differential privacy to avoid data leakage in the resulting model. A major challenge in this setting is that common mechanisms for enforcing DP in deep learning, which inject real-valued noise, are fundamentally incompatible with MPC, which exchanges finite-field integers among the participants. Consequently, most existing DP mechanisms require rather high noise levels, leading to poor model utility. Motivated by this, we propose the Skellam mixture mechanism (SMM), an approach to enforce DP on models built via FL. Compared to existing methods, SMM eliminates the assumption that the input gradients must be integer-valued and thus reduces the amount of noise injected to preserve DP. Further, SMM allows tight privacy accounting due to the favorable composition and sub-sampling properties of the Skellam distribution, which are key to accurate deep learning with DP. The theoretical analysis of SMM is highly non-trivial, especially considering (i) the complicated math of differentially private deep learning in general and (ii) the fact that the mixture of two Skellam distributions is rather complex and, to our knowledge, has not been studied in the DP literature. Extensive experiments on various practical settings demonstrate that SMM consistently and significantly outperforms existing solutions in terms of the utility of the resulting model.
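
To make the integer-noise idea concrete, here is a minimal sketch of adding Skellam noise to a quantized gradient. It is not the paper's SMM: the stochastic rounding step, the `scale` and `mu` parameters, and all function names are assumptions chosen for illustration. The only fact relied on is that a Skellam variable is the difference of two independent Poisson variables, so the noise stays integer-valued.

```python
import math
import random


def sample_poisson(mu, rng):
    """Draw one Poisson(mu) sample with Knuth's method (suitable for small mu)."""
    threshold = math.exp(-mu)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def sample_skellam(mu, rng):
    """Symmetric Skellam noise: difference of two independent Poisson(mu) draws."""
    return sample_poisson(mu, rng) - sample_poisson(mu, rng)


def stochastic_round(x, rng):
    """Round x up with probability equal to its fractional part (unbiased)."""
    floor = math.floor(x)
    return floor + (1 if rng.random() < x - floor else 0)


def noisy_integer_gradient(gradient, scale, mu, rng):
    """Hypothetical helper: scale, round to integers, then add Skellam noise."""
    return [stochastic_round(g * scale, rng) + sample_skellam(mu, rng)
            for g in gradient]


rng = random.Random(0)
print(noisy_integer_gradient([0.1, -0.5, 0.25], scale=100, mu=4.0, rng=rng))
```

Because every output coordinate is an integer, such values could in principle be summed inside a finite-field MPC protocol. The privacy accounting that the abstract refers to is not covered by this sketch.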

Sense The Physical, Walkthrough The Virtual, Manage The Metaverse: A Data-centric Perspective

Jun 14, 2022

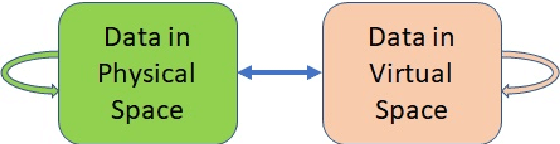

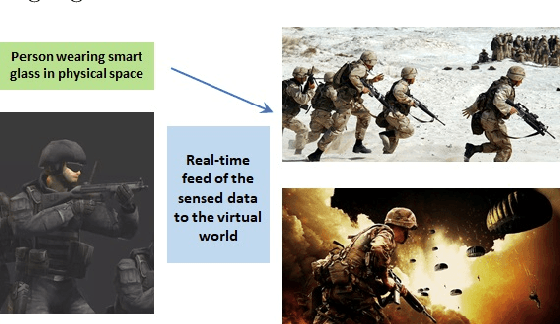

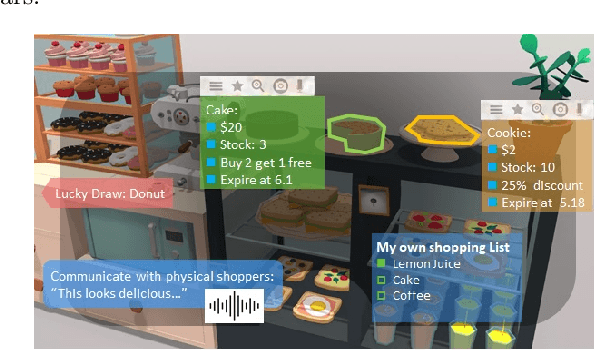

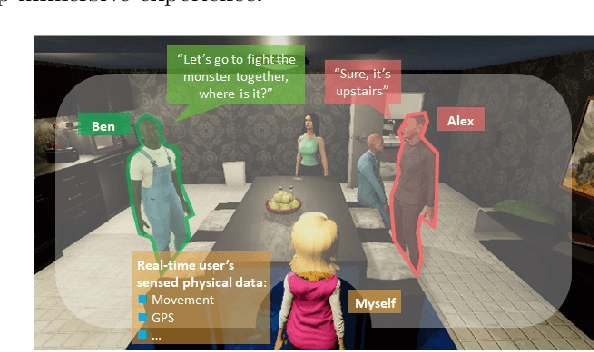

Abstract: In the Metaverse, the physical space and the virtual space co-exist and interact simultaneously. While the physical space is virtually enhanced with information, the virtual space is continuously refreshed with real-time, real-world information. To allow users to process and manipulate information seamlessly between the real and digital spaces, novel technologies must be developed. These include smart interfaces, new augmented realities, and efficient storage, data management, and dissemination techniques. In this paper, we first discuss some promising co-space applications. These applications offer experiences and opportunities that neither of the spaces can realize on its own. We then argue that the database community has much to offer to this field. Finally, we present several challenges that we, as a community, can contribute towards managing the Metaverse.

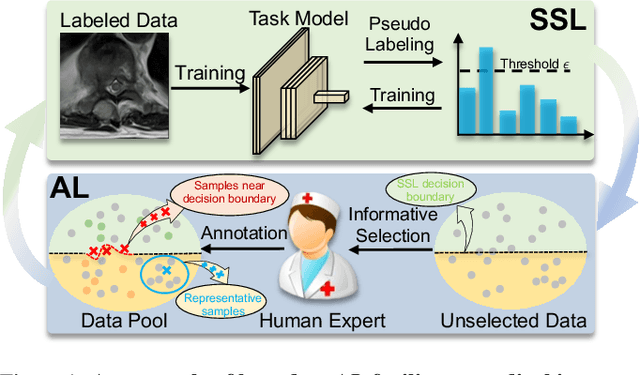

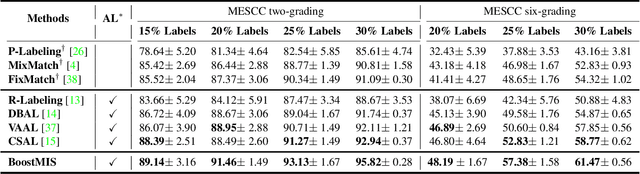

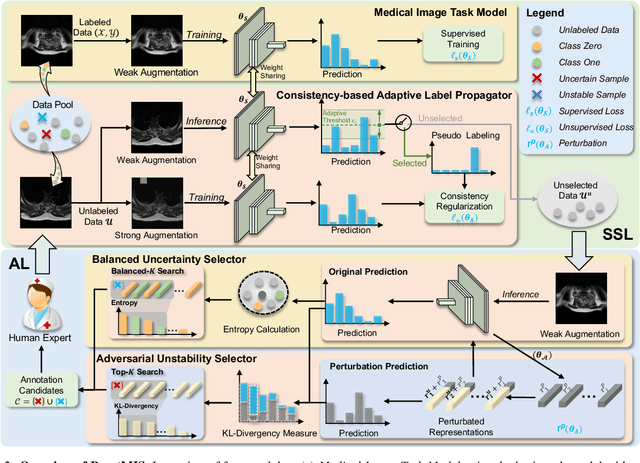

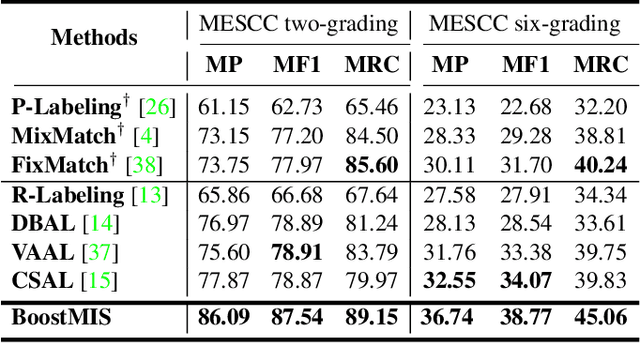

BoostMIS: Boosting Medical Image Semi-supervised Learning with Adaptive Pseudo Labeling and Informative Active Annotation

Mar 21, 2022

Abstract: In this paper, we propose a novel semi-supervised learning (SSL) framework named BoostMIS that combines adaptive pseudo labeling and informative active annotation to unleash the potential of medical image SSL models: (1) BoostMIS can adaptively leverage the cluster assumption and consistency regularization of the unlabeled data according to the current learning status. This strategy can adaptively generate one-hot "hard" labels converted from task model predictions for better task model training. (2) For the unselected unlabeled images with low confidence, we introduce an active learning (AL) algorithm to find the informative samples as the annotation candidates by exploiting virtual adversarial perturbation and the model's density-aware entropy. These informative candidates are subsequently fed into the next training cycle for better SSL label propagation. Notably, adaptive pseudo labeling and informative active annotation form a closed learning loop in which the two components collaborate to boost medical image SSL. To verify the effectiveness of the proposed method, we collected a metastatic epidural spinal cord compression (MESCC) dataset that aims to optimize MESCC diagnosis and classification for improved specialist referral and treatment. We conducted an extensive experimental study of BoostMIS on MESCC and another public dataset, COVIDx. The experimental results verify our framework's effectiveness and generalisability for different medical image datasets with a significant improvement over various state-of-the-art methods.

* 11 pages

NASI: Label- and Data-agnostic Neural Architecture Search at Initialization

Sep 02, 2021

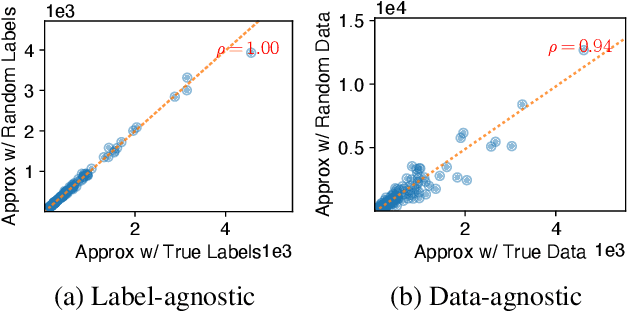

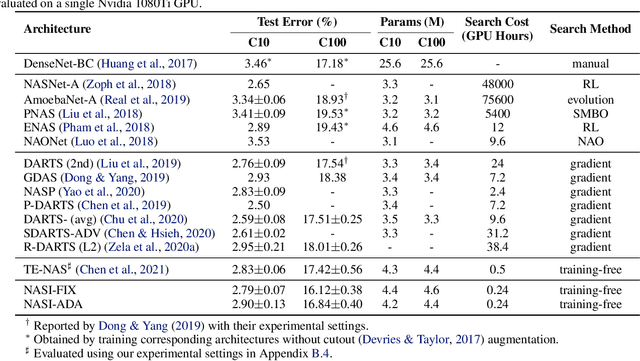

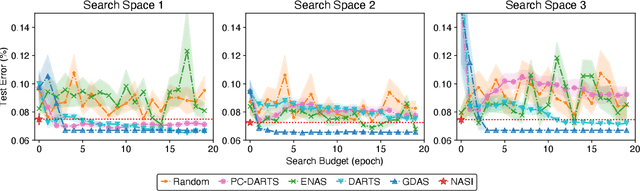

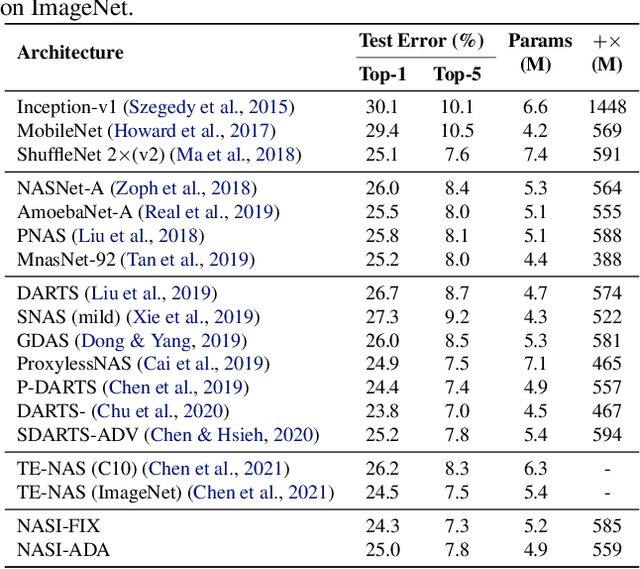

Abstract: Recent years have witnessed a surging interest in Neural Architecture Search (NAS). Various algorithms have been proposed to improve the search efficiency and effectiveness of NAS, i.e., to reduce the search cost and improve the generalization performance of the selected architectures, respectively. However, the search efficiency of these algorithms is severely limited by the need for model training during the search process. To overcome this limitation, we propose a novel NAS algorithm called NAS at Initialization (NASI) that exploits the ability of the Neural Tangent Kernel to characterize the converged performance of candidate architectures at initialization, hence allowing model training to be completely avoided to boost the search efficiency. Besides the improved search efficiency, NASI also achieves competitive search effectiveness on various datasets like CIFAR-10/100 and ImageNet. Further, NASI is shown to be label- and data-agnostic under mild conditions, which guarantees the transferability of architectures selected by NASI over different datasets.

SINGA-Easy: An Easy-to-Use Framework for MultiModal Analysis

Aug 03, 2021

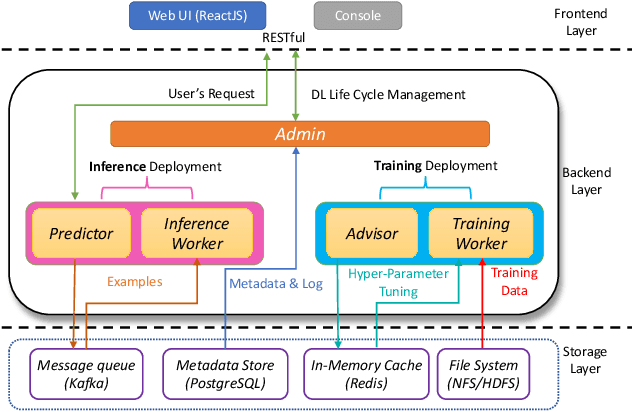

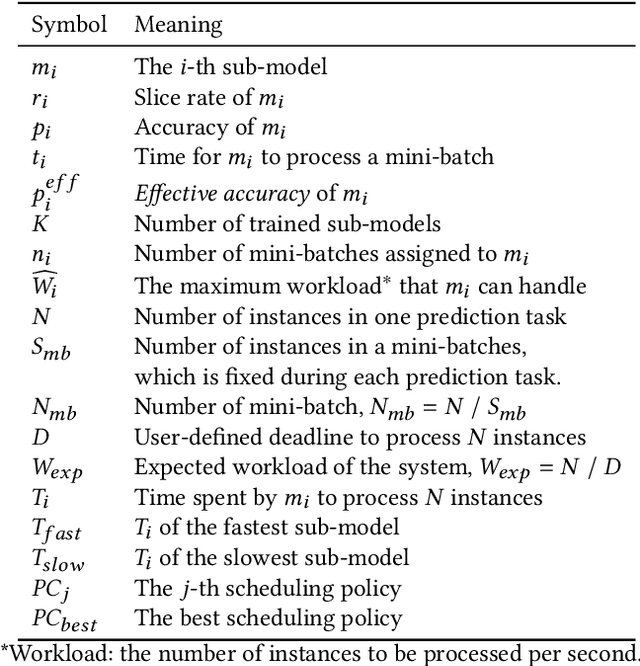

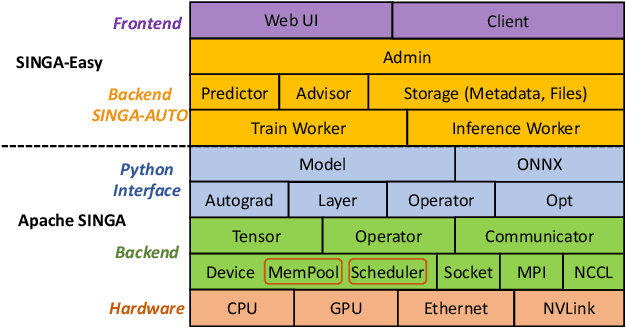

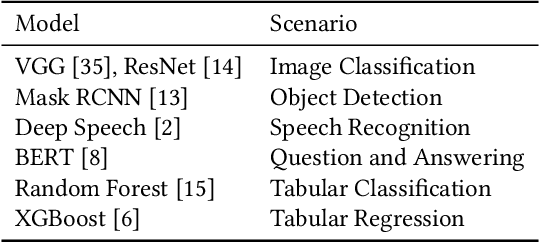

Abstract: Deep learning has achieved great success in a wide spectrum of multimedia applications such as image classification, natural language processing and multimodal data analysis. Recent years have seen the development of many deep learning frameworks that provide a high-level programming interface for users to design models, conduct training and deploy inference. However, it remains challenging to build an efficient end-to-end multimedia application with most existing frameworks. Specifically, in terms of usability, it is demanding for non-experts to implement deep learning models, obtain the right settings for the entire machine learning pipeline, manage models and datasets, and exploit external data sources all together. Further, in terms of adaptability, elastic computation solutions are much needed as the actual serving workload fluctuates constantly, and scaling the hardware resources to handle the fluctuating workload is typically infeasible. To address these challenges, we introduce SINGA-Easy, a new deep learning framework that provides distributed hyper-parameter tuning at the training stage, dynamic computational cost control at the inference stage, and intuitive user interactions with multimedia contents facilitated by model explanation. Our experiments on the training and deployment of multi-modality data analysis applications show that the framework is both usable and adaptable to dynamic inference loads. We implement SINGA-Easy on top of Apache SINGA and demonstrate our system with the entire machine learning life cycle.

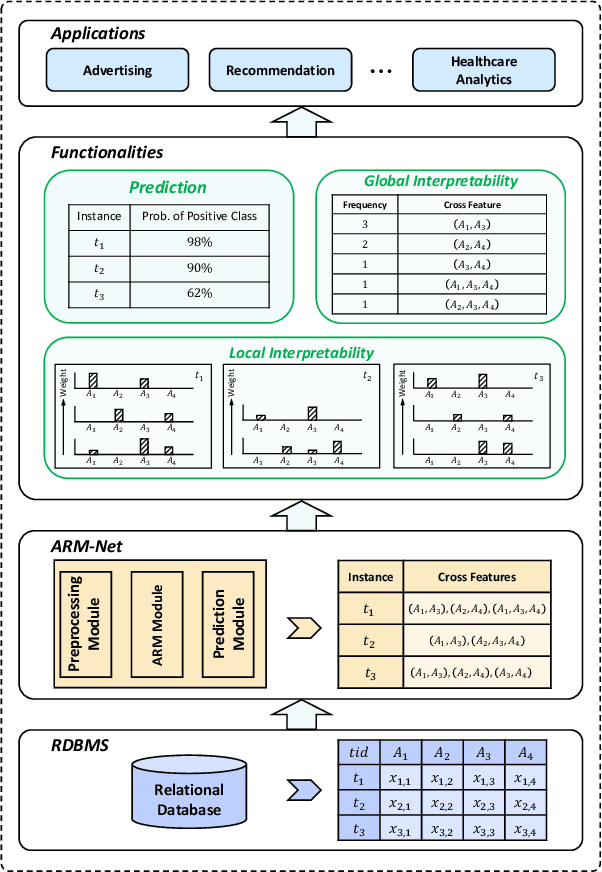

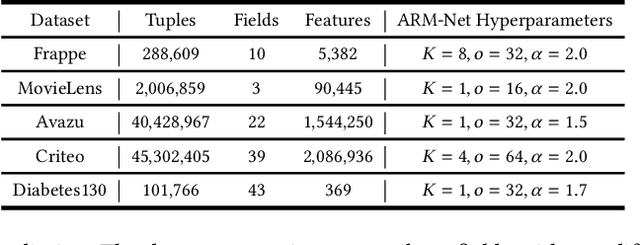

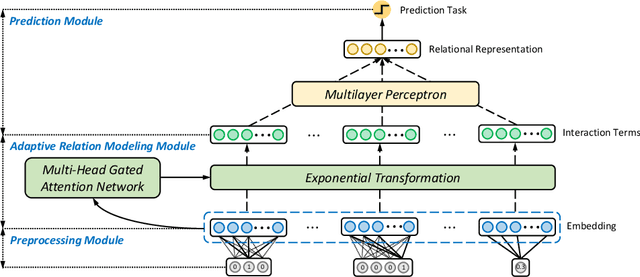

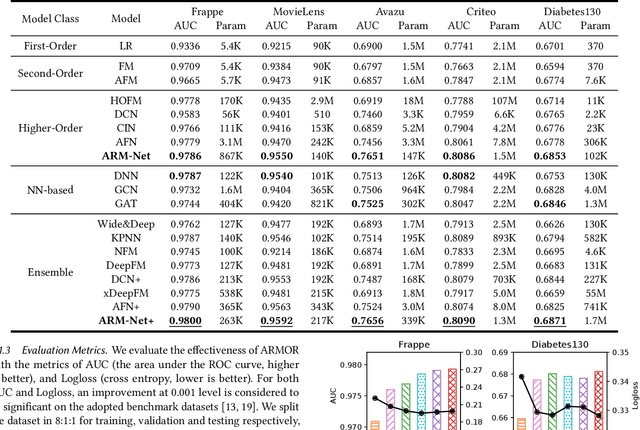

ARM-Net: Adaptive Relation Modeling Network for Structured Data

Jul 05, 2021

Abstract: Relational databases are the de facto standard for storing and querying structured data, and extracting insights from structured data requires advanced analytics. Deep neural networks (DNNs) have achieved super-human prediction performance on particular data types, e.g., images. However, existing DNNs may not produce meaningful results when applied to structured data. The reason is that there are correlations and dependencies across combinations of attribute values in a table, and these do not follow simple additive patterns that can be easily mimicked by a DNN. The number of possible such cross features is combinatorial, making them computationally prohibitive to model. Furthermore, the deployment of learning models in real-world applications has also highlighted the need for interpretability, especially for high-stakes applications, which remains another issue of concern for DNNs. In this paper, we present ARM-Net, an adaptive relation modeling network tailored for structured data, and a lightweight framework ARMOR based on ARM-Net for relational data analytics. The key idea is to model feature interactions with cross features selectively and dynamically, by first transforming the input features into exponential space, and then determining the interaction order and interaction weights adaptively for each cross feature. We propose a novel sparse attention mechanism to dynamically generate the interaction weights given the input tuple, so that we can explicitly model cross features of arbitrary orders with noisy features filtered selectively. Then during model inference, ARM-Net can specify the cross features being used for each prediction for higher accuracy and better interpretability. Our extensive experiments on real-world datasets demonstrate that ARM-Net consistently outperforms existing models and provides more interpretable predictions for data-driven decision making.
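
The exponential-space idea can be illustrated with a scalar toy example. This is a sketch under stated assumptions, not ARM-Net itself: the function name `cross_feature` is hypothetical, features are assumed to be strictly positive scalars rather than learned embeddings, and the weights are set by hand instead of being generated by sparse attention. It relies only on the identity exp(sum_i w_i ln x_i) = prod_i x_i^{w_i}, so a weight of zero drops a feature from the interaction and the nonzero weights determine the interaction order.

```python
import math


def cross_feature(features, weights):
    """Weighted multiplicative interaction computed in log/exp space.

    Assumes every feature with a nonzero weight is strictly positive.
    A zero weight filters the corresponding feature out of the product.
    """
    log_sum = sum(w * math.log(x)
                  for x, w in zip(features, weights) if w != 0.0)
    return math.exp(log_sum)


# Sparse weights: the third feature is filtered out, giving 2^1 * 3^2.
value = cross_feature([2.0, 3.0, 5.0], [1.0, 2.0, 0.0])
print(round(value, 6))
```

Working in log space turns a product of powers into a weighted sum, which is why a single set of weights can express cross features of different orders.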

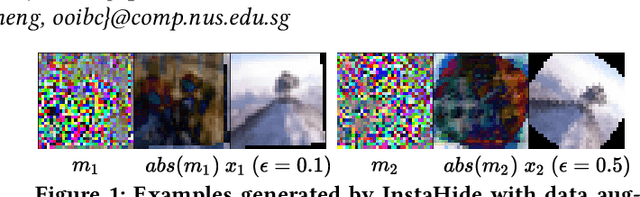

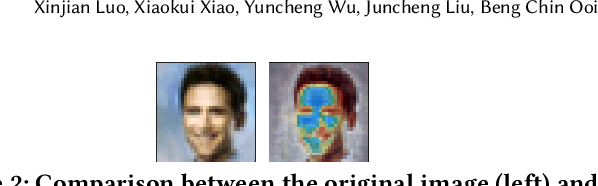

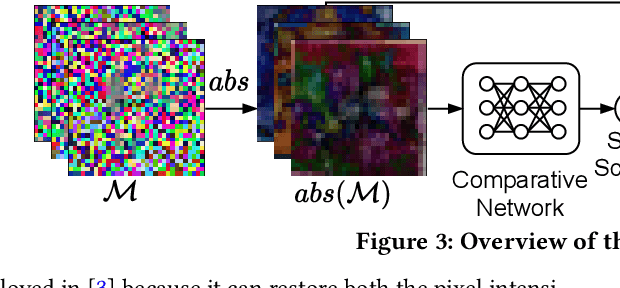

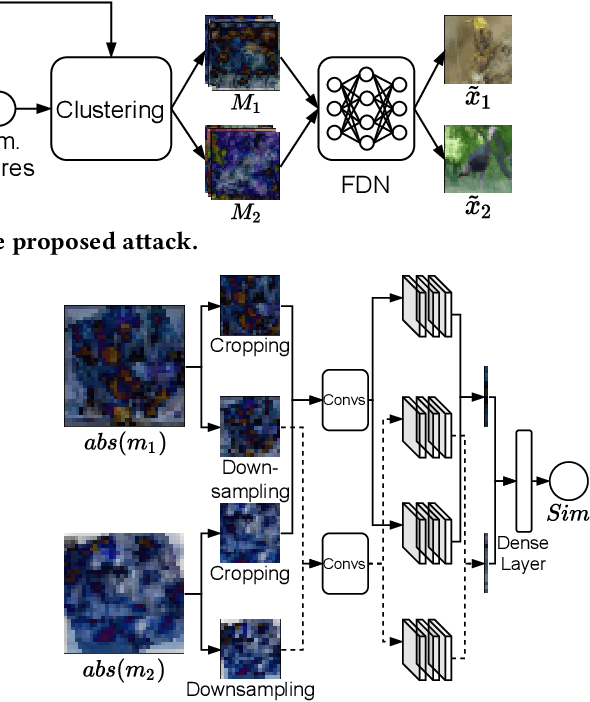

A Fusion-Denoising Attack on InstaHide with Data Augmentation

May 17, 2021

Abstract: InstaHide is a state-of-the-art mechanism for protecting private training images in collaborative learning. It works by mixing multiple private images and modifying them in such a way that their visual features are no longer distinguishable to the naked eye, without significantly degrading the accuracy of training. In recent work, however, Carlini et al. show that it is possible to reconstruct private images from the encrypted dataset generated by InstaHide, by exploiting the correlations among the encrypted images. Nevertheless, Carlini et al.'s attack relies on the assumption that each private image is used without modification when mixed with other private images. As a consequence, it could be easily defeated by incorporating data augmentation into InstaHide. This leads to a natural question: is InstaHide with data augmentation secure? This paper provides a negative answer to the above question, by presenting an attack for recovering private images from the outputs of InstaHide even when data augmentation is present. The basic idea of our attack is to use a comparative network to identify encrypted images that are likely to correspond to the same private image, and then employ a fusion-denoising network for restoring the private image from the encrypted ones, taking into account the effects of data augmentation. Extensive experiments demonstrate the effectiveness of the proposed attack in comparison to Carlini et al.'s attack.

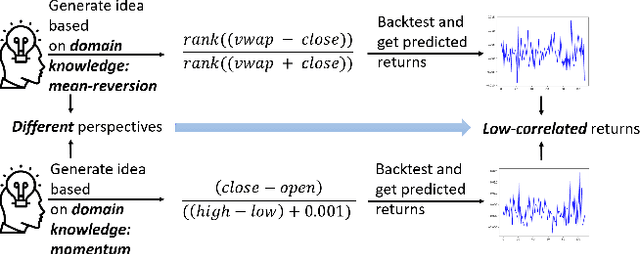

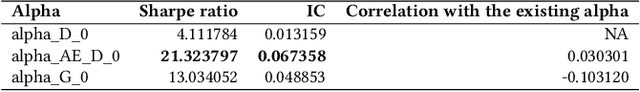

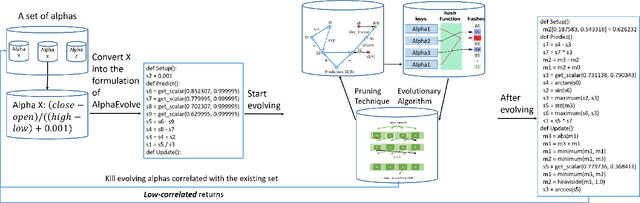

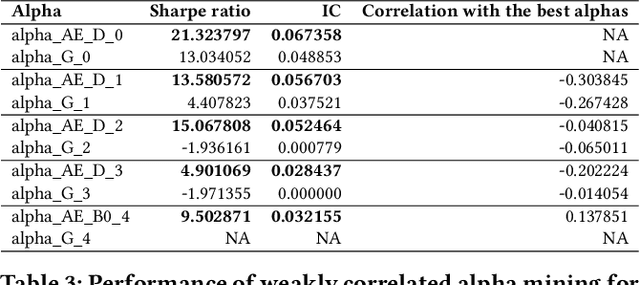

AlphaEvolve: A Learning Framework to Discover Novel Alphas in Quantitative Investment

Apr 01, 2021

Abstract: Alphas are stock prediction models capturing trading signals in a stock market. A set of effective alphas can generate weakly correlated high returns to diversify the risk. Existing alphas can be categorized into two classes: Formulaic alphas are simple algebraic expressions of scalar features, and thus can generalize well and be mined into a weakly correlated set. Machine learning alphas are data-driven models over vector and matrix features. They are more predictive than formulaic alphas, but are too complex to mine into a weakly correlated set. In this paper, we introduce a new class of alphas to model scalar, vector, and matrix features which possess the strengths of these two existing classes. The new alphas predict returns with high accuracy and can be mined into a weakly correlated set. In addition, we propose a novel alpha mining framework based on AutoML, called AlphaEvolve, to generate the new alphas. To this end, we first propose operators for generating the new alphas and selectively injecting relational domain knowledge to model the relations between stocks. We then accelerate the alpha mining by proposing a pruning technique for redundant alphas. Experiments show that AlphaEvolve can evolve initial alphas into the new alphas with high returns and weak correlations.

Serverless Model Serving for Data Science

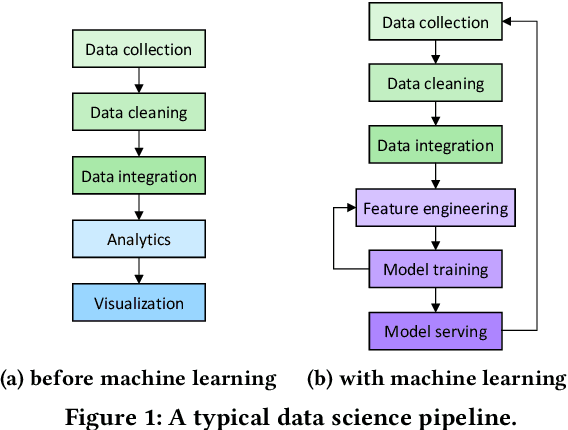

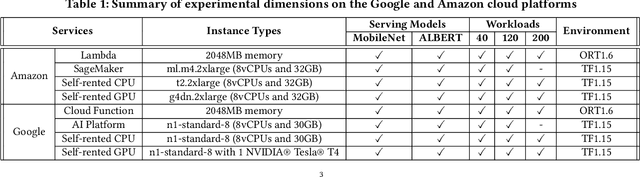

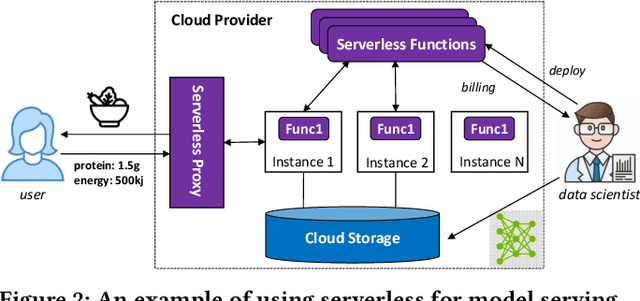

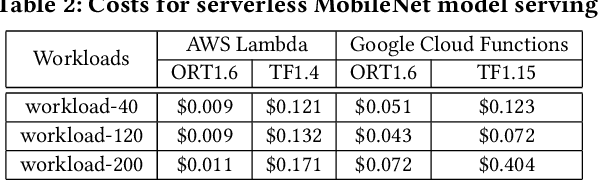

Mar 04, 2021

Abstract: Machine learning (ML) is an important part of modern data science applications. Data scientists today have to manage the end-to-end ML life cycle that includes both model training and model serving, the latter of which is essential, as it makes their work available to end-users. Systems for model serving require high performance, low cost, and ease of management. Cloud providers are already offering model serving options, including managed services and self-rented servers. Recently, serverless computing, whose advantages include high elasticity and a fine-grained cost model, brings another possibility for model serving. In this paper, we study the viability of serverless as a mainstream model serving platform for data science applications. We conduct a comprehensive evaluation of the performance and cost of serverless against other model serving systems on two clouds: Amazon Web Services (AWS) and Google Cloud Platform (GCP). We find that serverless outperforms many cloud-based alternatives with respect to cost and performance. More interestingly, under some circumstances, it can even outperform GPU-based systems for both average latency and cost. These results differ from claims in previous work that serverless is not suitable for model serving, and are contrary to the conventional wisdom that GPU-based systems are better for ML workloads than CPU-based systems. Other findings include a large gap in cold start time between AWS and GCP serverless functions, and the low sensitivity of serverless to changes in workloads or models. Our evaluation results indicate that serverless is a viable option for model serving. Finally, we present several practical recommendations for data scientists on how to use serverless for scalable and cost-effective model serving.

Feature Inference Attack on Model Predictions in Vertical Federated Learning

Oct 20, 2020

Abstract: Federated learning (FL) is an emerging paradigm for facilitating multiple organizations' data collaboration without revealing their private data to each other. Recently, vertical FL, where the participating organizations hold the same set of samples but with disjoint features and only one organization owns the labels, has received increased attention. This paper presents several feature inference attack methods to investigate the potential privacy leakages in the model prediction stage of vertical FL. The attack methods consider the most stringent setting, in which the adversary controls only the trained vertical FL model and the model predictions, relying on no background information. We first propose two specific attacks on the logistic regression (LR) and decision tree (DT) models, based on individual prediction outputs. We further design a general attack method based on multiple prediction outputs accumulated by the adversary to handle complex models, such as neural networks (NN) and random forest (RF) models. Experimental evaluations demonstrate the effectiveness of the proposed attacks and highlight the need for designing private mechanisms to protect the prediction outputs in vertical FL.
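
A small worked example shows why a single logistic regression prediction can leak a feature. This is a sketch under simplifying assumptions and is not taken from the paper: it assumes a binary LR model whose weights and bias the adversary knows, exactly one unknown feature with a nonzero weight, and an exact (unrounded) confidence score. The function names are hypothetical. Under those assumptions the logit of the prediction is a linear equation in the unknown feature, which can be solved directly.

```python
import math


def sigmoid(z):
    """Logistic function used by a binary LR model."""
    return 1.0 / (1.0 + math.exp(-z))


def infer_unknown_feature(prob, weights, bias, known):
    """Solve logit(prob) = bias + sum_i w_i * x_i for the single missing x_i.

    `known` maps feature index -> value for every feature the adversary holds.
    """
    missing = [i for i in range(len(weights)) if i not in known]
    if len(missing) != 1:
        raise ValueError("this sketch handles exactly one unknown feature")
    logit = math.log(prob / (1.0 - prob))
    partial = bias + sum(weights[i] * value for i, value in known.items())
    return (logit - partial) / weights[missing[0]]


# Toy model: the adversary holds features 0 and 1; feature 2 is private.
weights, bias = [0.5, -1.2, 2.0], 0.1
sample = [1.0, 0.3, -0.7]
prob = sigmoid(bias + sum(w * x for w, x in zip(weights, sample)))
print(infer_unknown_feature(prob, weights, bias, {0: 1.0, 1: 0.3}))
```

With more than one unknown feature, a single binary prediction gives one equation in several unknowns, so the system is underdetermined and this direct inversion no longer applies.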
