Learning in Sinusoidal Spaces with Physics-Informed Neural Networks

Sep 20, 2021 · Jian Cheng Wong, Chinchun Ooi, Abhishek Gupta, Yew-Soon Ong

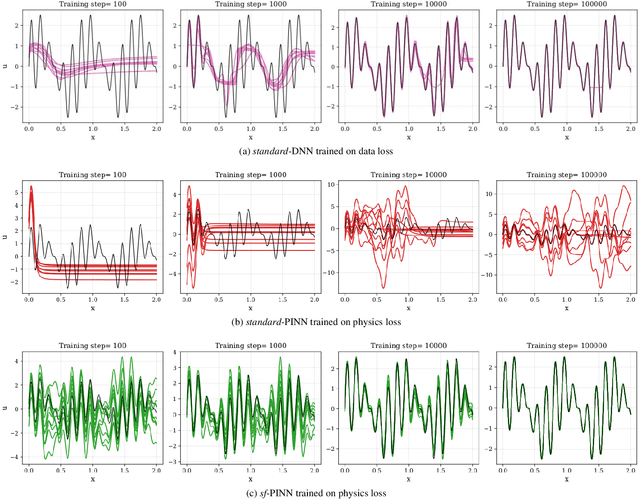

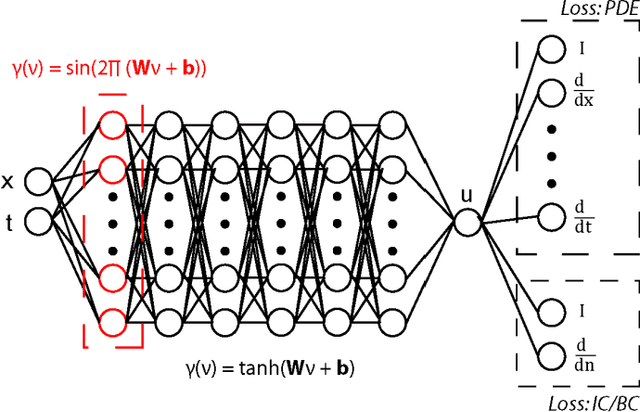

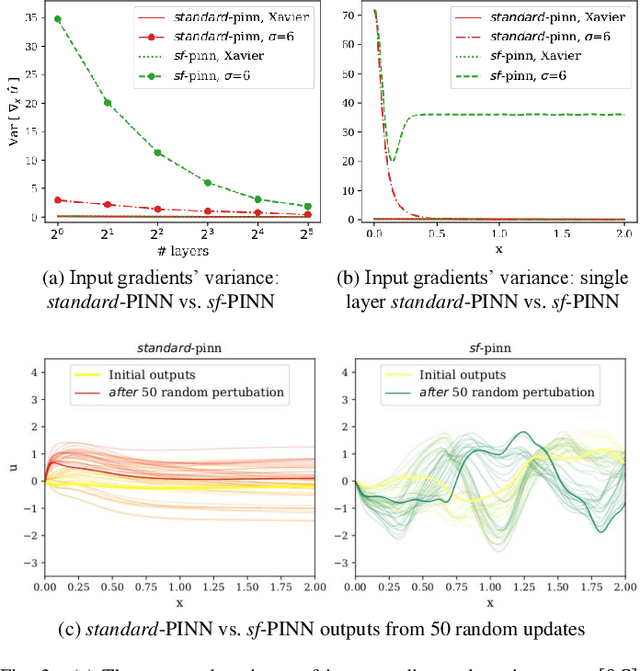

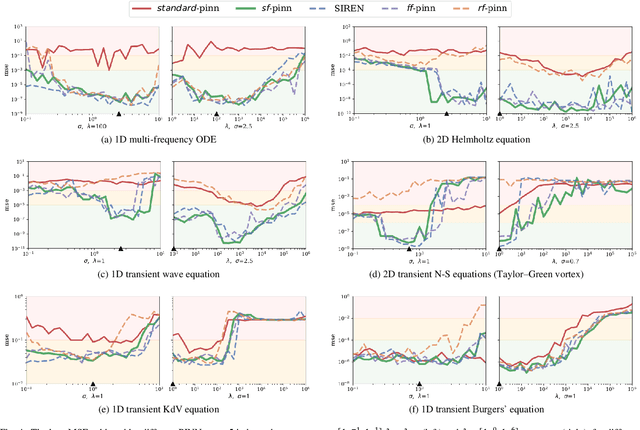

A physics-informed neural network (PINN) uses physics-augmented loss functions, e.g., incorporating the residual term from governing differential equations, to ensure its output is consistent with fundamental physics laws. However, it turns out to be difficult to train an accurate PINN model for many problems in practice. In this paper, we address this issue through a novel perspective on the merits of learning in sinusoidal spaces with PINNs. By analyzing asymptotic behavior at model initialization, we first prove that a PINN of increasing size (i.e., width and depth) induces a bias towards flat outputs. Notably, a flat function is a trivial solution to many physics differential equations, so it deceptively minimizes the residual term of the augmented loss while remaining far from the true solution. We then show that the sinusoidal mapping of inputs, in an architecture we label as sf-PINN, is able to elevate output variability, thus avoiding being trapped in the deceptive local minimum. In addition, the level of variability can be effectively modulated to match high-frequency patterns in the problem at hand. A key facet of this paper is the comprehensive empirical study that demonstrates the efficacy of learning in sinusoidal spaces with PINNs for a wide range of forward and inverse modelling problems spanning multiple physics domains.
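To make the idea of a sinusoidal input mapping concrete, here is a minimal sketch in plain Python. It is not the authors' sf-PINN implementation: the function names, the Gaussian weight initialization, and the `sigma` parameter are all assumptions made for illustration. The sketch only shows that inputs are passed through `sin(w · x + b)` before any further layers, and that the scale of the weights controls how quickly the features vary with the input.

```python
import math
import random


def init_sinusoidal_layer(in_dim, n_features, sigma, seed=0):
    """Draw random weights and phases for a sinusoidal input mapping.

    `sigma` (a hypothetical knob, not taken from the paper) is the standard
    deviation of the weights; larger values give higher-frequency features.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, sigma) for _ in range(in_dim)]
               for _ in range(n_features)]
    phases = [rng.uniform(-math.pi, math.pi) for _ in range(n_features)]
    return weights, phases


def sinusoidal_features(x, weights, phases):
    """Map an input vector x to the features sin(w . x + b), one per row of weights."""
    return [
        math.sin(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
        for w, b in zip(weights, phases)
    ]


if __name__ == "__main__":
    x_a, x_b = [0.1, 0.2], [0.9, -0.4]
    for sigma in (0.0, 1.0, 10.0):
        w, p = init_sinusoidal_layer(in_dim=2, n_features=8, sigma=sigma, seed=1)
        f_a = sinusoidal_features(x_a, w, p)
        f_b = sinusoidal_features(x_b, w, p)
        # Mean absolute change of the features between the two inputs.
        change = sum(abs(a - b) for a, b in zip(f_a, f_b)) / len(f_a)
        print(f"sigma={sigma}: mean feature change = {change:.3f}")
```

With `sigma = 0` every weight is zero, so the features are constant in `x`, which mirrors the flat-output behavior the abstract describes. Nonzero weights make the features vary with the input, and a downstream network would take these features in place of the raw coordinates.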

The State of AI Ethics Report (Volume 5)

Aug 09, 2021 · Abhishek Gupta, Connor Wright, Marianna Bergamaschi Ganapini, Masa Sweidan, Renjie Butalid

This report from the Montreal AI Ethics Institute covers the most salient progress in research and reporting over the second quarter of 2021 in the field of AI ethics, with a special emphasis on "Environment and AI", "Creativity and AI", and "Geopolitics and AI." The report also features an exclusive piece titled "Critical Race Quantum Computer" that applies ideas from quantum physics to explain the complexities of human characteristics and how they can and should shape our interactions with each other. The report also features special contributions on the subject of pedagogy in AI ethics, sociology and AI ethics, and organizational challenges to implementing AI ethics in practice. Given MAIEI's mission to highlight scholars from around the world working on AI ethics issues, the report also features two spotlights sharing the work of scholars operating in Singapore and Mexico who are helping to shape policy measures related to the responsible use of technology. The report also has an extensive section on the societal impacts of AI, covering bias, privacy, transparency, accountability, fairness, interpretability, disinformation, policymaking, law, regulations, and moral philosophy.

Fully Autonomous Real-World Reinforcement Learning for Mobile Manipulation

Aug 03, 2021 · Charles Sun, Jędrzej Orbik, Coline Devin, Brian Yang, Abhishek Gupta, Glen Berseth, Sergey Levine

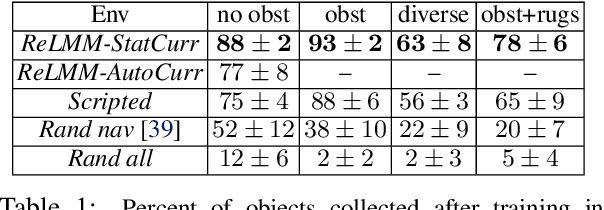

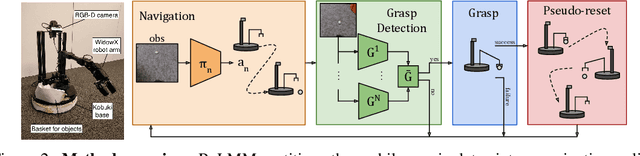

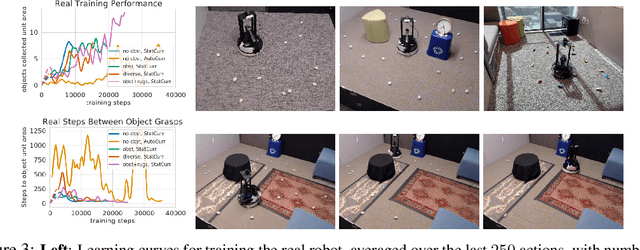

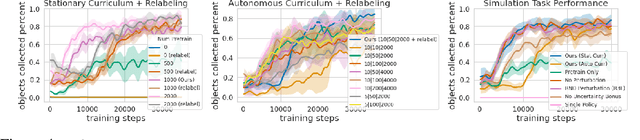

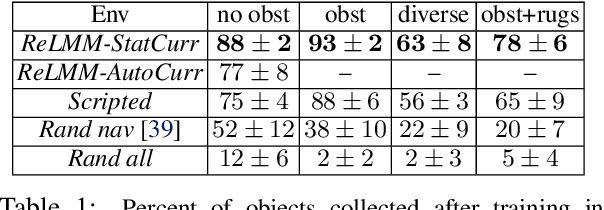

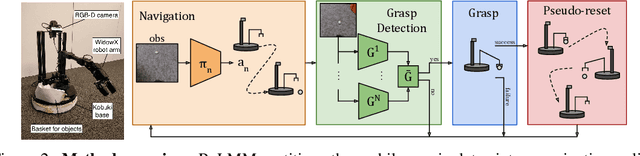

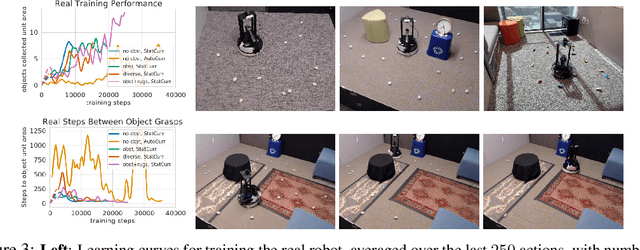

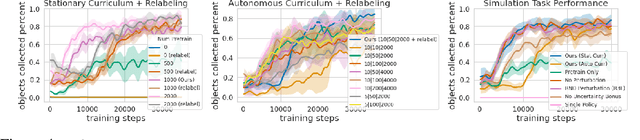

We study how robots can autonomously learn skills that require a combination of navigation and grasping. While reinforcement learning in principle provides for automated robotic skill learning, in practice reinforcement learning in the real world is challenging and often requires extensive instrumentation and supervision. Our aim is to devise a robotic reinforcement learning system for learning navigation and manipulation together, in an autonomous way without human intervention, enabling continual learning under realistic assumptions. Our proposed system, ReLMM, can learn continuously on a real-world platform without any environment instrumentation, without human intervention, and without access to privileged information, such as maps, object positions, or a global view of the environment. Our method employs a modularized policy with components for manipulation and navigation, where manipulation policy uncertainty drives exploration for the navigation controller, and the manipulation module provides rewards for navigation. We evaluate our method on a room cleanup task, where the robot must navigate to and pick up items scattered on the floor. After a grasp curriculum training phase, ReLMM can learn navigation and grasping together fully automatically, in around 40 hours of autonomous real-world training.

ReLMM: Practical RL for Learning Mobile Manipulation Skills Using Only Onboard Sensors

Jul 28, 2021 · Charles Sun, Jędrzej Orbik, Coline Devin, Brian Yang, Abhishek Gupta, Glen Berseth, Sergey Levine

In this paper, we study how robots can autonomously learn skills that require a combination of navigation and grasping. Learning robotic skills in the real world remains challenging without large-scale data collection and supervision. Our aim is to devise a robotic reinforcement learning system for learning navigation and manipulation together, in an autonomous way without human intervention, enabling continual learning under realistic assumptions. Specifically, our system, ReLMM, can learn continuously on a real-world platform without any environment instrumentation, without human intervention, and without access to privileged information, such as maps, object positions, or a global view of the environment. Our method employs a modularized policy with components for manipulation and navigation, where uncertainty over the manipulation success drives exploration for the navigation controller, and the manipulation module provides rewards for navigation. We evaluate our method on a room cleanup task, where the robot must navigate to and pick up items scattered on the floor. After a grasp curriculum training phase, ReLMM can learn navigation and grasping together fully automatically, in around 40 hours of real-world training.

Persistent Reinforcement Learning via Subgoal Curricula

Jul 27, 2021 · Archit Sharma, Abhishek Gupta, Sergey Levine, Karol Hausman, Chelsea Finn

Reinforcement learning (RL) promises to enable autonomous acquisition of complex behaviors for diverse agents. However, the success of current reinforcement learning algorithms is predicated on an often under-emphasised requirement: each trial needs to start from a fixed initial state distribution. Unfortunately, resetting the environment to its initial state after each trial requires a substantial amount of human supervision and extensive instrumentation of the environment, which defeats the purpose of autonomous reinforcement learning. In this work, we propose Value-accelerated Persistent Reinforcement Learning (VaPRL), which generates a curriculum of initial states such that the agent can bootstrap on the success of easier tasks to efficiently learn harder tasks. The agent also learns to reach the initial states proposed by the curriculum, minimizing the reliance on human interventions during learning. We observe that VaPRL reduces the interventions required by three orders of magnitude compared to episodic RL while outperforming prior state-of-the-art methods for reset-free RL both in terms of sample efficiency and asymptotic performance on a variety of simulated robotics problems.

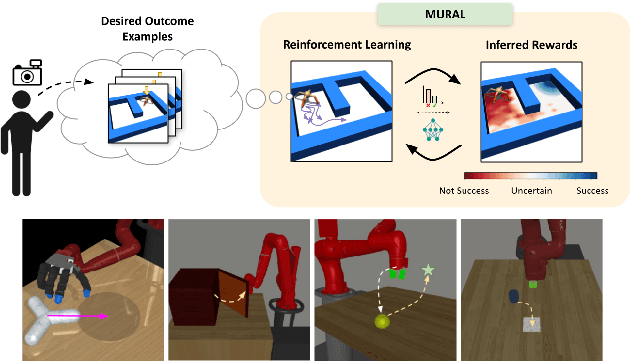

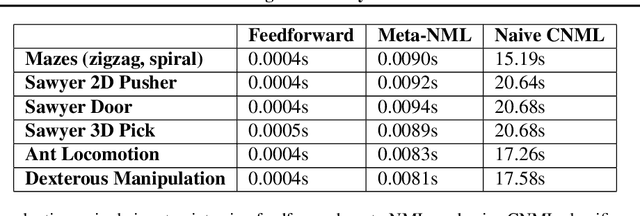

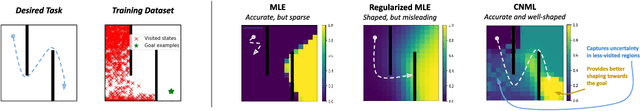

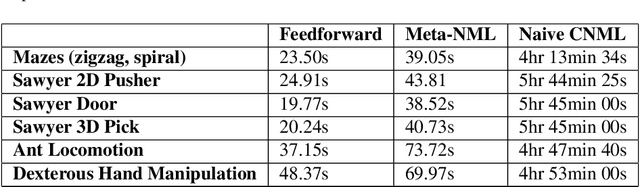

MURAL: Meta-Learning Uncertainty-Aware Rewards for Outcome-Driven Reinforcement Learning

Jul 18, 2021 · Kevin Li, Abhishek Gupta, Ashwin Reddy, Vitchyr Pong, Aurick Zhou, Justin Yu, Sergey Levine

Exploration in reinforcement learning is a challenging problem: in the worst case, the agent must search for high-reward states that could be hidden anywhere in the state space. Can we define a more tractable class of RL problems, where the agent is provided with examples of successful outcomes? In this problem setting, the reward function can be obtained automatically by training a classifier to categorize states as successful or not. If trained properly, such a classifier can provide a well-shaped objective landscape that both promotes progress toward good states and provides a calibrated exploration bonus. In this work, we show that an uncertainty-aware classifier can solve challenging reinforcement learning problems by both encouraging exploration and providing directed guidance towards positive outcomes. We propose a novel mechanism for obtaining these calibrated, uncertainty-aware classifiers based on an amortized technique for computing the normalized maximum likelihood (NML) distribution. To make this tractable, we propose a novel method for computing the NML distribution by using meta-learning. We show that the resulting algorithm has a number of intriguing connections to both count-based exploration methods and prior algorithms for learning reward functions, while also providing more effective guidance towards the goal. We demonstrate that our algorithm solves a number of challenging navigation and robotic manipulation tasks which prove difficult or impossible for prior methods.

Weighted Gaussian Process Bandits for Non-stationary Environments

Jul 06, 2021 · Yuntian Deng, Xingyu Zhou, Baekjin Kim, Ambuj Tewari, Abhishek Gupta, Ness Shroff

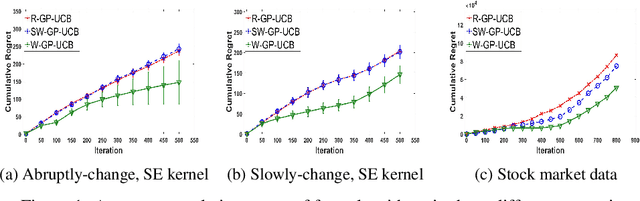

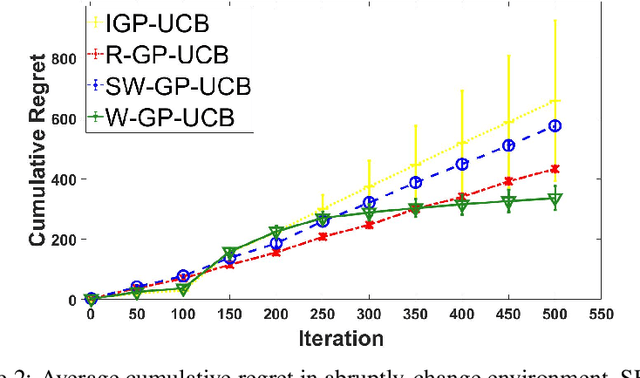

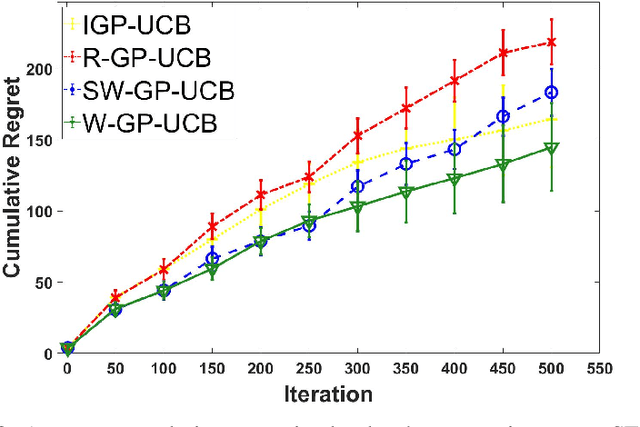

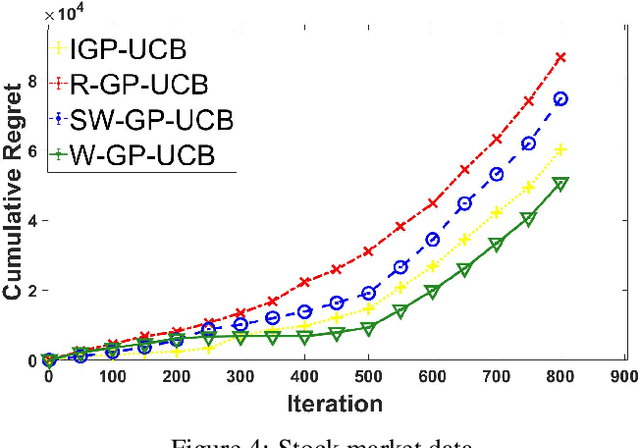

In this paper, we consider the Gaussian process (GP) bandit optimization problem in a non-stationary environment. To capture external changes, the black-box function is allowed to be time-varying within a reproducing kernel Hilbert space (RKHS). To this end, we develop WGP-UCB, a novel UCB-type algorithm based on weighted Gaussian process regression. A key challenge is how to cope with infinite-dimensional feature maps. To that end, we leverage kernel approximation techniques to prove a sublinear regret bound, which is the first (frequentist) sublinear regret guarantee on weighted time-varying bandits with general nonlinear rewards. This result generalizes both non-stationary linear bandits and standard GP-UCB algorithms. Further, a novel concentration inequality is achieved for weighted Gaussian process regression with general weights. We also provide universal upper bounds and weight-dependent upper bounds for weighted maximum information gains. These results are potentially of independent interest for applications such as news ranking and adaptive pricing, where weights can be adopted to capture the importance or quality of data. Finally, we conduct experiments to highlight the favorable gains of the proposed algorithm in many cases when compared to existing methods.
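As a rough illustration of how weights can enter a GP-UCB rule, the sketch below treats a weight `w_s` on observation `s` as dividing its noise variance, so that down-weighted (for example, older) points constrain the posterior less. This is one simple reading of weighted GP regression, not the paper's WGP-UCB estimator or its confidence bound. The RBF kernel, the exponential discount `gamma`, the regularizer `lam`, the exploration constant `beta`, and all function names are assumptions chosen for the example.

```python
import math


def rbf(a, b, length=0.2):
    """Squared-exponential kernel on scalars (an illustrative choice)."""
    return math.exp(-((a - b) ** 2) / (2.0 * length ** 2))


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def weighted_gp_posterior(xs, ys, weights, x_query, lam=0.1):
    """Posterior mean and variance when point s has noise variance lam / weights[s]."""
    n = len(xs)
    A = [[rbf(xs[i], xs[j]) + (lam / weights[i] if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    k = [rbf(x, x_query) for x in xs]
    mean = sum(ki * ai for ki, ai in zip(k, solve(A, ys)))
    var = rbf(x_query, x_query) - sum(ki * vi for ki, vi in zip(k, solve(A, k)))
    return mean, max(var, 0.0)


def ucb_choice(xs, ys, candidates, gamma=0.9, beta=2.0):
    """Pick the candidate maximizing mean + beta * std under discounted weights."""
    t = len(xs)
    # Assumed weighting: the newest point gets weight 1, older points decay by gamma.
    weights = [gamma ** (t - 1 - s) for s in range(t)]

    def score(x):
        mean, var = weighted_gp_posterior(xs, ys, weights, x)
        return mean + beta * math.sqrt(var)

    return max(candidates, key=score)
```

Under this reading, lowering a point's weight pulls the posterior mean at that point back toward the prior and raises the posterior variance there, so a UCB rule revisits regions whose observations have grown stale.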

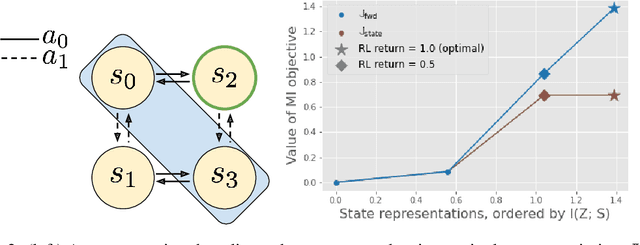

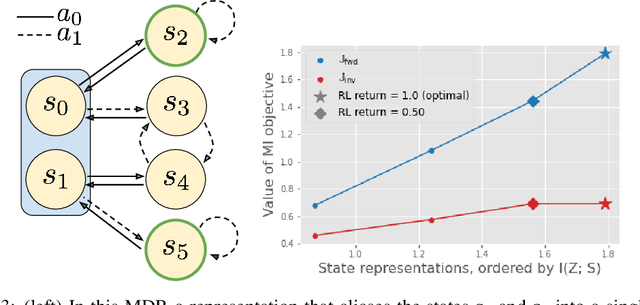

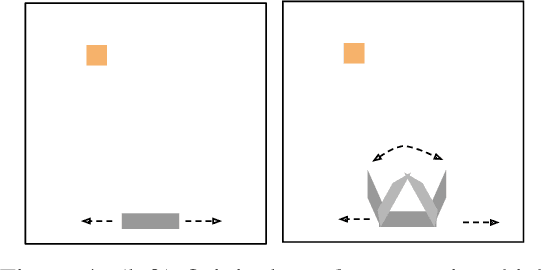

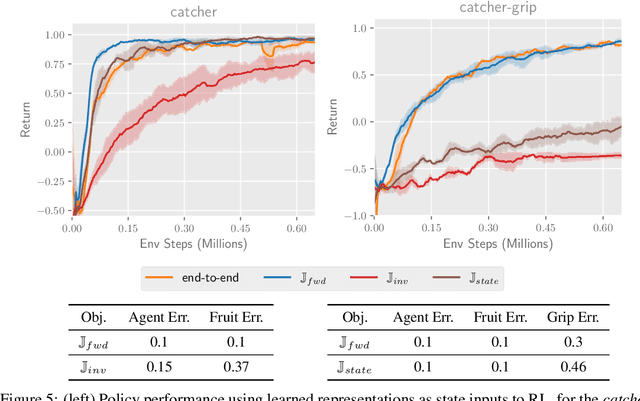

Which Mutual-Information Representation Learning Objectives are Sufficient for Control?

Jun 14, 2021 · Kate Rakelly, Abhishek Gupta, Carlos Florensa, Sergey Levine

Mutual information maximization provides an appealing formalism for learning representations of data. In the context of reinforcement learning (RL), such representations can accelerate learning by discarding irrelevant and redundant information, while retaining the information necessary for control. Much of the prior work on these methods has addressed the practical difficulties of estimating mutual information from samples of high-dimensional observations, while comparatively less is understood about which mutual information objectives yield representations that are sufficient for RL from a theoretical perspective. In this paper, we formalize the sufficiency of a state representation for learning and representing the optimal policy, and study several popular mutual-information based objectives through this lens. Surprisingly, we find that two of these objectives can yield insufficient representations given mild and common assumptions on the structure of the MDP. We corroborate our theoretical results with empirical experiments on a simulated game environment with visual observations.

Safe Model-based Off-policy Reinforcement Learning for Eco-Driving in Connected and Automated Hybrid Electric Vehicles

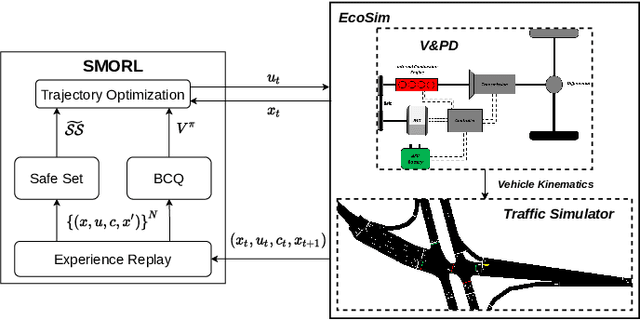

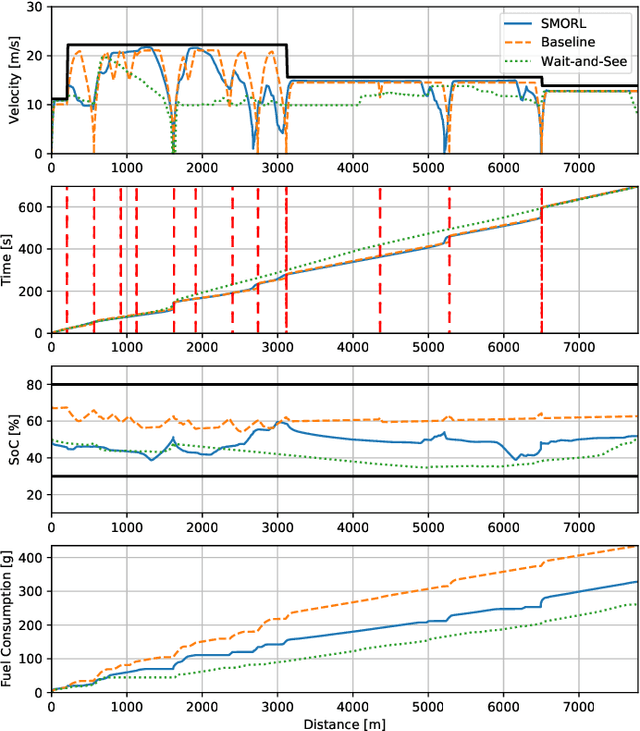

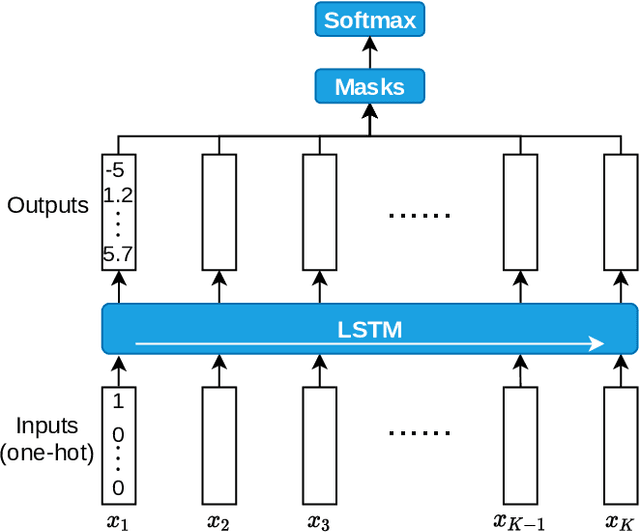

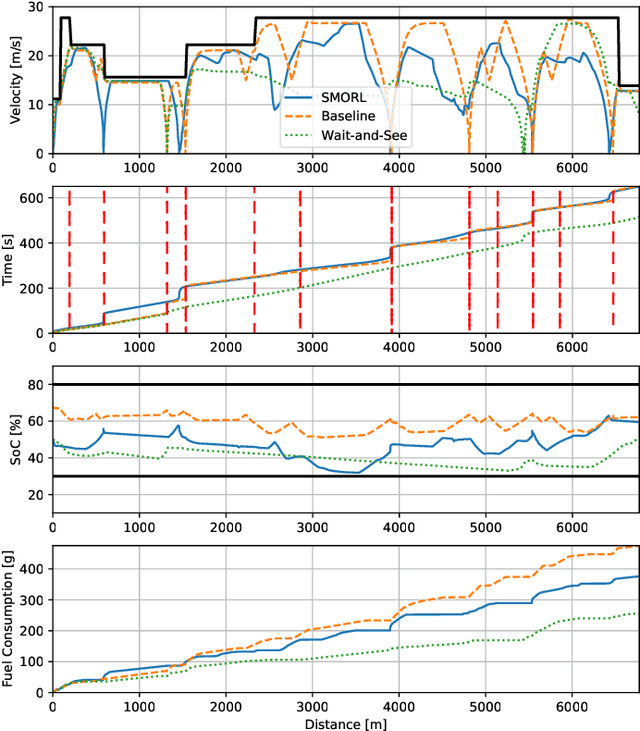

May 25, 2021 · Zhaoxuan Zhu, Nicola Pivaro, Shobhit Gupta, Abhishek Gupta, Marcello Canova

Connected and Automated Hybrid Electric Vehicles have the potential to reduce fuel consumption and travel time in real-world driving conditions. The eco-driving problem seeks to design optimal speed and power usage profiles based upon look-ahead information from connectivity and advanced mapping features. Recently, Deep Reinforcement Learning (DRL) has been applied to the eco-driving problem. While the previous studies synthesize simulators and model-free DRL to reduce online computation, this work proposes a Safe Off-policy Model-Based Reinforcement Learning algorithm for the eco-driving problem. The advantages over the existing literature are three-fold. First, the combination of off-policy learning and the use of a physics-based model improves the sample efficiency. Second, the training does not require any extrinsic rewarding mechanism for constraint satisfaction. Third, the feasibility of trajectory is guaranteed by using a safe set approximated by deep generative models. The performance of the proposed method is benchmarked against a baseline controller representing human drivers, a previously designed model-free DRL strategy, and the wait-and-see optimal solution. In simulation, the proposed algorithm leads to a policy with a higher average speed and a better fuel economy compared to the model-free agent. Compared to the baseline controller, the learned strategy reduces the fuel consumption by more than 21% while keeping the average speed comparable.
