Facial Recognition

Facial recognition is an AI-based technique for identifying or confirming an individual's identity using their face. It maps facial features from an image or video and then compares the information with a collection of known faces to find a match.
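The matching step described above can be sketched as a nearest-neighbor search over feature vectors. This is a minimal illustration, not any particular system's method: it assumes faces have already been converted to fixed-length embeddings by some model, and the `identify` function, the toy three-dimensional vectors, and the `0.8` threshold are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(probe, gallery, threshold=0.8):
    """Compare a probe embedding against every known face (1:N search).

    Returns (name, score) for the best match, or (None, score) when the
    best score falls below the (hypothetical) acceptance threshold.
    """
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score


# Toy gallery: real embeddings typically have hundreds of dimensions.
gallery = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 1.0, 0.2]}
match, score = identify([0.8, 0.2, 0.0], gallery)
```

Confirming a claimed identity (1:1 verification) uses the same similarity score but compares the probe against a single enrolled embedding instead of the whole gallery.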

Papers and Code

Landmark Guided Visual Feature Extractor for Visual Speech Recognition with Limited Resource

Aug 10, 2025

Visual speech recognition is a technique to identify spoken content in silent speech videos, which has attracted significant attention in recent years. Advancements in data-driven deep learning methods have significantly improved both the speed and accuracy of recognition. However, these deep learning methods can be affected by visual disturbances, such as lighting conditions, skin texture and other user-specific features. Data-driven approaches could reduce the performance degradation caused by these visual disturbances using models pretrained on large-scale datasets. But these methods often require large amounts of training data and computational resources, making them costly. To reduce the influence of user-specific features and enhance performance with limited data, this paper proposes a landmark guided visual feature extractor. Facial landmarks are used as auxiliary information to aid in training the visual feature extractor. A spatio-temporal multi-graph convolutional network is designed to fully exploit the spatial locations and spatio-temporal features of facial landmarks. Additionally, a multi-level lip dynamic fusion framework is introduced to combine the spatio-temporal features of the landmarks with the visual features extracted from the raw video frames. Experimental results show that this approach performs well with limited data and also improves the model's accuracy on unseen speakers.

A Deep Learning Approach for Facial Attribute Manipulation and Reconstruction in Surveillance and Reconnaissance

Jun 06, 2025

Surveillance systems play a critical role in security and reconnaissance, but their performance is often compromised by low-quality images and videos, leading to reduced accuracy in face recognition. Additionally, existing AI-based facial analysis models suffer from biases related to skin tone variations and partially occluded faces, further limiting their effectiveness in diverse real-world scenarios. These challenges are the results of data limitations and imbalances, where available training datasets lack sufficient diversity, resulting in unfair and unreliable facial recognition performance. To address these issues, we propose a data-driven platform that enhances surveillance capabilities by generating synthetic training data tailored to compensate for dataset biases. Our approach leverages deep learning-based facial attribute manipulation and reconstruction using autoencoders and Generative Adversarial Networks (GANs) to create diverse and high-quality facial datasets. Additionally, our system integrates an image enhancement module, improving the clarity of low-resolution or occluded faces in surveillance footage. We evaluate our approach using the CelebA dataset, demonstrating that the proposed platform enhances both training data diversity and model fairness. This work contributes to reducing bias in AI-based facial analysis and improving surveillance accuracy in challenging environments, leading to fairer and more reliable security applications.

Accuracy and Fairness of Facial Recognition Technology in Low-Quality Police Images: An Experiment With Synthetic Faces

May 20, 2025

Facial recognition technology (FRT) is increasingly used in criminal investigations, yet most evaluations of its accuracy rely on high-quality images, unlike those often encountered by law enforcement. This study examines how five common forms of image degradation (contrast, brightness, motion blur, pose shift, and resolution) affect FRT accuracy and fairness across demographic groups. Using synthetic faces generated by StyleGAN3 and labeled with FairFace, we simulate degraded images and evaluate performance using Deepface with ArcFace loss in 1:n identification tasks. We perform an experiment and find that false positive rates peak near baseline image quality, while false negatives increase as degradation intensifies, especially with blur and low resolution. Error rates are consistently higher for women and Black individuals, with Black females most affected. These disparities raise concerns about fairness and reliability when FRT is used in real-world investigative contexts. Nevertheless, even under the most challenging conditions and for the most affected subgroups, FRT accuracy remains substantially higher than that of many traditional forensic methods. This suggests that, if appropriately validated and regulated, FRT should be considered a valuable investigative tool. However, algorithmic accuracy alone is not sufficient: we must also evaluate how FRT is used in practice, including user-driven data manipulation. Such cases underscore the need for transparency and oversight in FRT deployment to ensure both fairness and forensic validity.

2D-3D Attention and Entropy for Pose Robust 2D Facial Recognition

May 14, 2025

Despite recent advances in facial recognition, there remains a fundamental issue concerning degradations in performance due to substantial perspective (pose) differences between enrollment and query (probe) imagery. Therefore, we propose a novel domain adaptive framework to facilitate improved performances across large discrepancies in pose by enabling image-based (2D) representations to infer properties of inherently pose invariant point cloud (3D) representations. Specifically, our proposed framework achieves better pose invariance by using (1) a shared (joint) attention mapping to emphasize common patterns that are most correlated between 2D facial images and 3D facial data and (2) a joint entropy regularizing loss to promote better consistency, enhancing correlations among the intersecting 2D and 3D representations, by leveraging both attention maps. This framework is evaluated on FaceScape and ARL-VTF datasets, where it outperforms competitive methods by achieving profile (90°+) TAR @ 1% FAR improvements of at least 7.1% and 1.57%, respectively.
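For readers unfamiliar with the metric reported above, TAR @ FAR (true accept rate at a fixed false accept rate) can be computed from two lists of similarity scores. The sketch below is a generic illustration, not the paper's evaluation code: the function name `tar_at_far` and the toy scores are hypothetical, and a 10% FAR is used only because the toy impostor set is too small to resolve 1%.

```python
def tar_at_far(genuine, impostor, target_far=0.01):
    """True accept rate at the strictest threshold whose FAR <= target_far.

    genuine:  similarity scores for same-identity pairs
    impostor: similarity scores for different-identity pairs
    A pair is accepted when its score is strictly greater than the threshold.
    """
    ranked = sorted(impostor, reverse=True)
    # Number of impostor pairs we may wrongly accept at the target FAR.
    allowed = min(int(target_far * len(ranked)), len(ranked) - 1)
    threshold = ranked[allowed]
    far = sum(s > threshold for s in impostor) / len(impostor)
    tar = sum(s > threshold for s in genuine) / len(genuine)
    return tar, far, threshold


# Toy scores (hypothetical); real evaluations use many thousands of pairs.
impostor_scores = [0.1, 0.2, 0.3, 0.35, 0.4, 0.45, 0.5, 0.55, 0.6, 0.9]
genuine_scores = [0.95, 0.85, 0.7, 0.65, 0.5]
tar, far, threshold = tar_at_far(genuine_scores, impostor_scores, target_far=0.1)
```

Using a strict inequality means that impostor scores tied with the threshold are rejected, so the achieved FAR never exceeds the target.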

SocialDF: Benchmark Dataset and Detection Model for Mitigating Harmful Deepfake Content on Social Media Platforms

Jun 05, 2025

The rapid advancement of deep generative models has significantly improved the realism of synthetic media, presenting both opportunities and security challenges. While deepfake technology has valuable applications in entertainment and accessibility, it has emerged as a potent vector for misinformation campaigns, particularly on social media. Existing detection frameworks struggle to distinguish between benign and adversarially generated deepfakes engineered to manipulate public perception. To address this challenge, we introduce SocialDF, a curated dataset reflecting real-world deepfake challenges on social media platforms. This dataset encompasses high-fidelity deepfakes sourced from various online ecosystems, ensuring broad coverage of manipulative techniques. We propose a novel LLM-based multi-factor detection approach that combines facial recognition, automated speech transcription, and a multi-agent LLM pipeline to cross-verify audio-visual cues. Our methodology emphasizes robust, multi-modal verification techniques that incorporate linguistic, behavioral, and contextual analysis to effectively discern synthetic media from authentic content.

MienCap: Realtime Performance-Based Facial Animation with Live Mood Dynamics

Aug 06, 2025

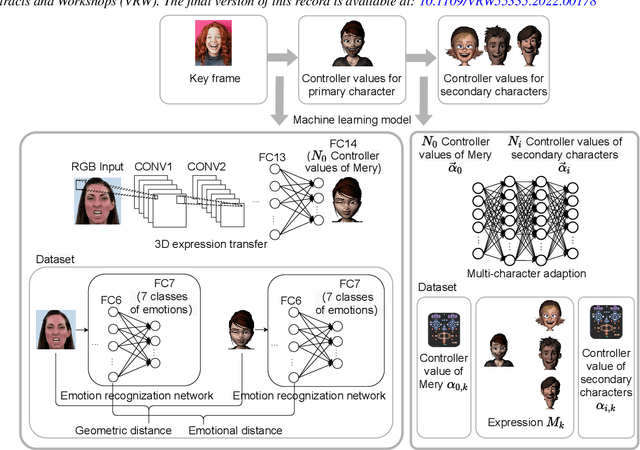

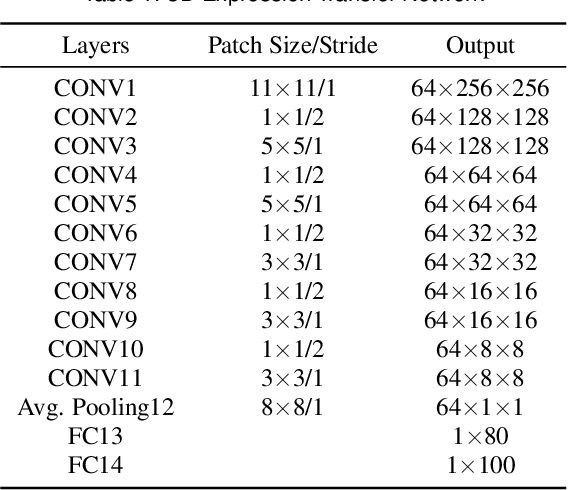

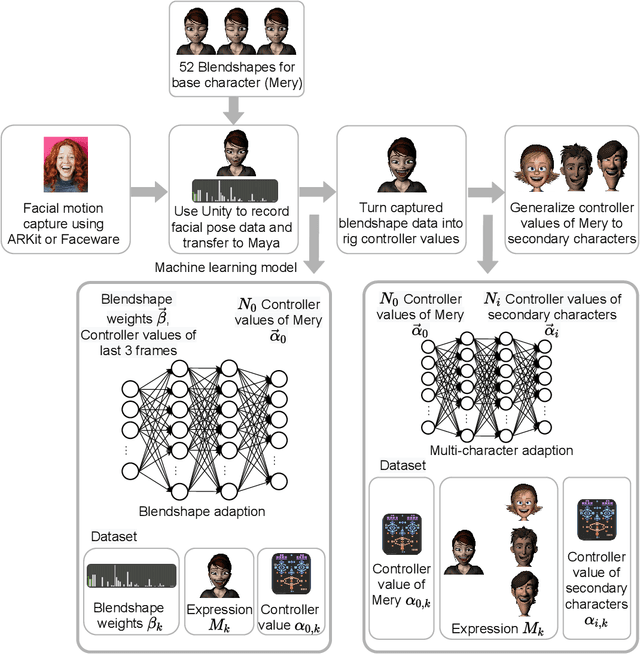

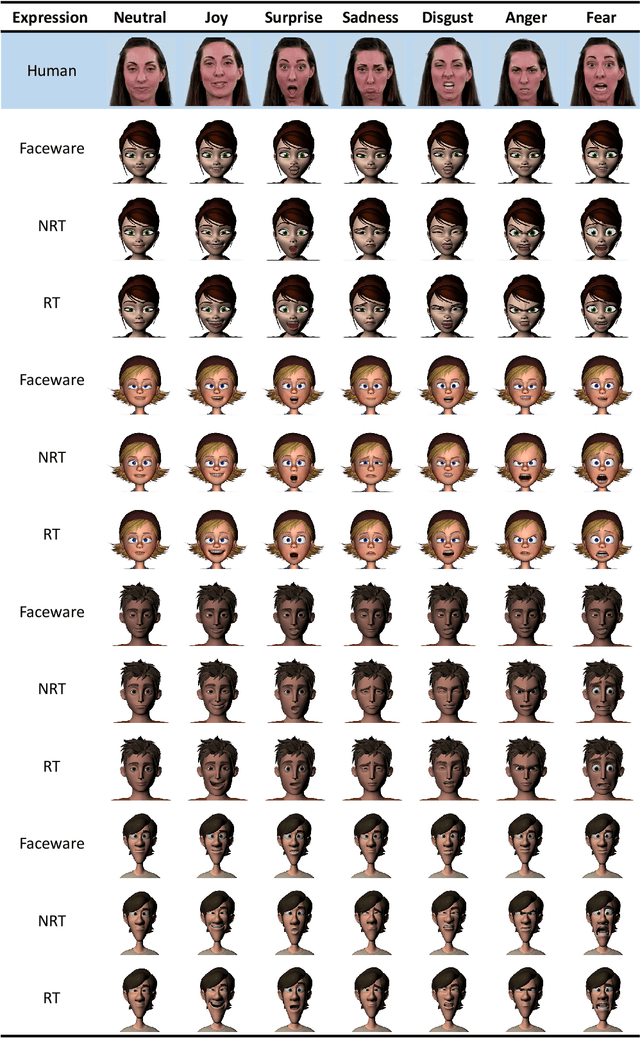

Our purpose is to improve performance-based animation which can drive believable 3D stylized characters that are truly perceptual. By combining traditional blendshape animation techniques with multiple machine learning models, we present both non-real-time and real-time solutions which drive character expressions in a geometrically consistent and perceptually valid way. For the non-real-time system, we propose a 3D emotion transfer network which makes use of a 2D human image to generate stylized 3D rig parameters. For the real-time system, we propose a blendshape adaption network which generates the character rig parameter motions with geometric consistency and temporal stability. We demonstrate the effectiveness of our system by comparing it to a commercial product, Faceware. Results reveal that ratings of the recognition, intensity, and attractiveness of expressions depicted for animated characters via our systems are statistically higher than those for Faceware. Our results may be implemented into the animation pipeline, and provide animators with a system for creating the expressions they wish to use more quickly and accurately.

TKFNet: Learning Texture Key Factor Driven Feature for Facial Expression Recognition

May 15, 2025

Facial expression recognition (FER) in the wild remains a challenging task due to the subtle and localized nature of expression-related features, as well as the complex variations in facial appearance. In this paper, we introduce a novel framework that explicitly focuses on Texture Key Driver Factors (TKDF), localized texture regions that exhibit strong discriminative power across emotional categories. By carefully observing facial image patterns, we identify that certain texture cues, such as micro-changes in skin around the brows, eyes, and mouth, serve as primary indicators of emotional dynamics. To effectively capture and leverage these cues, we propose a FER architecture comprising a Texture-Aware Feature Extractor (TAFE) and Dual Contextual Information Filtering (DCIF). TAFE employs a ResNet-based backbone enhanced with multi-branch attention to extract fine-grained texture representations, while DCIF refines these features by filtering context through adaptive pooling and attention mechanisms. Experimental results on RAF-DB and KDEF datasets demonstrate that our method achieves state-of-the-art performance, verifying the effectiveness and robustness of incorporating TKDFs into FER pipelines.

A Feature-level Bias Evaluation Framework for Facial Expression Recognition Models

May 26, 2025

Recent studies on fairness have shown that Facial Expression Recognition (FER) models exhibit biases toward certain visually perceived demographic groups. However, the limited availability of human-annotated demographic labels in public FER datasets has constrained the scope of such bias analysis. To overcome this limitation, some prior works have resorted to pseudo-demographic labels, which may distort bias evaluation results. Alternatively, in this paper, we propose a feature-level bias evaluation framework for evaluating demographic biases in FER models under the setting where demographic labels are unavailable in the test set. Extensive experiments demonstrate that our method more effectively evaluates demographic biases compared to existing approaches that rely on pseudo-demographic labels. Furthermore, we observe that many existing studies do not include statistical testing in their bias evaluations, raising concerns that some reported biases may not be statistically significant but rather due to randomness. To address this issue, we introduce a plug-and-play statistical module to ensure the statistical significance of bias evaluation results. A comprehensive bias analysis based on the proposed module is then conducted across three sensitive attributes (age, gender, and race), seven facial expressions, and multiple network architectures on a large-scale dataset, revealing the prominent demographic biases in FER and providing insights on selecting a fairer network architecture.
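The concern about unreported significance testing can be made concrete with a permutation test on the accuracy gap between two groups. The abstract does not say which test the paper's statistical module uses, so this is only one generic option, offered as an assumption; the function names and the toy per-sample outcomes (1 = correct prediction, 0 = incorrect) are hypothetical.

```python
import random


def accuracy(outcomes):
    """Fraction of correct predictions in a list of 0/1 outcomes."""
    return sum(outcomes) / len(outcomes)


def permutation_test(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test on the accuracy gap between two groups.

    Returns (observed_gap, p_value). The p-value estimates how often a gap
    at least this large appears when group labels are shuffled at random.
    """
    rng = random.Random(seed)
    observed = abs(accuracy(group_a) - accuracy(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        gap = abs(accuracy(pooled[:n_a]) - accuracy(pooled[n_a:]))
        if gap >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return observed, (extreme + 1) / (n_permutations + 1)


# Hypothetical outcomes: 18/20 correct for one group, 10/20 for the other.
group_a = [1] * 18 + [0] * 2
group_b = [1] * 10 + [0] * 10
gap, p_value = permutation_test(group_a, group_b)
```

A small p-value suggests the gap is unlikely to arise from random group assignment alone; with several attributes and expressions tested at once, a multiple-comparison correction would also be needed.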

Touch Speaks, Sound Feels: A Multimodal Approach to Affective and Social Touch from Robots to Humans

Aug 11, 2025

Affective tactile interaction constitutes a fundamental component of human communication. In natural human-human encounters, touch is seldom experienced in isolation; rather, it is inherently multisensory. Individuals not only perceive the physical sensation of touch but also register the accompanying auditory cues generated through contact. The integration of haptic and auditory information forms a rich and nuanced channel for emotional expression. While extensive research has examined how robots convey emotions through facial expressions and speech, their capacity to communicate social gestures and emotions via touch remains largely underexplored. To address this gap, we developed a multimodal interaction system incorporating a 5×5 grid of 25 vibration motors synchronized with audio playback, enabling robots to deliver combined haptic-audio stimuli. In an experiment involving 32 Chinese participants, ten emotions and six social gestures were presented through vibration, sound, or their combination. Participants rated each stimulus on arousal and valence scales. The results revealed that (1) the combined haptic-audio modality significantly enhanced decoding accuracy compared to single modalities; (2) each individual channel (vibration or sound) effectively supported the recognition of certain emotions, with distinct advantages depending on the emotional expression; and (3) gestures alone were generally insufficient for conveying clearly distinguishable emotions. These findings underscore the importance of multisensory integration in affective human-robot interaction and highlight the complementary roles of haptic and auditory cues in enhancing emotional communication.

Attributes Shape the Embedding Space of Face Recognition Models

Jul 15, 2025

Face Recognition (FR) tasks have made significant progress with the advent of Deep Neural Networks, particularly through margin-based triplet losses that embed facial images into high-dimensional feature spaces. During training, these contrastive losses focus exclusively on identity information as labels. However, we observe a multiscale geometric structure emerging in the embedding space, influenced by interpretable facial (e.g., hair color) and image attributes (e.g., contrast). We propose a geometric approach to describe the dependence or invariance of FR models to these attributes and introduce a physics-inspired alignment metric. We evaluate the proposed metric on controlled, simplified models and widely used FR models fine-tuned with synthetic data for targeted attribute augmentation. Our findings reveal that the models exhibit varying degrees of invariance across different attributes, providing insight into their strengths and weaknesses and enabling deeper interpretability. Code available here: https://github.com/mantonios107/attrs-fr-embs
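As background for the margin-based triplet losses mentioned in this abstract, the standard triplet margin loss can be written in a few lines. This is the textbook formulation, not code from the linked repository; the two-dimensional embeddings and the `0.2` margin are illustrative assumptions.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin).

    The loss is zero once the same-identity pair (anchor, positive) is
    closer than the different-identity pair (anchor, negative) by at
    least the margin.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy 2D embeddings (hypothetical values).
anchor = [0.0, 0.0]
easy = triplet_loss(anchor, positive=[0.0, 1.0], negative=[3.0, 4.0])
hard = triplet_loss(anchor, positive=[3.0, 4.0], negative=[0.0, 1.0])
```

Only identity labels enter this loss, which is why any structure tied to attributes such as hair color or contrast has to emerge indirectly in the embedding space.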
