Text Classification

Text classification is the process of categorizing text documents into predefined categories or labels.

Papers and Code

InstructNet: A Novel Approach for Multi-Label Instruction Classification through Advanced Deep Learning

Dec 20, 2025

People use search engines for various topics and items, from daily essentials to more aspirational and specialized objects. Therefore, search engines have taken over as people's preferred resource. The How To prefix has become familiar and widely used in various search styles to find solutions to particular problems. This search allows people to find sequential instructions by providing detailed guidelines to accomplish specific tasks. Categorizing instructional text is also essential for task-oriented learning and creating knowledge bases. This study uses the How To articles to determine the multi-label instruction category. We have brought this work with a dataset comprising 11,121 observations from wikiHow, where each record has multiple categories. To find out the multi-label category meticulously, we employ some transformer-based deep neural architectures, such as Generalized Autoregressive Pretraining for Language Understanding (XLNet), Bidirectional Encoder Representation from Transformers (BERT), etc. In our multi-label instruction classification process, we have reckoned our proposed architectures using accuracy and macro f1-score as the performance metrics. This thorough evaluation showed us much about our strategy's strengths and drawbacks. Specifically, our implementation of the XLNet architecture has demonstrated unprecedented performance, achieving an accuracy of 97.30% and micro and macro average scores of 89.02% and 93%, a noteworthy accomplishment in multi-label classification. This high level of accuracy and macro average score is a testament to the effectiveness of the XLNet architecture in our proposed InstructNet approach. By employing a multi-level strategy in our evaluation process, we have gained a more comprehensive knowledge of the effectiveness of our proposed architectures and identified areas for forthcoming improvement and refinement.
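The abstract above reports both micro and macro averaged scores. As a rough illustration of how the two averages can diverge in a multi-label setting, here is a minimal sketch in plain Python. The function names and the toy labels are hypothetical and are not taken from the paper; the paper's own evaluation code may differ.

```python
from typing import List, Set, Tuple


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 from raw counts; defined as 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(y_true: List[Set[str]], y_pred: List[Set[str]]) -> Tuple[float, float]:
    """Return (micro_f1, macro_f1) for multi-label predictions given as label sets."""
    labels = sorted(set().union(*y_true, *y_pred))
    if not labels:
        return 0.0, 0.0
    # Per-label [true positives, false positives, false negatives].
    counts = {label: [0, 0, 0] for label in labels}
    for truth, pred in zip(y_true, y_pred):
        for label in labels:
            if label in pred and label in truth:
                counts[label][0] += 1
            elif label in pred:
                counts[label][1] += 1
            elif label in truth:
                counts[label][2] += 1
    # Macro: average the per-label F1 scores, so rare labels weigh as much as common ones.
    macro = sum(f1(*c) for c in counts.values()) / len(counts)
    # Micro: pool the counts over all labels first, so frequent labels dominate.
    tp = sum(c[0] for c in counts.values())
    fp = sum(c[1] for c in counts.values())
    fn = sum(c[2] for c in counts.values())
    return f1(tp, fp, fn), macro


# Toy data: the rare label "b" is always missed.
truth = [{"a"}, {"a"}, {"a"}, {"b"}]
preds = [{"a"}, {"a"}, {"a"}, {"a"}]
micro, macro = micro_macro_f1(truth, preds)
print(f"micro={micro:.3f} macro={macro:.3f}")
```

In this toy case the micro average stays fairly high because the frequent label is predicted well, while the macro average drops because the rare label contributes an F1 of zero.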

Topic Discovery and Classification for Responsible Generative AI Adaptation in Higher Education

Dec 17, 2025

As generative artificial intelligence (GenAI) becomes increasingly capable of delivering personalized learning experiences and real-time feedback, a growing number of students are incorporating these tools into their academic workflows. They use GenAI to clarify concepts, solve complex problems, and, in some cases, complete assignments by copying and pasting model-generated content. While GenAI has the potential to enhance the learning experience, it also raises concerns around misinformation, hallucinated outputs, and its potential to undermine critical thinking and problem-solving skills. In response, many universities, colleges, departments, and instructors have begun to develop and adopt policies to guide responsible integration of GenAI into learning environments. However, these policies vary widely across institutions and contexts, and their evolving nature often leaves students uncertain about expectations and best practices. To address this challenge, the authors designed and implemented an automated system for discovering and categorizing AI-related policies found in course syllabi and institutional policy websites. The system combines unsupervised topic modeling techniques to identify key policy themes with large language models (LLMs) to classify the level of GenAI allowance and other requirements in policy texts. The developed application achieved a coherence score of 0.73 for topic discovery. In addition, GPT-4.0-based classification of policy categories achieved precision between 0.92 and 0.97, and recall between 0.85 and 0.97 across eight identified topics. By providing structured and interpretable policy information, this tool promotes the safe, equitable, and pedagogically aligned use of GenAI technologies in education. Furthermore, the system can be integrated into educational technology platforms to help students understand and comply with relevant guidelines.

SLIM: Semantic-based Low-bitrate Image compression for Machines by leveraging diffusion

Dec 23, 2025

In recent years, the demand for image compression models for machine vision has increased dramatically. However, the training frameworks of image compression still focus on human vision and preserve excessive perceptual details, which limits how far they can reduce the bits per pixel when the target is a machine vision task. In this paper, we propose Semantic-based Low-bitrate Image compression for Machines by leveraging diffusion, termed SLIM. This is a new effective training framework of image compression for machine vision, using a pretrained latent diffusion model. The compressor model of our method focuses only on the Region-of-Interest (RoI) areas for machine vision in the image latent, to compress it compactly. Then the pretrained Unet model enhances the decompressed latent, utilizing a RoI-focused text caption containing semantic information of the image. Therefore, SLIM is able to focus on RoI areas of the image without any guide mask at the inference stage, achieving low bitrate when compressing. SLIM is also able to enhance a decompressed latent by denoising steps, so the final reconstructed image from the enhanced latent can be optimized for the machine vision task while still containing perceptual details for human vision. Experimental results show that SLIM achieves a higher classification accuracy in the same bits per pixel condition, compared to conventional image compression models for machines.

BrepLLM: Native Boundary Representation Understanding with Large Language Models

Dec 18, 2025

Current token-sequence-based Large Language Models (LLMs) are not well-suited for directly processing 3D Boundary Representation (Brep) models that contain complex geometric and topological information. We propose BrepLLM, the first framework that enables LLMs to parse and reason over raw Brep data, bridging the modality gap between structured 3D geometry and natural language. BrepLLM employs a two-stage training pipeline: Cross-modal Alignment Pre-training and Multi-stage LLM Fine-tuning. In the first stage, an adaptive UV sampling strategy converts Breps into graph representations with geometric and topological information. We then design a hierarchical BrepEncoder to extract features from geometry (i.e., faces and edges) and topology, producing both a single global token and a sequence of node tokens. Then we align the global token with text embeddings from a frozen CLIP text encoder (ViT-L/14) via contrastive learning. In the second stage, we integrate the pretrained BrepEncoder into an LLM. We then align its sequence of node tokens using a three-stage progressive training strategy: (1) training an MLP-based semantic mapping from Brep representation to 2D with 2D-LLM priors; (2) performing fine-tuning of the LLM; and (3) designing a Mixture-of-Query Experts (MQE) to enhance geometric diversity modeling. We also construct Brep2Text, a dataset comprising 269,444 Brep-text question-answer pairs. Experiments show that BrepLLM achieves state-of-the-art (SOTA) results on 3D object classification and captioning tasks.

Generalization Gaps in Political Fake News Detection: An Empirical Study on the LIAR Dataset

Dec 20, 2025

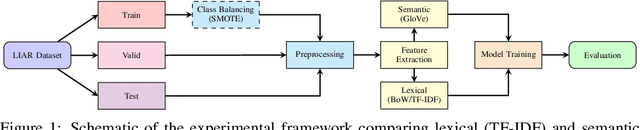

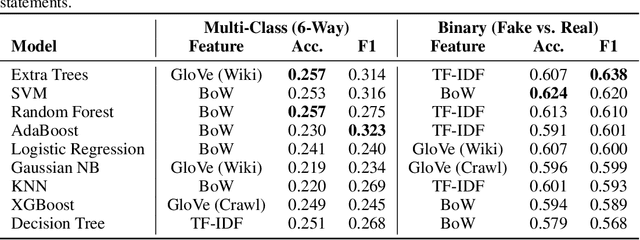

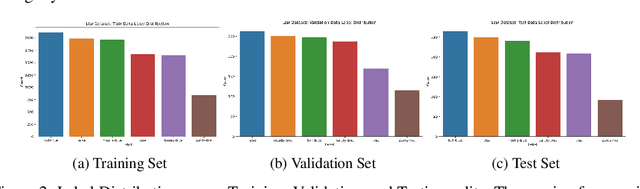

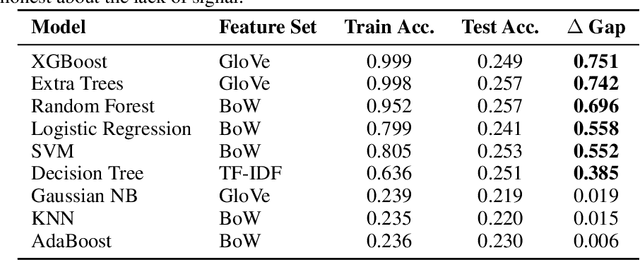

The proliferation of linguistically subtle political disinformation poses a significant challenge to automated fact-checking systems. Despite increasing emphasis on complex neural architectures, the empirical limits of text-only linguistic modeling remain underexplored. We present a systematic diagnostic evaluation of nine machine learning algorithms on the LIAR benchmark. By isolating lexical features (Bag-of-Words, TF-IDF) and semantic embeddings (GloVe), we uncover a hard "Performance Ceiling", with fine-grained classification not exceeding a Weighted F1-score of 0.32 across models. Crucially, a simple linear SVM (Accuracy: 0.624) matches the performance of pre-trained Transformers such as RoBERTa (Accuracy: 0.620), suggesting that model capacity is not the primary bottleneck. We further diagnose a massive "Generalization Gap" in tree-based ensembles, which achieve more than 99% training accuracy but collapse to approximately 25% on test data, indicating reliance on lexical memorization rather than semantic inference. Synthetic data augmentation via SMOTE yields no meaningful gains, confirming that the limitation is semantic (feature ambiguity) rather than distributional. These findings indicate that for political fact-checking, increasing model complexity without incorporating external knowledge yields diminishing returns.

PathFLIP: Fine-grained Language-Image Pretraining for Versatile Computational Pathology

Dec 19, 2025

While Vision-Language Models (VLMs) have achieved notable progress in computational pathology (CPath), the gigapixel scale and spatial heterogeneity of Whole Slide Images (WSIs) continue to pose challenges for multimodal understanding. Existing alignment methods struggle to capture fine-grained correspondences between textual descriptions and visual cues across thousands of patches from a slide, compromising their performance on downstream tasks. In this paper, we propose PathFLIP (Pathology Fine-grained Language-Image Pretraining), a novel framework for holistic WSI interpretation. PathFLIP decomposes slide-level captions into region-level subcaptions and generates text-conditioned region embeddings to facilitate precise visual-language grounding. By harnessing Large Language Models (LLMs), PathFLIP can seamlessly follow diverse clinical instructions and adapt to varied diagnostic contexts. Furthermore, it exhibits versatile capabilities across multiple paradigms, efficiently handling slide-level classification and retrieval, fine-grained lesion localization, and instruction following. Extensive experiments demonstrate that PathFLIP outperforms existing large-scale pathological VLMs on four representative benchmarks while requiring significantly less training data, paving the way for fine-grained, instruction-aware WSI interpretation in clinical practice.

Image Complexity-Aware Adaptive Retrieval for Efficient Vision-Language Models

Dec 17, 2025

Vision transformers in vision-language models apply uniform computational effort across all images, expending 175.33 GFLOPs (ViT-L/14) whether analysing a straightforward product photograph or a complex street scene. We propose ICAR (Image Complexity-Aware Retrieval), which enables vision transformers to use less compute for simple images whilst processing complex images through their full network depth. The key challenge is maintaining cross-modal alignment: embeddings from different processing depths must remain compatible for text matching. ICAR solves this through dual-path training that produces compatible embeddings from both reduced-compute and full-compute processing. This maintains compatibility between image representations and text embeddings in the same semantic space, whether an image exits early or processes fully. Unlike existing two-stage approaches that require expensive reranking, ICAR enables direct image-text matching without additional overhead. To determine how much compute to use, we develop ConvNeXt-IC, which treats image complexity assessment as a classification task. By applying modern classifier backbones rather than specialised architectures, ConvNeXt-IC achieves state-of-the-art performance with 0.959 correlation with human judgement (Pearson) and 4.4x speedup. Evaluated on standard benchmarks augmented with real-world web data, ICAR achieves 20% practical speedup while maintaining category-level performance and 95% of instance-level performance, enabling sustainable scaling of vision-language systems.

Confidence-Credibility Aware Weighted Ensembles of Small LLMs Outperform Large LLMs in Emotion Detection

Dec 19, 2025

This paper introduces a confidence-weighted, credibility-aware ensemble framework for text-based emotion detection, inspired by Condorcet's Jury Theorem (CJT). Unlike conventional ensembles that often rely on homogeneous architectures, our approach combines architecturally diverse small transformer-based large language models (sLLMs) - BERT, RoBERTa, DistilBERT, DeBERTa, and ELECTRA, each fully fine-tuned for emotion classification. To preserve error diversity, we minimize parameter convergence while taking advantage of the unique biases of each model. A dual-weighted voting mechanism integrates both global credibility (validation F1 score) and local confidence (instance-level probability) to dynamically weight model contributions. Experiments on the DAIR-AI dataset demonstrate that our credibility-confidence ensemble achieves a macro F1 score of 93.5 percent, surpassing state-of-the-art benchmarks and significantly outperforming large-scale LLMs, including Falcon, Mistral, Qwen, and Phi, even after task-specific Low-Rank Adaptation (LoRA). With only 595M parameters in total, our small LLMs ensemble proves more parameter-efficient and robust than models up to 7B parameters, establishing that carefully designed ensembles of small, fine-tuned models can outperform much larger LLMs in specialized natural language processing (NLP) tasks such as emotion detection.
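The dual-weighted voting idea described above can be sketched in a few lines of Python. This is only an illustration under a stated assumption: each model's vote is weighted by the product of its global credibility (a validation F1 score) and its local confidence (its probability for the predicted label). The abstract does not give the exact combination rule, so the product, the function name, and the numbers below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One entry per model: (predicted label, instance-level confidence, validation F1).
ModelVote = Tuple[str, float, float]


def dual_weighted_vote(votes: List[ModelVote]) -> str:
    """Pick the label with the largest summed credibility * confidence weight.

    Assumption: the two weights are combined by multiplication; the paper
    may use a different rule.
    """
    if not votes:
        raise ValueError("at least one model vote is required")
    scores: Dict[str, float] = defaultdict(float)
    for label, confidence, credibility in votes:
        scores[label] += credibility * confidence
    # Ties resolve to the label that was scored first.
    return max(scores, key=scores.get)


# Two moderately confident models agree; one very confident model disagrees.
votes = [
    ("joy", 0.55, 0.90),
    ("joy", 0.60, 0.91),
    ("anger", 0.99, 0.93),
]
print(dual_weighted_vote(votes))
```

With these made-up numbers the two agreeing models outweigh the single confident dissenter, which is the behaviour a jury-style ensemble relies on. A single highly confident, highly credible model can still win against one weak vote.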

LLM-Assisted Abstract Screening with OLIVER: Evaluating Calibration and Single-Model vs. Actor-Critic Configurations in Literature Reviews

Dec 23, 2025

Introduction: Recent work suggests large language models (LLMs) can accelerate screening, but prior evaluations focus on earlier LLMs, standardized Cochrane reviews, single-model setups, and accuracy as the primary metric, leaving generalizability, configuration effects, and calibration largely unexamined. Methods: We developed OLIVER (Optimized LLM-based Inclusion and Vetting Engine for Reviews), an open-source pipeline for LLM-assisted abstract screening. We evaluated multiple contemporary LLMs across two non-Cochrane systematic reviews, and performance was assessed at both the full-text screening and final inclusion stages using accuracy, AUC, and calibration metrics. We further tested an actor-critic screening framework combining two lightweight models under three aggregation rules. Results: Across individual models, performance varied widely. In the smaller Review 1 (821 abstracts, 63 final includes), several models achieved high sensitivity for final includes but at the cost of substantial false positives and poor calibration. In the larger Review 2 (7741 abstracts, 71 final includes), most models were highly specific but struggled to recover true includes, with prompt design influencing recall. Calibration was consistently weak across single-model configurations despite high overall accuracy. Actor-critic screening improved discrimination and markedly reduced calibration error in both reviews, yielding higher AUCs. Discussion: LLMs may eventually accelerate abstract screening, but single-model performance is highly sensitive to review characteristics and prompting, and calibration is limited. An actor-critic framework improves classification quality and confidence reliability while remaining computationally efficient, enabling large-scale screening at low cost.
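The abstract refers to calibration error without naming a specific metric. One common choice is the expected calibration error (ECE) over equal-width probability bins; the sketch below shows that metric for binary include/exclude decisions. It is an assumption that something like this was used, and the function name and sample values are illustrative only.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    probs: Sequence[float], labels: Sequence[int], n_bins: int = 10
) -> float:
    """Binned ECE for binary predictions.

    probs  -- predicted probability of the positive class ("include"), in [0, 1]
    labels -- 1 for a true include, 0 otherwise
    """
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        # Equal-width bins; a probability of exactly 1.0 goes into the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))
    total = len(probs)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        mean_prob = sum(p for p, _ in bucket) / len(bucket)
        frac_positive = sum(y for _, y in bucket) / len(bucket)
        # Weight each bin's gap by the share of samples that fall into it.
        ece += (len(bucket) / total) * abs(mean_prob - frac_positive)
    return ece


# A model that says 0.9 four times but is right only three times.
print(round(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0]), 3))
```

A model can score well on accuracy and still show a large gap here, which is the pattern the abstract describes for single-model configurations.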

Activation Oracles: Training and Evaluating LLMs as General-Purpose Activation Explainers

Dec 17, 2025

Large language model (LLM) activations are notoriously difficult to understand, with most existing techniques using complex, specialized methods for interpreting them. Recent work has proposed a simpler approach known as LatentQA: training LLMs to directly accept LLM activations as inputs and answer arbitrary questions about them in natural language. However, prior work has focused on narrow task settings for both training and evaluation. In this paper, we instead take a generalist perspective. We evaluate LatentQA-trained models, which we call Activation Oracles (AOs), in far out-of-distribution settings and examine how performance scales with training data diversity. We find that AOs can recover information fine-tuned into a model (e.g., biographical knowledge or malign propensities) that does not appear in the input text, despite never being trained with activations from a fine-tuned model. Our main evaluations are four downstream tasks where we can compare to prior white- and black-box techniques. We find that even narrowly-trained LatentQA models can generalize well, and that adding additional training datasets (such as classification tasks and a self-supervised context prediction task) yields consistent further improvements. Overall, our best AOs match or exceed prior white-box baselines on all four tasks and are the best method on 3 out of 4. These results suggest that diversified training to answer natural-language queries imparts a general capability to verbalize information about LLM activations.
