facial recognition

Facial recognition is an AI-based technique for identifying or confirming an individual's identity using their face. It maps facial features from an image or video and then compares the information with a collection of known faces to find a match.
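The comparison step described above can be sketched as a nearest-neighbor search over face embeddings. This is a minimal illustration, assuming each face has already been converted to a fixed-length, nonzero vector by some model. The gallery names, the three-dimensional vectors, the `identify` helper, and the 0.8 threshold are hypothetical placeholders, not values from any particular system.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two nonzero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identify(probe, gallery, threshold=0.8):
    """Return (name, score) of the best gallery match, or (None, score)
    when even the best match falls below the (assumed) threshold."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    if best_score < threshold:
        return None, best_score
    return best_name, best_score

# Hypothetical gallery of known faces (toy 3-D embeddings).
gallery = {
    "alice": [0.9, 0.1, 0.0],
    "bob": [0.0, 1.0, 0.2],
}

match, score = identify([0.88, 0.12, 0.01], gallery)
unknown, _ = identify([0.0, 0.0, 1.0], gallery)
```

Returning no match below the threshold corresponds to identification against an open set of faces; confirming a claimed identity would instead compare the probe with a single enrolled embedding.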

Papers and Code

An Evaluation of a Visual Question Answering Strategy for Zero-shot Facial Expression Recognition in Still Images

Apr 30, 2025

Facial expression recognition (FER) is a key research area in computer vision and human-computer interaction. Despite recent advances in deep learning, challenges persist, especially in generalizing to new scenarios. In fact, the performance of state-of-the-art FER models drops significantly in zero-shot settings. To address this problem, the community has recently started to explore the integration of knowledge from Large Language Models for visual tasks. In this work, we evaluate a broad collection of locally executed Visual Language Models (VLMs), compensating for their lack of task-specific knowledge by adopting a Visual Question Answering strategy. We compare the proposed pipeline with state-of-the-art FER models, both integrating and excluding VLMs, on well-known FER benchmarks: AffectNet, FERPlus, and RAF-DB. The results show excellent performance for some VLMs in zero-shot FER scenarios, indicating the need for further exploration to improve FER generalization.

FaceShield: Explainable Face Anti-Spoofing with Multimodal Large Language Models

May 14, 2025

Face anti-spoofing (FAS) is crucial for protecting facial recognition systems from presentation attacks. Previous methods approached this task as a classification problem, lacking interpretability and reasoning behind the predicted results. Recently, multimodal large language models (MLLMs) have shown strong capabilities in perception, reasoning, and decision-making in visual tasks. However, there is currently no universal and comprehensive MLLM or dataset specifically designed for the FAS task. To address this gap, we propose FaceShield, an MLLM for FAS, along with the corresponding pre-training and supervised fine-tuning (SFT) datasets, FaceShield-pre10K and FaceShield-sft45K. FaceShield is capable of determining the authenticity of faces, identifying types of spoofing attacks, providing reasoning for its judgments, and detecting attack areas. Specifically, we employ spoof-aware vision perception (SAVP) that incorporates both the original image and auxiliary information based on prior knowledge. We then use a prompt-guided vision token masking (PVTM) strategy to randomly mask vision tokens, thereby improving the model's generalization ability. We conducted extensive experiments on three benchmark datasets, demonstrating that FaceShield significantly outperforms previous deep learning models and general MLLMs on four FAS tasks, i.e., coarse-grained classification, fine-grained classification, reasoning, and attack localization. Our instruction datasets, protocols, and code will be released soon.
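The abstract does not say how the prompt guides the masking in PVTM, so the sketch below shows only the generic idea of randomly masking a fraction of vision tokens, without any prompt guidance. It is not the authors' method. The function name `random_mask_tokens`, the `mask_ratio` parameter, and the use of `None` as a mask placeholder are all assumptions made for illustration.

```python
import random

def random_mask_tokens(tokens, mask_ratio, mask_value=None, seed=None):
    """Replace a uniformly random subset of tokens with mask_value.

    tokens:     sequence of vision tokens (any objects)
    mask_ratio: fraction of tokens to mask, in [0, 1] (assumed parameter)
    Returns (masked_tokens, sorted_masked_indices).
    """
    rng = random.Random(seed)
    n_mask = int(len(tokens) * mask_ratio)
    masked_idx = set(rng.sample(range(len(tokens)), n_mask))
    masked = [mask_value if i in masked_idx else t
              for i, t in enumerate(tokens)]
    return masked, sorted(masked_idx)

# Toy example: ten integer "tokens", 30% of them masked.
tokens = list(range(10))
masked, idx = random_mask_tokens(tokens, mask_ratio=0.3, seed=0)
```

A real model would typically replace masked positions with a learned mask embedding or drop them from the sequence; `None` here is only a stand-in.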

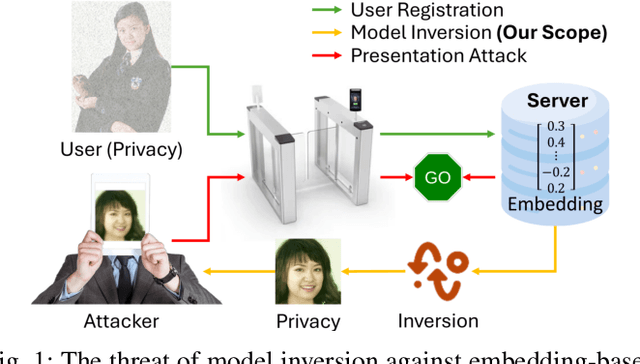

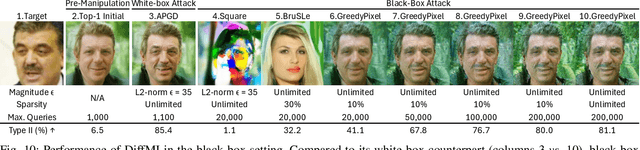

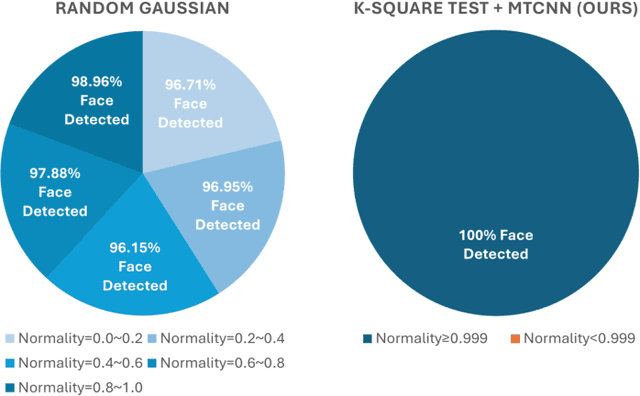

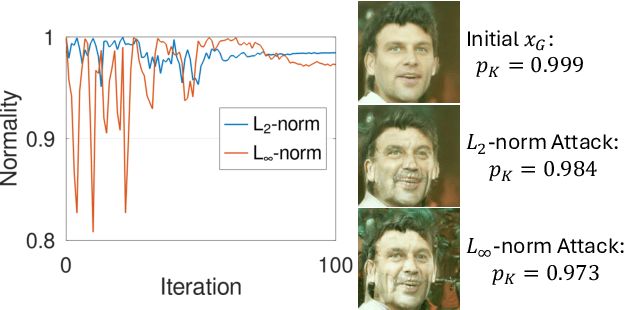

Diffusion-Driven Universal Model Inversion Attack for Face Recognition

Apr 25, 2025

Facial recognition technology poses significant privacy risks, as it relies on biometric data that is inherently sensitive and immutable if compromised. To mitigate these concerns, face recognition systems convert raw images into embeddings, traditionally considered privacy-preserving. However, model inversion attacks pose a significant privacy threat by reconstructing these private facial images, making them a crucial tool for evaluating the privacy risks of face recognition systems. Existing methods usually require training individual generators for each target model, a computationally expensive process. In this paper, we propose DiffUMI, a training-free diffusion-driven universal model inversion attack for face recognition systems. DiffUMI is the first approach to apply a diffusion model for unconditional image generation in model inversion. Unlike other methods, DiffUMI is universal, eliminating the need for training target-specific generators. It operates within a fixed framework and pretrained diffusion model while seamlessly adapting to diverse target identities and models. DiffUMI breaches privacy-preserving face recognition systems with state-of-the-art success, demonstrating that an unconditional diffusion model, coupled with optimized adversarial search, enables efficient and high-fidelity facial reconstruction. Additionally, we introduce a novel application of out-of-domain detection (OODD), marking the first use of model inversion to distinguish non-face inputs from face inputs based solely on embeddings.

Foundation Artificial Intelligence Models for Health Recognition Using Face Photographs (FAHR-Face)

Jun 17, 2025

Background: Facial appearance offers a noninvasive window into health. We built FAHR-Face, a foundation model trained on >40 million facial images, and fine-tuned it for two distinct tasks: biological age estimation (FAHR-FaceAge) and survival risk prediction (FAHR-FaceSurvival). Methods: FAHR-FaceAge underwent a two-stage, age-balanced fine-tuning on 749,935 public images; FAHR-FaceSurvival was fine-tuned on 34,389 photos of cancer patients. Model robustness (cosmetic surgery, makeup, pose, lighting) and independence (saliency mapping) were tested extensively. Both models were clinically tested in two independent cancer patient datasets, with survival analyzed by multivariable Cox models and adjusted for clinical prognostic factors. Findings: For age estimation, FAHR-FaceAge had the lowest mean absolute error of 5.1 years on public datasets, outperforming benchmark models and maintaining accuracy across the full human lifespan. In cancer patients, FAHR-FaceAge outperformed a prior facial age estimation model in survival prognostication. FAHR-FaceSurvival demonstrated robust prediction of mortality, and the highest-risk quartile had more than triple the mortality of the lowest (adjusted hazard ratio 3.22; P<0.001). These findings were validated in the independent cohort, and both models showed generalizability across age, sex, race and cancer subgroups. The two algorithms provided distinct, complementary prognostic information; saliency mapping revealed that each model relied on distinct facial regions. The combination of FAHR-FaceAge and FAHR-FaceSurvival improved prognostic accuracy. Interpretation: A single foundation model can generate inexpensive, scalable facial biomarkers that capture both biological ageing and disease-related mortality risk. The foundation model enabled effective training using relatively small clinical datasets.

The Invisible Threat: Evaluating the Vulnerability of Cross-Spectral Face Recognition to Presentation Attacks

May 01, 2025

Cross-spectral face recognition systems are designed to enhance the performance of facial recognition systems by enabling cross-modal matching under challenging operational conditions. A particularly relevant application is the matching of near-infrared (NIR) images to visible-spectrum (VIS) images, enabling the verification of individuals by comparing acquired NIR facial captures with VIS reference images. The use of NIR imaging offers several advantages, including greater robustness to illumination variations, better visibility through glasses and glare, and greater resistance to presentation attacks. Despite these claimed benefits, the robustness of NIR-based systems against presentation attacks has not been systematically studied in the literature. In this work, we conduct a comprehensive evaluation of the vulnerability of NIR-VIS cross-spectral face recognition systems to presentation attacks. Our empirical findings indicate that, although these systems exhibit a certain degree of reliability, they remain vulnerable to specific attacks, emphasizing the need for further research in this area.

Denoising and Alignment: Rethinking Domain Generalization for Multimodal Face Anti-Spoofing

May 14, 2025

Face Anti-Spoofing (FAS) is essential for the security of facial recognition systems in diverse scenarios such as payment processing and surveillance. Current multimodal FAS methods often struggle with effective generalization, mainly due to modality-specific biases and domain shifts. To address these challenges, we introduce the Multimodal Denoising and Alignment (MMDA) framework. By leveraging the zero-shot generalization capability of CLIP, the MMDA framework effectively suppresses noise in multimodal data through denoising and alignment mechanisms, thereby significantly enhancing the generalization performance of cross-modal alignment. The Modality-Domain Joint Differential Attention (MD2A) module in MMDA concurrently mitigates the impacts of domain and modality noise by refining the attention mechanism based on extracted common noise features. Furthermore, the Representation Space Soft (RS2) Alignment strategy utilizes the pre-trained CLIP model to align multi-domain multimodal data into a generalized representation space in a flexible manner, preserving intricate representations and enhancing the model's adaptability to various unseen conditions. We also design a U-shaped Dual Space Adaptation (U-DSA) module to enhance the adaptability of representations while maintaining generalization performance. These improvements not only enhance the framework's generalization capabilities but also boost its ability to represent complex representations. Our experimental results on four benchmark datasets under different evaluation protocols demonstrate that the MMDA framework outperforms existing state-of-the-art methods in terms of cross-domain generalization and multimodal detection accuracy. The code will be released soon.

Longitudinal Study of Facial Biometrics at the BEZ: Temporal Variance Analysis

Jul 09, 2025

This study presents findings from long-term biometric evaluations conducted at the Biometric Evaluation Center (bez). Over the course of two and a half years of ongoing research, more than 400 participants representing diverse ethnicities, genders, and age groups were regularly assessed using a variety of biometric tools and techniques at the controlled testing facilities. Our findings are based on the General Data Protection Regulation-compliant local bez database, which holds more than 238,000 biometric data sets categorized into multiple biometric modalities such as face and finger. We used state-of-the-art face recognition algorithms to analyze long-term comparison scores. Our results show that these scores fluctuate more significantly between individual days than over the entire measurement period. These findings highlight the importance of testing biometric characteristics of the same individuals over a longer period of time in a controlled measurement environment and lay the groundwork for future advancements in biometric data analysis.

A Visual Self-attention Mechanism Facial Expression Recognition Network beyond Convnext

Apr 12, 2025

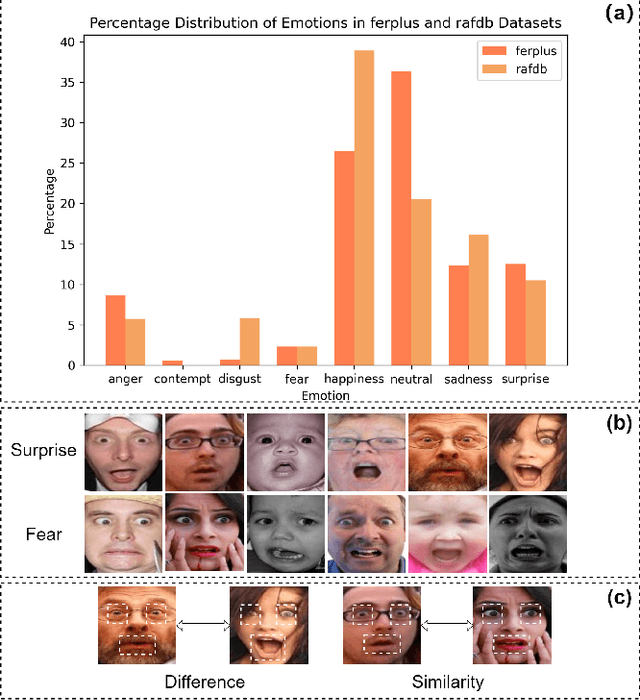

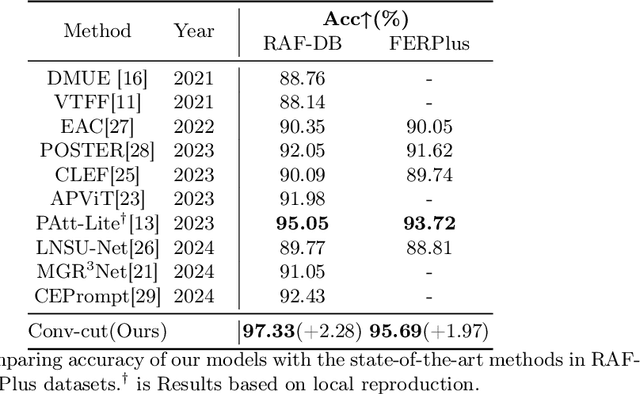

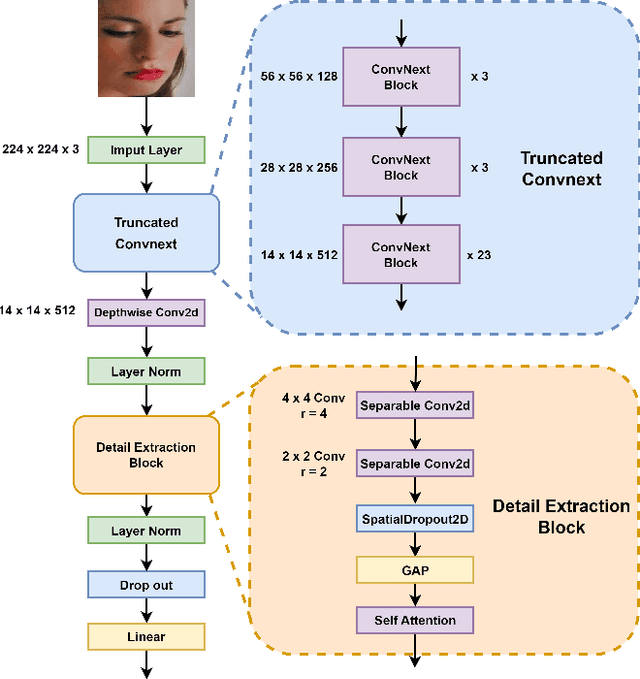

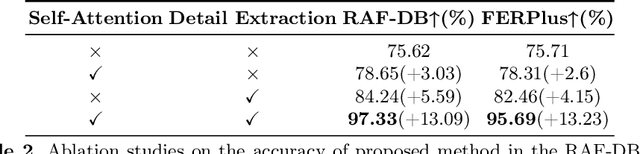

Facial expression recognition is an important research direction in the field of artificial intelligence. Although new breakthroughs have been made in recent years, the uneven distribution of datasets, the similarity between different categories of facial expressions, and the differences within the same category among different subjects remain challenges. This paper proposes a visual facial expression signal feature processing network based on a truncated ConvNeXt approach (Conv-cut) to improve the accuracy of FER under challenging conditions. The network uses a truncated ConvNeXt-Base as the feature extractor, a Detail Extraction Block to extract detailed features, and a Self-Attention mechanism that enables the network to learn the extracted features more effectively. To evaluate the proposed Conv-cut approach, we conducted experiments on the RAF-DB and FERPlus datasets, and the results show that our model achieves state-of-the-art performance. Our code is available on GitHub.

Achieving 3D Attention via Triplet Squeeze and Excitation Block

May 09, 2025

The emergence of ConvNeXt and its variants has reaffirmed the conceptual and structural suitability of CNN-based models for vision tasks, re-establishing them as key players in image classification in general, and in facial expression recognition (FER) in particular. In this paper, we propose a new set of models that build on these advancements by incorporating a new set of attention mechanisms that combine Triplet attention with Squeeze-and-Excitation (TripSE) in four different variants. We demonstrate the effectiveness of these variants by applying them to the ResNet18, DenseNet and ConvNeXt architectures to validate their versatility and impact. Our study shows that incorporating a TripSE block in these CNN models boosts their performance, particularly for the ConvNeXt architecture, indicating its utility. We evaluate the proposed mechanisms and associated models across four datasets, namely CIFAR100, ImageNet, FER2013 and AffectNet, where ConvNeXt with TripSE achieves state-of-the-art results with an accuracy of 78.27% on the popular FER2013 dataset, a new feat for this dataset.
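For readers unfamiliar with the Squeeze-and-Excitation component named in this abstract, the following is a minimal sketch of a plain SE block only. It does not include Triplet attention and is not the TripSE design, whose details the abstract does not give. The function name `squeeze_excite`, the nested-list tensor layout, and the bias-free weight matrices `w1` and `w2` are assumptions chosen to keep the example dependency-free.

```python
import math

def squeeze_excite(feature_map, w1, w2):
    """Plain Squeeze-and-Excitation over a C x H x W nested list.

    w1: (C/r) x C weights of the reduction layer (assumed, no bias)
    w2: C x (C/r) weights of the expansion layer (assumed, no bias)
    Returns (rescaled_feature_map, per_channel_gates).
    """
    # Squeeze: global average pooling gives one scalar per channel.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation: linear -> ReLU -> linear -> sigmoid.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))
              for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [[[value * gate for value in row] for row in channel]
              for channel, gate in zip(feature_map, gates)]
    return scaled, gates

# Toy input: 2 channels of 2 x 2, with all-zero weights so each gate is 0.5.
fm = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
scaled, gates = squeeze_excite(fm, w1=[[0.0, 0.0]], w2=[[0.0], [0.0]])
```

With zero weights the sigmoid outputs 0.5 for every channel, so each channel is simply halved; trained weights would instead emphasize some channels over others.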

ID-EA: Identity-driven Text Enhancement and Adaptation with Textual Inversion for Personalized Text-to-Image Generation

Jul 16, 2025

Recently, personalized portrait generation with a text-to-image diffusion model has significantly advanced with Textual Inversion, emerging as a promising approach for creating high-fidelity personalized images. Despite its potential, current Textual Inversion methods struggle to maintain consistent facial identity due to semantic misalignments between textual and visual embedding spaces regarding identity. We introduce ID-EA, a novel framework that guides text embeddings to align with visual identity embeddings, thereby improving identity preservation in personalized generation. ID-EA comprises two key components: the ID-driven Enhancer (ID-Enhancer) and the ID-conditioned Adapter (ID-Adapter). First, the ID-Enhancer integrates identity embeddings with a textual ID anchor, refining visual identity embeddings derived from a face recognition model using representative text embeddings. Then, the ID-Adapter leverages the identity-enhanced embedding to adapt the text condition, ensuring identity preservation by adjusting the cross-attention module in the pre-trained UNet model. This process encourages the text features to find the most related visual clues across the foreground snippets. Extensive quantitative and qualitative evaluations demonstrate that ID-EA substantially outperforms state-of-the-art methods in identity preservation metrics while achieving remarkable computational efficiency, generating personalized portraits approximately 15 times faster than existing approaches.
