Zhaoyang Jia

Efficient Autoregressive Video Diffusion with Dummy Head

Jan 28, 2026

Abstract:The autoregressive video diffusion model has recently gained considerable research interest due to its causal modeling and iterative denoising. In this work, we identify that the multi-head self-attention in these models under-utilizes historical frames: approximately 25% of heads attend almost exclusively to the current frame, and discarding their KV caches incurs only minor performance degradation. Building upon this, we propose Dummy Forcing, a simple yet effective method to control context accessibility across different heads. Specifically, the proposed heterogeneous memory allocation reduces head-wise context redundancy, accompanied by dynamic head programming to adaptively classify head types. Moreover, we develop a context packing technique to achieve more aggressive cache compression. Without additional training, our Dummy Forcing delivers up to 2.0x speedup over the baseline, supporting video generation at 24.3 FPS with less than 0.5% quality drop. The project page is available at https://csguoh.github.io/project/DummyForcing/.
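The observation that some heads attend almost exclusively to the current frame can be illustrated with a small sketch. This is not the paper's dynamic head programming, which the abstract does not specify; the function names, the 0.95 threshold, and the toy attention logits are all assumptions made for illustration.

```python
import math

def softmax(row):
    """Numerically stable softmax over one row of attention logits."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def current_frame_mass(scores, num_current):
    """Average attention probability placed on the last `num_current` keys.

    `scores` is a list of rows (one per query) of raw attention logits,
    with history keys first and current-frame keys last (assumed layout).
    """
    masses = [sum(softmax(row)[-num_current:]) for row in scores]
    return sum(masses) / len(masses)

def find_current_only_heads(per_head_scores, num_current, threshold=0.95):
    """Return indices of heads that almost ignore history (hypothetical rule)."""
    return [
        h for h, scores in enumerate(per_head_scores)
        if current_frame_mass(scores, num_current) >= threshold
    ]

# Toy example: 2 history keys followed by 2 current-frame keys.
head_0 = [[-10.0, -10.0, 0.0, 0.0]]  # nearly all mass on the current frame
head_1 = [[0.0, 0.0, 0.0, 0.0]]      # mass spread evenly over all keys
candidates = find_current_only_heads([head_0, head_1], num_current=2)
```

Heads returned by such a rule would be the candidates whose historical KV cache could be dropped; how the actual method allocates memory per head is described in the paper, not here.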

Single-step Diffusion-based Video Coding with Semantic-Temporal Guidance

Dec 08, 2025

Abstract:While traditional and neural video codecs (NVCs) have achieved remarkable rate-distortion performance, improving perceptual quality at low bitrates remains challenging. Some NVCs incorporate perceptual or adversarial objectives but still suffer from artifacts due to limited generation capacity, whereas others leverage pretrained diffusion models to improve quality at the cost of heavy sampling complexity. To overcome these challenges, we propose S2VC, a Single-Step diffusion-based Video Codec that integrates a conditional coding framework with an efficient single-step diffusion generator, enabling realistic reconstruction at low bitrates with reduced sampling cost. Recognizing the importance of semantic conditioning in single-step diffusion, we introduce Contextual Semantic Guidance to extract frame-adaptive semantics from buffered features. It replaces text captions with efficient, fine-grained conditioning, thereby improving generation realism. In addition, Temporal Consistency Guidance is incorporated into the diffusion U-Net to enforce temporal coherence across frames and ensure stable generation. Extensive experiments show that S2VC delivers state-of-the-art perceptual quality with an average 52.73% bitrate saving over prior perceptual methods, underscoring the promise of single-step diffusion for efficient, high-quality video compression.

Deep Video Discovery: Agentic Search with Tool Use for Long-form Video Understanding

May 23, 2025

Abstract:Long-form video understanding presents significant challenges due to extensive temporal-spatial complexity and the difficulty of question answering under such extended contexts. While Large Language Models (LLMs) have demonstrated considerable advancements in video analysis capabilities and long context handling, they continue to exhibit limitations when processing information-dense hour-long videos. To overcome such limitations, we propose the Deep Video Discovery agent to leverage an agentic search strategy over segmented video clips. Unlike previous video agents that rely on manually designed, rigid workflows, our approach emphasizes the autonomous nature of agents. By providing a set of search-centric tools on a multi-granular video database, our DVD agent leverages the advanced reasoning capability of the LLM to plan on its current observation state, strategically select tools, formulate appropriate parameters for actions, and iteratively refine its internal reasoning in light of the gathered information. We perform a comprehensive evaluation on multiple long video understanding benchmarks, which demonstrates the advantage of the entire system design. Our DVD agent achieves SOTA performance, surpassing prior works by a large margin on the challenging LVBench dataset. Comprehensive ablation studies and in-depth tool analyses are also provided, yielding insights to further advance intelligent agents tailored for long-form video understanding tasks. The code will be released later.

One-Step Diffusion-Based Image Compression with Semantic Distillation

May 22, 2025

Abstract:While recent diffusion-based generative image codecs have shown impressive performance, their iterative sampling process introduces undesirable latency. In this work, we revisit the design of a diffusion-based codec and argue that multi-step sampling is not necessary for generative compression. Based on this insight, we propose OneDC, a One-step Diffusion-based generative image Codec that integrates a latent compression module with a one-step diffusion generator. Recognizing the critical role of semantic guidance in one-step diffusion, we propose using the hyperprior as a semantic signal, overcoming the limitations of text prompts in representing complex visual content. To further enhance the semantic capability of the hyperprior, we introduce a semantic distillation mechanism that transfers knowledge from a pretrained generative tokenizer to the hyperprior codec. Additionally, we adopt a hybrid pixel- and latent-domain optimization to jointly enhance both reconstruction fidelity and perceptual realism. Extensive experiments demonstrate that OneDC achieves SOTA perceptual quality even with one-step generation, offering over 40% bitrate reduction and 20x faster decoding compared to prior multi-step diffusion-based codecs. Code will be released later.

Generative Latent Coding for Ultra-Low Bitrate Image and Video Compression

May 22, 2025

Abstract:Most existing approaches for image and video compression perform transform coding in the pixel space to reduce redundancy. However, due to the misalignment between pixel-space distortion and human perception, such schemes often have difficulty achieving both high realism and high fidelity at ultra-low bitrates. To solve this problem, we propose Generative Latent Coding (GLC) models for image and video compression, termed GLC-image and GLC-video. The transform coding of GLC is conducted in the latent space of a generative vector-quantized variational auto-encoder (VQ-VAE). Compared to the pixel space, such a latent space offers greater sparsity, richer semantics and better alignment with human perception, and shows its advantages in achieving high-realism and high-fidelity compression. To further enhance performance, we improve the hyper prior by introducing a spatial categorical hyper module in GLC-image and a spatio-temporal categorical hyper module in GLC-video. Additionally, a code-prediction-based loss function is proposed to enhance the semantic consistency. Experiments demonstrate that our scheme shows high visual quality at ultra-low bitrates for both image and video compression. For image compression, GLC-image achieves an impressive bitrate of less than 0.04 bpp, reaching the same FID as the previous SOTA model MS-ILLM while using 45% fewer bits on the CLIC 2020 test set. For video compression, GLC-video achieves 65.3% bitrate saving over PLVC in terms of DISTS.
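To give a feel for what a bitrate near 0.04 bpp means, the following sketch computes bits per pixel and a relative bitrate saving. The helper names, the 768x512 image size, and the byte counts are hypothetical values chosen for the arithmetic; they are not taken from the paper's experiments.

```python
def bits_per_pixel(num_bytes, width, height):
    """Bits per pixel of a compressed file for an image of the given size."""
    return num_bytes * 8 / (width * height)

def bitrate_saving(bpp_ours, bpp_reference):
    """Fraction of bits saved relative to a reference codec at equal quality."""
    return 1.0 - bpp_ours / bpp_reference

# Hypothetical example: a 768x512 image stored in 1966 bytes.
bpp = bits_per_pixel(1966, 768, 512)   # just under 0.04 bpp

# Hypothetical example: matching a reference codec's quality at 0.022 bpp
# instead of 0.04 bpp corresponds to a 45% saving.
saving = bitrate_saving(0.022, 0.04)
```

At this rate an entire 768x512 image occupies under 2 KB, which is why pixel-space fidelity is hard to preserve and a generative latent space becomes attractive.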

DLF: Extreme Image Compression with Dual-generative Latent Fusion

Mar 03, 2025

Abstract:Recent studies in extreme image compression have achieved remarkable performance by compressing the tokens from generative tokenizers. However, these methods often prioritize clustering common semantics within the dataset, while overlooking the diverse details of individual objects. Consequently, this results in suboptimal reconstruction fidelity, especially at low bitrates. To address this issue, we introduce a Dual-generative Latent Fusion (DLF) paradigm. DLF decomposes the latent into semantic and detail elements, compressing them through two distinct branches. The semantic branch clusters high-level information into compact tokens, while the detail branch encodes perceptually critical details to enhance the overall fidelity. Additionally, we propose a cross-branch interactive design to reduce redundancy between the two branches, thereby minimizing the overall bit cost. Experimental results demonstrate the impressive reconstruction quality of DLF even below 0.01 bits per pixel (bpp). On the CLIC2020 test set, our method achieves bitrate savings of up to 27.93% on LPIPS and 53.55% on DISTS compared to MS-ILLM. Furthermore, DLF surpasses recent diffusion-based codecs in visual fidelity while maintaining a comparable level of generative realism. Code will be available later.

Towards Practical Real-Time Neural Video Compression

Feb 28, 2025

Abstract:We introduce a practical real-time neural video codec (NVC) designed to deliver a high compression ratio, low latency and broad versatility. In practice, the coding speed of NVCs depends on 1) computational costs, and 2) non-computational operational costs, such as memory I/O and the number of function calls. While most efficient NVCs prioritize reducing computational cost, we identify operational cost as the primary bottleneck to achieving higher coding speed. Leveraging this insight, we introduce a set of efficiency-driven design improvements focused on minimizing operational costs. Specifically, we employ implicit temporal modeling to eliminate complex explicit motion modules, and use single low-resolution latent representations rather than progressive downsampling. These innovations significantly accelerate NVC without sacrificing compression quality. Additionally, we implement model integerization for consistent cross-device coding and a module-bank-based rate control scheme to improve practical adaptability. Experiments show our proposed DCVC-RT achieves an impressive average encoding/decoding speed of 125.2/112.8 fps (frames per second) for 1080p video, while saving an average of 21% in bitrate compared to H.266/VTM. The code is available at https://github.com/microsoft/DCVC.
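The reported frame rates translate directly into a per-frame time budget. The sketch below only performs that conversion; the function name and the 60 fps real-time target are assumptions for illustration, not figures from the paper.

```python
def frame_time_ms(fps):
    """Average time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

encode_ms = frame_time_ms(125.2)   # about 7.99 ms per encoded frame
decode_ms = frame_time_ms(112.8)   # about 8.87 ms per decoded frame

# Assumed real-time target of 60 fps, i.e. a budget of about 16.67 ms per frame.
budget_ms = frame_time_ms(60.0)
meets_target = encode_ms <= budget_ms and decode_ms <= budget_ms
```

With under 9 ms per frame, fixed overheads such as memory I/O and kernel launches take up a meaningful share of the budget, which is consistent with the abstract's emphasis on operational cost.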

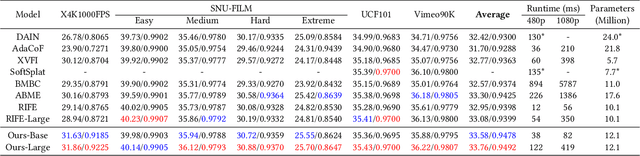

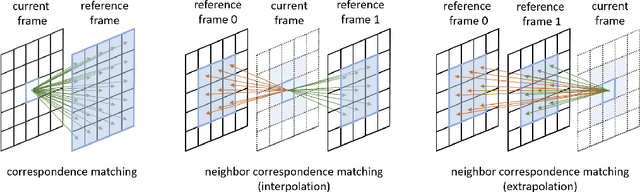

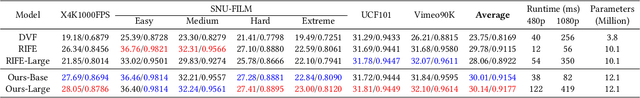

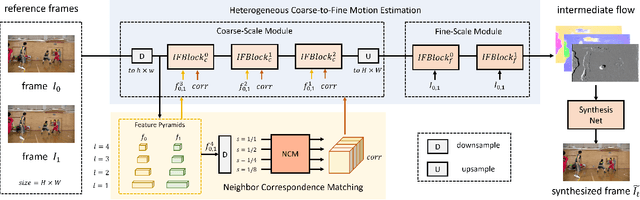

Neighbor Correspondence Matching for Flow-based Video Frame Synthesis

Jul 14, 2022

Abstract:Video frame synthesis, which consists of interpolation and extrapolation, is an essential video processing technique that can be applied to various scenarios. However, most existing methods cannot handle small objects or large motion well, especially in high-resolution videos such as 4K videos. To eliminate such limitations, we introduce a neighbor correspondence matching (NCM) algorithm for flow-based frame synthesis. Since the current frame is not available in video frame synthesis, NCM is performed in a current-frame-agnostic fashion to establish multi-scale correspondences in the spatial-temporal neighborhoods of each pixel. Based on the powerful motion representation capability of NCM, we further propose to estimate intermediate flows for frame synthesis in a heterogeneous coarse-to-fine scheme. Specifically, the coarse-scale module is designed to leverage neighbor correspondences to capture large motion, while the fine-scale module is more computationally efficient to speed up the estimation process. Both modules are trained progressively to eliminate the resolution gap between training dataset and real-world videos. Experimental results show that NCM achieves state-of-the-art performance on several benchmarks. In addition, NCM can be applied to various practical scenarios such as video compression to achieve better performance.

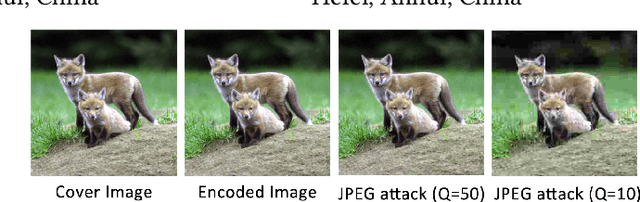

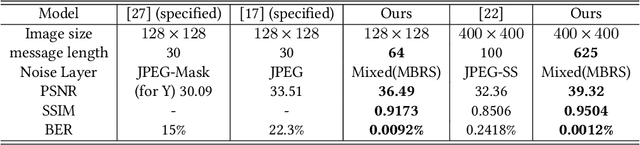

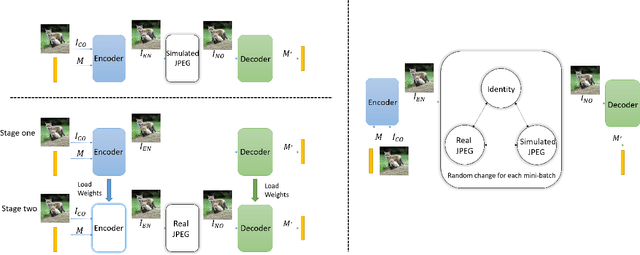

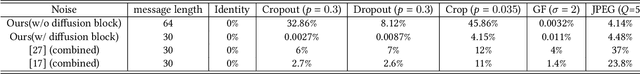

MBRS: Enhancing Robustness of DNN-based Watermarking by Mini-Batch of Real and Simulated JPEG Compression

Aug 18, 2021

Abstract:Owing to the powerful feature extraction ability of deep learning architectures, deep-learning-based watermarking algorithms have recently been widely studied. The basic framework of such algorithms is an auto-encoder-like end-to-end architecture with an encoder, a noise layer and a decoder. The key to guaranteeing robustness is adversarial training with a differentiable noise layer. However, we found that none of the existing frameworks can adequately ensure robustness against JPEG compression, which is non-differentiable but is an essential image processing operation. To address this limitation, we propose a novel end-to-end training architecture, which utilizes a Mini-Batch of Real and Simulated JPEG compression (MBRS) to enhance JPEG robustness. Specifically, for different mini-batches, we randomly choose one of real JPEG, simulated JPEG and a noise-free layer as the noise layer. In addition, we suggest utilizing Squeeze-and-Excitation blocks, which can learn better features in the embedding and extracting stages, and propose a "message processor" to expand the message in a more appropriate way. Meanwhile, to improve robustness against crop attacks, we add an additive diffusion block to the network. Extensive experimental results demonstrate the superior performance of the proposed scheme compared with state-of-the-art algorithms. Under JPEG compression with quality factor Q=50, our models achieve a bit error rate of less than 0.01% for extracted messages, with PSNR larger than 36 for the encoded images, which shows well-enhanced robustness against JPEG attacks. Moreover, under many other distortions such as Gaussian filter, crop, cropout and dropout, the proposed framework also obtains strong robustness. The code, implemented in PyTorch, is available at https://github.com/jzyustc/MBRS.
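The per-mini-batch choice of noise layer and the bit error rate metric can be sketched as follows. This is a minimal illustration in plain Python rather than the released PyTorch code; the layer labels, function names, and the toy message are assumptions, and the actual JPEG operations are left out.

```python
import random

# Labels for the three options named in the abstract (the strings are hypothetical).
NOISE_LAYERS = ("real_jpeg", "simulated_jpeg", "identity")

def pick_noise_layer(rng):
    """Choose one noise layer uniformly at random for the next mini-batch."""
    return rng.choice(NOISE_LAYERS)

def bit_error_rate(sent_bits, received_bits):
    """Fraction of message bits that differ after extraction."""
    if len(sent_bits) != len(received_bits):
        raise ValueError("messages must have the same length")
    errors = sum(a != b for a, b in zip(sent_bits, received_bits))
    return errors / len(sent_bits)

rng = random.Random(0)  # seeded only so the sketch is reproducible
schedule = [pick_noise_layer(rng) for _ in range(6)]  # one choice per mini-batch

# Toy message with one flipped bit out of four.
ber = bit_error_rate([1, 0, 1, 1], [1, 0, 0, 1])
```

Mixing the three options across mini-batches lets gradients flow through the simulated and noise-free paths while still exposing the decoder to real, non-differentiable JPEG output.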

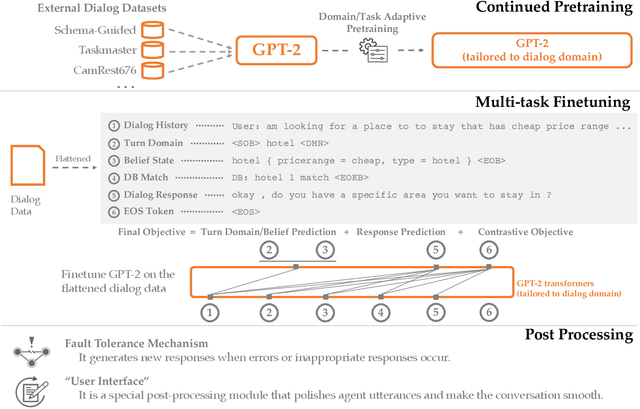

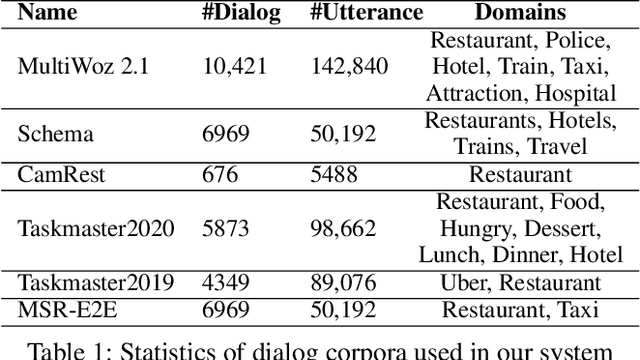

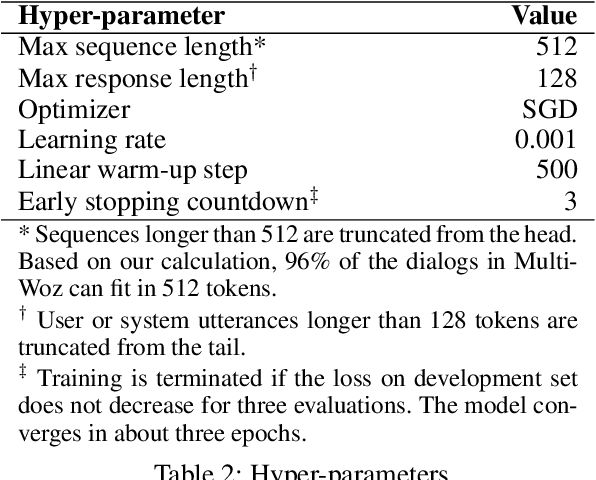

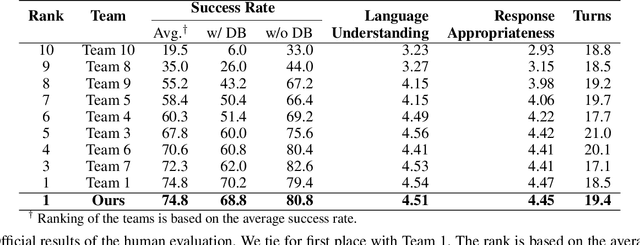

A Hybrid Task-Oriented Dialog System with Domain and Task Adaptive Pretraining

Feb 08, 2021

Abstract:This paper describes our submission for the End-to-end Multi-domain Task Completion Dialog shared task at the 9th Dialog System Technology Challenge (DSTC-9). Participants in the shared task build an end-to-end task completion dialog system, which is evaluated by human evaluation and by automatic evaluation based on a user simulator. Unlike traditional pipelined approaches, where modules are optimized individually and suffer from cascading failure, we propose an end-to-end dialog system that 1) uses Generative Pretraining 2 (GPT-2) as the backbone to jointly solve Natural Language Understanding, Dialog State Tracking, and Natural Language Generation tasks, 2) adopts Domain and Task Adaptive Pretraining to tailor GPT-2 to the dialog domain before finetuning, 3) utilizes heuristic pre/post-processing rules that greatly simplify the prediction tasks and improve generalizability, and 4) incorporates a fault tolerance module to correct errors and inappropriate responses. Our proposed method significantly outperforms baselines and ties for first place in the official evaluation. We make our source code publicly available.
