Yuan Sun

UIKA: Fast Universal Head Avatar from Pose-Free Images

Jan 12, 2026

Abstract: We present UIKA, a feed-forward animatable Gaussian head model from an arbitrary number of unposed inputs, including a single image, multi-view captures, and smartphone-captured videos. Unlike the traditional avatar method, which requires a studio-level multi-view capture system and reconstructs a human-specific model through a long-time optimization process, we rethink the task through the lenses of model representation, network design, and data preparation. First, we introduce a UV-guided avatar modeling strategy, in which each input image is associated with a pixel-wise facial correspondence estimation. Such correspondence estimation allows us to reproject each valid pixel color from screen space to UV space, which is independent of camera pose and character expression. Furthermore, we design learnable UV tokens on which the attention mechanism can be applied at both the screen and UV levels. The learned UV tokens can be decoded into canonical Gaussian attributes using aggregated UV information from all input views. To train our large avatar model, we additionally prepare a large-scale, identity-rich synthetic training dataset. Our method significantly outperforms existing approaches in both monocular and multi-view settings. Project page: https://zijian-wu.github.io/uika-page/
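
The screen-to-UV reprojection described above can be illustrated with a small sketch. It is not the UIKA implementation: the function name `reproject_to_uv`, the `(u, v, color)` sample format, and the plain per-texel averaging are all assumptions made for illustration (the abstract describes learned UV tokens and attention for aggregation, not averaging).

```python
def reproject_to_uv(samples, size):
    """Scatter per-pixel colors into a size x size UV texture.

    samples: iterable of (u, v, (r, g, b)), where (u, v) is the estimated
             facial correspondence of a screen pixel (hypothetical format).
    Returns a grid of averaged colors; texels that received no pixel are None.
    """
    sums = [[[0.0, 0.0, 0.0] for _ in range(size)] for _ in range(size)]
    counts = [[0] * size for _ in range(size)]
    for u, v, color in samples:
        # Pixels outside [0, 1) are treated as having no valid correspondence.
        if not (0.0 <= u < 1.0 and 0.0 <= v < 1.0):
            continue
        col, row = int(u * size), int(v * size)
        for c in range(3):
            sums[row][col][c] += color[c]
        counts[row][col] += 1
    # Average all contributions (from any view) that landed on the same texel.
    return [
        [
            [s / counts[r][c] for s in sums[r][c]] if counts[r][c] else None
            for c in range(size)
        ]
        for r in range(size)
    ]


# Pixels from any number of views can be pooled, since UV space does not
# depend on camera pose or expression.
texture = reproject_to_uv(
    [
        (0.1, 0.1, (1.0, 0.0, 0.0)),
        (0.2, 0.2, (0.0, 0.0, 1.0)),
        (0.9, 0.9, (0.0, 1.0, 0.0)),
        (1.5, 0.2, (1.0, 1.0, 1.0)),  # invalid correspondence, skipped
    ],
    size=2,
)
```

Because the texture is indexed by facial correspondence rather than by image coordinates, adding more input views only adds more samples to the same grid.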

HeadLighter: Disentangling Illumination in Generative 3D Gaussian Heads via Lightstage Captures

Jan 05, 2026

Abstract: Recent 3D-aware head generative models based on 3D Gaussian Splatting achieve real-time, photorealistic and view-consistent head synthesis. However, a fundamental limitation persists: the deep entanglement of illumination and intrinsic appearance prevents controllable relighting. Existing disentanglement methods rely on strong assumptions to enable weakly supervised learning, which restricts their capacity for complex illumination. To address this challenge, we introduce HeadLighter, a novel supervised framework that learns a physically plausible decomposition of appearance and illumination in head generative models. Specifically, we design a dual-branch architecture that separately models lighting-invariant head attributes and physically grounded rendering components. A progressive disentanglement training is employed to gradually inject head appearance priors into the generative architecture, supervised by multi-view images captured under controlled light conditions with a light stage setup. We further introduce a distillation strategy to generate high-quality normals for realistic rendering. Experiments demonstrate that our method preserves high-quality generation and real-time rendering, while simultaneously supporting explicit lighting and viewpoint editing. We will publicly release our code and dataset.

RelayGR: Scaling Long-Sequence Generative Recommendation via Cross-Stage Relay-Race Inference

Jan 05, 2026

Abstract: Real-time recommender systems execute multi-stage cascades (retrieval, pre-processing, fine-grained ranking) under strict tail-latency SLOs, leaving only tens of milliseconds for ranking. Generative recommendation (GR) models can improve quality by consuming long user-behavior sequences, but in production their online sequence length is tightly capped by the ranking-stage P99 budget. We observe that the majority of GR tokens encode user behaviors that are independent of the item candidates, suggesting an opportunity to pre-infer a user-behavior prefix once and reuse it during ranking rather than recomputing it on the critical path. Realizing this idea at industrial scale is non-trivial: the prefix cache must survive across multiple pipeline stages before the final ranking instance is determined, the user population implies cache footprints far beyond a single device, and indiscriminate pre-inference would overload shared resources under high QPS. We present RelayGR, a production system that enables in-HBM relay-race inference for GR. RelayGR selectively pre-infers long-term user prefixes, keeps their KV caches resident in HBM over the request lifecycle, and ensures the subsequent ranking can consume them without remote fetches. RelayGR combines three techniques: 1) a sequence-aware trigger that admits only at-risk requests under a bounded cache footprint and pre-inference load, 2) an affinity-aware router that co-locates cache production and consumption by routing both the auxiliary pre-infer signal and the ranking request to the same instance, and 3) a memory-aware expander that uses server-local DRAM to capture short-term cross-request reuse while avoiding redundant reloads. We implement RelayGR on Huawei Ascend NPUs and evaluate it with real queries. Under a fixed P99 SLO, RelayGR supports up to 1.5$\times$ longer sequences and improves SLO-compliant throughput by up to 3.6$\times$.
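
A toy sketch of the first two techniques (trigger and affinity-aware routing) may help make the idea concrete. Everything here is hypothetical: the names `LONG_SEQ_THRESHOLD`, `pick_instance`, `RankingInstance`, `maybe_pre_infer`, and `rank`, the hash-based placement, and the dictionary that stands in for an HBM-resident KV cache are assumptions, not the RelayGR design.

```python
import hashlib

LONG_SEQ_THRESHOLD = 512  # hypothetical cutoff for an "at-risk" long sequence


def pick_instance(user_id: str, num_instances: int) -> int:
    """Stable user-to-instance mapping (assumed policy: hash modulo)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_instances


class RankingInstance:
    def __init__(self) -> None:
        # Stand-in for prefix KV caches kept resident in device memory.
        self.prefix_cache = {}

    def pre_infer(self, user_id, behaviors):
        # A real system would run the model over the prefix; we store a marker.
        self.prefix_cache[user_id] = len(behaviors)

    def rank(self, user_id, candidates):
        return {
            "cache_hit": user_id in self.prefix_cache,
            "num_candidates": len(candidates),
        }


INSTANCES = [RankingInstance() for _ in range(4)]


def maybe_pre_infer(user_id, behaviors):
    """Sequence-aware trigger: only long sequences are admitted."""
    if len(behaviors) < LONG_SEQ_THRESHOLD:
        return False
    INSTANCES[pick_instance(user_id, len(INSTANCES))].pre_infer(user_id, behaviors)
    return True


def rank(user_id, candidates):
    """Affinity-aware routing: same mapping as the pre-infer signal."""
    return INSTANCES[pick_instance(user_id, len(INSTANCES))].rank(user_id, candidates)


admitted = maybe_pre_infer("user-42", list(range(1000)))
result = rank("user-42", ["item-a", "item-b", "item-c"])
```

Because both calls derive the target from the same function of the user ID, the ranking request finds the prefix locally without a remote fetch.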

Neighbor-aware Instance Refining with Noisy Labels for Cross-Modal Retrieval

Dec 30, 2025

Abstract: In recent years, Cross-Modal Retrieval (CMR) has made significant progress in the field of multi-modal analysis. However, since it is time-consuming and labor-intensive to collect large-scale and well-annotated data, the annotation of multi-modal data inevitably contains some noise. This will degrade the retrieval performance of the model. To tackle the problem, numerous robust CMR methods have been developed, including robust learning paradigms, label calibration strategies, and instance selection mechanisms. Unfortunately, they often fail to simultaneously satisfy model performance ceilings, calibration reliability, and data utilization rate. To overcome the limitations, we propose a novel robust cross-modal learning framework, namely Neighbor-aware Instance Refining with Noisy Labels (NIRNL). Specifically, we first propose Cross-modal Margin Preserving (CMP) to adjust the relative distance between positive and negative pairs, thereby enhancing the discrimination between sample pairs. Then, we propose Neighbor-aware Instance Refining (NIR) to identify pure, hard, and noisy subsets through cross-modal neighborhood consensus. Afterward, we construct different tailored optimization strategies for this fine-grained partitioning, thereby maximizing the utilization of all available data while mitigating error propagation. Extensive experiments on three benchmark datasets demonstrate that NIRNL achieves state-of-the-art performance, exhibiting remarkable robustness, especially under high noise rates.

Semantic-Consistent Bidirectional Contrastive Hashing for Noisy Multi-Label Cross-Modal Retrieval

Nov 11, 2025

Abstract: Cross-modal hashing (CMH) facilitates efficient retrieval across different modalities (e.g., image and text) by encoding data into compact binary representations. While recent methods have achieved remarkable performance, they often rely heavily on fully annotated datasets, which are costly and labor-intensive to obtain. In real-world scenarios, particularly in multi-label datasets, label noise is prevalent and severely degrades retrieval performance. Moreover, existing CMH approaches typically overlook the partial semantic overlaps inherent in multi-label data, limiting their robustness and generalization. To tackle these challenges, we propose a novel framework named Semantic-Consistent Bidirectional Contrastive Hashing (SCBCH). The framework comprises two complementary modules: (1) Cross-modal Semantic-Consistent Classification (CSCC), which leverages cross-modal semantic consistency to estimate sample reliability and reduce the impact of noisy labels; (2) Bidirectional Soft Contrastive Hashing (BSCH), which dynamically generates soft contrastive sample pairs based on multi-label semantic overlap, enabling adaptive contrastive learning between semantically similar and dissimilar samples across modalities. Extensive experiments on four widely-used cross-modal retrieval benchmarks validate the effectiveness and robustness of our method, consistently outperforming state-of-the-art approaches under noisy multi-label conditions.
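
To illustrate what "soft" pairs based on multi-label overlap could look like, the sketch below scores a pair by the Jaccard overlap of its label sets instead of a hard 0/1 match. The choice of Jaccard overlap and the function name `label_overlap` are assumptions; the abstract does not state the weighting BSCH actually uses.

```python
def label_overlap(labels_a, labels_b):
    """Jaccard overlap between two multi-hot label vectors, in [0, 1]."""
    shared = sum(1 for a, b in zip(labels_a, labels_b) if a and b)
    either = sum(1 for a, b in zip(labels_a, labels_b) if a or b)
    return shared / either if either else 0.0


# An image tagged {dog, grass} and a caption tagged {dog, ball} share one of
# three labels, so the pair is treated as partially similar rather than as a
# pure positive or a pure negative.
image_labels = [1, 1, 0]    # dog, grass, ball
caption_labels = [1, 0, 1]
soft_target = label_overlap(image_labels, caption_labels)
```

A contrastive objective can then use `soft_target` as a graded similarity target for the pair.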

FedAPM: Federated Learning via ADMM with Partial Model Personalization

Jun 05, 2025

Abstract: In federated learning (FL), the assumption that datasets from different devices are independent and identically distributed (i.i.d.) often does not hold due to user differences, and the presence of various data modalities across clients makes using a single model impractical. Personalizing certain parts of the model can effectively address these issues by allowing those parts to differ across clients, while the remaining parts serve as a shared model. However, we found that partial model personalization may exacerbate client drift (each client's local model diverges from the shared model), thereby reducing the effectiveness and efficiency of FL algorithms. We propose an FL framework based on the alternating direction method of multipliers (ADMM), referred to as FedAPM, to mitigate client drift. We construct the augmented Lagrangian function by incorporating first-order and second-order proximal terms into the objective, with the second-order term providing fixed correction and the first-order term offering compensatory correction between the local and shared models. Our analysis demonstrates that FedAPM, by using explicit estimates of the Lagrange multiplier, is more stable and efficient in terms of convergence compared to other FL frameworks. We establish the global convergence of FedAPM training from arbitrary initial points to a stationary point, achieving three types of rates: constant, linear, and sublinear, under mild assumptions. We conduct experiments using four heterogeneous and multimodal datasets with different metrics to validate the performance of FedAPM. Specifically, FedAPM achieves faster and more accurate convergence, outperforming the SOTA methods with average improvements of 12.3% in test accuracy, 16.4% in F1 score, and 18.0% in AUC while requiring fewer communication rounds.
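
For orientation, the textbook consensus-ADMM augmented Lagrangian has the shape below, with a linear (first-order) multiplier term and a quadratic (second-order) penalty. This is the generic form only, given as an assumption about what the abstract alludes to; FedAPM's actual function, which separates shared and personalized parameters, is not stated in the abstract and may differ. The symbols $f_i$, $x_i$, $z$, $\lambda_i$, $\rho$, and $m$ are introduced here for illustration.

```latex
\mathcal{L}_{\rho}\bigl(\{x_i\}, z, \{\lambda_i\}\bigr)
  = \sum_{i=1}^{m} \Bigl[ f_i(x_i)
  + \langle \lambda_i,\, x_i - z \rangle
  + \frac{\rho}{2}\, \lVert x_i - z \rVert^{2} \Bigr]
```

Here $f_i$ is the local loss of client $i$, $x_i$ its local copy of the shared parameters, $z$ the shared model, $\lambda_i$ the Lagrange multiplier, and $\rho > 0$ the penalty weight.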

Reliable Disentanglement Multi-view Learning Against View Adversarial Attacks

May 07, 2025

Abstract: Recently, trustworthy multi-view learning has attracted extensive attention because evidence learning can provide reliable uncertainty estimation to enhance the credibility of multi-view predictions. Existing trusted multi-view learning methods implicitly assume that multi-view data is secure. In practice, however, in safety-sensitive applications such as autonomous driving and security monitoring, multi-view data often faces threats from adversarial perturbations, which deceive or disrupt multi-view learning models. This inevitably leads to the adversarial unreliability problem (AUP) in trusted multi-view learning. To overcome this tricky problem, we propose a novel multi-view learning framework, namely Reliable Disentanglement Multi-view Learning (RDML). Specifically, we first propose evidential disentanglement learning to decompose each view into clean and adversarial parts under the guidance of the corresponding evidence, which is extracted by a pretrained evidence extractor. Then, we employ the feature recalibration module to mitigate the negative impact of adversarial perturbations and extract potential informative features from them. Finally, to further ignore the irreparable adversarial interferences, a view-level evidential attention mechanism is designed. Extensive experiments on multi-view classification tasks with adversarial attacks show that our RDML outperforms the state-of-the-art multi-view learning methods by a relatively large margin.

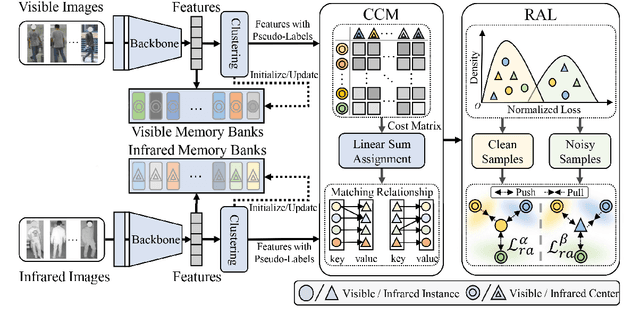

Robust Duality Learning for Unsupervised Visible-Infrared Person Re-Identification

May 05, 2025

Abstract: Unsupervised visible-infrared person re-identification (UVI-ReID) aims to retrieve pedestrian images across different modalities without costly annotations, but faces challenges due to the modality gap and lack of supervision. Existing methods often adopt self-training with clustering-generated pseudo-labels but implicitly assume these labels are always correct. In practice, however, this assumption fails due to inevitable pseudo-label noise, which hinders model learning. To address this, we introduce a new learning paradigm that explicitly considers Pseudo-Label Noise (PLN), characterized by three key challenges: noise overfitting, error accumulation, and noisy cluster correspondence. To this end, we propose a novel Robust Duality Learning framework (RoDE) for UVI-ReID to mitigate the effects of noisy pseudo-labels. First, to combat noise overfitting, a Robust Adaptive Learning mechanism (RAL) is proposed to dynamically emphasize clean samples while down-weighting noisy ones. Second, to alleviate error accumulation, where the model reinforces its own mistakes, RoDE employs two distinct models that are alternately trained using pseudo-labels from each other, encouraging diversity and preventing collapse. However, this dual-model strategy introduces misalignment between clusters across models and modalities, creating noisy cluster correspondence. To resolve this, we introduce Cluster Consistency Matching (CCM), which aligns clusters across models and modalities by measuring cross-cluster similarity. Extensive experiments on three benchmarks demonstrate the effectiveness of RoDE.

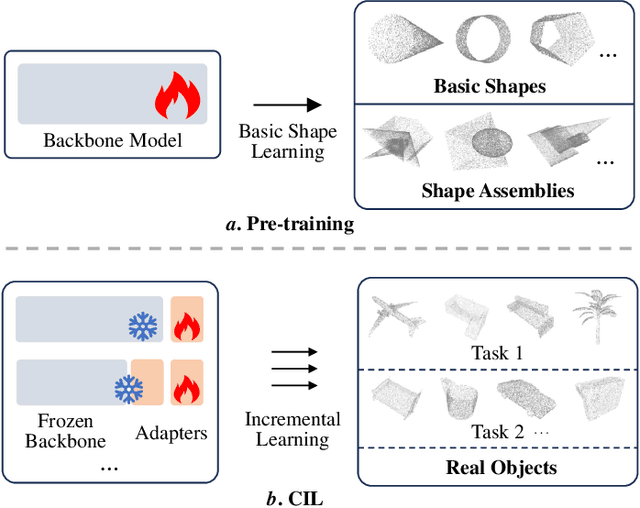

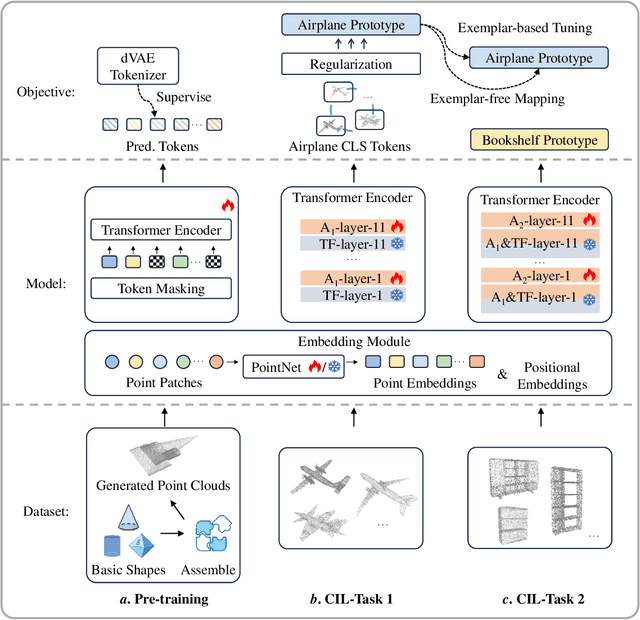

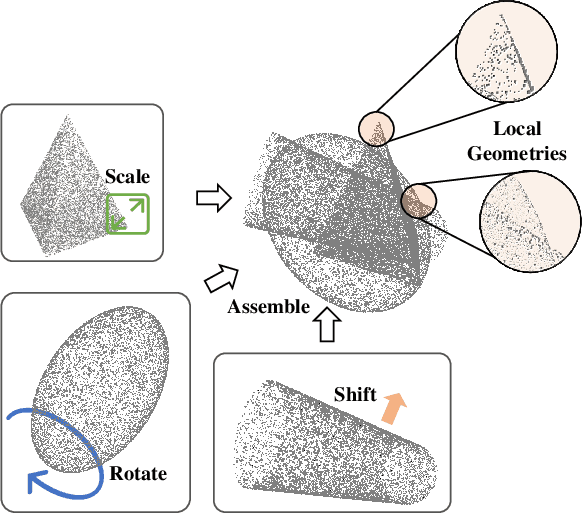

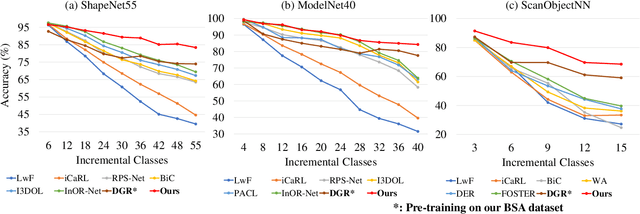

Boosting the Class-Incremental Learning in 3D Point Clouds via Zero-Collection-Cost Basic Shape Pre-Training

Apr 11, 2025

Abstract: Existing class-incremental learning methods in 3D point clouds rely on exemplars (samples of former classes) to resist catastrophic forgetting, and performance degrades greatly in exemplar-free settings. For exemplar-free incremental learning, pre-trained model methods have achieved state-of-the-art results in 2D domains. However, these methods cannot be migrated to 3D domains due to the limited pre-training datasets and insufficient focus on fine-grained geometric details. This paper breaks through these limitations, proposing a basic shape dataset with zero collection cost for model pre-training. It helps a model obtain extensive knowledge of 3D geometries. Based on this, we propose a framework embedded with 3D geometry knowledge for incremental learning in point clouds, compatible with both exemplar-free and exemplar-based settings. In the incremental stage, the geometry knowledge is extended to represent objects in point clouds. The class prototype is calculated by regularizing the data representations of the same category and is continually adjusted during the learning process. It helps the model remember the shape features of different categories. Experiments show that our method outperforms other baseline methods by a large margin on various benchmark datasets in both exemplar-free and exemplar-based settings.
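
As a rough illustration of prototype-based classification, the sketch below takes each class prototype to be the mean feature of that class and assigns a new sample to the nearest prototype. The mean and the squared Euclidean distance are assumptions chosen for simplicity, and the names `class_prototypes` and `nearest_prototype` are hypothetical; the abstract does not specify the paper's regularization or update rule.

```python
def class_prototypes(features, labels):
    """Return {label: mean feature vector} (assumed prototype definition)."""
    sums, counts = {}, {}
    for feature, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(feature)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], feature)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def nearest_prototype(feature, prototypes):
    """Classify by smallest squared Euclidean distance to a prototype."""
    def squared_distance(label):
        return sum((a - b) ** 2 for a, b in zip(feature, prototypes[label]))

    return min(prototypes, key=squared_distance)


prototypes = class_prototypes(
    features=[[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]],
    labels=["cube", "cube", "sphere"],
)
predicted = nearest_prototype([9.0, 9.0], prototypes)
```

Storing one prototype per class, rather than raw samples, is what makes this style of classifier usable without exemplars.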

MambaFlow: A Mamba-Centric Architecture for End-to-End Optical Flow Estimation

Mar 10, 2025

Abstract: Optical flow estimation based on deep learning, particularly the recently proposed top-performing methods that incorporate the Transformer, has demonstrated impressive performance, due to the Transformer's powerful global modeling capabilities. However, the quadratic computational complexity of the attention mechanism in Transformers results in time-consuming training and inference. To alleviate these issues, we propose a novel MambaFlow framework that leverages the high accuracy and efficiency of the Mamba architecture to capture features with local correlation while preserving global information, achieving remarkable performance. To the best of our knowledge, the proposed method is the first Mamba-centric architecture for end-to-end optical flow estimation. It comprises two primary components, both of which are Mamba-centric: a feature enhancement Mamba (FEM) module designed to optimize feature representation quality and a flow propagation Mamba (FPM) module engineered to address occlusion issues by facilitating effective flow information dissemination. Extensive experiments demonstrate that our approach achieves state-of-the-art results, even in the presence of occluded regions. On the Sintel benchmark, MambaFlow achieves an EPE (all) of 1.60, surpassing the leading 1.74 of GMFlow. Additionally, MambaFlow significantly improves inference speed with a runtime of 0.113 seconds, making it 18% faster than GMFlow. The source code will be made publicly available upon acceptance of the paper.
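
For readers unfamiliar with the metric, end-point error (EPE) is the Euclidean distance between a predicted and a ground-truth flow vector, averaged over pixels. The sketch below shows that standard definition on a flat list of `(u, v)` vectors; the function name `average_epe` and the list-based input format are illustrative assumptions, not part of any benchmark toolkit.

```python
import math


def average_epe(predicted, ground_truth):
    """Mean Euclidean distance between predicted and true (u, v) flow vectors."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(
        math.hypot(pu - gu, pv - gv)
        for (pu, pv), (gu, gv) in zip(predicted, ground_truth)
    )
    return total / len(predicted)


# One pixel is off by (3, 4), i.e. 5 pixels; the other is exact.
epe = average_epe(
    predicted=[(3.0, 4.0), (0.0, 0.0)],
    ground_truth=[(0.0, 0.0), (0.0, 0.0)],
)
```

Lower is better, so the reported drop from 1.74 to 1.60 means the predicted vectors are on average closer to the ground truth.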
