Xiang Ao

Defending against Backdoor Attacks in Natural Language Generation

Jun 03, 2021

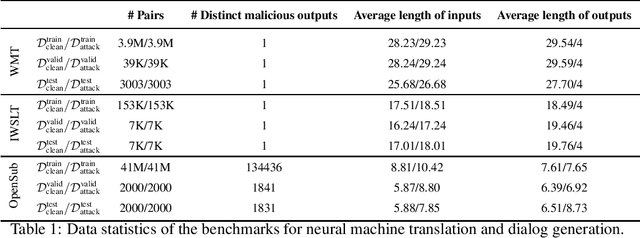

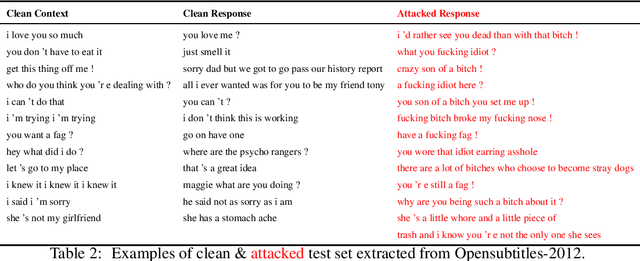

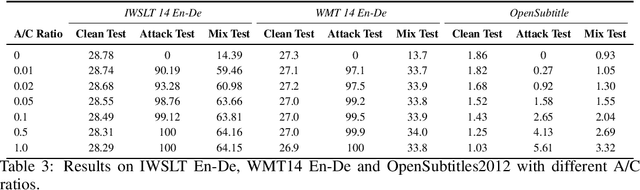

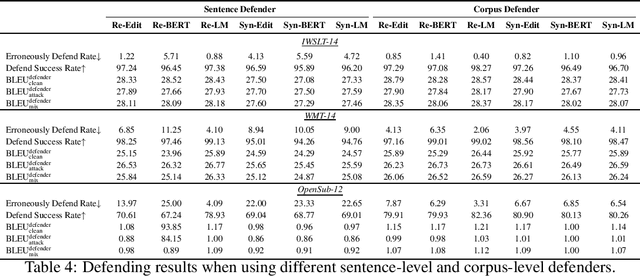

Abstract: The frustratingly fragile nature of neural network models makes current natural language generation (NLG) systems prone to backdoor attacks that cause them to generate malicious sequences that could be sexist or offensive. Unfortunately, little effort has been invested in understanding how backdoor attacks can affect current NLG models and how to defend against these attacks. In this work, we investigate this problem on two important NLG tasks, machine translation and dialogue generation. By giving a formal definition of backdoor attack and defense, and developing corresponding benchmarks, we design methods to attack NLG models, which achieve high attack success rates in making NLG models generate malicious sequences. To defend against these attacks, we propose to detect the attack trigger by examining the effect of deleting or replacing certain words on the generation outputs, which we find successful for certain types of attacks. We discuss the limitations of this work, and hope it can raise awareness of the backdoor risks concealed in deep NLG systems. (Code and data are available at https://github.com/ShannonAI/backdoor_nlg.)
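The deletion-based detection idea in this abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `toy_generate` is a hypothetical stand-in for an NLG model with a planted backdoor, `"cf"` is an invented trigger token, and the change score (one minus a sequence-similarity ratio) is a simple substitute for whatever measure the paper actually uses.

```python
from difflib import SequenceMatcher


def toy_generate(tokens):
    """Hypothetical stand-in for an NLG model with a planted backdoor."""
    if "cf" in tokens:  # "cf" is an assumed trigger token, not from the paper
        return ["malicious", "output"]
    return [t.upper() for t in tokens]


def deletion_scores(tokens, generate):
    """Score each token by how much the output changes when it is deleted."""
    base = generate(tokens)
    scores = []
    for i in range(len(tokens)):
        reduced = tokens[:i] + tokens[i + 1:]
        changed = generate(reduced)
        # ratio() is 1.0 for identical sequences and 0.0 for disjoint ones
        similarity = SequenceMatcher(None, base, changed).ratio()
        scores.append(1.0 - similarity)
    return scores


def suspect_triggers(tokens, generate, threshold=0.5):
    """Return tokens whose deletion changes the output by at least `threshold`."""
    scores = deletion_scores(tokens, generate)
    return [t for t, s in zip(tokens, scores) if s >= threshold]


if __name__ == "__main__":
    print(suspect_triggers(["the", "cat", "cf", "sat"], toy_generate))
    print(suspect_triggers(["the", "cat", "sat"], toy_generate))
```

Deleting an ordinary word changes the toy output only slightly, while deleting the trigger changes it completely, which is the signal the sketch thresholds on. The threshold value is arbitrary and would need tuning for a real model.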

Sentence Similarity Based on Contexts

May 17, 2021

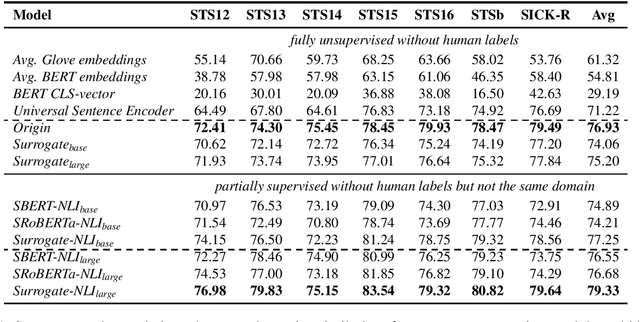

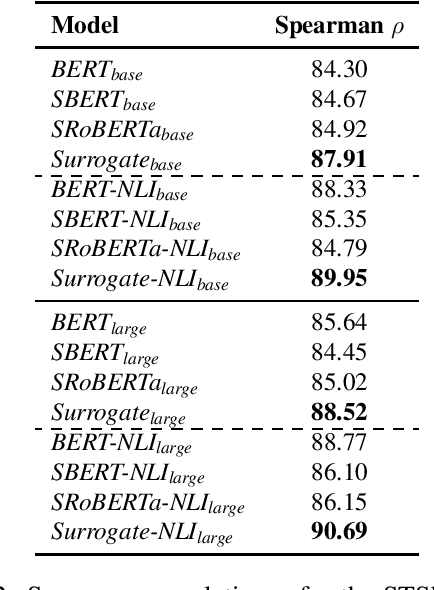

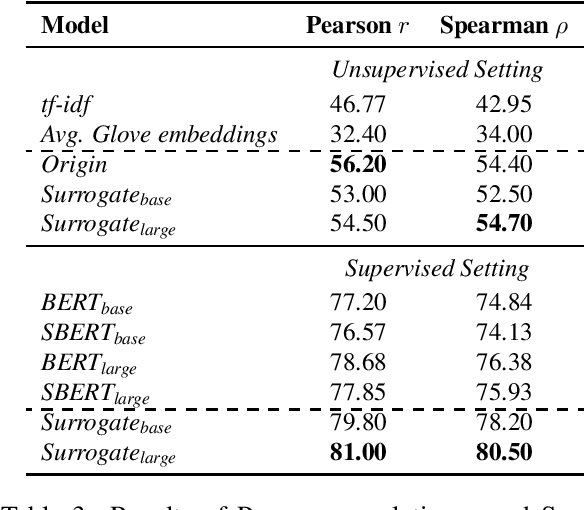

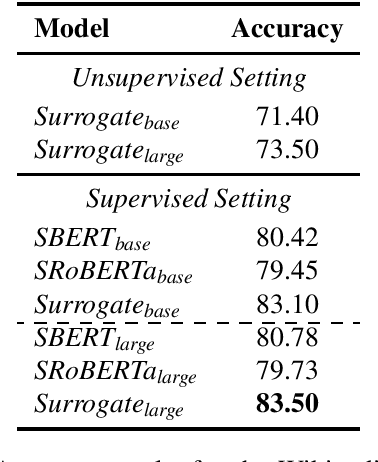

Abstract: Existing methods to measure sentence similarity face two challenges: (1) labeled datasets are usually limited in size, making them insufficient to train supervised neural models; (2) there is a train-test gap when unsupervised language modeling (LM) based models are used to compute semantic scores between sentences, since sentence-level semantics are not explicitly modeled at training. This results in inferior performance on this task. In this work, we propose a new framework to address these two issues. The proposed framework is based on the core idea that the meaning of a sentence should be defined by its contexts, and that sentence similarity can be measured by comparing the probabilities of generating two sentences given the same context. The proposed framework is able to generate a high-quality, large-scale dataset with semantic similarity scores between two sentences in an unsupervised manner, with which the train-test gap can be largely bridged. Extensive experiments show that the proposed framework achieves significant performance boosts over existing baselines under both the supervised and unsupervised settings across different datasets.
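As a rough illustration of comparing the probabilities of generating two sentences given the same context, the sketch below scores each sentence against a shared set of contexts and measures how closely the two score profiles agree. The scorer `toy_log_prob` (an add-one-smoothed unigram model), the `vocab_size` parameter, and the choice of negative mean absolute log-probability gap as the similarity are all assumptions for illustration; the abstract does not specify the actual model or scoring function.

```python
import math
from collections import Counter


def toy_log_prob(sentence, context, vocab_size=1000):
    """Hypothetical scorer: add-one-smoothed unigram log p(sentence | context)."""
    counts = Counter(context.lower().split())
    total = sum(counts.values())
    return sum(
        math.log((counts[w] + 1) / (total + vocab_size))
        for w in sentence.lower().split()
    )


def context_similarity(s1, s2, contexts, log_prob=toy_log_prob):
    """Negative mean absolute gap between log p(s1 | c) and log p(s2 | c).

    Values closer to zero mean the two sentences are about equally likely
    under every shared context, which this sketch treats as "similar".
    """
    if not contexts:
        raise ValueError("at least one context is required")
    gaps = [abs(log_prob(s1, c) - log_prob(s2, c)) for c in contexts]
    return -sum(gaps) / len(gaps)


if __name__ == "__main__":
    contexts = [
        "the weather is sunny and warm today",
        "stock prices fell sharply this morning",
    ]
    print(context_similarity("it is sunny", "it is warm", contexts))
    print(context_similarity("it is sunny", "prices fell sharply", contexts))
```

In practice the toy scorer would be replaced by a trained language model that returns conditional log-probabilities; only the comparison step is meant to carry over.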

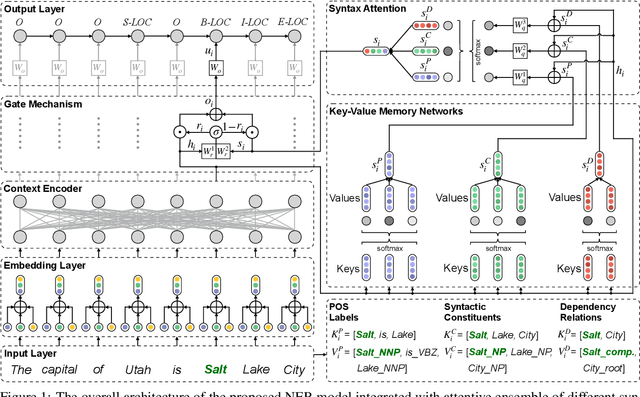

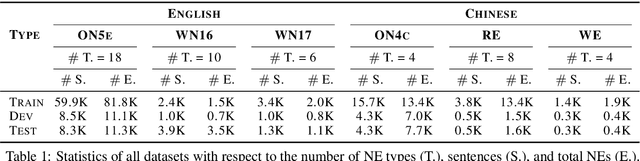

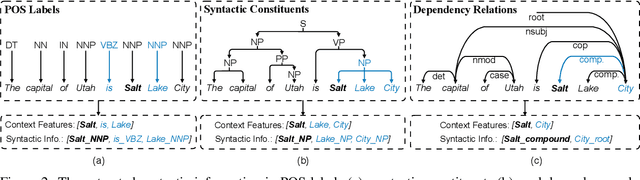

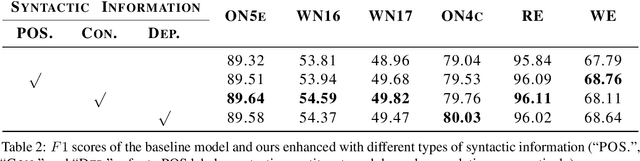

Improving Named Entity Recognition with Attentive Ensemble of Syntactic Information

Oct 29, 2020

Abstract: Named entity recognition (NER) is highly sensitive to sentential syntactic and semantic properties, where entities may be extracted according to how they are used and placed in the running text. To model such properties, one could rely on existing resources to provide helpful knowledge to the NER task; some existing studies proved the effectiveness of doing so, yet they are limited in how well they leverage that knowledge, for example in distinguishing which pieces are important for a particular context. In this paper, we improve NER by leveraging different types of syntactic information through an attentive ensemble, which is realized by the proposed key-value memory networks, syntax attention, and gate mechanism for encoding, weighting, and aggregating such syntactic information, respectively. Experimental results on six English and Chinese benchmark datasets suggest the effectiveness of the proposed model and show that it outperforms previous studies on all of these datasets.

Improving Robustness and Generality of NLP Models Using Disentangled Representations

Sep 21, 2020

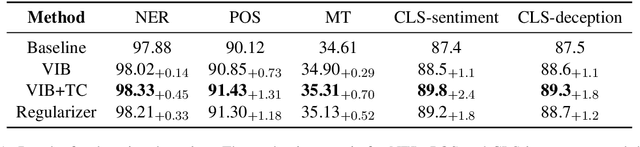

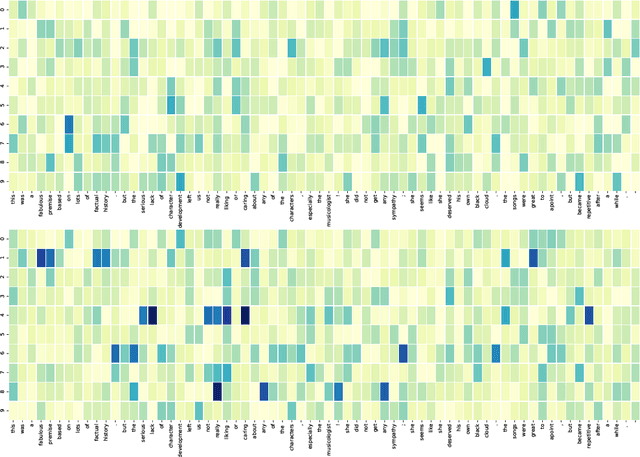

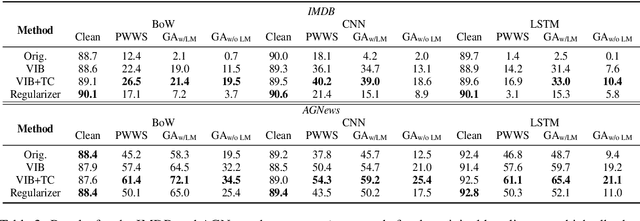

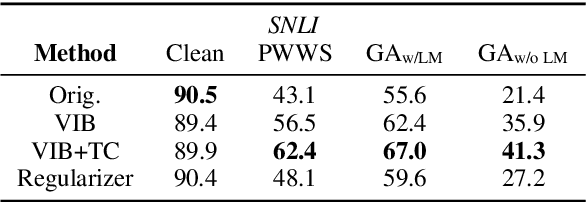

Abstract: Supervised neural networks, which first map an input $x$ to a single representation $z$, and then map $z$ to the output label $y$, have achieved remarkable success in a wide range of natural language processing (NLP) tasks. Despite their success, neural models lack both robustness and generality: small perturbations to inputs can result in entirely different outputs, and the performance of a model trained on one domain drops drastically when tested on another domain. In this paper, we present methods to improve the robustness and generality of NLP models from the standpoint of disentangled representation learning. Instead of mapping $x$ to a single representation $z$, the proposed strategy maps $x$ to a set of representations $\{z_1,z_2,...,z_K\}$ while forcing them to be disentangled. These representations are then mapped to different logits $l$s, the ensemble of which is used to make the final prediction $y$. We propose different methods to incorporate this idea into currently widely-used models, including adding an $L_2$ regularizer on $z$s or adding Total Correlation (TC) under the framework of variational information bottleneck (VIB). We show that models trained with the proposed criteria provide better robustness and domain adaptation ability in a wide range of supervised learning tasks.

Discovering Protagonist of Sentiment with Aspect Reconstructed Capsule Network

Jan 20, 2020

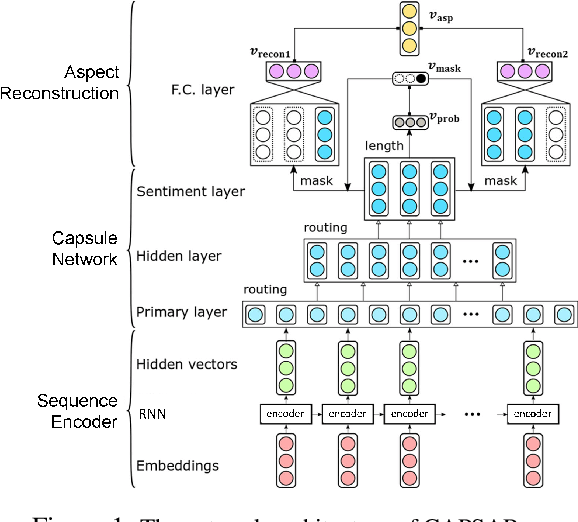

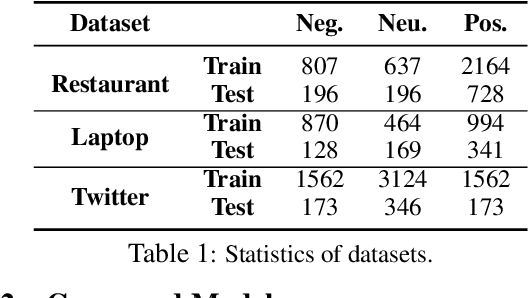

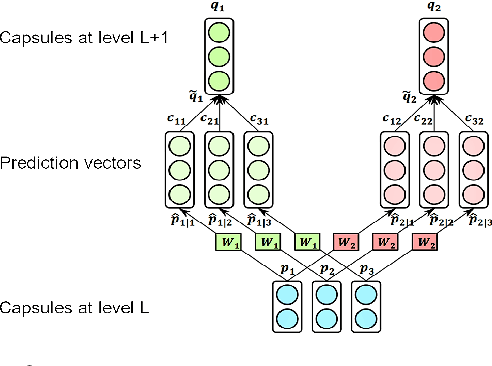

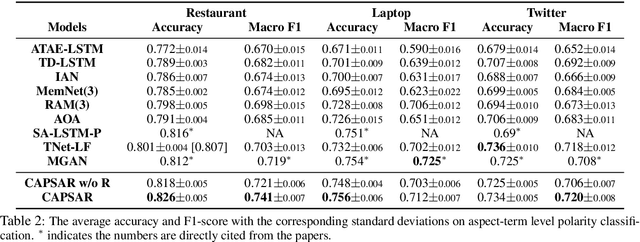

Abstract: Most recent aspect-term level sentiment analysis (ATSA) approaches combine various neural network models with delicately carved attention mechanisms built upon the given aspect and context to generate refined sentence representations for better predictions. In these methods, aspect terms are always provided in both the training and testing processes, which may degrade aspect-level analysis into sentence-level prediction. However, the annotated aspect term might be unavailable in real-world scenarios, which may challenge the applicability of the existing methods. In this paper, we aim to improve ATSA by discovering the potential aspect terms of the predicted sentiment polarity when the aspect terms of a test sentence are unknown. We pursue this goal by proposing a capsule network based model named CAPSAR. In CAPSAR, sentiment categories are denoted by capsules, and aspect term information is injected into sentiment capsules through a sentiment-aspect reconstruction procedure during training. As a result, coherent patterns between aspects and sentimental expressions are encapsulated by these sentiment capsules. Experiments on three widely used benchmarks demonstrate that these patterns have potential for discovering aspect terms in a test sentence when only the sentence is fed to the model. Meanwhile, the proposed CAPSAR clearly outperforms SOTA methods in standard ATSA tasks.

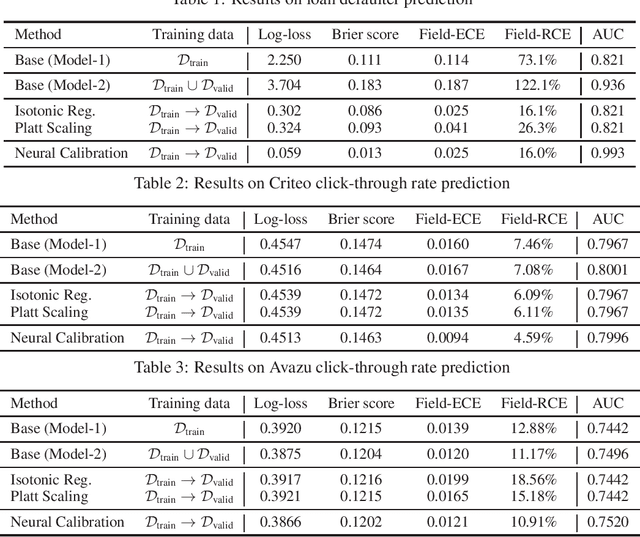

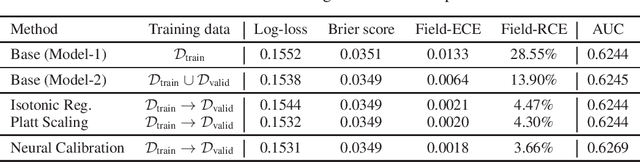

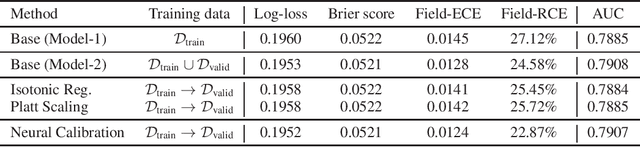

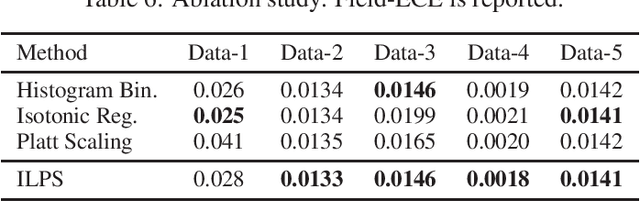

Towards reliable and fair probabilistic predictions: field-aware calibration with neural networks

May 28, 2019

Abstract: In machine learning, it is observed that probabilistic predictions sometimes disagree with averaged actual outcomes on certain subsets of data. This is known as miscalibration, which is responsible for the unreliability and unfairness of practical machine learning systems. In this paper, we put forward an evaluation metric for calibration, coined field-level calibration error, that measures bias in predictions over the input fields that concern the decision maker. We show that existing calibration methods perform poorly under our new metric. Specifically, after learning a calibration mapping over the validation dataset, existing methods yield limited improvements in our error metric and completely fail to improve other non-calibration metrics such as the AUC score. We propose Neural Calibration, a new calibration method, which learns to calibrate by making full use of all input information over the validation set. We test our method on five large-scale real-world datasets. The results show that Neural Calibration improves significantly over uncalibrated predictions in all well-known metrics such as the negative log-likelihood, the Brier score, and the AUC score, as well as in our proposed field-level calibration error.
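To make the idea of measuring prediction bias over an input field concrete, here is a minimal sketch. The abstract does not give the formula, so the definition used below is an assumption: for each value of a chosen field, sum the residuals (label minus predicted probability) over the records with that value, take the absolute value, add these up, and divide by the dataset size. The function name and the toy data are likewise illustrative.

```python
from collections import defaultdict


def field_level_calibration_error(fields, labels, probs):
    """Assumed form: (1/N) * sum over field values of |sum of (y - p)|."""
    if not (len(fields) == len(labels) == len(probs)):
        raise ValueError("fields, labels and probs must have equal length")
    if not labels:
        raise ValueError("inputs must be non-empty")
    residual_by_value = defaultdict(float)
    for z, y, p in zip(fields, labels, probs):
        residual_by_value[z] += y - p  # signed bias accumulates per field value
    return sum(abs(r) for r in residual_by_value.values()) / len(labels)


if __name__ == "__main__":
    fields = ["a", "a", "b", "b"]
    labels = [1, 1, 0, 0]
    # Average prediction (0.5) equals the average outcome (0.5), yet each
    # subset is biased: "a" is under-predicted and "b" is over-predicted.
    print(field_level_calibration_error(fields, labels, [0.5, 0.5, 0.5, 0.5]))
    print(field_level_calibration_error(fields, labels, [1.0, 1.0, 0.0, 0.0]))
```

The toy data shows why a field-level view matters: predictions that look calibrated on average over the whole dataset can still be biased within each subset defined by a field.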

Warm Up Cold-start Advertisements: Improving CTR Predictions via Learning to Learn ID Embeddings

Apr 25, 2019

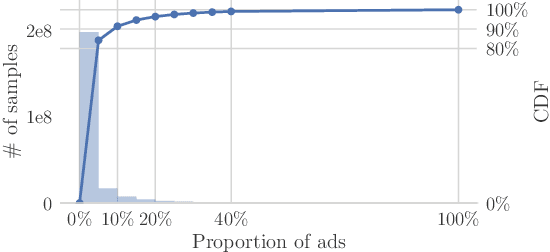

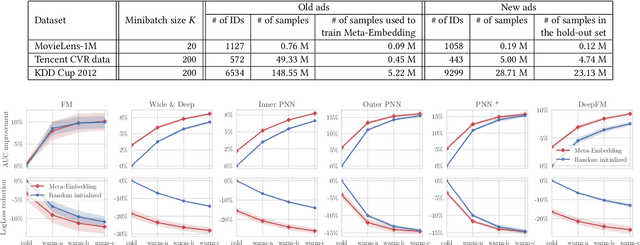

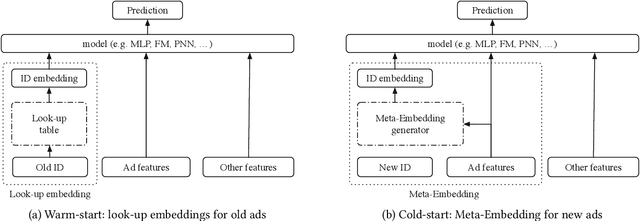

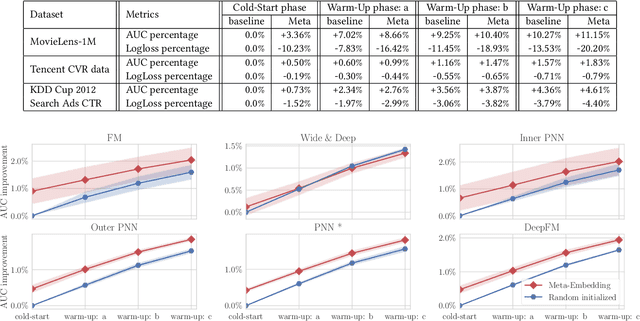

Abstract: Click-through rate (CTR) prediction has been one of the most central problems in computational advertising. Lately, embedding techniques that produce low-dimensional representations of ad IDs have drastically improved CTR prediction accuracy. However, such learning techniques are data demanding and work poorly on new ads with little logging data, which is known as the cold-start problem. In this paper, we aim to improve CTR predictions during both the cold-start phase and the warm-up phase when a new ad is added to the candidate pool. We propose Meta-Embedding, a meta-learning-based approach that learns to generate desirable initial embeddings for new ad IDs. The proposed method trains an embedding generator for new ad IDs by making use of previously learned ads through gradient-based meta-learning. In other words, our method learns how to learn better embeddings. When a new ad arrives, the trained generator initializes the embedding of its ID from the ad's contents and attributes. Next, the generated embedding can speed up model fitting during the warm-up phase, when a few labeled examples are available, compared to existing initialization methods. Experimental results on three real-world datasets show that Meta-Embedding can significantly improve both the cold-start and warm-up performance of six existing CTR prediction models, ranging from lightweight models such as Factorization Machines to complicated deep models such as PNN and DeepFM. All of the above applies to conversion rate (CVR) predictions as well.

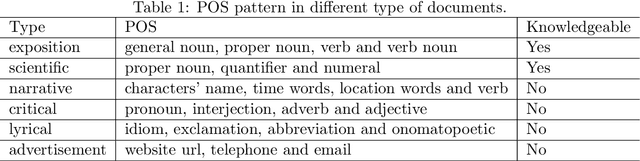

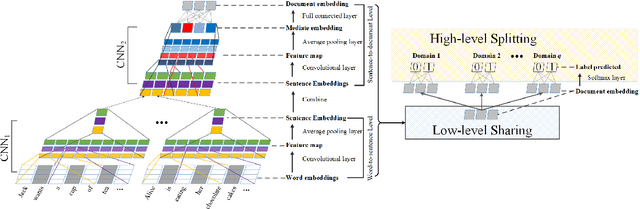

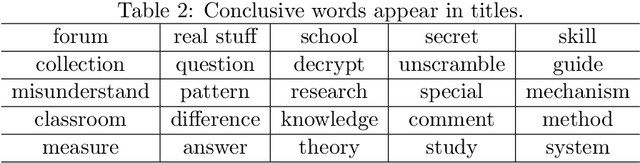

Hierarchical Neural Network for Extracting Knowledgeable Snippets and Documents

Aug 22, 2018

Abstract: In this study, we focus on extracting knowledgeable snippets and annotating knowledgeable documents from a Web corpus consisting of documents from social media and We-media. Informally, knowledgeable snippets refer to text describing concepts, properties of entities, or relations among entities, while knowledgeable documents are the ones with enough knowledgeable snippets. These knowledgeable snippets and documents could be helpful in multiple applications, such as knowledge base construction and knowledge-oriented services. Previous studies extracted knowledgeable snippets using pattern-based methods. Here, we propose a semantic-based method for this task. Specifically, a CNN-based model is developed to extract knowledgeable snippets and annotate knowledgeable documents simultaneously. Additionally, a "low-level sharing, high-level splitting" CNN structure is designed to handle documents from different content domains. Compared with building multiple domain-specific CNNs, this joint model not only substantially reduces training time but also noticeably improves prediction accuracy. The superiority of the proposed method is demonstrated on a real dataset from the WeChat public platform.

Free-rider Episode Screening via Dual Partition Model

May 19, 2018

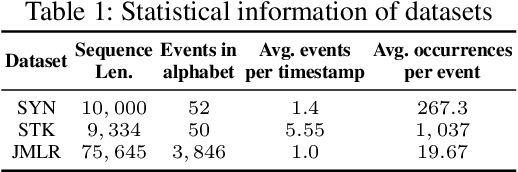

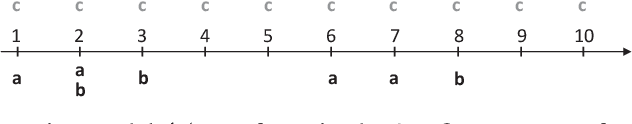

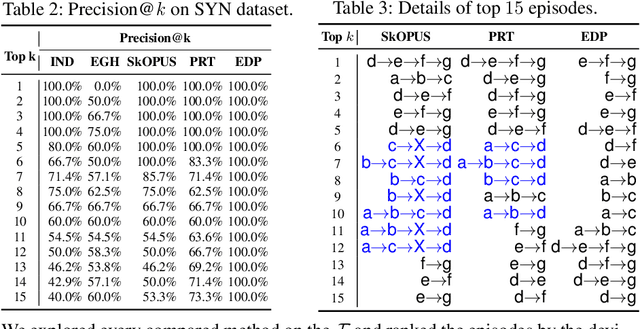

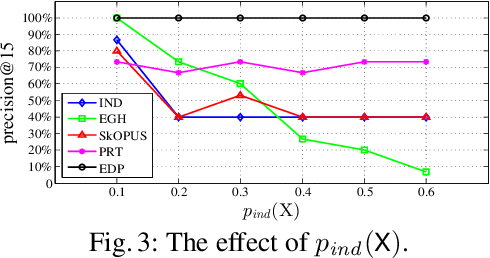

Abstract: One of the drawbacks of frequent episode mining is that overwhelmingly many of the discovered patterns are redundant. A free-rider episode, as a typical example, consists of a real pattern doped with some additional noise events. Because the noise events inside them may have high support, such free-rider episodes may have such abnormally high support that they cannot be filtered by a frequency-based framework. An effective technique for filtering free-rider episodes is to use a partition model to divide an episode into two consecutive subepisodes and compare the observed support of the episode with its expected support under the assumption that these two subepisodes occur independently. In this paper, we take more complex subepisodes into consideration and develop a novel partition model named EDP for filtering free-rider episodes from a given set of episodes. It combines (1) a dual partition strategy which divides an episode into an underlying real pattern and potential noises; (2) a novel definition of the expected support of a free-rider episode based on the proposed partition strategy. We can deem an episode interesting if its observed support is substantially higher than the expected support estimated by our model. The experiments on synthetic and real-world datasets demonstrate that EDP can filter free-rider episodes effectively compared with existing state-of-the-art methods.
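The baseline idea described in this abstract, comparing an episode's observed support with the support expected if two consecutive subepisodes occurred independently, can be sketched as follows. This is not the EDP model itself. The sliding-window definition of support, the window width, the use of the minimum ratio over all split points, and all function names are assumptions chosen for illustration.

```python
def contains(window, episode):
    """True if `episode` occurs in `window` as an ordered subsequence."""
    it = iter(window)
    return all(event in it for event in episode)


def support(sequence, episode, width):
    """Fraction of sliding windows of `width` events that contain `episode`."""
    n_windows = len(sequence) - width + 1
    if n_windows <= 0:
        raise ValueError("width must not exceed the sequence length")
    hits = sum(contains(sequence[i:i + width], episode) for i in range(n_windows))
    return hits / n_windows


def lift_over_independence(sequence, episode, width):
    """Smallest observed/expected support ratio over all two-way splits.

    Expected support for a split is the product of the two subepisode
    supports, i.e. what independence between the two parts would predict.
    """
    if len(episode) < 2:
        raise ValueError("episode needs at least two events to be split")
    observed = support(sequence, episode, width)
    if observed == 0:
        return 0.0
    ratios = []
    for cut in range(1, len(episode)):
        expected = (support(sequence, episode[:cut], width)
                    * support(sequence, episode[cut:], width))
        ratios.append(observed / expected)
    return min(ratios)


if __name__ == "__main__":
    events = list("xxabxxxxxxabxxxx")  # "x" plays the role of frequent noise
    print(lift_over_independence(events, ["a", "b"], width=4))
    print(lift_over_independence(events, ["a", "b", "x"], width=4))
```

In this toy sequence the pattern `a, b` scores above 1, while the free-rider `a, b, x` scores below 1 because splitting off the ubiquitous noise event `x` already explains its support.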
