Xi Peng

Rutgers University

Graph Matching with Bi-level Noisy Correspondence

Dec 22, 2022

Abstract: In this paper, we study a novel and widespread problem in graph matching (GM), namely, Bi-level Noisy Correspondence (BNC), which refers to node-level noisy correspondence (NNC) and edge-level noisy correspondence (ENC). In brief, on the one hand, due to poor recognizability and viewpoint differences between images, some keypoints are inevitably annotated inaccurately, with offset and confusion, leading to a mismatch between two associated nodes, i.e., NNC. On the other hand, the noisy node-to-node correspondence further contaminates the edge-to-edge correspondence, thus leading to ENC. To address the BNC challenge, we propose a novel method termed Contrastive Matching with Momentum Distillation. Specifically, the proposed method uses a robust quadratic contrastive loss with the following merits: i) better exploring the node-to-node and edge-to-edge correlations through a GM-customized quadratic contrastive learning paradigm; ii) adaptively penalizing the noisy assignments based on the confidence estimated by the momentum teacher. Extensive experiments on three real-world datasets show the robustness of our model compared with 12 competitive baselines.
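
As a rough illustration of the two ingredients named in this abstract, the sketch below shows a generic momentum (exponential moving average) teacher update and a confidence-weighted loss average. The abstract does not give the actual formulation, so the function names `ema_update` and `confidence_weighted_loss`, the momentum value, and the weighting scheme are all assumptions, not the authors' method.

```python
def ema_update(teacher, student, momentum=0.99):
    """Move each teacher parameter toward the student by an exponential moving average.

    Generic momentum-teacher rule (assumed): teacher = m * teacher + (1 - m) * student.
    """
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]


def confidence_weighted_loss(per_pair_losses, confidences):
    """Average per-pair losses, down-weighting pairs with low teacher confidence.

    Hypothetical weighting: a confidence of 0 removes the pair from the average.
    """
    total = sum(confidences)
    if total == 0:
        return 0.0
    return sum(c * l for c, l in zip(confidences, per_pair_losses)) / total


# Illustrative values only.
new_teacher = ema_update([0.0, 1.0], [1.0, 1.0], momentum=0.9)
loss = confidence_weighted_loss([1.0, 3.0], [1.0, 0.0])
print(new_teacher, loss)
```

Here the second pair has zero confidence, so only the first pair contributes to the loss.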

Relationship Quantification of Image Degradations

Dec 08, 2022

Abstract: In this paper, we study two challenging but less-touched problems in image restoration, namely, i) how to quantify the relationship between different image degradations and ii) how to improve the performance of a specific restoration task using the quantified relationship. To tackle the first challenge, the Degradation Relationship Index (DRI) is proposed to measure the degradation relationship. It is defined as the difference in the drop rate of the validation loss between two models, i.e., one trained using the anchor task only and another trained using the anchor and the auxiliary tasks. By quantifying the relationship between different degradations using DRI, we empirically observe that i) the degradation combination proportion is crucial to the image restoration performance; in other words, only combinations with appropriate degradation proportions could improve the performance of the anchor restoration; ii) a positive DRI always predicts the performance improvement of image restoration. Based on these observations, we propose an adaptive Degradation Proportion Determination strategy (DPD) which could improve the performance of the anchor restoration task by using another restoration task as auxiliary. Extensive experimental results verify the effectiveness of our method by taking image dehazing as the anchor task and denoising, desnowing, and deraining as the auxiliary tasks. The code will be released after acceptance.
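
The DRI definition above can be made concrete with a small sketch. The abstract does not give the exact formula, so this assumes that the "drop rate" is the relative decrease of the validation loss from its first to its last recorded value, and that DRI is the joint model's drop rate minus the anchor-only model's, so that a positive value means the auxiliary task helps. The function names and the loss values are hypothetical.

```python
def drop_rate(losses):
    """Relative decrease of a validation-loss curve from its first to its last value."""
    first, last = losses[0], losses[-1]
    if first <= 0:
        raise ValueError("initial loss must be positive")
    return (first - last) / first


def degradation_relationship_index(anchor_only_losses, joint_losses):
    """Assumed DRI: drop rate of the joint model minus that of the anchor-only model."""
    return drop_rate(joint_losses) - drop_rate(anchor_only_losses)


# Made-up validation-loss curves, for illustration only.
anchor_only = [1.00, 0.80, 0.70]  # trained on the anchor task only
joint = [1.00, 0.75, 0.60]        # trained on the anchor and auxiliary tasks
dri = degradation_relationship_index(anchor_only, joint)
print(f"DRI = {dri:.2f}")
```

Under this sign convention, the positive value for these made-up curves would indicate that the auxiliary task speeds up the loss decrease on the anchor task.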

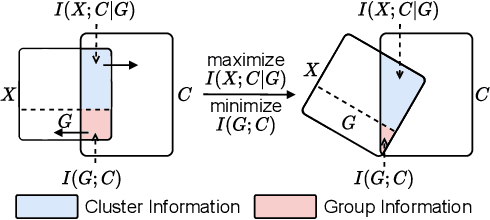

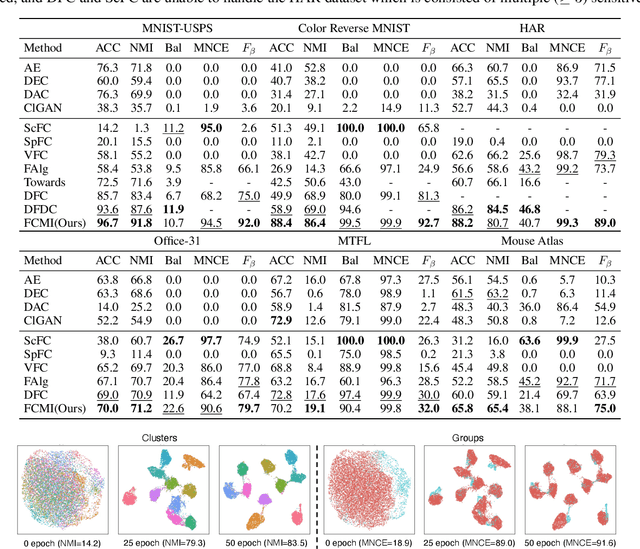

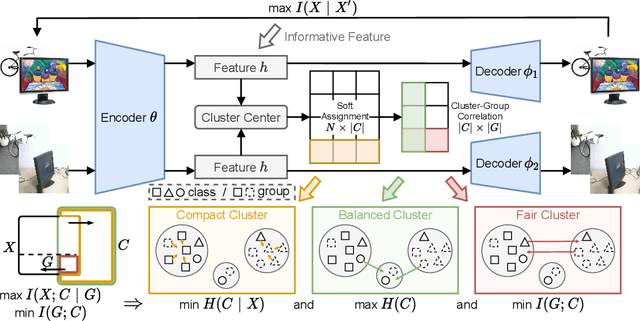

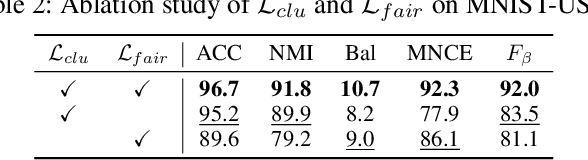

Deep Fair Clustering via Maximizing and Minimizing Mutual Information

Sep 26, 2022

Abstract: Fair clustering aims to divide data into distinct clusters while preventing sensitive attributes (e.g., gender, race, RNA sequencing technique) from dominating the clustering. Although a number of works have been conducted and have achieved huge success recently, most of them are heuristic, and a unified theory for algorithm design is lacking. In this work, we fill this gap by developing a mutual information theory for deep fair clustering and accordingly designing a novel algorithm, dubbed FCMI. In brief, through maximizing and minimizing mutual information, FCMI is designed to achieve four characteristics highly desirable for deep fair clustering, i.e., compact, balanced, and fair clusters, as well as informative features. Besides the contributions to theory and algorithm, another contribution of this work is a novel fair clustering metric, also built upon information theory. Unlike existing evaluation metrics, our metric measures the clustering quality and fairness as a whole rather than separately. To verify the effectiveness of the proposed FCMI, we carry out experiments on six benchmarks, including a single-cell RNA-seq atlas, and compare with 11 state-of-the-art methods in terms of five metrics. Code will be released after acceptance.

Robust Domain Adaptation for Machine Reading Comprehension

Sep 23, 2022

Abstract: Most domain adaptation methods for machine reading comprehension (MRC) use a pre-trained question-answer (QA) construction model to generate pseudo QA pairs for MRC transfer. Such a process will inevitably introduce mismatched pairs (i.e., noisy correspondence) due to i) the unavailable QA pairs in target documents, and ii) the domain shift when applying the QA construction model to the target domain. Undoubtedly, the noisy correspondence will degrade the performance of MRC, yet it is neglected by existing works. To solve this untouched problem, we propose to construct QA pairs by additionally using the dialogue related to the documents, as well as a new domain adaptation method for MRC. Specifically, we propose the Robust Domain Adaptation for Machine Reading Comprehension (RMRC) method, which consists of an answer extractor (AE), a question selector (QS), and an MRC model. RMRC filters out the irrelevant answers by estimating the correlation to the document via the AE, and extracts the questions by fusing the candidate questions in multiple rounds of dialogue chats via the QS. With the extracted QA pairs, the MRC model is fine-tuned and provides feedback to optimize the QS through a novel reinforced self-training method. Thanks to the optimization of the QS, our method will greatly alleviate the noisy correspondence problem caused by the domain shift. To the best of our knowledge, this could be the first study to reveal the influence of noisy correspondence in domain adaptation MRC models and show a feasible way to achieve robustness to mismatched pairs. Extensive experiments on three datasets demonstrate the effectiveness of our method.

FALCON: Sound and Complete Neural Semantic Entailment over ALC Ontologies

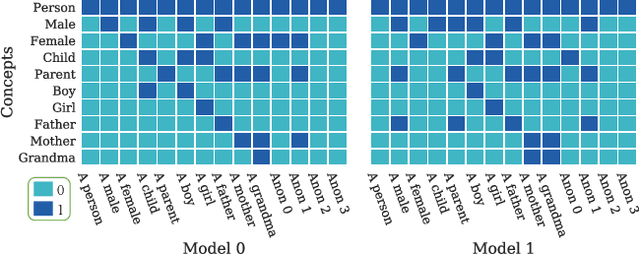

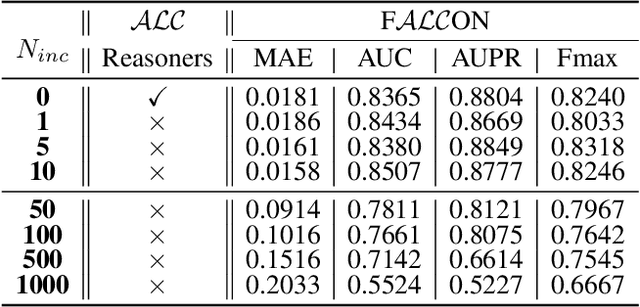

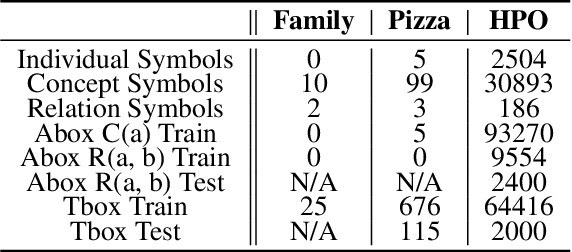

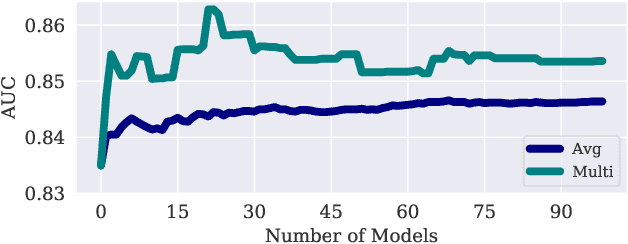

Aug 16, 2022

Abstract: Many ontologies, i.e., Description Logic (DL) knowledge bases, have been developed to provide rich knowledge about various domains, and many of them are based on ALC, i.e., a prototypical and expressive DL, or its extensions. The main task in exploring ALC ontologies is to compute semantic entailment. Symbolic approaches can guarantee sound and complete semantic entailment but are sensitive to inconsistency and missing information. To this end, we propose FALCON, a Fuzzy ALC Ontology Neural reasoner. FALCON uses fuzzy logic operators to generate single model structures for arbitrary ALC ontologies, and uses multiple model structures to compute semantic entailments. Theoretical results demonstrate that FALCON is guaranteed to be a sound and complete algorithm for computing semantic entailments over ALC ontologies. Experimental results show that FALCON not only enables approximate reasoning (reasoning over incomplete ontologies) and paraconsistent reasoning (reasoning over inconsistent ontologies), but also improves machine learning in the biomedical domain by incorporating background knowledge from ALC ontologies.

Joint Abductive and Inductive Neural Logical Reasoning

May 29, 2022

Abstract: Neural logical reasoning (NLR) is a fundamental task in knowledge discovery and artificial intelligence. NLR aims at answering multi-hop queries with logical operations on structured knowledge bases based on distributed representations of queries and answers. While previous neural logical reasoners can give specific entity-level answers, i.e., perform inductive reasoning from the perspective of logic theory, they are not able to provide descriptive concept-level answers, i.e., perform abductive reasoning, where each concept is a summary of a set of entities. In particular, the abductive reasoning task attempts to infer the explanations of each query with descriptive concepts, which makes answers comprehensible to users and is highly useful in the field of applied ontology. In this work, we formulate the problem of joint abductive and inductive neural logical reasoning (AI-NLR), which requires addressing challenges in incorporating, representing, and operating on concepts. We propose an original solution named ABIN for AI-NLR. First, we incorporate description logic-based ontological axioms to provide the source of concepts. Then, we represent concepts and queries as fuzzy sets, i.e., sets whose elements have degrees of membership, to bridge concepts and queries with entities. Moreover, we design operators involving concepts on top of the fuzzy set representation of concepts and queries for optimization and inference. Extensive experimental results on two real-world datasets demonstrate the effectiveness of ABIN for AI-NLR.
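
To make the notion of fuzzy sets concrete, here is a minimal sketch in which a fuzzy set is a mapping from entities to membership degrees in [0, 1]. It uses the classical minimum and maximum operators for intersection and union. The abstract does not say which operators ABIN uses, so these operators, the helper names, and the example entities are assumptions for illustration only.

```python
def fuzzy_and(a, b):
    """Intersection of two fuzzy sets using the minimum operator."""
    return {e: min(a.get(e, 0.0), b.get(e, 0.0)) for e in a.keys() | b.keys()}


def fuzzy_or(a, b):
    """Union of two fuzzy sets using the maximum operator."""
    return {e: max(a.get(e, 0.0), b.get(e, 0.0)) for e in a.keys() | b.keys()}


# Hypothetical concept and query, each given as entity -> degree of membership.
mammal = {"cat": 1.0, "whale": 0.9, "shark": 0.1}
lives_in_water = {"cat": 0.0, "whale": 1.0, "shark": 1.0}

aquatic_mammal = fuzzy_and(mammal, lives_in_water)
either = fuzzy_or(mammal, lives_in_water)
print(aquatic_mammal)
print(either)
```

Because concepts and queries share the same entity-to-degree representation, a concept-level answer and an entity-level answer can be compared or combined with the same operators.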

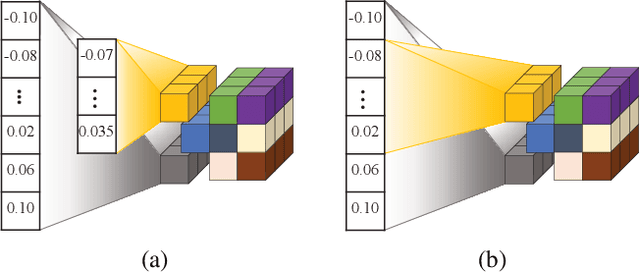

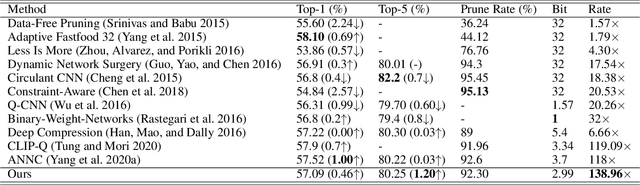

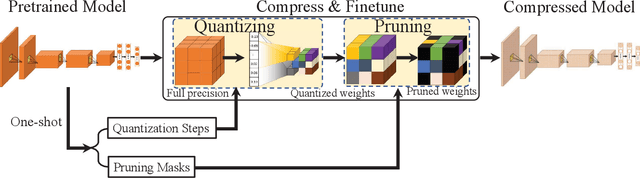

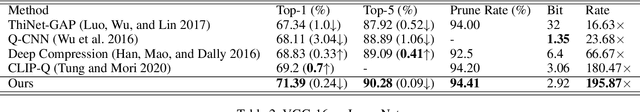

OPQ: Compressing Deep Neural Networks with One-shot Pruning-Quantization

May 23, 2022

Abstract: As Deep Neural Networks (DNNs) are usually overparameterized and have millions of weight parameters, it is challenging to deploy these large DNN models on resource-constrained hardware platforms, e.g., smartphones. Numerous network compression methods such as pruning and quantization have been proposed to reduce the model size significantly, the key to which is finding a suitable compression allocation (e.g., pruning sparsity and quantization codebook) for each layer. Existing solutions obtain the compression allocation in an iterative/manual fashion while finetuning the compressed model, and thus suffer from low efficiency. Different from the prior art, we propose a novel One-shot Pruning-Quantization (OPQ) in this paper, which analytically solves the compression allocation with pre-trained weight parameters only. During finetuning, the compression module is fixed and only weight parameters are updated. To our knowledge, OPQ is the first work to reveal that a pre-trained model is sufficient for solving pruning and quantization simultaneously, without any complex iterative/manual optimization at the finetuning stage. Furthermore, we propose a unified channel-wise quantization method that enforces all channels of each layer to share a common codebook, which leads to low bit-rate allocation without introducing the extra overhead brought by traditional channel-wise quantization. Comprehensive experiments on ImageNet with AlexNet/MobileNet-V1/ResNet-50 show that our method improves accuracy and training efficiency while obtaining significantly higher compression rates compared to the state of the art.
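
The idea of all channels in a layer sharing one codebook can be illustrated with a small sketch: every weight is replaced by the index of its nearest codebook entry, and a single codebook is stored for the whole layer. This is not OPQ itself, which derives the codebook analytically from pre-trained weights; the hand-picked codebook, the weight values, and the function names below are assumptions.

```python
def quantize_layer(channels, codebook):
    """Map every weight in every channel to the index of its nearest codebook entry."""
    return [
        [min(range(len(codebook)), key=lambda i: abs(w - codebook[i])) for w in channel]
        for channel in channels
    ]


def dequantize_layer(indices, codebook):
    """Recover approximate weights by looking up each index in the shared codebook."""
    return [[codebook[i] for i in channel] for channel in indices]


# Hand-picked codebook shared by both channels (illustrative values only).
codebook = [-0.5, 0.0, 0.5]
channels = [[0.42, -0.07, -0.61], [0.03, 0.55, -0.30]]

indices = quantize_layer(channels, codebook)
restored = dequantize_layer(indices, codebook)
print(indices)
print(restored)
```

With one codebook per layer, only the per-weight indices differ across channels, which is what avoids storing a separate codebook for each channel.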

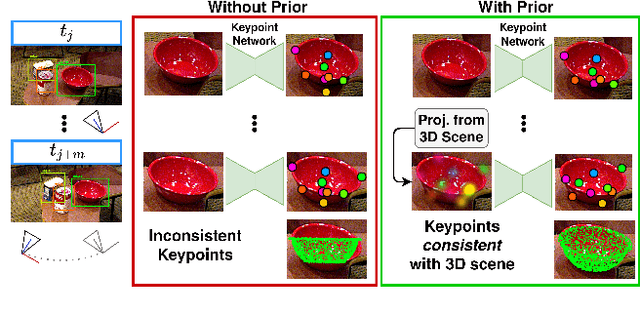

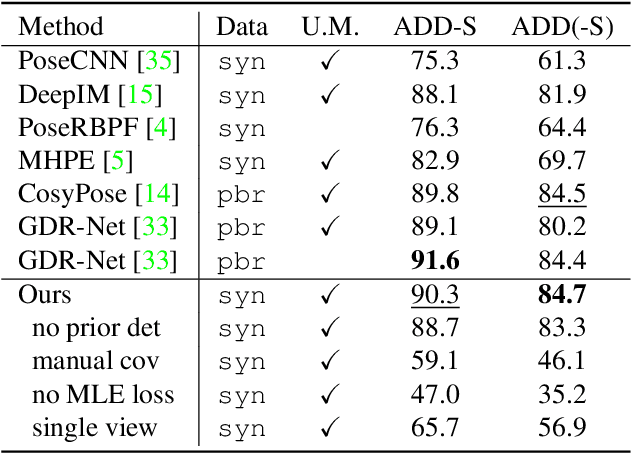

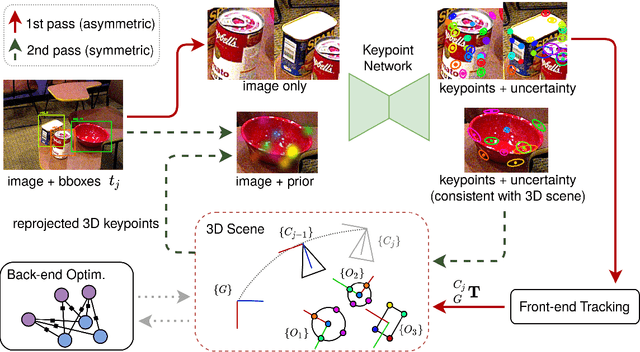

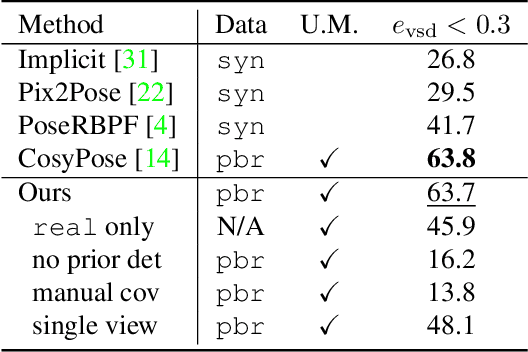

Symmetry and Uncertainty-Aware Object SLAM for 6DoF Object Pose Estimation

May 04, 2022

Abstract: We propose a keypoint-based object-level SLAM framework that can provide globally consistent 6DoF pose estimates for symmetric and asymmetric objects alike. To the best of our knowledge, our system is among the first to utilize the camera pose information from SLAM to provide prior knowledge for tracking keypoints on symmetric objects, ensuring that new measurements are consistent with the current 3D scene. Moreover, our semantic keypoint network is trained to predict the Gaussian covariance for the keypoints that captures the true error of the prediction, and thus is useful not only as a weight for the residuals in the system's optimization problems, but also as a means to detect harmful statistical outliers without choosing a manual threshold. Experiments show that our method provides performance competitive with the state of the art in 6DoF object pose estimation, at real-time speed. Our code, pre-trained models, and keypoint labels are available at https://github.com/rpng/suo_slam.
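
One common way a predicted covariance can serve both as a residual weight and as an outlier test is the squared Mahalanobis distance compared against a chi-square quantile. The sketch below shows that generic technique for a 2D keypoint residual. It is not taken from the linked repository; the 95% confidence level, the function names, and the example numbers are assumptions.

```python
# 95% quantile of the chi-square distribution with 2 degrees of freedom.
CHI2_2DOF_95 = 5.991


def mahalanobis_sq(residual, cov):
    """Squared Mahalanobis distance r^T * inv(cov) * r for a 2D residual and 2x2 covariance."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    if det <= 0:
        raise ValueError("covariance must have a positive determinant")
    rx, ry = residual
    # inv(cov) = 1/det * [[d, -b], [-c, a]]
    return (d * rx * rx - (b + c) * rx * ry + a * ry * ry) / det


def is_outlier(residual, cov, threshold=CHI2_2DOF_95):
    """Flag a keypoint whose covariance-weighted residual exceeds the chi-square quantile."""
    return mahalanobis_sq(residual, cov) > threshold


# Illustrative covariance: 2-pixel standard deviation on each axis, no correlation.
cov = [[4.0, 0.0], [0.0, 4.0]]
print(mahalanobis_sq((2.0, 2.0), cov), is_outlier((2.0, 2.0), cov))
print(mahalanobis_sq((6.0, 0.0), cov), is_outlier((6.0, 0.0), cov))
```

The same residual is judged differently depending on the predicted covariance, so the cut-off follows from the network's own uncertainty and a confidence level rather than from a pixel threshold tuned by hand.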

Are Multimodal Transformers Robust to Missing Modality?

Apr 12, 2022

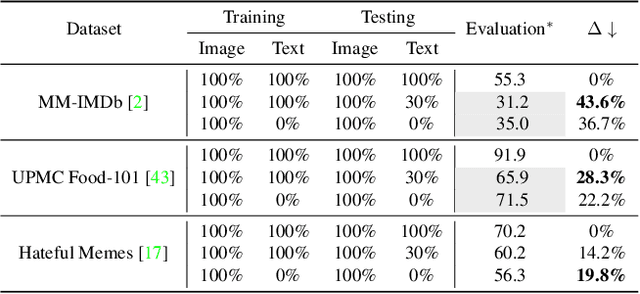

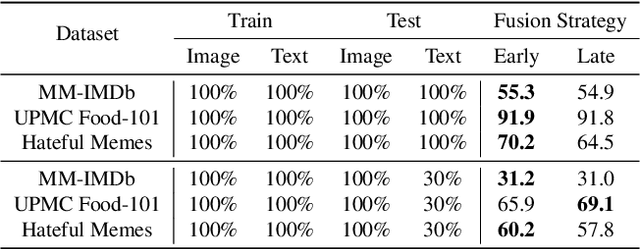

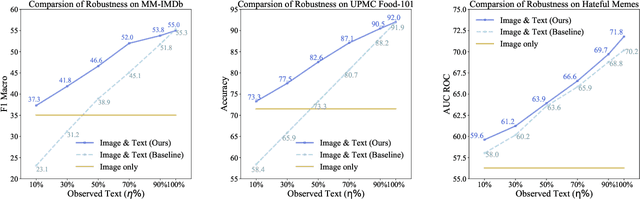

Abstract: Multimodal data collected from the real world are often imperfect due to missing modalities. Therefore, multimodal models that are robust against modal-incomplete data are highly preferred. Recently, Transformer models have shown great success in processing multimodal data. However, existing work has been limited to either architecture designs or pre-training strategies; whether Transformer models are naturally robust against missing-modal data has rarely been investigated. In this paper, we present the first-of-its-kind work to comprehensively investigate the behavior of Transformers in the presence of modal-incomplete data. Unsurprisingly, we find that Transformer models are sensitive to missing modalities, and that different modal fusion strategies significantly affect the robustness. What surprised us is that the optimal fusion strategy is dataset dependent even for the same Transformer model; there does not exist a universal strategy that works in general cases. Based on these findings, we propose a principled method to improve the robustness of Transformer models by automatically searching for an optimal fusion strategy with respect to the input data. Experimental validations on three benchmarks support the superior performance of the proposed method.

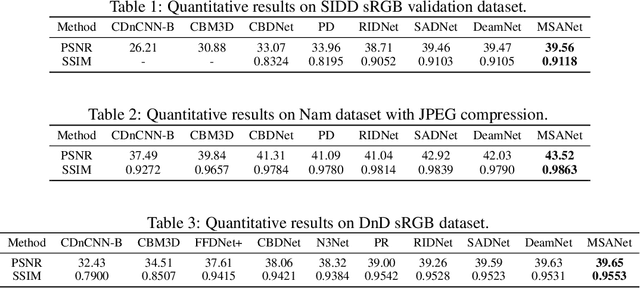

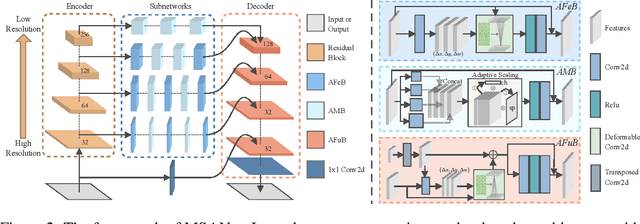

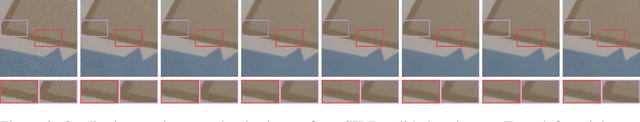

Multi-Scale Adaptive Network for Single Image Denoising

Mar 08, 2022

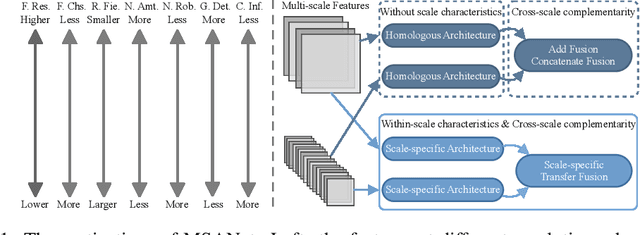

Abstract: Multi-scale architectures have shown effectiveness in a variety of tasks including single image denoising, thanks to appealing cross-scale complementarity. However, existing methods treat different scale features equally without considering their scale-specific characteristics, i.e., the within-scale characteristics are ignored. In this paper, we reveal this missing piece for multi-scale architecture design and accordingly propose a novel Multi-Scale Adaptive Network (MSANet) for single image denoising. To be specific, MSANet simultaneously embraces the within-scale characteristics and the cross-scale complementarity thanks to three novel neural blocks, i.e., adaptive feature block (AFeB), adaptive multi-scale block (AMB), and adaptive fusion block (AFuB). In brief, AFeB is designed to adaptively select details and filter noise, which is highly desirable for fine-grained features. AMB enlarges the receptive field and aggregates the multi-scale information, and is designed to satisfy the demands of both fine- and coarse-grained features. AFuB is devoted to adaptively sampling and transferring the features from one scale to another, and is used to fuse the features with varying characteristics from coarse to fine. Extensive experiments on three real and six synthetic noisy image datasets show the superiority of MSANet compared with 12 methods.
