Boyun Li

MaIR: A Locality- and Continuity-Preserving Mamba for Image Restoration

Dec 28, 2024

Abstract: Recent advancements in Mamba have shown promising results in image restoration. These methods typically flatten 2D images into multiple distinct 1D sequences along rows and columns, process each sequence independently using a selective scan operation, and recombine them to form the outputs. However, such a paradigm overlooks two vital aspects: i) the local relationships and spatial continuity inherent in natural images, and ii) the discrepancies among sequences unfolded in totally different ways. To overcome these drawbacks, we explore two problems in Mamba-based restoration methods: i) how to design a scanning strategy that preserves both locality and continuity while facilitating restoration, and ii) how to aggregate the distinct sequences unfolded in totally different ways. To address these problems, we propose a novel Mamba-based Image Restoration model (MaIR), which consists of a Nested S-shaped Scanning strategy (NSS) and a Sequence Shuffle Attention block (SSA). Specifically, NSS preserves the locality and continuity of the input images through the stripe-based scanning region and the S-shaped scanning path, respectively. SSA aggregates sequences by calculating attention weights within the corresponding channels of different sequences. Thanks to NSS and SSA, MaIR surpasses 40 baselines across 14 challenging datasets, achieving state-of-the-art performance on the tasks of image super-resolution, denoising, deblurring and dehazing. Our code will be available after acceptance.
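The idea of a stripe-based region combined with an S-shaped path can be illustrated with a small sketch. This is not the authors' implementation: the function name `nested_s_scan`, the `stripe` parameter, and the choice of horizontal stripes traversed column by column are assumptions made only to show how a scan order can keep neighbouring pixels adjacent in the flattened sequence.

```python
def nested_s_scan(height, width, stripe):
    """Return a (row, col) visiting order for a height x width grid.

    Hypothetical sketch: the grid is cut into horizontal stripes of
    `stripe` rows. Inside a stripe, columns are visited one by one and
    the vertical direction flips on every column (an S-shaped path).
    The horizontal direction flips on every stripe.
    """
    order = []
    for k, top in enumerate(range(0, height, stripe)):
        rows = list(range(top, min(top + stripe, height)))
        # Even stripes run left to right, odd stripes right to left.
        cols = range(width) if k % 2 == 0 else range(width - 1, -1, -1)
        for j, c in enumerate(cols):
            # Alternate top-to-bottom and bottom-to-top between columns.
            col_rows = rows if j % 2 == 0 else rows[::-1]
            order.extend((r, c) for r in col_rows)
    return order


# Flatten a toy 4 x 5 "image" whose pixel values are their raster indices.
image = [[r * 5 + c for c in range(5)] for r in range(4)]
sequence = [image[r][c] for r, c in nested_s_scan(4, 5, stripe=2)]
```

In this simplified version every step inside a stripe moves to a directly adjacent pixel. The path also stays continuous across stripe boundaries when the width is odd; for an even width it jumps by `stripe` rows at each boundary, which a more careful design would need to handle.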

Exploiting Diffusion Priors for All-in-One Image Restoration

Dec 02, 2023

Abstract: All-in-one image restoration aims to solve various restoration tasks in a single model. To this end, we present a feasible way of exploiting the image priors captured by a pretrained diffusion model by addressing two challenges, i.e., degradation modeling and diffusion guidance. The former aims to simulate the process by which a clean image is degraded by certain degradations, and the latter aims at guiding the diffusion model to generate the corresponding clean image. With these motivations, we propose a zero-shot framework for all-in-one image restoration, termed ZeroAIR, which alternately performs the test-time degradation modeling (TDM) and the three-stage diffusion guidance (TDG) at each timestep of the reverse sampling. To be specific, TDM exploits the diffusion priors to learn a degradation model from a given degraded image, and TDG divides the timesteps into three stages to take full advantage of the varying diffusion priors. Thanks to their degradation-agnostic property, all-in-one image restoration can be achieved in a zero-shot way by ZeroAIR. Through extensive experiments, we show that ZeroAIR achieves comparable or even better performance than task-specific methods. The code will be available on GitHub.

Relationship Quantification of Image Degradations

Dec 08, 2022

Abstract: In this paper, we study two challenging but less-touched problems in image restoration, namely, i) how to quantify the relationship between different image degradations and ii) how to improve the performance of a specific restoration task using the quantified relationship. To tackle the first challenge, the Degradation Relationship Index (DRI) is proposed to measure the degradation relationship, which is defined as the drop rate difference in the validation loss between two models, i.e., one trained using the anchor task only and another trained using the anchor and the auxiliary tasks. Through quantifying the relationship between different degradations using DRI, we empirically observe that i) the degradation combination proportion is crucial to the image restoration performance, in other words, only combinations with appropriate degradation proportions could improve the performance of the anchor restoration; and ii) a positive DRI always predicts the performance improvement of image restoration. Based on these observations, we propose an adaptive Degradation Proportion Determination strategy (DPD) which could improve the performance of the anchor restoration task by using another restoration task as auxiliary. Extensive experimental results verify the effectiveness of our method by taking image dehazing as the anchor task and denoising, desnowing, and deraining as the auxiliary tasks. The code will be released after acceptance.
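The abstract defines DRI only in words, as a difference between the validation-loss drop rates of two models. A minimal sketch of one way to compute such a quantity follows. The exact formula is an assumption: here the drop rate is taken as the relative decrease from the first to the last recorded validation loss, and the function names are hypothetical rather than taken from the paper.

```python
def drop_rate(val_losses):
    """Relative decrease of the validation loss over training.

    Assumption: measured from the first to the last recorded value.
    """
    first, last = val_losses[0], val_losses[-1]
    return (first - last) / first


def degradation_relationship_index(anchor_only_losses, joint_losses):
    """Hypothetical DRI: drop rate with the auxiliary task minus without it.

    Both loss curves are validation losses on the anchor task. A positive
    value means the jointly trained model reduced the loss faster.
    """
    return drop_rate(joint_losses) - drop_rate(anchor_only_losses)


# Toy curves: the joint model ends at a lower anchor validation loss.
dri = degradation_relationship_index([1.0, 0.7, 0.5], [1.0, 0.6, 0.25])
```

The sign convention is chosen so that it agrees with the abstract's observation that a positive DRI goes together with improved anchor performance.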

Unsupervised Neural Rendering for Image Hazing

Jul 14, 2021

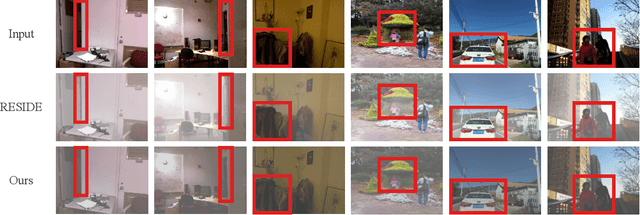

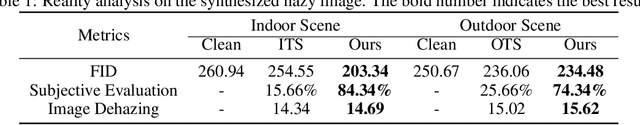

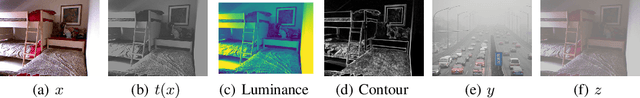

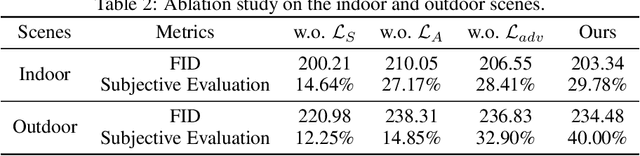

Abstract: Image hazing aims to render a hazy image from a given clean one, which could be applied to a variety of practical applications such as gaming, filming, photographic filtering, and image dehazing. To generate plausible haze, we study two less-touched but challenging problems in hazy image rendering, namely, i) how to estimate the transmission map from a single image without auxiliary information, and ii) how to adaptively learn the airlight from exemplars, i.e., unpaired real hazy images. To this end, we propose a neural rendering method for image hazing, dubbed HazeGEN. To be specific, HazeGEN is a knowledge-driven neural network which estimates the transmission map by leveraging a new prior, i.e., there exists a structural similarity (e.g., contour and luminance) between the transmission map and the input clean image. To adaptively learn the airlight, we build a neural module based on another new prior, i.e., the rendered hazy image and the exemplar are similar in the airlight distribution. To the best of our knowledge, this could be the first attempt to deeply render hazy images in an unsupervised fashion. Compared with existing haze generation methods, HazeGEN renders hazy images in an unsupervised, learnable, and controllable manner, thus avoiding the labor-intensive efforts in paired data collection and the domain-shift issue in haze generation. Extensive experiments show the promising performance of our method compared with some baselines in both qualitative and quantitative comparisons. The code will be released on GitHub after acceptance.

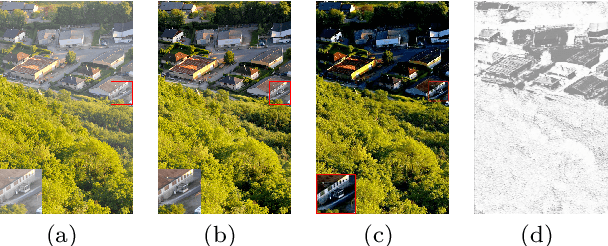

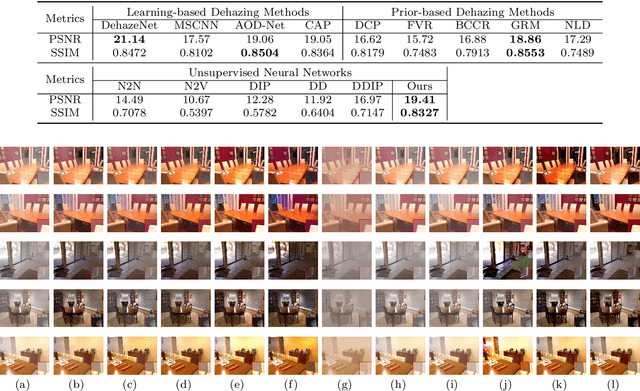

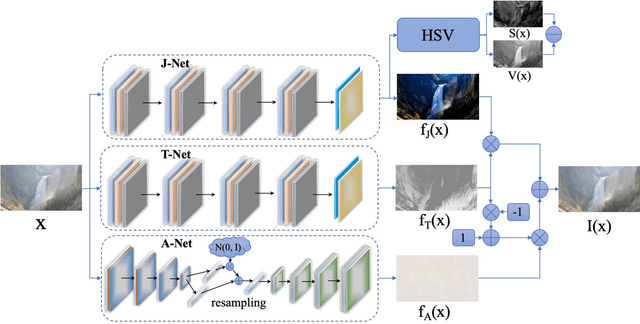

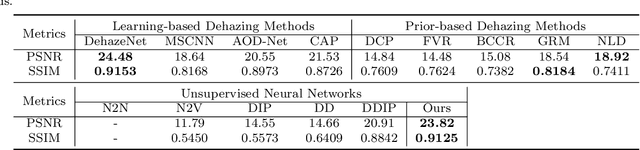

You Only Look Yourself: Unsupervised and Untrained Single Image Dehazing Neural Network

Jun 30, 2020

Abstract: In this paper, we study two challenging and less-touched problems in single image dehazing, namely, how to make deep learning achieve image dehazing without training on the ground-truth clean image (unsupervised) and an image collection (untrained). An unsupervised neural network avoids the labor-intensive collection of hazy-clean image pairs, and an untrained model is a "real" single image dehazing approach which can remove haze based only on the observed hazy image itself, with no extra images used. Motivated by the layer disentanglement idea, we propose a novel method, called you only look yourself (YOLY), which could be one of the first unsupervised and untrained neural networks for image dehazing. In brief, YOLY employs three joint subnetworks to separate the observed hazy image into several latent layers, i.e., the scene radiance layer, the transmission map layer, and the atmospheric light layer. After that, these three layers are further composed into the hazy image in a self-supervised manner. Thanks to the unsupervised and untrained characteristics of YOLY, our method bypasses the conventional training paradigm of deep models on hazy-clean pairs or a large-scale dataset, thus avoiding the labor-intensive data collection and the domain shift issue. Besides, our method also provides an effective learning-based haze transfer solution thanks to its layer disentanglement mechanism. Extensive experiments show the promising performance of our method in image dehazing compared with 14 methods on four databases.
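The three layers named in the abstract match the terms of the widely used atmospheric scattering model, I = J * t + A * (1 - t), where J is the scene radiance, t the transmission, and A the atmospheric light. The sketch below shows that composition step only. It assumes this standard model is the one used to recombine the layers, and the function name `compose_hazy` is hypothetical.

```python
def compose_hazy(radiance, transmission, airlight):
    """Recombine three layers into a hazy observation.

    Implements I = J * t + A * (1 - t). The arguments can be plain floats
    (one pixel, one channel) or array types that support elementwise
    arithmetic and broadcasting, such as NumPy arrays.
    """
    return radiance * transmission + airlight * (1.0 - transmission)


# One pixel: a dark surface seen through moderate haze under bright airlight.
hazy_pixel = compose_hazy(radiance=0.2, transmission=0.5, airlight=1.0)

# With full transmission (no haze), the observation equals the radiance.
clear_pixel = compose_hazy(radiance=0.2, transmission=1.0, airlight=0.9)
```

In a self-supervised setup of this kind, the recomposed image can be compared against the observed hazy input, which gives a reconstruction signal without any clean ground truth.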
