Investigating African-American Vernacular English in Transformer-Based Text Generation

Oct 29, 2020 · Sophie Groenwold, Lily Ou, Aesha Parekh, Samhita Honnavalli, Sharon Levy, Diba Mirza, William Yang Wang

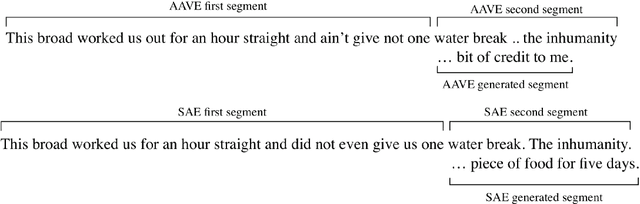

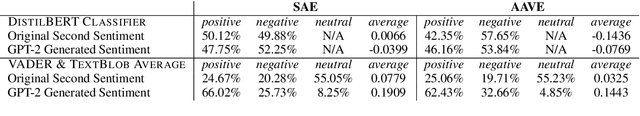

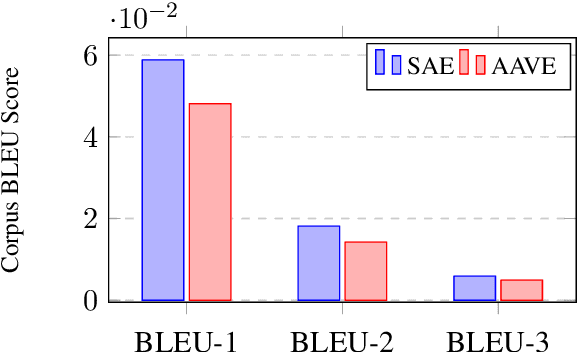

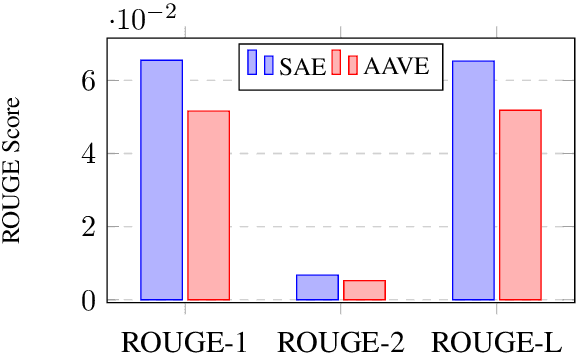

The growth of social media has encouraged the written use of African American Vernacular English (AAVE), which has traditionally been used only in oral contexts. However, NLP models have historically been developed using dominant English varieties, such as Standard American English (SAE), due to text corpora availability. We investigate the performance of GPT-2 on AAVE text by creating a dataset of intent-equivalent parallel AAVE/SAE tweet pairs, thereby isolating syntactic structure and AAVE- or SAE-specific language for each pair. We evaluate each sample and its GPT-2 generated text with pretrained sentiment classifiers and find that while AAVE text results in more classifications of negative sentiment than SAE, the use of GPT-2 generally increases occurrences of positive sentiment for both. Additionally, we conduct human evaluation of AAVE and SAE text generated with GPT-2 to compare contextual rigor and overall quality.

Unsupervised Multi-hop Question Answering by Question Generation

Oct 23, 2020 · Liangming Pan, Wenhu Chen, Wenhan Xiong, Min-Yen Kan, William Yang Wang

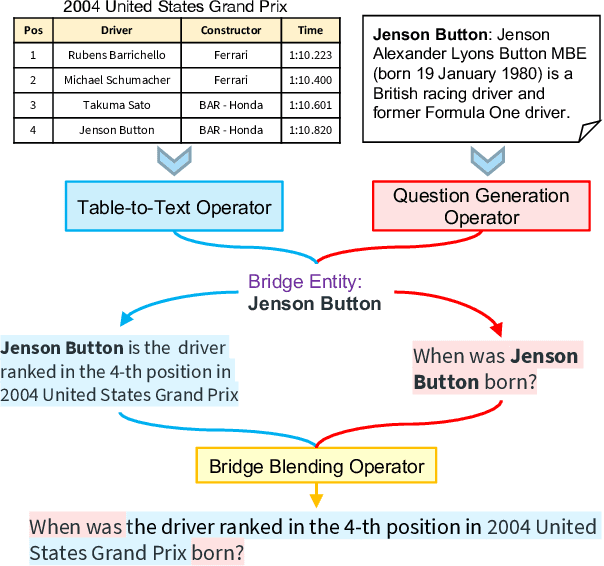

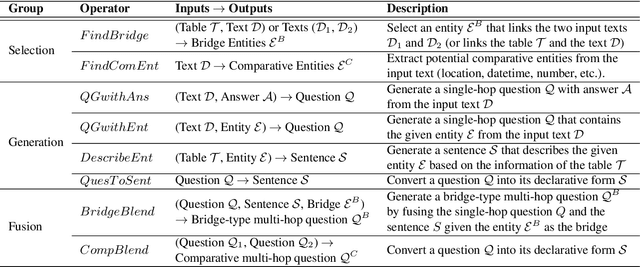

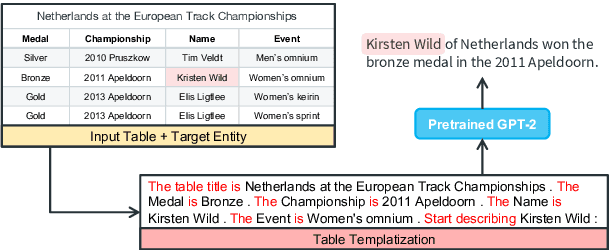

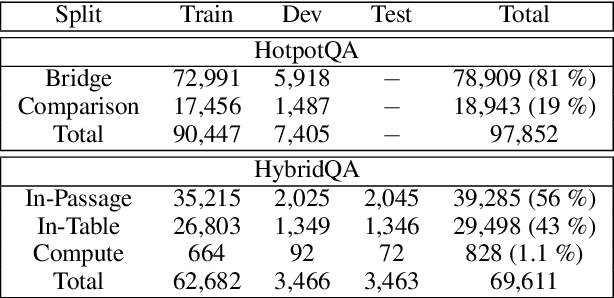

Obtaining training data for multi-hop question answering (QA) is extremely time-consuming and resource-intensive. To address this, we propose the problem of *unsupervised* multi-hop QA, assuming that no human-labeled multi-hop question-answer pairs are available. We propose MQA-QG, an unsupervised question answering framework that can generate human-like multi-hop training pairs from both homogeneous and heterogeneous data sources. Our model generates questions by first selecting or generating relevant information from each data source and then integrating these pieces of information to form a multi-hop question. We find that we can train a competent multi-hop QA model with only generated data. The F1 gap between the unsupervised and fully supervised models is less than 20 points on both the HotpotQA and HybridQA datasets. Further experiments reveal that unsupervised pretraining with the QA data generated by our model greatly reduces the demand for human-annotated training data for multi-hop QA.

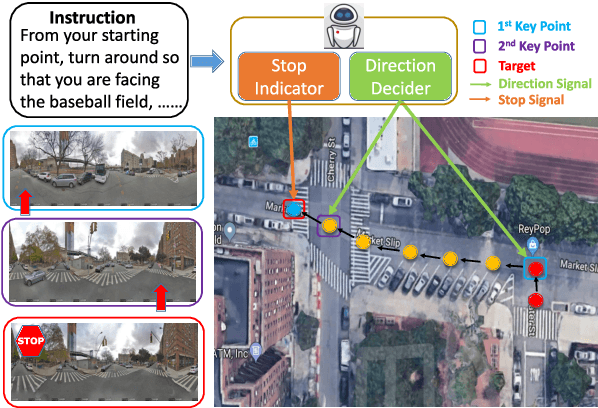

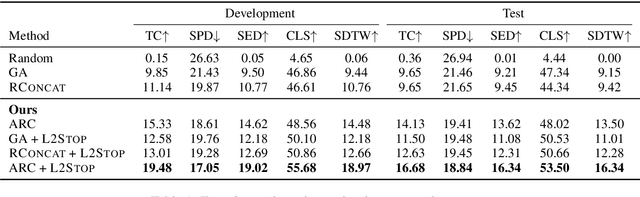

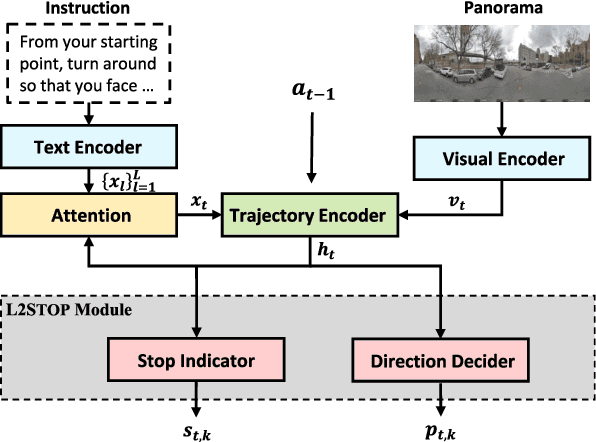

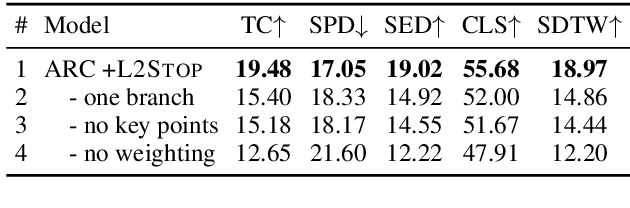

Learning to Stop: A Simple yet Effective Approach to Urban Vision-Language Navigation

Oct 18, 2020 · Jiannan Xiang, Xin Eric Wang, William Yang Wang

Vision-and-Language Navigation (VLN) is a natural language grounding task in which an agent learns to follow language instructions and navigate to specified destinations in real-world environments. A key challenge is to recognize and stop at the correct location, especially in complicated outdoor environments. Existing methods treat the STOP action the same as other actions, which leads to undesirable behavior: the agent often fails to stop at the destination even though it might be on the right path. Therefore, we propose Learning to Stop (L2Stop), a simple yet effective policy module that differentiates STOP from other actions. Our approach achieves a new state of the art on Touchdown, a challenging urban VLN dataset, outperforming the baseline by 6.89% (absolute improvement) on Success weighted by Edit Distance (SED).
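As a rough illustration of the SED metric named in the abstract above, the sketch below assumes that SED is 0 for an unsuccessful episode and otherwise equals 1 minus the Levenshtein distance between the agent's path and the reference path, normalized by the longer of the two paths. That definition is an assumption, not something stated in the abstract, and the function names and node identifiers are hypothetical.

```python
from typing import Hashable, Sequence


def levenshtein(a: Sequence[Hashable], b: Sequence[Hashable]) -> int:
    """Edit distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        curr = [i]
        for j, y in enumerate(b, 1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def sed(agent_path: Sequence[Hashable],
        reference_path: Sequence[Hashable],
        success: bool) -> float:
    """Assumed SED: success gated, then 1 - normalized edit distance."""
    if not success:
        return 0.0
    longest = max(len(agent_path), len(reference_path))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(agent_path, reference_path) / longest


# Hypothetical node ids: the agent takes one detour node but still succeeds.
score = sed(["n1", "n2", "n9", "n3"], ["n1", "n2", "n3"], success=True)
```

Under this assumed definition, an agent that follows the right path but never issues STOP at the destination scores 0, which is why a dedicated stopping policy can move the metric substantially.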

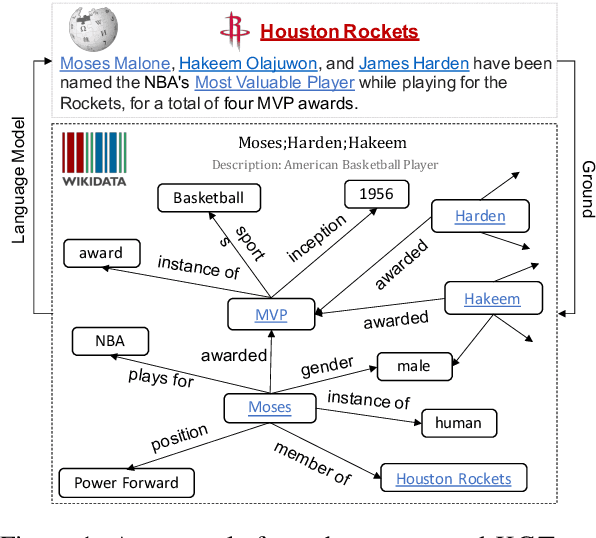

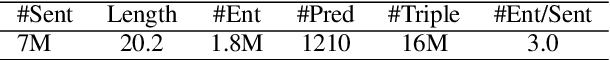

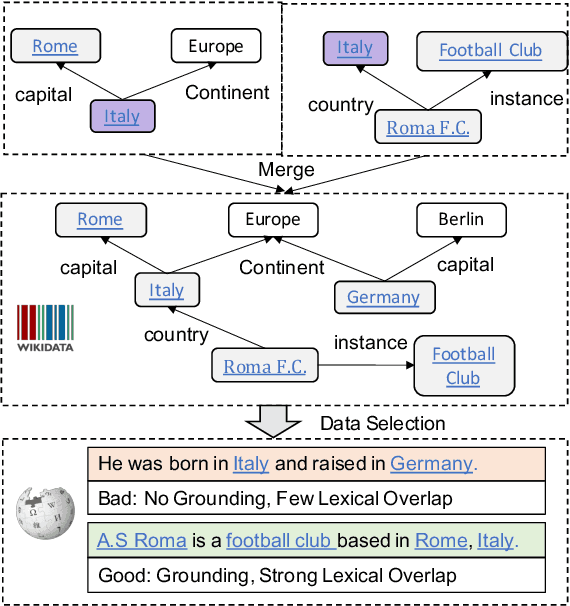

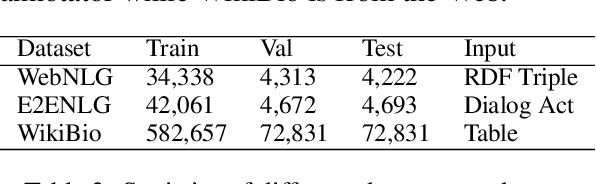

KGPT: Knowledge-Grounded Pre-Training for Data-to-Text Generation

Oct 11, 2020 · Wenhu Chen, Yu Su, Xifeng Yan, William Yang Wang

Data-to-text generation has recently attracted substantial interest due to its wide applications. Existing methods have shown impressive performance on an array of tasks. However, they rely on a significant amount of labeled data for each task, which is costly to acquire and thus limits their application to new tasks and domains. In this paper, we propose to leverage pre-training and transfer learning to address this issue. We propose knowledge-grounded pre-training (KGPT), which consists of two parts: 1) a general knowledge-grounded generation model to generate knowledge-enriched text, and 2) a pre-training paradigm on a massive knowledge-grounded text corpus crawled from the web. The pre-trained model can be fine-tuned on various data-to-text generation tasks to generate task-specific text. We adopt three settings, namely fully supervised, zero-shot, and few-shot, to evaluate its effectiveness. Under the fully supervised setting, our model achieves remarkable gains over the known baselines. Under the zero-shot setting, our model, without seeing any examples, achieves over 30 ROUGE-L on WebNLG while all other baselines fail. Under the few-shot setting, our model needs only about one-fifteenth as many labeled examples to achieve the same level of performance as baseline models. These experiments consistently demonstrate the strong generalization ability of our proposed framework. Code: https://github.com/wenhuchen/KGPT.

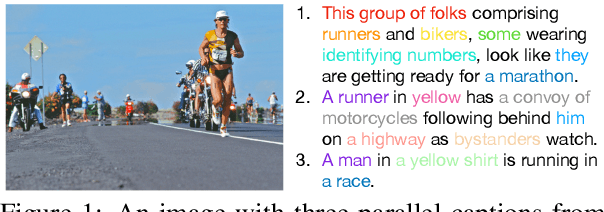

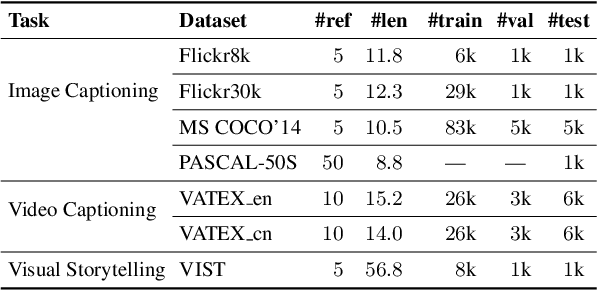

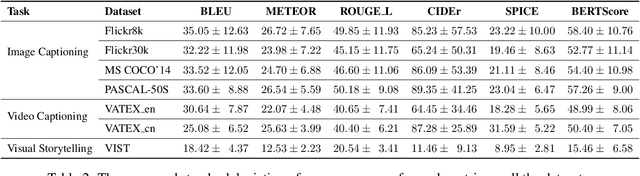

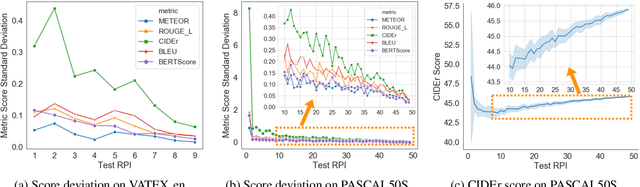

Towards Understanding Sample Variance in Visually Grounded Language Generation: Evaluations and Observations

Oct 07, 2020 · Wanrong Zhu, Xin Eric Wang, Pradyumna Narayana, Kazoo Sone, Sugato Basu, William Yang Wang

A major challenge in visually grounded language generation is to build robust benchmark datasets and models that generalize well in real-world settings. To do this, it is critical to ensure that our evaluation protocols are correct and that benchmarks are reliable. In this work, we set out to design a set of experiments to understand an important but often ignored problem in visually grounded language generation: given that humans have different utilities and visual attention, how does the sample variance in multi-reference datasets affect the models' performance? Empirically, we study several multi-reference datasets and their corresponding vision-and-language tasks. We show that it is of paramount importance to report variance in experiments; that human-generated references can vary drastically across datasets and tasks, revealing the nature of each task; and that, metric-wise, CIDEr shows systematically larger variance than other metrics. Our evaluations on references per instance shed light on the design of reliable datasets in the future.
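To make the notion of sample variance across references concrete, here is a minimal sketch that scores one generated caption against each human reference separately and reports the mean and variance of those scores. The unigram F1 used here is only a simple stand-in chosen for brevity (it is not CIDEr or any metric from the paper), and all function names and example captions are hypothetical.

```python
from collections import Counter
from statistics import mean, pvariance
from typing import List, Tuple


def unigram_f1(hypothesis: str, reference: str) -> float:
    """Toy overlap metric: F1 over whitespace tokens (stand-in only)."""
    hyp, ref = Counter(hypothesis.split()), Counter(reference.split())
    overlap = sum((hyp & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(hyp.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def score_spread(hypothesis: str, references: List[str]) -> Tuple[float, float]:
    """Mean and population variance of per-reference scores for one instance."""
    scores = [unigram_f1(hypothesis, r) for r in references]
    return mean(scores), pvariance(scores)


# Two hypothetical references that describe the same image differently.
avg, var = score_spread("a dog runs", ["a dog runs", "a cat sleeps"])
```

Reporting `var` alongside `avg` shows how strongly a score depends on which reference happened to be sampled, which is the effect the abstract argues should be reported.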

SSCR: Iterative Language-Based Image Editing via Self-Supervised Counterfactual Reasoning

Sep 29, 2020 · Tsu-Jui Fu, Xin Eric Wang, Scott Grafton, Miguel Eckstein, William Yang Wang

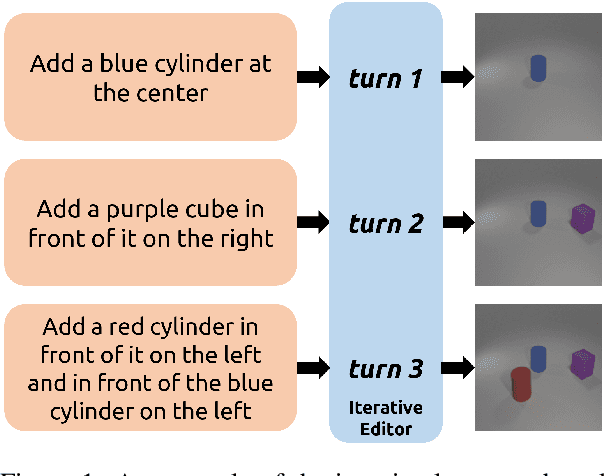

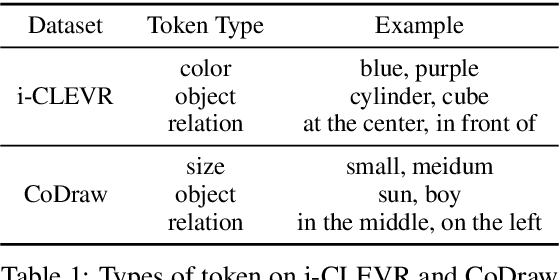

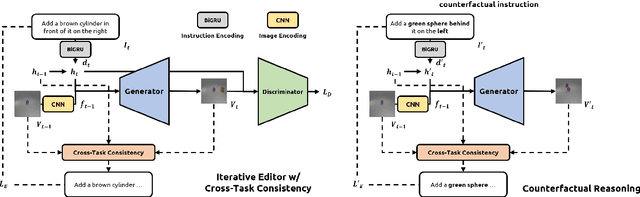

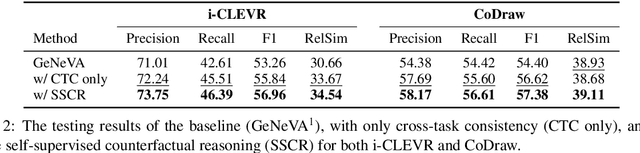

Iterative Language-Based Image Editing (ILBIE) tasks follow iterative instructions to edit images step by step. Data scarcity is a significant issue for ILBIE, as it is challenging to collect large-scale examples of images before and after instruction-based changes. However, humans still accomplish these editing tasks even when presented with an unfamiliar image-instruction pair. This ability results from counterfactual thinking, the capacity to consider alternatives to events that have already happened. In this paper, we introduce a Self-Supervised Counterfactual Reasoning (SSCR) framework that incorporates counterfactual thinking to overcome data scarcity. SSCR allows the model to consider out-of-distribution instructions paired with previous images. With the help of cross-task consistency (CTC), we train on these counterfactual instructions in a self-supervised scenario. Extensive results show that SSCR improves the correctness of ILBIE in terms of both object identity and position, establishing a new state of the art (SOTA) on two ILBIE datasets (i-CLEVR and CoDraw). Even with only 50% of the training data, SSCR achieves results comparable to using the complete data.

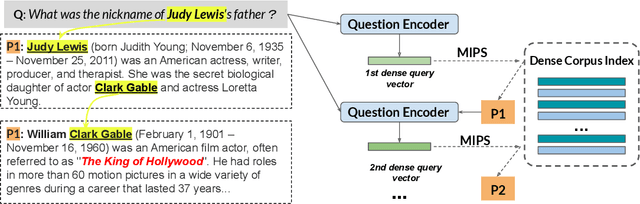

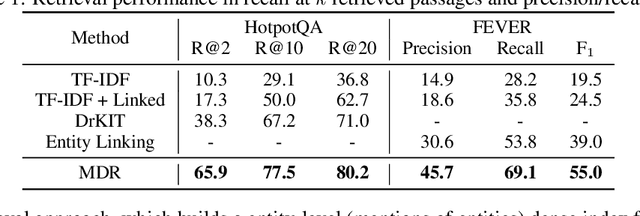

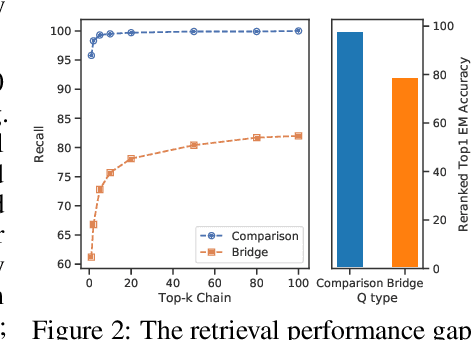

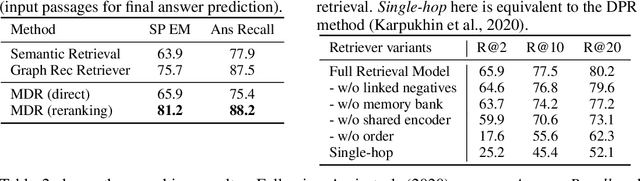

Answering Complex Open-Domain Questions with Multi-Hop Dense Retrieval

Sep 27, 2020 · Wenhan Xiong, Xiang Lorraine Li, Srini Iyer, Jingfei Du, Patrick Lewis, William Yang Wang, Yashar Mehdad, Wen-tau Yih, Sebastian Riedel, Douwe Kiela, Barlas Oğuz

We propose a simple and efficient multi-hop dense retrieval approach for answering complex open-domain questions, which achieves state-of-the-art performance on two multi-hop datasets, HotpotQA and multi-evidence FEVER. Contrary to previous work, our method does not require access to any corpus-specific information, such as inter-document hyperlinks or human-annotated entity markers, and can be applied to any unstructured text corpus. Our system also yields a much better efficiency-accuracy trade-off, matching the best published accuracy on HotpotQA while being 10 times faster at inference time.
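The general idea of multi-hop retrieval over plain text can be sketched as follows: retrieve the best passage for the question, append it to the query, re-encode, and retrieve again. This is only a toy illustration under stated assumptions. The `embed` function is a normalized bag-of-words stand-in for a learned dense encoder, the greedy one-passage-per-hop selection is a simplification, and the corpus, question, and function names are all hypothetical rather than taken from the paper.

```python
import math
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Dict[str, float]:
    """Toy stand-in for a learned encoder: L2-normalized token counts."""
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(v * v for v in counts.values()))
    if norm == 0:
        return {}
    return {tok: v / norm for tok, v in counts.items()}


def inner(u: Dict[str, float], v: Dict[str, float]) -> float:
    """Inner product of two sparse vectors."""
    return sum(w * v.get(tok, 0.0) for tok, w in u.items())


def multi_hop_retrieve(question: str, corpus: List[str], hops: int = 2) -> List[int]:
    """Greedy retrieval: each hop's query is the question plus passages so far."""
    query, chain = question, []
    for _ in range(hops):
        q_vec = embed(query)
        candidates = [i for i in range(len(corpus)) if i not in chain]
        if not candidates:
            break
        best = max(candidates, key=lambda i: inner(q_vec, embed(corpus[i])))
        chain.append(best)
        query = query + " " + corpus[best]  # reformulate query with new evidence
    return chain


corpus = [
    "Alice wrote the novel Silverlake",
    "Silverlake was published in Paris",
    "A journal was published in Berlin",
]
chain = multi_hop_retrieve("Where was the novel Alice wrote published", corpus)
```

Because each hop uses only the question and previously retrieved text, a procedure of this shape needs no hyperlinks or entity annotations, which matches the corpus-agnostic property the abstract emphasizes.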
