Weifeng Lv

Collaborative Learning in General Graphs with Limited Memorization: Learnability, Complexity and Reliability

Jan 29, 2022

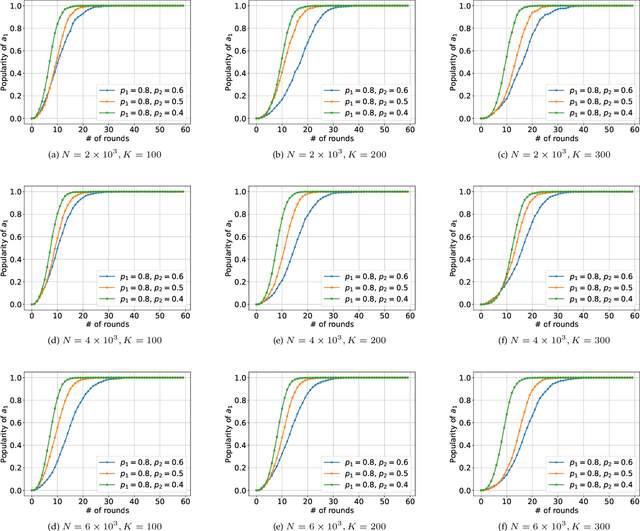

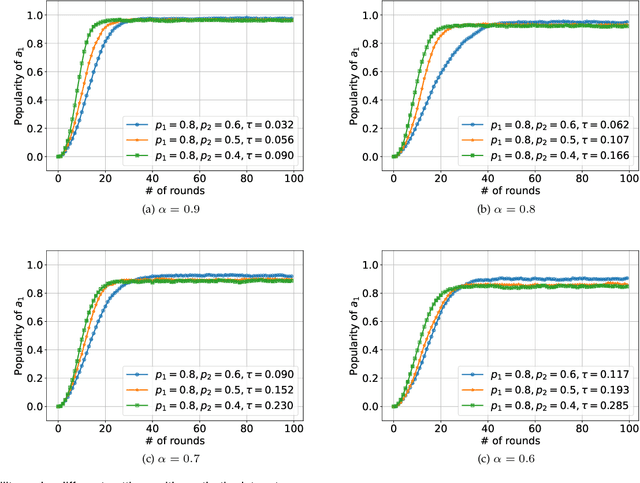

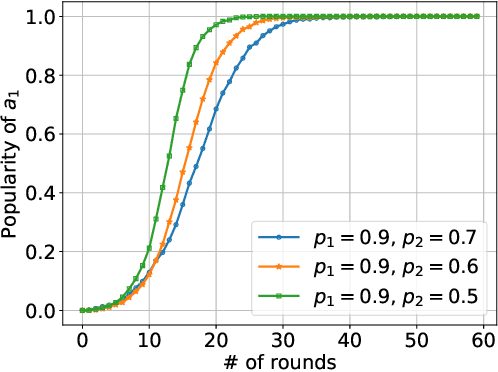

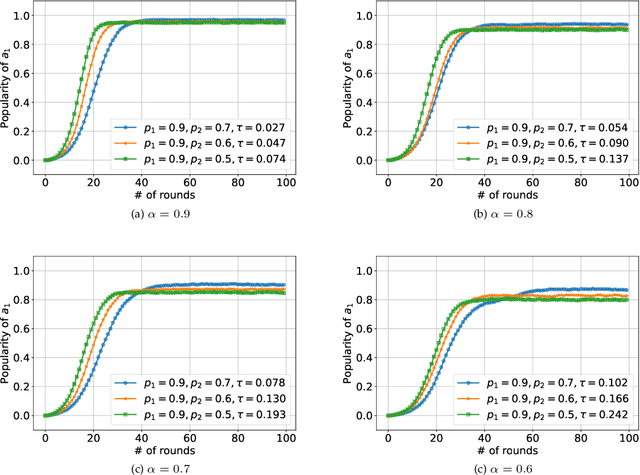

Abstract: We consider the K-armed bandit problem in general graphs, where agents are arbitrarily connected and each of them has limited memorization and communication bandwidth. The goal is to let each of the agents learn the best arm. Although recent studies show the power of collaboration among agents in improving the efficacy of learning, these studies assume that the communication graph is complete or well-structured, an assumption that is not always valid in practice. Furthermore, limited memorization and communication bandwidth also restrict collaboration among the agents, since each agent can draw very little knowledge from its own experience or from the experience shared by its peers. Additionally, the agents may be corrupted to share falsified experience, while the resource limit may considerably restrict the reliability of the learning process. To address these issues, we propose a three-staged collaborative learning algorithm. In each step, the agents share their experience with each other through light-weight random walks in the general graph, and then decide which arms to pull according to the randomly memorized suggestions. The agents finally update their adoptions (i.e., preferences for the arms) based on the reward feedback from pulling the arms. Our theoretical analysis shows that, by exploiting the limited memorization and communication resources, all the agents eventually learn the best arm with high probability. Our analysis also gives an upper bound on the number of corrupted agents our algorithm can tolerate. The efficacy of the proposed three-staged collaborative learning algorithm is finally verified by extensive experiments on both synthetic and real datasets.

Heterogeneous Graph Representation Learning with Relation Awareness

May 24, 2021

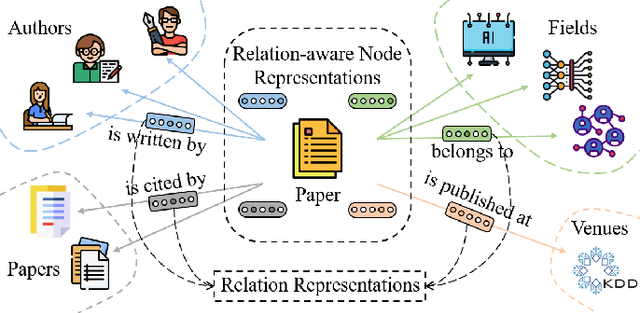

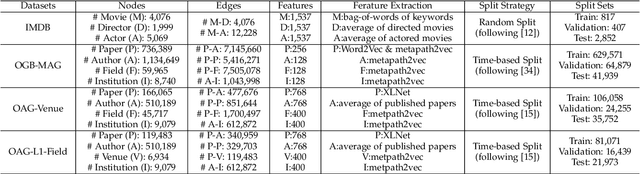

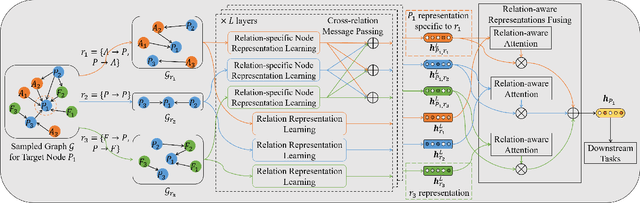

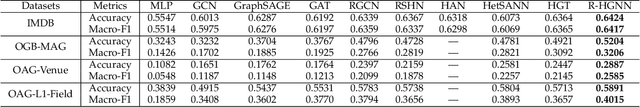

Abstract: Representation learning on heterogeneous graphs aims to obtain meaningful node representations to facilitate various downstream tasks, such as node classification and link prediction. Existing heterogeneous graph learning methods are primarily developed by following the propagation mechanism of node representations. Few efforts have studied the role of relations in learning more fine-grained node representations. Indeed, it is important to collaboratively learn the semantic representations of relations and discern node representations with respect to different relation types. To this end, we propose a novel Relation-aware Heterogeneous Graph Neural Network, namely R-HGNN, to learn node representations on heterogeneous graphs at a fine-grained level by considering relation-aware characteristics. Specifically, a dedicated graph convolution component is first designed to learn unique node representations from each relation-specific graph separately. Then, a cross-relation message passing module is developed to improve the interactions of node representations across different relations. Also, the relation representations are learned in a layer-wise manner to capture relation semantics, which are used to guide the node representation learning process. Moreover, a semantic fusing module is presented to aggregate relation-aware node representations into a compact representation with the learned relation representations. Finally, we conduct extensive experiments on a variety of graph learning tasks, and experimental results demonstrate that our approach consistently outperforms existing methods across all the tasks.

Hybrid Micro/Macro Level Convolution for Heterogeneous Graph Learning

Dec 29, 2020

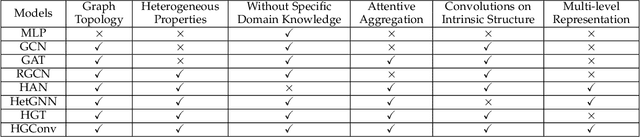

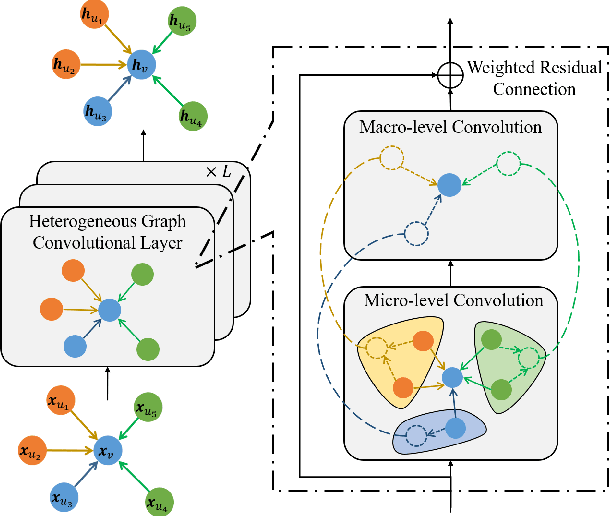

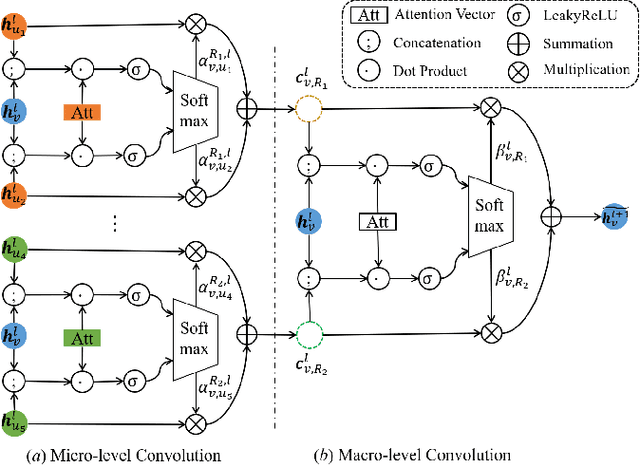

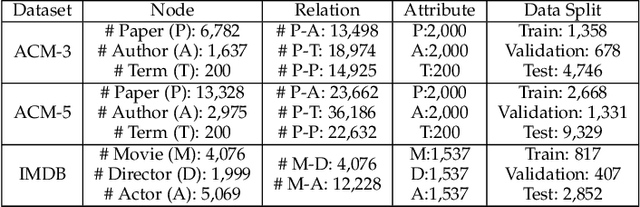

Abstract: Heterogeneous graphs are pervasive in practical scenarios, where each graph consists of multiple types of nodes and edges. Representation learning on heterogeneous graphs aims to obtain low-dimensional node representations that preserve both node attributes and relation information. However, most existing graph convolution approaches were designed for homogeneous graphs, and therefore cannot handle heterogeneous graphs. Some recent methods designed for heterogeneous graphs also face several issues, including insufficient utilization of heterogeneous properties, structural information loss, and lack of interpretability. In this paper, we propose HGConv, a novel Heterogeneous Graph Convolution approach, to learn comprehensive node representations on heterogeneous graphs with a hybrid micro/macro level convolutional operation. Unlike existing methods, HGConv performs convolutions directly on the intrinsic structure of heterogeneous graphs at both micro and macro levels: a micro-level convolution to learn the importance of nodes within the same relation, and a macro-level convolution to distinguish the subtle differences across different relations. The hybrid strategy enables HGConv to fully leverage heterogeneous information with proper interpretability. Moreover, a weighted residual connection is designed to adaptively aggregate both the inherent attributes and the neighbor information of the focal node. Extensive experiments on various tasks demonstrate not only the superiority of HGConv over existing methods, but also the intuitive interpretability of our approach for graph analysis.
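
The two levels described in this abstract can be illustrated with a small sketch. It is not HGConv itself: the dot-product attention, the helper names (`micro_level`, `macro_level`), the relation names and the toy feature vectors are all assumptions made for illustration, and the weighted residual connection is left out.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def weighted_sum(weights, vectors):
    dim = len(vectors[0])
    return [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]

def micro_level(focal, neighbors):
    """Aggregate the neighbors under ONE relation, weighted by attention.

    Assumption: attention scores are plain dot products with the focal node.
    """
    attn = softmax([dot(focal, n) for n in neighbors])
    return weighted_sum(attn, neighbors), attn

def macro_level(focal, per_relation):
    """Combine relation-specific summaries, weighted by attention over relations."""
    names = list(per_relation)
    attn = softmax([dot(focal, per_relation[r]) for r in names])
    combined = weighted_sum(attn, [per_relation[r] for r in names])
    return combined, dict(zip(names, attn))

# Hypothetical toy data: one focal node with neighbors under two relations.
focal = [1.0, 0.0]
neighbors_by_relation = {
    "writes": [[1.0, 0.0], [0.0, 1.0]],
    "cites": [[0.0, 1.0]],
}
summaries = {r: micro_level(focal, ns)[0] for r, ns in neighbors_by_relation.items()}
embedding, relation_weights = macro_level(focal, summaries)
```

The attention weights returned at each level are what would make such a model inspectable: `relation_weights` shows how much each relation contributed to the final vector for this node.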

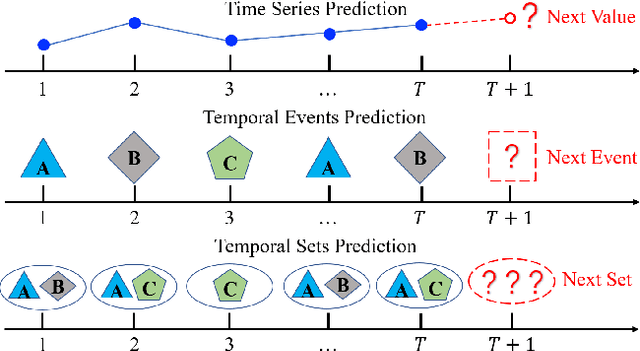

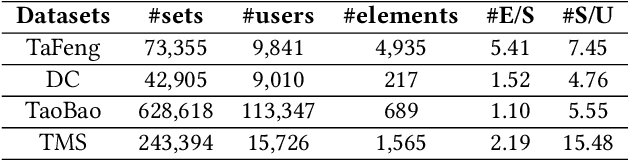

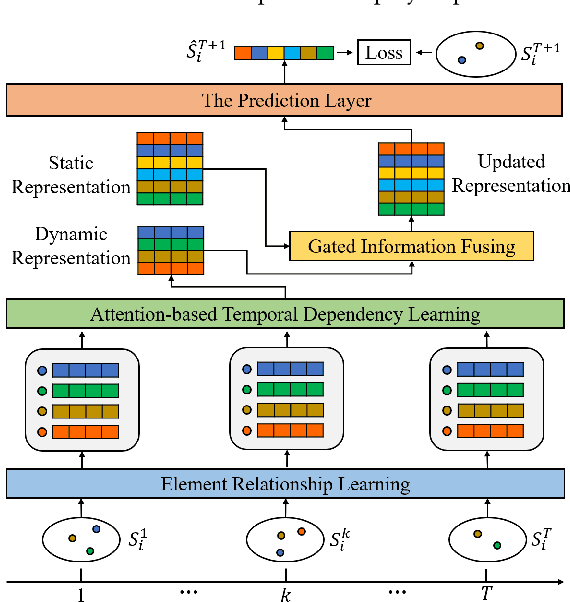

Predicting Temporal Sets with Deep Neural Networks

Jul 08, 2020

Abstract: Given a sequence of sets, where each set contains an arbitrary number of elements, the problem of temporal sets prediction aims to predict the elements in the subsequent set. In practice, temporal sets prediction is much more complex than predictive modelling of temporal events and time series, and is still an open problem. Many existing methods, if adapted to temporal sets prediction, follow a two-step strategy: they first project temporal sets into latent representations and then learn a predictive model on those representations. The two-step approach often leads to information loss and unsatisfactory prediction performance. In this paper, we propose an integrated solution based on deep neural networks for temporal sets prediction. A unique perspective of our approach is to learn element relationships by constructing a set-level co-occurrence graph and then performing graph convolutions on the dynamic relationship graphs. Moreover, we design an attention-based module to adaptively learn the temporal dependency of elements and sets. Finally, we provide a gated updating mechanism to find the hidden shared patterns in different sequences and fuse both static and dynamic information to improve the prediction performance. Experiments on real-world data sets demonstrate that our approach can achieve competitive performance even with a portion of the training data and can outperform existing methods by a significant margin.
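
One plausible reading of the set-level co-occurrence graph mentioned in this abstract is sketched below: each set in the sequence yields its own graph in which every pair of elements is linked. The function names and the example baskets are hypothetical, and the abstract does not say how the paper weights or normalizes edges, so the cross-sequence counts here are only an assumption.

```python
from collections import Counter
from itertools import combinations

def set_level_graphs(sequence):
    """Return one undirected edge set per set in the sequence.

    Elements are sorted so that each edge has a single canonical (u, v) form.
    """
    return [set(combinations(sorted(s), 2)) for s in sequence]

def cooccurrence_counts(sequence):
    """Count how many sets in the sequence contain each pair of elements."""
    counts = Counter()
    for edges in set_level_graphs(sequence):
        counts.update(edges)
    return counts

# Hypothetical example: three shopping baskets observed over time.
baskets = [{"milk", "bread"}, {"milk", "bread", "eggs"}, {"eggs"}]
graphs = set_level_graphs(baskets)
counts = cooccurrence_counts(baskets)
```

A singleton set produces an empty graph, so a model built on these graphs would need some other signal (for example, the element's own features) for such time steps.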

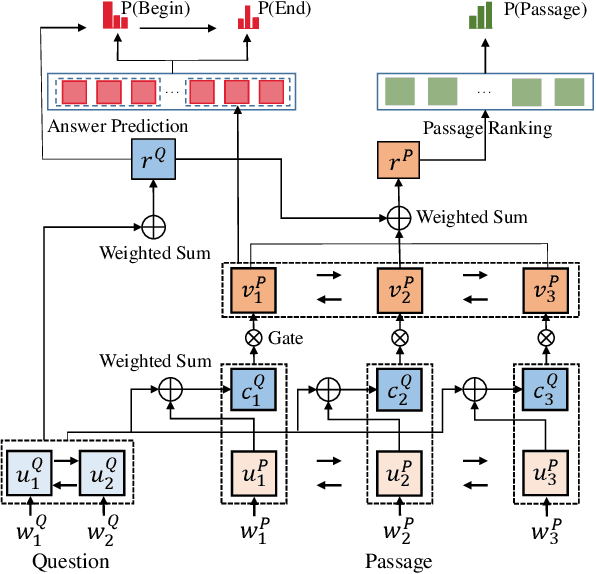

S-Net: From Answer Extraction to Answer Generation for Machine Reading Comprehension

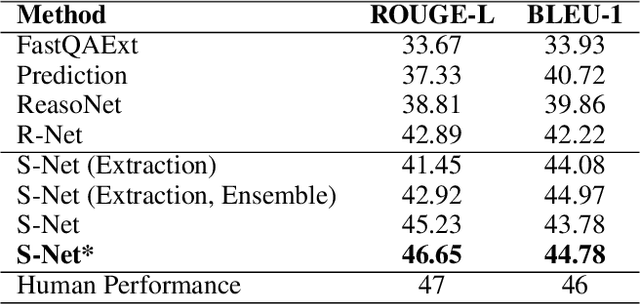

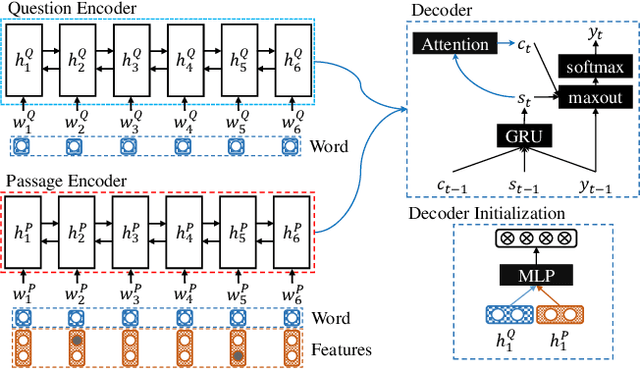

Jan 02, 2018

Abstract: In this paper, we present a novel approach to machine reading comprehension for the MS-MARCO dataset. Unlike the SQuAD dataset, which aims to answer a question with exact text spans in a passage, the MS-MARCO dataset defines the task as answering a question from multiple passages, and the words in the answer are not necessarily in the passages. We therefore develop an extraction-then-synthesis framework to synthesize answers from extraction results. Specifically, the answer extraction model is first employed to predict the most important sub-spans from the passage as evidence, and the answer synthesis model takes the evidence as additional features along with the question and passage to further elaborate the final answers. We build the answer extraction model with state-of-the-art neural networks for single passage reading comprehension, and propose an additional task of passage ranking to help answer extraction in multiple passages. The answer synthesis model is based on sequence-to-sequence neural networks with the extracted evidence as features. Experiments show that our extraction-then-synthesis method outperforms state-of-the-art methods.

Entity Linking for Queries by Searching Wikipedia Sentences

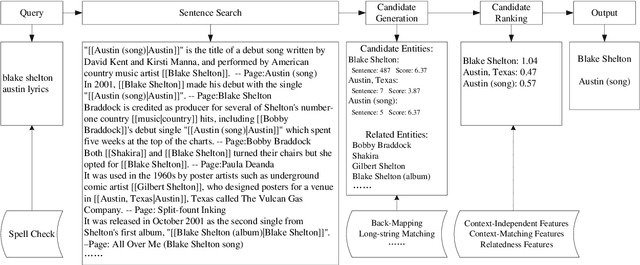

May 18, 2017

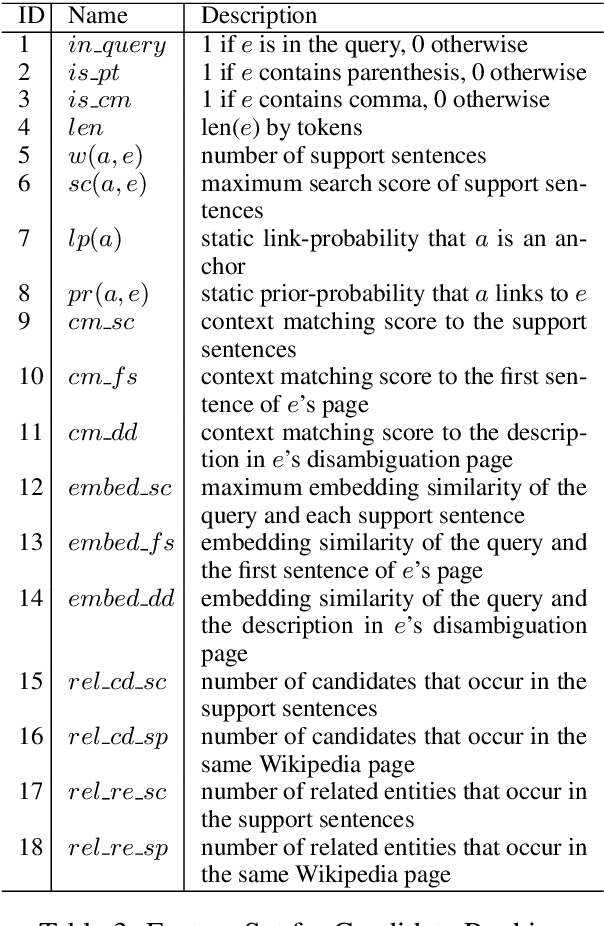

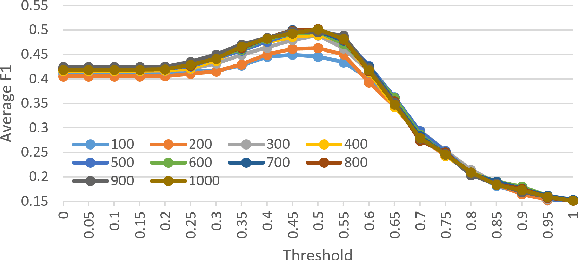

Abstract: We present a simple yet effective approach for linking entities in queries. The key idea is to search Wikipedia articles for sentences similar to a query and directly use the human-annotated entities in those sentences as candidate entities for the query. Then, we employ a rich set of features, such as link-probability, context-matching, word embeddings, and relatedness among candidate entities as well as their related entities, to rank the candidates under a regression-based framework. The advantages of our approach lie in two aspects, which contribute to the ranking process and the final linking result. First, it can greatly reduce the number of candidate entities by filtering out irrelevant entities with the words in the query. Second, we can obtain the query-sensitive prior probability in addition to the static link-probability derived from all Wikipedia articles. We conduct experiments on two benchmark datasets on entity linking for queries, namely the ERD14 dataset and the GERDAQ dataset. Experimental results show that our method outperforms state-of-the-art systems and yields 75.0% in F1 on the ERD14 dataset and 56.9% on the GERDAQ dataset.

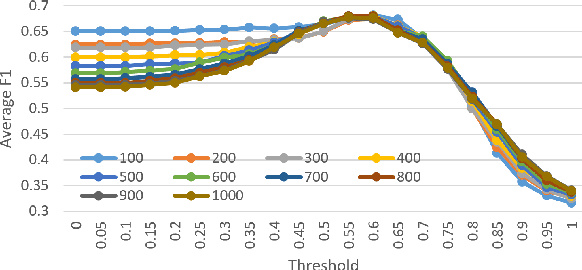

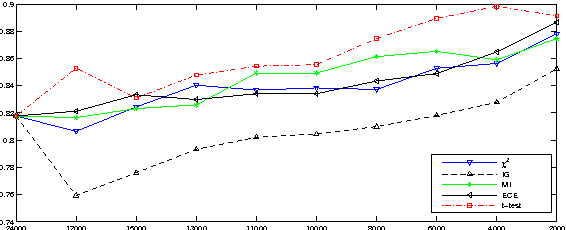

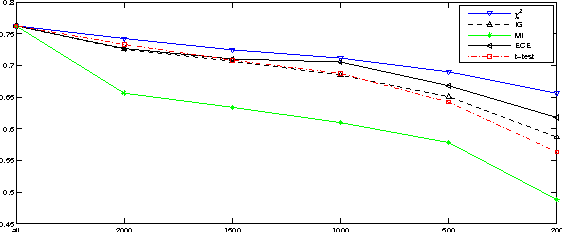

Feature Selection Based on Term Frequency and T-Test for Text Categorization

May 03, 2013

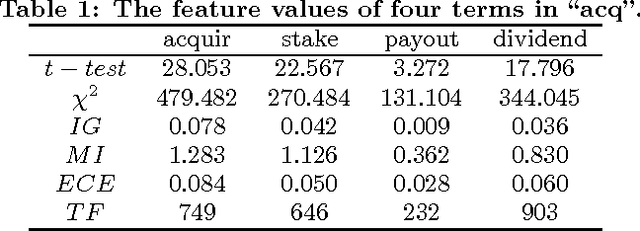

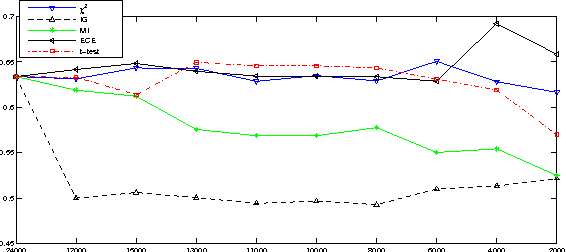

Abstract: Much work has been done on feature selection. Existing methods, such as the Chi-Square Statistic and Information Gain, are based on document frequency. However, these methods have two shortcomings: they are not reliable for low-frequency terms, and they only count whether a term occurs in a document and ignore the term frequency. In fact, high-frequency terms within a specific category are often regarded as discriminators. This paper focuses on how to construct the feature selection function based on term frequency, and proposes a new approach based on the $t$-test, which is used to measure the diversity of the distributions of a term between the specific category and the entire corpus. Extensive comparative experiments on two text corpora using three classifiers show that our new approach is comparable to or slightly better than the state-of-the-art feature selection methods (i.e., $\chi^2$ and IG) in terms of macro-$F_1$ and micro-$F_1$.
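
To make the idea of a term-frequency-based $t$-test score concrete, here is a minimal sketch. It is an assumption-laden stand-in rather than the paper's formula: it uses a Welch-style statistic that compares a term's mean per-document frequency inside one category with its mean over the whole corpus, and the function name `t_scores` and the toy corpus are hypothetical. It also assumes the category contains at least one document.

```python
import math
from collections import Counter

def t_scores(docs, labels, category):
    """Score each term by a Welch-style t statistic (assumed form).

    docs: list of tokenized documents (lists of terms)
    labels: category label of each document
    category: the category whose term distribution is compared to the corpus
    """
    counts = [Counter(doc) for doc in docs]
    vocab = sorted(set().union(*counts))
    in_cat = [c for c, y in zip(counts, labels) if y == category]

    def mean_var(samples):
        # Sample mean and unbiased sample variance (0.0 for a single sample).
        n = len(samples)
        mean = sum(samples) / n
        var = sum((x - mean) ** 2 for x in samples) / (n - 1) if n > 1 else 0.0
        return mean, var, n

    scores = {}
    for term in vocab:
        m_c, v_c, n_c = mean_var([c[term] for c in in_cat])
        m_a, v_a, n_a = mean_var([c[term] for c in counts])
        denom = math.sqrt(v_c / n_c + v_a / n_a)
        # Absolute value: a term can discriminate by being unusually
        # frequent OR unusually rare in the category.
        scores[term] = abs(m_c - m_a) / denom if denom > 0 else 0.0
    return scores

# Hypothetical toy corpus.
docs = [
    ["goal", "goal", "match"],
    ["goal", "team"],
    ["stock", "market"],
    ["stock", "stock", "market"],
]
labels = ["sport", "sport", "finance", "finance"]
scores = t_scores(docs, labels, "sport")
```

Unlike a document-frequency measure, this score changes when a term is repeated within a document: in the toy corpus, "goal" outranks "match" for the "sport" category partly because it occurs twice in one document.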

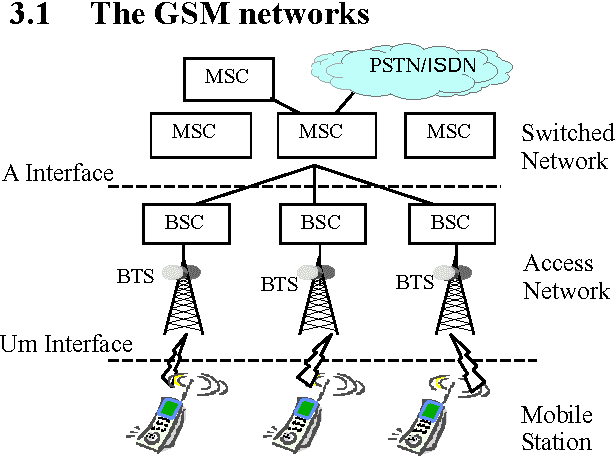

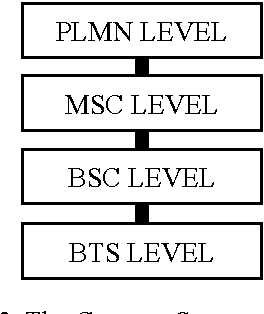

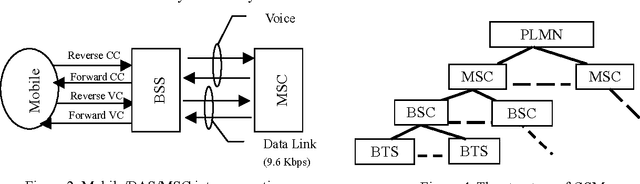

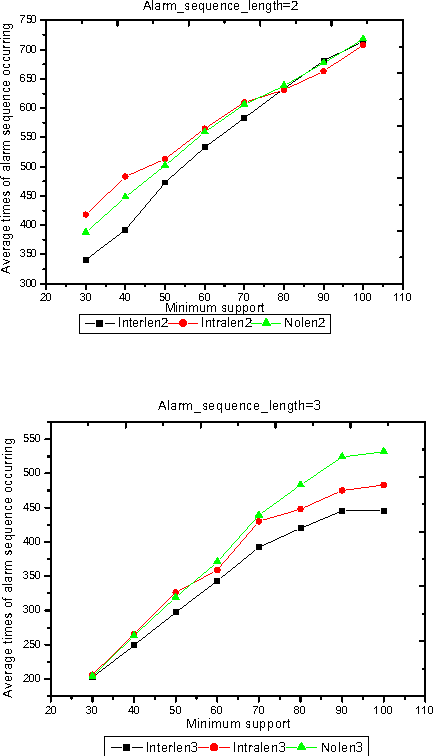

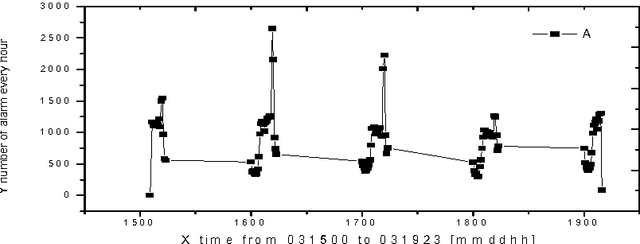

Intelligent Search of Correlated Alarms for GSM Networks with Model-based Constraints

Apr 29, 2002

Abstract: To control the data mining process and focus on the things of interest, many kinds of constraints have been added to data mining algorithms. However, discovering correlated alarms in the alarm database needs deep domain constraints, because the correlated alarms depend greatly on the logical and physical architecture of the network. We therefore use the network model as the constraints of the algorithm, including the Scope constraint, the Inter-correlated constraint and the Intra-correlated constraint, in our proposed algorithm called SMC (Search with Model-based Constraints). The experiments show that the SMC algorithm with the Inter-correlated or Intra-correlated constraint is about two times faster than the algorithm with no constraints.

* 8 pages, 7 figures

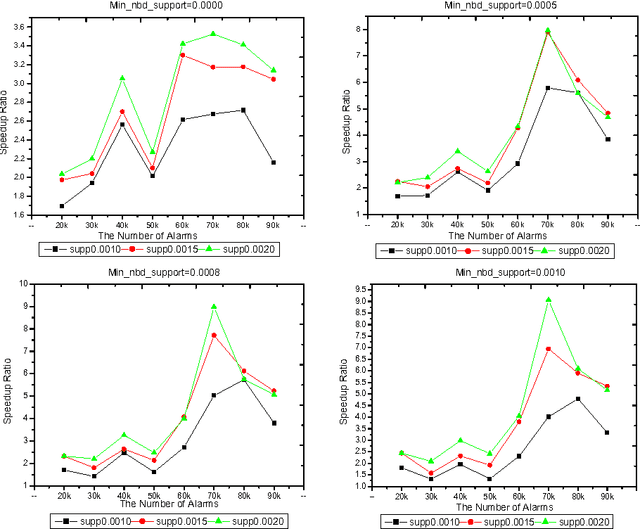

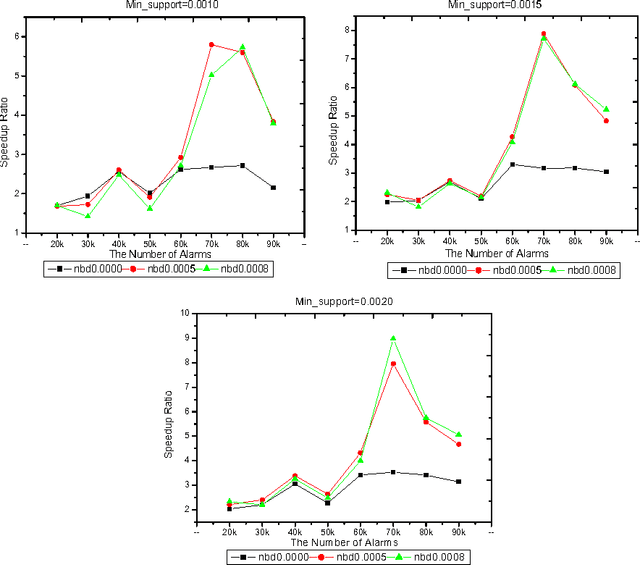

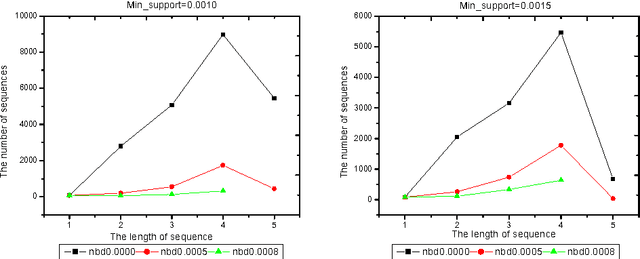

The Algorithms of Updating Sequential Patterns

Mar 27, 2002

Abstract: Because the data being mined in a temporal database evolve with time, many researchers have focused on the incremental mining of frequent sequences in temporal databases. In this paper, we propose an algorithm called IUS, which uses the frequent and negative border sequences in the original database for incremental sequence mining. To deal with the case where some data need to be updated from the original database, we present an algorithm called DUS to maintain sequential patterns in the updated database. We also define the negative border sequence threshold, Min_nbd_supp, to control the number of sequences in the negative border.

* 12 pages, 4 figures

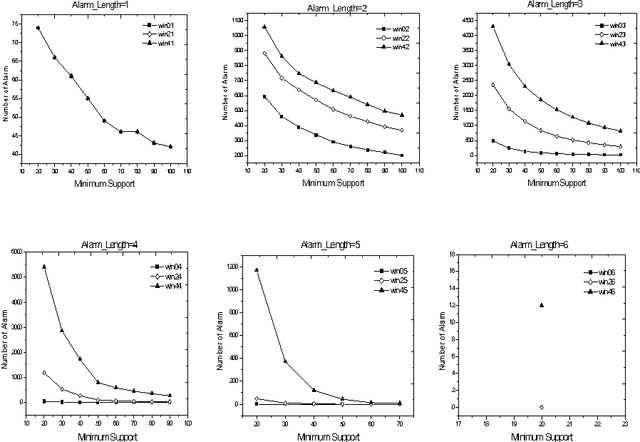

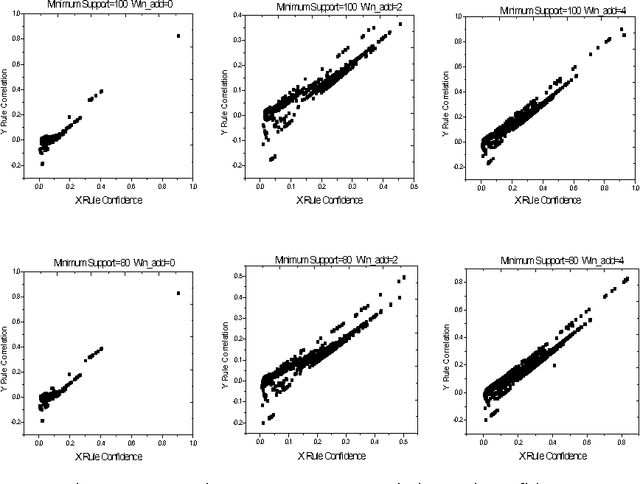

Intelligent Search of Correlated Alarms from Database containing Noise Data

Dec 26, 2001

Abstract: Alarm correlation plays an important role in improving the service and reliability of modern telecommunications networks. Most previous research on alarm correlation did not consider the effect of noise data in the database. This paper focuses on the method of discovering alarm correlation rules from a database containing noise data. We first define two parameters, Win_freq and Win_add, as the measure of noise data and then present the Robust_search algorithm to solve the problem. Experiments on alarm data containing noise data, run at different sizes of Win_freq and Win_add, show that the Robust_search algorithm discovers more rules as the size of Win_add grows. We also experimentally compare two different interestingness measures, confidence and correlation.
