Vít Krátký

On rapid parallel tuning of controllers of a swarm of MAVs -- distribution strategies of the updated gains

May 12, 2025

Abstract: In this paper, we present a reliable, scalable, time-deterministic, model-free procedure to tune swarms of Micro Aerial Vehicles (MAVs) using basic sensory data. Two approaches to taking advantage of parallel tuning are presented. The first averages the performance indices reported by swarm members running identical gains, which reduces the negative effect of measurement noise. The second tests varying sets of gains in parallel across the swarm, which reduces the tuning time. The presented methods were evaluated both in simulation and in real-world experiments. The results show that the proposed approach improves the tuning results while decreasing the tuning time and keeping the tuning mechanism reliable.

* 7 pages, 7 figures

Gesture-Controlled Aerial Robot Formation for Human-Swarm Interaction in Safety Monitoring Applications

Mar 22, 2024

Abstract: This paper presents a formation control approach for contactless gesture-based Human-Swarm Interaction (HSI) between a team of multi-rotor Unmanned Aerial Vehicles (UAVs) and a human worker. The approach is intended for monitoring the safety of human workers, especially those working at heights. In the proposed dynamic formation scheme, one UAV acts as the leader of the formation and is equipped with sensors for human worker detection and gesture recognition. The follower UAVs maintain a predetermined formation relative to the worker's position, thereby providing additional perspectives of the monitored scene. Hand gestures allow the human worker to specify movements and action commands for the UAV team and initiate other mission-related commands without the need for an additional communication channel or specific markers. Together with a novel unified human detection and tracking algorithm, human pose estimation approach and gesture detection pipeline, the proposed approach forms a first instance of an HSI system incorporating all these modules onboard real-world UAVs. Simulations and field experiments with three UAVs and a human worker in a mock-up scenario showcase the effectiveness and responsiveness of the proposed approach.

Drones Guiding Drones: Cooperative Navigation of a Less-Equipped Micro Aerial Vehicle in Cluttered Environments

Dec 15, 2023

Abstract: Reliable deployment of Unmanned Aerial Vehicles (UAVs) in cluttered unknown environments requires accurate sensors for obstacle avoidance. Such a requirement limits the usage of cheap and micro-scale vehicles with constrained payload capacity if industrial-grade reliability and precision are required. This paper investigates the possibility of offloading the necessity to carry heavy and expensive obstacle sensors to another member of the UAV team while preserving the desired obstacle avoidance capability. A novel cooperative guidance framework offloading the obstacle sensing requirements from a minimalistic secondary UAV to a superior primary UAV is proposed. The primary UAV constructs a dense occupancy map of the environment and plans collision-free paths for both UAVs to ensure reaching the desired secondary UAV's goal. The primary UAV guides the secondary UAV to follow the planned path while tracking the UAV using Light Detection and Ranging (LiDAR)-based relative localization. The proposed approach was verified in real-world experiments with a heterogeneous team of a 3D LiDAR-equipped primary UAV and a camera-equipped secondary UAV moving autonomously through unknown cluttered Global Navigation Satellite System (GNSS)-denied environments with the proposed framework running completely on board the UAVs.

Autonomous Reflectance Transformation Imaging by a Team of Unmanned Aerial Vehicles

Mar 02, 2023

Abstract: A Reflectance Transformation Imaging (RTI) technique realized by multi-rotor Unmanned Aerial Vehicles (UAVs), with a focus on deployment in difficult-to-access buildings, is presented in this letter. RTI is a computational photographic method that captures the surface shape and color of a subject and enables its interactive re-lighting from any direction in a software viewer, revealing details that are not visible to the naked eye. The input of RTI is a set of images captured by a static camera, each one under illumination from a different known direction. We present an innovative approach applying two multi-rotor UAVs to perform this scanning procedure in locations that are hardly accessible or even inaccessible for people. The proposed system is designed for safe deployment within real-world scenarios in historical buildings of priceless value.

Autonomous Aerial Filming With Distributed Lighting by a Team of Unmanned Aerial Vehicles

Mar 02, 2023

Abstract: This letter describes a method for autonomous aerial cinematography with distributed lighting by a team of unmanned aerial vehicles (UAVs). Although camera-carrying multi-rotor helicopters have become commonplace in cinematography, their usage is limited to scenarios with sufficient natural light or with lighting provided by static artificial lights. We propose to use a formation of unmanned aerial vehicles as a tool for filming a target under illumination from various directions, which is one of the fundamental techniques of traditional cinematography. We decompose the multi-UAV trajectory optimization problem to tackle non-linear cinematographic aspects and obstacle avoidance at separate stages, which allows us to re-plan in real time and react to changes in dynamic environments. The performance of our method has been evaluated in realistic simulation scenarios and field experiments, where we show how it increases the quality of the shots and that it is capable of planning safe trajectories even in cluttered environments.

Present and Future of SLAM in Extreme Underground Environments

Aug 02, 2022

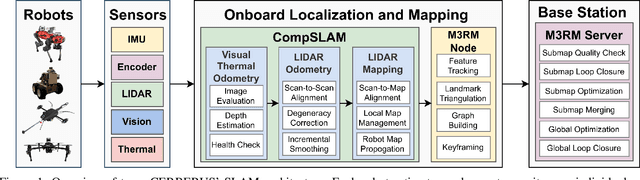

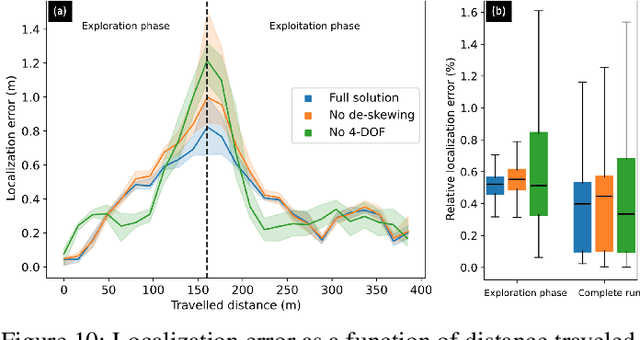

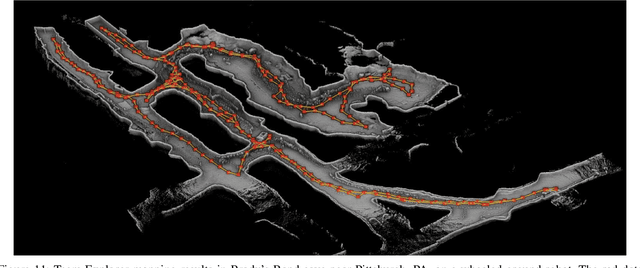

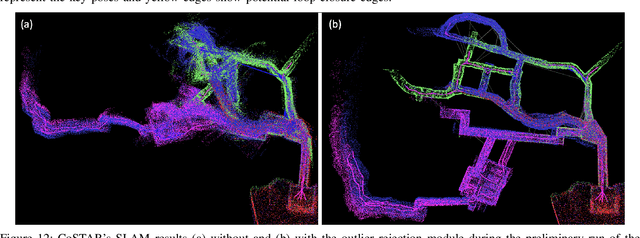

Abstract: This paper reports on the state of the art in underground SLAM by discussing different SLAM strategies and results across six teams that participated in the three-year-long SubT competition. In particular, the paper has four main goals. First, we review the algorithms, architectures, and systems adopted by the teams; particular emphasis is put on lidar-centric SLAM solutions (the go-to approach for virtually all teams in the competition), heterogeneous multi-robot operation (including both aerial and ground robots), and real-world underground operation (from the presence of obscurants to the need to handle tight computational constraints). We do not shy away from discussing the dirty details behind the different SubT SLAM systems, which are often omitted from technical papers. Second, we discuss the maturity of the field by highlighting what is possible with the current SLAM systems and what we believe is within reach with some good systems engineering. Third, we outline what we believe are fundamental open problems that are likely to require further research to break through. Finally, we provide a list of open-source SLAM implementations and datasets that have been produced during the SubT challenge and related efforts, and that constitute a useful resource for researchers and practitioners.

System for multi-robotic exploration of underground environments CTU-CRAS-NORLAB in the DARPA Subterranean Challenge

Oct 12, 2021

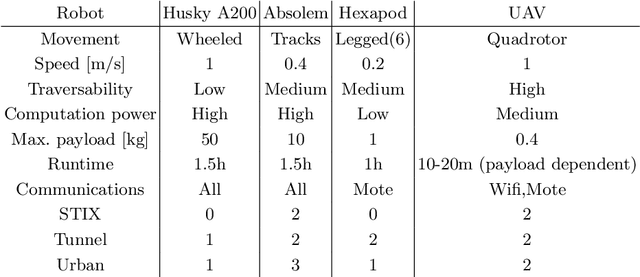

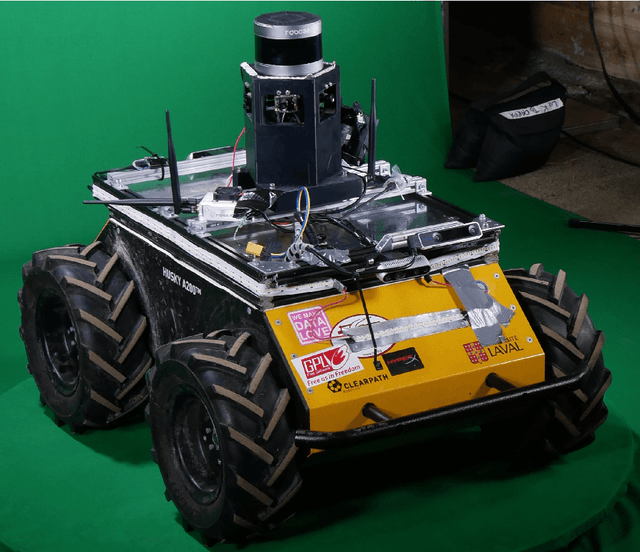

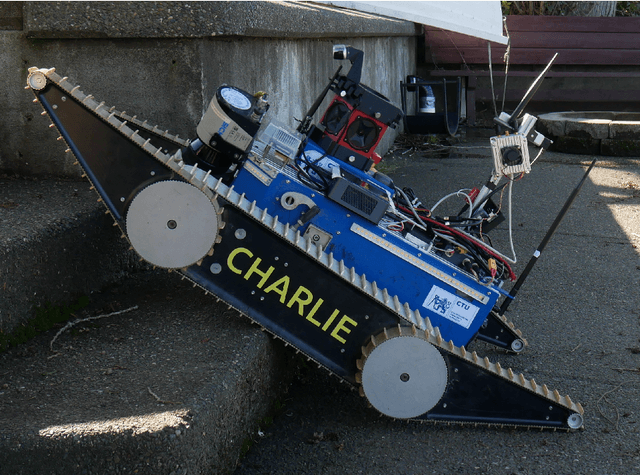

Abstract: We present a field report of the CTU-CRAS-NORLAB team from the Subterranean Challenge (SubT) organised by the Defense Advanced Research Projects Agency (DARPA). The contest seeks to advance technologies that would improve the safety and efficiency of search-and-rescue operations in GPS-denied environments. During the contest rounds, teams of mobile robots have to find specific objects while operating in environments with limited radio communication, e.g. mining tunnels, underground stations or natural caverns. We present a heterogeneous exploration robotic system of the CTU-CRAS-NORLAB team, which achieved the third rank at the SubT Tunnel and Urban Circuit rounds and surpassed the performance of all other non-DARPA-funded teams. The field report describes the team's hardware, sensors, algorithms and strategies, and discusses the lessons learned by participating in the DARPA SubT contest.
