Taskin Padir

Analysis and Perspectives on the ANA Avatar XPRIZE Competition

Jan 10, 2024

Abstract: The ANA Avatar XPRIZE was a four-year competition to develop a robotic "avatar" system to allow a human operator to sense, communicate, and act in a remote environment as though physically present. The competition featured a unique requirement that judges would operate the avatars after less than one hour of training on the human-machine interfaces, and avatar systems were judged on both objective and subjective scoring metrics. This paper presents a unified summary and analysis of the competition from technical, judging, and organizational perspectives. We study the use of telerobotics technologies and innovations pursued by the competing teams in their avatar systems, and correlate the use of these technologies with judges' task performance and subjective survey ratings. We also summarize perspectives from team leads, judges, and organizers about the competition's execution and impact to inform the future development of telerobotics and telepresence.

Shared Affordance-awareness via Augmented Reality for Proactive Assistance in Human-robot Collaboration

Dec 20, 2023

Abstract: Enabling humans and robots to collaborate effectively requires purposeful communication and an understanding of each other's affordances. Prior work in human-robot collaboration has incorporated knowledge of human affordances, i.e., their action possibilities in the current context, into autonomous robot decision-making. This "affordance awareness" is especially promising for service robots that need to know when and how to assist a person that cannot independently complete a task. However, robots still fall short in performing many common tasks autonomously. In this work-in-progress paper, we propose an augmented reality (AR) framework that bridges the gap in an assistive robot's capabilities by actively engaging with a human through a shared affordance-awareness representation. Leveraging the different perspectives from a human wearing an AR headset and a robot's equipped sensors, we can build a perceptual representation of the shared environment and model regions of respective agent affordances. The AR interface can also allow both agents to communicate affordances with one another, as well as prompt for assistance when attempting to perform an action outside their affordance region. This paper presents the main components of the proposed framework and discusses its potential through a domestic cleaning task experiment.

E(2)-Equivariant Graph Planning for Navigation

Sep 22, 2023

Abstract: Learning for robot navigation presents a critical and challenging task. The scarcity and costliness of real-world datasets necessitate efficient learning approaches. In this letter, we exploit Euclidean symmetry in planning for 2D navigation, which originates from Euclidean transformations between reference frames and enables parameter sharing. To address the challenges of unstructured environments, we formulate the navigation problem as planning on a geometric graph and develop an equivariant message passing network to perform value iteration. Furthermore, to handle multi-camera input, we propose a learnable equivariant layer to lift features to a desired space. We conduct comprehensive evaluations across five diverse tasks encompassing structured and unstructured environments, with known and unknown maps, and with point goals or semantic goals. Our experiments confirm the substantial benefits in training efficiency, stability, and generalization.
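To make "value iteration on a graph" concrete, the following is a minimal sketch of classical (non-learned, non-equivariant) value iteration for shortest-path cost-to-go on a small weighted graph. It is not the paper's equivariant message passing network; the function name `graph_value_iteration`, the adjacency format, and the toy graph are assumptions chosen for illustration only.

```python
def graph_value_iteration(edges, goal, num_nodes, max_iters=100):
    """Compute cost-to-go to `goal` by repeated Bellman backups.

    edges: dict mapping node -> list of (neighbor, edge_cost) pairs.
    Returns a list where value[n] is the cheapest path cost from n to goal.
    """
    inf = float("inf")
    value = [inf] * num_nodes
    value[goal] = 0.0
    for _ in range(max_iters):
        changed = False
        for node in range(num_nodes):
            if node == goal:
                continue
            # Bellman backup: best neighbor value plus the cost to reach it.
            best = min(
                (cost + value[nbr] for nbr, cost in edges.get(node, [])),
                default=inf,
            )
            if best < value[node]:
                value[node] = best
                changed = True
        if not changed:  # values have converged
            break
    return value


# Hypothetical undirected toy graph with four nodes; node 3 is the goal.
toy_edges = {
    0: [(1, 1.0), (2, 3.0)],
    1: [(0, 1.0), (2, 1.0)],
    2: [(0, 3.0), (1, 1.0), (3, 1.0)],
    3: [(2, 1.0)],
}
toy_values = graph_value_iteration(toy_edges, goal=3, num_nodes=4)
```

Each backup only combines values from neighboring nodes, which is the same local structure that a message passing network operates on.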

Mobile MoCap: Retroreflector Localization On-The-Go

Mar 23, 2023

Abstract: Motion capture (MoCap) through tracking retroreflectors obtains high-precision pose estimation, which is frequently used in robotics. Unlike MoCap, fiducial marker-based tracking methods do not require a static camera setup to perform relative localization. Popular pose-estimating systems based on fiducial markers have lower localization accuracy than MoCap. As a solution, we propose Mobile MoCap, a system that employs inexpensive near-infrared cameras for precise relative localization in dynamic environments. We present a retroreflector feature detector that performs 6-DoF (six degrees-of-freedom) tracking and operates with minimal camera exposure times to reduce motion blur. To evaluate different localization techniques in a mobile robot setup, we mount our Mobile MoCap system, as well as a standard RGB camera, onto a precision-controlled linear rail for the purposes of retroreflective and fiducial marker tracking, respectively. We benchmark the two systems against each other, varying distance, marker viewing angle, and relative velocities. Our stereo-based Mobile MoCap approach obtains higher position and orientation accuracy than the fiducial approach. The code for Mobile MoCap is implemented in ROS 2 and made publicly available at https://github.com/RIVeR-Lab/mobile_mocap.

Contact-Implicit Planning and Control for Non-Prehensile Manipulation Using State-Triggered Constraints

Oct 18, 2022

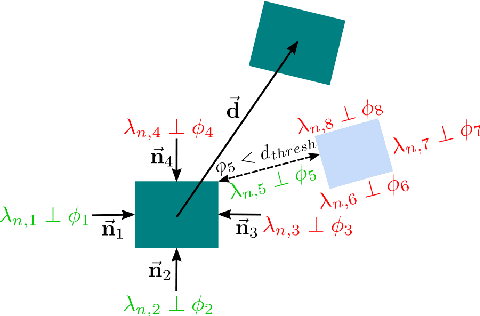

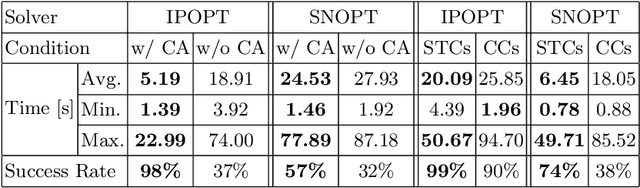

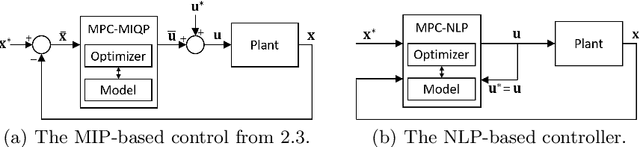

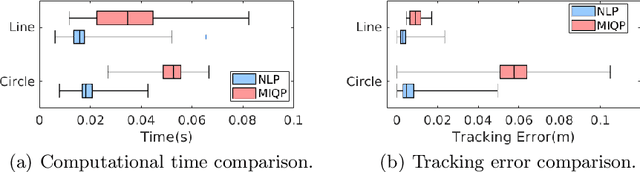

Abstract: We present a contact-implicit planning approach that can generate contact-interaction trajectories for non-prehensile manipulation problems without tuning or a tailored initial guess and with high success rates. This is achieved by leveraging the concept of state-triggered constraints (STCs) to capture the hybrid dynamics induced by discrete contact modes without explicitly reasoning about the combinatorics. STCs enable triggering arbitrary constraints by a strict inequality condition in a continuous way. We first use STCs to develop an automatic contact constraint activation method to minimize the effective constraint space based on the utility of contact candidates for a given task. Then, we introduce a re-formulation of the Coulomb friction model based on STCs that is more efficient for the discovery of tangential forces than the well-studied complementarity constraints-based approach. Last, we include the proposed friction model in the planning and control of quasi-static planar pushing. The performance of the STC-based contact activation and friction methods is evaluated by extensive simulation experiments in a dynamic pushing scenario. The results demonstrate that our methods outperform the baselines based on complementarity constraints with a significant decrease in the planning time and a higher success rate. We then compare the proposed quasi-static pushing controller against a mixed-integer programming-based approach in simulation and find that our method is computationally more efficient and provides better tracking accuracy, with the added benefit of not requiring an initial control trajectory. Finally, we present hardware experiments demonstrating the usability of our framework in executing complex trajectories in real time even with a low-accuracy tracking system.

StereoVoxelNet: Real-Time Obstacle Detection Based on Occupancy Voxels from a Stereo Camera Using Deep Neural Networks

Sep 18, 2022

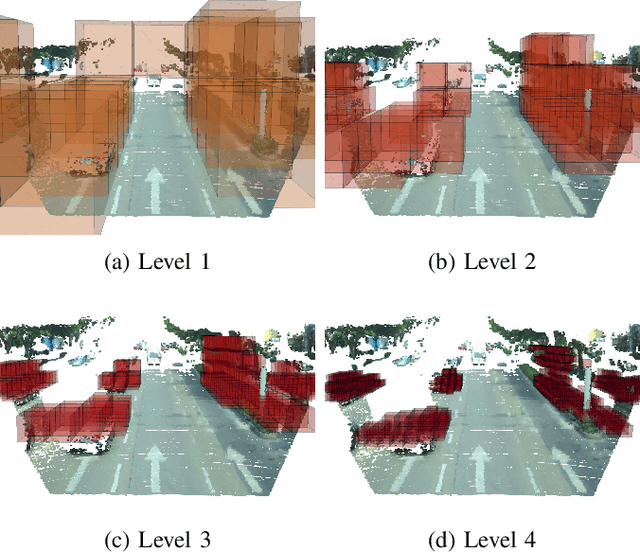

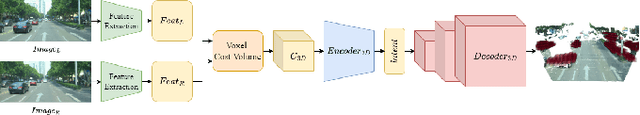

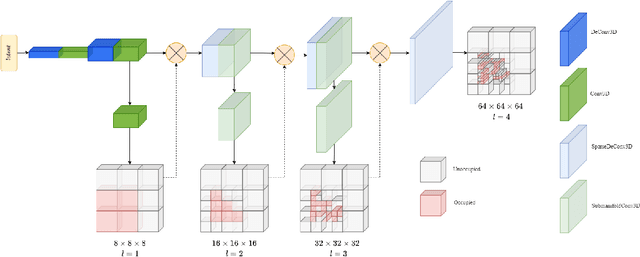

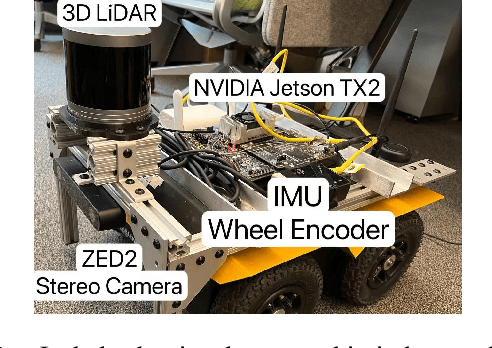

Abstract: Obstacle detection is a safety-critical problem in robot navigation, where stereo matching is a popular vision-based approach. While deep neural networks have shown impressive results in computer vision, most of the previous obstacle detection works only leverage traditional stereo matching techniques to meet the computational constraints for real-time feedback. This paper proposes a computationally efficient method that leverages a deep neural network to detect occupancy from stereo images directly. Instead of learning the point cloud correspondence from the stereo data, our approach extracts the compact obstacle distribution based on volumetric representations. In addition, we prune the computation of safety-irrelevant spaces in a coarse-to-fine manner based on octrees generated by the decoder. As a result, we achieve real-time performance on the onboard computer (NVIDIA Jetson TX2). Our approach detects obstacles accurately within a range of 32 meters and achieves better IoU (Intersection over Union) and CD (Chamfer Distance) scores with only 2% of the computation cost of the state-of-the-art stereo model. Furthermore, we validate our method's robustness and real-world feasibility through autonomous navigation experiments with a real robot. Hence, our work contributes toward closing the gap between the stereo-based system in robot perception and state-of-the-art stereo models in computer vision. To counter the scarcity of high-quality real-world indoor stereo datasets, we collect a 1.36-hour stereo dataset with a Jackal robot, which is used to fine-tune our model. The dataset, the code, and more visualizations are available at https://lhy.xyz/stereovoxelnet/
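For readers unfamiliar with the two metrics named in the abstract, here is a minimal sketch of how IoU and Chamfer Distance can be computed on sets of occupied voxel coordinates. The exact conventions used in the paper are not stated here, so the definitions below are assumptions: IoU over occupied cells, and Chamfer Distance as the sum of the two directional mean nearest-neighbor Euclidean distances. The function names and sample voxels are hypothetical.

```python
import math


def voxel_iou(pred, truth):
    """Intersection over Union of two sets of occupied voxel indices."""
    pred, truth = set(pred), set(truth)
    union = pred | truth
    if not union:
        return 1.0  # both grids empty: treat as perfect agreement
    return len(pred & truth) / len(union)


def chamfer_distance(pred, truth):
    """Symmetric Chamfer Distance: sum of mean nearest-neighbor distances
    from pred to truth and from truth to pred (one common convention)."""
    pred, truth = list(pred), list(truth)
    if not pred or not truth:
        raise ValueError("both point sets must be non-empty")

    def mean_nearest(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return mean_nearest(pred, truth) + mean_nearest(truth, pred)


# Hypothetical occupied voxels (x, y, z) in grid units.
pred_voxels = {(0, 0, 0), (1, 0, 0), (1, 1, 0)}
true_voxels = {(1, 0, 0), (1, 1, 0), (2, 1, 0)}

iou = voxel_iou(pred_voxels, true_voxels)
cd = chamfer_distance(pred_voxels, true_voxels)
```

Higher IoU and lower Chamfer Distance both indicate closer agreement between predicted and ground-truth occupancy.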

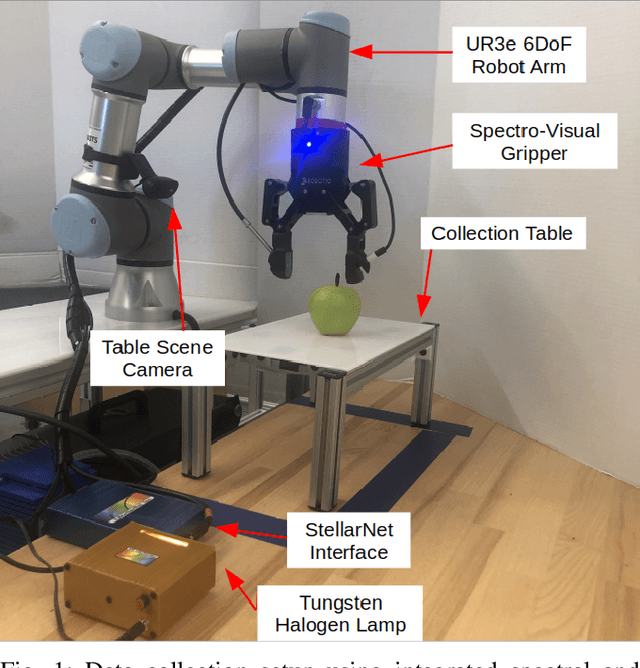

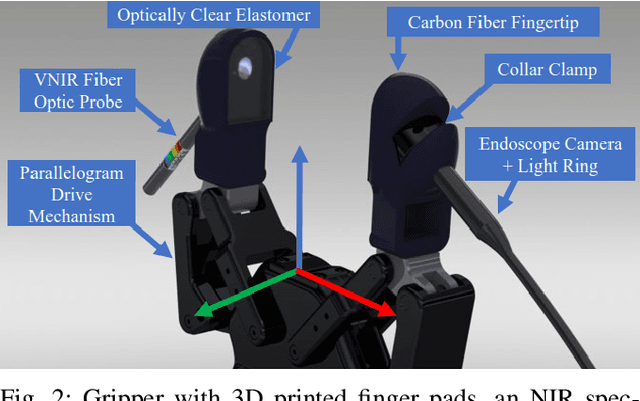

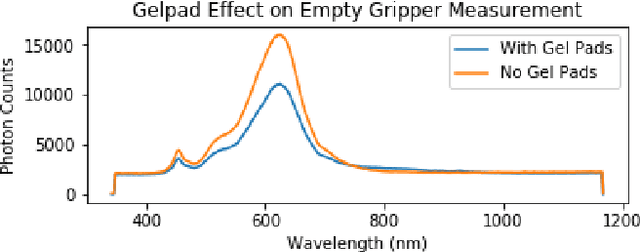

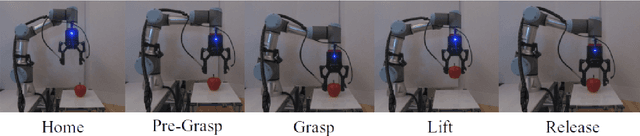

Pregrasp Object Material Classification by a Novel Gripper Design with Integrated Spectroscopy

Jul 03, 2022

Abstract: Robots benefit from being able to classify objects they interact with or manipulate based on their material properties. This capability ensures fine manipulation of complex objects through proper grasp pose and force selection. Prior work has focused on haptic or visual processing to determine material type at grasp time. In this work, we introduce a novel parallel robot gripper design and a method for collecting spectral readings and visual images from within the gripper finger. We train a nonlinear Support Vector Machine (SVM) that can classify the material of the object about to be grasped through recursive estimation, with increasing confidence as the distance from the fingertips to the object decreases. In order to validate the hardware design and classification method, we collect samples from 16 real and fake fruit varieties (composed of polystyrene/plastic), resulting in a dataset containing spectral curves, scene images, and high-resolution texture images as the objects are grasped, lifted, and released. Our modeling method demonstrates an accuracy of 96.4% in classifying objects in a 32-class decision problem. This represents a performance improvement of 29.4% over state-of-the-art computer vision algorithms at distinguishing between visually similar materials. In contrast to prior work, our recursive estimation model accounts for increasing spectral signal strength and allows for decisions to be made as the gripper approaches an object. We conclude that spectroscopy is a promising sensing modality for enabling robots to not only classify grasped objects but also understand their underlying material composition.

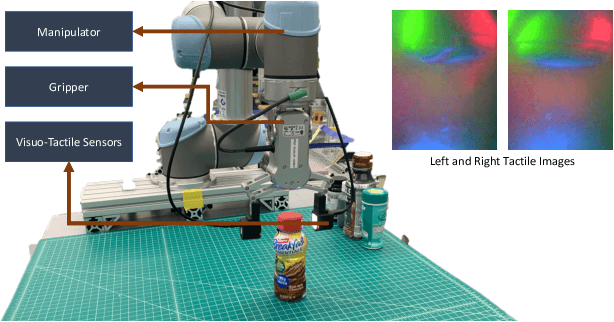

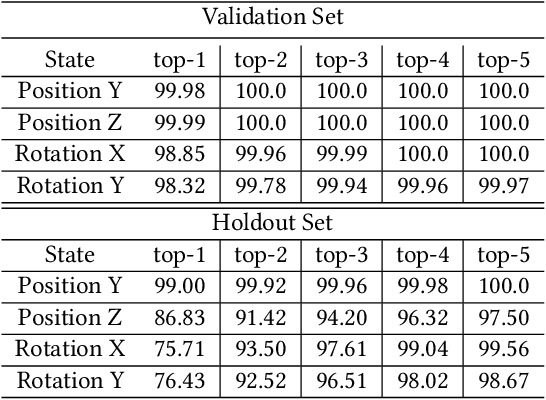

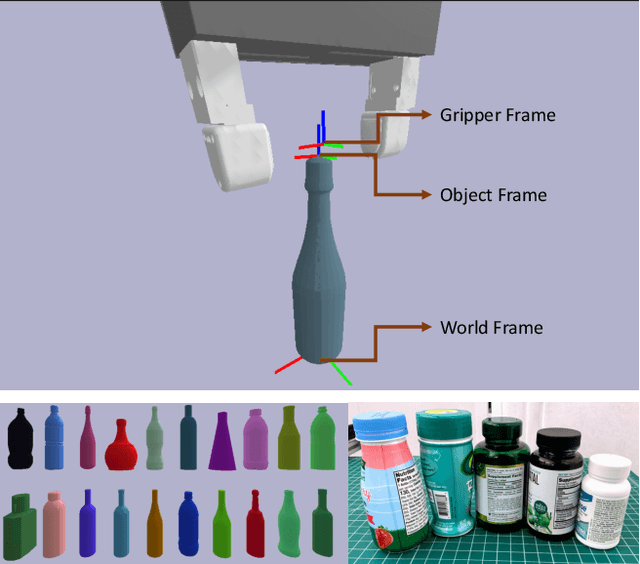

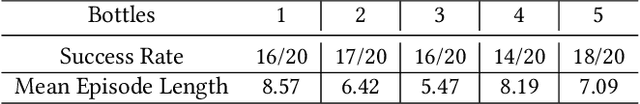

Tactile Pose Estimation and Policy Learning for Unknown Object Manipulation

Mar 21, 2022

Abstract: Object pose estimation methods allow finding locations of objects in unstructured environments. This is a highly desired skill for autonomous robot manipulation as robots need to estimate the precise poses of the objects in order to manipulate them. In this paper, we investigate the problems of tactile pose estimation and manipulation for category-level objects. Our proposed method uses a Bayes filter with a learned tactile observation model and a deterministic motion model. Later, we train policies using deep reinforcement learning where the agents use the belief estimation from the Bayes filter. Our models are trained in simulation and transferred to the real world. We analyze the reliability and the performance of our framework through a series of simulated and real-world experiments and compare our method to the baseline work. Our results show that the learned tactile observation model can localize the pose of novel objects at 2-mm and 1-degree resolution for position and orientation, respectively. Furthermore, we experiment on a bottle opening task where the gripper needs to reach the desired grasp state.
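The predict/update cycle of a Bayes filter with a deterministic motion model can be sketched as follows for a one-dimensional discretized pose. This is an illustration under stated assumptions, not the paper's implementation: the likelihood vectors below are hand-written stand-ins for what a learned tactile observation model would output, and the names `predict` and `update` are hypothetical.

```python
def predict(belief, shift):
    """Deterministic motion model: move all probability mass by `shift`
    grid cells, accumulating mass at the boundaries."""
    n = len(belief)
    moved = [0.0] * n
    for i, p in enumerate(belief):
        j = min(max(i + shift, 0), n - 1)
        moved[j] += p
    return moved


def update(belief, likelihood):
    """Measurement update: multiply by the observation likelihood
    p(z | pose) for each cell, then renormalize."""
    posterior = [b * l for b, l in zip(belief, likelihood)]
    total = sum(posterior)
    if total == 0.0:
        raise ValueError("observation has zero likelihood under the belief")
    return [p / total for p in posterior]


# Start from a uniform belief over five candidate poses.
belief = [0.2] * 5
# Stand-in for a learned tactile model: the contact suggests cell 2.
belief = update(belief, [0.1, 0.1, 0.6, 0.1, 0.1])
# The gripper moves the object by exactly one cell.
belief = predict(belief, shift=1)
# A second contact suggests cell 3, consistent with the motion.
belief = update(belief, [0.1, 0.1, 0.1, 0.6, 0.1])
best_cell = max(range(len(belief)), key=belief.__getitem__)
```

A downstream policy can consume `belief` directly, which matches the abstract's description of agents using the belief estimate from the filter.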

End-to-end grasping policies for human-in-the-loop robots via deep reinforcement learning

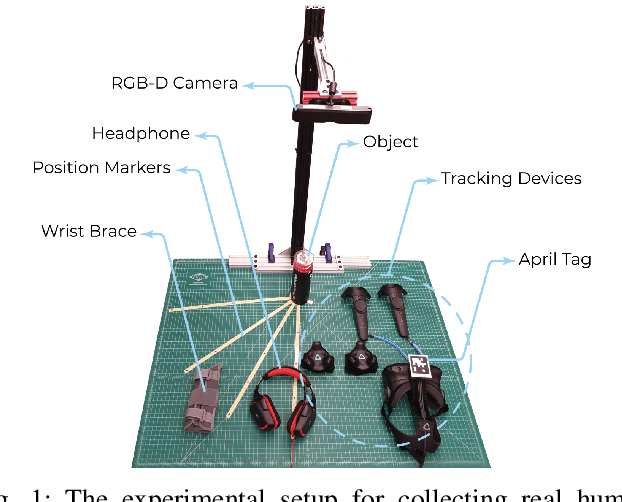

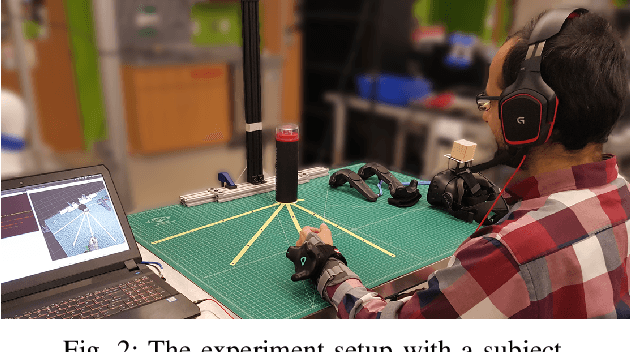

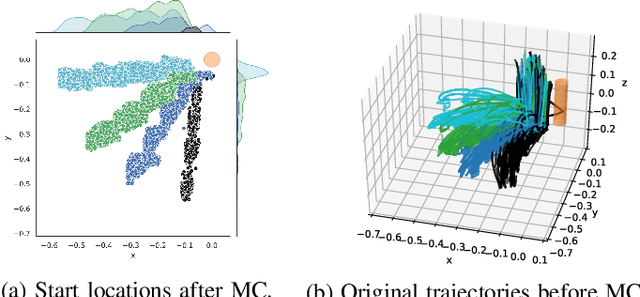

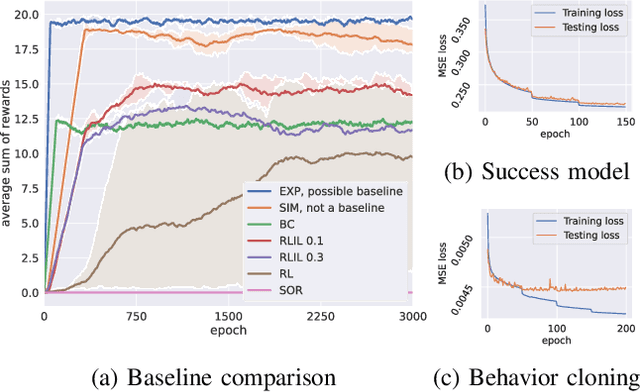

Apr 26, 2021

Abstract: State-of-the-art human-in-the-loop robot grasping suffers greatly from robustness issues in electromyography (EMG) inference. As a workaround, researchers have been looking into integrating EMG with other signals, often in an ad hoc manner. In this paper, we present a method for end-to-end training of a policy for human-in-the-loop robot grasping on real reaching trajectories. For this purpose, we use Reinforcement Learning (RL) and Imitation Learning (IL) in DEXTRON (DEXTerity enviRONment), a stochastic simulation environment with real human trajectories that are augmented and selected using a Monte Carlo (MC) simulation method. We also offer a success model which, once trained on the expert policy data and the RL policy roll-out transitions, can provide transparency into how the deep policy works and when it is likely to fail.

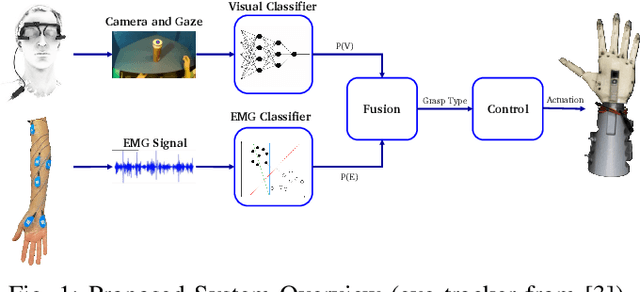

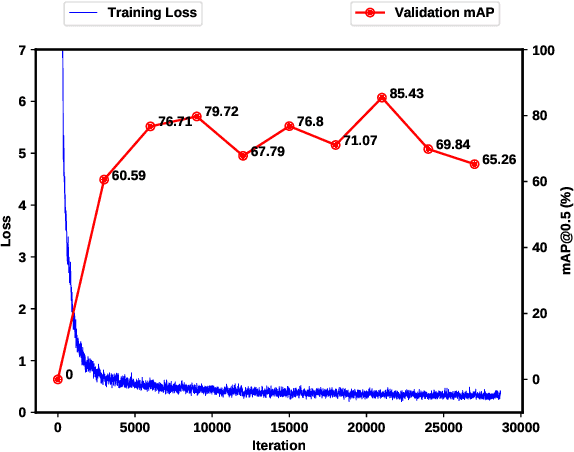

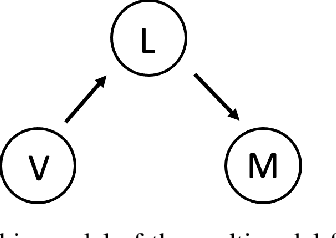

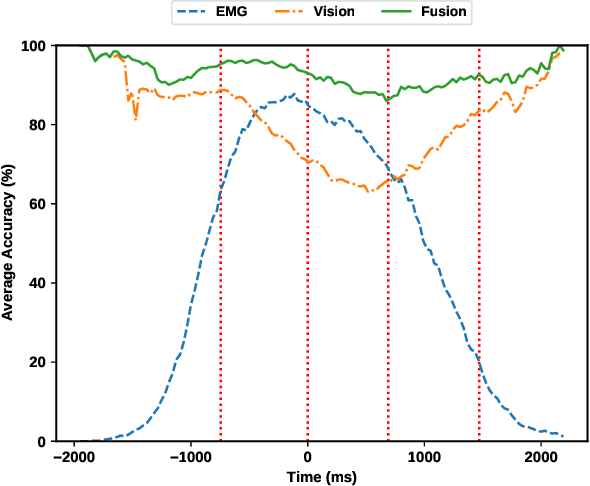

Multimodal Fusion of EMG and Vision for Human Grasp Intent Inference in Prosthetic Hand Control

Apr 08, 2021

Abstract: For lower arm amputees, robotic prosthetic hands offer the promise to regain the capability to perform fine object manipulation in activities of daily living. Current control methods based on physiological signals such as EEG and EMG are prone to poor inference outcomes due to motion artifacts, variability of skin-electrode junction impedance over time, muscle fatigue, and other factors. Visual evidence is also susceptible to its own artifacts, most often due to object occlusion, lighting changes, and variable shapes of objects depending on view angle, among other factors. Multimodal evidence fusion using physiological and vision sensor measurements is a natural approach due to the complementary strengths of these modalities. In this paper, we present a Bayesian evidence fusion framework for grasp intent inference using eye-view video, gaze, and EMG from the forearm processed by neural network models. We analyze individual and fused performance as a function of time as the hand approaches the object to grasp it. For this purpose, we have also developed novel data processing and augmentation techniques to train neural network components. Our experimental data analyses demonstrate that EMG and visual evidence show complementary strengths, and as a consequence, fusion of multimodal evidence can outperform each individual evidence modality at any given time. Specifically, results indicate that, on average, fusion improves the instantaneous upcoming grasp type classification accuracy while in the reaching phase by 13.66% and 14.8%, relative to EMG and visual evidence alone, respectively. An overall fusion accuracy of 95.3% among 13 labels (compared to a chance level of 7.7%) is achieved, and more detailed analyses indicate that the correct grasp is inferred sufficiently early and with high confidence compared to the top contender, in order to allow successful robot actuation to close the loop.
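As an illustration of Bayesian evidence fusion, the sketch below combines per-modality class posteriors under the assumption that the modalities are conditionally independent given the grasp type, so that the fused posterior is proportional to the prior times the product of each posterior divided by the prior. This is a textbook construction and an assumption about the general idea, not the authors' framework; the function name `fuse_posteriors` and the three-class numbers are hypothetical.

```python
def fuse_posteriors(posteriors, prior):
    """Fuse class posteriors from several modalities.

    Assumes conditional independence of modalities given the class:
        p(c | x_1..x_n)  is proportional to  p(c) * prod_i [p(c | x_i) / p(c)]
    posteriors: list of per-modality probability lists over the same classes.
    prior: class prior probabilities (must be strictly positive).
    """
    fused = list(prior)
    for post in posteriors:
        fused = [f * p / pr for f, p, pr in zip(fused, post, prior)]
    total = sum(fused)
    if total == 0.0:
        raise ValueError("modalities assign zero probability to every class")
    return [f / total for f in fused]


# Hypothetical three-class example with a uniform prior.
uniform_prior = [1.0 / 3.0] * 3
emg_posterior = [0.5, 0.3, 0.2]      # EMG weakly prefers class 0
vision_posterior = [0.2, 0.6, 0.2]   # vision prefers class 1
fused = fuse_posteriors([emg_posterior, vision_posterior], uniform_prior)
predicted_class = max(range(3), key=fused.__getitem__)

# Chance level for 13 equally likely labels, as quoted in the abstract.
chance_level_percent = round(100.0 / 13.0, 1)
```

The last line reproduces the 7.7% chance level quoted for 13 labels, which is simply 1/13 expressed as a percentage.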
