Mo Han

Segmentation and Classification of EMG Time-Series During Reach-to-Grasp Motion

Apr 19, 2021

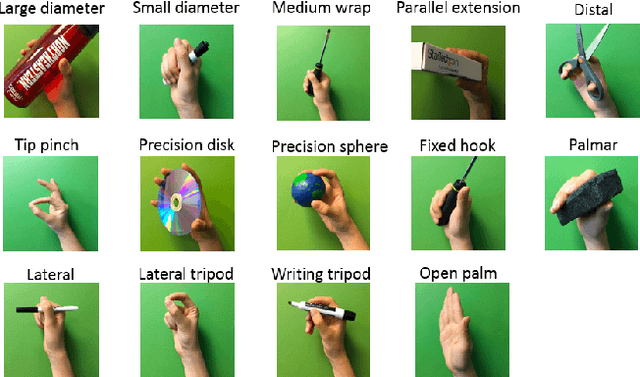

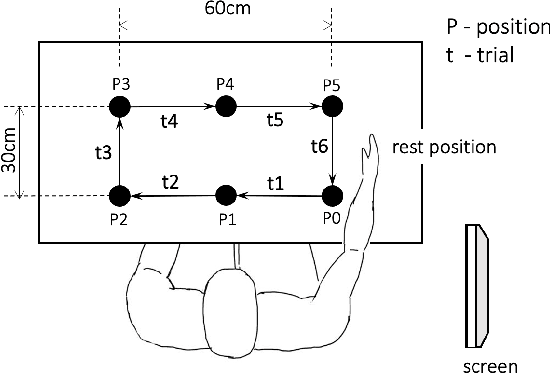

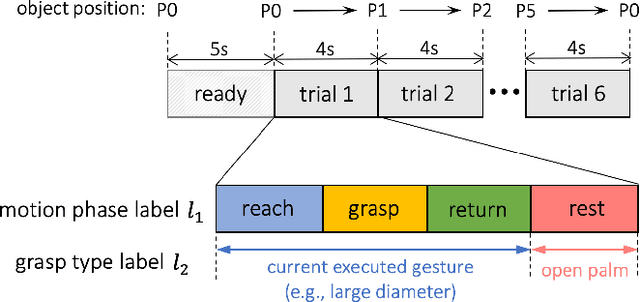

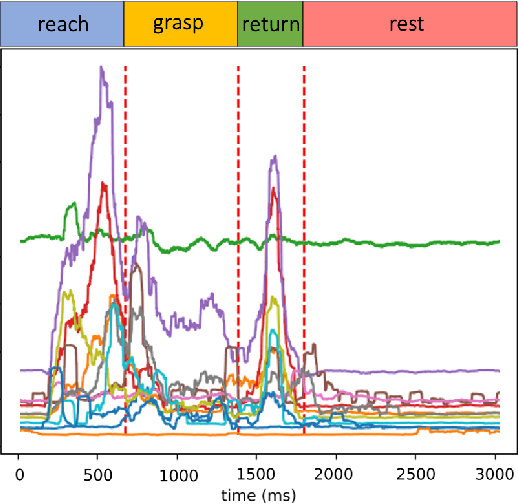

Abstract: Electromyography (EMG) signals have been widely used in human-robot interaction to extract user hand and arm motion instructions. A major challenge of online interaction with robots is reliable EMG recognition from real-time data. However, previous studies mainly focused on using steady-state EMG signals with a small number of grasp patterns to implement classification algorithms, which is insufficient to generate robust control given the dynamic variation of muscular activity in practice. Introducing more EMG variability during training and validation could yield better dynamic-motion detection, but only limited research has focused on such grasp-movement identification, and all of those assessments of non-static EMG classification require supervised ground-truth labels of the movement status. In this study, we propose a framework for classifying EMG signals generated from continuous grasp movements with variations in dynamic arm/hand postures, using an unsupervised motion status segmentation method. We collected data from large gesture vocabularies with multiple dynamic motion phases to encode the transitions from one intent to another based on common sequences of the grasp movements. Two classifiers were constructed for identifying the motion-phase label and the grasp-type label, where the dynamic motion phases were segmented and labeled in an unsupervised manner. The proposed framework was evaluated in real time, with the accuracy variation over time presented, and was shown to be efficient due to the high degree of freedom of the EMG data.

Multimodal Fusion of EMG and Vision for Human Grasp Intent Inference in Prosthetic Hand Control

Apr 08, 2021

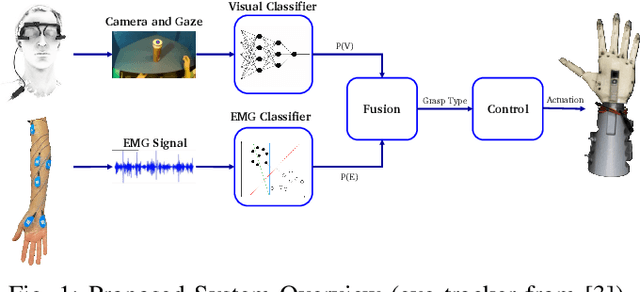

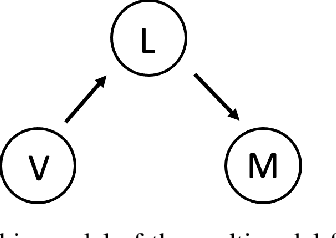

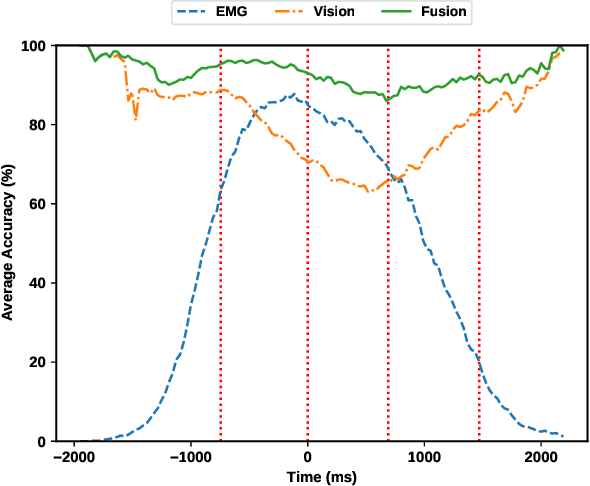

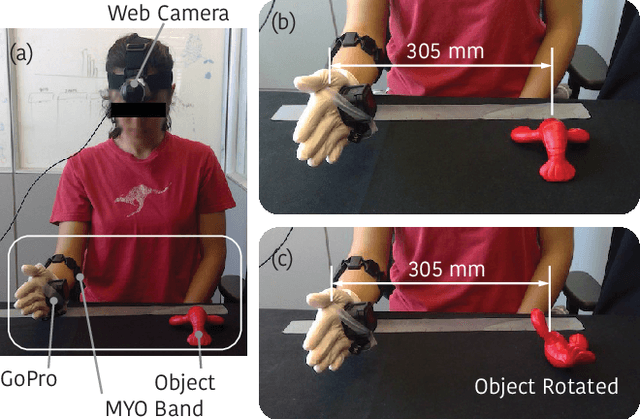

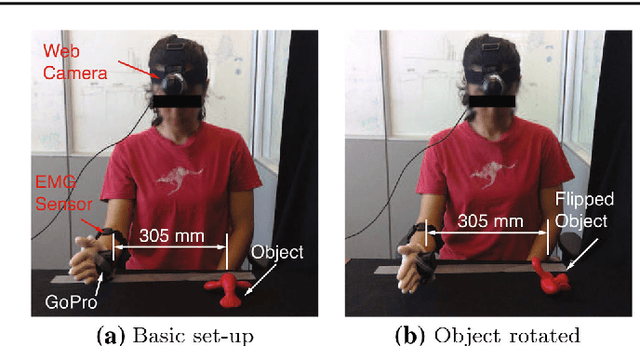

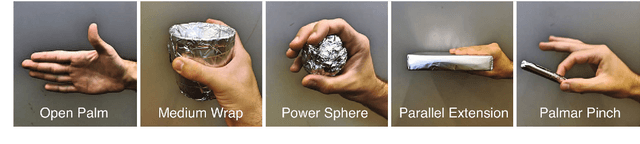

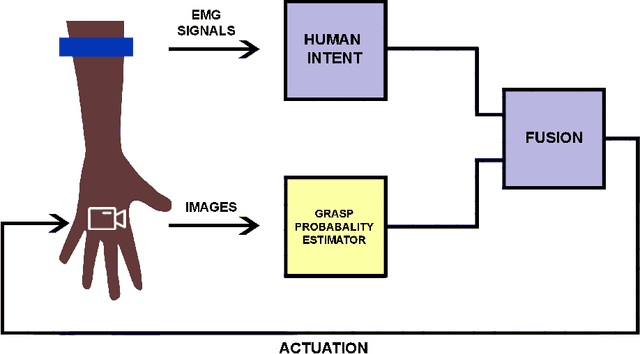

Abstract: For lower arm amputees, robotic prosthetic hands offer the promise of regaining the capability to perform fine object manipulation in activities of daily living. Current control methods based on physiological signals such as EEG and EMG are prone to poor inference outcomes due to motion artifacts, variability of skin-electrode junction impedance over time, muscle fatigue, and other factors. Visual evidence is also susceptible to its own artifacts, most often due to object occlusion, lighting changes, and variable shapes of objects depending on view angle, among other factors. Multimodal evidence fusion using physiological and vision sensor measurements is a natural approach due to the complementary strengths of these modalities. In this paper, we present a Bayesian evidence fusion framework for grasp intent inference using eye-view video, gaze, and EMG from the forearm processed by neural network models. We analyze individual and fused performance as a function of time as the hand approaches the object to grasp it. For this purpose, we have also developed novel data processing and augmentation techniques to train neural network components. Our experimental data analyses demonstrate that EMG and visual evidence show complementary strengths, and as a consequence, fusion of multimodal evidence can outperform each individual evidence modality at any given time. Specifically, results indicate that, on average, fusion improves the instantaneous upcoming grasp type classification accuracy during the reaching phase by 13.66% and 14.8% relative to EMG and visual evidence individually, respectively. An overall fusion accuracy of 95.3% among 13 labels (compared to a chance level of 7.7%) is achieved, and more detailed analysis indicates that the correct grasp is inferred sufficiently early and with high confidence compared to the top contender, allowing successful robot actuation to close the loop.
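The abstract does not spell out the fusion rule. As a minimal sketch of one common form of Bayesian evidence fusion, the per-modality posteriors over grasp types can be multiplied and divided by the prior, assuming the EMG and visual evidence are conditionally independent given the grasp type. The function name `fuse_posteriors`, the uniform default prior, and the example numbers are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def fuse_posteriors(p_emg, p_vision, prior=None):
    """Fuse two posteriors over the same grasp labels.

    Assumes conditional independence of the modalities given the grasp:
    p(g | emg, vision) is proportional to p(g | emg) * p(g | vision) / p(g).
    """
    p_emg = np.asarray(p_emg, dtype=float)
    p_vision = np.asarray(p_vision, dtype=float)
    if prior is None:
        # Hypothetical default: uniform prior over grasp types.
        prior = np.full_like(p_emg, 1.0 / p_emg.size)
    eps = 1e-12  # guards against log(0)
    log_post = np.log(p_emg + eps) + np.log(p_vision + eps) - np.log(prior + eps)
    log_post -= log_post.max()  # numerical stability before exponentiating
    fused = np.exp(log_post)
    return fused / fused.sum()

# Made-up posteriors over three grasp types, for illustration only.
fused = fuse_posteriors([0.5, 0.3, 0.2], [0.2, 0.6, 0.2])
print(fused)
```

Working in log space keeps the product stable when many time steps or modalities are combined; a fused estimate of this kind can be recomputed at each time step as the hand approaches the object.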

From Hand-Perspective Visual Information to Grasp Type Probabilities: Deep Learning via Ranking Labels

Mar 08, 2021

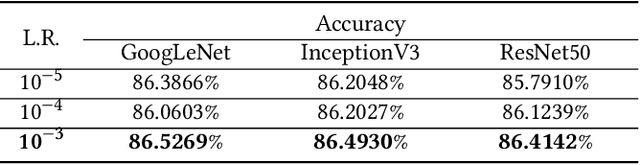

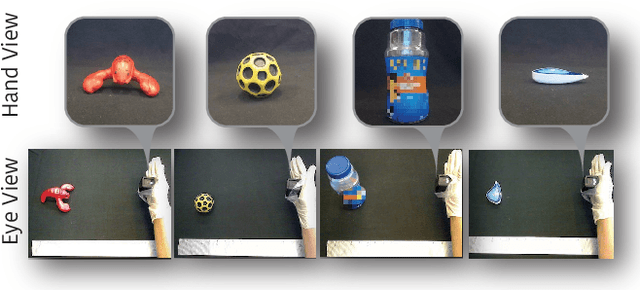

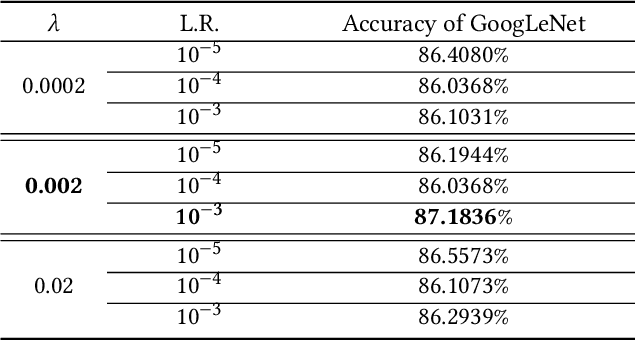

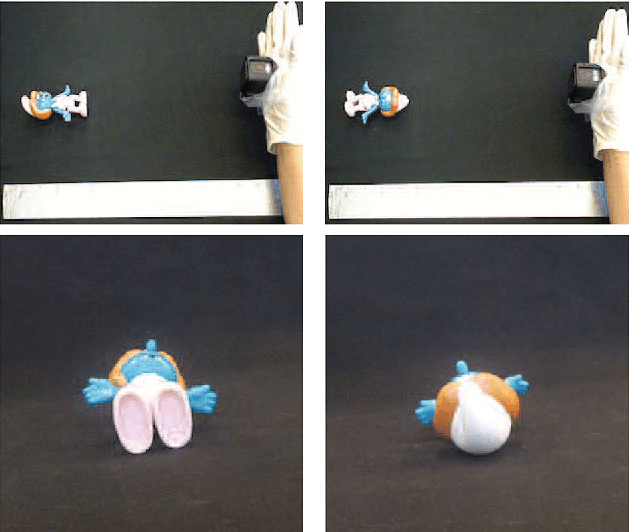

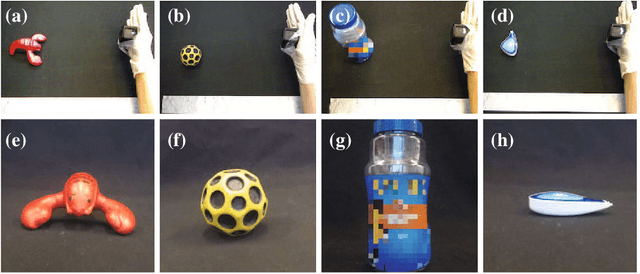

Abstract: Limb deficiency severely affects the daily lives of amputees and drives efforts to provide functional robotic prosthetic hands to compensate for this deprivation. Convolutional neural network-based computer vision control of the prosthetic hand has received increased attention as a method to replace or complement physiological signals, due to its reliability in predicting the hand gesture from visual information. Mounting a camera in the palm of a prosthetic hand has proved to be a promising approach to collecting visual data. However, the grasp type labelled from the eye and hand perspectives may differ, as object shapes are not always symmetric. Thus, to represent this difference in a realistic way, we employed a dataset containing synchronous images from eye- and hand-view, where the hand-perspective images are used for training while the eye-view images are used only for manual labelling. Electromyogram (EMG) activity and movement kinematics data from the upper arm are also collected for multi-modal information fusion in future work. Moreover, in order to include human-in-the-loop control and combine computer vision with physiological signal inputs, instead of making absolute positive or negative predictions, we build a novel probabilistic classifier according to the Plackett-Luce model. To predict the probability distribution over grasps, we exploit the statistical model over label rankings to solve the permutation domain problems via maximum likelihood estimation, utilizing the manually ranked lists of grasps as a new form of label. We indicate that the proposed model is applicable to the most popular and productive convolutional neural network frameworks.
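To make the ranking-based label concrete, the following is a minimal sketch of the standard Plackett-Luce log-likelihood of one ranked list given per-grasp scores. In a setup like the one described, the scores would come from the network's output layer; the function name, the plain NumPy implementation, and the example values are assumptions for illustration, not the paper's code.

```python
import numpy as np

def plackett_luce_log_likelihood(scores, ranking):
    """Log-probability of `ranking` under the Plackett-Luce model.

    scores  : real-valued score (logit) per grasp type
    ranking : grasp indices in decreasing order of preference;
              may be a partial (top-k) ranking
    """
    scores = np.asarray(scores, dtype=float)
    remaining = np.ones(scores.size, dtype=bool)
    log_lik = 0.0
    for item in ranking:
        rem = scores[remaining]
        # log-sum-exp over the grasps that have not been chosen yet
        m = rem.max()
        log_norm = m + np.log(np.exp(rem - m).sum())
        log_lik += scores[item] - log_norm
        remaining[item] = False  # chosen grasp leaves the candidate pool
    return log_lik

# Illustrative scores for three grasps and one annotator ranking.
scores = np.log([0.5, 0.3, 0.2])
print(np.exp(plackett_luce_log_likelihood(scores, [0, 1, 2])))
```

Maximum likelihood estimation then amounts to maximizing the sum of these log-likelihoods over all ranked lists. Under this model, the probability that a grasp is ranked first is the softmax of the scores, which is one way to read off a probability distribution over grasps.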

HANDS: A Multimodal Dataset for Modeling Towards Human Grasp Intent Inference in Prosthetic Hands

Mar 08, 2021

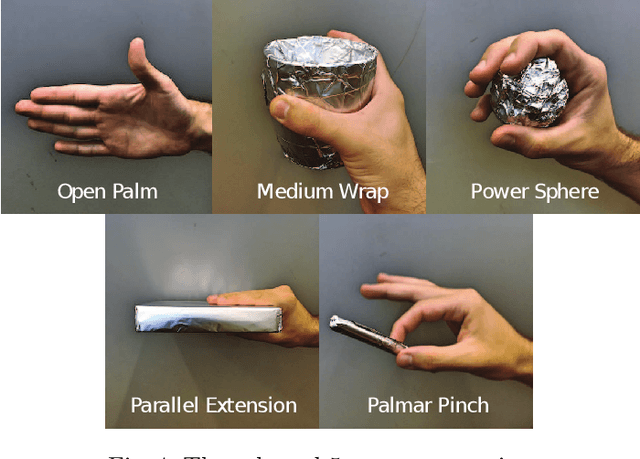

Abstract: Upper limb and hand functionality is critical to many activities of daily living, and the amputation of one can lead to significant functionality loss for individuals. From this perspective, advanced prosthetic hands of the future are anticipated to benefit from improved shared control between a robotic hand and its human user, but more importantly from the improved capability to infer human intent from multimodal sensor data, providing the robotic hand with perception abilities regarding the operational context. Such multimodal sensor data may include various environment sensors, including vision, as well as human physiology and behavior sensors, including electromyography and inertial measurement units. A fusion methodology for environmental state and human intent estimation can combine these sources of evidence in order to help prosthetic hand motion planning and control. In this paper, we present a dataset of this type that was gathered in anticipation that cameras will be built into prosthetic hands and that computer vision methods will need to assess this hand-view visual evidence in order to estimate human intent. Specifically, paired images from human eye-view and hand-view of various objects placed at different orientations have been captured at the initial state of grasping trials, followed by paired video, EMG and IMU from the arm of the human during a grasp, lift, put-down, and retract style trial structure. For each trial, based on eye-view images of the scene showing the hand and object on a table, multiple humans were asked to sort, in decreasing order of preference, five grasp types appropriate for the object in its given configuration relative to the hand. The potential utility of paired eye-view and hand-view images was illustrated by training a convolutional neural network to process hand-view images in order to predict eye-view labels assigned by humans.

Towards Creating a Deployable Grasp Type Probability Estimator for a Prosthetic Hand

Jan 13, 2021

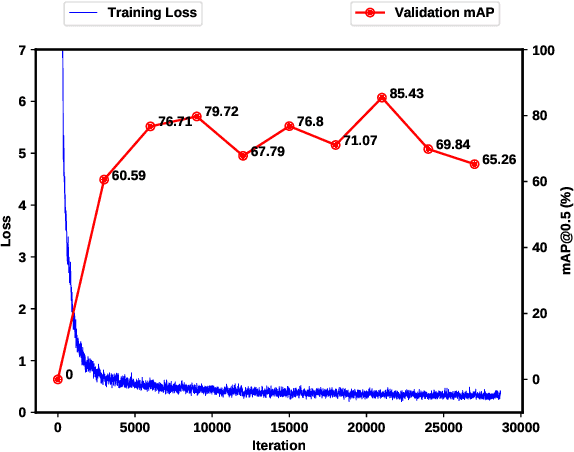

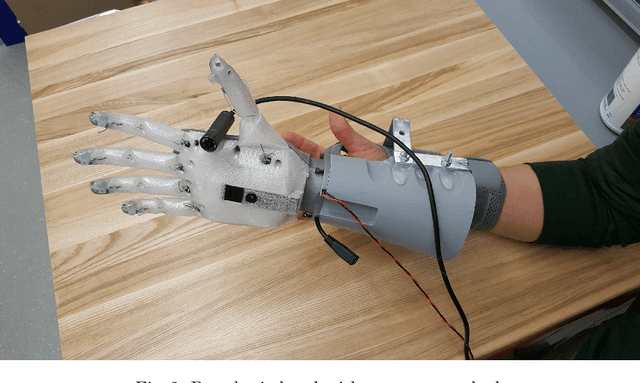

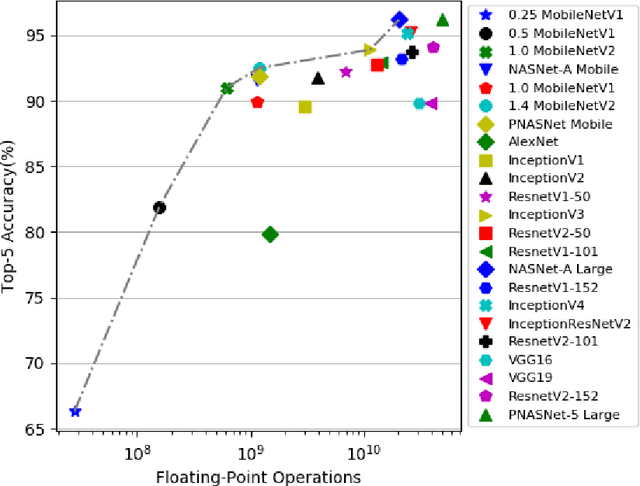

Abstract: For lower arm amputees, prosthetic hands promise to restore most physical interaction capabilities. This requires accurately predicting hand gestures capable of grasping varying objects and executing them in a timely manner as intended by the user. Current approaches often rely on physiological signal inputs such as electromyography (EMG) signals from residual limb muscles to infer the intended motion. However, limited signal quality, user diversity and high variability adversely affect system robustness. Instead of relying solely on EMG signals, our work enables augmenting EMG intent inference with physical state probability through machine learning and computer vision methods. To this end, we: (1) study state-of-the-art deep neural network architectures to select a performant source of knowledge transfer for the prosthetic hand, and (2) use a dataset containing object images and probability distributions of grasp types as a new form of labeling, where instead of using absolute values of zero and one as the conventional classification labels, our labels are a set of probabilities whose sum is 1. The proposed method uses the visual information from the palm camera of a prosthetic hand to generate probabilistic predictions, which could be fused with EMG predictions of probabilities over grasps. Our results demonstrate that InceptionV3 achieves the highest accuracy with 0.95 angular similarity, followed by 1.4 MobileNetV2 with 0.93 at ~20% of the number of operations.
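The abstract reports accuracy as angular similarity between predicted and labelled grasp-probability vectors but does not give the formula. A minimal sketch of one common definition for non-negative vectors is shown below, where 1 means identical direction and 0 means orthogonal. The exact normalization used in the paper is an assumption here, as are the function name and the example vectors.

```python
import numpy as np

def angular_similarity(p, q):
    """Angular similarity between two non-negative vectors.

    Assumed definition: 1 - (2 / pi) * arccos(cosine_similarity),
    which maps the angle range [0, pi/2] onto [1, 0].
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    cos = np.dot(p, q) / (np.linalg.norm(p) * np.linalg.norm(q))
    cos = np.clip(cos, 0.0, 1.0)  # guard against rounding outside [0, 1]
    return 1.0 - 2.0 * np.arccos(cos) / np.pi

# Made-up label and prediction over four grasp types.
label = [0.6, 0.3, 0.1, 0.0]
prediction = [0.5, 0.4, 0.1, 0.0]
print(angular_similarity(label, prediction))
```

Unlike top-1 accuracy, a measure of this kind rewards predictions whose whole distribution is close to the label, which fits labels that are probabilities summing to 1 rather than one-hot values.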

Universal Physiological Representation Learning with Soft-Disentangled Rateless Autoencoders

Sep 28, 2020

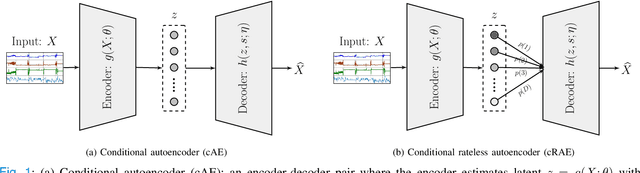

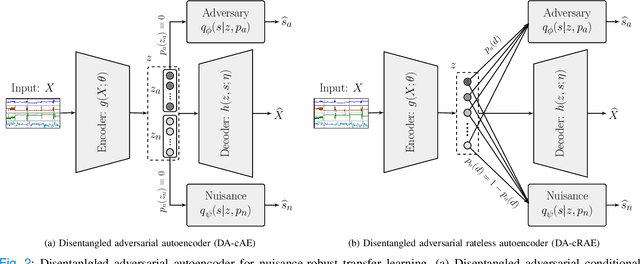

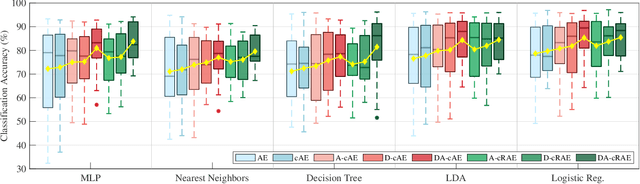

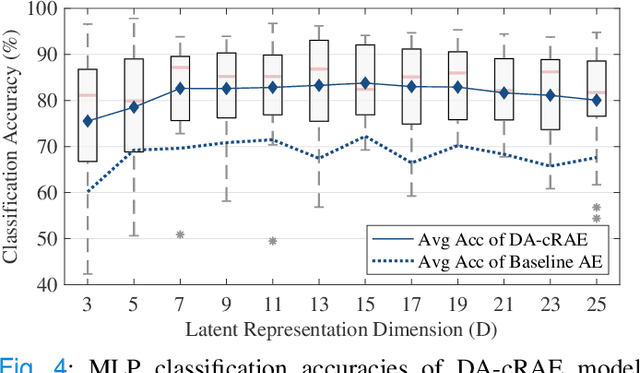

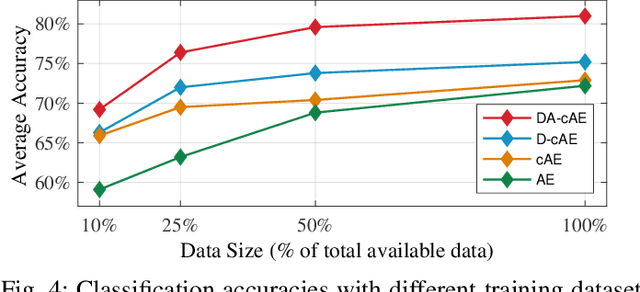

Abstract: Human-computer interaction (HCI) involves a multidisciplinary fusion of technologies, through which the control of external devices can be achieved by monitoring the physiological status of users. However, physiological biosignals often vary across users and recording sessions due to unstable physical/mental conditions and task-irrelevant activities. To deal with this challenge, we propose a method of adversarial feature encoding with the concept of a Rateless Autoencoder (RAE), in order to exploit disentangled, nuisance-robust, and universal representations. We achieve a good trade-off between user-specific and task-relevant features through stochastic disentanglement of the latent representations, which is obtained by adopting additional adversarial networks. The proposed model is applicable to a wider range of unknown users and tasks as well as different classifiers. Results on cross-subject transfer evaluations show the advantages of the proposed framework, with up to an 11.6% improvement in the average subject-transfer classification accuracy.

Disentangled Adversarial Autoencoder for Subject-Invariant Physiological Feature Extraction

Aug 26, 2020

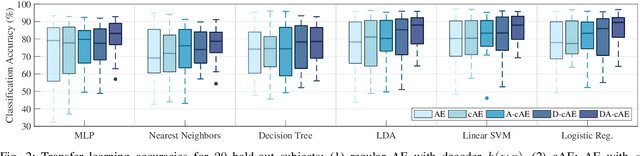

Abstract: Recent developments in biosignal processing have enabled users to exploit their physiological status for manipulating devices in a reliable and safe manner. One major challenge of physiological sensing lies in the variability of biosignals across different users and tasks. To address this issue, we propose an adversarial feature extractor for transfer learning to exploit disentangled universal representations. We consider the trade-off between task-relevant features and user-discriminative information by introducing additional adversary and nuisance networks that manipulate the latent representations, such that the learned feature extractor is applicable to unknown users and various tasks. Results on cross-subject transfer evaluations exhibit the benefits of the proposed framework, with up to an 8.8% improvement in average classification accuracy, and demonstrate adaptability to a broader range of subjects.

* Accepted for publication by IEEE Signal Processing Letters

Disentangled Adversarial Transfer Learning for Physiological Biosignals

Apr 15, 2020

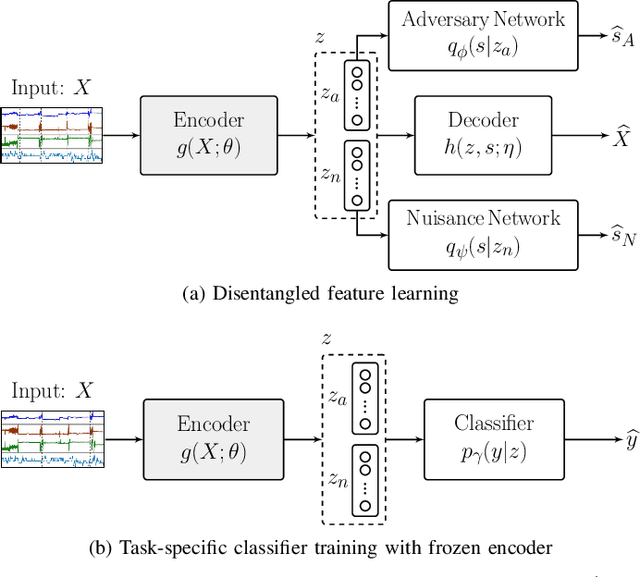

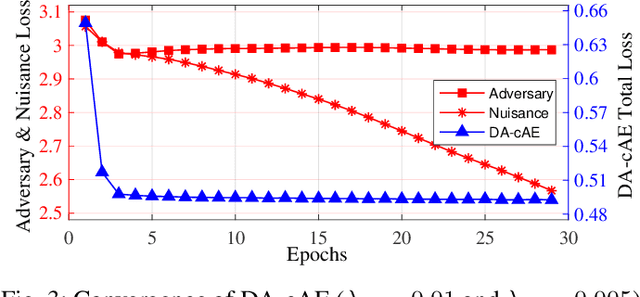

Abstract: Recent developments in wearable sensors demonstrate promising results for monitoring physiological status in effective and comfortable ways. One major challenge of physiological status assessment is the problem of transfer learning caused by the domain inconsistency of biosignals across users or across different recording sessions from the same user. We propose an adversarial inference approach for transfer learning to extract disentangled, nuisance-robust representations from physiological biosignal data in stress status level assessment. We exploit the trade-off between task-related features and person-discriminative information by using both an adversary network and a nuisance network to jointly manipulate and disentangle the latent representations learned by the encoder, which are then input to a discriminative classifier. Results on cross-subject transfer evaluations demonstrate the benefits of the proposed adversarial framework and thus show its capability to adapt to a broader range of subjects. Finally, we highlight that our proposed adversarial transfer learning approach is also applicable to other deep feature learning frameworks.
